If your FastDFS file system needs high availability across multiple machines, and those servers run nothing but FastDFS, then Docker is probably not a good fit: deploy directly on the machines by following the official documentation and skip containers. In fact, FastDFS is awkward to containerize, because the storage server reports its IP to the tracker server, and inside a container that IP is the container's own address. That address belongs to a private Docker network segment, so clients that receive it from the tracker cannot reach the storage server. Of course, this can still work if you use the host network, or a network solution that lets clients reach storage directly, such as flannel or calico; in Kubernetes, clients can also reach the storage server through service discovery.

So which scenario suits a Docker deployment of FastDFS? It suits small and medium-sized projects that do not need high availability or high performance, or projects where all FastDFS services can be wrapped in one container and started alongside the other services with docker-compose. My project is exactly this scenario: server resources are limited, so a separate FastDFS server cluster with full high availability is out of the question. All project components run on one machine under Docker; nginx for FastDFS is not deployed separately either, and it shares a container with the tracker and storage server. There is no other way when you need to save resources 😂

Building a docker image

Officially there are two ways to build the Docker image, but I find neither satisfying. First, both use the CentOS base image, and the resulting image is nearly 500MB. Second, there is no real need to distinguish a local build from a network build: either way the build has to reach the external network to download packages, so what is the distinction for? Here we only need to prepare the configuration files.

    ╭─root@docker-230 ~/root/container/fastdfs/fastdfs/docker/dockerfile_network ‹master›
    ╰─# docker images
    REPOSITORY   TAG      IMAGE ID       CREATED         SIZE
    fastdfs      centos   c1488537c23c   8 seconds ago   483MB

Preparing the configuration file

    # Put all the configuration files together in the conf directory
    mkdir -p ~/fastdfs/conf
    cd ~/fastdfs
    git clone https://github.com/happyfish100/fastdfs.git --depth 1
    cp -rf fastdfs/docker/dockerfile_local/conf/* conf
    cp fastdfs/docker/dockerfile_local/fastdfs.sh conf
    touch Dockerfile.debian
    touch Dockerfile.alpine
    # Once the configuration files are copied out, the source tree can be removed
    rm -rf fastdfs

Modify the configuration file

All the configuration files are in conf, so we can modify each one according to our needs.

I changed the default data storage directory to /var/fdfs, so the Dockerfile has to create this directory. If you keep the default directory, change the path created in the Dockerfile back to the default instead.

Set tracker_server to tracker_server=tracker_ip:22122. When the container starts, the startup script replaces the tracker_ip placeholder with the value of the injected FASTDFS_IPADDR environment variable.

    ╭─root@debian-deploy-132 ~/fastdfs/conf
    ╰─# tree
    .
    ├── client.conf       # configuration for the C client; can be ignored
    ├── fastdfs.sh        # script that starts the fastdfs services in the container
    ├── http.conf         # http configuration; see the official documentation
    ├── mime.types
    ├── mod_fastdfs.conf  # configuration for the fastdfs nginx module
    ├── nginx.conf        # nginx configuration; adjust it for your own project
    ├── storage.conf      # storage service configuration
    └── tracker.conf      # tracker service configuration

tracker service configuration file tracker.conf

    # enable this configuration file
    disabled=false
    # tracker port
    port=22122
    # directory for tracker data and logs (must be created in advance)
    base_path=/var/fdfs
    # http port
    http.server_port=28080

storage service configuration file storage.conf

    # storage service listening port
    port=23000
    # root directory for data and log files
    base_path=/var/fdfs
    # first storage directory
    store_path0=/var/fdfs
    # tracker server IP and port
    tracker_server=tracker_ip:22122
    http.server_port=28888

nginx configuration file nginx.conf

    # Add or modify the following block in the nginx configuration file
    server {
        # this port must match http.server_port in storage.conf
        listen       28888;
        server_name  localhost;
        location ~/group[0-9]/ {
            ngx_fastdfs_module;
        }
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }
    }

nginx module configuration file mod_fastdfs.conf

    # tracker server IP and port
    tracker_server=tracker_ip:22122
    # URLs include the group name
    url_have_group_name=true
    # root directory for data and log files
    store_path0=/var/fdfs

Script for the fastdfs service fastdfs.sh

The official script is written rather casually, so I tidied it up a little; using the official one unmodified is also fine.

    #!/bin/bash
    # Replace the tracker_ip placeholder with the address injected via FASTDFS_IPADDR
    new_val=$FASTDFS_IPADDR
    old="tracker_ip"
    sed -i "s/$old/$new_val/g" /etc/fdfs/storage.conf
    sed -i "s/$old/$new_val/g" /etc/fdfs/mod_fastdfs.conf
    echo "start trackerd"
    /etc/init.d/fdfs_trackerd start
    echo "start storage"
    /etc/init.d/fdfs_storaged start
    echo "start nginx"
    /usr/local/nginx/sbin/nginx
    # Keep a foreground process alive so the container does not exit
    tail -f /dev/null
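The substitution step in the startup script writes an empty tracker address if FASTDFS_IPADDR is not set. A minimal sketch of a stricter variant follows. The function name `render_tracker_ip` is hypothetical and not part of FastDFS, and it goes through a temporary file instead of `sed -i` so that it behaves the same with GNU and BusyBox tools.

```shell
#!/bin/sh
# Hypothetical helper: replace the tracker_ip placeholder in one config file.
# Usage: render_tracker_ip <ip> <file>
render_tracker_ip() {
    ip=$1
    file=$2
    # Refuse to continue without an address instead of writing an empty value.
    if [ -z "$ip" ]; then
        echo "FASTDFS_IPADDR is not set" >&2
        return 1
    fi
    tmp="$file.tmp.$$"
    # Write the result to a temporary file first, then move it into place.
    sed "s/tracker_ip/$ip/g" "$file" > "$tmp" && mv "$tmp" "$file"
}
```

In the startup script this could be called as `render_tracker_ip "$FASTDFS_IPADDR" /etc/fdfs/storage.conf || exit 1`, so the container stops early when the variable is missing.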

Replace bash with sh

In fact this step is optional. If the scripts start with bash, you have to install bash in alpine, which adds about 6MB to the image, and that does not feel necessary 😂

    sed -i 's/bash/sh/g' `grep -nr bash | awk -F ':' '{print $1}'`
    # after the replacement
    grep -nr \#\!\/bin\/sh
    stop.sh:1:#!/bin/sh
    init.d/fdfs_storaged:1:#!/bin/sh
    init.d/fdfs_trackerd:1:#!/bin/sh
    conf/fastdfs.sh:1:#!/bin/sh
    restart.sh:1:#!/bin/sh
    docker/dockerfile_local/fastdfs.sh:1:#!/bin/sh
    docker/dockerfile_network/fastdfs.sh:1:#!/bin/sh
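The blanket `s/bash/sh/g` substitution rewrites every occurrence of `bash` in the matched files, not only the interpreter line. A narrower sketch that touches only a `#!/bin/bash` first line is shown below. `use_sh_shebang` is a hypothetical name, and the scripts still have to avoid bash-only syntax for the switch to be safe.

```shell
#!/bin/sh
# Hypothetical helper: change a "#!/bin/bash" first line to "#!/bin/sh".
# Usage: use_sh_shebang <file>
use_sh_shebang() {
    file=$1
    tmp="$file.tmp.$$"
    # The "1" address limits the substitution to the first line only.
    sed '1s|^#!/bin/bash|#!/bin/sh|' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Copy the content back with cat so the file keeps its permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For example, `use_sh_shebang conf/fastdfs.sh` changes the first line and leaves any later mention of bash untouched.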

Dockerfile

alpine:3.10

    FROM alpine:3.10
    COPY conf/ /home
    RUN set -xe \
      && echo "http://mirrors.aliyun.com/alpine/latest-stable/main/" > /etc/apk/repositories \
      && echo "http://mirrors.aliyun.com/alpine/latest-stable/community/" >> /etc/apk/repositories \
      && apk update \
      && apk add --no-cache --virtual .build-deps alpine-sdk gcc libc-dev make perl-dev openssl-dev pcre-dev zlib-dev tzdata \
      && cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime \
      && echo "Asia/Shanghai" > /etc/timezone \
      && mkdir -p /usr/local/src \
      && cd /usr/local/src \
      && git clone https://github.com/happyfish100/libfastcommon.git --depth 1 \
      && git clone https://github.com/happyfish100/fastdfs.git --depth 1 \
      && git clone https://github.com/happyfish100/fastdfs-nginx-module.git --depth 1 \
      && wget http://nginx.org/download/nginx-1.15.4.tar.gz \
      && tar -xf nginx-1.15.4.tar.gz \
      && cd /usr/local/src/libfastcommon \
      && sed -i 's/sys\/poll\.h/poll\.h/g' src/sockopt.c \
      && ./make.sh \
      && ./make.sh install \
      && cd /usr/local/src/fastdfs/ \
      && ./make.sh \
      && ./make.sh install \
      && cd /usr/local/src/nginx-1.15.4/ \
      && ./configure --add-module=/usr/local/src/fastdfs-nginx-module/src/ \
      && make && make install \
      && apk del .build-deps tzdata \
      && apk add --no-cache pcre-dev bash \
      && mkdir -p /var/fdfs /home/fastdfs/ \
      && mv /home/fastdfs.sh /home/fastdfs/ \
      && mv /home/*.conf /home/mime.types /etc/fdfs \
      && mv /home/nginx.conf /usr/local/nginx/conf/ \
      && chmod +x /home/fastdfs/fastdfs.sh \
      && sed -i 's/bash/sh/g' /etc/init.d/fdfs_storaged \
      && sed -i 's/bash/sh/g' /etc/init.d/fdfs_trackerd \
      && sed -i 's/bash/sh/g' /home/fastdfs/fastdfs.sh \
      && rm -rf /usr/local/src/* /var/cache/apk/* /tmp/* /var/tmp/* $HOME/.cache
    VOLUME /var/fdfs
    EXPOSE 22122 23000 28888 28080
    CMD ["/home/fastdfs/fastdfs.sh"]

debian:stretch-slim

    FROM debian:stretch-slim
    COPY conf/ /home/
    RUN set -x \
      && cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime \
      && echo "Asia/Shanghai" > /etc/timezone \
      && sed -i 's/deb.debian.org/mirrors.aliyun.com/g' /etc/apt/sources.list \
      && sed -i 's|security.debian.org/debian-security|mirrors.aliyun.com/debian-security|g' /etc/apt/sources.list \
      && apt update \
      && apt install --no-install-recommends --no-install-suggests -y build-essential libpcre3 libpcre3-dev zlib1g \
         git wget ca-certificates zlib1g-dev libtool libssl-dev \
      && rm -rf /var/lib/apt/lists/* \
      && mkdir -p /usr/local/src \
      && cd /usr/local/src \
      && git clone https://github.com/happyfish100/libfastcommon.git --depth 1 \
      && git clone https://github.com/happyfish100/fastdfs.git --depth 1 \
      && git clone https://github.com/happyfish100/fastdfs-nginx-module.git --depth 1 \
      && wget http://nginx.org/download/nginx-1.15.4.tar.gz \
      && tar -xf nginx-1.15.4.tar.gz \
      && cd /usr/local/src/libfastcommon \
      && ./make.sh \
      && ./make.sh install \
      && cd /usr/local/src/fastdfs/ \
      && ./make.sh \
      && ./make.sh install \
      && cd /usr/local/src/nginx-1.15.4/ \
      && ./configure --add-module=/usr/local/src/fastdfs-nginx-module/src/ \
      && make && make install \
      && apt purge -y build-essential libtool git wget ca-certificates \
      && apt autoremove -y \
      && mkdir -p /var/fdfs /home/fastdfs/ \
      && mv /home/fastdfs.sh /home/fastdfs/ \
      && mv /home/*.conf /home/mime.types /etc/fdfs \
      && mv /home/nginx.conf /usr/local/nginx/conf/ \
      && chmod +x /home/fastdfs/fastdfs.sh \
      && rm -rf /var/lib/apt/lists/* /usr/local/src/*
    VOLUME /var/fdfs
    EXPOSE 22122 23000 28888 28080
    CMD ["/home/fastdfs/fastdfs.sh"]
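The build step itself is not shown for either Dockerfile, so here is a hedged sketch of it. It assumes the files are named Dockerfile.alpine and Dockerfile.debian, as created earlier with touch, and that the tags follow the fastdfs:alpine and fastdfs:debian names seen in the docker images listings. `build_image` is a hypothetical helper, and the `DOCKER` variable is an override added here for dry runs.

```shell
#!/bin/sh
# Command used to talk to Docker; set DOCKER=echo to print instead of build.
DOCKER=${DOCKER:-docker}

# Build fastdfs:<flavor> from Dockerfile.<flavor> in the current directory.
# Usage: build_image alpine
build_image() {
    flavor=$1
    "$DOCKER" build -f "Dockerfile.$flavor" -t "fastdfs:$flavor" .
}
```

Run from the directory that holds conf/ and the two Dockerfiles, `build_image alpine` followed by `build_image debian` would produce both images for comparison.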

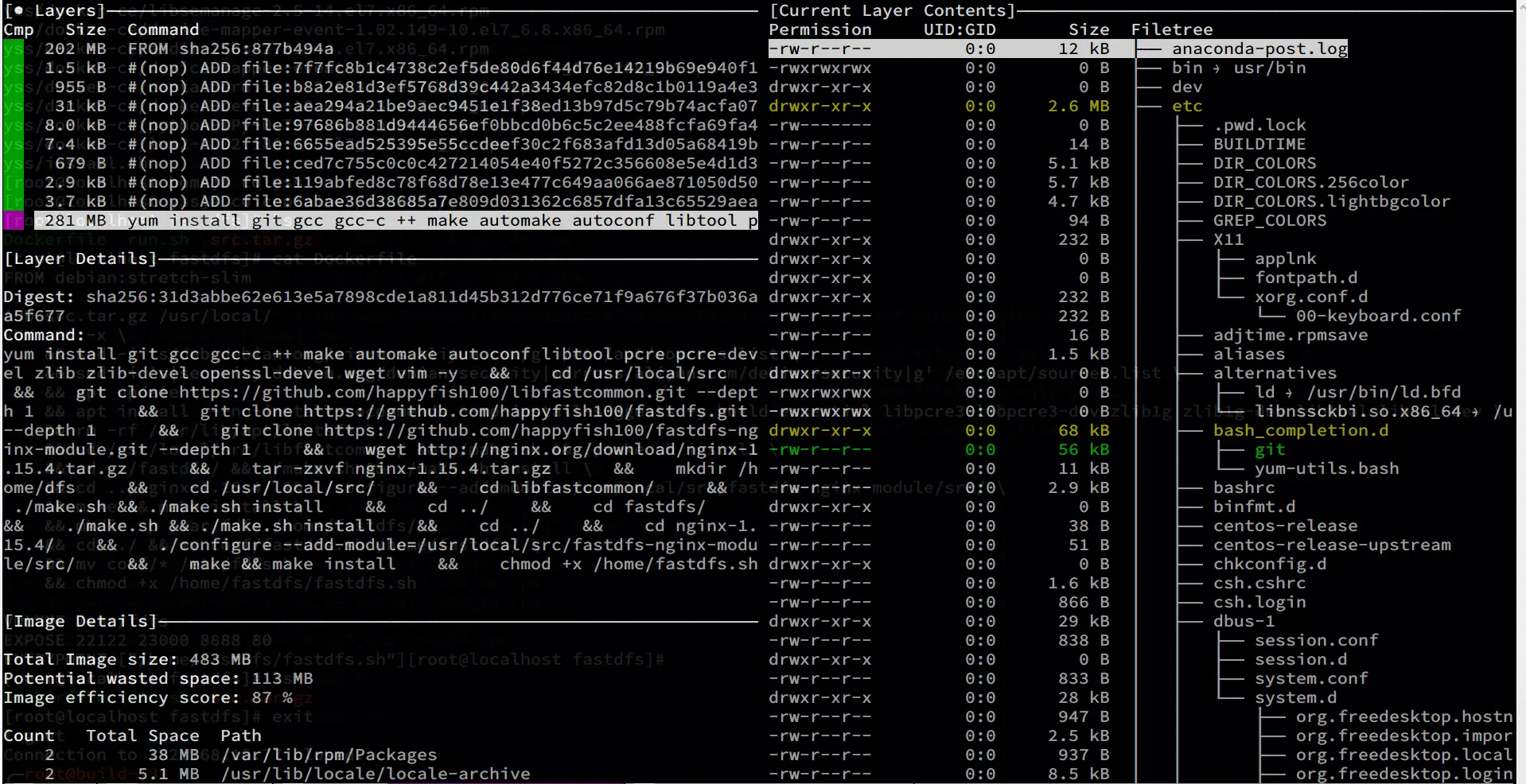

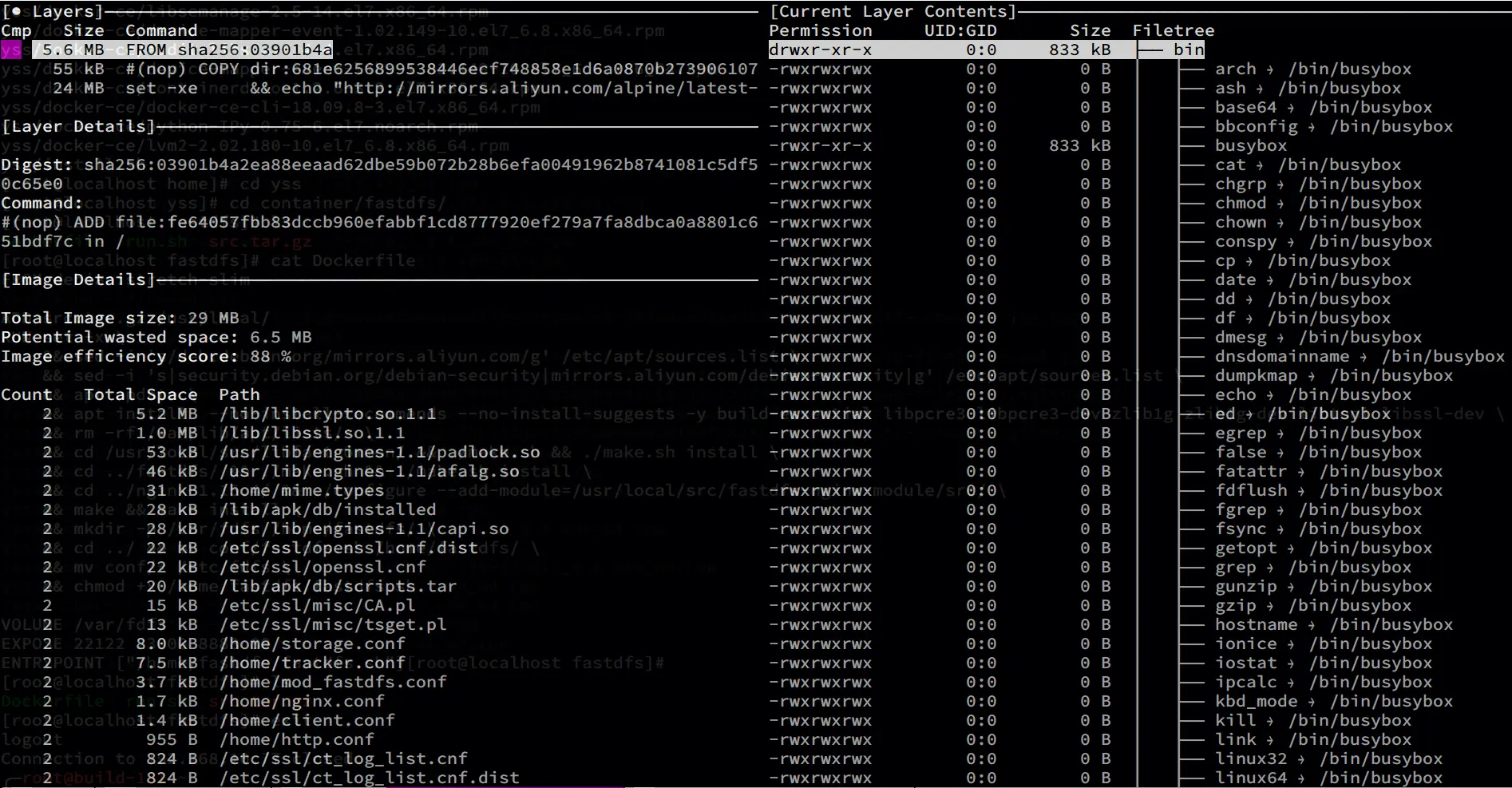

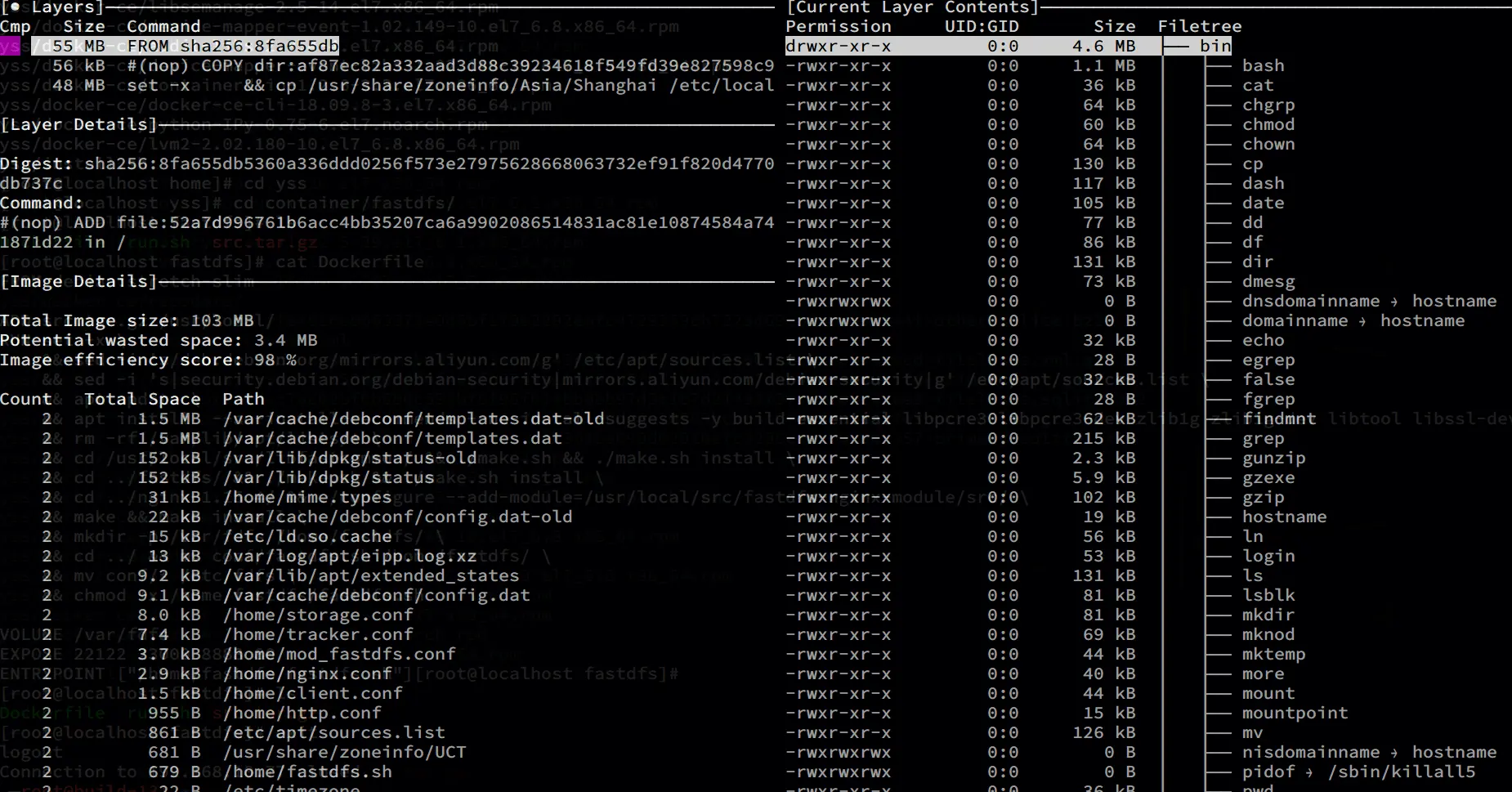

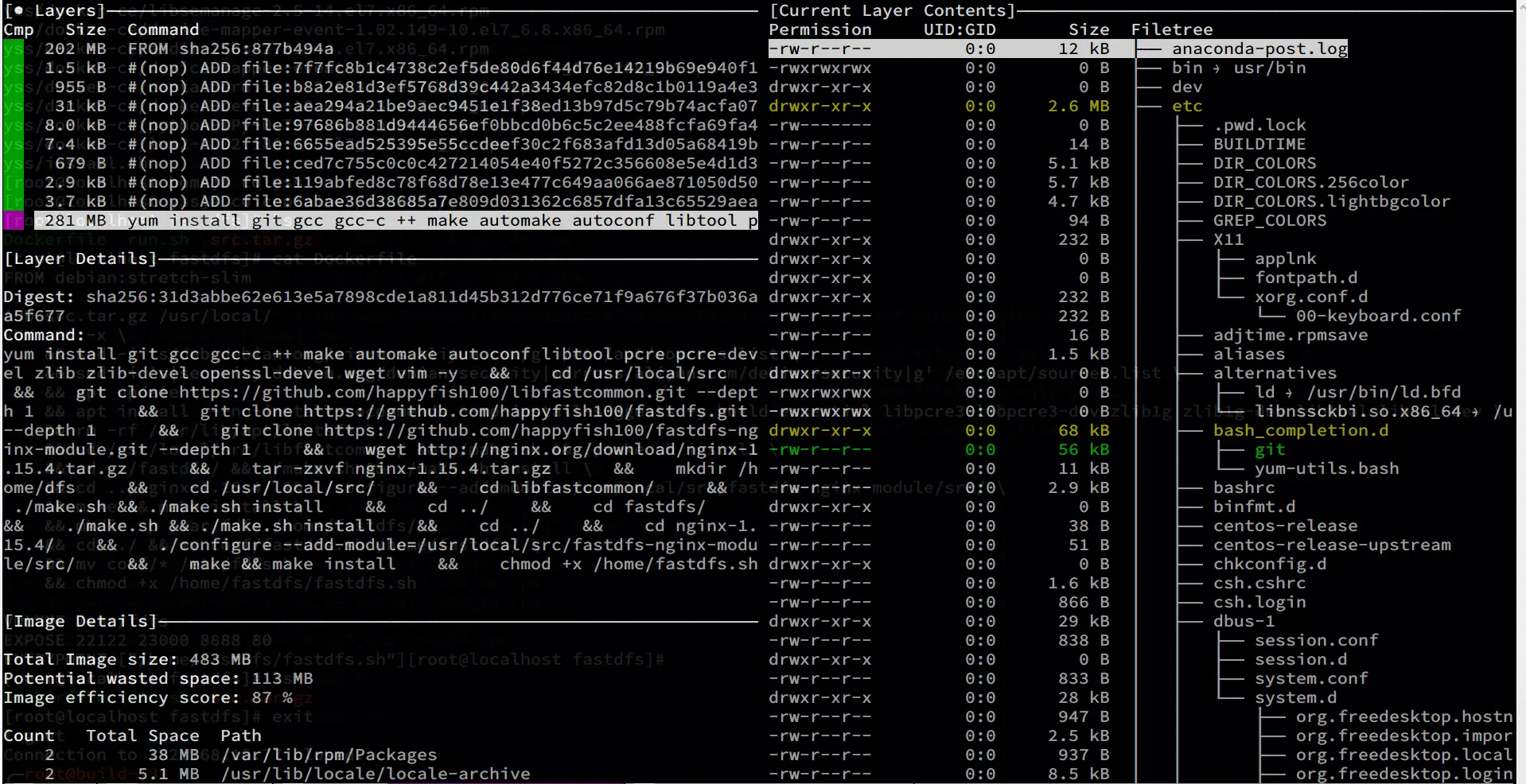

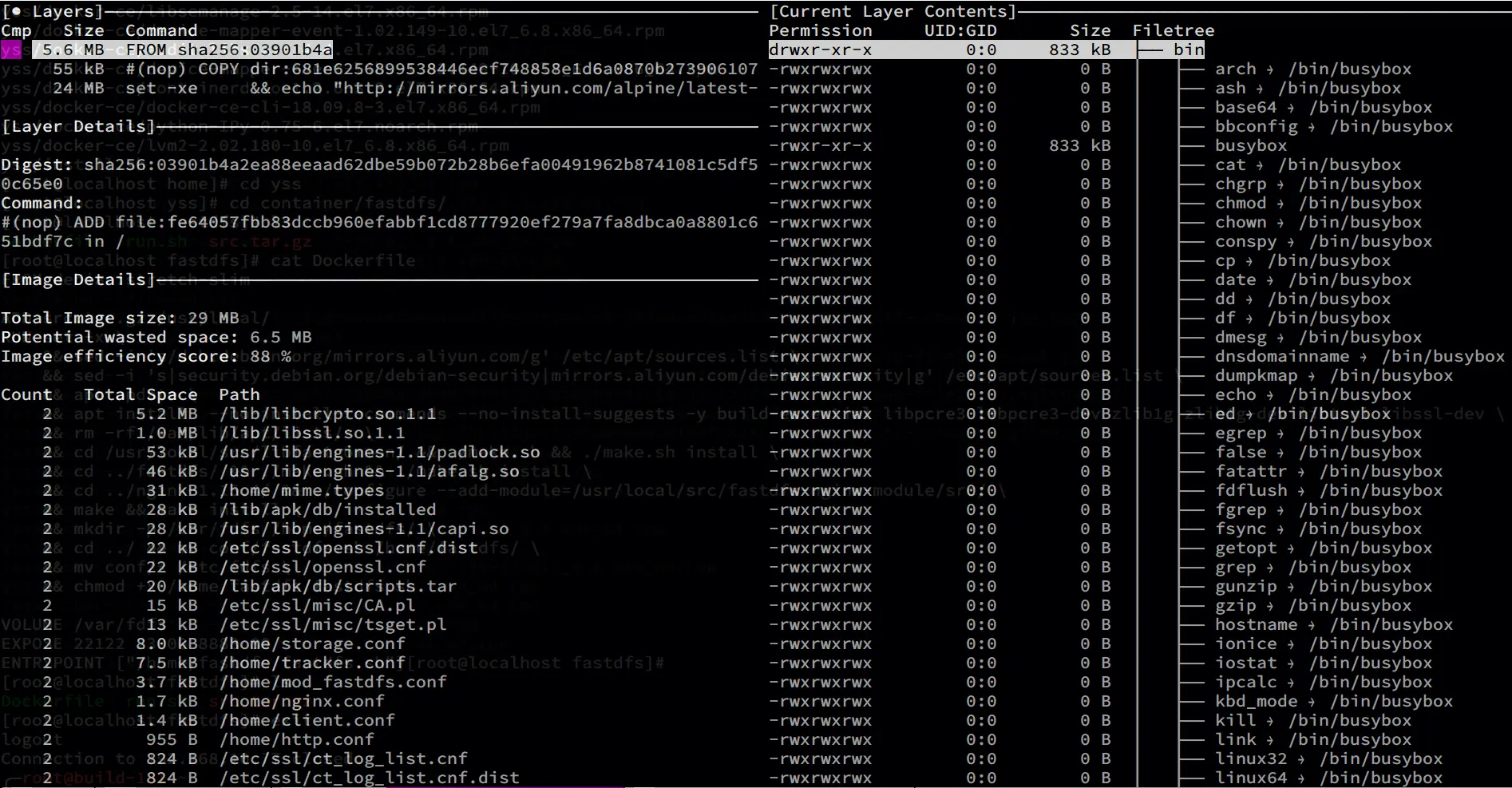

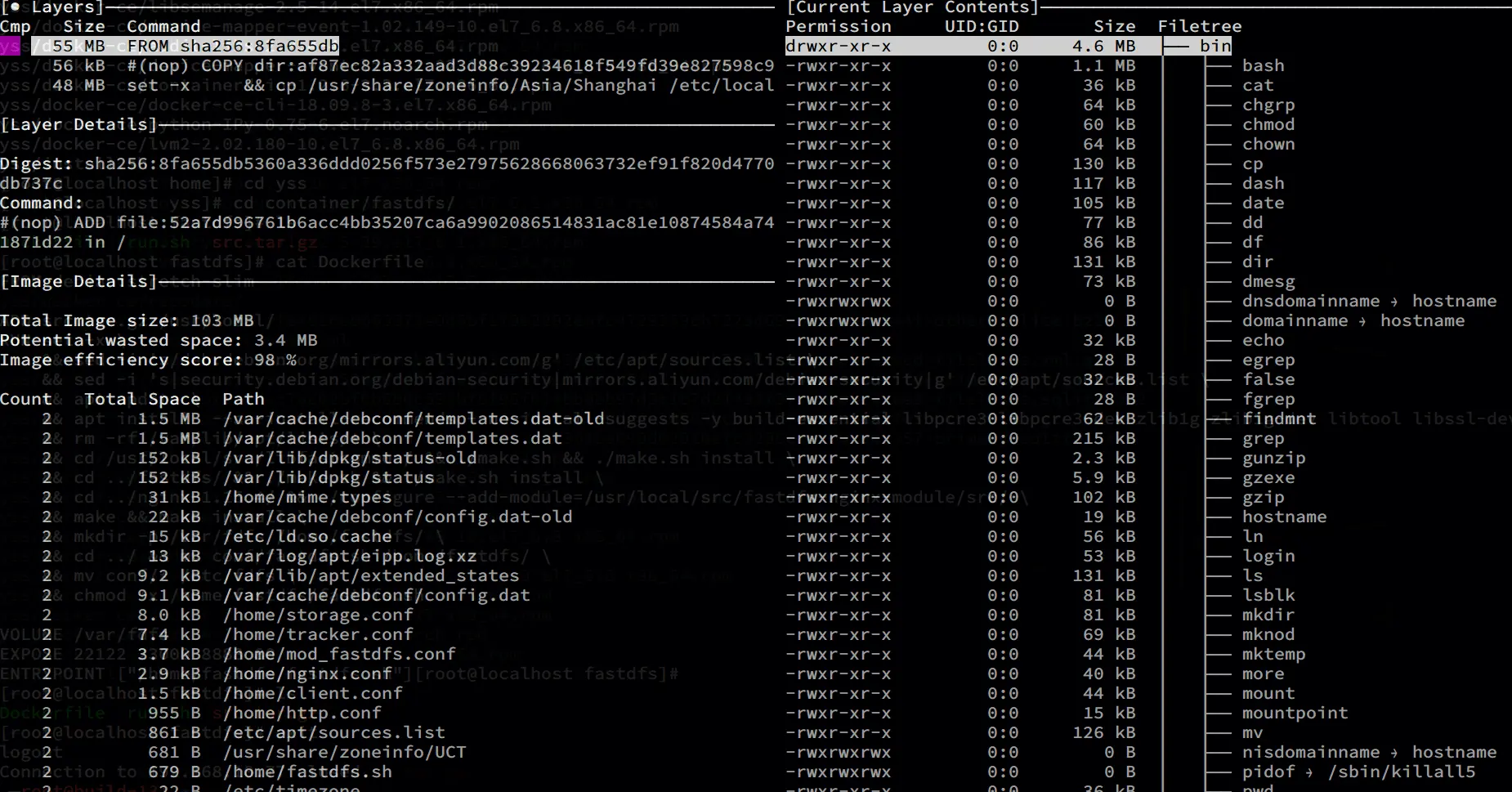

Comparison of the size built out of different base images

The officially built image is nearly 500MB.

    fastdfs   alpine   e855bd197dbe   10 seconds ago   29.3MB
    fastdfs   debian   e05ca1616604   20 minutes ago   103MB
    fastdfs   centos   c1488537c23c   30 minutes ago   483MB

Analyze individual images using dive

Official

alpine

Built on the alpine base image, the final image has only three layers 😂

debian

Problems caused by musl libc

Note, however, that the dynamically linked libraries differ a lot between the CentOS and Alpine base images. When you run ldd on the compiled fastdfs binaries from each, the Alpine build lists far fewer shared libraries, because Alpine's C library is musl libc rather than the mainstream glibc. For some large projects that depend on glibc, such as openjdk, tomcat, and rabbitmq, the alpine base image is not recommended, because musl libc can cause strange problems with the JVM. This is why tomcat does not officially provide a Dockerfile based on alpine.

    centos# find /usr/bin/ -name "fdfs*" | xargs ldd
    linux-vdso.so.1 => (0x00007fffe1d30000)
    libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f86036e6000)
    libfastcommon.so => /lib/libfastcommon.so (0x00007f86034a5000)
    libc.so.6 => /lib64/libc.so.6 (0x00007f86030d8000)
    /lib64/ld-linux-x86-64.so.2 (0x00007f8603902000)
    libm.so.6 => /lib64/libm.so.6 (0x00007f8602dd6000)
    libdl.so.2 => /lib64/libdl.so.2 (0x00007f8602bd2000)

    alpine # find /usr/bin/ -name "fdfs*" | xargs ldd
    /lib/ld-musl-x86_64.so.1 (0x7f4f91585000)
    libfastcommon.so => /usr/lib/libfastcommon.so (0x7f4f91528000)
    libc.musl-x86_64.so.1 => /lib/ld-musl-x86_64.so.1 (0x7f4f91585000)

    debian # find /usr/bin/ -name "fdfs*" | xargs ldd
    linux-vdso.so.1 (0x00007ffd17e50000)
    libpthread.so.0 => /lib/x86_64-linux-gnu/libpthread.so.0 (0x00007feadf317000)
    libfastcommon.so => /usr/lib/libfastcommon.so (0x00007feadf0d6000)
    libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007feaded37000)
    /lib64/ld-linux-x86-64.so.2 (0x00007feadf74a000)
    libm.so.6 => /lib/x86_64-linux-gnu/libm.so.6 (0x00007feadea33000)
    libdl.so.2 => /lib/x86_64-linux-gnu/libdl.so.2 (0x00007feade82f000)
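The difference in the three ldd listings can be checked mechanically. The sketch below classifies ldd output by looking for the musl loader or for libc.so.6; `libc_flavor` is a hypothetical helper, and the two patterns are taken from the listings in this article rather than from any exhaustive rule.

```shell
#!/bin/sh
# Classify ldd output read from stdin as "musl", "glibc", or "unknown".
libc_flavor() {
    out=$(cat)
    case $out in
        *ld-musl-*)  echo musl ;;     # musl ships libc and the loader as one file
        *libc.so.6*) echo glibc ;;    # glibc exposes a separate libc.so.6
        *)           echo unknown ;;
    esac
}
```

Inside a container, `ldd /usr/bin/fdfs_upload_file | libc_flavor` would then report which C library the binary was linked against.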

Container startup method

Note that the FastDFS container needs to use the host network, because the storage server reports its IP to the tracker server, and that IP is injected into the relevant configuration files through the FASTDFS_IPADDR environment variable. With --net=host the container shares the host's network stack and Docker discards any -p port mappings, so none are passed here.

    docker run -d -e FASTDFS_IPADDR=10.10.107.230 \
      -v $PWD/data:/var/fdfs --net=host --name fastdfs fastdfs:alpine

Testing

- upload client:

fdfs_upload_file

- concurrent execution tool:

xargs 3.

- test sample: 10W emoji images, size between 8KB-128KB 4.

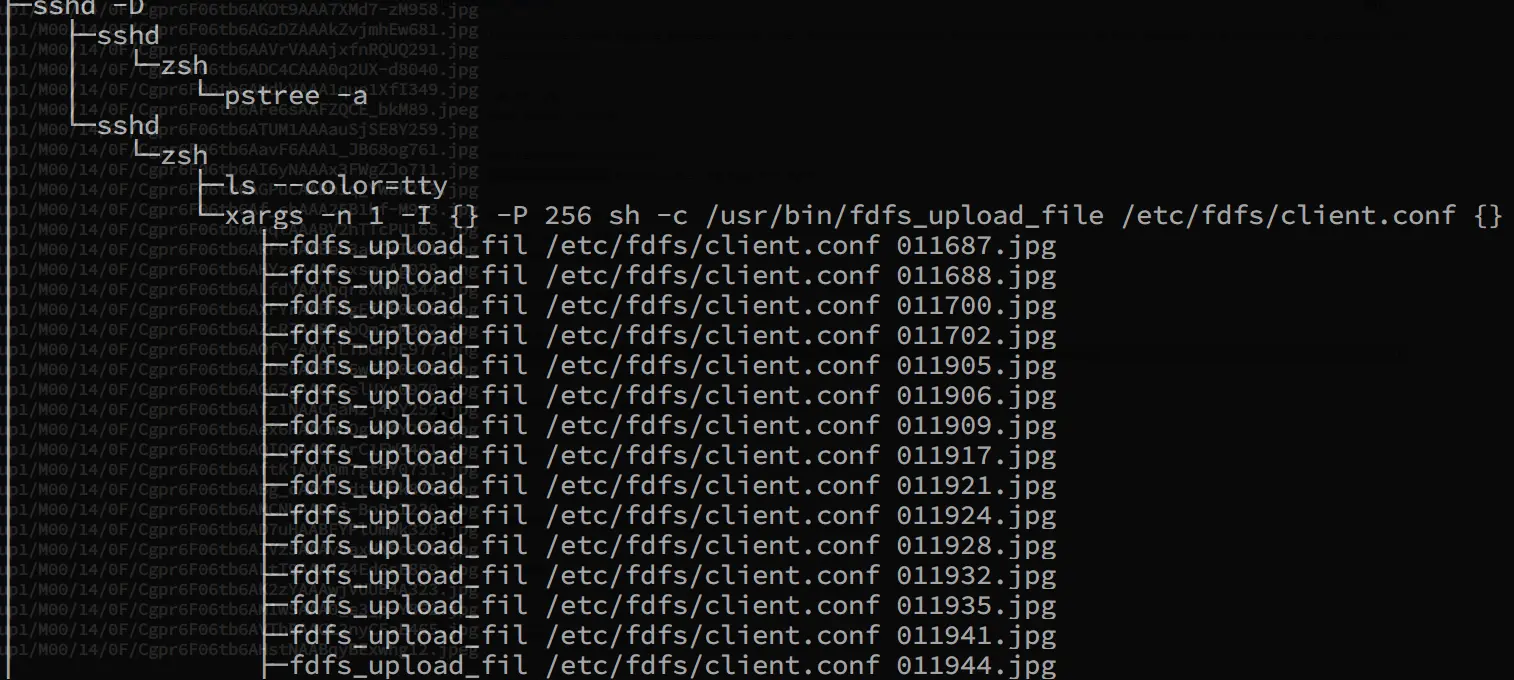

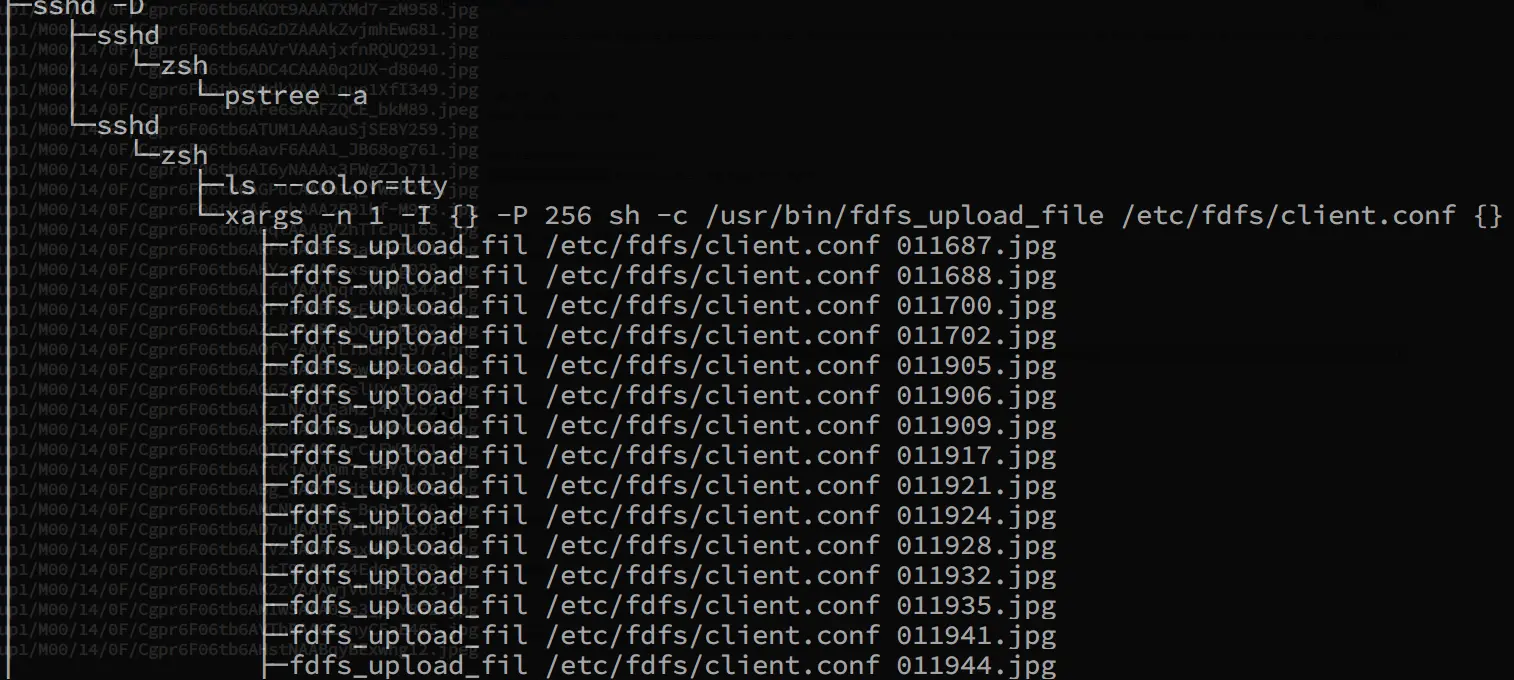

- upload test command: `time ls | xargs -n 1 -I {} -P 256 sh -c “/usr/bin/fdfs_upload_file /etc/fdfs/client.conf {}"`` -p parameter specifies the number of concurrently executed tasks

- download the test tool:

wget

1

|

下载测试命令:`time cat url.log | xargs -n 1 -I {} -P 256 sh -c "wget {}"`

|
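Both test commands rely on the same xargs fan-out. A reusable sketch of that pattern follows. It swaps `ls` for `find -print0` with `xargs -0`, so that file names containing spaces survive, and it assumes a find and xargs that support -print0, -0 and -P (GNU findutils does). `run_parallel` is a hypothetical name.

```shell
#!/bin/sh
# Run a command once per regular file under <dir>, at most <jobs> at a time.
# Usage: run_parallel <dir> <jobs> <command> [args...]
run_parallel() {
    dir=$1
    jobs=$2
    shift 2
    # xargs appends one file name (-n 1) after the given command and arguments.
    find "$dir" -type f -print0 | xargs -0 -n 1 -P "$jobs" "$@"
}
```

The upload test would then read `time run_parallel . 256 /usr/bin/fdfs_upload_file /etc/fdfs/client.conf`, with 256 playing the same role as the -P value in the original command.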

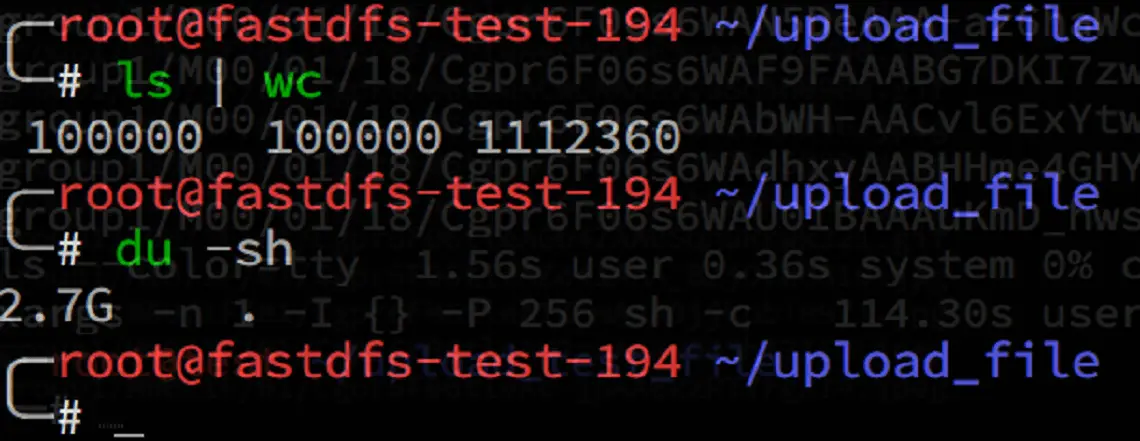

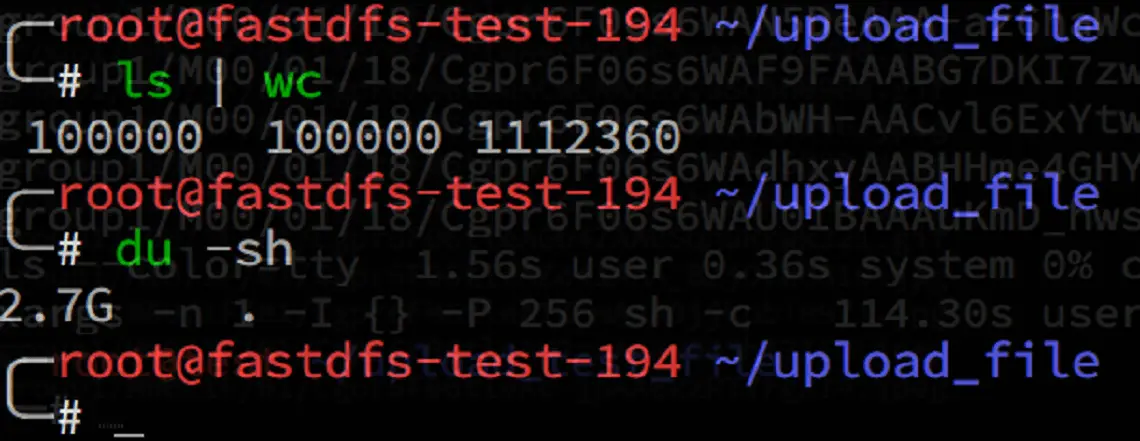

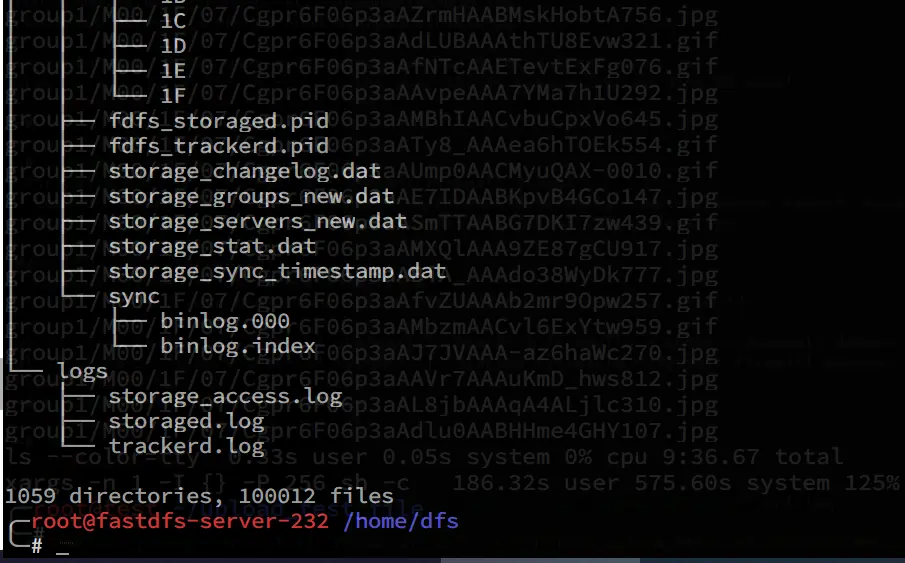

File upload test

The test sample is 100,000 images ranging in size from 8KB to 100KB

Number and size of test files

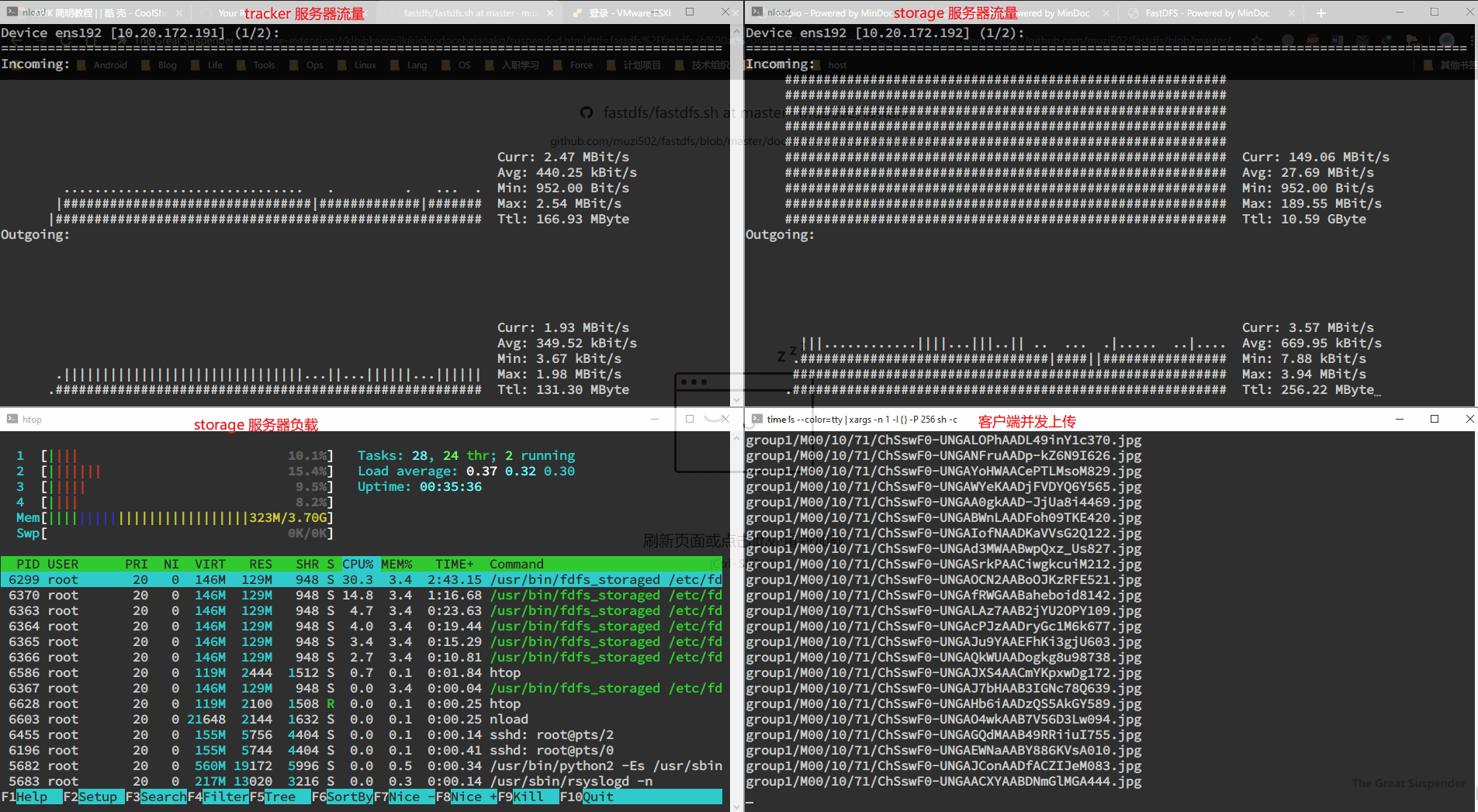

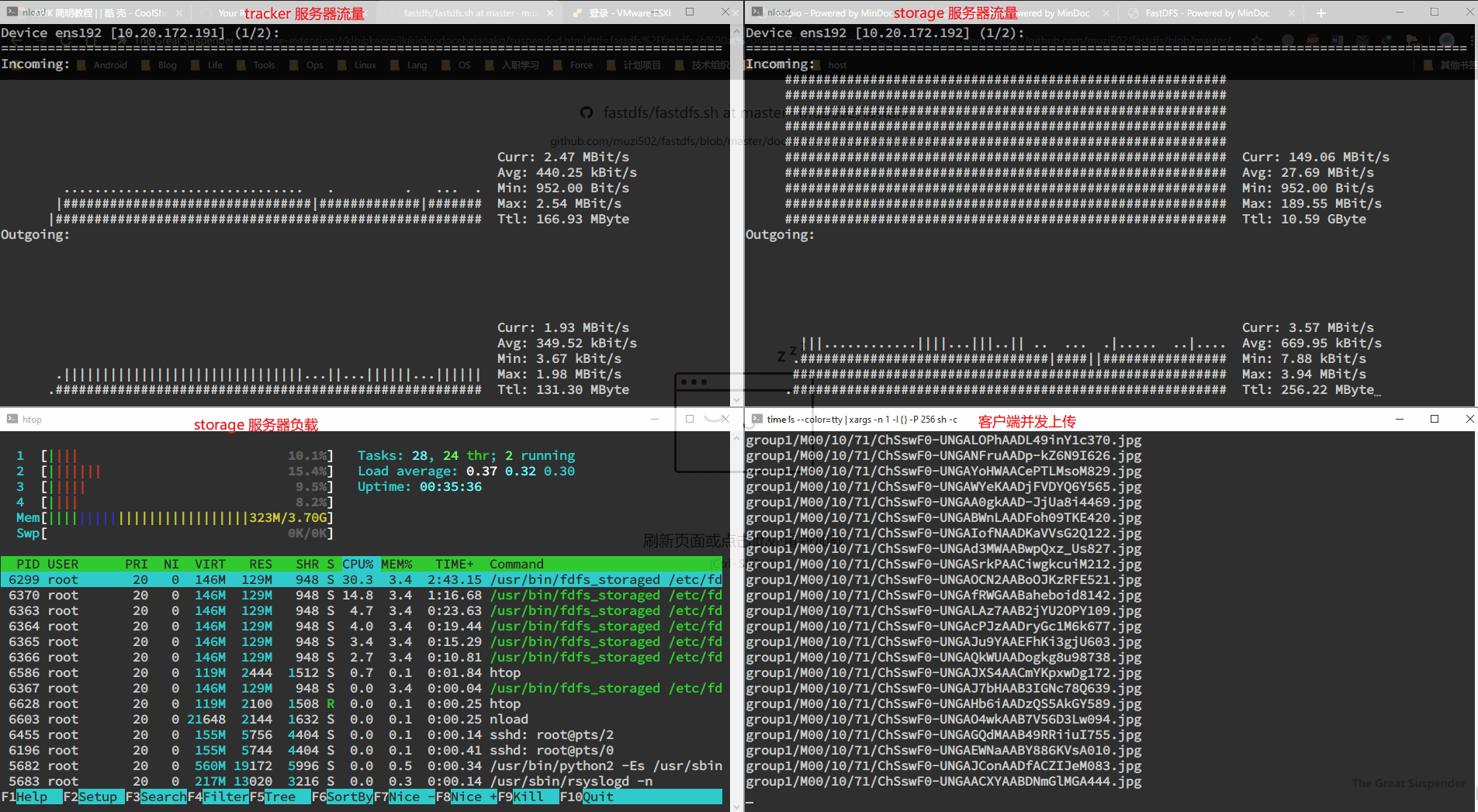

Uploading the 100,000 photos concurrently with 256 xargs processes took about 2 minutes (intranet)

Time: 2 minutes 11 seconds

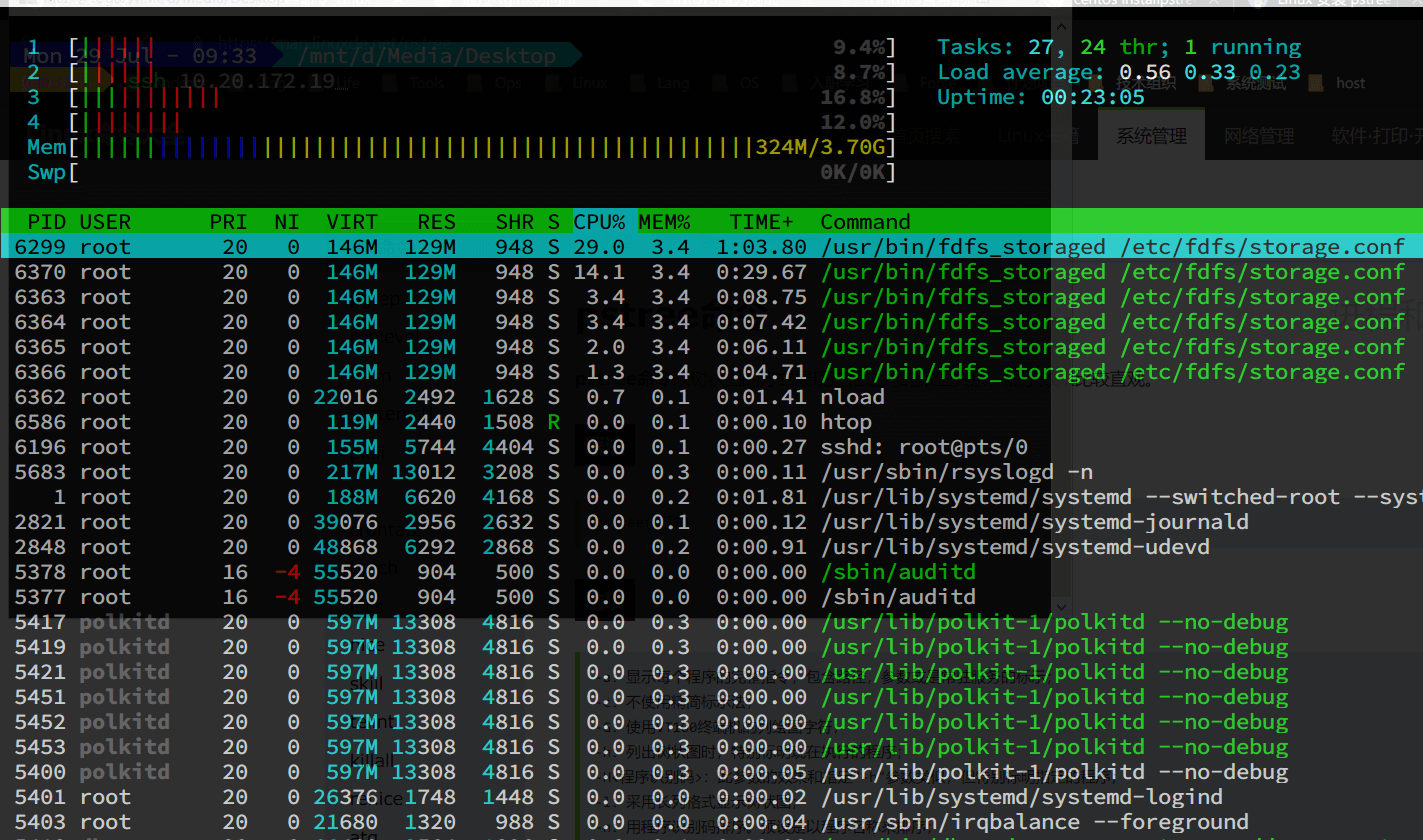

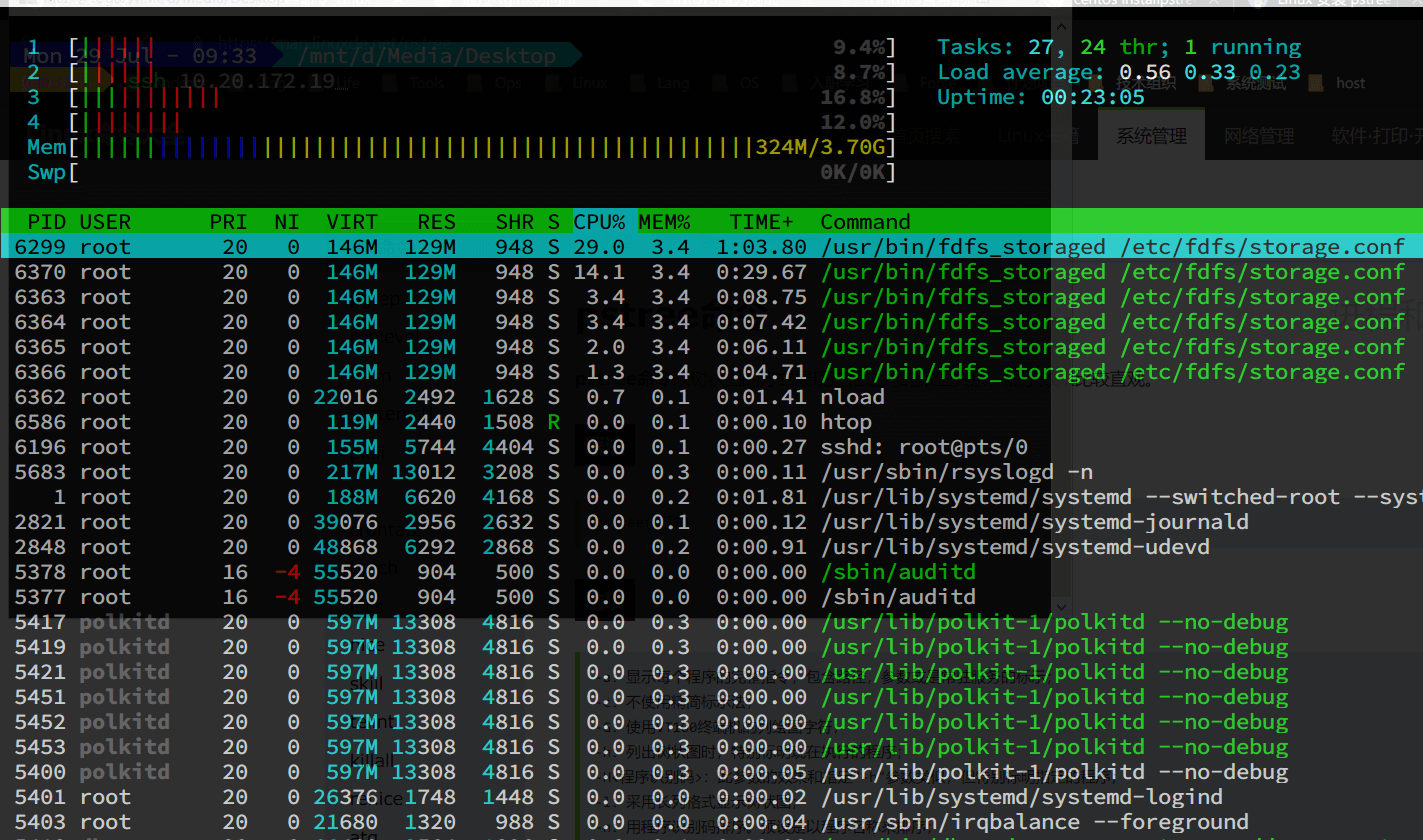

Client load profile

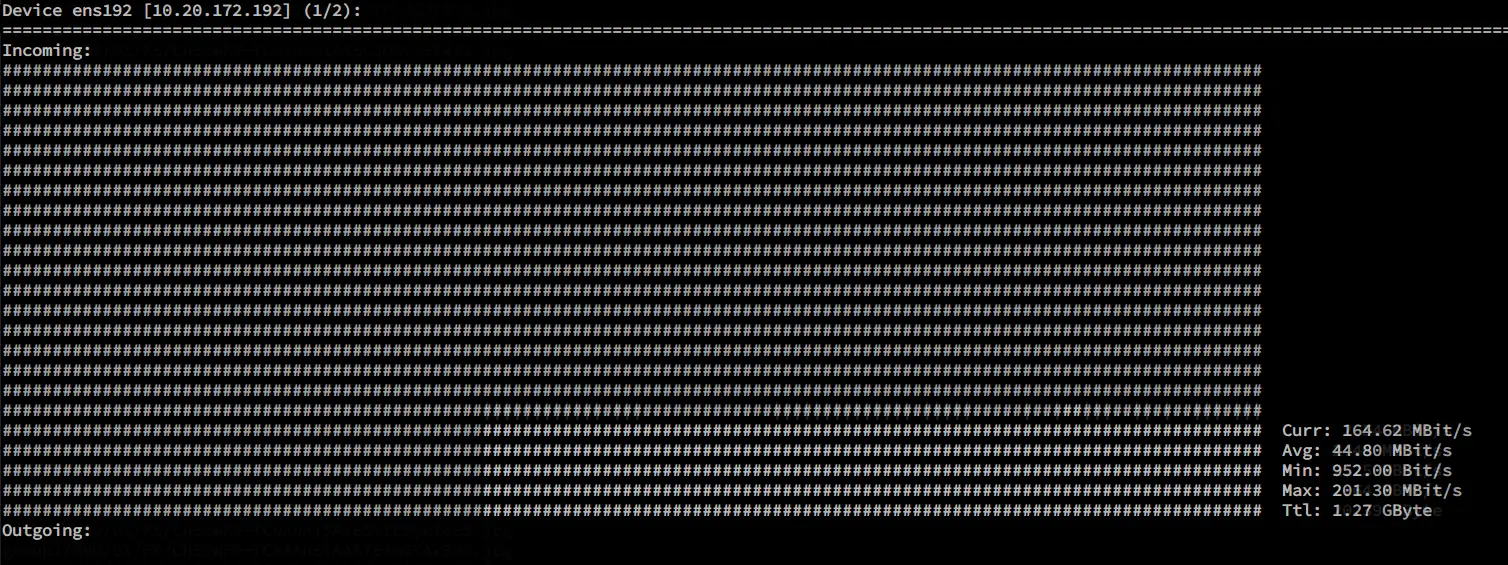

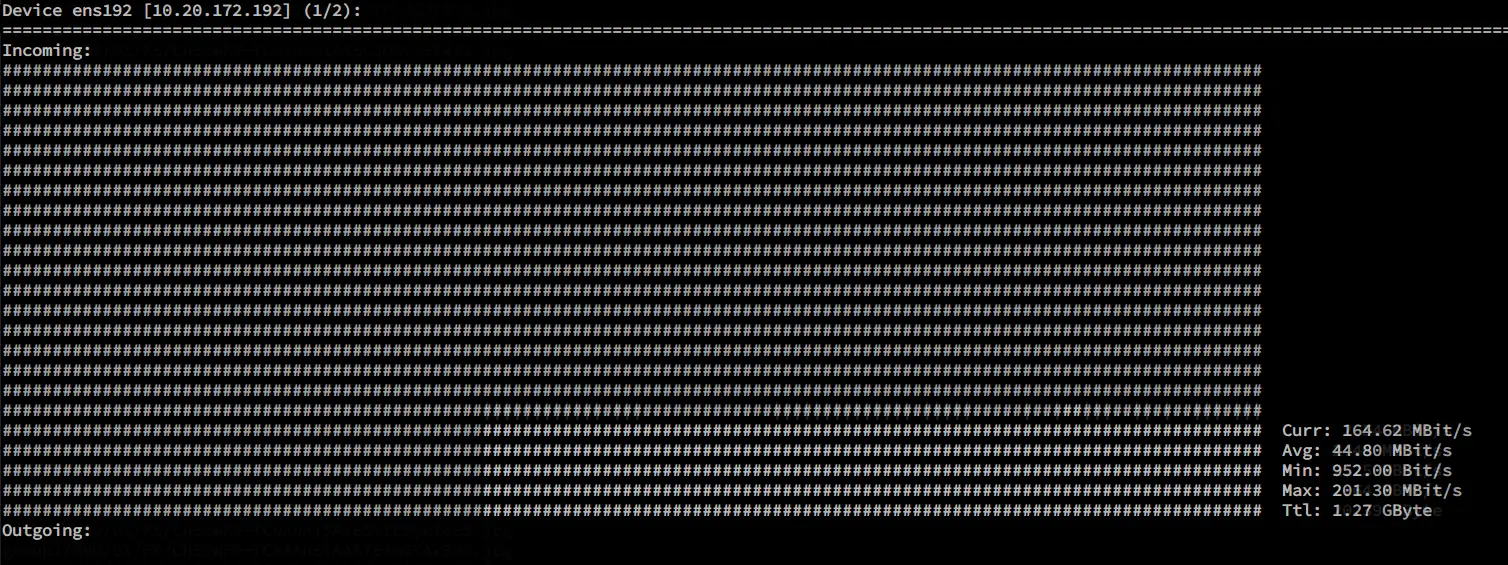

Server-side bandwidth traffic


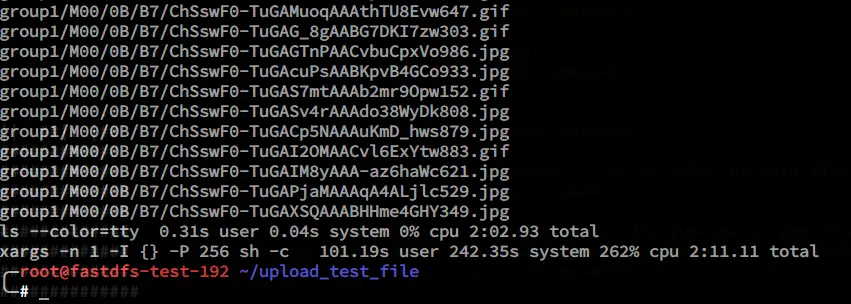

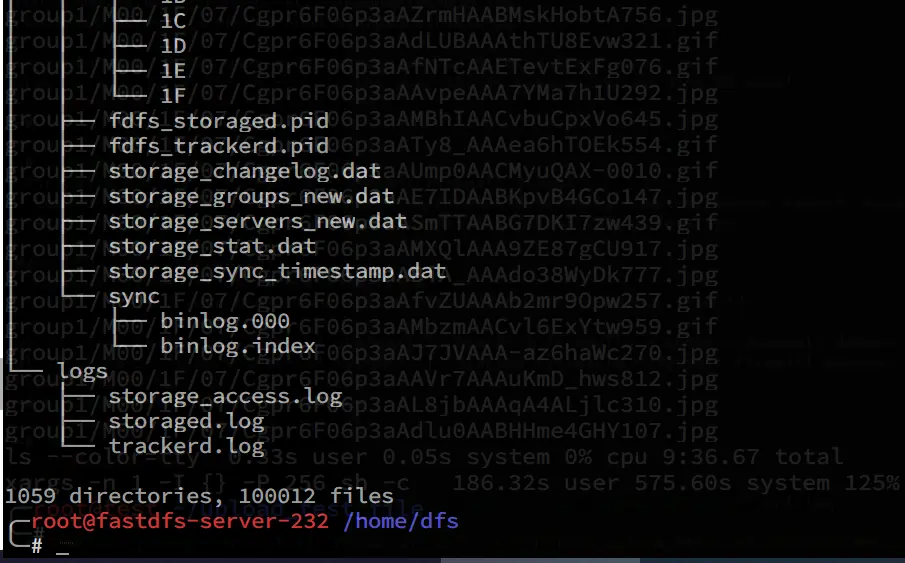

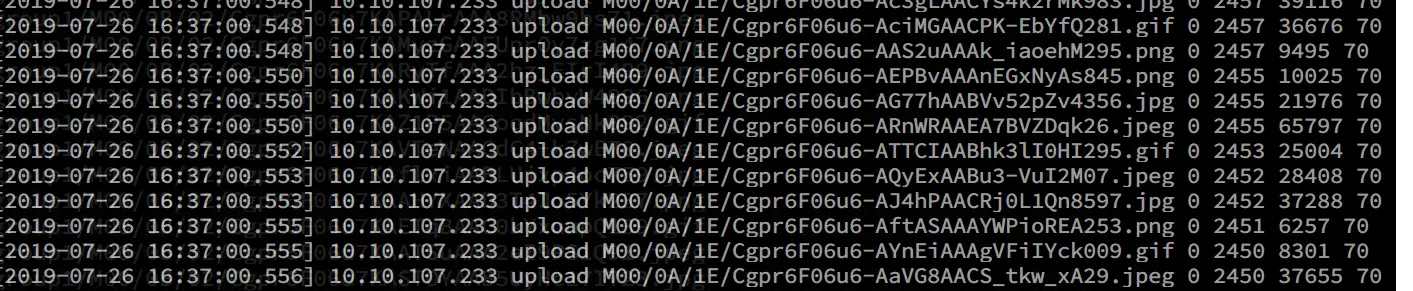

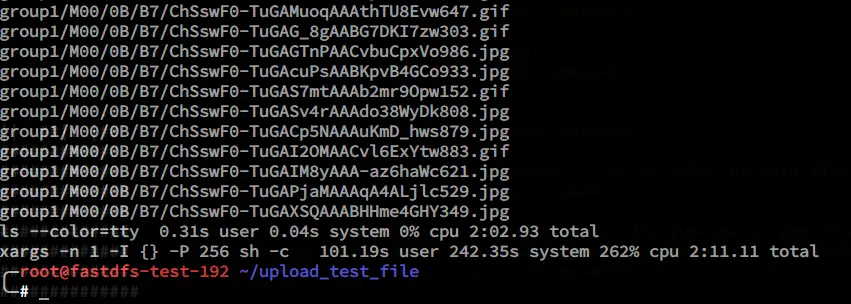

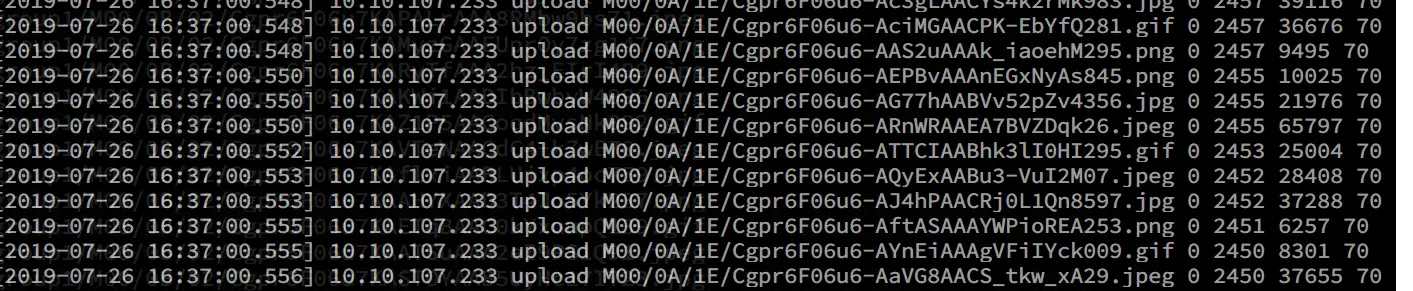

Server-side upload results

Server-side upload logs, all without error output

File Download Test

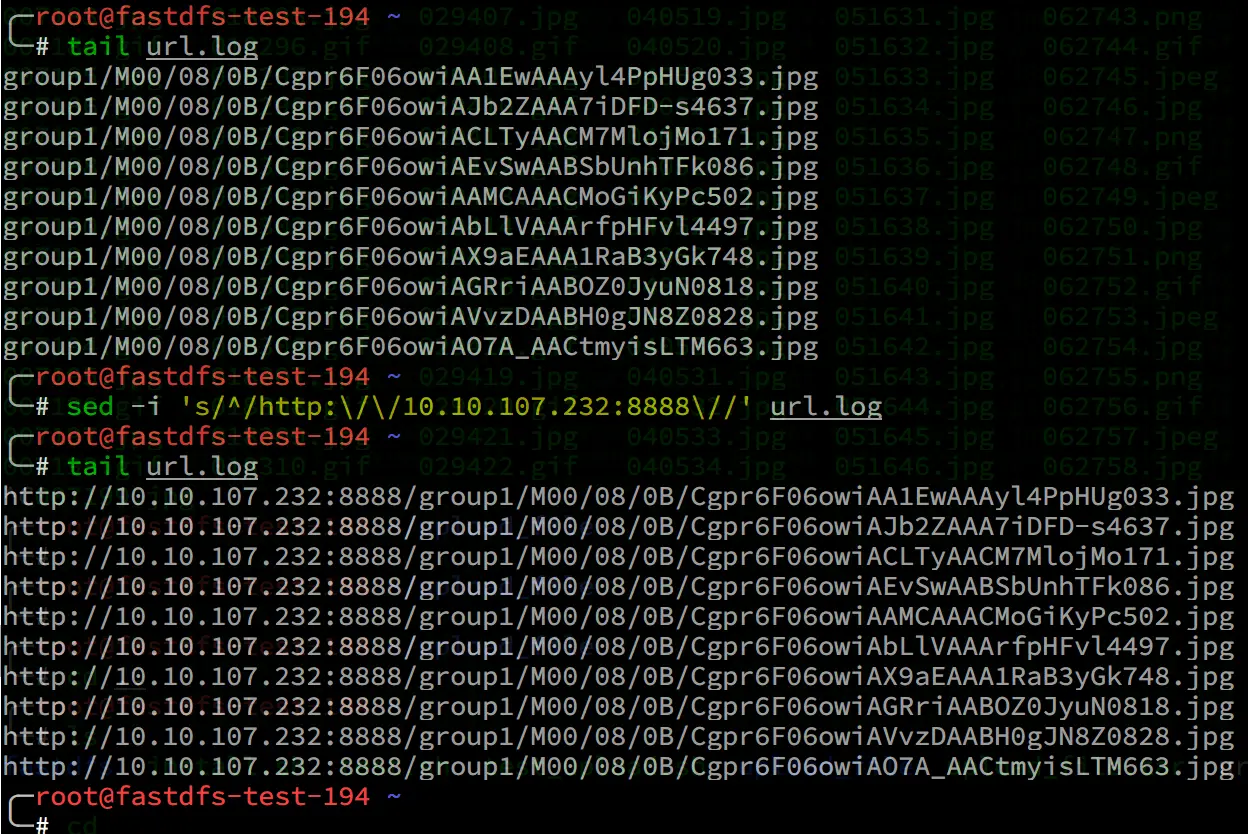

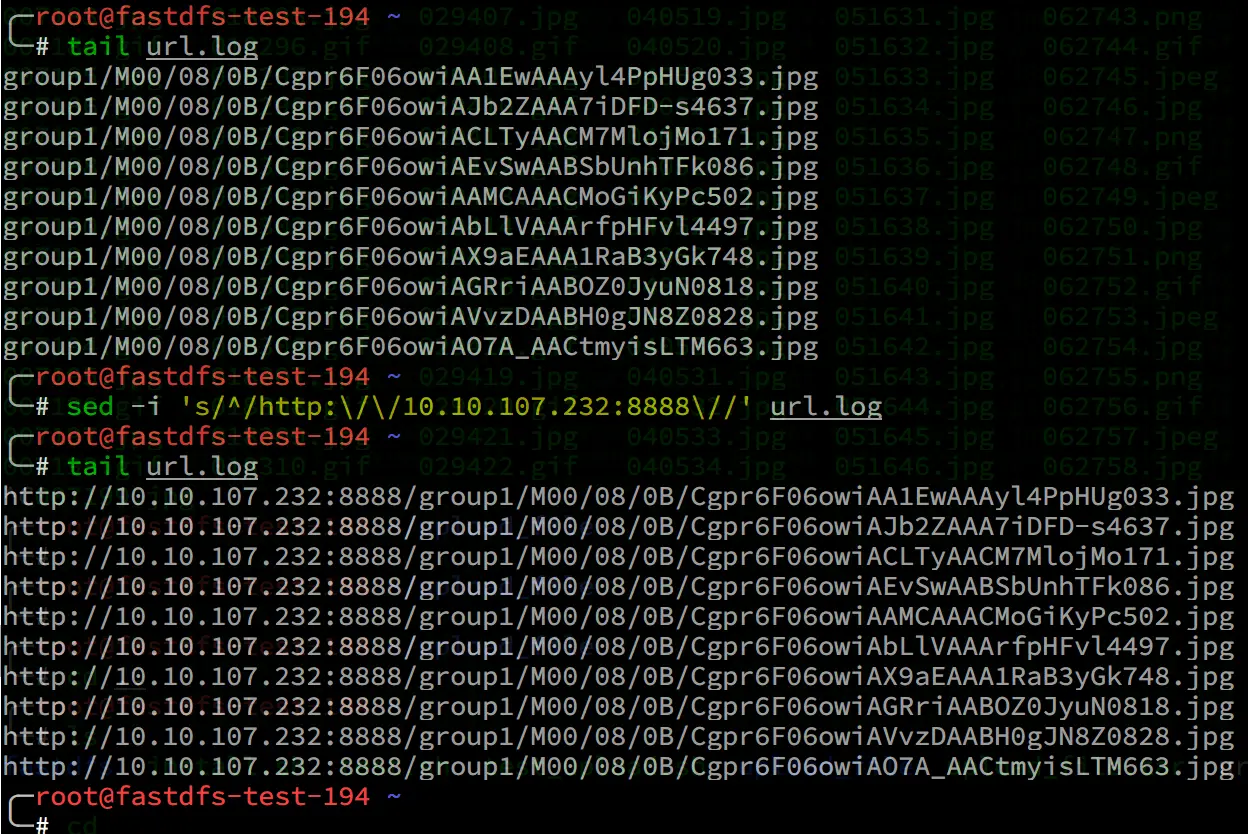

Extracting file paths from logs

Extract the file paths from the server-side storage_access.log, then use sed to prepend the nginx address and port. This yields 100,000 HTTP URLs for the uploaded files.
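The sed step that turns file paths into URLs might look like the sketch below. It assumes the paths have already been cut out of storage_access.log into one file ID per line (for example group1/M00/00/00/name.png); the column layout of the log is not shown in this article, so that extraction is left out. `ids_to_urls` is a hypothetical name, and the base URL is the storage host plus the nginx port configured earlier (28888).

```shell
#!/bin/sh
# Prefix every file ID read from stdin with the given base URL.
# Usage: ids_to_urls <base-url> < ids.txt > url.log
ids_to_urls() {
    base=${1%/}            # drop one trailing slash from the base URL, if any
    sed "s|^/*|$base/|"    # also absorbs leading slashes on the file ID
}
```

For instance, `ids_to_urls http://10.10.107.230:28888 < ids.txt > url.log` would produce the url.log file used by the download test, where ids.txt is a placeholder name for the extracted list.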

wget download

Use wget's -i parameter to specify url.log as the input file. Downloading the 100,000 images just uploaded took 3 minutes and 23 seconds.

Analysis of test results

Using FastDFS's own upload client together with xargs, with concurrency set by the xargs -P parameter, the test reached 5000 concurrent upload requests in a stable network environment. 100,000 images uploaded in about 2 minutes and 11 seconds. A timed script then ran the upload test continuously, for a total of 1,000,000 images. Analysis of the tracker server and storage server logs found no errors or anomalies in either upload or download, so performance and stability are good.

Optimization parameters

Adjust these parameters according to business requirements and the production environment to make full use of the FastDFS file system's performance.

    # number of threads that accept requests
    accept_threads=1
    # work thread count, should <= max_connections
    # default value is 4
    # since V2.00
    work_threads=4
    # min buff size
    # default value 8KB
    min_buff_size = 8KB
    # max buff size
    # default value 128KB
    max_buff_size = 128KB
    # thread stack size, should >= 64KB
    # default value is 256KB
    thread_stack_size = 256KB
    # if use connection pool
    # default value is false
    # since V4.05
    use_connection_pool = false
    # connections whose the idle time exceeds this time will be closed
    # unit: second
    # default value is 3600
    # since V4.05
    connection_pool_max_idle_time = 3600
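The comments above state that work_threads should not exceed max_connections. A small sketch for checking that rule against a configuration file follows. `conf_get` and `check_threads` are hypothetical helpers, and the parsing only handles plain key = value lines, with comments on their own lines.

```shell
#!/bin/sh
# Print the value of <key> from a "key = value" style file (last match wins).
# Usage: conf_get <key> <file>
conf_get() {
    key=$1
    file=$2
    # Strip "key =" from matching lines, then trim trailing whitespace.
    sed -n "s/^[[:space:]]*$key[[:space:]]*=[[:space:]]*//p" "$file" |
        sed 's/[[:space:]]*$//' | tail -n 1
}

# Succeed only if both values exist and work_threads <= max_connections.
# Usage: check_threads <file>
check_threads() {
    wt=$(conf_get work_threads "$1")
    mc=$(conf_get max_connections "$1")
    [ -n "$wt" ] && [ -n "$mc" ] && [ "$wt" -le "$mc" ]
}
```

A call such as `check_threads /etc/fdfs/tracker.conf || echo "work_threads is too high"` could run before the services are restarted with new values.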

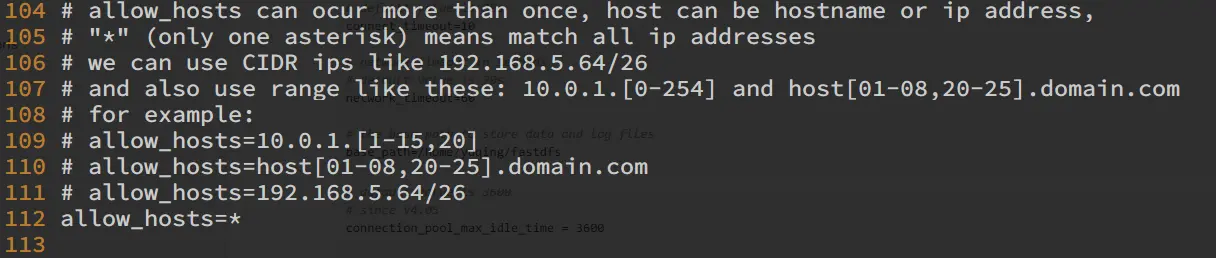

FAQ

Unable to connect to tracker server

You need to add the allowed IPs to the tracker.conf configuration file and add the matching firewall rules.

    [2019-07-25 10:40:54] ERROR - file: ../client/storage_client.c, line: 996, fdfs_recv_response fail, result: 107
    upload file fail, error no: 107, error info: Transport endpoint is not connected
    [2019-07-25 10:40:54] ERROR - file: tracker_proto.c, line: 37, server: 10.20.172.192:23000, recv data fail, errno: 107, error info: Transport endpoint is not connected

Server disk exhaustion

When the partition that holds the upload storage directory on the storage server runs out of disk space, the error log reports "No space left on device".

    [2019-07-26 16:16:45] ERROR - file: tracker_proto.c, line: 48, server: 10.10.107.232:22122, response status 28 != 0
    [2019-07-26 16:16:45] ERROR - file: ../client/tracker_client.c, line: 907, fdfs_recv_response fail, result: 28
    tracker_query_storage fail, error no: 28, error info: No space left on device
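To catch this before uploads start failing, disk usage on the storage path can be checked ahead of time. The sketch below is an example under assumptions: `disk_used_pct`, `check_disk` and the default 90 percent limit are hypothetical, with the limit chosen to line up with the reserved_storage_space=10.00% value that appears in the tracker startup log in this article, assuming the tracker refuses uploads once free space falls below that reserve.

```shell
#!/bin/sh
# Print the used percentage (integer, no % sign) of the filesystem holding <path>.
disk_used_pct() {
    # -P selects the POSIX output format; column 5 of line 2 is the capacity.
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Warn and fail when usage reaches <limit> percent (default 90).
# Usage: check_disk <path> [limit]
check_disk() {
    used=$(disk_used_pct "$1")
    limit=${2:-90}
    if [ "$used" -ge "$limit" ]; then
        echo "WARNING: $1 is ${used}% full" >&2
        return 1
    fi
}
```

Running `check_disk /var/fdfs` from cron on the storage host would give an early warning, provided /var/fdfs is the directory configured as store_path0.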

Failed when restarting service

Restarting the tracker or storage service with service fdfs_trackerd restart reports an error saying the port is already in use. The workaround is to kill the tracker or storage process with kill -9 and then start the corresponding service again.

    [2019-07-25 10:36:55] INFO - FastDFS v5.12, base_path=/home/dfs, run_by_group=, run_by_user=, connect_timeout=10s, network_timeout=60s, port=22122, bind_addr=, max_connections=1024, accept_threads=1, work_threads=4, min_buff_size=8,192, max_buff_size=131,072, store_lookup=2, store_group=, store_server=0, store_path=0, reserved_storage_space=10.00%, download_server=0, allow_ip_count=-1, sync_log_buff_interval=10s, check_active_interval=120s, thread_stack_size=256 KB, storage_ip_changed_auto_adjust=1, storage_sync_file_max_delay=86400s, storage_sync_file_max_time=300s, use_trunk_file=0, slot_min_size=256, slot_max_size=16 MB, trunk_file_size=64 MB, trunk_create_file_advance=0, trunk_create_file_time_base=02:00, trunk_create_file_interval=86400, trunk_create_file_space_threshold=20 GB, trunk_init_check_occupying=0, trunk_init_reload_from_binlog=0, trunk_compress_binlog_min_interval=0, use_storage_id=0, id_type_in_filename=ip, storage_id_count=0, rotate_error_log=0, error_log_rotate_time=00:00, rotate_error_log_size=0, log_file_keep_days=0, store_slave_file_use_link=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s
    [2019-07-25 10:36:55] ERROR - file: sockopt.c, line: 868, bind port 22122 failed, errno: 98, error info: Address already in use.
    [2019-07-25 10:36:55] CRIT - exit abnormally!

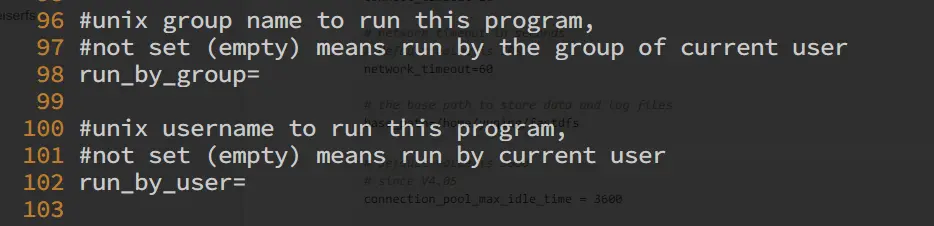

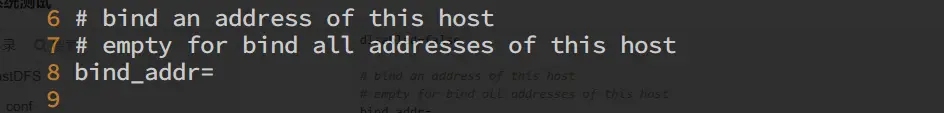

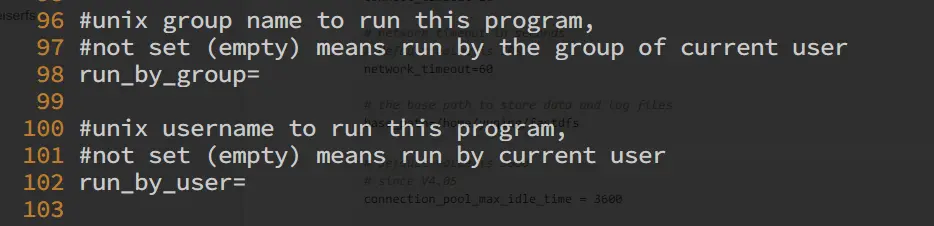

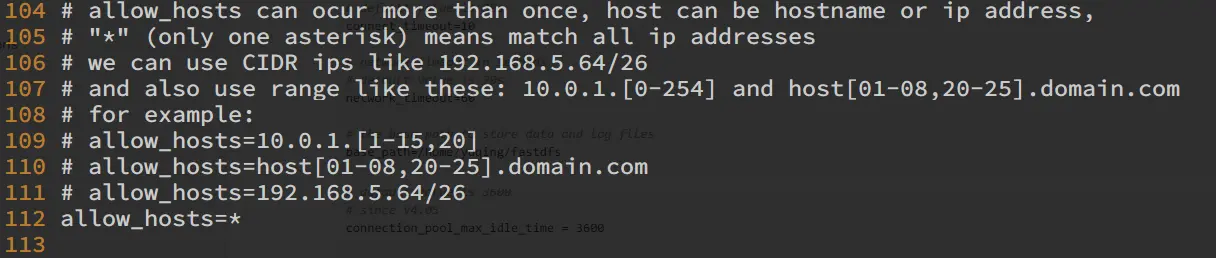

- By default, tracker.conf and storage.conf allow access from all IP addresses. It is recommended to remove allow_hosts=* and replace it with the internal IP addresses of the FastDFS clients.

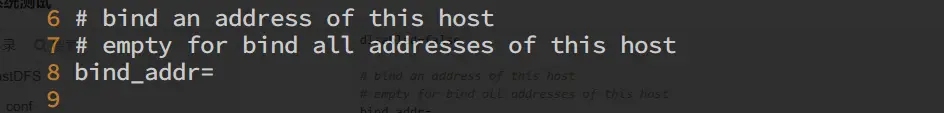

- By default, tracker.conf and storage.conf listen on 0.0.0.0, that is, on every local IP address. It is recommended to change this to the internal IP address of the FastDFS server.

- By default the process runs as the current user and the current user's group. It is recommended to run it as a dedicated least-privileged user.
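As a rough sketch, these three recommendations could be applied to a copy of the configuration with a small helper. `conf_set` is hypothetical; allow_hosts, bind_addr, run_by_user and run_by_group are the option names that appear in this article's text and tracker startup log, while the addresses and the fdfs account in the usage note are placeholders.

```shell
#!/bin/sh
# Set <key> to <value> in <file>: rewrite existing "key=..." lines, or append one.
# Values containing "|" or "&" are not supported by this simple sed call.
# Usage: conf_set <key> <value> <file>
conf_set() {
    key=$1
    value=$2
    file=$3
    tmp="$file.tmp.$$"
    if grep -q "^[[:space:]]*$key[[:space:]]*=" "$file"; then
        sed "s|^[[:space:]]*$key[[:space:]]*=.*|$key=$value|" "$file" > "$tmp" &&
            cat "$tmp" > "$file"
        rm -f "$tmp"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}
```

For example, `conf_set allow_hosts 10.10.107.231 tracker.conf`, `conf_set bind_addr 10.10.107.230 tracker.conf` and `conf_set run_by_user fdfs tracker.conf` would cover the three points, after which the file should be reviewed by hand before it is copied into the image.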