Prerequisites

Stream was first introduced in JDK1.8, nearly 8 years ago (JDK1.8 was released at the end of 2013), and the introduction of Stream greatly simplified some development scenarios, but on the other hand, it may have reduced the readability of the code (it is true that many people say that Stream will reduce the readability of the code, but in my opinion, the readability of the code is improved after proficient use). (I think the readability of the code has been improved after using it skillfully). This article will spend a huge amount of space to analyze in detail the underlying implementation principle of Stream, the reference source code is the source code of JDK11, other versions of the JDK may not apply to this article in the source code display and related examples.

This article has taken a lot of time and effort to sort out and write, and I hope it will help the readers of this article

How Stream is forward compatible

Stream is introduced in JDK1.8, such as the need for JDK1.7 or previous code can also run in JDK1.8 or above, then the introduction of Stream must not have been released in the original interface methods to modify, otherwise it will certainly be due to compatibility issues that lead to the old version of the interface implementation can not run in the new version (method signature exceptions), guess is based on this The problem is based on the introduction of the interface default method, that is, the default keyword. A look at the source code shows that ArrayList’s superclasses Collection and Iterable have added several default methods.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

|

// java.util.Collection部分源码

public interface Collection<E> extends Iterable<E> {

// 省略其他代码

@Override

default Spliterator<E> spliterator() {

return Spliterators.spliterator(this, 0);

}

default Stream<E> stream() {

return StreamSupport.stream(spliterator(), false);

}

default Stream<E> parallelStream() {

return StreamSupport.stream(spliterator(), true);

}

}

// java.lang.Iterable部分源码

public interface Iterable<T> {

// 省略其他代码

default void forEach(Consumer<? super T> action) {

Objects.requireNonNull(action);

for (T t : this) {

action.accept(t);

}

}

default Spliterator<T> spliterator() {

return Spliterators.spliteratorUnknownSize(iterator(), 0);

}

}

|

From intuition, these new methods should be the key methods implemented in Stream (later will confirm that this is not intuition, but the result of viewing the source code). Interface default methods are consistent in use with the instance methods, in the implementation can be written directly in the interface methods method body, a bit of static methods, but subclasses can override its implementation (that is, the interface default methods in this interface implementation is a bit like static methods, can be overridden by subclasses, consistent in use with the instance methods). This implementation could be a breakthrough or a compromise, but both the compromise and the breakthrough achieve forward compatibility.

1

2

3

4

5

6

7

8

9

10

11

12

13

|

// JDK1.7中的java.lang.Iterable

public interface Iterable<T> {

Iterator<T> iterator();

}

// JDK1.7中的Iterable实现

public MyIterable<Long> implements Iterable<Long>{

public Iterator<Long> iterator(){

....

}

}

|

As above, MyIterable is defined in JDK1.7, if the class runs in JDK1.8, then calling its forEach() and spliterator() methods in its example is equivalent to directly calling the default methods forEach() and spliterator() of the interface in Iterable in JDK1.8. Of course, limited by the JDK version, here can only ensure that the compilation passed, the old function is used normally, and can not use the JDK1.7 in the Stream-related functions or use the default method keyword. To sum up so much, is to explain why the code developed and compiled using JDK7 can run in the JDK8 environment.

Splittable Iterator Spliterator

The cornerstone of Stream implementation is Spliterator, which stands for “splitable iterator” and is used to iterate over elements in a specified data source (e.g., array, collection, or IO Channel). It is designed with serial and parallel scenarios in mind. As mentioned in the previous section, Collection has a default interface method spliterator(), which generates a Spliterator instance, meaning that all collection subclasses have the ability to create Spliterator instances. The Stream implementation is very similar in design to the ChannelHandlerContext in Netty, which is essentially a chain table, and the Spliterator is the Head node of this chain (the Spliterator instance is the head node of a stream instance, which will be expanded later when the specific source code is analyzed).

Spliterator interface methods

Moving on to the methods defined by the Spliterator interface.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

|

public interface Spliterator<T> {

// 暂时省略其他代码

boolean tryAdvance(Consumer<? super T> action);

default void forEachRemaining(Consumer<? super T> action) {

do { } while (tryAdvance(action));

}

Spliterator<T> trySplit();

long estimateSize();

default long getExactSizeIfKnown() {

return (characteristics() & SIZED) == 0 ? -1L : estimateSize();

}

int characteristics();

default boolean hasCharacteristics(int characteristics) {

return (characteristics() & characteristics) == characteristics;

}

default Comparator<? super T> getComparator() {

throw new IllegalStateException();

}

// 暂时省略其他代码

}

|

tryAdvance

- Method signature:

boolean tryAdvance(Consumer<? super T> action)

- Function: If the Spliterator has the ORDERED feature enabled, the action callback passed in is executed for one of the elements and returns true, otherwise it returns false. if the Spliterator has the ORDERED feature enabled, it will process the next element in order (where the order value can be analogous to the subscript of the container array elements in an ArrayList. Adding new elements to an ArrayList is naturally ordered, with subscripts incrementing from zero) to the next element

- Example.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

public static void main(String[] args) throws Exception {

List<Integer> list = new ArrayList<>();

list.add(2);

list.add(1);

list.add(3);

Spliterator<Integer> spliterator = list.stream().spliterator();

final AtomicInteger round = new AtomicInteger(1);

final AtomicInteger loop = new AtomicInteger(1);

while (spliterator.tryAdvance(num -> System.out.printf("第%d轮回调Action,值:%d\n", round.getAndIncrement(), num))) {

System.out.printf("第%d轮循环\n", loop.getAndIncrement());

}

}

// 控制台输出

第1轮回调Action,值:2

第1轮循环

第2轮回调Action,值:1

第2轮循环

第3轮回调Action,值:3

第3轮循环

|

forEachRemaining

- Method Signature:

default void forEachRemaining(Consumer<? super T> action)

- Function: If there are remaining elements in the Spliterator, the incoming action callback is executed in the current thread for all remaining elements in it. If the Spliterator has the ORDERED feature enabled, all remaining elements are processed in order. This is the default method of the interface, and the method body is rather brutal, directly a dead loop wrapped around the tryAdvance() method until false exits the loop

- Example.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

public static void main(String[] args) {

List<Integer> list = new ArrayList<>();

list.add(2);

list.add(1);

list.add(3);

Spliterator<Integer> spliterator = list.stream().spliterator();

final AtomicInteger round = new AtomicInteger(1);

spliterator.forEachRemaining(num -> System.out.printf("第%d轮回调Action,值:%d\n", round.getAndIncrement(), num));

}

// 控制台输出

第1轮回调Action,值:2

第2轮回调Action,值:1

第3轮回调Action,值:3

|

trySplit

- Method Signature:

Spliterator<T> trySplit()

- Function: If the current Spliterator is partitionable, then this method will return a new Spliterator instance, and the elements inside this new Spliterator instance will not be overwritten by the elements in the current Spliterator instance (this is a direct translation of the API comment, what it actually means is: the current Spliterator instance X is splittable, trySplit() method will split X to generate a new Spliterator instance Y, and the elements (range) contained in the original X will be shrunk, similar to X = [a,b,c,d] => X = [a,b], Y = [c,d]; if the current Spliterator instance X is (if the current Spliterator instance X is indivisible, this method will return NULL), the specific splitting algorithm is determined by the implementation class

- Example.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

public static void main(String[] args) throws Exception {

List<Integer> list = new ArrayList<>();

list.add(2);

list.add(3);

list.add(4);

list.add(1);

Spliterator<Integer> first = list.stream().spliterator();

Spliterator<Integer> second = first.trySplit();

first.forEachRemaining(num -> {

System.out.printf("first spliterator item: %d\n", num);

});

second.forEachRemaining(num -> {

System.out.printf("second spliterator item: %d\n", num);

});

}

// 控制台输出

first spliterator item: 4

first spliterator item: 1

second spliterator item: 2

second spliterator item: 3

|

estimateSize

- Method Signature:

long estimateSize()

- Function: Returns an estimate of the total number of elements to be traversed by the forEachRemaining() method, or Long.MAX_VALUE if the number of samples is infinite, too expensive to compute, or unknown.

- Example.

1

2

3

4

5

6

7

8

9

10

11

12

|

public static void main(String[] args) throws Exception {

List<Integer> list = new ArrayList<>();

list.add(2);

list.add(3);

list.add(4);

list.add(1);

Spliterator<Integer> spliterator = list.stream().spliterator();

System.out.println(spliterator.estimateSize());

}

// 控制台输出

4

|

getExactSizeIfKnown

- Method Signature:

default long getExactSizeIfKnown()

- Function: If the current Spliterator has the SIZED feature (more on the feature below), then call the optimizeSize() method directly, otherwise it returns -1.

- Example.

1

2

3

4

5

6

7

8

9

10

11

12

|

public static void main(String[] args) throws Exception {

List<Integer> list = new ArrayList<>();

list.add(2);

list.add(3);

list.add(4);

list.add(1);

Spliterator<Integer> spliterator = list.stream().spliterator();

System.out.println(spliterator.getExactSizeIfKnown());

}

// 控制台输出

4

|

int characteristics()

- Method Signature:

long estimateSize()

- Function: The current Spliterator has features (collections) that use bitwise operations and are stored in 32-bit integers (more on features below)

hasCharacteristics

- Method Signature:

default boolean hasCharacteristics(int characteristics)

- Function: Determine if the current Spliterator has the incoming features

getComparator

- Method Signature:

default Comparator<? super T> getComparator()

- Function: If the current Spliterator has the SORTED feature, a Comparator instance is returned; if the elements in the Spliterator are naturally ordered (e.g. the elements implement the Comparable interface), NULL is returned; otherwise, an IllegalStateException is thrown directly.

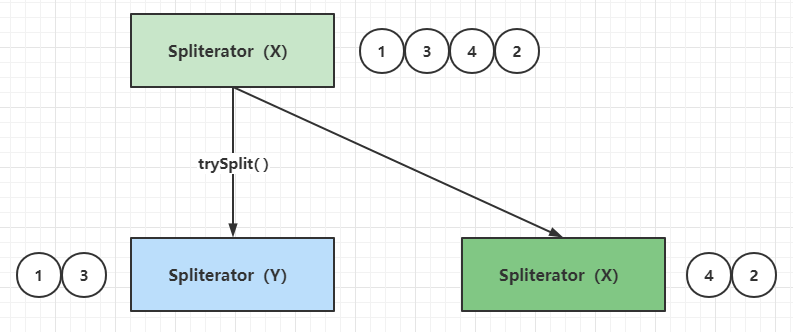

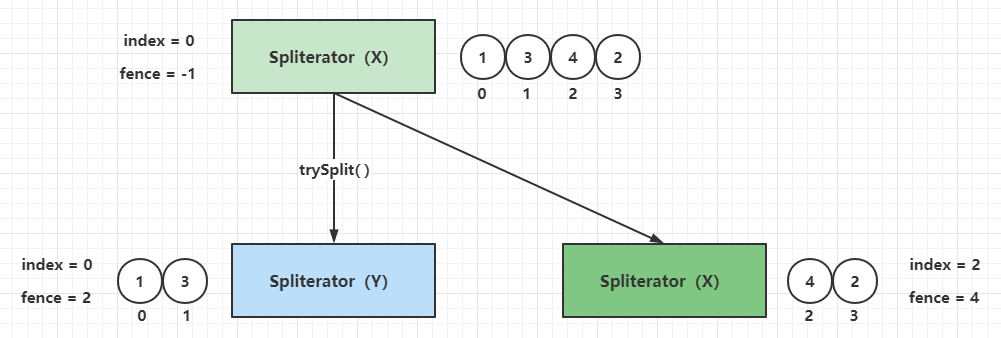

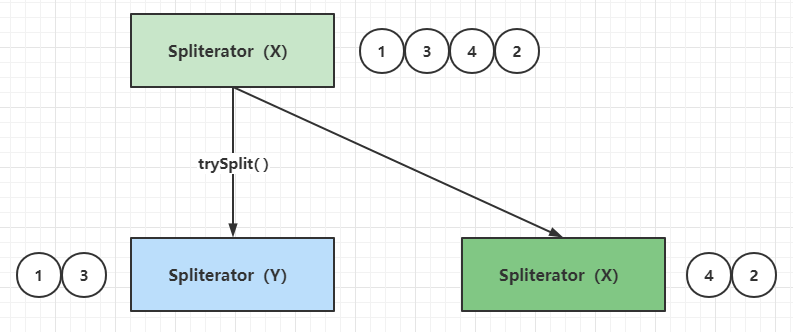

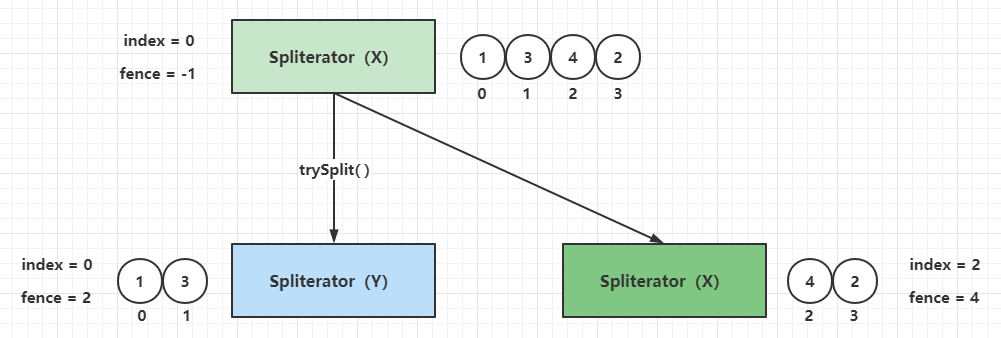

Spliterator Self-Splitting

Spliterator#trySplit() can split an existing Spliterator instance into two Spliterator instances, which I refer to here as Spliterator self splitting, schematically as follows.

The split here can be implemented in two ways.

- Physical Partitioning: For ArrayList, copying the underlying array and partitioning it is equivalent to X = [1,3,4,2] => X = [4,2], Y = [1,3] using the above example, which is obviously not very reasonable when combined with the array of elements in the ArrayList itself, which is equivalent to storing an extra copy of the data.

- Logical partitioning: For ArrayList, since the array of element containers is naturally ordered, you can use the index (subscript) of the array for partitioning, using the above example is equivalent to X = index table [0,1,2,3] => X = index table [2,3], Y = index table [0,1], this way is to share the underlying array of containers, only the index of the elements for partitioning, the implementation is relatively simple and relatively reasonable

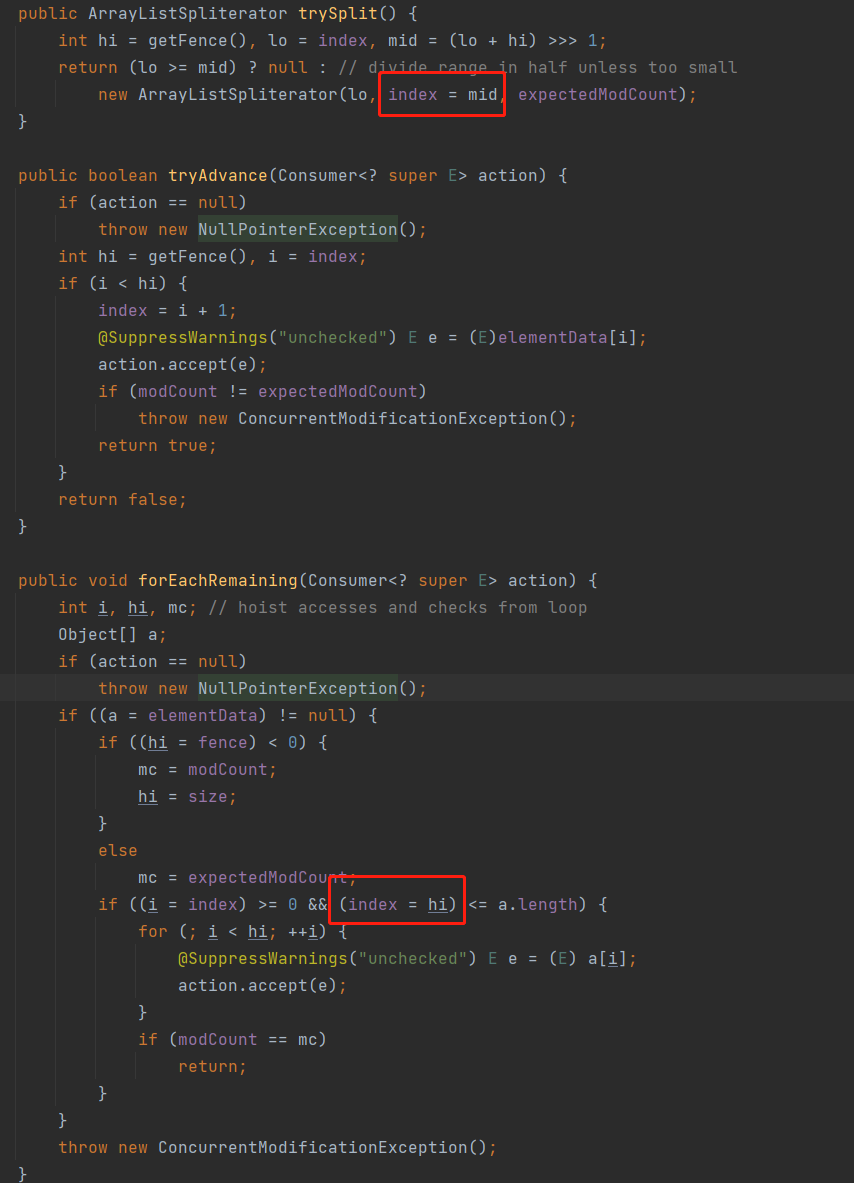

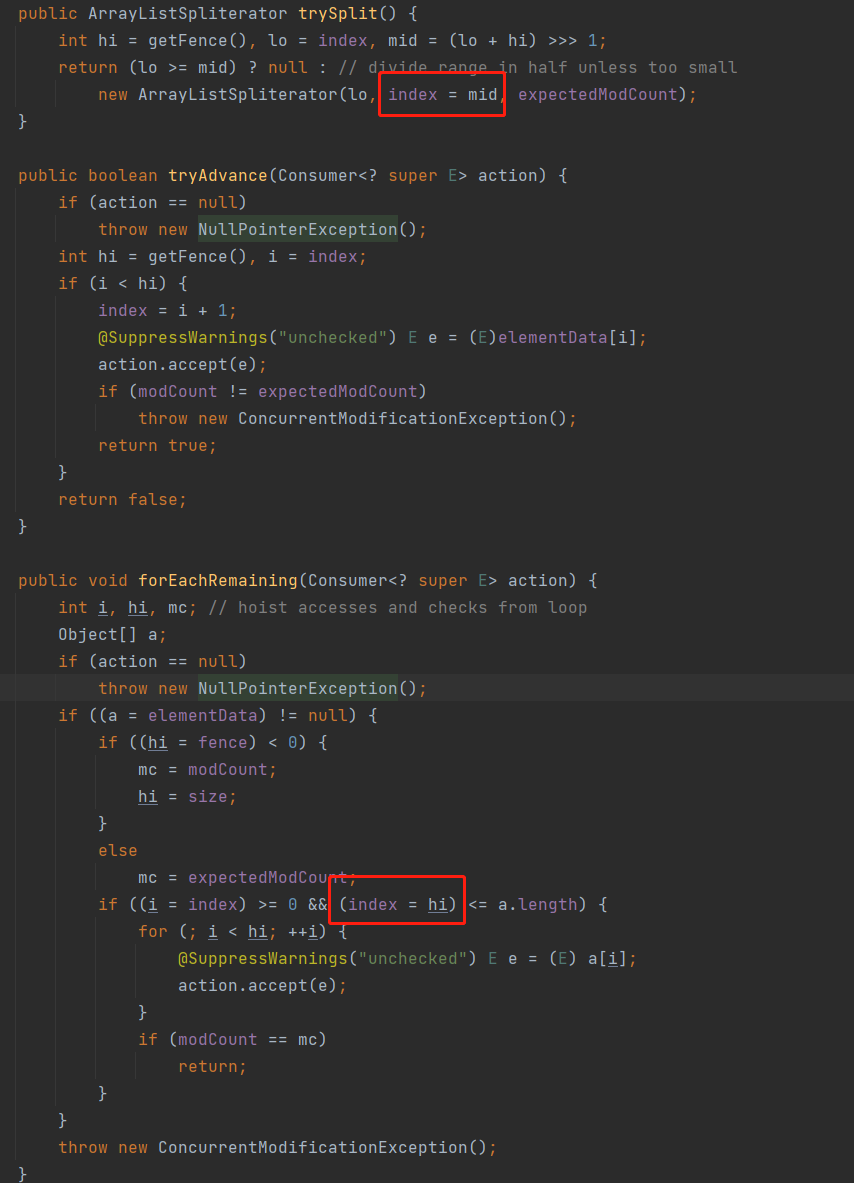

See the source code of ArrayListSpliterator to analyze the implementation of its segmentation algorithm.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

|

// ArrayList#spliterator()

public Spliterator<E> spliterator() {

return new ArrayListSpliterator(0, -1, 0);

}

// ArrayList中内部类ArrayListSpliterator

final class ArrayListSpliterator implements Spliterator<E> {

// 当前的处理的元素索引值,其实是剩余元素的下边界值(包含),在tryAdvance()或者trySplit()方法中被修改,一般初始值为0

private int index;

// 栅栏,其实是元素索引值的上边界值(不包含),一般初始化的时候为-1,使用时具体值为元素索引值上边界加1

private int fence;

// 预期的修改次数,一般初始化值等于modCount

private int expectedModCount;

ArrayListSpliterator(int origin, int fence, int expectedModCount) {

this.index = origin;

this.fence = fence;

this.expectedModCount = expectedModCount;

}

// 获取元素索引值的上边界值,如果小于0,则把hi和fence都赋值为(ArrayList中的)size,expectedModCount赋值为(ArrayList中的)modCount,返回上边界值

// 这里注意if条件中有赋值语句hi = fence,也就是此方法调用过程中临时变量hi总是重新赋值为fence,fence是ArrayListSpliterator实例中的成员属性

private int getFence() {

int hi;

if ((hi = fence) < 0) {

expectedModCount = modCount;

hi = fence = size;

}

return hi;

}

// Spliterator自分割,这里采用了二分法

public ArrayListSpliterator trySplit() {

// hi等于当前ArrayListSpliterator实例中的fence变量,相当于获取剩余元素的上边界值

// lo等于当前ArrayListSpliterator实例中的index变量,相当于获取剩余元素的下边界值

// mid = (lo + hi) >>> 1,这里的无符号右移动1位运算相当于(lo + hi)/2

int hi = getFence(), lo = index, mid = (lo + hi) >>> 1;

// 当lo >= mid的时候为不可分割,返回NULL,否则,以index = lo,fence = mid和expectedModCount = expectedModCount创建一个新的ArrayListSpliterator

// 这里有个细节之处,在新的ArrayListSpliterator构造参数中,当前的index被重新赋值为index = mid,这一点容易看漏,老程序员都喜欢做这样的赋值简化

// lo >= mid返回NULL的时候,不会创建新的ArrayListSpliterator,也不会修改当前ArrayListSpliterator中的参数

return (lo >= mid) ? null : new ArrayListSpliterator(lo, index = mid, expectedModCount);

}

// tryAdvance实现

public boolean tryAdvance(Consumer<? super E> action) {

if (action == null)

throw new NullPointerException();

// 获取迭代的上下边界

int hi = getFence(), i = index;

// 由于前面分析下边界是包含关系,上边界是非包含关系,所以这里要i < hi而不是i <= hi

if (i < hi) {

index = i + 1;

// 这里的elementData来自ArrayList中,也就是前文经常提到的元素数组容器,这里是直接通过元素索引访问容器中的数据

@SuppressWarnings("unchecked") E e = (E)elementData[i];

// 对传入的Action进行回调

action.accept(e);

// 并发修改异常判断

if (modCount != expectedModCount)

throw new ConcurrentModificationException();

return true;

}

return false;

}

// forEachRemaining实现,这里没有采用默认实现,而是完全覆盖实现一个新方法

public void forEachRemaining(Consumer<? super E> action) {

// 这里会新建所需的中间变量,i为index的中间变量,hi为fence的中间变量,mc为expectedModCount的中间变量

int i, hi, mc;

Object[] a;

if (action == null)

throw new NullPointerException();

// 判断容器数组存在性

if ((a = elementData) != null) {

// hi、fence和mc初始化

if ((hi = fence) < 0) {

mc = modCount;

hi = size;

}

else

mc = expectedModCount;

// 这里就是先做参数合法性校验,再遍历临时数组容器a中中[i,hi)的剩余元素对传入的Action进行回调

// 这里注意有一处隐蔽的赋值(index = hi),下界被赋值为上界,意味着每个ArrayListSpliterator实例只能调用一次forEachRemaining()方法

if ((i = index) >= 0 && (index = hi) <= a.length) {

for (; i < hi; ++i) {

@SuppressWarnings("unchecked") E e = (E) a[i];

action.accept(e);

}

// 这里校验ArrayList的modCount和mc是否一致,理论上在forEachRemaining()遍历期间,不能对数组容器进行元素的新增或者移除,一旦发生modCount更变会抛出异常

if (modCount == mc)

return;

}

}

throw new ConcurrentModificationException();

}

// 获取剩余元素估计值,就是用剩余元素索引上边界直接减去下边界

public long estimateSize() {

return getFence() - index;

}

// 具备ORDERED、SIZED和SUBSIZED特性

public int characteristics() {

return Spliterator.ORDERED | Spliterator.SIZED | Spliterator.SUBSIZED;

}

}

|

When reading the source code be sure to pay attention to the older generation of programmers sometimes use a more covert assignment, the author believes that it needs to be expanded.

The first red circle position in the construction of the new ArrayListSpliterator, the index property of the current ArrayListSpliterator is also modified, the process is as follows.

In the second red circle, if the forEachRemaining() method is called with parameter checking and the index (lower bound value) is assigned to hi (upper bound value) in the if branch, then the forEachRemaining() method of an ArrayListSpliterator instance must perform the traversal operation only once. once. This can be verified as follows.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

|

public static void main(String[] args) {

List<Integer> list = new ArrayList<>();

list.add(2);

list.add(1);

list.add(3);

Spliterator<Integer> spliterator = list.stream().spliterator();

final AtomicInteger round = new AtomicInteger(1);

spliterator.forEachRemaining(num -> System.out.printf("[第一次遍历forEachRemaining]第%d轮回调Action,值:%d\n", round.getAndIncrement(), num));

round.set(1);

spliterator.forEachRemaining(num -> System.out.printf("[第二次遍历forEachRemaining]第%d轮回调Action,值:%d\n", round.getAndIncrement(), num));

}

// 控制台输出

[第一次遍历forEachRemaining]第1轮回调Action,值:2

[第一次遍历forEachRemaining]第2轮回调Action,值:1

[第一次遍历forEachRemaining]第3轮回调Action,值:3

|

The following points can be confirmed for the implementation of ArrayListSpliterator.

- The forEachRemaining() method in a new instance of ArrayListSpliterator can only be called once

- The bound of the forEachRemaining() method in the ArrayListSpliterator instance to traverse the elements is [index, fence)

- When ArrayListSpliterator splits itself, the new ArrayListSpliterator is responsible for handling the segments with small subscripts (analogous to fork’s left branch), while the original ArrayListSpliterator is responsible for handling the segments with large subscripts (analogous to fork’s right branch).

- The number of remaining elements in the segment is an exact value, as provided by the estimSize() method of the ArrayListSpliterator.

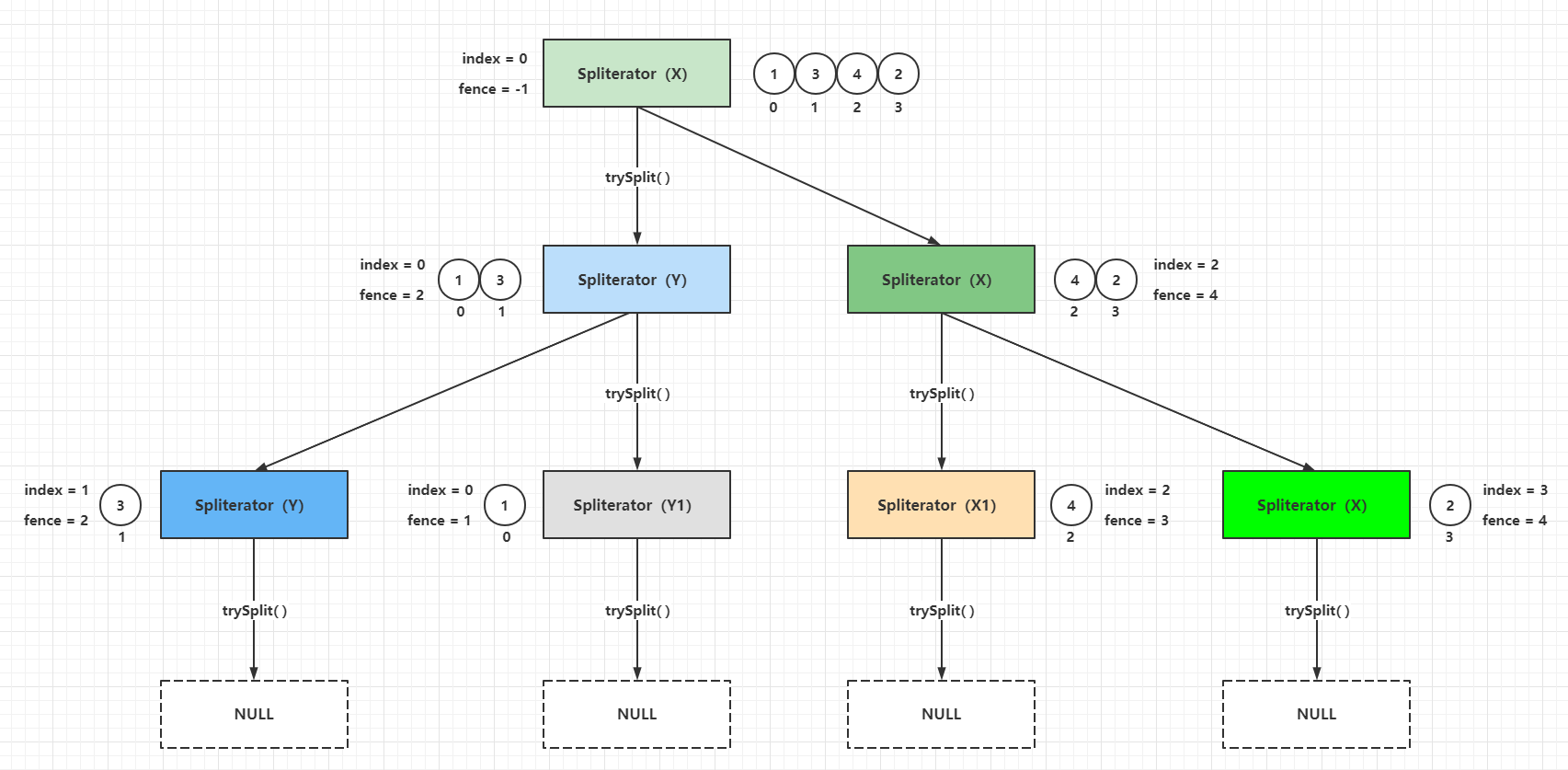

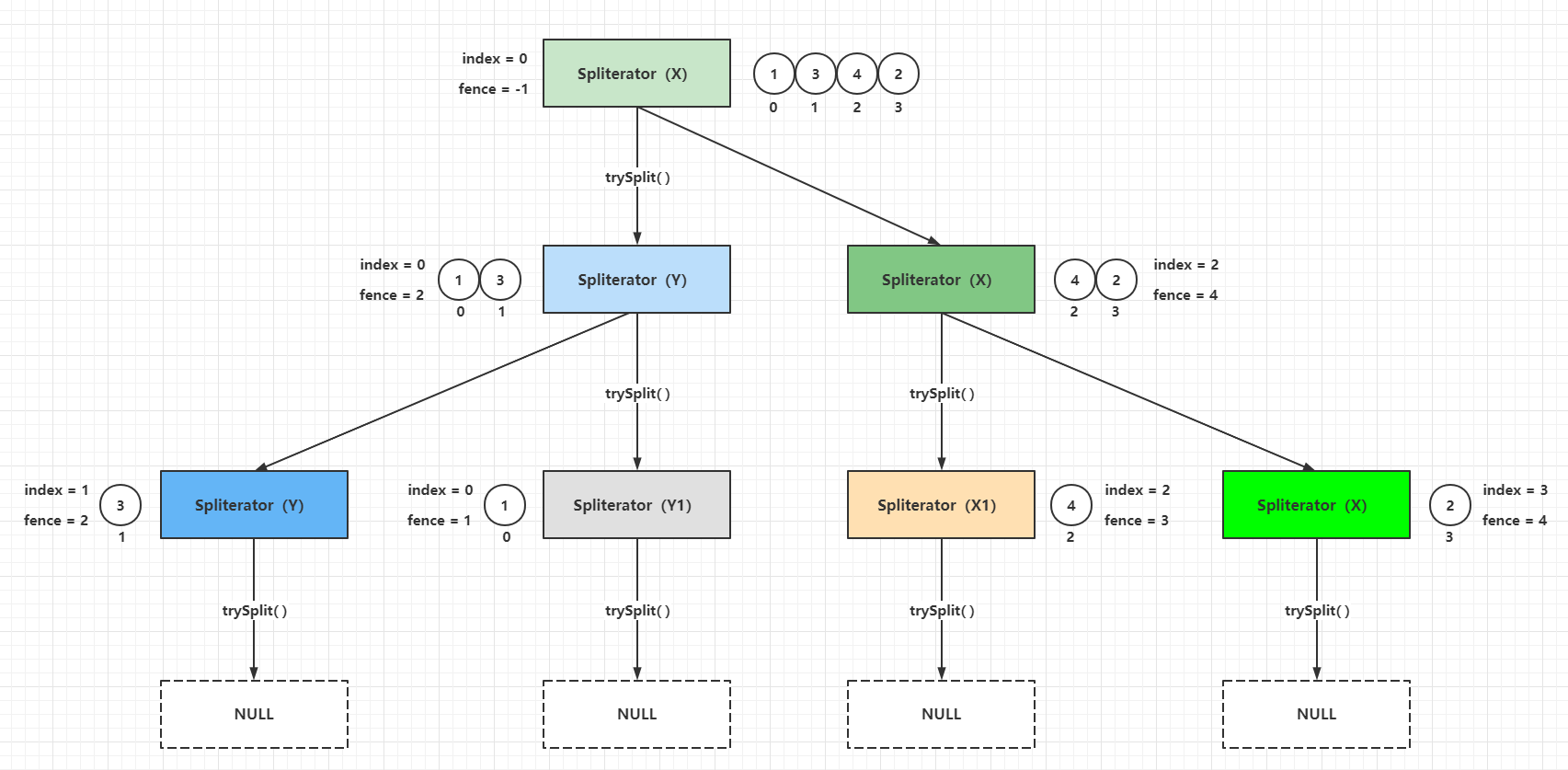

If the above example is further divided, the following process can be obtained.

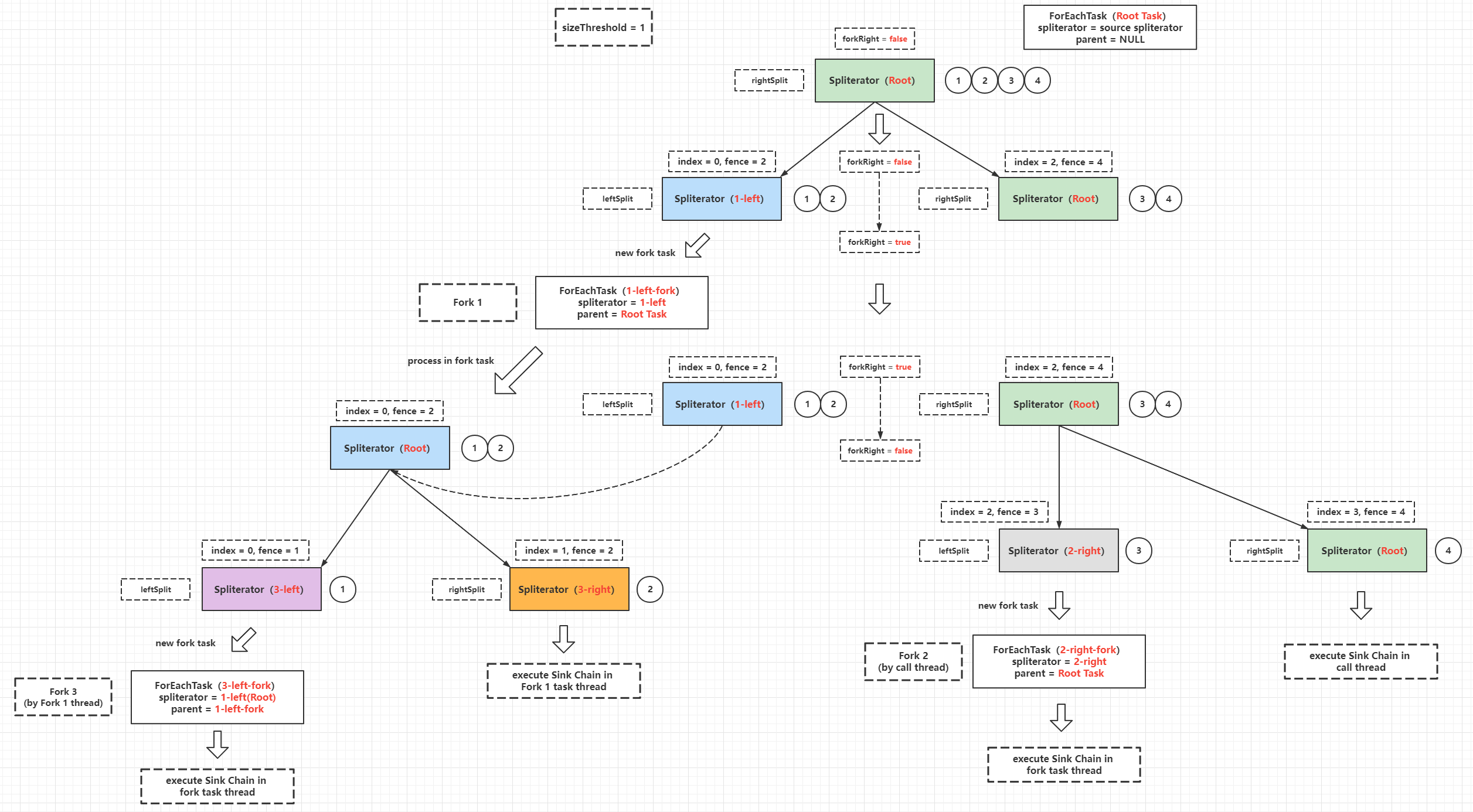

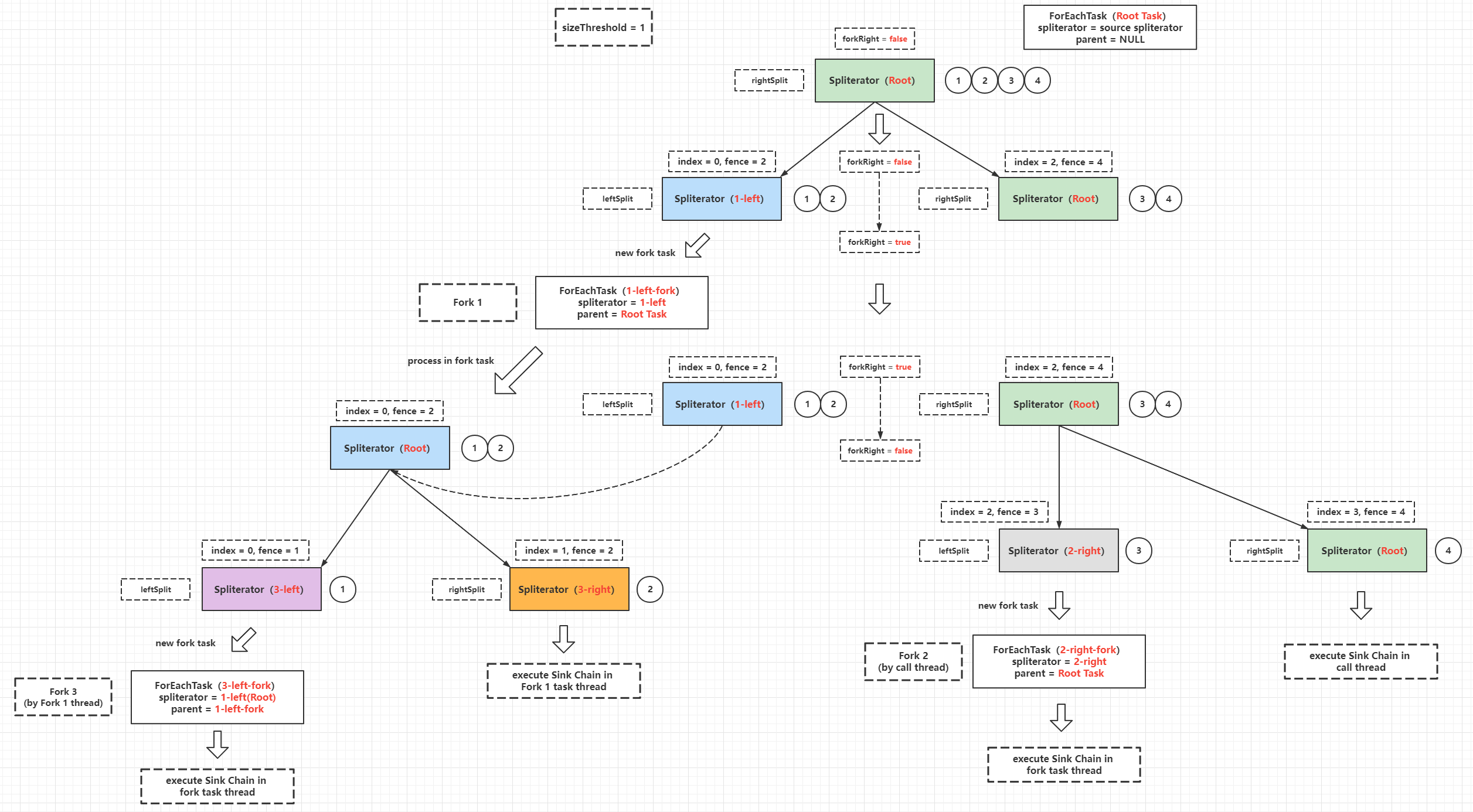

Spliterator self splitting is the basis of parallel stream implementation, the parallel stream computation process is actually fork-join processing, the implementation of trySplit() method determines the granularity of fork tasks, each fork task is concurrently safe when computing, this is guaranteed by thread closure (thread stack closure), each fork task computation The final result is then joined by a single thread to get the correct result. The following example is to find the sum of integers 1 to 100.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

|

public class ConcurrentSplitCalculateSum {

private static class ForkTask extends Thread {

private int result = 0;

private final Spliterator<Integer> spliterator;

private final CountDownLatch latch;

public ForkTask(Spliterator<Integer> spliterator,

CountDownLatch latch) {

this.spliterator = spliterator;

this.latch = latch;

}

@Override

public void run() {

long start = System.currentTimeMillis();

spliterator.forEachRemaining(num -> result = result + num);

long end = System.currentTimeMillis();

System.out.printf("线程[%s]完成计算任务,当前段计算结果:%d,耗时:%d ms\n",

Thread.currentThread().getName(), result, end - start);

latch.countDown();

}

public int result() {

return result;

}

}

private static int join(List<ForkTask> tasks) {

int result = 0;

for (ForkTask task : tasks) {

result = result + task.result();

}

return result;

}

private static final int THREAD_NUM = 4;

public static void main(String[] args) throws Exception {

List<Integer> source = new ArrayList<>();

for (int i = 1; i < 101; i++) {

source.add(i);

}

Spliterator<Integer> root = source.stream().spliterator();

List<Spliterator<Integer>> spliteratorList = new ArrayList<>();

Spliterator<Integer> x = root.trySplit();

Spliterator<Integer> y = x.trySplit();

Spliterator<Integer> z = root.trySplit();

spliteratorList.add(root);

spliteratorList.add(x);

spliteratorList.add(y);

spliteratorList.add(z);

List<ForkTask> tasks = new ArrayList<>();

CountDownLatch latch = new CountDownLatch(THREAD_NUM);

for (int i = 0; i < THREAD_NUM; i++) {

ForkTask task = new ForkTask(spliteratorList.get(i), latch);

task.setName("fork-task-" + (i + 1));

tasks.add(task);

}

tasks.forEach(Thread::start);

latch.await();

int result = join(tasks);

System.out.println("最终计算结果为:" + result);

}

}

// 控制台输出结果

线程[fork-task-4]完成计算任务,当前段计算结果:1575,耗时:0 ms

线程[fork-task-2]完成计算任务,当前段计算结果:950,耗时:1 ms

线程[fork-task-3]完成计算任务,当前段计算结果:325,耗时:1 ms

线程[fork-task-1]完成计算任务,当前段计算结果:2200,耗时:1 ms

最终计算结果为:5050

|

Of course, the computation of the final parallel stream uses ForkJoinPool and is not executed asynchronously as brute-force as in this example. The implementation of parallel streams will be analyzed in detail below.

Features supported by Spliterator

The characteristics supported by a particular Spliterator instance are determined by the method characteristics(), which returns a 32-bit value that in practice is expanded into a bit array with all characteristics assigned to different bits, and hasCharacteristics(int characteristics) is By inputting the specific characteristics value through the bit operation to determine whether the characteristics exist in characteristics(). The following is an analysis of this technique by simplifying characteristics to byte.

1

2

3

4

5

6

7

|

假设:byte characteristics() => 也就是最多8个位用于表示特性集合,如果每个位只表示一种特性,那么可以总共表示8种特性

特性X:0000 0001

特性Y:0000 0010

以此类推

假设:characteristics = X | Y = 0000 0001 | 0000 0010 = 0000 0011

那么:characteristics & X = 0000 0011 & 0000 0001 = 0000 0001

判断characteristics是否包含X:(characteristics & X) == X

|

The process inferred above is the processing logic of the feature determination method in Spliterator.

1

2

3

4

5

6

7

|

// 返回特性集合

int characteristics();

// 基于位运算判断特性集合中是否存在输入的特性

default boolean hasCharacteristics(int characteristics) {

return (characteristics() & characteristics) == characteristics;

}

|

Here it can be verified.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

public class CharacteristicsCheck {

public static void main(String[] args) {

System.out.printf("是否存在ORDERED特性:%s\n", hasCharacteristics(Spliterator.ORDERED));

System.out.printf("是否存在SIZED特性:%s\n", hasCharacteristics(Spliterator.SIZED));

System.out.printf("是否存在DISTINCT特性:%s\n", hasCharacteristics(Spliterator.DISTINCT));

}

private static int characteristics() {

return Spliterator.ORDERED | Spliterator.SIZED | Spliterator.SORTED;

}

private static boolean hasCharacteristics(int characteristics) {

return (characteristics() & characteristics) == characteristics;

}

}

// 控制台输出

是否存在ORDERED特性:true

是否存在SIZED特性:true

是否存在DISTINCT特性:false

|

There are currently eight features supported by Spliterator, as follows.

| Features |

Hex value |

Binary value |

Function |

| DISTINCT |

0x00000001 |

0000 0000 0000 0001 |

De-weighting, e.g. for each pair of elements (x,y) to be processed, use !x.equals(y) to compare, de-weighting in Spliterator is actually based on Set processing |

| ORDERED |

0x00000010 |

0000 0000 0001 0000 |

The (element) order processing can be understood as trySplit(), tryAdvance() and forEachRemaining() methods guarantee a strict prefix order for all element processing |

| SORTED |

0x00000004 |

0000 0000 0000 0100 |

Sorting, elements are sorted using the Comparator provided by the getComparator() method, and if the SORTED feature is defined, the ORDERED feature must be defined |

| SIZED |

0x00000040 |

0000 0000 0100 0000 |

If this feature is enabled, the exact number of elements will be returned by estimateSize() before Spliterator is split or iterated. |

| NONNULL |

0x00000040 |

0000 0001 0000 0000 |

(element) is not NULL, the data source ensures that the elements Spliterator needs to process cannot be NULL, most commonly used in concurrent containers in collections, queues and Map |

| IMMUTABLE |

0x00000400 |

0000 0100 0000 0000 |

(elements) are immutable, the data source cannot be modified, i.e. elements cannot be added, replaced and removed during processing (updating attributes is allowed) |

| CONCURRENT |

0x00001000 |

0001 0000 0000 0000 |

Modifications (to the element source) are concurrently safe, meaning that multiple threads adding, replacing, or removing elements from the data source are concurrently safe without additional synchronization conditions |

| SUBSIZED |

0x00004000 |

0100 0000 0000 0000 |

The estimated number of Spliterator elements (sub-Spliterator elements), enabling this feature means that all sub-Spliterators split by trySplit() method (the current Spliterator is also a sub-Spliterator after splitting) are enabled with SIZED feature |

A closer look reveals that all features are stored in 32-bit integers, using a 1-bit interval storage strategy, and the mapping of bit subscripts to features is: (0 => DISTINCT), (3 => SORTED), (5 => ORDERED), (7 => SIZED), (9 => NONNULL), (11 => IMMUTABLE), (13 => CONCURRENT), (15 => SUBSIZED)

All the features of the function here only outlines the core definition, there are some small words or special case description limited to space did not add completely, which can refer to the specific source code in the API comments. These features will eventually be converted into StreamOpFlag and then provided to the operation judgment in Stream, as StreamOpFlag will be more complex, the following detailed analysis.

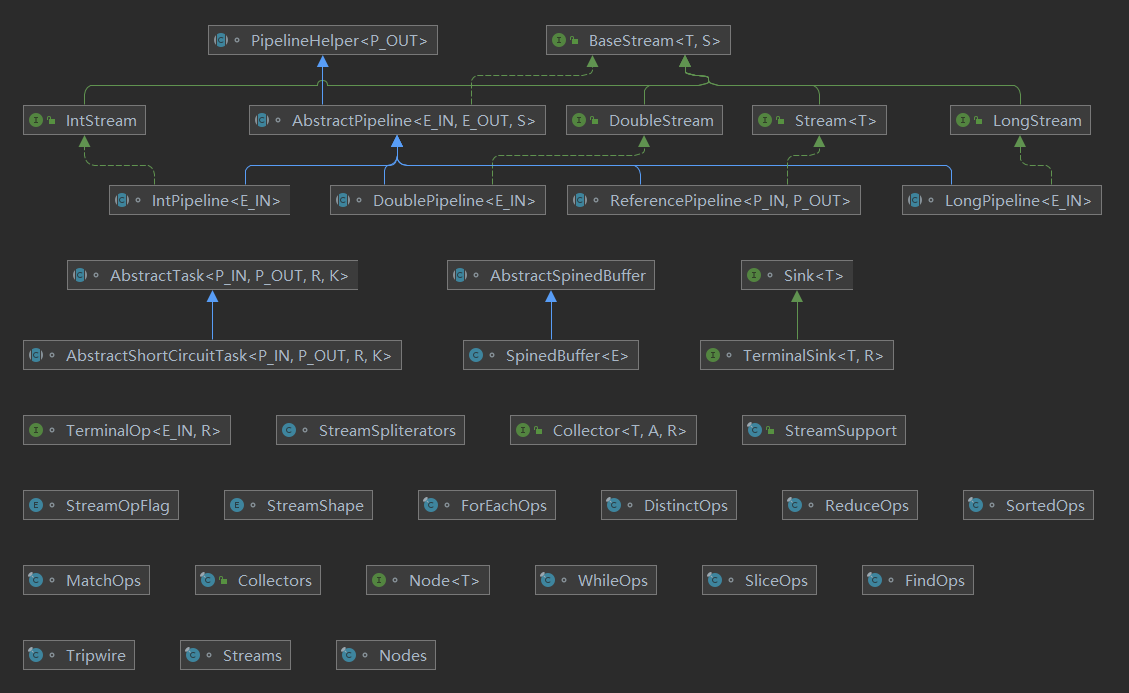

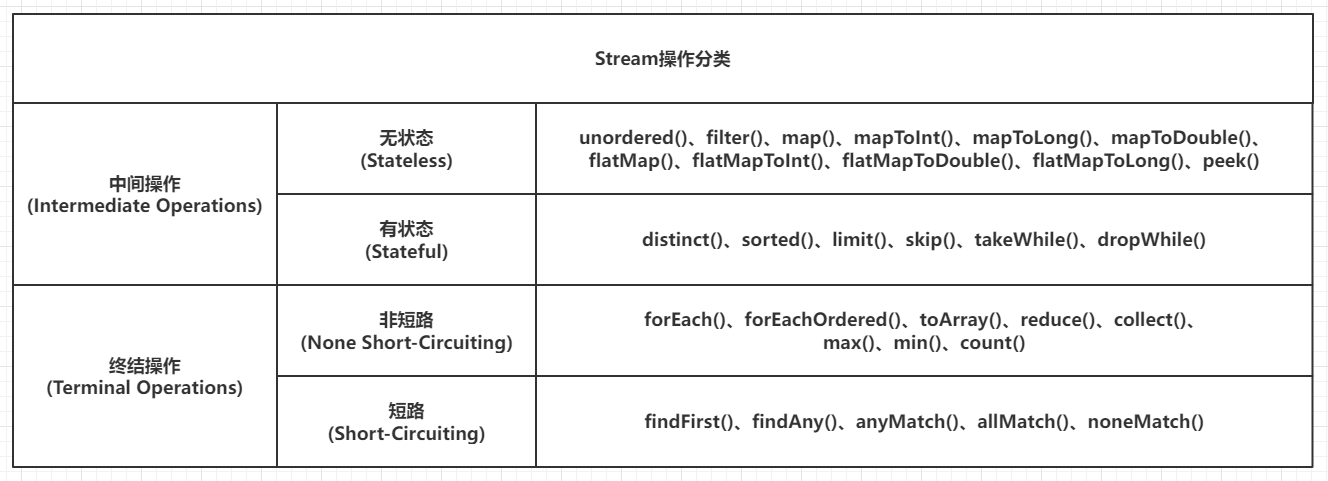

The principle of stream implementation and source code analysis

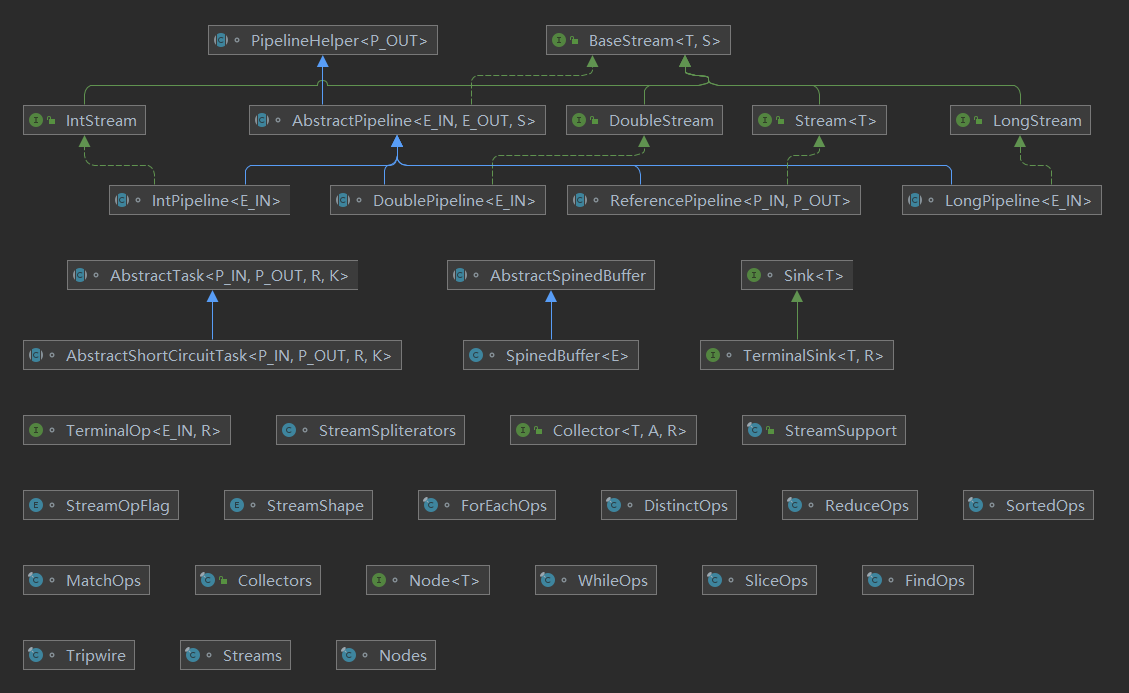

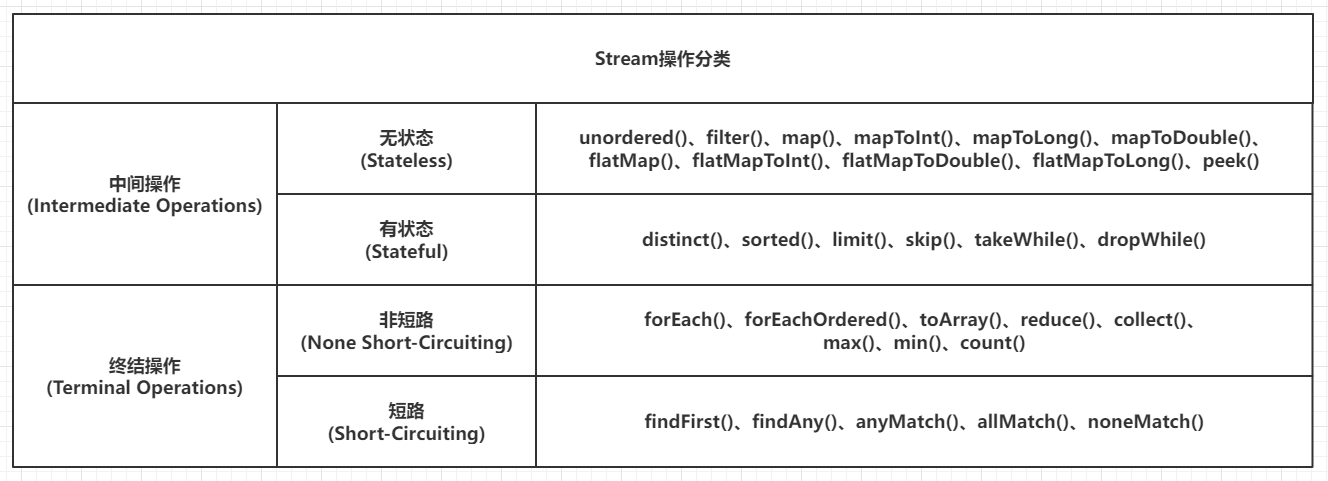

Since the stream implementation is highly abstract engineering code, it can be a bit difficult to read in source code. The whole system involves a large number of interfaces, classes and enumerations, as follows.

The top-level class structure diagram describes the pipeline-related class inheritance relationship of the stream, in which IntStream, LongStream and DoubleStream are special types, respectively, for Integer, Long and Double types, other reference types to build the Pipeline are ReferencePipeline instances, so I believe that the ReferencePipeline (reference type pipeline) is the core data structure of the stream, the following will be based on the implementation of the ReferencePipeline to do in-depth analysis.

StreamOpFlag source code analysis

Note that this subsection is very brain-burning, it is also possible that the author’s bit operation is not very skilled, most of the time consumed in this article in this subsection

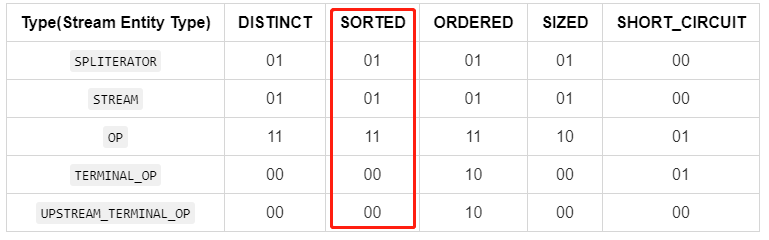

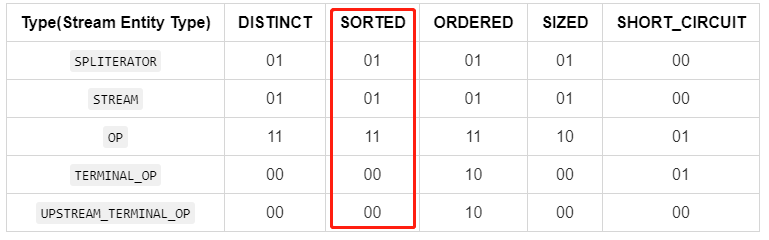

StreamOpFlag is an enumeration that functions to store Flags corresponding to characteristics of streams and operations (hereafter referred to as Stream Flags) that are provided to the Stream framework for control, customization and Stream flags can be used to characterize a number of different entities associated with a stream, including the source of the stream, the intermediate operation (Op) of the stream, and the terminal operation (Op) of the stream. However, not all Stream flags have meaning for all Stream entities, which are currently mapped to flags as follows.

| Type(Stream Entity Type) |

DISTINCT |

SORTED |

ORDERED |

SIZED |

SHORT_CIRCUIT |

| SPLITERATOR |

01 |

01 |

01 |

01 |

00 |

| STREAM |

01 |

01 |

01 |

01 |

00 |

| OP |

11 |

11 |

11 |

10 |

01 |

| TERMINAL_OP |

00 |

00 |

10 |

00 |

01 |

| UPSTREAM_TERMINAL_OP |

00 |

00 |

10 |

00 |

00 |

Among them.

- 01: indicates set/inject

- 10: indicates clear

- 11: indicates reserved

- 00: indicates the initialization value (default fill value), which is a key point, the 0 value indicates that it will never be a certain type of flag

The top comment of StreamOpFlag also contains a table as follows.

| - |

DISTINCT |

SORTED |

ORDERED |

SIZED |

SHORT_CIRCUIT |

| Stream source |

Y |

Y |

Y |

Y |

N |

| Intermediate operation |

PCI |

PCI |

PCI |

PC |

PI |

| Terminal operation |

N |

N |

PC |

N |

PI |

Tag -> Meaning.

- Y: Allowed

- N: illegal

- P: Reserved

- C: Clear

- I: Inject

- Combination PCI: can be retained, cleared or injected

- Combination PC: can be retained or cleared

- Combination PI: can be retained or injected

The two tables actually describe the same conclusion and can be compared and understood, but the final implementation refers to the definition of the first table. Note one thing: preserved(P) here means preserved. If a flag of a Stream entity is assigned to preserved, it means that the entity can use the characteristics represented by this flag. For example, the DISTINCT, SORTED and ORDERED of the OP in the first table of this subsection are all assigned the value 11 (reserved), which means that entities of type OP are allowed to use the de-duplication, natural ordering and sequential processing features. Returning to the source code section, first look at the core properties and constructors of StreamOpFlag.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

|

enum StreamOpFlag {

// 暂时忽略其他代码

// 类型枚举,Stream相关实体类型

enum Type {

// SPLITERATOR类型,关联所有和Spliterator相关的特性

SPLITERATOR,

// STREAM类型,关联所有和Stream相关的标志

STREAM,

// STREAM类型,关联所有和Stream中间操作相关的标志

OP,

// TERMINAL_OP类型,关联所有和Stream终结操作相关的标志

TERMINAL_OP,

// UPSTREAM_TERMINAL_OP类型,关联所有在最后一个有状态操作边界上游传播的终止操作标志

// 这个类型的意义直译有点拗口,不过实际上在JDK11源码中,这个类型没有被流相关功能引用,暂时可以忽略

UPSTREAM_TERMINAL_OP

}

// 设置/注入标志的bit模式,二进制数0001,十进制数1

private static final int SET_BITS = 0b01;

// 清除标志的bit模式,二进制数0010,十进制数2

private static final int CLEAR_BITS = 0b10;

// 保留标志的bit模式,二进制数0011,十进制数3

private static final int PRESERVE_BITS = 0b11;

// 掩码建造器工厂方法,注意这个方法用于实例化MaskBuilder

private static MaskBuilder set(Type t) {

return new MaskBuilder(new EnumMap<>(Type.class)).set(t);

}

// 私有静态内部类,掩码建造器,里面的map由上面的set(Type t)方法得知是EnumMap实例

private static class MaskBuilder {

// Type -> SET_BITS|CLEAR_BITS|PRESERVE_BITS|0

final Map<Type, Integer> map;

MaskBuilder(Map<Type, Integer> map) {

this.map = map;

}

// 设置类型和对应的掩码

MaskBuilder mask(Type t, Integer i) {

map.put(t, i);

return this;

}

// 对类型添加/inject

MaskBuilder set(Type t) {

return mask(t, SET_BITS);

}

MaskBuilder clear(Type t) {

return mask(t, CLEAR_BITS);

}

MaskBuilder setAndClear(Type t) {

return mask(t, PRESERVE_BITS);

}

// 这里的build方法对于类型中的NULL掩码填充为0,然后把map返回

Map<Type, Integer> build() {

for (Type t : Type.values()) {

map.putIfAbsent(t, 0b00);

}

return map;

}

}

// 类型->掩码映射

private final Map<Type, Integer> maskTable;

// bit的起始偏移量,控制下面set、clear和preserve的起始偏移量

private final int bitPosition;

// set/inject的bit set(map),其实准确来说应该是一个表示set/inject的bit map

private final int set;

// clear的bit set(map),其实准确来说应该是一个表示clear的bit map

private final int clear;

// preserve的bit set(map),其实准确来说应该是一个表示preserve的bit map

private final int preserve;

private StreamOpFlag(int position, MaskBuilder maskBuilder) {

// 这里会基于MaskBuilder初始化内部的EnumMap

this.maskTable = maskBuilder.build();

// Two bits per flag <= 这里会把入参position放大一倍

position *= 2;

this.bitPosition = position;

this.set = SET_BITS << position; // 设置/注入标志的bit模式左移2倍position

this.clear = CLEAR_BITS << position; // 清除标志的bit模式左移2倍position

this.preserve = PRESERVE_BITS << position; // 保留标志的bit模式左移2倍position

}

// 省略中间一些方法

// 下面这些静态变量就是直接返回标志对应的set/injec、清除和保留的bit map

/**

* The bit value to set or inject {@link #DISTINCT}.

*/

static final int IS_DISTINCT = DISTINCT.set;

/**

* The bit value to clear {@link #DISTINCT}.

*/

static final int NOT_DISTINCT = DISTINCT.clear;

/**

* The bit value to set or inject {@link #SORTED}.

*/

static final int IS_SORTED = SORTED.set;

/**

* The bit value to clear {@link #SORTED}.

*/

static final int NOT_SORTED = SORTED.clear;

/**

* The bit value to set or inject {@link #ORDERED}.

*/

static final int IS_ORDERED = ORDERED.set;

/**

* The bit value to clear {@link #ORDERED}.

*/

static final int NOT_ORDERED = ORDERED.clear;

/**

* The bit value to set {@link #SIZED}.

*/

static final int IS_SIZED = SIZED.set;

/**

* The bit value to clear {@link #SIZED}.

*/

static final int NOT_SIZED = SIZED.clear;

/**

* The bit value to inject {@link #SHORT_CIRCUIT}.

*/

static final int IS_SHORT_CIRCUIT = SHORT_CIRCUIT.set;

}

|

And because StreamOpFlag is an enumeration, an enumeration member is a separate flag, and a flag will act on multiple Stream entity types, so one of its members describes a column of the above entity-flag mapping relationship (viewed vertically):

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

|

// 纵向看

DISTINCT Flag:

maskTable: {

SPLITERATOR: 0000 0001,

STREAM: 0000 0001,

OP: 0000 0011,

TERMINAL_OP: 0000 0000,

UPSTREAM_TERMINAL_OP: 0000 0000

}

position(input): 0

bitPosition: 0

set: 1 => 0000 0000 0000 0000 0000 0000 0000 0001

clear: 2 => 0000 0000 0000 0000 0000 0000 0000 0010

preserve: 3 => 0000 0000 0000 0000 0000 0000 0000 0011

SORTED Flag:

maskTable: {

SPLITERATOR: 0000 0001,

STREAM: 0000 0001,

OP: 0000 0011,

TERMINAL_OP: 0000 0000,

UPSTREAM_TERMINAL_OP: 0000 0000

}

position(input): 1

bitPosition: 2

set: 4 => 0000 0000 0000 0000 0000 0000 0000 0100

clear: 8 => 0000 0000 0000 0000 0000 0000 0000 1000

preserve: 12 => 0000 0000 0000 0000 0000 0000 0000 1100

ORDERED Flag:

maskTable: {

SPLITERATOR: 0000 0001,

STREAM: 0000 0001,

OP: 0000 0011,

TERMINAL_OP: 0000 0010,

UPSTREAM_TERMINAL_OP: 0000 0010

}

position(input): 2

bitPosition: 4

set: 16 => 0000 0000 0000 0000 0000 0000 0001 0000

clear: 32 => 0000 0000 0000 0000 0000 0000 0010 0000

preserve: 48 => 0000 0000 0000 0000 0000 0000 0011 0000

SIZED Flag:

maskTable: {

SPLITERATOR: 0000 0001,

STREAM: 0000 0001,

OP: 0000 0010,

TERMINAL_OP: 0000 0000,

UPSTREAM_TERMINAL_OP: 0000 0000

}

position(input): 3

bitPosition: 6

set: 64 => 0000 0000 0000 0000 0000 0000 0100 0000

clear: 128 => 0000 0000 0000 0000 0000 0000 1000 0000

preserve: 192 => 0000 0000 0000 0000 0000 0000 1100 0000

SHORT_CIRCUIT Flag:

maskTable: {

SPLITERATOR: 0000 0000,

STREAM: 0000 0000,

OP: 0000 0001,

TERMINAL_OP: 0000 0001,

UPSTREAM_TERMINAL_OP: 0000 0000

}

position(input): 12

bitPosition: 24

set: 16777216 => 0000 0001 0000 0000 0000 0000 0000 0000

clear: 33554432 => 0000 0010 0000 0000 0000 0000 0000 0000

preserve: 50331648 => 0000 0011 0000 0000 0000 0000 0000 0000

|

Then the by-and (&) and by-or (|) operations are used. Suppose A = 0001, B = 0010, C = 1000, then.

- A|B = A | B = 0001 | 0010 = 0011 (by bit or, 1|0=1, 0|1=1,0|0 =0,1|1=1)

- A&B = A & B = 0001 | 0010 = 0000 (by bit with, 1|0=0, 0|1=0,0|0 =0,1|1=1)

- MASK = A | B | C = 0001 | 0010 | 1000 = 1011

- Then the condition to determine whether A|B contains A is: A == (A|B & A)

- Then the condition to determine whether MASK contains A is: A == MASK & A

The enumeration in StreamOpFlag is applied here for analysis.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

static int DISTINCT_SET = 0b0001;

static int SORTED_CLEAR = 0b1000;

public static void main(String[] args) throws Exception {

// 支持DISTINCT标志和不支持SORTED标志

int flags = DISTINCT_SET | SORTED_CLEAR;

System.out.println(Integer.toBinaryString(flags));

System.out.printf("支持DISTINCT标志:%s\n", DISTINCT_SET == (DISTINCT_SET & flags));

System.out.printf("不支持SORTED标志:%s\n", SORTED_CLEAR == (SORTED_CLEAR & flags));

}

// 控制台输出

1001

支持DISTINCT标志:true

不支持SORTED标志:true

|

Since StreamOpFlag’s modifier is the default, it cannot be used directly, and its code can be copied out to modify the package name to verify the functionality inside:

1

2

3

4

5

6

7

8

9

10

|

public static void main(String[] args) {

int flags = StreamOpFlag.DISTINCT.set | StreamOpFlag.SORTED.clear;

System.out.println(StreamOpFlag.DISTINCT.set == (StreamOpFlag.DISTINCT.set & flags));

System.out.println(StreamOpFlag.SORTED.clear == (StreamOpFlag.SORTED.clear & flags));

}

// 输出

true

true

|

The following methods are defined based on these arithmetic properties.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

|

enum StreamOpFlag {

// 暂时忽略其他代码

// 返回当前StreamOpFlag的set/inject的bit map

int set() {

return set;

}

// 返回当前StreamOpFlag的清除的bit map

int clear() {

return clear;

}

// 这里判断当前StreamOpFlag类型->标记映射中Stream类型的标记,如果大于0说明不是初始化状态,那么当前StreamOpFlag就是Stream相关的标志

boolean isStreamFlag() {

return maskTable.get(Type.STREAM) > 0;

}

// 这里就用到按位与判断输入的flags中是否设置当前StreamOpFlag(StreamOpFlag.set)

boolean isKnown(int flags) {

return (flags & preserve) == set;

}

// 这里就用到按位与判断输入的flags中是否清除当前StreamOpFlag(StreamOpFlag.clear)

boolean isCleared(int flags) {

return (flags & preserve) == clear;

}

// 这里就用到按位与判断输入的flags中是否保留当前StreamOpFlag(StreamOpFlag.clear)

boolean isPreserved(int flags) {

return (flags & preserve) == preserve;

}

// 判断当前的Stream实体类型是否可以设置本标志,要求Stream实体类型的标志位为set或者preserve,按位与要大于0

boolean canSet(Type t) {

return (maskTable.get(t) & SET_BITS) > 0;

}

// 暂时忽略其他代码

}

|

Here is a special operation, the bit operation is used (flags & preserve), the reason is: the same Stream entity type in the same flag can only exist one of set/inject, clear and preserve, that is, the same flags can not exist both StreamOpFlag. SORTED.set and StreamOpFlag.SORTED.clear, which are semantically contradictory, while the size (2 bits) and position of set/inject, clear and preserve in the bit map are already fixed, and preserve is designed to be 0b11 exactly 2 bits inverse, so it can be specialised to (This specialization also makes the judgment more rigorous).

1

2

3

|

(flags & set) == set => (flags & preserve) == set

(flags & clear) == clear => (flags & preserve) == clear

(flags & preserve) == preserve => (flags & preserve) == preserve

|

Analyze so much, in general, is to want to pass a 32-bit integer, every 2 bits indicate 3 kinds of state, then a complete Flags (Flags collection) can represent a total of 16 kinds of flags (position = [0,15], you can check the API notes, [4,11] and [13,15] position is not required to achieve or reserved, belong to the gap). Then we analyze the example of MaskMask calculation process

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

|

// 横向看(位移动运算符优先级高于与或,例如<<的优先级比|高)

SPLITERATOR_CHARACTERISTICS_MASK:

mask(init) = 0

mask(DISTINCT,SPLITERATOR[DISTINCT]=01,bitPosition=0) = 0000 0000 | 0000 0001 << 0 = 0000 0000 | 0000 0001 = 0000 0001

mask(SORTED,SPLITERATOR[SORTED]=01,bitPosition=2) = 0000 0001 | 0000 0001 << 2 = 0000 0001 | 0000 0100 = 0000 0101

mask(ORDERED,SPLITERATOR[ORDERED]=01,bitPosition=4) = 0000 0101 | 0000 0001 << 4 = 0000 0101 | 0001 0000 = 0001 0101

mask(SIZED,SPLITERATOR[SIZED]=01,bitPosition=6) = 0001 0101 | 0000 0001 << 6 = 0001 0101 | 0100 0000 = 0101 0101

mask(SHORT_CIRCUIT,SPLITERATOR[SHORT_CIRCUIT]=00,bitPosition=24) = 0101 0101 | 0000 0000 << 24 = 0101 0101 | 0000 0000 = 0101 0101

mask(final) = 0000 0000 0000 0000 0000 0000 0101 0101(二进制)、85(十进制)

STREAM_MASK:

mask(init) = 0

mask(DISTINCT,SPLITERATOR[DISTINCT]=01,bitPosition=0) = 0000 0000 | 0000 0001 << 0 = 0000 0000 | 0000 0001 = 0000 0001

mask(SORTED,SPLITERATOR[SORTED]=01,bitPosition=2) = 0000 0001 | 0000 0001 << 2 = 0000 0001 | 0000 0100 = 0000 0101

mask(ORDERED,SPLITERATOR[ORDERED]=01,bitPosition=4) = 0000 0101 | 0000 0001 << 4 = 0000 0101 | 0001 0000 = 0001 0101

mask(SIZED,SPLITERATOR[SIZED]=01,bitPosition=6) = 0001 0101 | 0000 0001 << 6 = 0001 0101 | 0100 0000 = 0101 0101

mask(SHORT_CIRCUIT,SPLITERATOR[SHORT_CIRCUIT]=00,bitPosition=24) = 0101 0101 | 0000 0000 << 24 = 0101 0101 | 0000 0000 = 0101 0101

mask(final) = 0000 0000 0000 0000 0000 0000 0101 0101(二进制)、85(十进制)

OP_MASK:

mask(init) = 0

mask(DISTINCT,SPLITERATOR[DISTINCT]=11,bitPosition=0) = 0000 0000 | 0000 0011 << 0 = 0000 0000 | 0000 0011 = 0000 0011

mask(SORTED,SPLITERATOR[SORTED]=11,bitPosition=2) = 0000 0011 | 0000 0011 << 2 = 0000 0011 | 0000 1100 = 0000 1111

mask(ORDERED,SPLITERATOR[ORDERED]=11,bitPosition=4) = 0000 1111 | 0000 0011 << 4 = 0000 1111 | 0011 0000 = 0011 1111

mask(SIZED,SPLITERATOR[SIZED]=10,bitPosition=6) = 0011 1111 | 0000 0010 << 6 = 0011 1111 | 1000 0000 = 1011 1111

mask(SHORT_CIRCUIT,SPLITERATOR[SHORT_CIRCUIT]=01,bitPosition=24) = 1011 1111 | 0000 0001 << 24 = 1011 1111 | 0100 0000 0000 0000 0000 0000 0000 = 0100 0000 0000 0000 0000 1011 1111

mask(final) = 0000 0000 1000 0000 0000 0000 1011 1111(二进制)、16777407(十进制)

TERMINAL_OP_MASK:

mask(init) = 0

mask(DISTINCT,SPLITERATOR[DISTINCT]=00,bitPosition=0) = 0000 0000 | 0000 0000 << 0 = 0000 0000 | 0000 0000 = 0000 0000

mask(SORTED,SPLITERATOR[SORTED]=00,bitPosition=2) = 0000 0000 | 0000 0000 << 2 = 0000 0000 | 0000 0000 = 0000 0000

mask(ORDERED,SPLITERATOR[ORDERED]=10,bitPosition=4) = 0000 0000 | 0000 0010 << 4 = 0000 0000 | 0010 0000 = 0010 0000

mask(SIZED,SPLITERATOR[SIZED]=00,bitPosition=6) = 0010 0000 | 0000 0000 << 6 = 0010 0000 | 0000 0000 = 0010 0000

mask(SHORT_CIRCUIT,SPLITERATOR[SHORT_CIRCUIT]=01,bitPosition=24) = 0010 0000 | 0000 0001 << 24 = 0010 0000 | 0001 0000 0000 0000 0000 0000 0000 = 0001 0000 0000 0000 0000 0010 0000

mask(final) = 0000 0001 0000 0000 0000 0000 0010 0000(二进制)、16777248(十进制)

UPSTREAM_TERMINAL_OP_MASK:

mask(init) = 0

mask(DISTINCT,SPLITERATOR[DISTINCT]=00,bitPosition=0) = 0000 0000 | 0000 0000 << 0 = 0000 0000 | 0000 0000 = 0000 0000

mask(SORTED,SPLITERATOR[SORTED]=00,bitPosition=2) = 0000 0000 | 0000 0000 << 2 = 0000 0000 | 0000 0000 = 0000 0000

mask(ORDERED,SPLITERATOR[ORDERED]=10,bitPosition=4) = 0000 0000 | 0000 0010 << 4 = 0000 0000 | 0010 0000 = 0010 0000

mask(SIZED,SPLITERATOR[SIZED]=00,bitPosition=6) = 0010 0000 | 0000 0000 << 6 = 0010 0000 | 0000 0000 = 0010 0000

mask(SHORT_CIRCUIT,SPLITERATOR[SHORT_CIRCUIT]=00,bitPosition=24) = 0010 0000 | 0000 0000 << 24 = 0010 0000 | 0000 0000 = 0010 0000

mask(final) = 0000 0000 0000 0000 0000 0000 0010 0000(二进制)、32(十进制)

|

The related methods and properties are as follows.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

|

enum StreamOpFlag {

// SPLITERATOR类型的标志bit map

static final int SPLITERATOR_CHARACTERISTICS_MASK = createMask(Type.SPLITERATOR);

// STREAM类型的标志bit map

static final int STREAM_MASK = createMask(Type.STREAM);

// OP类型的标志bit map

static final int OP_MASK = createMask(Type.OP);

// TERMINAL_OP类型的标志bit map

static final int TERMINAL_OP_MASK = createMask(Type.TERMINAL_OP);

// UPSTREAM_TERMINAL_OP类型的标志bit map

static final int UPSTREAM_TERMINAL_OP_MASK = createMask(Type.UPSTREAM_TERMINAL_OP);

// 基于Stream类型,创建对应类型填充所有标志的bit map

private static int createMask(Type t) {

int mask = 0;

for (StreamOpFlag flag : StreamOpFlag.values()) {

mask |= flag.maskTable.get(t) << flag.bitPosition;

}

return mask;

}

// 构造一个标志本身的掩码,就是所有标志都采用保留位表示,目前作为flags == 0时候的初始值

private static final int FLAG_MASK = createFlagMask();

// 构造一个包含全部标志中的preserve位的bit map,按照目前来看是暂时是一个固定值,二进制表示为0011 0000 0000 0000 0000 1111 1111

private static int createFlagMask() {

int mask = 0;

for (StreamOpFlag flag : StreamOpFlag.values()) {

mask |= flag.preserve;

}

return mask;

}

// 构造一个Stream类型包含全部标志中的set位的bit map,这里直接使用了STREAM_MASK,按照目前来看是暂时是一个固定值,二进制表示为0000 0000 0000 0000 0000 0000 0101 0101

private static final int FLAG_MASK_IS = STREAM_MASK;

// 构造一个Stream类型包含全部标志中的clear位的bit map,按照目前来看是暂时是一个固定值,二进制表示为0000 0000 0000 0000 0000 0000 1010 1010

private static final int FLAG_MASK_NOT = STREAM_MASK << 1;

// 初始化操作的标志bit map,目前来看就是Stream的头节点初始化时候需要合并在flags里面的初始化值,照目前来看是暂时是一个固定值,二进制表示为0000 0000 0000 0000 0000 0000 1111 1111

static final int INITIAL_OPS_VALUE = FLAG_MASK_IS | FLAG_MASK_NOT;

}

|

The 5 members of SPLITERATOR_CHARACTERISTICS_MASK (see the Mask calculation example above) are actually the bit map of all StreamOpFlag flags of the corresponding Stream entity type, which is the “horizontal” display of the mapping of the Stream’s type and flags.

The previous analysis has been relatively detailed and the process is very complex, but the more complex application of Mask is still in the later methods. the initialization of Mask is provided for the operation of merging (combine) and transforming (transforming from characteristics in Spliterator to flags) of flags, see the following methods.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

|

enum StreamOpFlag {

// 这个方法完全没有注释,只使用在下面的combineOpFlags()方法中

// 从源码来看

// 入参flags == 0的时候,那么直接返回0011 0000 0000 0000 0000 1111 1111

// 入参flags != 0的时候,那么会把当前flags的所有set/inject、clear和preserve所在位在bit map中全部置为0,然后其他位全部置为1

private static int getMask(int flags) {

return (flags == 0)

? FLAG_MASK

: ~(flags | ((FLAG_MASK_IS & flags) << 1) | ((FLAG_MASK_NOT & flags) >> 1));

}

// 合并新的flags和前一个flags,这里还是用到老套路先和Mask按位与,再进行一次按位或

// 作为Stream的头节点的时候,prevCombOpFlags必须为INITIAL_OPS_VALUE

static int combineOpFlags(int newStreamOrOpFlags, int prevCombOpFlags) {

// 0x01 or 0x10 nibbles are transformed to 0x11

// 0x00 nibbles remain unchanged

// Then all the bits are flipped

// Then the result is logically or'ed with the operation flags.

return (prevCombOpFlags & StreamOpFlag.getMask(newStreamOrOpFlags)) | newStreamOrOpFlags;

}

// 通过合并后的flags,转换出Stream类型的flags

static int toStreamFlags(int combOpFlags) {

// By flipping the nibbles 0x11 become 0x00 and 0x01 become 0x10

// Shift left 1 to restore set flags and mask off anything other than the set flags

return ((~combOpFlags) >> 1) & FLAG_MASK_IS & combOpFlags;

}

// Stream的标志转换为Spliterator的characteristics

static int toCharacteristics(int streamFlags) {

return streamFlags & SPLITERATOR_CHARACTERISTICS_MASK;

}

// Spliterator的characteristics转换为Stream的标志,入参是Spliterator实例

static int fromCharacteristics(Spliterator<?> spliterator) {

int characteristics = spliterator.characteristics();

if ((characteristics & Spliterator.SORTED) != 0 && spliterator.getComparator() != null) {

// Do not propagate the SORTED characteristic if it does not correspond

// to a natural sort order

return characteristics & SPLITERATOR_CHARACTERISTICS_MASK & ~Spliterator.SORTED;

}

else {

return characteristics & SPLITERATOR_CHARACTERISTICS_MASK;

}

}

// Spliterator的characteristics转换为Stream的标志,入参是Spliterator的characteristics

static int fromCharacteristics(int characteristics) {

return characteristics & SPLITERATOR_CHARACTERISTICS_MASK;

}

}

|

The bitwise operations here are complex, and only simple calculations and related functions are shown.

- combineOpFlags(): used to merge the new flags and the previous flags, because the data structure of Stream is a Pipeline, the successor node needs to merge the flags of the predecessor node, for example, the predecessor node flags is ORDERED.set, the current new node (successor node) that joins the Pipeline has new flags is SIZED.set, then the flags of the predecessor node should be merged in the successor node, simply imagine SIZED.set | ORDERED.set, if it is the head node, then the flags when initializing the head node should be merged INITIAL_OPS_VALUE, here is an example.

1

2

3

4

5

6

7

8

9

10

11

12

|

int left = ORDERED.set | DISTINCT.set;

int right = SIZED.clear | SORTED.clear;

System.out.println("left:" + Integer.toBinaryString(left));

System.out.println("right:" + Integer.toBinaryString(right));

System.out.println("right mask:" + Integer.toBinaryString(getMask(right)));

System.out.println("combine:" + Integer.toBinaryString(combineOpFlags(right, left)));

// 输出结果

left:1010001

right:10001000

right mask:11111111111111111111111100110011

combine:10011001

|

- The reason why the characteristics in Spliterator can be converted to flags by a simple bitwise conversion is that the characteristics in Spliterator are designed to match the StreamOpFlag, or to be precise, the bit map’s bit distribution is matched, so it can be done directly with SPLITERATOR_CHARACTERISTICS_MASK by bitwise conversion, see the following example.

1

2

3

4

|

// 这里简单点只展示8 bit

SPLITERATOR_CHARACTERISTICS_MASK: 0101 0101

Spliterator.ORDERED: 0001 0000

StreamOpFlag.ORDERED.set: 0001 0000

|

At this point, the complete implementation of StreamOpFlag has been analyzed, Mask-related methods are not intended to be expanded in detail, the following will begin to analyze the implementation of the “pipeline” structure in Stream, because of habit, the following two terms “flag” and “characteristics” will be mixed.

ReferencePipeline source code analysis

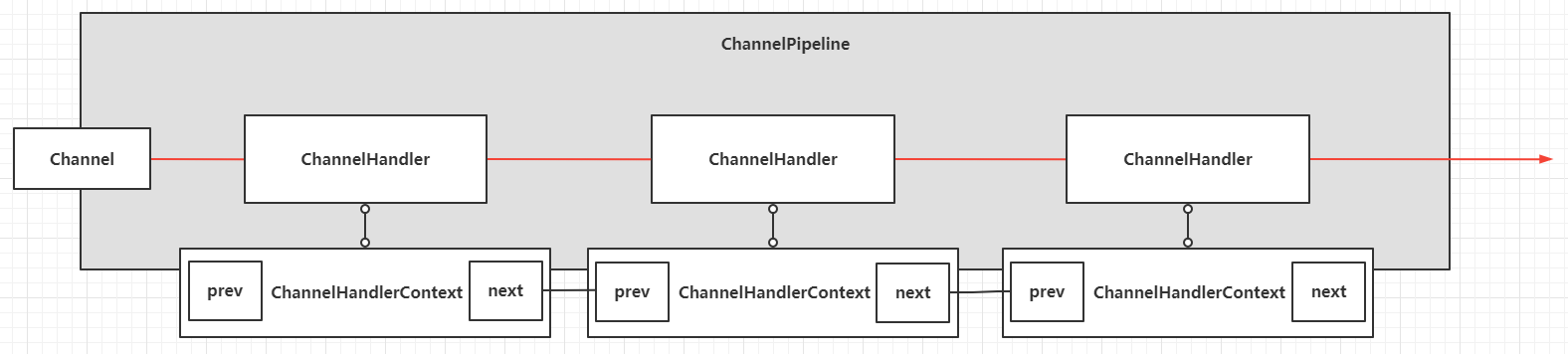

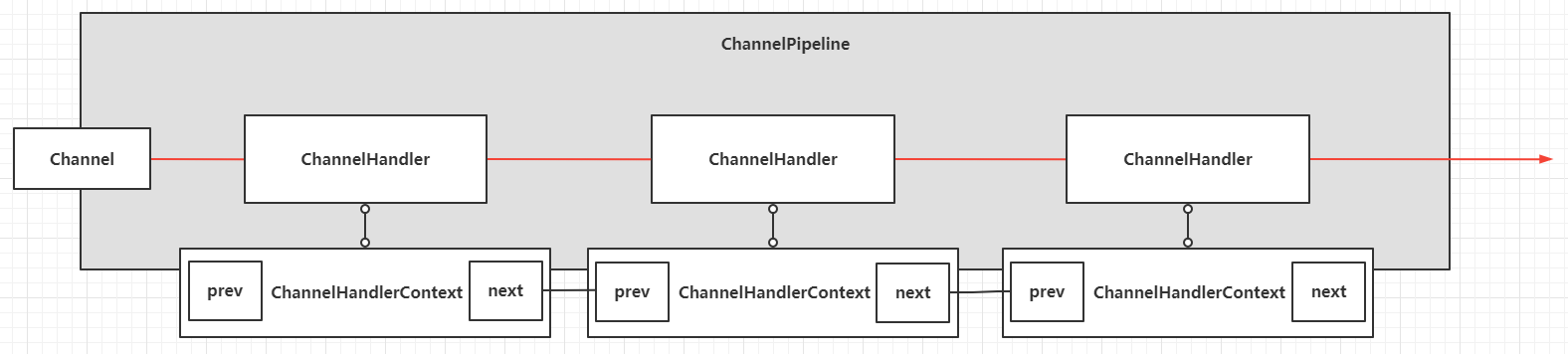

Since Stream has the characteristics of stream, it needs a chain data structure, so that the elements can “flow” from the Source all the way down and pass to each chain node, the common data structure to achieve this scenario is a two-way chain table (considering the need for backtracking, a one-way chain table is not suitable), the more famous implementations are AQS and Netty’s ChannelHandlerContext. For example, the pipeline ChannelPipeline in Netty is designed as follows.

For this bi-directional linked table data structure, the corresponding class in Stream is AbstractPipeline, and the core implementation classes are in ReferencePipeline and ReferencePipeline’s internal classes.

Main interfaces

First, a brief demonstration of AbstractPipeline’s core parent class method definitions, the main parent classes are Stream, BaseStream and PipelineHelper.

- Stream represents a sequence of elements supporting serial and parallel aggregation operations. This top-level interface provides definitions for stream intermediate operations, termination operations and some static factory methods (too many to list here), which are essentially a builder-type interface (for picking up intermediate operations) and can form a chain of multiple intermediate operations and single termination operations, e.g.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

public interface Stream<T> extends BaseStream<T, Stream<T>> {

// 忽略其他代码

// 过滤Op

Stream<T> filter(Predicate<? super T> predicate);

// 映射Op

<R> Stream<R> map(Function<? super T, ? extends R> mapper);

// 终结操作 - 遍历

void forEach(Consumer<? super T> action);

// 忽略其他代码

}

// init

Stream x = buildStream();

// chain: head -> filter(Op) -> map(Op) -> forEach(Terminal Op)

x.filter().map().forEach()

|

- BaseStream: The basic interface of Stream, defining the iterator of streams, equivalent variants of streams (concurrent processing variants, synchronous processing variants and variants that do not support sequential processing of elements), concurrent and synchronous judgments and closing related methods

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

|

// T是元素类型,S是BaseStream<T, S>类型

// 流的基础接口,这里的流指定的支持同步执行和异步执行的聚合操作的元素序列

public interface BaseStream<T, S extends BaseStream<T, S>> extends AutoCloseable {

// 返回一个当前Stream实例中所有元素的迭代器

// 这是一个终结操作

Iterator<T> iterator();

// 返回一个当前Stream实例中所有元素的可拆分迭代器

Spliterator<T> spliterator();

// 当前的Stream实例是否支持并发

boolean isParallel();

// 返回一个等效的同步处理的Stream实例

S sequential();

// 返回一个等效的并发处理的Stream实例

S parallel();

// 返回一个等效的不支持StreamOpFlag.ORDERED特性的Stream实例

// 或者说支持StreamOpFlag.NOT_ORDERED的特性,也就返回的变体Stream在处理元素的时候不需要顺序处理

S unordered();

// 返回一个添加了close处理器的Stream实例,close处理器会在下面的close方法中回调

S onClose(Runnable closeHandler);

// 关闭当前Stream实例,回调关联本Stream的所有close处理器

@Override

void close();

}

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

|

abstract class PipelineHelper<P_OUT> {

// 获取流的流水线的数据源的"形状",其实就是数据源元素的类型

// 主要有四种类型:REFERENCE(除了int、long和double之外的引用类型)、INT_VALUE、LONG_VALUE和DOUBLE_VALUE

abstract StreamShape getSourceShape();

// 获取合并流和流操作的标志,合并的标志包括流的数据源标志、中间操作标志和终结操作标志

// 从实现上看是当前流管道节点合并前面所有节点和自身节点标志的所有标志

abstract int getStreamAndOpFlags();

// 如果当前的流管道节点的合并标志集合支持SIZED,则调用Spliterator.getExactSizeIfKnown()返回数据源中的准确元素数量,否则返回-1

abstract<P_IN> long exactOutputSizeIfKnown(Spliterator<P_IN> spliterator);

// 相当于调用下面的方法组合:copyInto(wrapSink(sink), spliterator)

abstract<P_IN, S extends Sink<P_OUT>> S wrapAndCopyInto(S sink, Spliterator<P_IN> spliterator);

// 发送所有来自Spliterator中的元素到Sink中,如果支持SHORT_CIRCUIT标志,则会调用copyIntoWithCancel

abstract<P_IN> void copyInto(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator);

// 发送所有来自Spliterator中的元素到Sink中,Sink处理完每个元素后会检查Sink#cancellationRequested()方法的状态去判断是否中断推送元素的操作

abstract <P_IN> boolean copyIntoWithCancel(Sink<P_IN> wrappedSink, Spliterator<P_IN> spliterator);

// 创建接收元素类型为P_IN的Sink实例,实现PipelineHelper中描述的所有中间操作,用这个Sink去包装传入的Sink实例(传入的Sink实例的元素类型为PipelineHelper的输出类型P_OUT)

abstract<P_IN> Sink<P_IN> wrapSink(Sink<P_OUT> sink);

// 包装传入的spliterator,从源码来看,在Stream链的头节点调用会直接返回传入的实例,如果在非头节点调用会委托到StreamSpliterators.WrappingSpliterator()方法进行包装

// 这个方法在源码中没有API注释

abstract<P_IN> Spliterator<P_OUT> wrapSpliterator(Spliterator<P_IN> spliterator);

// 构造一个兼容当前Stream元素"形状"的Node.Builder实例

// 从源码来看直接委托到Nodes.builder()方法

abstract Node.Builder<P_OUT> makeNodeBuilder(long exactSizeIfKnown,

IntFunction<P_OUT[]> generator);

// Stream流水线所有阶段(节点)应用于数据源Spliterator,输出的元素作为结果收集起来转化为Node实例

// 此方法应用于toArray()方法的计算,本质上是一个终结操作

abstract<P_IN> Node<P_OUT> evaluate(Spliterator<P_IN> spliterator,

boolean flatten,

IntFunction<P_OUT[]> generator);

}

|

Note one thing (repeat 3 times).

- The synchronous stream is referred to here as a stream of synchronous processing|execution, and the “parallel stream” is referred to as a stream of concurrent processing|execution, because the parallel stream is ambiguous and is actually only concurrent execution, not parallel execution

- The synchronous stream is referred to here as a stream of synchronous processing|execution, and the “parallel stream” is referred to as a stream of concurrent processing|execution, because the parallel stream is ambiguous and is actually only concurrent execution, not parallel execution

- The synchronous stream is referred to here as a stream of synchronous processing|execution, and the “parallel stream” is referred to as a stream of concurrent processing|execution, because the parallel stream is ambiguous and is actually only concurrent execution, not parallel execution

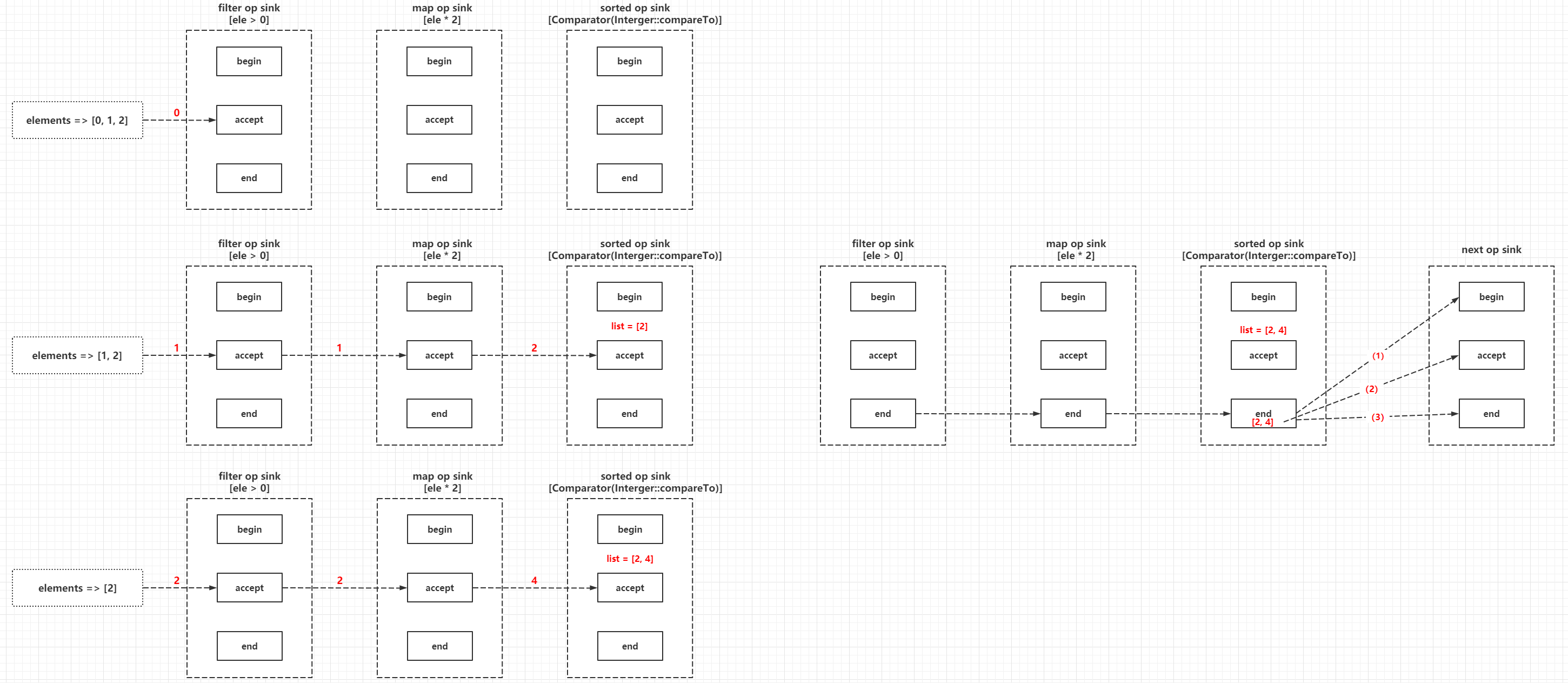

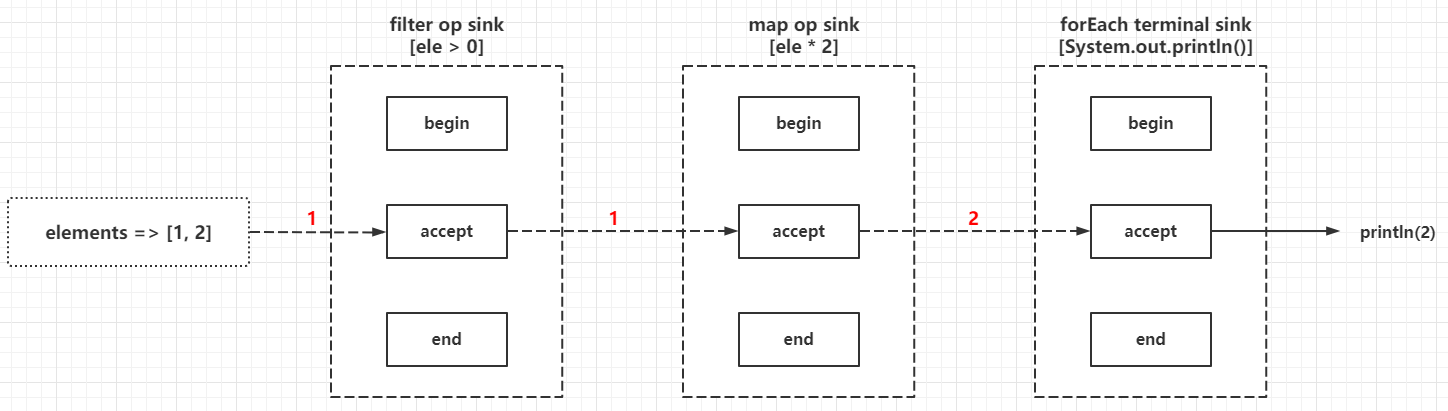

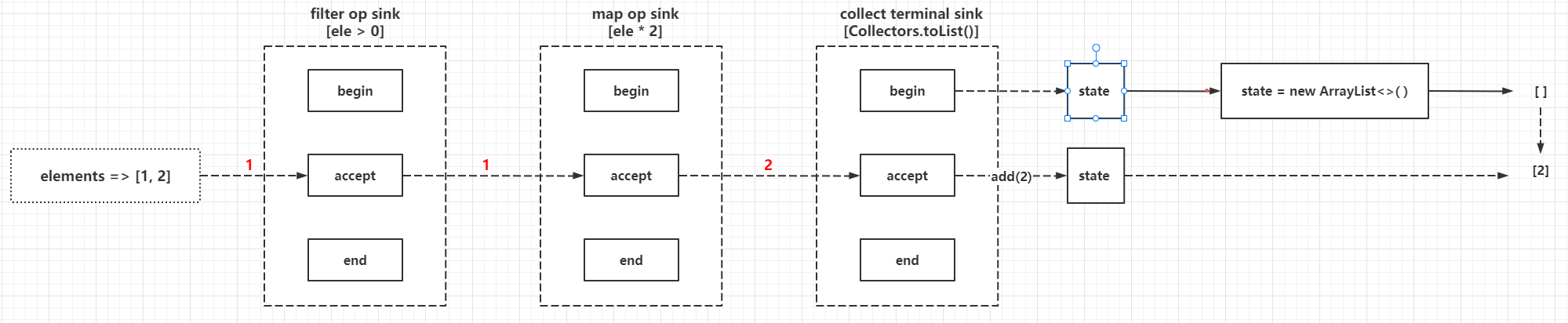

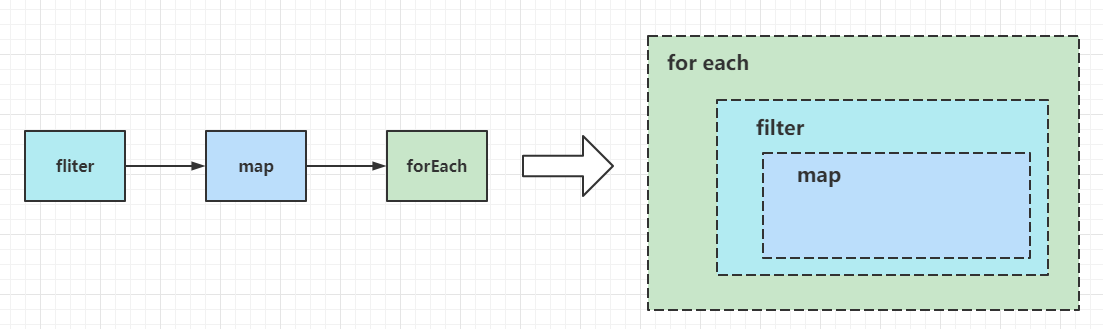

Sink and reference type chains

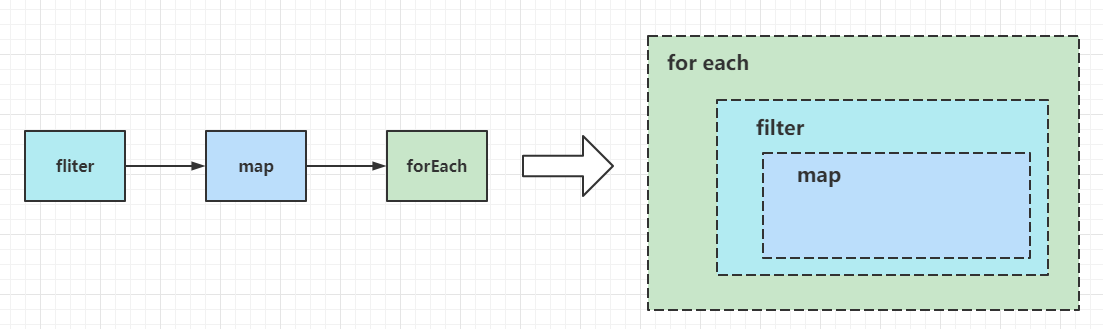

The interface Sink exists in several methods of PipelineHelper, which was not analyzed in the previous section and will be expanded in this subsection. This is similar to a multi-layer wrapper programming model, simplified as follows.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

public class WrapperApp {

interface Wrapper {

void doAction();

}

public static void main(String[] args) {

AtomicInteger counter = new AtomicInteger(0);

Wrapper first = () -> System.out.printf("wrapper [depth => %d] invoke\n", counter.incrementAndGet());

Wrapper second = () -> {

first.doAction();

System.out.printf("wrapper [depth => %d] invoke\n", counter.incrementAndGet());

};

second.doAction();

}

}

// 控制台输出

wrapper [depth => 1] invoke

wrapper [depth => 2] invoke

|

The above example is a bit abrupt, and two different Sink implementations can be achieved without sensory integration, citing another example as follows.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

|

public interface Sink<T> extends Consumer<T> {

default void begin(long size) {

}

default void end() {

}

abstract class ChainedReference<T, OUT> implements Sink<T> {

protected final Sink<OUT> downstream;

public ChainedReference(Sink<OUT> downstream) {

this.downstream = downstream;

}

}

}

@SuppressWarnings({"unchecked", "rawtypes"})

public class ReferenceChain<OUT, R> {

/**

* sink chain

*/

private final List<Supplier<Sink<?>>> sinkBuilders = new ArrayList<>();

/**

* current sink

*/

private final AtomicReference<Sink> sinkReference = new AtomicReference<>();

public ReferenceChain<OUT, R> filter(Predicate<OUT> predicate) {

//filter

sinkBuilders.add(() -> {

Sink<OUT> prevSink = (Sink<OUT>) sinkReference.get();

Sink.ChainedReference<OUT, OUT> currentSink = new Sink.ChainedReference<>(prevSink) {

@Override

public void accept(OUT out) {

if (predicate.test(out)) {

downstream.accept(out);

}

}

};

sinkReference.set(currentSink);

return currentSink;

});

return this;

}

public ReferenceChain<OUT, R> map(Function<OUT, R> function) {

// map

sinkBuilders.add(() -> {

Sink<R> prevSink = (Sink<R>) sinkReference.get();

Sink.ChainedReference<OUT, R> currentSink = new Sink.ChainedReference<>(prevSink) {

@Override

public void accept(OUT in) {

downstream.accept(function.apply(in));

}

};

sinkReference.set(currentSink);

return currentSink;

});

return this;

}

public void forEachPrint(Collection<OUT> collection) {

forEachPrint(collection, false);

}

public void forEachPrint(Collection<OUT> collection, boolean reverse) {

Spliterator<OUT> spliterator = collection.spliterator();

// 这个是类似于terminal op

Sink<OUT> sink = System.out::println;

sinkReference.set(sink);

Sink<OUT> stage = sink;

// 反向包装 -> 正向遍历

if (reverse) {

for (int i = 0; i <= sinkBuilders.size() - 1; i++) {

Supplier<Sink<?>> supplier = sinkBuilders.get(i);

stage = (Sink<OUT>) supplier.get();

}

} else {

// 正向包装 -> 反向遍历

for (int i = sinkBuilders.size() - 1; i >= 0; i--) {

Supplier<Sink<?>> supplier = sinkBuilders.get(i);

stage = (Sink<OUT>) supplier.get();

}

}

Sink<OUT> finalStage = stage;

spliterator.forEachRemaining(finalStage);

}

public static void main(String[] args) {

List<Integer> list = new ArrayList<>();

list.add(1);

list.add(2);

list.add(3);

list.add(12);

ReferenceChain<Integer, Integer> chain = new ReferenceChain<>();

// filter -> map -> for each

chain.filter(item -> item > 10)

.map(item -> item * 2)

.forEachPrint(list);

}

}

// 输出结果

24

|

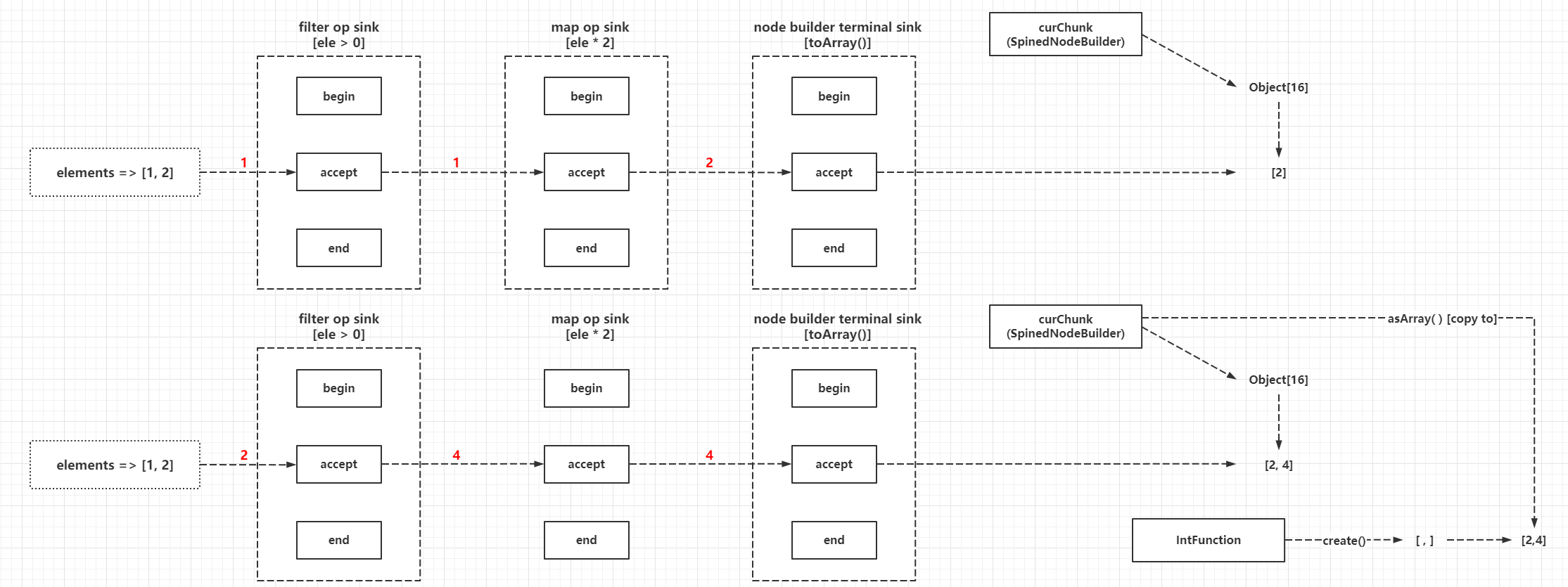

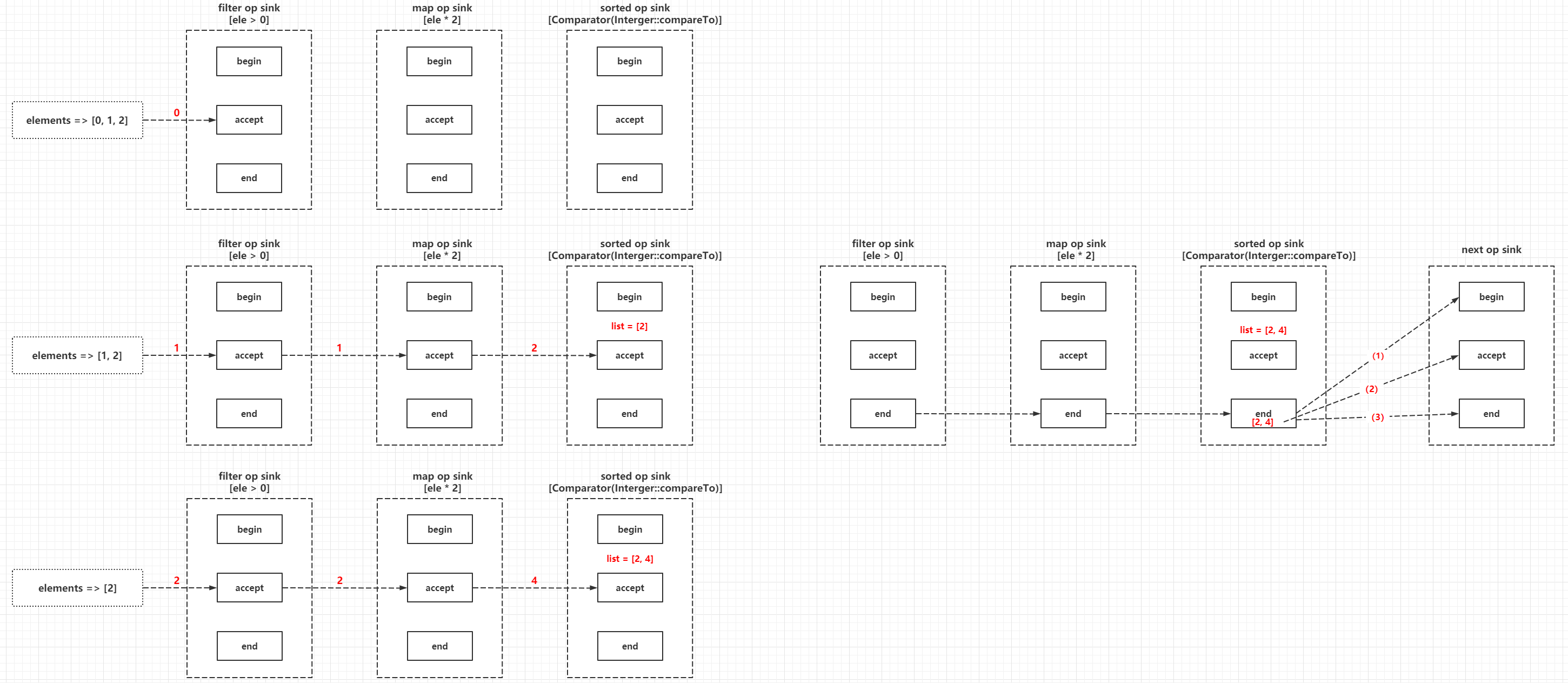

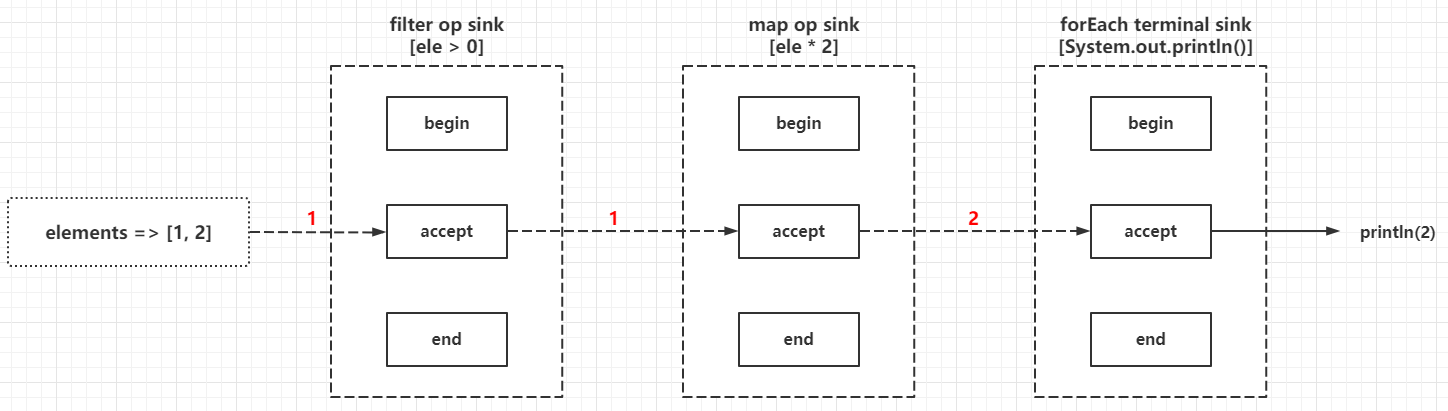

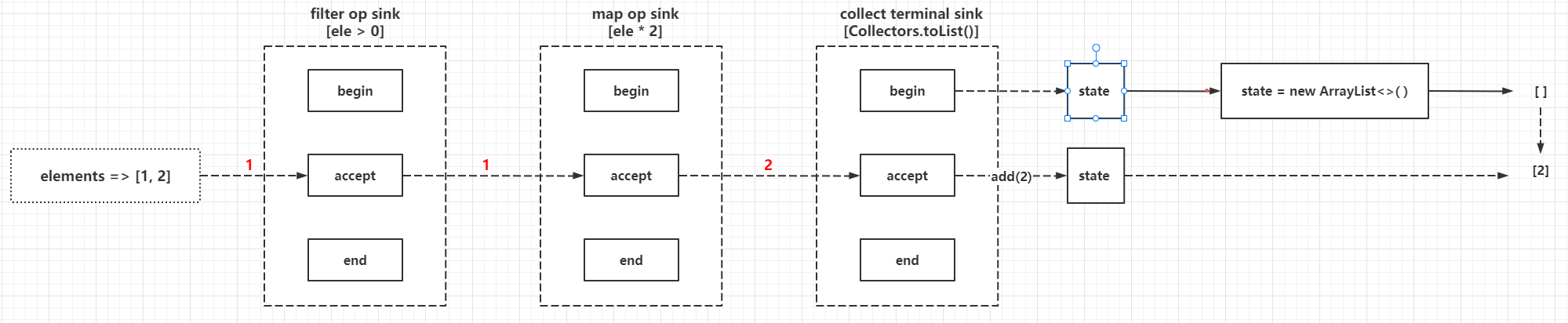

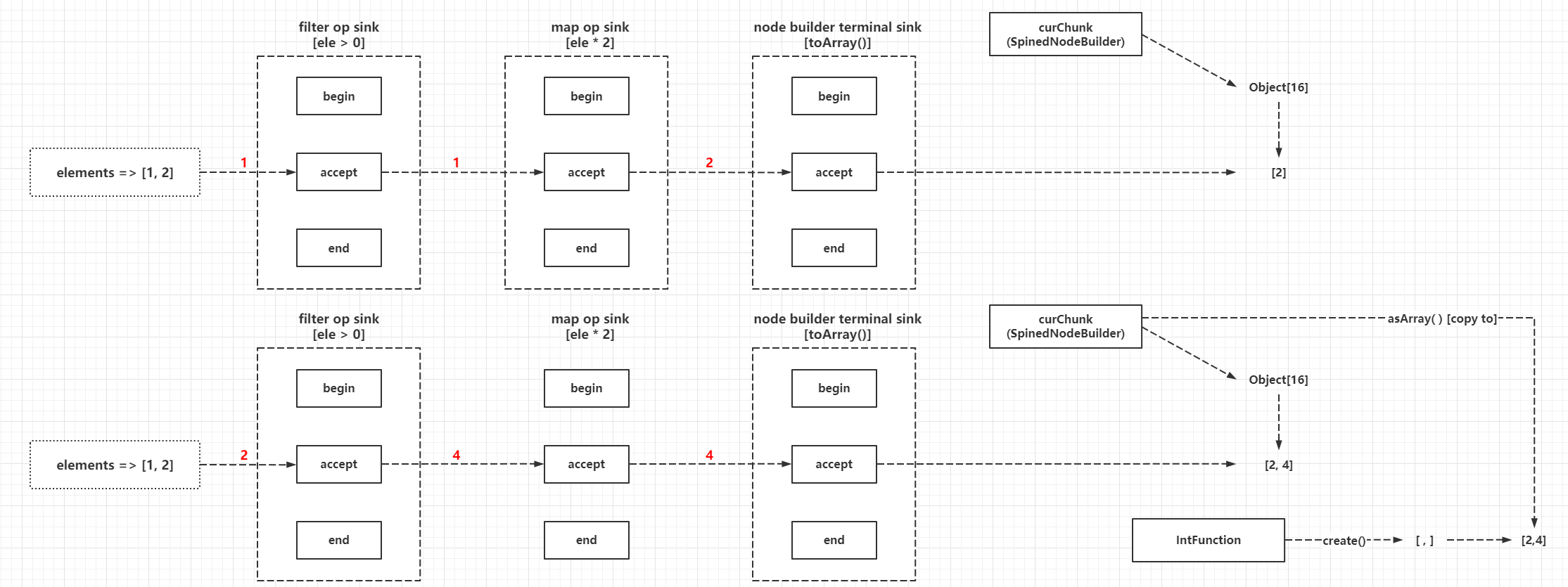

The process of execution is as follows.

The central tenet of the multi-layer wrapper programming model is that

- the vast majority of operations can be converted to the implementation of java.util.function.Consumer, that is, the implementation of accept(T t) method to complete the processing of incoming elements

- The Sink processed first is always the Sink processed later as an input, in its own processing methods to determine and call back the incoming Sink processing method callback, that is, when building a reference chain, you need to build from back to front, the logic of this way of implementation can refer to AbstractPipeline#wrapSink(), for example.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

// 目标顺序:filter -> map

Sink mapSink = new Sink(inputSink){

private Function mapper;

public void accept(E ele) {

inputSink.accept(mapper.apply(ele))

}

}

Sink filterSink = new Sink(mapSink){

private Predicate predicate;

public void accept(E ele) {

if(predicate.test(ele)){

mapSink.accept(ele);

}

}

}

|

- From the above point, we know that, in general, the final termination operation is applied to the first Sink of the reference chain

The above code is not made up by the author and can be found in the source code of java.util.stream.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

|

// 继承自Consumer,主要是继承函数式接口方法void accept(T t)

interface Sink<T> extends Consumer<T> {

// 重置当前Sink的状态(为了接收一个新的数据集),传入的size是推送到downstream的准确数据量,无法评估数据量则传入-1

default void begin(long size) {}

//

default void end() {}

// 返回true的时候表示当前的Sink不会接收数据

default boolean cancellationRequested() {

return false;

}

// 特化方法,接受一个int类型的值

default void accept(int value) {

throw new IllegalStateException("called wrong accept method");

}

// 特化方法,接受一个long类型的值

default void accept(long value) {

throw new IllegalStateException("called wrong accept method");

}

// 特化方法,接受一个double类型的值

default void accept(double value) {

throw new IllegalStateException("called wrong accept method");

}

// 引用类型链,准确来说是Sink链

abstract static class ChainedReference<T, E_OUT> implements Sink<T> {

// 下一个Sink

protected final Sink<? super E_OUT> downstream;

public ChainedReference(Sink<? super E_OUT> downstream) {

this.downstream = Objects.requireNonNull(downstream);

}

@Override

public void begin(long size) {

downstream.begin(size);

}

@Override

public void end() {

downstream.end();

}

@Override

public boolean cancellationRequested() {

return downstream.cancellationRequested();

}

}

// 暂时忽略Int、Long、Double的特化类型场景

}

|

If you have used RxJava or Project-Reactor, Sink is more like Subscriber, multiple Subscribers form a ChainedReference (Sink Chain, which can be understood as a composite Subscriber), while Terminal Op is similar to Publisher, only when the Subscriber subscribes to the Publisher, the data will be processed, here is the application of Reactive programming model.

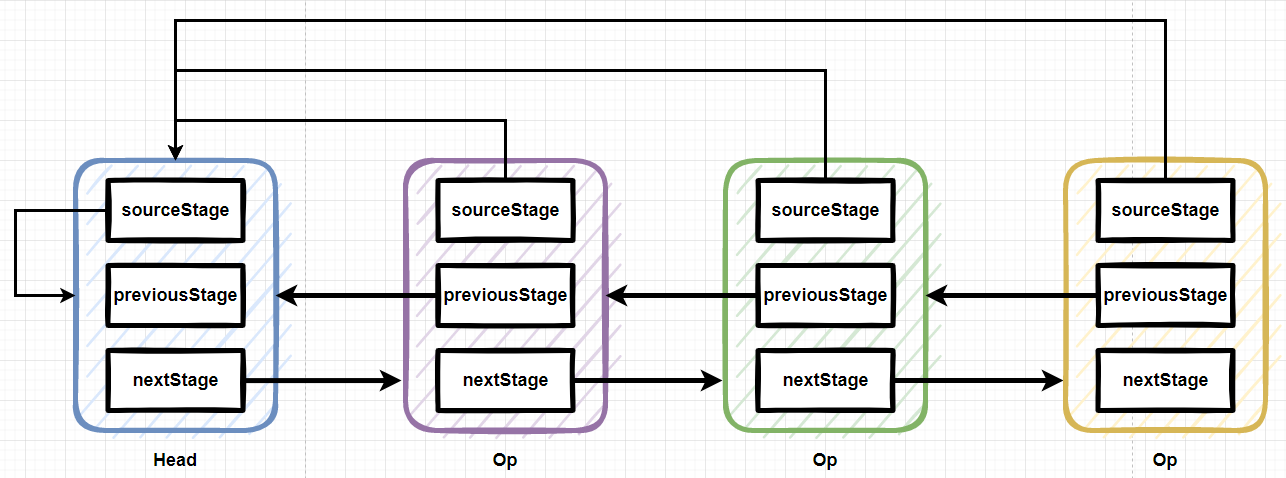

Implementation of AbstractPipeline and ReferencePipeline

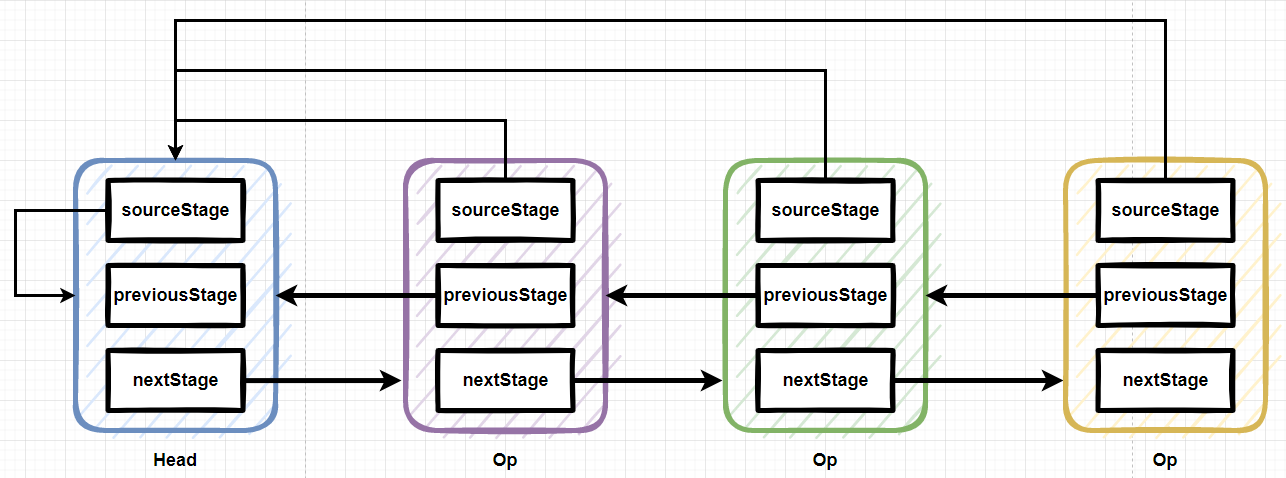

AbstractPipeline and ReferencePipeline are both abstract classes. AbstractPipeline is used to build the data structure of Pipeline and provide some Shape-related abstract methods for ReferencePipeline to implement, while ReferencePipeline is the base type of Pipeline in Stream. From the source code, the head node and operation node of Stream chain (pipeline) structure are subclasses of ReferencePipeline. First look at the member variables and constructors of AbstractPipeline.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

|

abstract class AbstractPipeline<E_IN, E_OUT, S extends BaseStream<E_OUT, S>>

extends PipelineHelper<E_OUT> implements BaseStream<E_OUT, S> {

// 流管道链式结构的头节点(只有当前的AbstractPipeline引用是头节点,此变量才会被赋值,非头节点为NULL)

@SuppressWarnings("rawtypes")

private final AbstractPipeline sourceStage;

// 流管道链式结构的upstream,也就是上一个节点,如果是头节点此引用为NULL

@SuppressWarnings("rawtypes")

private final AbstractPipeline previousStage;

// 合并数据源的标志和操作标志的掩码

protected final int sourceOrOpFlags;

// 流管道链式结构的下一个节点,如果是头节点此引用为NULL

@SuppressWarnings("rawtypes")

private AbstractPipeline nextStage;

// 流的深度

// 串行执行的流中,表示当前流管道实例中中间操作节点的个数(除去头节点和终结操作)

// 并发执行的流中,表示当前流管道实例中中间操作节点和前一个有状态操作节点之间的节点个数

private int depth;

// 合并了所有数据源的标志、操作标志和当前的节点(AbstractPipeline)实例的标志,也就是当前的节点可以基于此属性得知所有支持的标志

private int combinedFlags;

// 数据源的Spliterator实例

private Spliterator<?> sourceSpliterator;

// 数据源的Spliterator实例封装的Supplier实例

private Supplier<? extends Spliterator<?>> sourceSupplier;

// 标记当前的流节点是否被连接或者消费掉,不能重复连接或者重复消费

private boolean linkedOrConsumed;

// 标记当前的流管道链式结构中是否存在有状态的操作节点,这个属性只会在头节点中有效

private boolean sourceAnyStateful;

// 数据源关闭动作,这个属性只会在头节点中有效,由sourceStage持有

private Runnable sourceCloseAction;

// 标记当前流是否并发执行

private boolean parallel;

// 流管道结构头节点的父构造方法,使用数据源的Spliterator实例封装的Supplier实例

AbstractPipeline(Supplier<? extends Spliterator<?>> source,

int sourceFlags, boolean parallel) {

// 头节点的前驱节点置为NULL

this.previousStage = null;

this.sourceSupplier = source;

this.sourceStage = this;

// 合并传入的源标志和流标志的掩码

this.sourceOrOpFlags = sourceFlags & StreamOpFlag.STREAM_MASK;

// The following is an optimization of:

// StreamOpFlag.combineOpFlags(sourceOrOpFlags, StreamOpFlag.INITIAL_OPS_VALUE);

// 初始化合并标志集合为sourceOrOpFlags和所有流操作标志的初始化值

this.combinedFlags = (~(sourceOrOpFlags << 1)) & StreamOpFlag.INITIAL_OPS_VALUE;

// 深度设置为0

this.depth = 0;

this.parallel = parallel;

}

// 流管道结构头节点的父构造方法,使用数据源的Spliterator实例

AbstractPipeline(Spliterator<?> source,

int sourceFlags, boolean parallel) {

// 头节点的前驱节点置为NULL