Advances in communication technology have fueled the rise of video-on-demand and live streaming services, and 4G and 5G networks have made streaming technology increasingly important. Faster networks, however, do not solve the problem of high latency in live streaming. This article will not discuss how the network affects live streaming services; instead, it analyzes a common phenomenon in live streaming: significant, perceptible latency. Setting aside cases where the business deliberately delays a live stream, what factors cause such high latency in live video?

When viewers interact with an anchor through bullet chat, it may take 5 s or longer from the moment a comment appears on screen to the moment the viewer sees the anchor respond. The anchor and the viewers see the bullet chat at almost the same time; what takes longer is for the live streaming system to carry the anchor's audio and video to the viewer's client or browser. The time it takes to deliver data from the anchor side to the viewer side is generally called the end-to-end audio and video latency.

A live stream travels a long path, from audio and video capture and encoding to audio and video decoding and playback. It passes through the anchor side, the streaming server, and the viewer side, each of which performs different functions:

- Anchor side: audio and video capture, audio and video encoding, and stream pushing.

- Streaming server: live stream ingest, audio and video transcoding, and live stream distribution.

- Viewer side: stream pulling, audio and video decoding, and audio and video playback.

Along this lengthy capture and distribution chain, a number of techniques at different stages ensure the quality of the live stream, and these measures, which improve reliability and reduce bandwidth consumption, together cause the high latency of live streaming. This article analyzes why the end-to-end latency of live streaming is high from the following three aspects:

- The encoding format used for audio and video means the client can only start decoding from specific frames.

- The segment size of the network protocol used to transmit audio and video determines the interval at which the client receives data.

- The buffers that the server and the client reserve to ensure user experience and live streaming quality.

Data encoding

Live video always relies on audio and video coding. The mainstream coding formats are Advanced Audio Coding (AAC) and Advanced Video Coding (AVC), the latter often called H.264. This section leaves audio codecs aside and analyzes in detail why we need H.264 and how it affects live streaming latency. Suppose we want to watch a 2-hour, 1080p, 60 FPS movie and each pixel takes 2 bytes of storage; the uncompressed movie would then take up the following amount of space:

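$$2 \times 3600\ \text{s} \times 60\ \text{frames/s} \times 1920 \times 1080\ \text{pixels/frame} \times 2\ \text{bytes/pixel} = 1{,}791{,}590{,}400{,}000\ \text{bytes} \approx 1.79\ \text{TB}$$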

In practice, however, a movie takes up only a few hundred MB or a few GB of disk space, several orders of magnitude less than our calculation. Audio and video coding is the key technology that compresses audio and video data and reduces disk and network bandwidth usage.
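The same arithmetic can be written as a small Python sketch that also estimates the compression ratio. The 2 GB encoded size is a hypothetical stand-in for "a few GB", chosen only for illustration; `raw_video_bytes` is a helper name introduced here.

```python
def raw_video_bytes(seconds, fps, width, height, bytes_per_pixel):
    """Size of uncompressed video: every pixel of every frame stored as-is."""
    return seconds * fps * width * height * bytes_per_pixel


raw = raw_video_bytes(seconds=2 * 3600, fps=60, width=1920, height=1080, bytes_per_pixel=2)
encoded = 2 * 10**9  # hypothetical 2 GB encoded file, for illustration only

print(f"raw size: {raw:,} bytes (~{raw / 1e12:.2f} TB)")
print(f"compression ratio: ~{raw / encoded:.0f}:1")
```

Under these assumptions the encoder shrinks the movie by roughly three orders of magnitude, which is the gap the paragraph above describes.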

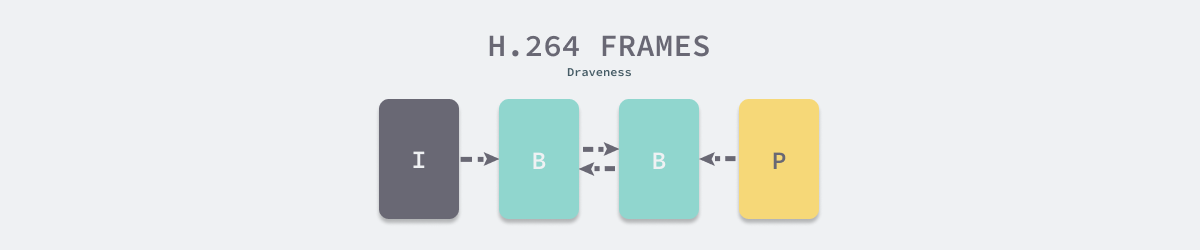

H.264 is the industry standard for video compression. Because video is a sequence of frames and consecutive frames are strongly correlated, H.264 encodes intra-coded pictures (I-frames) as complete images and describes the frames that follow as incremental changes using predicted pictures (P-frames) and bidirectionally predicted pictures (B-frames), which is how it achieves compression.

H.264 compresses the video data into the picture sequence shown above using I-frames, P-frames and B-frames, each of which serves a different purpose.

| Frame type | Role |
|---|---|
| I-frame | A complete, independently coded image, much like a standalone JPG or BMP picture |
| P-frame | Compressed by referencing data from preceding frames |
| B-frame | Compressed by referencing both preceding and following frames |

The compressed video is a series of consecutive frames. When decoding, the client first looks for the first keyframe and then applies the incremental changes carried by the frames that follow it. If the first frame the client receives is a keyframe, it can start playing immediately; if the client joins after a keyframe, it must wait for the next keyframe before it can play the video.
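A minimal sketch of the "wait for the next keyframe" rule, assuming a simplified stream of frame types in decode order. It uses only I- and P-frames to sidestep B-frame reordering, and `first_decodable_index` is a hypothetical helper, not part of any decoder API.

```python
from typing import Optional, Sequence


def first_decodable_index(frames: Sequence[str], join_at: int) -> Optional[int]:
    """Index of the first frame a client joining at `join_at` can decode.

    A P-frame only describes changes relative to earlier frames, so without
    a preceding I-frame it is useless; the client skips ahead to the next
    I-frame. Returns None if no keyframe appears in the given sequence.
    """
    for i in range(join_at, len(frames)):
        if frames[i] == "I":
            return i
    return None


stream = list("IPPPPP" * 2)  # two hypothetical 6-frame GOPs
print(first_decodable_index(stream, 0))  # joins on a keyframe
print(first_decodable_index(stream, 2))  # joins mid-GOP, must wait for frame 6
```

Every frame between the join point and the next I-frame is discarded, which is exactly the startup delay the GOP introduces.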

A group of pictures (GOP) specifies how video frames are organized: the encoded video stream consists of consecutive GOPs, and since each GOP starts with a keyframe, the GOP size affects playback latency. The network bandwidth a video consumes is also closely tied to the GOP. Mobile live streaming generally uses a GOP of 1 to 4 seconds, although a longer GOP can be used to reduce bandwidth.

In short, the GOP determines the keyframe interval and therefore how long the client must wait for the first playable keyframe. This latency, on the order of seconds, still has a significant impact on live video services.
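To put numbers on this, here is a rough sketch of the keyframe wait for the 1 to 4 second GOPs mentioned above. It assumes viewers join at a uniformly random moment and that frames are delivered starting from the join point; `keyframe_wait_seconds` is a hypothetical helper.

```python
def keyframe_wait_seconds(gop_seconds: float) -> tuple[float, float]:
    """Worst-case and expected wait for the next keyframe.

    Assumes the viewer joins at a uniformly random moment within a GOP and
    receives frames starting from that moment.
    """
    worst = gop_seconds         # the viewer just missed a keyframe
    expected = gop_seconds / 2  # mean of a uniform distribution on [0, gop)
    return worst, expected


for gop in (1, 2, 4):
    worst, expected = keyframe_wait_seconds(gop)
    print(f"GOP {gop}s: worst ~{worst:.1f}s, average ~{expected:.1f}s")
```

Doubling the GOP saves bandwidth but also doubles both the worst-case and the average startup wait.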

Data Transfer

The two most common network protocols for live streaming are Real Time Messaging Protocol (RTMP) and HTTP Live Streaming (HLS), which transmit audio and video in different ways: we can think of RTMP as distributing data as a continuous audio and video stream, while HLS distributes audio and video data as files.

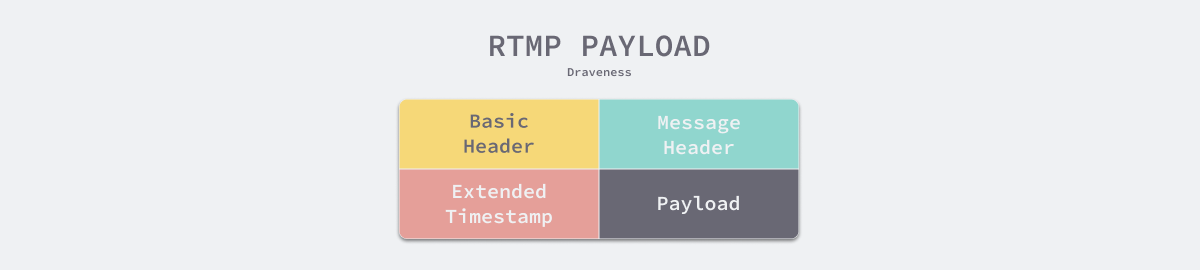

RTMP is a TCP-based application layer protocol that splits audio and video streams into chunks for transmission; by default, audio data is split into 64-byte chunks and video data into 128-byte chunks. When using RTMP, all data is transmitted as chunks.
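A minimal sketch of the chunking step, assuming the 128-byte video chunk size from the paragraph above. It only cuts the message body into pieces and does not build real chunk headers; `split_into_chunks` and the 300-byte message are hypothetical.

```python
def split_into_chunks(payload: bytes, chunk_size: int) -> list[bytes]:
    """Split one RTMP message body into pieces of at most chunk_size bytes.

    Headers are not added here; this only shows how a message is cut up.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [payload[i:i + chunk_size] for i in range(0, len(payload), chunk_size)]


video_message = bytes(300)  # hypothetical 300-byte encoded video message
chunks = split_into_chunks(video_message, 128)
print([len(c) for c in chunks])  # [128, 128, 44]
```

Because chunks are small, the receiver gets data at a fine granularity instead of waiting for a whole file.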

Each RTMP chunk carries a header of 1 to 18 bytes, made up of a Basic Header, a Message Header, and an Extended Timestamp. The Basic Header, which holds the chunk stream ID and the chunk type, is always present; the other two parts can be omitted. Once transmission is under way, a chunk may need only a 1-byte header, so the protocol adds very little overhead.
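The 1-to-18-byte range can be reproduced with a small calculation. This sketch follows the chunk header layout from the RTMP specification as I understand it (a 1 to 3 byte Basic Header depending on the chunk stream ID, an 11/7/3/0 byte Message Header for chunk types 0 to 3, and an optional 4-byte Extended Timestamp); `chunk_header_size` is a hypothetical helper, and chunk stream ID 4 and the 300-byte message are arbitrary examples.

```python
MESSAGE_HEADER_BYTES = {0: 11, 1: 7, 2: 3, 3: 0}  # keyed by chunk type (fmt)


def chunk_header_size(fmt: int, chunk_stream_id: int, extended_timestamp: bool = False) -> int:
    """Header bytes of one RTMP chunk: Basic + Message Header + Extended Timestamp."""
    if not 2 <= chunk_stream_id <= 65599:
        raise ValueError("chunk stream ID must be in 2..65599")
    if chunk_stream_id <= 63:
        basic = 1
    elif chunk_stream_id <= 319:
        basic = 2
    else:
        basic = 3
    extended = 4 if extended_timestamp else 0
    return basic + MESSAGE_HEADER_BYTES[fmt] + extended


print(chunk_header_size(3, 4))                               # smallest header: 1
print(chunk_header_size(0, 65599, extended_timestamp=True))  # largest header: 18

# A hypothetical 300-byte message sent as three 128-byte-max chunks: the first
# chunk carries a full type-0 header, the two continuation chunks use type 3.
headers = chunk_header_size(0, 4) + 2 * chunk_header_size(3, 4)
print(f"{headers} header bytes, {headers / 300:.1%} of the payload")
```

Only the first chunk of a message pays for the full header, which is why the overhead stays in the low single-digit percent range here.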

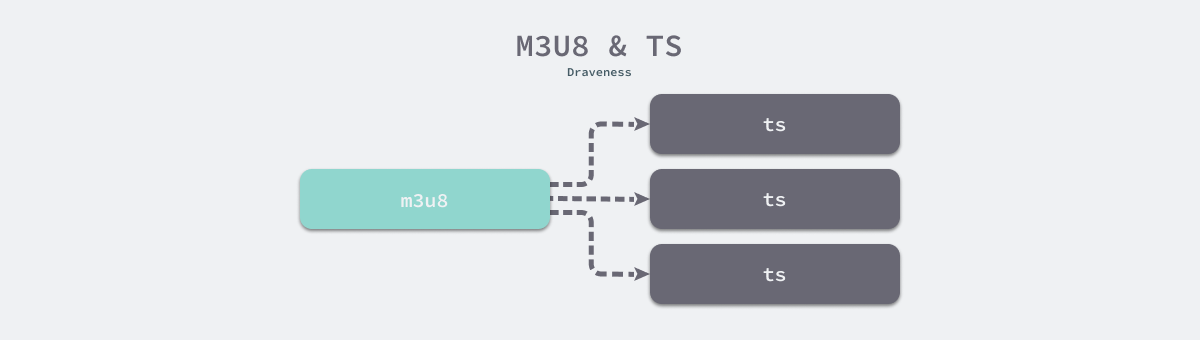

HLS is an HTTP-based adaptive bitrate streaming protocol released by Apple in 2009. When a player is given an HLS pull address, it first downloads the m3u8 playlist file from that address.

m3u8 is a playlist file format for multimedia. An m3u8 file lists a series of video stream segments, and the player plays them in turn according to the file's description. HLS splits the live stream into small files and organizes these segments with m3u8; when playing a live stream, the player plays the resulting ts files one after another as described in the m3u8 playlist.
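For illustration, here is a hypothetical live media playlist and a minimal Python parser that extracts the segment list in playback order. The tags used (`#EXTM3U`, `#EXT-X-TARGETDURATION`, `#EXT-X-MEDIA-SEQUENCE`, `#EXTINF`) come from the HLS specification; the segment names and durations are made up, and the parser ignores every tag except `#EXTINF`.

```python
SAMPLE_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:6.000,
segment120.ts
#EXTINF:6.000,
segment121.ts
#EXTINF:6.000,
segment122.ts
"""


def parse_media_playlist(text: str) -> list[tuple[str, float]]:
    """Return (segment URI, duration in seconds) pairs in playback order.

    Handles only #EXTINF and URI lines; all other tags are skipped.
    """
    segments = []
    duration = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#EXTINF:"):
            # Format: "#EXTINF:<duration>,[<title>]"
            duration = float(line[len("#EXTINF:"):].split(",", 1)[0])
        elif line and not line.startswith("#"):
            if duration is None:
                raise ValueError(f"segment URI without #EXTINF: {line}")
            segments.append((line, duration))
            duration = None
    return segments


for uri, seconds in parse_media_playlist(SAMPLE_PLAYLIST):
    print(uri, seconds)
```

A live playlist has no `#EXT-X-ENDLIST` tag, so the player keeps reloading it to discover newly published segments.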

The size of the HLS ts segments affects end-to-end live latency. Apple's official documentation recommends 6-second ts segments, which means the latency from the anchor to the viewer increases by at least 6 seconds. Shorter segments are not infeasible, but they bring substantial additional overhead and storage pressure.
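One way to see the overhead of shorter segments is to count files and requests. This is a rough sketch under a simplifying assumption: each segment costs one viewer one segment download plus one playlist reload. `hls_requests_per_hour` is a hypothetical helper, and real players may reload the playlist on a different schedule.

```python
def hls_requests_per_hour(segment_seconds: float) -> int:
    """HTTP requests one viewer makes per hour of live HLS playback.

    Simplifying assumption: one segment download plus one playlist reload
    per segment.
    """
    segments = 3600 / segment_seconds
    return round(segments * 2)


for seconds in (6, 2, 1):
    print(f"{seconds}s segments: {3600 // seconds} files/hour, "
          f"~{hls_requests_per_hour(seconds)} requests per viewer per hour")
```

Going from 6-second to 1-second segments multiplies the file count and the per-viewer request rate by six, and the server load scales with the number of viewers on top of that.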

All application layer protocols have to transmit audio and video data in pieces because of the MTU of the underlying physical devices, but the end-to-end latency is mainly determined by how finely each application layer protocol slices the audio and video data. HLS is a file-based distribution protocol with coarse-grained segments and can cause 20 to 30 s of delay in practice.
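A back-of-the-envelope sketch of where 20 to 30 s can come from. It assumes the packager must finish a segment before listing it, and that the player starts about three segments behind the newest one, in line with the RFC 8216 guidance that clients should not start within three target durations of the end of a live playlist. Encoding, network, and decoding time are ignored, and `hls_latency_estimate` is a hypothetical helper.

```python
def hls_latency_estimate(segment_seconds: float, buffered_segments: int = 3) -> float:
    """Rough lower bound on HLS end-to-end latency from segmentation alone.

    - The packager must finish a segment before it can be listed: +1 segment.
    - The player starts `buffered_segments` segments behind the live edge.
    Encoding, transfer, and decoding time are ignored.
    """
    return segment_seconds * (1 + buffered_segments)


for seconds in (6, 2):
    print(f"{seconds}s segments: ~{hls_latency_estimate(seconds):.0f}s latency")
```

With the recommended 6-second segments this gives about 24 s, inside the 20 to 30 s range observed in practice.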

Note that file-based distribution does not necessarily mean high latency; segment size is the key factor. Keeping segments small while limiting the extra overhead is the central problem real-time streaming protocols have to solve.

Multi-end caching

Live video pipelines are long, and we cannot guarantee the stability of every link along the way. To provide smooth data transmission and a good user experience, both the server and the client add buffers to absorb jitter in the live audio and video.

The server generally buffers part of the live data before sending it to the client. When the network suddenly becomes jittery, the server can draw on this buffer to keep the live stream smooth, and it refills the buffer once the network recovers. The client likewise uses a read-ahead buffer to improve playback quality. Shrinking these buffers improves real-time performance, but it seriously degrades the client's user experience when the network is jittery.
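The trade-off can be illustrated with a toy client playout buffer. This is a deliberately simplified model, not how any particular player works: frames are captured at a fixed interval, each has a given network delay, playback starts `buffer_ms` after the first frame arrives, and a late frame causes a stall that shifts the rest of the schedule (the model never catches up). `simulate_playback` and the delay values are hypothetical.

```python
def simulate_playback(delays_ms, frame_interval_ms, buffer_ms):
    """Simulate a client playout buffer.

    delays_ms[i] is the network delay of frame i, which is captured at
    i * frame_interval_ms. Playback starts buffer_ms after the first frame
    arrives; a late frame causes a stall (rebuffering) that shifts the
    rest of the schedule. Returns (stall_count, latency_ms of the last frame).
    """
    arrivals = [i * frame_interval_ms + d for i, d in enumerate(delays_ms)]
    clock = arrivals[0] + buffer_ms  # display time of frame 0
    stalls = 0
    latency = 0
    for i, arrival in enumerate(arrivals):
        if arrival > clock:
            stalls += 1
            clock = arrival  # wait for the late frame
        latency = clock - i * frame_interval_ms  # display time minus capture time
        clock += frame_interval_ms
    return stalls, latency


# Hypothetical delays: frame 3 hits a 300 ms jitter spike.
delays = [100, 100, 100, 400, 100, 100]
print(simulate_playback(delays, 40, buffer_ms=0))    # (1, 400)
print(simulate_playback(delays, 40, buffer_ms=300))  # (0, 400)
```

Without a buffer the player starts at 100 ms latency, stalls once, and still ends up at 400 ms; with a 300 ms buffer it pays the latency up front and plays smoothly. Smaller buffers only lower latency while the network stays stable.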

Summary

High latency in live streaming is a systemic engineering problem. Compared with one-to-one real-time communication such as a WeChat video call, the path between the producer and the consumer of a live stream is extremely long, and many factors affect what the anchor and the viewers perceive. Because of bandwidth costs, historical inertia, and network uncertainty, we can only address each problem with a different technique, and what has to be sacrificed is the user experience:

- Raw audio and video data is far too large: audio and video coding compresses the data with keyframes and incremental changes, and the keyframe interval (GOP) determines the maximum time the client may wait for the first playable frame.

- Browsers have limited protocol support for live streaming: HLS distributes the live stream as HTTP-based segments, which can add 20 to 30 s of delay between the anchor and the viewer.

- Long links bring uncertainty: servers and clients use buffering to reduce the significant impact of network jitter on live streaming quality.

All of the above factors affect the end-to-end latency of a live streaming system. A typical live streaming system using RTMP and HTTP-FLV can achieve latency below 3 s, but the GOP and multi-end caching push this number up, and latency within 10 s is normal. Finally, here are some more open-ended related questions for interested readers to think through:

- What is the maximum additional overhead associated with a file-based streaming protocol?

- What is the compression ratio of different video encoding formats?