Native K8s does not support migrating a given Pod from its current node to another specified node. However, we can implement this feature using the extension mechanisms that K8s provides.

Background

In K8s, kube-scheduler is responsible for assigning Pods that need scheduling to suitable nodes.

It computes the best node for each Pod through various algorithms. When a new Pod needs to be scheduled, the scheduler makes the best decision it can based on its view of the cluster's resources at that time. However, the cluster changes dynamically (for example, service instances scale elastically and nodes come and go), so its state gradually becomes fragmented, which wastes resources.

Resource Fragments

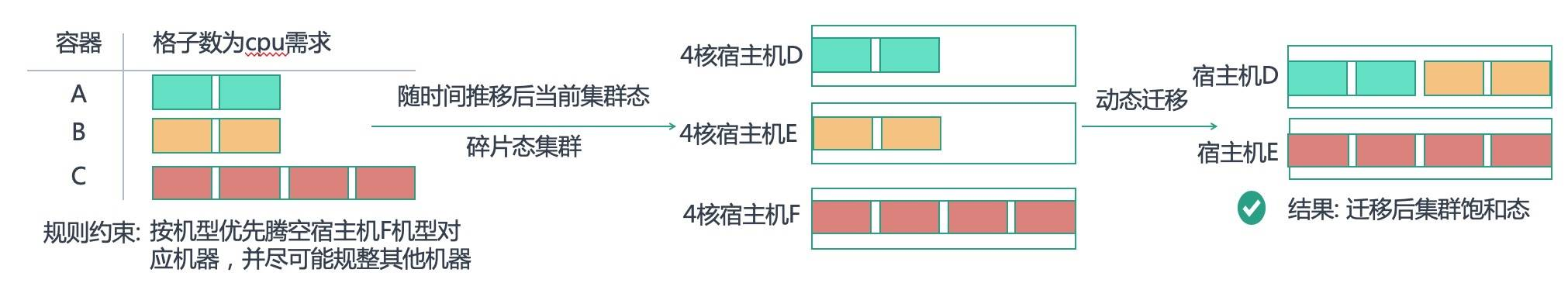

As shown in the figure above, the cluster may become fragmented over time: hosts D and E each run a 2-core container. If we now need to create a 4-core container C, it cannot be scheduled because no host has enough free resources.

If we can migrate container B from host E to host D, we free up host E and save the cost of a whole machine. Alternatively, if we need to create a new 4-core container, it is no longer unschedulable for lack of resources.
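The arithmetic behind this example can be sketched in a few lines of Go. This is only an illustration: the 4-core host capacity and the container name A on host D are assumptions, since the text gives only the 2-core container sizes.

```go
package main

import "fmt"

// Host is a simplified node: a CPU capacity plus the containers placed on it.
type Host struct {
	Name       string
	Capacity   int            // total cores (4 is assumed, not taken from the figure)
	Containers map[string]int // container name -> requested cores
}

// Free returns the cores that are not yet requested on the host.
func (h *Host) Free() int {
	free := h.Capacity
	for _, cores := range h.Containers {
		free -= cores
	}
	return free
}

// Fits reports whether any single host has room for a request of the given size.
func Fits(hosts []*Host, cores int) bool {
	for _, h := range hosts {
		if h.Free() >= cores {
			return true
		}
	}
	return false
}

// Migrate moves a container between hosts if the target has enough free cores.
func Migrate(name string, from, to *Host) bool {
	cores, ok := from.Containers[name]
	if !ok || to.Free() < cores {
		return false
	}
	delete(from.Containers, name)
	to.Containers[name] = cores
	return true
}

func main() {
	d := &Host{Name: "D", Capacity: 4, Containers: map[string]int{"A": 2}}
	e := &Host{Name: "E", Capacity: 4, Containers: map[string]int{"B": 2}}
	hosts := []*Host{d, e}

	fmt.Println("4-core container fits before migration:", Fits(hosts, 4))
	Migrate("B", e, d)
	fmt.Println("4-core container fits after migration:", Fits(hosts, 4))
}
```

Before the migration each host has only 2 free cores, so the 4-core request fits nowhere even though 4 cores are free in total. After moving B, host E is empty and can either take the request or be released.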

K8s Background Knowledge

kube-scheduler

kube-scheduler is the component responsible for Pod scheduling in K8s. It is typically deployed in an active-standby model with leader election, where only the elected leader instance performs scheduling.

The whole scheduling process is divided into two phases: filtering and scoring.

- Filtering: This stage takes all nodes as input and keeps only those that satisfy the Pod's scheduling requirements. If the filtered node set is empty, the Pod stays in the Pending state, and the scheduler keeps retrying until some node meets the conditions.

- Scoring: This stage ranks the nodes that passed filtering by priority and selects the highest-ranked node for the Pod. Once kube-scheduler has determined the optimal node, it notifies the API server of the decision through a binding.
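The two phases can be illustrated with a toy scheduler. This is a minimal sketch, not kube-scheduler's actual code: the Node and Pod types are simplified stand-ins, and the scoring rule (prefer the node with the least CPU left over) is an arbitrary choice made for the example.

```go
package main

import "fmt"

// Node and Pod are simplified stand-ins, not the real Kubernetes API types.
type Node struct {
	Name    string
	FreeCPU int // free cores
}

type Pod struct {
	Name   string
	CPUReq int // requested cores
}

// filter keeps only the nodes that can run the Pod at all.
func filter(pod Pod, nodes []Node) []Node {
	var feasible []Node
	for _, n := range nodes {
		if n.FreeCPU >= pod.CPUReq {
			feasible = append(feasible, n)
		}
	}
	return feasible
}

// score ranks a feasible node; higher is better.
// Preferring the tightest fit is an illustrative rule only.
func score(pod Pod, n Node) int {
	return -(n.FreeCPU - pod.CPUReq)
}

// schedule runs both phases. ok is false when no node passes filtering,
// which corresponds to the Pod staying Pending.
func schedule(pod Pod, nodes []Node) (name string, ok bool) {
	feasible := filter(pod, nodes)
	if len(feasible) == 0 {
		return "", false
	}
	best := feasible[0]
	for _, n := range feasible[1:] {
		if score(pod, n) > score(pod, best) {
			best = n
		}
	}
	return best.Name, true
}

func main() {
	nodes := []Node{{"n1", 1}, {"n2", 4}, {"n3", 2}}

	name, ok := schedule(Pod{Name: "web", CPUReq: 2}, nodes)
	fmt.Println(name, ok)

	name, ok = schedule(Pod{Name: "big", CPUReq: 8}, nodes)
	fmt.Println(name, ok)
}
```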

To make the scheduler more extensible, the community refactored kube-scheduler around a scheduling framework, so that custom logic can be injected at various points of the scheduling process through plugins (which must implement Go interfaces and are compiled into the scheduler binary).

NodeAffinity

Node affinity is conceptually similar to nodeSelector in that it allows you to constrain which nodes a pod can be scheduled to based on the label on the node.

There are currently two types of node affinity, requiredDuringSchedulingIgnoredDuringExecution and preferredDuringSchedulingIgnoredDuringExecution. You can think of them as hard limits and soft limits, meaning that the former specifies the rules that must be met to schedule a pod to a node (like nodeSelector but with a more expressive syntax), and the latter specifies preferences that the scheduler will try to enforce but cannot guarantee.

For example, a node affinity rule can require that pods be placed only on nodes whose label kubernetes.io/e2e-az-name has the value e2e-az1 or e2e-az2 and, among the nodes that meet this requirement, prefer those whose label another-node-label-key has the value another-node-label-value.
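To make the hard and soft semantics concrete, here is a small Go model of such a rule. It is a simplification under stated assumptions: the types are hypothetical stand-ins for the real API objects, only "key In values" requirements are modelled, and the preference weight of 1 is assumed.

```go
package main

import "fmt"

// Requirement is a simplified "key In values" node label requirement.
type Requirement struct {
	Key    string
	Values []string
}

// Matches reports whether the node labels satisfy the requirement.
func (r Requirement) Matches(labels map[string]string) bool {
	v, ok := labels[r.Key]
	if !ok {
		return false
	}
	for _, allowed := range r.Values {
		if v == allowed {
			return true
		}
	}
	return false
}

// PreferredTerm is a soft requirement with a weight.
type PreferredTerm struct {
	Weight      int
	Requirement Requirement
}

// NodeAffinity holds hard requirements (all must match) and soft preferences.
type NodeAffinity struct {
	Required  []Requirement
	Preferred []PreferredTerm
}

// Evaluate returns whether a node is eligible and, if so, its preference score.
func (a NodeAffinity) Evaluate(labels map[string]string) (eligible bool, score int) {
	for _, r := range a.Required {
		if !r.Matches(labels) {
			return false, 0
		}
	}
	for _, p := range a.Preferred {
		if p.Requirement.Matches(labels) {
			score += p.Weight
		}
	}
	return true, score
}

func main() {
	affinity := NodeAffinity{
		Required: []Requirement{
			{Key: "kubernetes.io/e2e-az-name", Values: []string{"e2e-az1", "e2e-az2"}},
		},
		Preferred: []PreferredTerm{
			{Weight: 1, Requirement: Requirement{
				Key: "another-node-label-key", Values: []string{"another-node-label-value"}}},
		},
	}

	node := map[string]string{
		"kubernetes.io/e2e-az-name": "e2e-az1",
		"another-node-label-key":    "another-node-label-value",
	}
	fmt.Println(affinity.Evaluate(node))
}
```

A node that fails a required term is excluded outright, while a node that misses a preferred term stays eligible and simply scores lower.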

admission webhook

An admission webhook is an HTTP callback mechanism for receiving and processing admission requests. Two types of admission webhooks can be defined: validating admission webhooks and mutating admission webhooks. Mutating admission webhooks are called first. They can modify the objects sent to the API server, for example to apply custom defaults.

After all object modifications are complete and the API server has validated the incoming object, validating admission webhooks are called. They enforce custom policies by rejecting requests that violate them.
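The ordering described above can be modelled as a small pipeline. This is a conceptual sketch, not the real webhook protocol: the Pod type, the env label, and both hooks are hypothetical, and the API server's own schema validation between the two phases is omitted.

```go
package main

import (
	"errors"
	"fmt"
)

// Pod is a simplified object under admission, not the real API type.
type Pod struct {
	Name   string
	Labels map[string]string
}

// Mutator may change the object; Validator may only accept or reject it.
type Mutator func(*Pod)
type Validator func(Pod) error

// admit runs every mutator first, then every validator on the mutated object.
// The first validator error rejects the request.
func admit(p Pod, mutators []Mutator, validators []Validator) (Pod, error) {
	for _, m := range mutators {
		m(&p)
	}
	for _, v := range validators {
		if err := v(p); err != nil {
			return Pod{}, err
		}
	}
	return p, nil
}

// setDefaultEnv is a hypothetical mutating hook that applies a default label.
func setDefaultEnv(p *Pod) {
	if p.Labels == nil {
		p.Labels = map[string]string{}
	}
	if _, ok := p.Labels["env"]; !ok {
		p.Labels["env"] = "dev"
	}
}

// requireKnownEnv is a hypothetical validating hook that enforces a policy.
func requireKnownEnv(p Pod) error {
	switch p.Labels["env"] {
	case "dev", "prod":
		return nil
	}
	return errors.New("label env must be dev or prod")
}

func main() {
	mutators := []Mutator{setDefaultEnv}
	validators := []Validator{requireKnownEnv}

	admitted, err := admit(Pod{Name: "a"}, mutators, validators)
	fmt.Println(admitted.Labels, err)

	_, err = admit(Pod{Name: "b", Labels: map[string]string{"env": "test"}}, mutators, validators)
	fmt.Println(err)
}
```

Because validation sees the object after mutation, the Pod without an env label is admitted with the default, while the Pod with an unknown value is rejected.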

Solutions

descheduler

One solution to this type of problem is the descheduler project that the community is currently working on.

The project is hosted under kubernetes-sigs. The descheduler helps rebalance the cluster state according to rules and configured policies. It currently implements the following policies: RemoveDuplicates, LowNodeUtilization, RemovePodsViolatingInterPodAntiAffinity, RemovePodsViolatingNodeAffinity, RemovePodsViolatingNodeTaints, RemovePodsHavingTooManyRestarts, and PodLifeTime. Each policy can be enabled or disabled, and policy-specific parameters can be configured. By default, all policies are enabled.

In addition, a Pod that carries the descheduler.alpha.kubernetes.io/evict annotation is treated as eligible for eviction even if the descheduler's checks would otherwise protect it. With this capability, users can choose which Pods may be evicted according to their own policies.

The descheduler works in a simple way: it finds Pods that match a policy and evicts them through the eviction API. An evicted Pod is removed from its original node, and a higher-level controller (e.g. a Deployment) then creates a replacement Pod, which goes through the scheduler again and may land on a better node.
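The selection half of this loop can be sketched as follows. This is a toy model, not the descheduler's code: the Pod type and the day-based age field are hypothetical, the policy only imitates the idea behind PodLifeTime, and eviction is represented by returning the selected Pod names.

```go
package main

import "fmt"

// Pod is a simplified stand-in carrying only what the toy policy needs.
type Pod struct {
	Name    string
	Node    string
	AgeDays int // hypothetical field; the real API records a start time
}

// Policy decides whether a Pod should be evicted.
type Policy func(Pod) bool

// podLifeTime imitates the idea behind the PodLifeTime policy:
// select Pods that have been running longer than maxDays.
func podLifeTime(maxDays int) Policy {
	return func(p Pod) bool { return p.AgeDays > maxDays }
}

// selectForEviction returns the names of Pods matched by at least one policy.
// Recreating and rescheduling the Pods is left to the controller and the
// scheduler, so the caller has no say in where replacements land.
func selectForEviction(pods []Pod, policies []Policy) []string {
	var names []string
	for _, p := range pods {
		for _, matches := range policies {
			if matches(p) {
				names = append(names, p.Name)
				break
			}
		}
	}
	return names
}

func main() {
	pods := []Pod{
		{Name: "old", Node: "node-1", AgeDays: 10},
		{Name: "new", Node: "node-2", AgeDays: 1},
	}
	fmt.Println(selectForEviction(pods, []Policy{podLifeTime(7)}))
}
```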

The advantage of this solution is that it is compatible with the current K8s architecture and requires no changes to existing code. The disadvantage is that it cannot migrate a Pod to a specific node, because the placement of the replacement Pod is left entirely to the scheduler.

This matters for cluster defragmentation, where we usually generate a series of migration operations from the current cluster layout. An individual operation may not be optimal from the perspective of scheduling that single Pod, but the set of operations as a whole is optimal, so each Pod must land exactly where the plan puts it.

SchedPatch Webhook

A similar feature is on the roadmap of the openkruise project, but it has not yet been implemented.

SchedPatch Webhook implements a set of mutating webhook interfaces that receive Pod creation requests from kube-apiserver. By matching user-defined rules, it injects new scheduling requirements such as affinity, tolerations, or a node selector into the Pod to achieve precise scheduling.

With this approach, we first create a SchedPatch object indicating that the next Pod of a Deployment prefers to be scheduled to a specified node, and then evict the Pod we want to migrate. kube-apiserver forwards the Pod Create request to the SchedPatch webhook, where we modify the Pod’s scheduling requirements so that kube-scheduler will tend to schedule the Pod to the specified node.

CRD

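A simple SchedPatch CRD definition carries, at a minimum, the rules for matching Pods, the scheduling rules to inject (SchedPatchSpec), a MaxCount limit on how many Pods may be patched, and a PatchedPods record of the Pods already patched.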

Webhook Logic

- Receive the Pod Create request from kube-apiserver.

- Get all SchedPatch objects from the Informer Cache and select those that match the Pod being created. Exclude any object whose number of PatchedPods has already reached MaxCount.

- Update PatchedPods.

- Modify the Pod Spec, inject the scheduling rules in the SchedPatchSpec, and return it to kube-apiserver.
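The steps above can be sketched as a pure function, leaving out the HTTP and informer plumbing. Apart from the names SchedPatchSpec, MaxCount, and PatchedPods, everything here is a hypothetical illustration: the field layout, the label selector, the PreferredNode field, and the PreferredNodes slice that stands in for an injected preferred NodeAffinity.

```go
package main

import "fmt"

// Pod is a simplified stand-in for the Pod in the create request.
type Pod struct {
	Name           string
	Labels         map[string]string
	PreferredNodes []string // stands in for an injected preferred NodeAffinity
}

// SchedPatchSpec holds the matching rule and the scheduling rule to inject.
type SchedPatchSpec struct {
	Selector      map[string]string // hypothetical: labels a Pod must carry
	MaxCount      int               // maximum number of Pods to patch
	PreferredNode string            // hypothetical: node to prefer
}

// SchedPatch pairs a spec with the record of Pods already patched.
type SchedPatch struct {
	Name        string
	Spec        SchedPatchSpec
	PatchedPods []string
}

// matches reports whether the labels contain every selector entry.
// An empty selector matches every Pod.
func matches(selector, labels map[string]string) bool {
	for k, want := range selector {
		if got, ok := labels[k]; !ok || got != want {
			return false
		}
	}
	return true
}

// mutate applies every matching SchedPatch that has not reached MaxCount:
// it records the Pod in PatchedPods and injects the scheduling preference.
func mutate(pod *Pod, patches []*SchedPatch) {
	for _, sp := range patches {
		if !matches(sp.Spec.Selector, pod.Labels) {
			continue
		}
		if len(sp.PatchedPods) >= sp.Spec.MaxCount {
			continue
		}
		sp.PatchedPods = append(sp.PatchedPods, pod.Name)
		pod.PreferredNodes = append(pod.PreferredNodes, sp.Spec.PreferredNode)
	}
}

func main() {
	patch := &SchedPatch{Name: "move-test", Spec: SchedPatchSpec{
		Selector:      map[string]string{"service": "test"},
		MaxCount:      1,
		PreferredNode: "node-2",
	}}

	first := &Pod{Name: "test-1", Labels: map[string]string{"service": "test"}}
	second := &Pod{Name: "test-2", Labels: map[string]string{"service": "test"}}
	mutate(first, []*SchedPatch{patch})
	mutate(second, []*SchedPatch{patch})

	fmt.Println(first.PreferredNodes, second.PreferredNodes, patch.PatchedPods)
}
```

With MaxCount set to 1, only the first matching Pod receives the preference. This keeps a one-off migration from affecting every later Pod of the same Deployment.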

Migration Example Steps

Suppose we have a Deployment named test whose Pods carry the label service: test. There is currently a Pod on node-1 and we want to migrate this Pod to node-2.

Create a SchedPatch object that matches subsequently created Pods with the label service: test and injects a NodeAffinity expressing a preference for scheduling them to node-2.

Delete the Pod on node-1 directly via kubectl.

The Deployment Controller then creates a new Pod, and we can observe that this Pod is scheduled to node-2.

Notes

The solution above only outlines the idea behind implementing this feature. Many details still need to be addressed before it can be used in practice.