When developing an HTTP server, we often need to cache API responses. For example, hot content can be cached for one minute. Serving the same content for a short period has no material impact on the business, and it reduces the overall load on the system.

Sometimes we need to put the caching logic inside the server rather than at a gateway such as Nginx, because this lets us clear the cache on demand and use other storage media, such as Redis, as the caching backend.

The caching scenario is simple: fetch from the cache when the data is available; otherwise fetch from the downstream service and put the result into the cache. This logic is generic and can be abstracted out, so the caching logic does not need to intrude into the business code.
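The flow described above can be sketched in a few lines. This is only an illustration under simple assumptions: the `cache` type and `getOrLoad` function are hypothetical names, the cache never expires entries, and `load` stands in for the downstream service.

```go
package main

import (
	"fmt"
	"sync"
)

// cache is a deliberately tiny in-memory cache (no expiry) used only for illustration.
type cache struct {
	mu   sync.Mutex
	data map[string]string
}

// getOrLoad returns the cached value for key, or calls load (standing in for
// a downstream service) on a miss and stores the result before returning it.
func (c *cache) getOrLoad(key string, load func(string) (string, error)) (string, error) {
	c.mu.Lock()
	v, ok := c.data[key]
	c.mu.Unlock()
	if ok {
		return v, nil
	}
	v, err := load(key)
	if err != nil {
		return "", err // errors are not cached
	}
	c.mu.Lock()
	c.data[key] = v
	c.mu.Unlock()
	return v, nil
}

func main() {
	c := &cache{data: make(map[string]string)}
	calls := 0
	load := func(key string) (string, error) {
		calls++
		return "value-for-" + key, nil
	}
	for i := 0; i < 3; i++ {
		v, _ := c.getOrLoad("hot", load)
		fmt.Println(v)
	}
	fmt.Println("downstream calls:", calls)
}
```

The business code only supplies `load`; everything else is generic, which is why the logic fits naturally into a middleware.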

The HTTP framework I usually use is gin, written in Go. gin has an official cache component, github.com/gin-contrib/cache, but this component has some shortcomings in both performance and interface design.

Therefore, I designed a new cache middleware: gin-cache. In stress tests, its performance is significantly better than that of gin-contrib/cache.

gin-contrib/cache problem analysis

gin-contrib/cache is the official cache component provided by gin, but it is unsatisfactory in both interface design and performance, for the following reasons.

Interface design

- gin-contrib/cache provides a wrap handler for external use, rather than the more elegant and generic middleware. Each route's handler has to be passed through a function such as `CachePage`.

- Users can't customize the cache key based on the request. gin-contrib/cache only provides `CachePage`, `CachePageWithoutQuery`, and similar functions, which use the URL as the cache key. The component doesn't support a custom cache key, so it can't meet the requirements of some special scenarios.

Performance

- The component writes to the cache by overriding the `Write` function of the `ResponseWriter`. Each time `Write` is called in gin, it triggers a get and an append operation on the cache. Caching while writing in this way clearly hurts performance.

- The worst part is the implementation of concurrency safety. Because the component has to fetch the existing content and append to it before writing the cache, the process is not atomic. To provide basic concurrency safety, the component adds a mutex to the externally provided `CachePageAtomic` interface so that cache writes do not conflict. This mutex makes the interface perform even worse under high concurrency.

I also made a pull request to gin-contrib/cache for the first performance issue, but the other issues, especially the interface design, made me think that this library might not be the final answer.

I decided that I might as well implement a new library that would meet these needs.

New solution

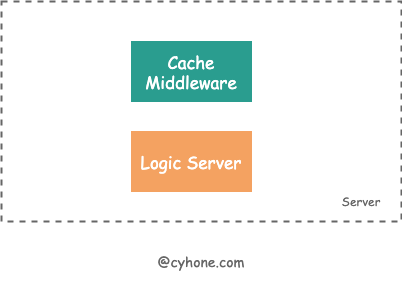

gin-cache was born. Its implementation can be found at github.com/chenyahui/gin-cache.

- It is exposed to users as middleware.

- The user can customize how the cache key is generated according to their needs. For example, the key can be based on the header content or the body content.

Of course, I also provide some shortcuts: `CacheByURI` and `CacheByPath`. The former uses the full URL as the cache key, and the latter ignores the query parameters in the URL, so that most needs can be met.

In terms of performance, unlike gin-contrib/cache, which caches as it writes, gin-cache caches only the final response after the entire handler has finished. The whole process involves a single cache write.

In addition, there are other performance optimizations in gin-cache:

- Using sync.Pool to optimize the creation and release of frequently allocated objects.

- Using singleflight to solve the cache breakdown problem.
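As an illustration of the first optimization, the following sketch reuses `bytes.Buffer` values through `sync.Pool`. Which objects gin-cache actually pools is not stated here, so the buffer and the `render` function are assumptions made for the example.

```go
package main

import (
	"bytes"
	"fmt"
	"sync"
)

// bufPool hands out reusable buffers; New runs only when the pool is empty.
var bufPool = sync.Pool{
	New: func() any { return new(bytes.Buffer) },
}

// render builds a response body in a pooled buffer and returns a copy of it.
func render(name string) []byte {
	buf := bufPool.Get().(*bytes.Buffer)
	buf.Reset()            // a pooled buffer may hold data from a previous use
	defer bufPool.Put(buf) // hand the buffer back for the next request

	buf.WriteString("hello, ")
	buf.WriteString(name)
	// Copy before returning: buf's memory is reused once it is back in the pool.
	out := make([]byte, buf.Len())
	copy(out, buf.Bytes())
	return out
}

func main() {
	fmt.Println(string(render("gin")))
	fmt.Println(string(render("cache")))
}
```

On a hot path that runs once per request, reusing such objects reduces allocations and garbage-collection pressure.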

Cache Breakdown Problem

A common problem in cache design is cache breakdown. Cache breakdown means that when a hot key expires, a large number of requests miss the cache for that key and go directly to the downstream storage or service. Such a burst of requests in a single moment may put great pressure on downstream services.

For this problem, Go has an official singleflight library, golang.org/x/sync/singleflight, which effectively solves the cache breakdown problem. The principle is very simple; interested readers can read the source code on GitHub, so this article will not discuss it.

Benchmark

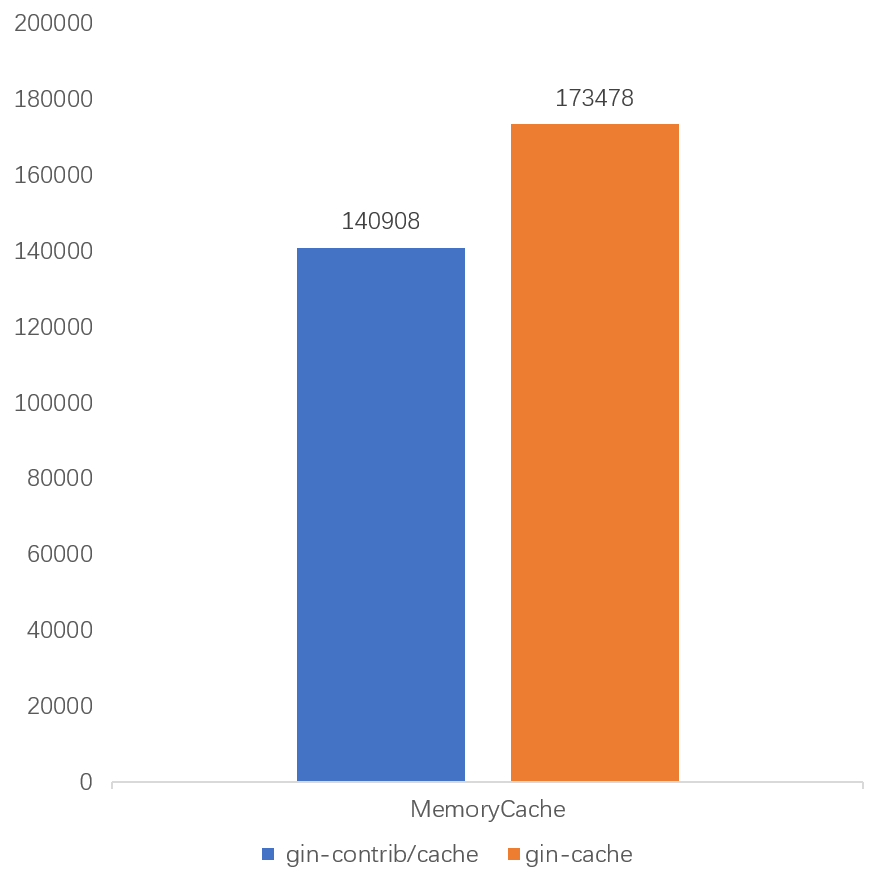

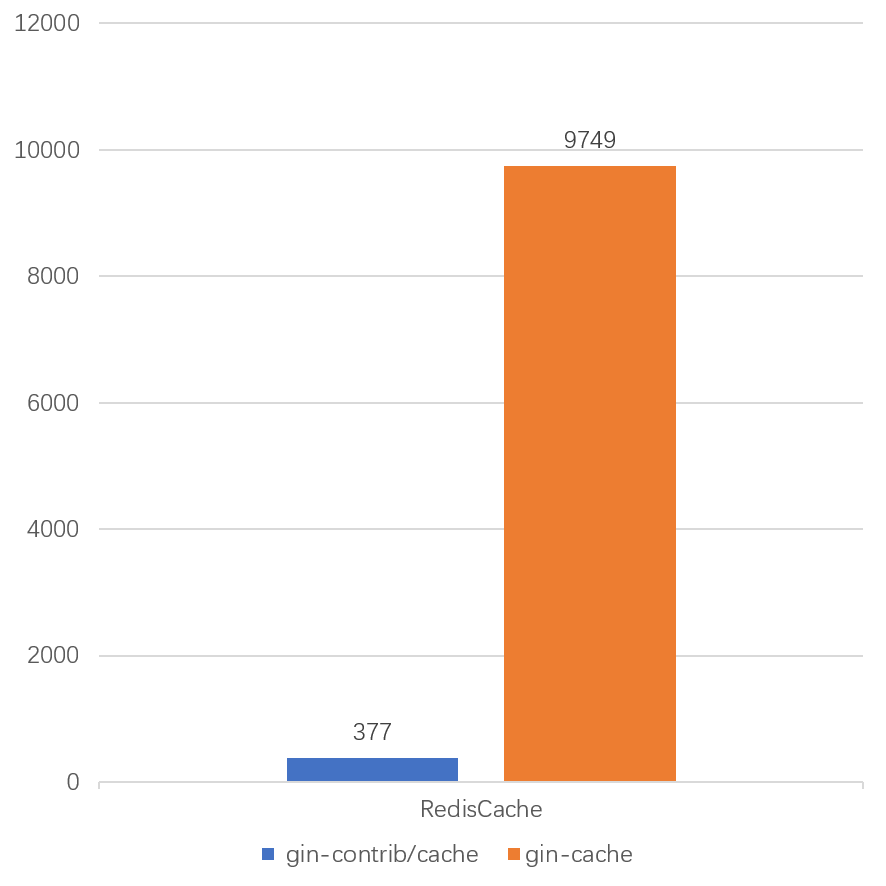

I ran a benchmark stress test on gin-contrib/cache and gin-cache using wrk, on a Linux machine with an 8-core CPU and 16 GB of memory.

The same wrk command was used for both libraries.

We ran the stress test against the MemoryCache and RedisCache storage backends separately. The final results are very impressive, as the charts in this section show.

- In the MemoryCache in-process caching scenario, gin-cache delivers a 23% improvement.

- In the Redis caching backend scenario, gin-cache improves QPS by about 30x. Part of this gain comes from the better-performing Redis client library that gin-cache uses.

Moreover, the design comparison suggests that the advantage of gin-cache becomes more pronounced when the handler takes longer to process a request.