Kaniko is an open-source tool from Google for building Docker images on Kubernetes without privileged access. Its GitHub repository (https://github.com/GoogleContainerTools/kaniko) describes it as follows:

kaniko is a tool to build container images from a Dockerfile, inside a container or Kubernetes cluster.

How it works

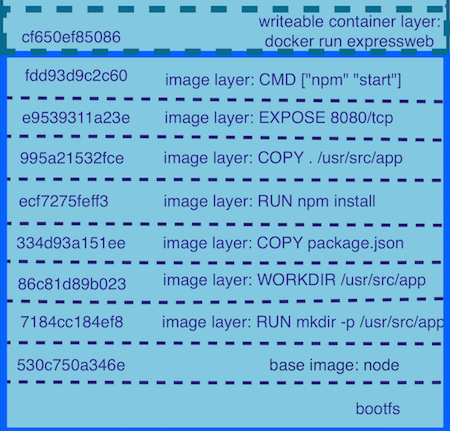

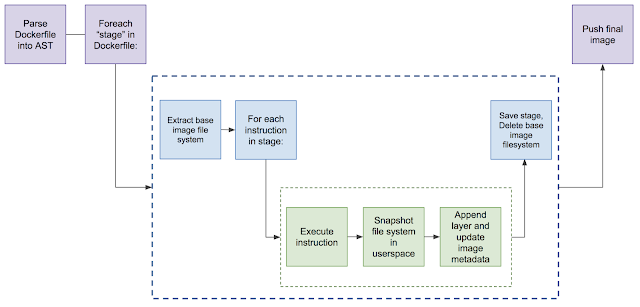

In a traditional Docker build, the Docker daemon runs on the host as a privileged user (root), executes the instructions in the Dockerfile sequentially, and generates the image layer by layer.

Kaniko works similarly. It extracts the base image's file system, then executes each command in order and takes a snapshot of the file system after each one, comparing it with the previous state. If the file system has changed, the changed files are appended as a new layer and the image's metadata is updated.

Once every command in the Dockerfile has been executed, Kaniko pushes the newly built image to the specified registry.
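The snapshot-and-diff idea can be illustrated with a small sketch. This is not Kaniko's actual implementation: it only hashes file contents, and the helper names (`snapshot`, `diff`, `run_steps`, `demo`) are made up for illustration. Each step stands in for one Dockerfile command, and a "layer" is recorded only when the file system changed.

```python
import hashlib
import os
import tempfile


def snapshot(root):
    """Map each file under root (relative path) to a SHA-256 digest of its contents."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            with open(full, "rb") as fh:
                state[os.path.relpath(full, root)] = hashlib.sha256(fh.read()).hexdigest()
    return state


def diff(before, after):
    """Return (changed_or_added, deleted) paths between two snapshots."""
    changed = sorted(p for p, digest in after.items() if before.get(p) != digest)
    deleted = sorted(p for p in before if p not in after)
    return changed, deleted


def run_steps(root, steps):
    """Run each step and record one layer per step that changed the file system."""
    layers = []
    previous = snapshot(root)
    for step in steps:
        step(root)
        current = snapshot(root)
        changed, deleted = diff(previous, current)
        if changed or deleted:  # unchanged file system: no new layer
            layers.append({"changed": changed, "deleted": deleted})
        previous = current
    return layers


def write_file(name, text):
    """Hypothetical step: write text to a file, like a RUN or COPY that touches it."""
    def step(root):
        with open(os.path.join(root, name), "w") as fh:
            fh.write(text)
    return step


def remove_file(name):
    """Hypothetical step: delete a file."""
    def step(root):
        os.remove(os.path.join(root, name))
    return step


def noop(root):
    """Hypothetical step that leaves the file system untouched (think ENV or LABEL)."""


def demo():
    with tempfile.TemporaryDirectory() as root:
        steps = [write_file("app.txt", "v1"), noop, write_file("app.txt", "v2"), remove_file("app.txt")]
        return run_steps(root, steps)


if __name__ == "__main__":
    for number, layer in enumerate(demo(), start=1):
        print(number, layer)
```

Four steps run here but only three layers are recorded, because the no-op step leaves the snapshot identical to the previous one.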

Usage

Kaniko solves the problem of building images inside Kubernetes, but we still have to decide how to supply the build context, how to authenticate to the target registry, and how to distribute the Dockerfile. For simplicity, I put the project code and the Dockerfile under /root on one of the nodes.

Dockerfile:

The first step is authenticating to the target registry. The example in the official documentation adds a kaniko-secret.json and sets the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of that file. If you run a self-hosted registry, you can use a Docker config directly.
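For the self-hosted case, here is a minimal sketch of what such a Docker config looks like. The registry host and the credentials are placeholders, and `docker_config` is a hypothetical helper. The `auths` layout with a base64-encoded `user:password` entry is the standard Docker `config.json` format, which Kaniko expects to find at /kaniko/.docker/config.json.

```python
import base64
import json


def docker_config(registry, username, password):
    """Build a Docker config.json structure holding basic-auth credentials for one registry."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"auths": {registry: {"auth": token}}}


if __name__ == "__main__":
    # Placeholder values; substitute your own registry and credentials.
    print(json.dumps(docker_config("registry.example.com", "builder", "change-me"), indent=2))
```

The printed JSON can be saved as config.json and stored in a Kubernetes secret; `kubectl create secret docker-registry` produces an equivalent secret without writing the file by hand.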

Next, build the image using a Pod.
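The manifest below is a sketch under stated assumptions, not the manifest originally used in this post. It assumes the build context lives in /root on the node (mounted via hostPath), that a docker-registry secret named `regcred` exists, and that the destination is a placeholder. The script emits the Pod as JSON, which `kubectl apply` accepts just like YAML.

```python
import json


def kaniko_pod(destination, context_dir="/root", secret_name="regcred"):
    """Return a Pod manifest that runs the Kaniko executor against a hostPath build context."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "kaniko"},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "kaniko",
                "image": "gcr.io/kaniko-project/executor:latest",
                "args": [
                    "--dockerfile=/workspace/Dockerfile",
                    "--context=dir:///workspace",
                    f"--destination={destination}",
                ],
                "volumeMounts": [
                    {"name": "build-context", "mountPath": "/workspace"},
                    {"name": "docker-config", "mountPath": "/kaniko/.docker"},
                ],
            }],
            "volumes": [
                # Assumption: the Pod is scheduled on the node that holds the project files.
                {"name": "build-context", "hostPath": {"path": context_dir}},
                # Expose the secret's .dockerconfigjson key as /kaniko/.docker/config.json.
                {"name": "docker-config", "secret": {
                    "secretName": secret_name,
                    "items": [{"key": ".dockerconfigjson", "path": "config.json"}],
                }},
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder destination; point it at your own registry and repository.
    print(json.dumps(kaniko_pod("registry.example.com/demo/app:latest"), indent=2))
```

Saved as, say, kaniko_pod.py, this can be applied with `python kaniko_pod.py | kubectl apply -f -`. Because hostPath is node-local, you would also need to pin the Pod to the node holding the files, for example with `nodeName`.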


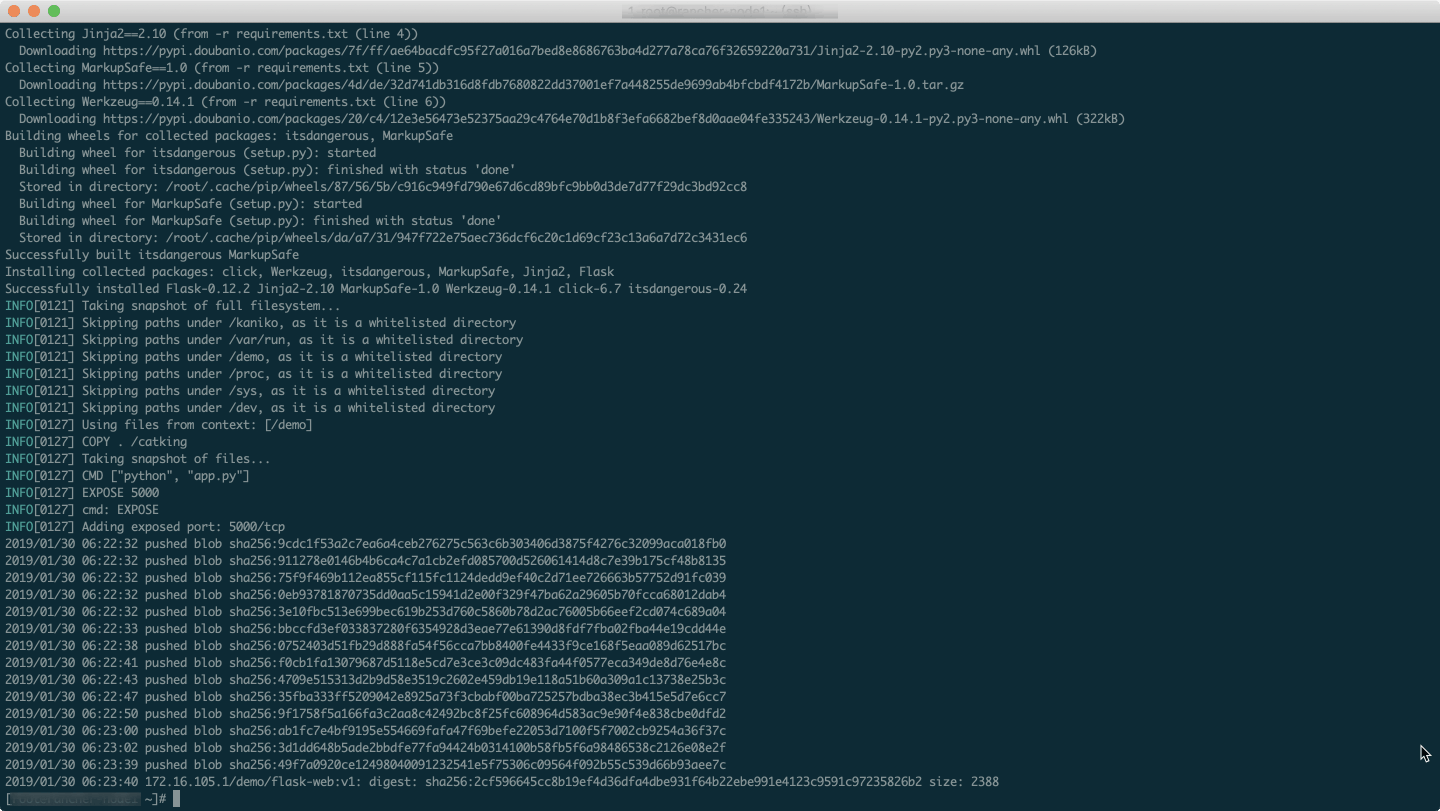

Build Log

image

Supplementary

The GCR image cannot be fetched

You can replace

with

Debug

You can enter debug mode using the debug image:
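As a rough sketch, the debug image (`gcr.io/kaniko-project/executor:debug`) ships a busybox shell at /busybox/sh, so a Pod can start that shell instead of the executor. The Pod name and the `debug_pod` helper are hypothetical.

```python
import json


def debug_pod(name="kaniko-debug"):
    """Return a Pod manifest that starts the busybox shell from the Kaniko debug image."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "kaniko",
                "image": "gcr.io/kaniko-project/executor:debug",
                "command": ["/busybox/sh"],  # replace the executor entrypoint with a shell
                "stdin": True,  # keep stdin open so the shell does not exit immediately
                "tty": True,
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(debug_pod(), indent=2))
```

After applying the manifest, `kubectl attach -it kaniko-debug` connects to the shell, from which /kaniko/executor can be run by hand.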


Build cache

You can turn on build caching with --cache=true. For a local cache, Kaniko uses the directory given by --cache-dir.

You can also use the --cache-repo parameter to specify a remote repository to use for the cache.
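A small sketch of how these flags might be assembled into the executor's argument list. `cache_args` is a hypothetical helper and the directory and repository values are placeholders. Only the flag names (`--cache`, `--cache-dir`, `--cache-repo`) come from Kaniko itself.

```python
def cache_args(enabled=True, cache_dir=None, cache_repo=None):
    """Return the Kaniko executor arguments that control build caching."""
    if not enabled:
        return []
    args = ["--cache=true"]
    if cache_dir:
        args.append(f"--cache-dir={cache_dir}")  # local cache directory
    if cache_repo:
        args.append(f"--cache-repo={cache_repo}")  # remote repository for cached layers
    return args


if __name__ == "__main__":
    # Placeholder repository; use one you are allowed to push to.
    print(cache_args(cache_repo="registry.example.com/demo/cache"))
```

The returned list can be appended to the `args` of the Kaniko container in the build Pod.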

Problems encountered

- The push fails after a successful build, for an unknown reason

- When Harbor is the target registry, the image is not visible in the web UI (https://github.com/GoogleContainerTools/kaniko/issues/539)

Building on Kubernetes

For more discussion on building images on Kubernetes, see: https://github.com/kubernetes/kubernetes/issues/1806