KubeVela is currently at version 1.1. In general, we consider a 1.x release stable enough to try introducing into production. While tracking and studying the project, we have learned some good things about KubeVela, and this document is a short summary.

1. What KubeVela can solve

- For platform developers

Three roles need to be distinguished: development, operations (Ops), and Ops development. Development is oriented toward business requirements, Ops toward business stability, and Ops development toward efficiency. Ops development provides a variety of tools that break down the barrier between development and Ops, both to get business features online quickly and to keep the business stable.

KubeVela is not especially attractive to development or Ops, but it is refreshing for Ops development, because KubeVela can significantly raise a team's platform capability and bring it straight up to the mainstream tier.

- Application lifecycle management

KubeVela provides solutions for managing the application lifecycle: application definition (applications), deployment (appdeployments), versioning (applicationrevisions), rollback (approllouts), and grayscale release (traits, approllouts). These CRD objects can cover a large portion of business requirements.

- Componentization of application workloads and features

KubeVela allows platform developers to assemble and customize the platform to fit their business through these two abstractions: workloads (components) and features (traits).

The orchestration capabilities provided by Workflow open up more ways to integrate various cloud native components, even extending into the CI/CD domain.

2. Challenges for applications under multiple clusters

- Unified view

Switching clusters on an application-oriented platform is a very bad user experience. What we need is not a set of management services deployed on each cluster, with users changing data sources to view the data of different clusters.

The platform should be application-centric: the cluster is just an attribute of the application, and the application should not belong to a particular cluster. A unified view aims to give users a UI that contains a complete description of the application, the runtime where it is located, a real-time service profile, and other information.
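To make the application-centric idea concrete, here is a minimal Python sketch that regroups per-cluster reports into one view keyed by application. The report shape, the cluster names (`cluster-a`, `cluster-b`), and the application name (`shop`) are all hypothetical; a real platform would collect this data from each cluster's API.

```python
from collections import defaultdict


def build_unified_view(cluster_reports):
    """Regroup per-cluster workload reports by application name.

    cluster_reports maps a cluster name to a list of dicts shaped like
    {"app": str, "replicas": int, "ready": int} (a hypothetical shape).
    The result maps each application to its per-cluster status, so the
    cluster becomes an attribute of the application.
    """
    view = defaultdict(dict)
    for cluster, workloads in cluster_reports.items():
        for workload in workloads:
            view[workload["app"]][cluster] = {
                "replicas": workload["replicas"],
                "ready": workload["ready"],
            }
    return dict(view)


# Hypothetical sample data: one application running in two clusters.
reports = {
    "cluster-a": [{"app": "shop", "replicas": 3, "ready": 3}],
    "cluster-b": [{"app": "shop", "replicas": 4, "ready": 2}],
}
unified = build_unified_view(reports)
```

With this shape, a UI can render one page per application and list the clusters inside it, instead of asking the user to pick a cluster first.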

- Definition of an application

No two platform teams in the world have the same definition of an application.

Many details need to be weighed: what attributes an application should contain, what features it should have, and which restrictions apply to which fields. Of course, you can also choose to take on technical debt, defer the issues, and deliver a few versions quickly, but that is a long and increasingly difficult road.

Every team's definition of an application carries some business attributes. There is no way around this, and cumbersome business requirements give platform developers no respite.

Therefore, the emergence of OAM is an opportunity to unify Application Lifecycle Management (ALM). Although the earlier Kubernetes Applications effort never took off, KubeVela is like a star in the dark night, giving people hope.

- Batch Releases

Batch releases have two dimensions: multiple replicas of an application in a single cluster, and the same application across multiple clusters.

Multiple replicas in a single cluster are not updated all at once; they need to be released in batches. This gradual release process is called a rollout.

Services running in multiple clusters or regions also need an observation period between updates and cannot be released everywhere at once.
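The two dimensions can be sketched in a few lines of Python. This is only an illustration of the idea, not how the KubeVela rollout controller computes its batches; the function names and cluster names are hypothetical, and the observation pause between steps is left to the caller.

```python
def split_into_batches(replicas, batch_count):
    """Split `replicas` into `batch_count` batch sizes that differ by at most one."""
    if replicas < 0 or batch_count <= 0:
        raise ValueError("replicas must be >= 0 and batch_count must be > 0")
    base, extra = divmod(replicas, batch_count)
    # The first `extra` batches take one additional replica.
    return [base + 1 if i < extra else base for i in range(batch_count)]


def release_plan(cluster_replicas, batch_count):
    """Return ordered (cluster, batch_number, batch_size) steps.

    Clusters are released one after another, and each cluster is
    released in batches, so nothing is updated all at once.
    """
    steps = []
    for cluster, replicas in cluster_replicas.items():
        batches = split_into_batches(replicas, batch_count)
        for number, size in enumerate(batches, start=1):
            if size > 0:  # skip empty batches when replicas < batch_count
                steps.append((cluster, number, size))
    return steps


# Hypothetical example: two clusters, each released in two batches.
plan = release_plan({"cluster-a": 3, "cluster-b": 4}, batch_count=2)
```

A caller would walk through `plan` in order, apply each step, and wait for its observation period before moving on to the next one.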

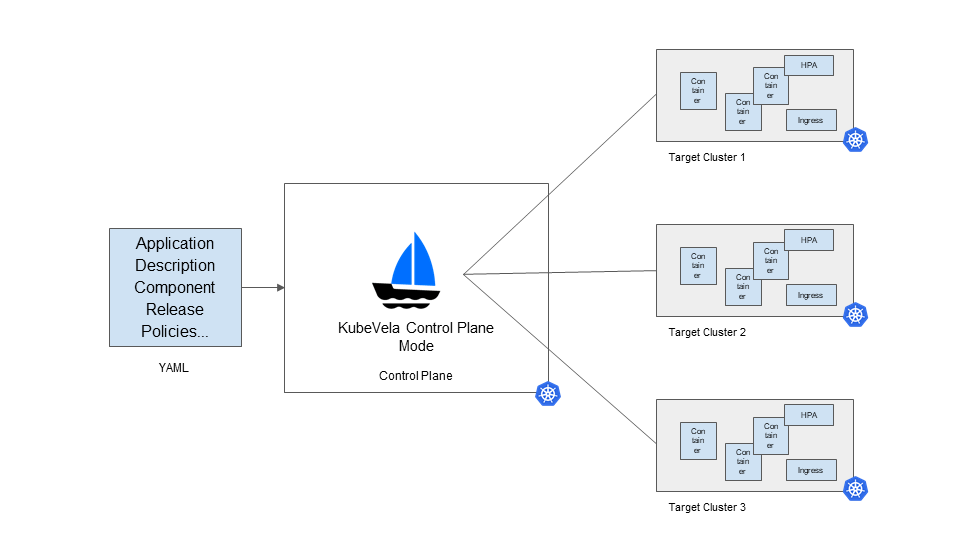

3. Multi-Cluster Application under AppDeployment

Here we use AppDeployment as the main object for distributing the application to multiple clusters.

- Adding multiple sub-clusters to the main cluster

You need to configure the contexts of multiple clusters in the same kubeconfig and then follow the official documentation. Here we add two clusters, prod-cluster-1 and prod-cluster-2. Here are the commands to view the clusters.

- Define the Application

The components and traits need to be defined in advance, and the following is the definition of the application.

Because the Application carries the annotation app.oam.dev/revision-only: "true", the associated resources are not created. We are only defining the application here and do not need to create the corresponding workload.
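As a rough illustration of where that annotation sits, the following Python sketch builds an Application manifest as a plain dictionary. The application name `cluster-test-app` is inferred from the revision names used later in this article; the component name, the `webservice` type, and the image are placeholders, not the definition actually used here.

```python
import json


def revision_only_application(name, components):
    """Build an Application manifest that only produces a revision.

    The app.oam.dev/revision-only annotation is the one discussed in the
    text; everything passed in by the caller is illustrative.
    """
    return {
        "apiVersion": "core.oam.dev/v1beta1",
        "kind": "Application",
        "metadata": {
            "name": name,
            # Annotation values must be strings, hence "true" rather than True.
            "annotations": {"app.oam.dev/revision-only": "true"},
        },
        "spec": {"components": components},
    }


# Placeholder component: name, type and image are assumptions.
app = revision_only_application(
    "cluster-test-app",
    [{"name": "web", "type": "webservice", "properties": {"image": "nginx:1.21"}}],
)
print(json.dumps(app, indent=2))
```

The printed JSON can be converted to YAML or applied as-is, since kubectl accepts JSON manifests.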

- Modify the application version to produce a different application version

To more closely match the production environment, we modify the parameters of the above Application, such as environment variables, image versions, etc., to generate different versions of the application.

This produces the application revisions that are finally distributed to each cluster. The revisions are largely the same, with minor differences, much like routine application updates.

- AppDeployment Distributes Applications in Multiple Clusters

AppDeployment provides a perspective that is closer to the user’s understanding of an application: it contains not only the definition of the application but also the choice of runtime. Here, cluster-test-app-v1 is deployed to the prod-cluster-1 cluster with 3 replicas, while cluster-test-app-v2 is deployed to the prod-cluster-2 cluster with 4 replicas.
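The placement described above can be sketched as a Python dictionary. The field names (`appRevisions`, `revisionName`, `placement`, `clusterSelector`, `distribution`) follow our recollection of the KubeVela 1.x AppDeployment schema and should be treated as an assumption to verify against the official documentation; the AppDeployment name is hypothetical.

```python
def app_deployment(name, placements):
    """Build an AppDeployment manifest from (revision, cluster, replicas) tuples.

    Field names are assumed from the KubeVela 1.x docs; verify before use.
    """
    return {
        "apiVersion": "core.oam.dev/v1beta1",
        "kind": "AppDeployment",
        "metadata": {"name": name},
        "spec": {
            "appRevisions": [
                {
                    "revisionName": revision,
                    "placement": [
                        {
                            "clusterSelector": {"name": cluster},
                            "distribution": {"replicas": replicas},
                        }
                    ],
                }
                for revision, cluster, replicas in placements
            ]
        },
    }


# The revision-to-cluster mapping from the text; the name is hypothetical.
deployment = app_deployment(
    "cluster-test-appdeployment",
    [
        ("cluster-test-app-v1", "prod-cluster-1", 3),
        ("cluster-test-app-v2", "prod-cluster-2", 4),
    ],
)
```

Each entry ties one application revision to one cluster and a replica count, which is what makes the runtime a property of the application rather than the other way around.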

4. Multi-Cluster Applications under Workflow

Workflow is a new feature added in recent versions of KubeVela. It is used here mainly to generate the cross-cluster resource objects that OCM needs.

4.1 Configuring Open Cluster Management (OCM)

- Install Open Cluster Management using the vela command

- Add multiple subclusters to the main cluster

You need to configure the contexts of multiple clusters in the same kubeconfig. On the dev1 cluster, add the kubeconfig of the dev2 cluster.

- Configure environment variables

Use dev1 (master cluster) to manage dev2 (subcluster). On the master cluster, execute the command.

- Find the Token to add a subcluster

On the master cluster, execute the command.

Take the token value from the output and Base64-decode it to obtain a valid hub-token value.
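The decoding step itself is simple. As a minimal sketch in Python, with a made-up sample value standing in for the real token:

```python
import base64


def decode_token(encoded):
    """Base64-decode a token string and return it as text."""
    # Strip surrounding whitespace that copy-pasting often introduces.
    return base64.b64decode(encoded.strip()).decode("utf-8")


# Made-up sample; a real token would come from the main cluster.
sample = base64.b64encode(b"example-hub-token").decode("ascii")
hub_token = decode_token(sample)
```

The decoded string is the value to pass as the hub-token when adding the subcluster.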

- Adding subclusters

The hub-apiserver here is the access address of the kube-apiserver of the main cluster. On the master cluster, execute the command.

- Accept the request to add a new cluster

On the master cluster, execute the command.

- View the managed clusters

On the master cluster, execute the command.

- Install KubeVela rollout on the managed cluster

On the subcluster, execute the command.

4.2 Create a new WorkflowStepDefinition to describe cross-cluster resources

Use Workflow in the primary cluster to define cross-cluster resources in a WorkflowStepDefinition. Here is one of the resources that will be used.

Here, ManifestWork defines the configuration and resource information to be distributed to a cluster. Only dispatch-traits is defined here; dispatch-comp-rev needs to be defined in the same way.

The process of distributing resources can be understood as follows: the resources to be distributed are packaged into ManifestWork objects on the main cluster, OCM delivers them to AppliedManifestWork objects on the subclusters, and the subclusters then extract the resources and create them.
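The packaging step can be pictured with a short Python sketch that wraps arbitrary resources in a ManifestWork. It assumes the OCM convention that a ManifestWork lives in the namespace named after the target managed cluster on the main cluster; the ManifestWork name and the ConfigMap payload are hypothetical.

```python
def wrap_in_manifestwork(name, managed_cluster, resources):
    """Package Kubernetes resources into an OCM ManifestWork manifest.

    The ManifestWork is created on the main cluster, in the namespace
    that corresponds to the target managed cluster.
    """
    return {
        "apiVersion": "work.open-cluster-management.io/v1",
        "kind": "ManifestWork",
        "metadata": {"name": name, "namespace": managed_cluster},
        "spec": {"workload": {"manifests": list(resources)}},
    }


# Hypothetical payload: a ConfigMap to be created on the dev2 subcluster.
config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "demo-config", "namespace": "default"},
    "data": {"greeting": "hello"},
}
work = wrap_in_manifestwork("demo-work", "dev2", [config_map])
```

In the Workflow setup described here, the WorkflowStepDefinition plays the role of this function: it renders the traits or component revisions into the `manifests` list.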

4.3 Creating an application for distribution

Here we use Application to define an application on the main cluster dev1 and distribute it to the subcluster dev2.

In the OCM multi-cluster scenario, the subclusters need to have the KubeVela rollout component deployed. With it, KubeVela can control the rollout process on the subcluster at a finer granularity, such as the ratio and the number of batches for each rollout.

4.4 Issues you may encounter

- OCM errors when creating resources in subclusters

The error indicates insufficient permissions. On the subcluster, bind admin permissions directly to klusterlet-work-sa.
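As a sketch of what such a binding might look like, the following Python builds a ClusterRoleBinding manifest. Two things here are assumptions: that "admin permission" means the built-in `cluster-admin` ClusterRole, and that the service account lives in the `open-cluster-management-agent` namespace. Check both against your own installation.

```python
def admin_binding_for(service_account, namespace):
    """Build a ClusterRoleBinding that grants cluster-admin to a service account.

    Binding to cluster-admin is an assumption about what "admin
    permission" means in the text.
    """
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": f"{service_account}-admin"},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": "cluster-admin",
        },
        "subjects": [
            {
                "kind": "ServiceAccount",
                "name": service_account,
                "namespace": namespace,
            }
        ],
    }


# The namespace is assumed; verify where klusterlet-work-sa actually lives.
binding = admin_binding_for("klusterlet-work-sa", "open-cluster-management-agent")
```

Granting `cluster-admin` is the quickest way past the error; a role limited to the resource kinds being distributed would be a narrower alternative.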

5. Summary

This article discusses the use of KubeVela across multiple clusters. The main points are as follows:

- Unlike the single-cluster case, multi-cluster applications cannot be supported simply by switching data sources, and they place higher demands on interaction design. A multi-cluster application platform needs a unified view of the application's service profile across clusters. It should be application-centric, treat the cluster as an attribute, and keep that order of priority clear.

- AppDeployment is a good abstraction that can inspire platform design, and it shows some resemblance to KubeFed. AppDeployment presents a multi-cluster application from the user’s perspective, but it currently handles workloads at too coarse a granularity: it operates on the whole Application, deleting, updating, and creating workloads in full. For production use, it needs to be combined with rollout.

- Integrating KubeVela multi-cluster applications with OCM through Workflow gives more extensibility, and it also leaves room to switch to other multi-cluster components such as Karmada. Combining the distribution capability of OCM with the KubeVela rollout component on the subclusters achieves batch releases and rolling updates.

KubeVela addresses the application layer that users see, but a complete application platform needs more underlying components to support it.