The core function of an operating system is software governance, and a very important part of software governance is letting multiple programs work together and share the computer’s resources sensibly, without contention.

Memory, as the most basic hardware resource of a computer, holds a very special position. The CPU can directly access only a few kinds of storage: registers, memory (RAM), and the ROM on the motherboard.

Registers are extremely fast, but there are so few of them that most programmers never deal with them directly. Instead, the compiler automatically assigns registers as needed to optimize the program’s performance.

The ROM on the motherboard is non-volatile, read-only storage. Non-volatile means that its data survives a restart, unlike memory (RAM), whose contents are lost when the computer reboots. Being non-volatile and read-only makes ROM ideal for storing the computer’s boot program, the BIOS.

So memory holds a very special position: it is the only basic resource that the CPU supports natively and that programmers deal with directly.

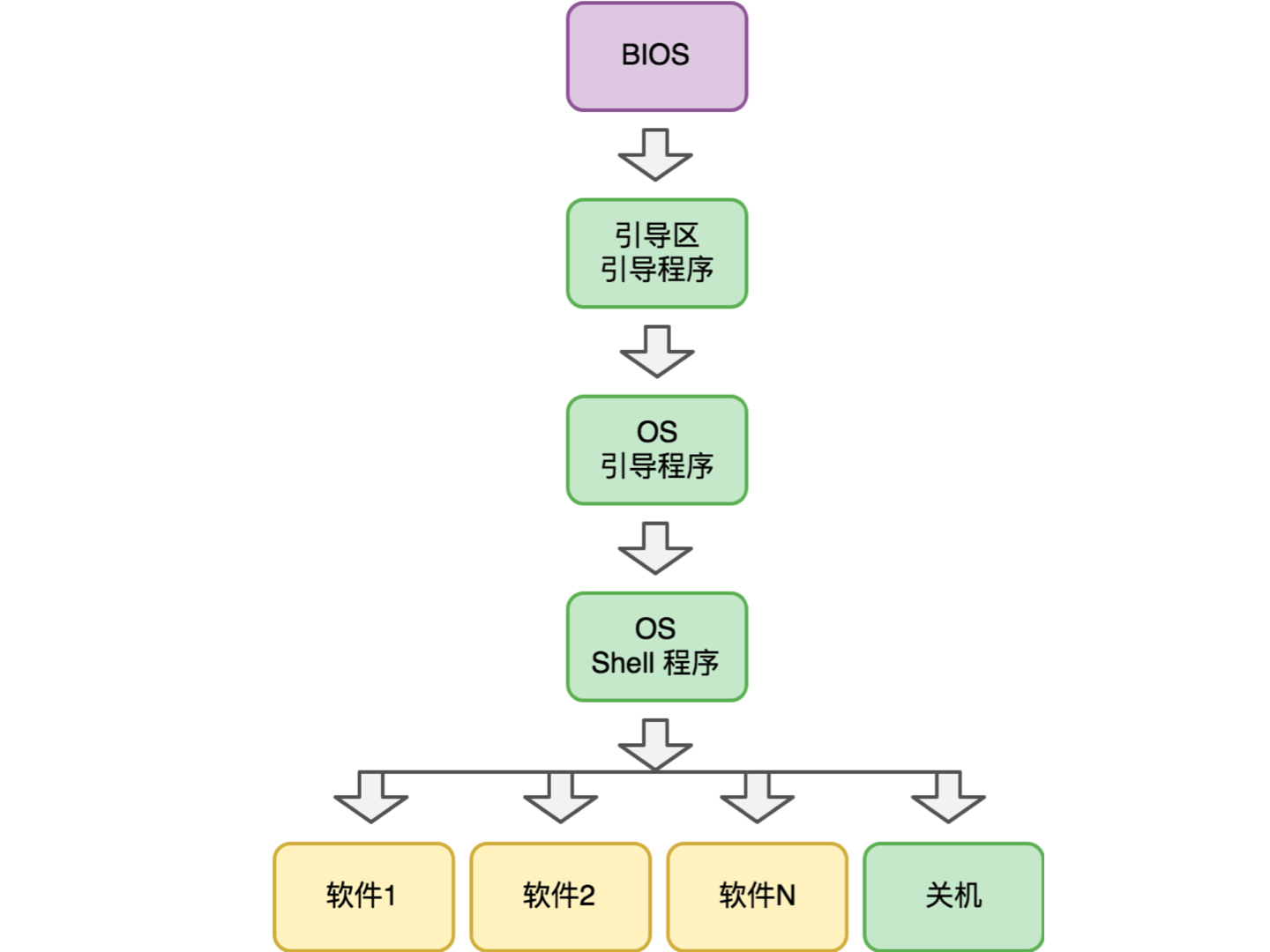

The whole process of computer operation

Of course, this is the CPU’s point of view. For the CPU, everything from power-on, when the first instruction of the BIOS program executes, until the computer finally shuts down is one complete “computing” process. It does not care how many sub-“computing” processes that span contains.

But from the operating system’s perspective, the whole “computing” process from boot-up to shutdown is accomplished by a series of programs, i.e., sub-“computing” processes, working together. In chronological order, they are the BIOS, the boot sector bootloader, the OS bootloader, and finally the OS Shell.

Each stage of the “computing” process exists for a specific reason.

First, the BIOS is not built into the CPU but stored separately in the ROM on the motherboard. The input and output devices of computers have varied greatly across eras: keyboard, mouse, and monitor; touch screens; pure voice interaction. External storage has ranged from floppy disks to hard disks to flash memory. We can cope with these changes by adapting the BIOS program, without modifying the CPU.

The boot sector bootloader marks the boundary where control passes from internal storage (ROM) to external storage. It is so short that the BIOS only needs to load it into memory and run it, yet at that moment control of the system has quietly shifted to external storage.

The boot sector bootloader is written to the boot sector of the external storage rather than built into the BIOS, so that the BIOS does not have to be modified frequently. The BIOS lives in ROM and is effectively part of the hardware, whereas the boot sector bootloader is already software, which makes it much easier to change.
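To make this hand-off concrete, here is a simplified Python model of what a classic PC BIOS does with the boot sector: it reads the first 512-byte sector of the boot device, checks that its last two bytes are the boot signature 0x55 0xAA, copies it to memory address 0x7C00, and jumps there. The `disk` and `memory` byte arrays and the function names are hypothetical stand-ins; a real BIOS is machine code, not Python, and modern UEFI firmware boots differently.

```python
SECTOR_SIZE = 512             # a boot sector is one 512-byte disk sector
BOOT_SIGNATURE = b"\x55\xaa"  # must appear at offsets 510-511 of the sector
LOAD_ADDRESS = 0x7C00         # where a classic PC BIOS loads the boot sector


def is_bootable(sector: bytes) -> bool:
    """Return True if the sector carries the boot signature the BIOS checks for."""
    return len(sector) == SECTOR_SIZE and sector[510:512] == BOOT_SIGNATURE


def load_boot_sector(disk: bytes, memory: bytearray) -> int:
    """Copy sector 0 of `disk` into `memory` at LOAD_ADDRESS.

    Returns the address the BIOS would jump to next, i.e. the point where
    control passes from ROM to code read from external storage."""
    sector = disk[:SECTOR_SIZE]
    if not is_bootable(sector):
        raise RuntimeError("no bootable device")
    if len(memory) < LOAD_ADDRESS + SECTOR_SIZE:
        raise ValueError("memory too small to hold the boot sector")
    memory[LOAD_ADDRESS:LOAD_ADDRESS + SECTOR_SIZE] = sector
    return LOAD_ADDRESS
```

Everything past those 512 bytes (the OS bootloader and the OS itself) is loaded by the boot sector code, not by the BIOS, which is why the BIOS can stay small and stable.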

The OS bootloader (OS stands for Operating System) is where external storage truly takes over control of the computer and the operating system gets to work. A great deal happens in this phase, which we skip here. Once all initialization is complete, the OS hands control over to the OS Shell program.

The OS Shell program handles interaction between the operating system and the user. In the earliest days, when computers used a character-based interface, the OS Shell was a command-line program: command.com in DOS, and programs such as sh or bash in Linux (sh is short for shell).

In that era, you started a program by typing a command line into the shell, which interpreted the command line to work out what the user wanted and then launched the appropriate program. In the GUI era, starting software from the shell became a matter of a mouse click or a finger tap on a touch screen, a much simpler interaction paradigm.
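The shell’s job of “interpreting the command line and starting the appropriate software” can be sketched in a few lines of Python. This is a minimal illustration, assuming a POSIX-style command line: `shlex.split` breaks the line into a program name and arguments (honoring quotes), and `subprocess.run` starts that program and waits for it. The function name `run_command_line` is hypothetical; real shells also handle pipes, redirection, variables, and built-in commands.

```python
import shlex
import subprocess


def run_command_line(line: str) -> int:
    """Interpret one command line the way a minimal shell would.

    Splits the line into argv (e.g. 'echo "a b"' -> ['echo', 'a b']),
    starts the named program, waits for it, and returns its exit status."""
    argv = shlex.split(line)
    if not argv:
        return 0  # empty line: nothing to start
    completed = subprocess.run(argv)  # start the program and wait for it
    return completed.returncode
```

For example, `run_command_line('ls -l /tmp')` would start `ls` with the arguments `-l` and `/tmp` and return its exit status.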

Having walked through the whole process from boot-up to shutdown, you may soon notice a critical detail that has not been explained: how does the computer run software stored on external storage?

This has to do with memory management.

Given the role memory plays, a discussion of memory management only needs to answer two questions clearly:

- How is memory allocated to running software so that programs do not compete for it?

- How is software stored on external storage (e.g. a hard disk) run?

Before answering these two questions, let’s understand a little background: the real mode and the protected mode of the CPU. These two modes are completely different in the way the CPU operates on memory. In real mode, the CPU accesses memory directly through physical addresses. In protected mode, the CPU translates virtual memory addresses into physical memory addresses through an address mapping table, and then reads the data.

Accordingly, an operating system that works in real mode is called a real mode operating system, and an operating system that works in protected mode is called a protected mode operating system.

Memory management in real mode

Under a real-mode operating system, all software, including the operating system itself, is under the same physical address space. To the CPU, they appear to be the same program. How does the operating system allocate memory? There are at least two possible ways.

- Put the address of the OS memory management function at a well-known location (e.g. at 0x10000). Any program that wants to request memory looks up the function there and calls it.

- Design the memory management function as an interrupt handler. An interrupt is the mechanism by which the CPU responds to hardware device events: when something happens on an input/output device that the CPU needs to handle, the device triggers an interrupt.

Memory holds a global interrupt vector table, which is essentially an array of function addresses at a location everyone agrees on. For example, pressing a key on the keyboard triggers interrupt number 9. When the CPU receives an interrupt request, it stops what it is doing to respond (it looks up entry 9 in the interrupt vector table and executes the function at that address), and when it is done it resumes what it was doing.

The interrupt mechanism was originally designed to respond to hardware events, but the CPU also provides instructions that let software trigger an interrupt, which we call a software interrupt. For example, suppose we agree that interrupt 77 is the memory management interrupt; during initialization, the operating system then writes the address of its own memory management function into entry 77 of the interrupt vector table.

So the two methods above are essentially the same method; only the details of the mechanism differ. That said, the interrupt mechanism is more than just a table of function addresses. For example, interrupts have priorities: a higher-priority interrupt can interrupt a lower-priority one, but not the other way around.
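A toy Python model helps show why both approaches boil down to “look up a function at an agreed location and call it”. Here the interrupt vector table is a 256-entry list of callables; the OS installs handlers at initialization, and `interrupt(n)` does what the CPU does on interrupt `n`. The keyboard handler at entry 9 and the memory service at entry 77 follow the examples in the text, while the bump allocator, its start address 0x20000, and all function names are hypothetical. The sketch deliberately leaves out priorities and the saving and restoring of CPU state that real interrupts involve.

```python
from typing import Callable, List, Optional

# Toy real-mode interrupt vector table: 256 handler "addresses"
# (here, Python callables) kept at a location every program knows.
Handler = Callable[..., object]
ivt: List[Optional[Handler]] = [None] * 256


def set_vector(number: int, handler: Handler) -> None:
    """Install a handler; the OS does this for its services during initialization."""
    ivt[number] = handler


def interrupt(number: int, *args: object) -> object:
    """Look up entry `number` and call it, as the CPU does on an interrupt.

    A software interrupt is the same lookup, triggered by a program
    instead of by a hardware device."""
    handler = ivt[number]
    if handler is None:
        raise RuntimeError(f"no handler installed for interrupt {number}")
    return handler(*args)


# Hypothetical OS memory service: a bump allocator that hands out
# consecutive addresses and never frees anything.
MEMORY_MANAGEMENT_INT = 77  # the number "agreed on" in the text
_next_free = 0x20000        # hypothetical start of free memory


def allocate(size: int) -> int:
    global _next_free
    address = _next_free
    _next_free += size
    return address


set_vector(MEMORY_MANAGEMENT_INT, allocate)
set_vector(9, lambda scancode: f"key {scancode:#x} pressed")  # keyboard handler
```

A program would then request 100 bytes with `interrupt(77, 100)`. The fixed-address approach from the first bullet is the same lookup with a single well-known entry instead of a table.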

So, how does the OS run software on external storage (like a hard drive) in real mode?

Quite simply, it reads the complete program from external storage into memory and then executes it. Before execution, however, it does one more thing: it fixes up the floating addresses. Why are there floating addresses? A program cannot know where in memory it will be placed until it is loaded, so many of the addresses it uses to refer to data and functions cannot be fixed in advance and must be determined when the OS loads it into memory.
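Fixing up floating addresses is usually called relocation. The sketch below assumes the program file ships with a relocation table listing which words in the image hold addresses (DOS .EXE files, for instance, carry such a table in their header). The image is modeled as a list of integers laid out as if the program started at address 0; the loader adds the actual load address to every listed word and leaves everything else untouched. The names `relocate`, `image`, and `fixups` are hypothetical.

```python
from typing import List


def relocate(image: List[int], fixups: List[int], base: int) -> List[int]:
    """Return a copy of `image` as it looks once loaded at address `base`.

    `image` holds words computed as if the program started at address 0.
    `fixups` is the relocation table: the indices of exactly those words
    that are addresses. Only they are adjusted; data words stay as-is."""
    loaded = list(image)
    for index in fixups:
        loaded[index] += base
    return loaded


# Word 1 is the address of the data word at index 3 (i.e. 3, relative to 0).
program = [0xE8, 3, 0x00, 42, 0xC3]
loaded_at_2000 = relocate(program, fixups=[1], base=0x2000)
```

After loading at 0x2000, word 1 becomes 0x2003 and so points at the data again, while the value 42 is left alone because it is not an address.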

Overall, real-mode memory management is very easy to understand. In essence, the whole system behaves like one big program split into many pieces of software (program code fragments) that are loaded dynamically.

Memory management in protected mode

But there are two problems with real mode.

One is security. The operating system and all running software can freely modify each other’s data and even program instructions, so it is very easy to cause damage.

The other is that real mode limits both how complex a single program can be and how many programs can run at once.

On the one hand, the more complex a program is, the more code it has and the more storage it needs. A single program may even be larger than the computer’s available memory, in which case it cannot run in real mode at all.

On the other hand, even if each program fits on its own, running several at the same time sharply increases total memory demand, and physical memory is often still insufficient for all of them combined.

So why can we usually open new software without worrying about running out of memory?

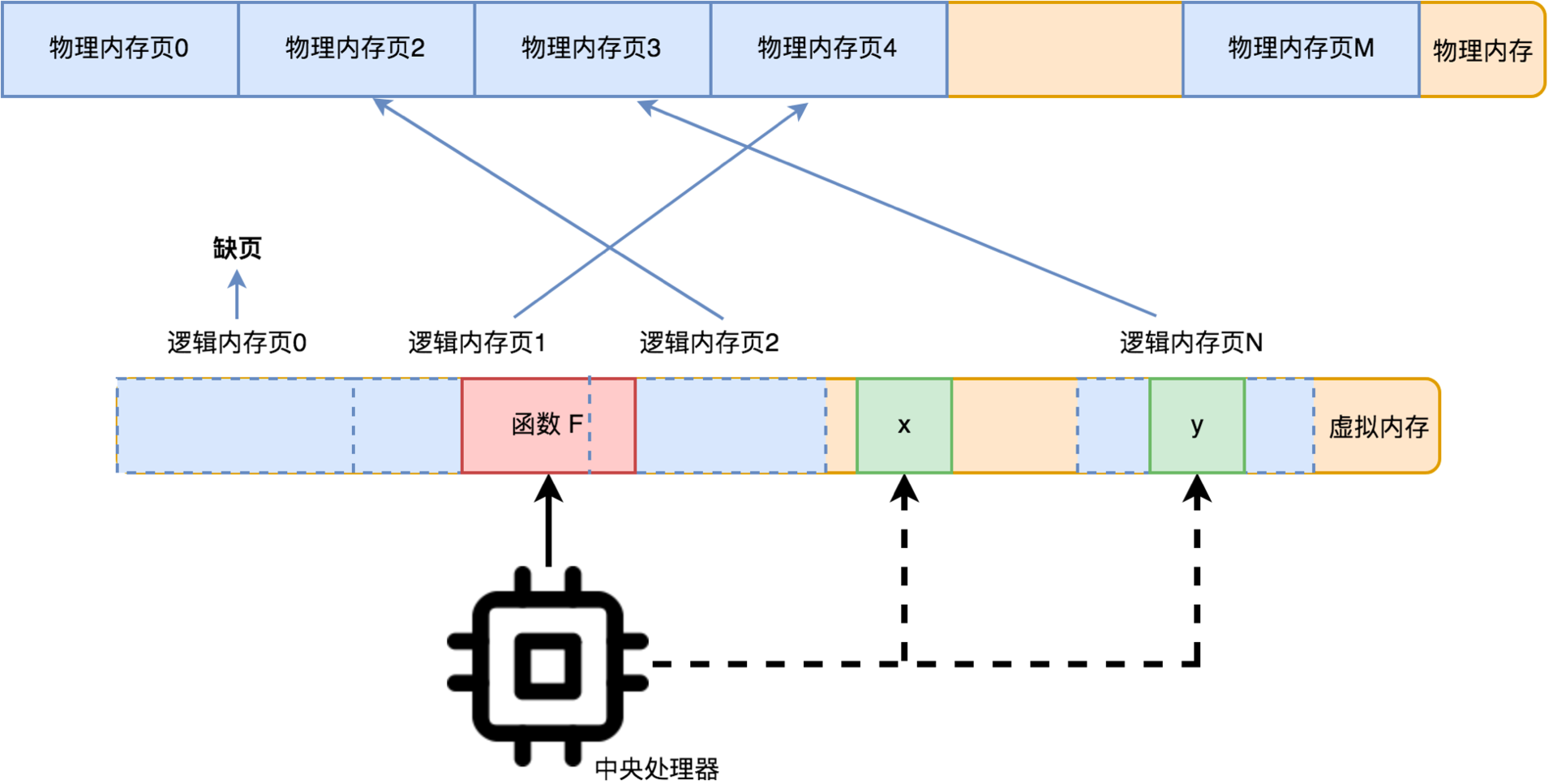

This is where protected mode comes in. In protected mode, memory is no longer accessed directly by physical address; it is accessed through virtual memory. The virtual address space is divided into contiguous memory pages, each of a fixed size, say 64K (x86 processors commonly use 4 KB pages).

Each time the CPU accesses data at a virtual memory address, it first works out which memory page the address falls in, then converts the virtual address into a physical memory address through the address mapping table, and finally reads the data at that physical address. The address mapping table is an array whose index is the page number and whose value is the starting physical address of the memory backing that page.
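The lookup just described fits in a few lines. This sketch models the flat address mapping table as a Python list indexed by page number, holding each page’s starting physical address or `None` when no physical memory backs the page yet. It uses the 64K page size from the text; `translate` and `PageFault` are hypothetical names, and real CPUs do this in hardware with multi-level tables.

```python
from typing import List, Optional

PAGE_SIZE = 64 * 1024  # the 64K example page size used in the text


class PageFault(Exception):
    """Raised when the page has no physical memory behind it (a missing page)."""

    def __init__(self, page: int) -> None:
        super().__init__(f"page fault on page {page}")
        self.page = page


def translate(page_table: List[Optional[int]], vaddr: int) -> int:
    """Map a virtual address to a physical address.

    page_table[n] is the starting physical address of virtual page n,
    or None if that page is not backed by physical memory yet."""
    page, offset = divmod(vaddr, PAGE_SIZE)  # which page, and where inside it
    if page >= len(page_table) or page_table[page] is None:
        raise PageFault(page)
    return page_table[page] + offset
```

With `page_table = [0x30000, None, 0x10000]`, virtual address 0x20010 lies in page 2 at offset 0x10 and translates to physical 0x10010, while any address in page 1 raises `PageFault`.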

Of course, a memory page may not yet have any physical memory behind it. This is called a missing page (a page fault), and the data cannot be read. In this case, the CPU raises a page fault interrupt.

The operating system handles this page fault interrupt. When a page fault occurs, it allocates physical memory for the page and restores the page’s data. If there is no free physical memory to allocate, it picks the page that has gone unaccessed for the longest time and evicts it.

Before eviction, the page’s data is saved: on Unix-like systems usually to a swap partition, and on Windows to a hidden page file (pagefile.sys). The next time the CPU accesses the evicted page, another page fault occurs and the OS has to restore the data.
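The page fault handling and eviction policy above can be simulated with an `OrderedDict`, which keeps resident pages ordered from least to most recently used. In this sketch `resident` plays the role of physical memory, `swap` plays the swap partition or page file, and pages are a hypothetical 4 bytes so the data stays readable. It implements exact least-recently-used eviction as the text describes; real kernels usually approximate LRU rather than track it exactly. The `Pager` class and its methods are hypothetical.

```python
from collections import OrderedDict
from typing import Dict

PAGE_BYTES = 4  # hypothetical tiny page so the example stays readable


class Pager:
    """Toy model of an OS page fault handler with least-recently-used eviction."""

    def __init__(self, frames: int) -> None:
        self.frames = frames  # number of physical pages available
        # virtual page -> data, ordered from least to most recently used
        self.resident: "OrderedDict[int, bytes]" = OrderedDict()
        self.swap: Dict[int, bytes] = {}  # stands in for swap / page file
        self.faults = 0

    def access(self, page: int) -> bytes:
        if page in self.resident:              # page present: no fault
            self.resident.move_to_end(page)    # now the most recently used
            return self.resident[page]
        self.faults += 1                       # page missing: page fault
        if len(self.resident) >= self.frames:  # no free physical page left
            victim, data = self.resident.popitem(last=False)  # least recently used
            self.swap[victim] = data           # save its data before evicting
        # Restore the page from swap, or hand out a fresh zero-filled page.
        data = self.swap.pop(page, bytes(PAGE_BYTES))
        self.resident[page] = data
        return data

    def write(self, page: int, data: bytes) -> None:
        self.access(page)                      # fault the page in if needed
        self.resident[page] = data
```

With two physical frames, writing pages 0 and 1, reading page 0, and then touching page 2 evicts page 1 (the least recently used) to swap; reading page 1 again faults it back in with its saved data and evicts page 0 in turn.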

With this virtual memory mechanism, the operating system does not need to load an entire program into memory at the start; it loads the corresponding program code fragments on demand through page faults. This also solves the problem of running multiple programs at the same time: when memory runs short, the pages unused for the longest time are evicted to free up physical memory.

The problem of running software is solved. So, how does the operating system allocate memory to running software?

In fact, memory allocation is also solved, without any additional mechanism. Since the address space is virtual anyway, the OS can give a program a very large amount of memory right from the start and let it use that memory however it wants. If the program never touches a page, nothing happens. Once it does use a page, the operating system allocates real physical memory for it through a page fault.
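The following sketch illustrates this “reserve generously, pay on first touch” behavior. Reserving memory only advances a counter of handed-out virtual addresses; a physical page (modeled as a `bytearray`) is created only when a byte in that page is first written, which stands in for the page fault. The 4096-byte page size, the `AddressSpace` class, and its methods are hypothetical.

```python
from typing import Dict

PAGE_SIZE = 4096  # hypothetical page size for this sketch


class AddressSpace:
    """Toy virtual address space with allocation on first touch."""

    def __init__(self) -> None:
        self.reserved_end = 0                       # end of handed-out virtual range
        self.page_table: Dict[int, bytearray] = {}  # virtual page -> physical page

    def reserve(self, size: int) -> int:
        """Hand out `size` bytes of virtual address space; costs no physical memory."""
        start = self.reserved_end
        self.reserved_end += size
        return start

    def write_byte(self, vaddr: int, value: int) -> None:
        if not 0 <= vaddr < self.reserved_end:
            raise RuntimeError(f"address {vaddr:#x} was never reserved")
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in self.page_table:  # first touch: the "page fault"
            self.page_table[page] = bytearray(PAGE_SIZE)
        self.page_table[page][offset] = value

    def physical_bytes_used(self) -> int:
        return len(self.page_table) * PAGE_SIZE
```

Reserving 1 GiB with `reserve(1 << 30)` uses no physical memory at all; writing one byte at its start and one byte ten pages further in uses exactly two pages.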

By introducing virtual memory and its page fault mechanism, the CPU neatly resolves the relationship between the operating system and the software it runs.

Each running program, which we call a process, has its own address mapping table. In other words, virtual addresses are not global: each process has its own separate virtual address space.
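A small simulation shows what separate address mapping tables buy. Two hypothetical processes both use the same virtual address, but each process’s table maps that page to a different physical page, so their writes land in different places and neither can reach the other’s memory without a mapping for it. Following the text, each table maps a page number to the starting physical address of that page; the 4096-byte page size, the `Process` class, and the chosen page numbers are assumptions for illustration.

```python
from typing import Dict

PAGE_SIZE = 4096  # hypothetical page size for this sketch


class Process:
    """A process with its own address mapping table: virtual page -> physical start address."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.page_table: Dict[int, int] = {}

    def translate(self, vaddr: int) -> int:
        page, offset = divmod(vaddr, PAGE_SIZE)
        return self.page_table[page] + offset  # KeyError stands in for a fault


physical = bytearray(8 * PAGE_SIZE)  # eight physical pages of "RAM"
a, b = Process("a"), Process("b")
a.page_table[0x400] = 2 * PAGE_SIZE  # both map virtual page 0x400 ...
b.page_table[0x400] = 5 * PAGE_SIZE  # ... to different physical pages

VADDR = 0x400 * PAGE_SIZE + 16       # the same virtual address in both processes
physical[a.translate(VADDR)] = 0xAA
physical[b.translate(VADDR)] = 0xBB
```

Because each process sees the same virtual layout, both can assume a fixed address for their own code and data, and each write stays inside its own physical page.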

In protected mode, one thing the computer’s hardware (chiefly the CPU) and the operating system work together to do is make each program “feel” as though it has the resources of the entire computer to itself. The independent virtual address space maintains this illusion nicely: each program appears to enjoy all of the memory alone. It also solves the floating-address problem of real mode: a program can assume a fixed absolute load address for its own code, so the addresses used by its instructions do not need to be adjusted at load time.

This is very different from real mode. In real mode, all processes share the same physical address space, so they can read, modify, or even destroy each other’s data and thereby crash other processes, including the operating system itself. Memory is the basic resource a process runs on, and keeping each process’s basic resources independent is the most fundamental requirement of software governance. That is why protected mode is called “protected” mode.