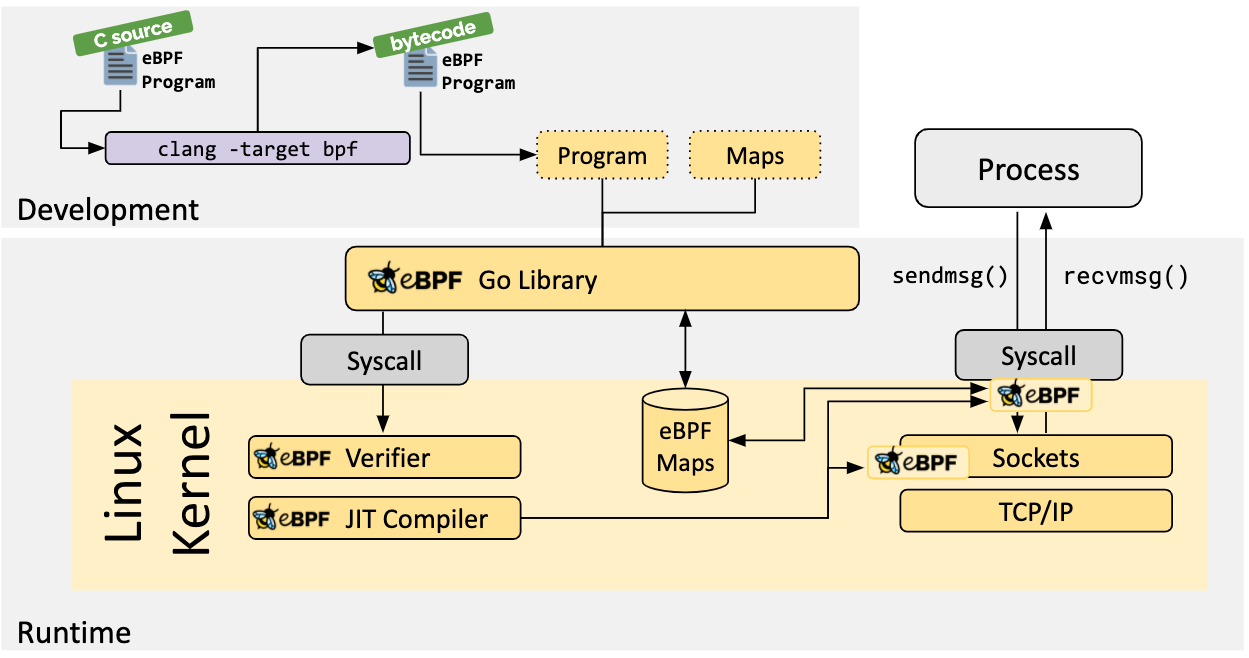

eBPF is a technology that lets sandboxed programs run inside the kernel without modifying the kernel source code or loading kernel modules. Because it makes the Linux kernel programmable, infrastructure software can become smarter and more feature-rich by building on existing abstraction layers rather than adding new ones, without increasing system complexity or sacrificing execution efficiency or security.

BPF itself dates back to the early 1990s. We already rely on it whenever we write filter rules for tcpdump, the tool used to view or “sniff” network packets.

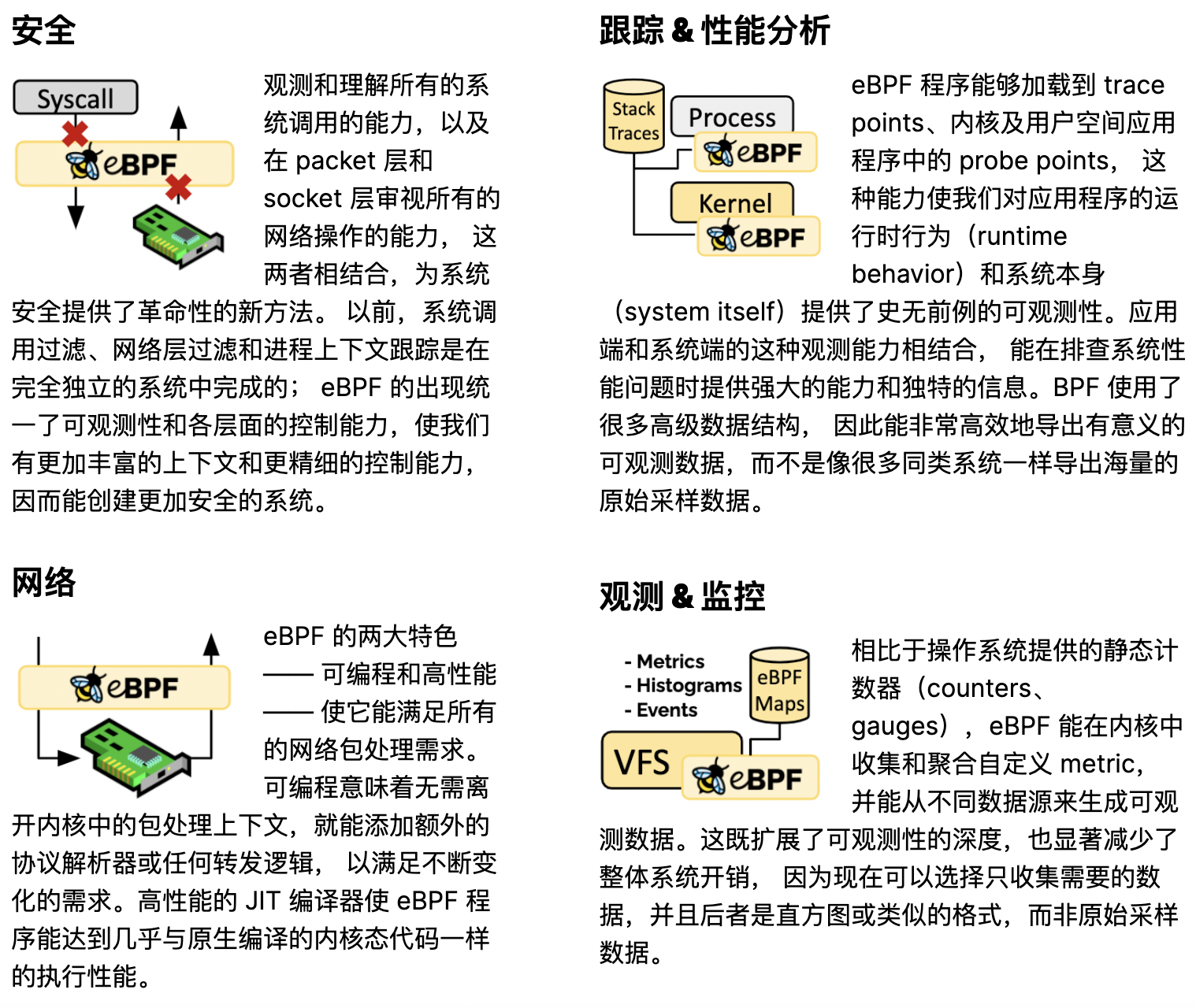

eBPF opens up new ideas and techniques in security, tracing and performance analysis, networking, and observation and monitoring.

- Security: You can perform security checks at the system call, packet, and socket levels, for example to build a DDoS protection system or write firewall programs.

- Network: You can develop high-performance packet handlers in the kernel. Cilium, for example, provides kernel-level load balancing and pushes the service mesh into a deeper layer to address sidecar performance problems.

- Trace & Performance Analysis: Linux provides many types of probe points, such as kernel probes, perf events, tracepoints, user-space probes, user statically defined tracepoints (USDT), and XDP. We can write probe programs that collect information at these points and use it to trace programs and analyze their performance.

- Observation & Monitoring: By continuously observing and monitoring these probe points, we can enrich our tracing programs. The key is that we do not need to change the running programs; we observe them from other programs through eBPF.

In 2014, Alexei Starovoitov, a well-known kernel hacker, extended the functionality of BPF. He increased the number of registers and the allowed program size, added JIT compilation, and created a verifier that checks whether a program is safe. Most impressively, the new BPF programs could run not only while processing packets but also in response to other kernel events, and could pass information back and forth between kernel and user space. Starovoitov’s new version of BPF was called eBPF (the e stands for extended). Now that it has replaced all uses of the older BPF and become so popular, it is often simply called BPF.

You can write your own BPF programs for custom processing and analysis, or you can use tools written by experts for generic performance analysis and tracing. This article uses some of those tools to analyze an rpcx microservice. Because the tools are generic, you can use them to analyze other Go programs, other applications, and even the kernel.

I plan to cover how to write BPF programs in a separate article.

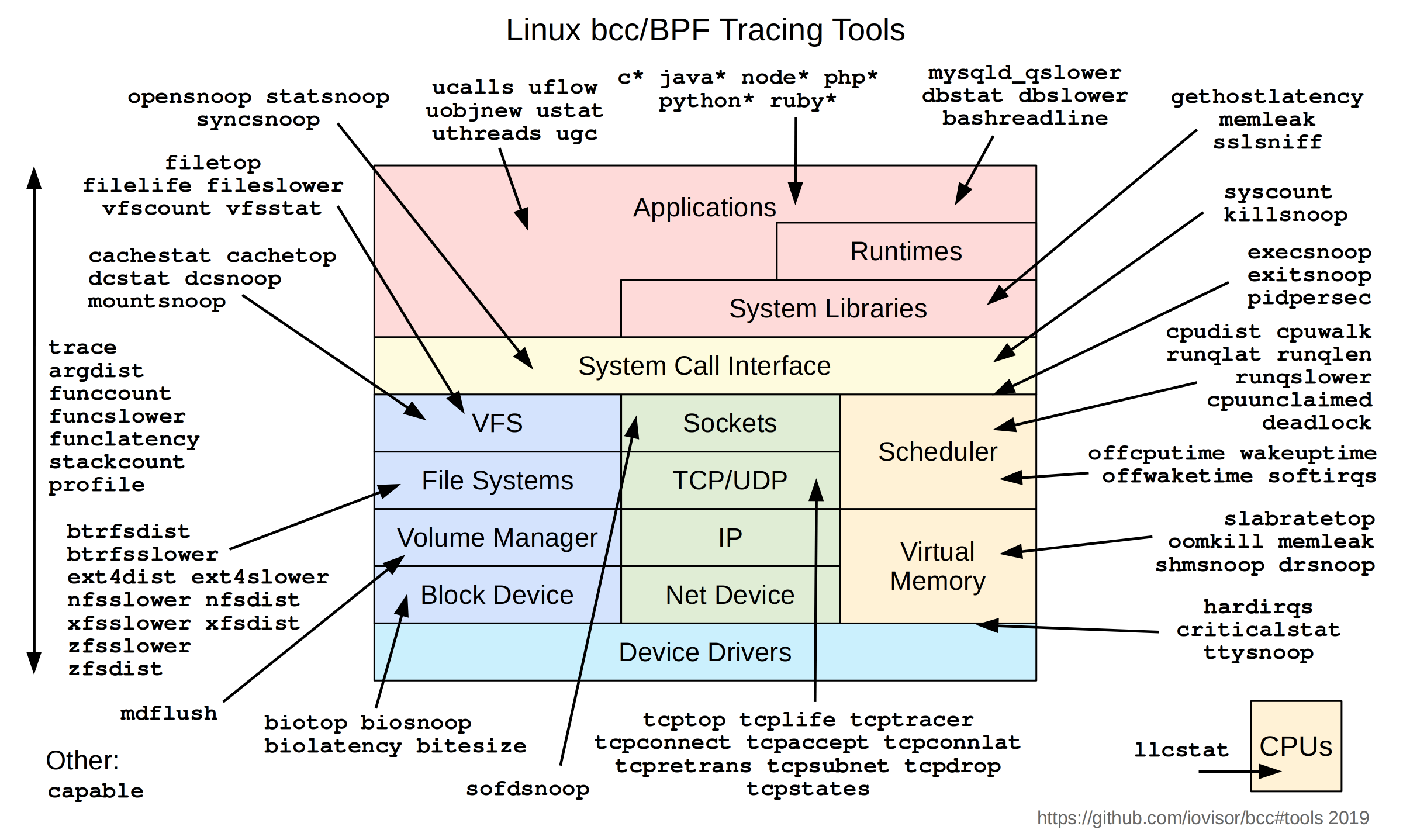

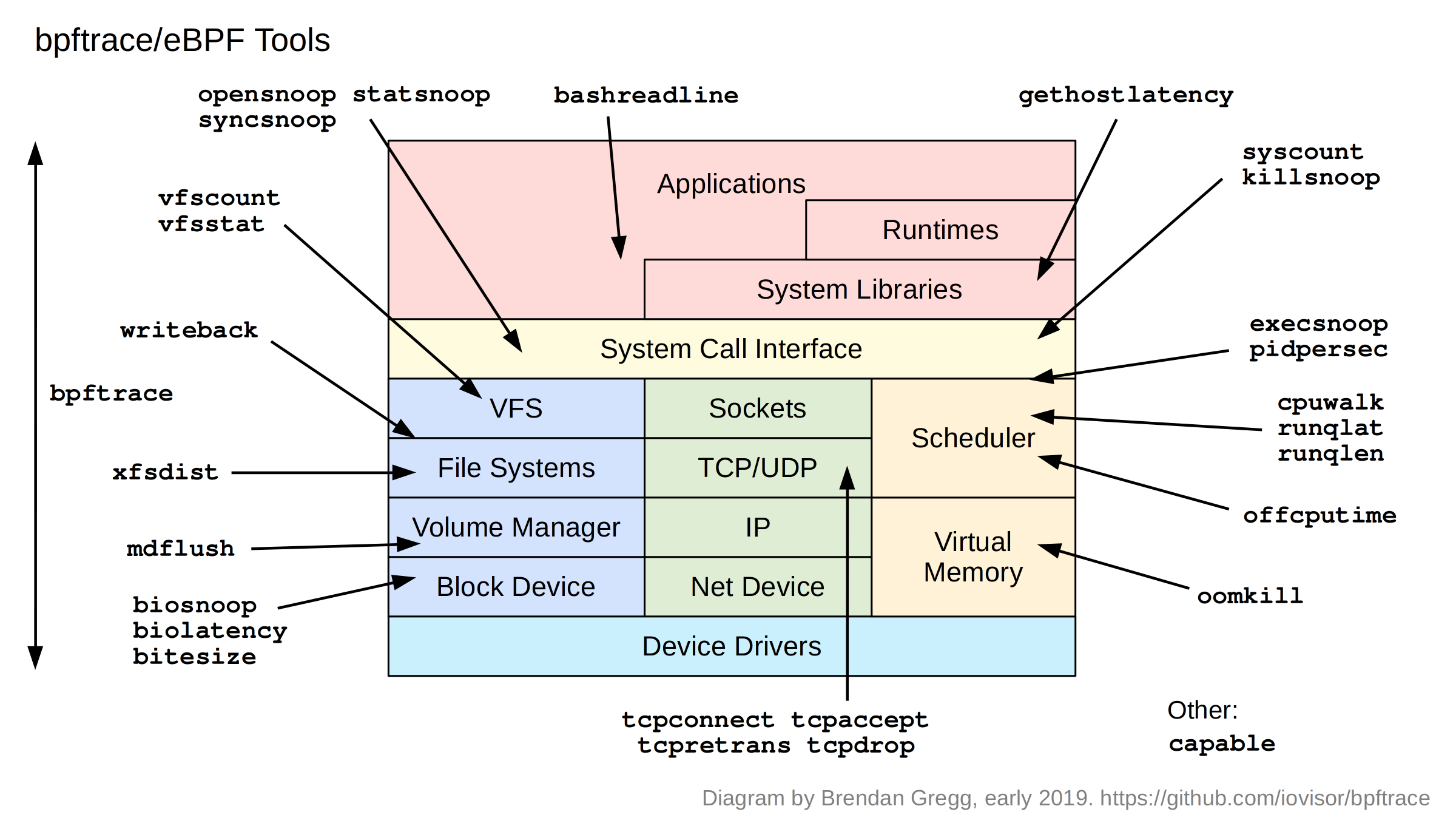

This article focuses on the tools provided by bcc and on bpftrace.

bcc is a toolkit for creating efficient eBPF-based kernel tracing and manipulation programs, and it includes a number of useful command-line tools and examples. bcc simplifies writing eBPF programs: kernel instrumentation is written in C (with a wrapper around LLVM), and front-ends are available in Python and Lua. It also provides high-level libraries for direct integration into applications.

bpftrace is a high-level tracing language for Linux eBPF. The language is inspired by awk and C, and by earlier tracers such as DTrace and SystemTap. bpftrace uses LLVM as a backend to compile scripts into eBPF bytecode, and uses bcc as a library to interact with the Linux eBPF subsystem and with existing Linux tracing capabilities and attachment points.

Simple rpcx microservice program

To analyze a program with eBPF, we first need a program. I have chosen one of the simplest rpcx examples, a minimal microservice that performs multiplication.

The code of this program can be downloaded at rpcx-examples-102basic.

The server-side program is server.go from that example. Compile it with go build server.go and run it (./server).

The client application comes from the same example. It calls the Arith.Mul microservice once every second, and the logic of the microservice is simple: it multiplies its two arguments and returns the result to the client.

Tracing and analyzing microservices

As a demo, this article only traces server-side Arith.Mul calls.

bcc provides a number of BPF-based analysis tools, summarized in the classic diagram put together by Brendan Gregg.

Here we select a few relevant tools to demonstrate how to analyze a running program. Note that the program is already running, and we have not added any extra trace points to it.

bcc package

First you have to install the bcc suite, and your Linux kernel has to be new enough. Some large companies still run servers with 2.6.x kernels, and these old kernels do not support eBPF or its newer features.

I tested this on one of my virtual machines in AliCloud, which runs:

- Linux lab 4.18.0-348.2.1.el8_5.x86_64

- CentOS Stream release 8

Installing these tools is done directly with yum install bcc-tools.

If you are on a different version of the operating system, you can install them by referring to the bcc installation documentation: bcc/INSTALL.

Before using the tools for analysis, you first need to know the name of your microservice Arith.Mul in the symbol table, which you can query using objdump.
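One way to run that lookup, as a rough sketch. It assumes the compiled binary is ./server in the current directory and was built without stripping symbols; the grep filter is only an illustration.

```shell
BIN=./server            # assumed path of the binary built with "go build server.go"
SYMBOL_FILTER='Arith'   # illustrative filter; any part of the method name works

# objdump -t prints the symbol table; keep only lines that mention the service.
if [ -f "$BIN" ]; then
    objdump -t "$BIN" | grep "$SYMBOL_FILTER"
else
    echo "binary $BIN not found, build the server first" >&2
fi
```

The last column of each matching line is the symbol name that the tracing tools expect.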

Its name is main.(*Arith).Mul, and we will use this name to analyze the microservice below.

Make sure the server you just built keeps running.

funccount

funccount is used to count the number of times a function has been called over time.

Execute the following command in the directory where the server is located (if it is in a different path, you need to change the path of the program in the command parameter):
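A minimal sketch of such an invocation. The install directory /usr/share/bcc/tools is an assumption based on the bcc-tools package layout on CentOS, and the wildcard pattern is one way to match main.(*Arith).Mul without shell-unfriendly characters. The tool needs root privileges.

```shell
BCC_TOOLS=/usr/share/bcc/tools   # assumed install dir of the bcc-tools package
BIN=./server                     # assumed path of the running server binary
TARGET="$BIN:main.*.Mul"         # binary:pattern, '*' matches the (*Arith) part

# Count calls for 10 seconds, then print the totals and exit.
if [ -x "$BCC_TOOLS/funccount" ] && [ -f "$BIN" ]; then
    "$BCC_TOOLS/funccount" -d 10 "$TARGET"
else
    echo "funccount or $BIN not found" >&2
fi
```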

Here we set the observation time to 10 seconds, and we can see that this function is called 10 times during these 10 seconds.

It accepts several options. For example, you can keep observing and print the result every 5 seconds:

We can even use it for Go GC related function tracing.

Or track Go runtime scheduling.
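The three variations above might look like the following sketch. The -i and -d options are funccount's interval and duration flags. The two runtime patterns are assumptions chosen for illustration, so check them against the symbol table of your own binary, since Go runtime function names change between releases.

```shell
BCC_TOOLS=/usr/share/bcc/tools           # assumed install dir of the bcc-tools package
BIN=./server                             # assumed path of the running server binary
MUL_TARGET="$BIN:main.*.Mul"
GC_TARGET="$BIN:runtime.gc*"             # assumed pattern for GC-related runtime functions
SCHED_TARGET="$BIN:runtime.schedule"     # assumed scheduler entry point symbol

if [ -x "$BCC_TOOLS/funccount" ] && [ -f "$BIN" ]; then
    # Print counts every 5 seconds; -d bounds the whole run to 30 seconds.
    "$BCC_TOOLS/funccount" -i 5 -d 30 "$MUL_TARGET"
    # Count GC-related runtime functions for 10 seconds.
    "$BCC_TOOLS/funccount" -d 10 "$GC_TARGET"
    # Count scheduler invocations for 10 seconds.
    "$BCC_TOOLS/funccount" -d 10 "$SCHED_TARGET"
else
    echo "funccount or $BIN not found" >&2
fi
```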

funclatency

funclatency measures the time taken by each execution of a function.

If we want to analyze the execution of the Arith.Mul method, we can use the following command, which will show the distribution of the time spent on this function call in the form of a histogram.
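A sketch of such a command, under the same assumptions as before (tools installed in /usr/share/bcc/tools, binary at ./server). The -u flag, which reports the histogram in microseconds, is my choice here and not necessarily what the original run used.

```shell
BCC_TOOLS=/usr/share/bcc/tools   # assumed install dir of the bcc-tools package
BIN=./server                     # assumed path of the running server binary
TARGET="$BIN:main.*.Mul"

# Collect latencies for 10 seconds and print a histogram in microseconds.
if [ -x "$BCC_TOOLS/funclatency" ] && [ -f "$BIN" ]; then
    "$BCC_TOOLS/funclatency" -u -d 10 "$TARGET"
else
    echo "funclatency or $BIN not found" >&2
fi
```

funclatency times a function with a return probe. Return probes on Go binaries are known to be fragile because the runtime can move goroutine stacks, so it may be wise to try this on a test instance first.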


We counted 10 seconds of data. You can see that this function was called 10 times during this period. The average time taken is 31 microseconds.

If we want to check whether a production program has long-tail latency, this tool makes it easy to analyze the statistics.

funcslower

funcslower traces functions that execute slowly, in the kernel or in a user program, for example with the following command.
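As a sketch, assuming the same install directory and binary path as above. The 10 microsecond threshold is a hypothetical value picked for illustration. Unlike funccount, funcslower takes an exact symbol name, not a wildcard pattern.

```shell
BCC_TOOLS=/usr/share/bcc/tools    # assumed install dir of the bcc-tools package
BIN=./server                      # assumed path of the running server binary
FUNC="$BIN:main.(*Arith).Mul"     # exact symbol name from the symbol table
THRESHOLD_US=10                   # hypothetical threshold in microseconds

# Report every call that takes longer than the threshold. Stop with Ctrl-C.
if [ -x "$BCC_TOOLS/funcslower" ] && [ -f "$BIN" ]; then
    "$BCC_TOOLS/funcslower" -u "$THRESHOLD_US" "$FUNC"
else
    echo "funcslower or $BIN not found" >&2
fi
```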


You can even print out the stack information:
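A sketch of the stack-printing variant, using funcslower's -U option (user-space stacks). Paths and the threshold are the same illustrative assumptions as in the other examples.

```shell
BCC_TOOLS=/usr/share/bcc/tools    # assumed install dir of the bcc-tools package
BIN=./server                      # assumed path of the running server binary
FUNC="$BIN:main.(*Arith).Mul"     # exact symbol name from the symbol table
THRESHOLD_US=10                   # hypothetical threshold in microseconds

# -U adds the user-space call stack to each slow call that is reported.
if [ -x "$BCC_TOOLS/funcslower" ] && [ -f "$BIN" ]; then
    "$BCC_TOOLS/funcslower" -U -u "$THRESHOLD_US" "$FUNC"
else
    echo "funcslower or $BIN not found" >&2
fi
```

Adding -K as well would include kernel stacks.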


tcp series tools

bcc provides a set of tools for TCP tracing, which we can use in different scenarios.

- tools/tcpaccept: Tracks TCP passive connections (accept()).

- tools/tcpconnect: Traces TCP active connections (connect()).

- tools/tcpconnlat: Tracks TCP active connection latency (connect()).

- tools/tcpdrop: Traces TCP packets dropped by the kernel, with details.

- tools/tcplife: Traces TCP sessions and summarizes their lifecycle metrics.

- tools/tcpretrans: Tracks TCP retransmissions.

- tools/tcprtt: Tracks TCP round-trip elapsed time.

- tools/tcpstates: Tracks TCP session state changes.

- tools/tcpsubnet: Summarizes and aggregates TCP transmissions by subnet.

- tools/tcpsynbl: Displays TCP SYN backlog.

- tools/tcptop: Aggregates TCP send/recv throughput by host.

- tools/tcptracer: Tracks TCP connection creation/closure (connect(), accept(), close()).

- tools/tcpcong: Tracks the duration of TCP socket congestion control state.

For example, if we are concerned about connection establishment, we can use tcptracer:
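A sketch of how that could be limited to our server process. The process name server and the install directory are assumptions; -p restricts tracing to one PID and -t adds a timestamp column.

```shell
BCC_TOOLS=/usr/share/bcc/tools   # assumed install dir of the bcc-tools package
SERVER_NAME=server               # assumed process name of the rpcx server
# Oldest process whose name is exactly "server"; empty if none is running.
SERVER_PID=$(pgrep -o -x "$SERVER_NAME" 2>/dev/null || true)

# Trace connect/accept/close events of that process only. Stop with Ctrl-C.
if [ -x "$BCC_TOOLS/tcptracer" ] && [ -n "$SERVER_PID" ]; then
    "$BCC_TOOLS/tcptracer" -t -p "$SERVER_PID"
else
    echo "tcptracer not found or $SERVER_NAME not running" >&2
fi
```

Starting the client while this runs should show the accepted connection on the server side.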


There are also a number of xxxsnoop tools that trace specific system calls.

bpftrace

Sometimes we want to implement custom tracing with a script, in the way that tools like awk offer simple scripting.

bpftrace is such a tool. As described earlier, it uses LLVM as the backend to compile scripts into eBPF bytecode, and uses bcc as a library to interact with the Linux eBPF subsystem and with existing Linux tracing capabilities and attachment points.

The bpftrace reference manual can be found at bpftrace reference_guide.

Using our Arith.Mul as an example, we can use the following command to add a probe to the function call to print out the input parameters.
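A sketch of such a one-liner, assuming bpftrace is on the PATH and the server binary is ./server in the current directory. The output format is my own choice.

```shell
BIN=./server   # assumed path of the running server binary
# Attach a uprobe at function entry and print the first two register arguments.
BT_PROGRAM='uprobe:./server:main.*.Mul { printf("%s: a=%d b=%d\n", probe, arg0, arg1); }'

# Needs root. Stop with Ctrl-C.
if command -v bpftrace >/dev/null 2>&1 && [ -f "$BIN" ]; then
    bpftrace -e "$BT_PROGRAM"
else
    echo "bpftrace or $BIN not found" >&2
fi
```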

Why do arg0 and arg1 print exactly the parameters? Simply put, the arguments of our microservice are two int64 integers, and they happen to correspond to arg0 and arg1.

In rpcx, the reply (the service's return value) is also passed in as an argument, and it has not yet been set when the function is entered, so printing arg3 at that point does not show the reply value.

At this point we need to move the probe by adding an offset. How large an offset? Disassembling the function shows that the return value has already been assigned at offset 92, so use the following command to print the return value (by this point the first argument has been overwritten).
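Both steps might look like the sketch below. The offset 92 comes from the text and depends on the Go version and build, so re-check it against your own disassembly. The quoting of the Go symbol in the attach point is an assumption and may need adjusting for your bpftrace version.

```shell
BIN=./server   # assumed path of the running server binary
OFFSET=92      # taken from the text; verify it for your own build
# Probe inside the function body, after the product has been computed.
BT_PROGRAM="uprobe:$BIN:\"main.(*Arith).Mul\"+$OFFSET { printf(\"reply=%d\\n\", arg0); }"

if command -v go >/dev/null 2>&1 && [ -f "$BIN" ]; then
    # Disassemble only the Mul method to find the instruction offset.
    go tool objdump -s 'main\.\(\*Arith\)\.Mul' "$BIN"
fi

# Needs root. Stop with Ctrl-C.
if command -v bpftrace >/dev/null 2>&1 && [ -f "$BIN" ]; then
    bpftrace -e "$BT_PROGRAM"
else
    echo "bpftrace or $BIN not found" >&2
fi
```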

Go has used a register-based calling convention since 1.17, which is why the built-in arg0, arg1, … are used here. If you are using an earlier version of Go, try sarg0, sarg1, … (stack arguments) instead.