Symptoms

One day I ran into a problem: when accessing a web service, some requests failed with Connection Refused.

For various reasons, the web service runs with hostNetwork, so it shares the host's network namespace. When I logged in to the node, I could see that its listening socket was still there, yet a direct curl request to it still returned Connection Refused.

Why? Connection Refused usually means that nothing is listening on the target port: for TCP the kernel answers the SYN with a RST (for UDP it sends back an ICMP port-unreachable message), and the client reports the connection as refused. Here, though, the port was clearly open.
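For comparison, the ordinary case is easy to reproduce. The Python sketch below asks the kernel for a free loopback port, releases it so nothing listens there, and then connects to it; Python surfaces the kernel's refusal as `ConnectionRefusedError` (errno ECONNREFUSED). It assumes loopback networking is available and that no other process grabs the freed port in the meantime; `connect_to_closed_port` is just an illustrative name.

```python
import socket


def connect_to_closed_port() -> bool:
    """Return True if connecting to a port with no listener is refused."""
    # Let the kernel pick a free port, then close the socket so nothing listens on it.
    probe = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    probe.bind(("127.0.0.1", 0))
    port = probe.getsockname()[1]
    probe.close()

    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # With no listening socket, the kernel answers the SYN with a RST.
        client.connect(("127.0.0.1", port))
    except ConnectionRefusedError:
        return True
    finally:
        client.close()
    return False


print(connect_to_closed_port())  # True
```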

Cause of the problem

In fact, a closed port is not the only cause. If a packet hits an iptables rule with a REJECT target, the kernel replies with an ICMP error (icmp-port-unreachable by default), which the client also reports as Connection Refused. That is what caused the problem above.

Listing all the Services (svc) in the cluster showed that a NodePort Service happened to have been assigned the same port number that the web service above listens on.
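Spotting such a collision by eye is tedious in a large cluster. A minimal sketch, assuming you have saved the output of `kubectl get svc -A -o json`: the function below (a hypothetical helper, not part of any Kubernetes tool) scans the Service list for a given nodePort. The sample data is made up.

```python
import json
from typing import Any


def find_nodeport_conflicts(svc_list: dict[str, Any], host_port: int) -> list[str]:
    """Return "namespace/name" for every Service whose nodePort equals host_port."""
    hits = []
    for svc in svc_list.get("items", []):
        meta = svc.get("metadata", {})
        for port in svc.get("spec", {}).get("ports", []):
            # ClusterIP Services have no nodePort field, so they never match.
            if port.get("nodePort") == host_port:
                hits.append(f"{meta.get('namespace')}/{meta.get('name')}")
    return hits


# A trimmed-down, made-up example of `kubectl get svc -A -o json` output.
sample = json.loads("""
{"items": [
  {"metadata": {"namespace": "default", "name": "demo-nodeport"},
   "spec": {"type": "NodePort", "ports": [{"port": 80, "nodePort": 30584}]}},
  {"metadata": {"namespace": "default", "name": "demo-clusterip"},
   "spec": {"type": "ClusterIP", "ports": [{"port": 80}]}}
]}
""")
print(find_nodeport_conflicts(sample, 30584))  # ['default/demo-nodeport']
```

Against a real cluster, replace `sample` with `json.load(...)` on the saved kubectl output and pass the port your host-network process listens on.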

Check the iptables rules on the node as follows (example).


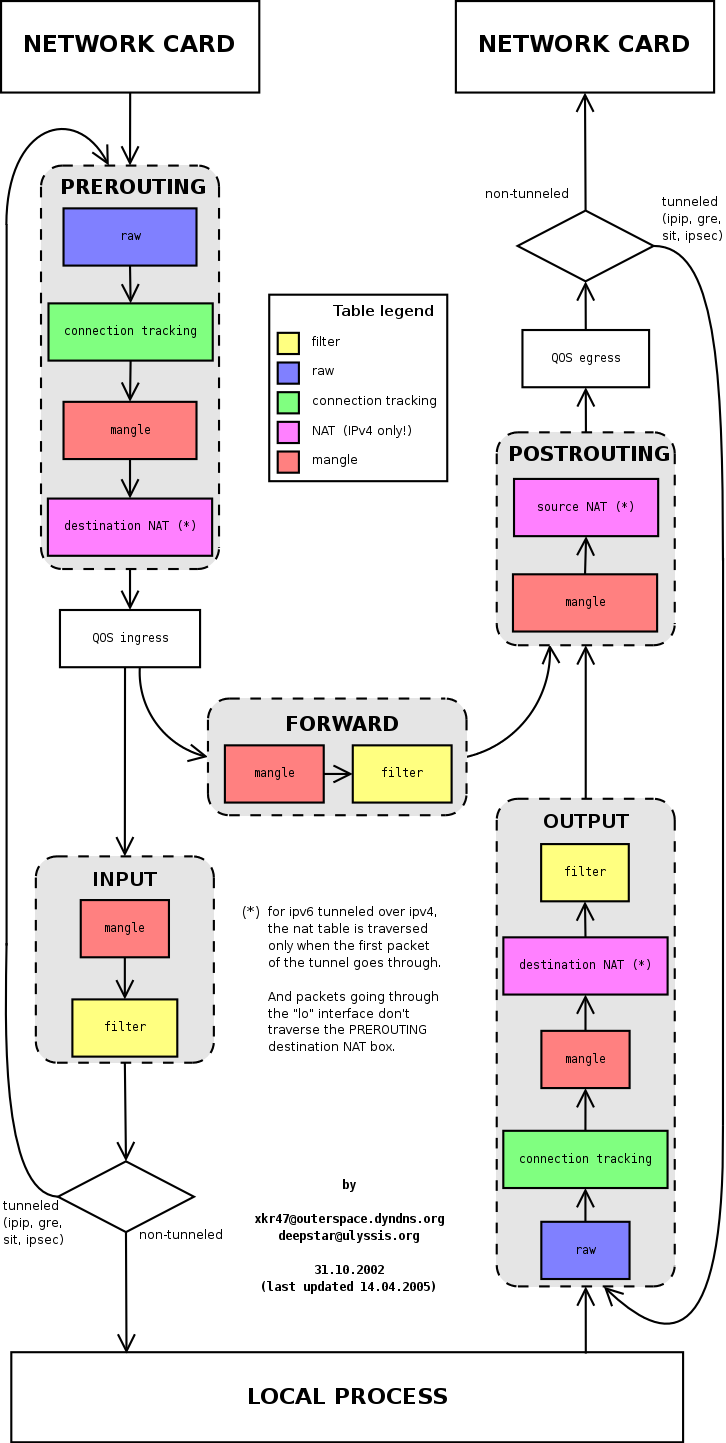

The diagram above shows the stages a packet passes through in the kernel's iptables (source). A packet addressed to the node must go through the PREROUTING and INPUT stages before it is actually delivered to the Local Process.

The iptables rules show that, for the NodePort Service, kube-proxy adds a rule to the INPUT stage that matches destination port 30584 and rejects the packet directly with an icmp-port-unreachable error.

Note that this is the case where the Service has no endpoints. Once the backing Pod is running and the Service has endpoints, the iptables rules forward (DNAT) the packets to the Pod instead, and the Local Process still never receives them. This case is even more confusing, because the client's request does not fail outright; it is silently handled by the Pod.
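Both cases can be summarized with a toy model of the packet path. This is a deliberately simplified Python sketch, not real netfilter or kube-proxy code: `NodePortRule` and `deliver` are hypothetical, the pod address is made up, and the DNAT branch simply takes the first endpoint (real kube-proxy chooses among endpoints randomly).

```python
from dataclasses import dataclass, field


@dataclass
class NodePortRule:
    """Toy stand-in for the iptables rules kube-proxy installs for one NodePort Service."""
    node_port: int
    # Pod addresses backing the Service; an empty list means "no endpoints".
    endpoints: list[str] = field(default_factory=list)


def deliver(dst_port: int, rules: list[NodePortRule]) -> str:
    """Follow a packet addressed to the node through a simplified PREROUTING -> INPUT path."""
    matching = [r for r in rules if r.node_port == dst_port]
    # PREROUTING (nat table): a Service with endpoints DNATs the packet to a Pod,
    # so it is forwarded away and never reaches the local process.
    for rule in matching:
        if rule.endpoints:
            return f"DNAT to pod {rule.endpoints[0]}"
    # INPUT (filter table): a Service without endpoints rejects the packet outright.
    if matching:
        return "REJECT icmp-port-unreachable"
    # Only packets that pass both stages are handed to the local process.
    return "delivered to local process"


print(deliver(30584, [NodePortRule(30584)]))                     # REJECT icmp-port-unreachable
print(deliver(30584, [NodePortRule(30584, ["10.0.0.5:8080"])]))  # DNAT to pod 10.0.0.5:8080
print(deliver(8080, [NodePortRule(30584)]))                      # delivered to local process
```

In both rule-matching cases the local process listening on 30584 is bypassed; only the failure mode seen by the client differs.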

A soul-searching question

A thoughtful reader may ask: doesn't this design set a trap for local processes? When the kernel picks a random local port, it could land on a port number already used by a Kubernetes Service.

In fact, Kubernetes already does its best to prevent this.

When a NodePort Service is created, Kubernetes (specifically kube-proxy) not only installs iptables rules but also opens a socket that listens on the nodePort. As a result:

- When a process explicitly binds to that port, the kernel returns an "address already in use" error (EADDRINUSE).

- When the kernel picks a random local port, it never picks that one, because it is already taken.

So under normal circumstances, a local process will not fall into this trap.
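The two bullet points can be checked with ordinary sockets. In this Python sketch a plain listening socket stands in for kube-proxy's port holder (kube-proxy itself is not involved), and a kernel-chosen loopback port stands in for the nodePort; `demo_port_holder` is an illustrative name.

```python
import errno
import socket


def demo_port_holder() -> tuple[int, bool]:
    """Hold a port the way kube-proxy does, then show how other binds behave."""
    holder = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    holder.bind(("127.0.0.1", 0))  # a free port stands in for the nodePort
    holder.listen()
    held_port = holder.getsockname()[1]

    # 1) An explicit bind to the held port fails with EADDRINUSE.
    app = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        app.bind(("127.0.0.1", held_port))
        err = 0
    except OSError as exc:
        err = exc.errno
    finally:
        app.close()

    # 2) Letting the kernel choose (port 0) never hands out the held port.
    auto = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    auto.bind(("127.0.0.1", 0))
    auto_port = auto.getsockname()[1]
    auto.close()
    holder.close()
    return err, held_port != auto_port


err, distinct = demo_port_holder()
print(err == errno.EADDRINUSE, distinct)  # True True
```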

However, if the local process starts first and kube-proxy starts afterwards, the situation described above occurs.

In this case, kube-proxy still installs the iptables rules and tries to bind the port, but the bind fails because the local process already holds the port.


But since the iptables rules are already in place, the local process can only sit on its port helplessly, shedding tears as it watches its traffic get hijacked.
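The ordering problem can be sketched as follows. This is a toy model of the sequence described above, not kube-proxy's actual code: `toy_sync_nodeport` is hypothetical, and the `rules` list stands in for writing iptables rules. The point it illustrates is that the rule is installed regardless of whether the port could be held.

```python
import socket
from typing import Optional


def toy_sync_nodeport(node_port: int, rules: list[int]) -> Optional[socket.socket]:
    """Hypothetical kube-proxy-like sync: install the rule, then try to hold the port.

    Returns the holder socket on success, or None if the port was already taken.
    The rule stays installed either way.
    """
    rules.append(node_port)  # stands in for writing the iptables rules
    holder = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        holder.bind(("127.0.0.1", node_port))
        holder.listen()
    except OSError:
        holder.close()
        return None  # port already held by the local process; the failure is only logged
    return holder


# The local process starts first and takes the port.
local_app = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
local_app.bind(("127.0.0.1", 0))
local_app.listen()
port = local_app.getsockname()[1]

rules: list[int] = []
held = toy_sync_nodeport(port, rules)
print(held is None, port in rules)  # True True: bind failed, rule installed anyway
local_app.close()
```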

Solution

The solution is to configure a ResourceQuota in each user namespace that sets the quotas for services.nodeports and services.loadbalancers to 0. Any attempt by users to create a NodePort or LoadBalancer Service is then rejected by the ResourceQuota.
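For reference, a minimal sketch of such a quota, built as a Python dict and printed as JSON (kubectl accepts JSON manifests, for example via `kubectl apply -f -`). The namespace `team-a` and the quota name are hypothetical placeholders; the `services.nodeports` and `services.loadbalancers` keys are the standard ResourceQuota object-count names.

```python
import json


def nodeport_block_quota(namespace: str, name: str = "no-external-services") -> dict:
    """Build a ResourceQuota manifest that forbids NodePort and LoadBalancer Services."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "hard": {
                # Quantities are strings in the Kubernetes API.
                "services.nodeports": "0",
                "services.loadbalancers": "0",
            }
        },
    }


# Example: python3 quota.py | kubectl apply -f -   (script name is hypothetical)
print(json.dumps(nodeport_block_quota("team-a"), indent=2))
```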