Overview

The mainstream virtual NIC solutions are tun/tap and veth, with tun/tap appearing much earlier. Kernels released since Linux 2.4 build the tun/tap driver by default, and tun/tap is widely used. In cloud-native virtual networks, flannel0 in flannel's UDP mode is a tun device, and OpenVPN also uses tun/tap for data forwarding.

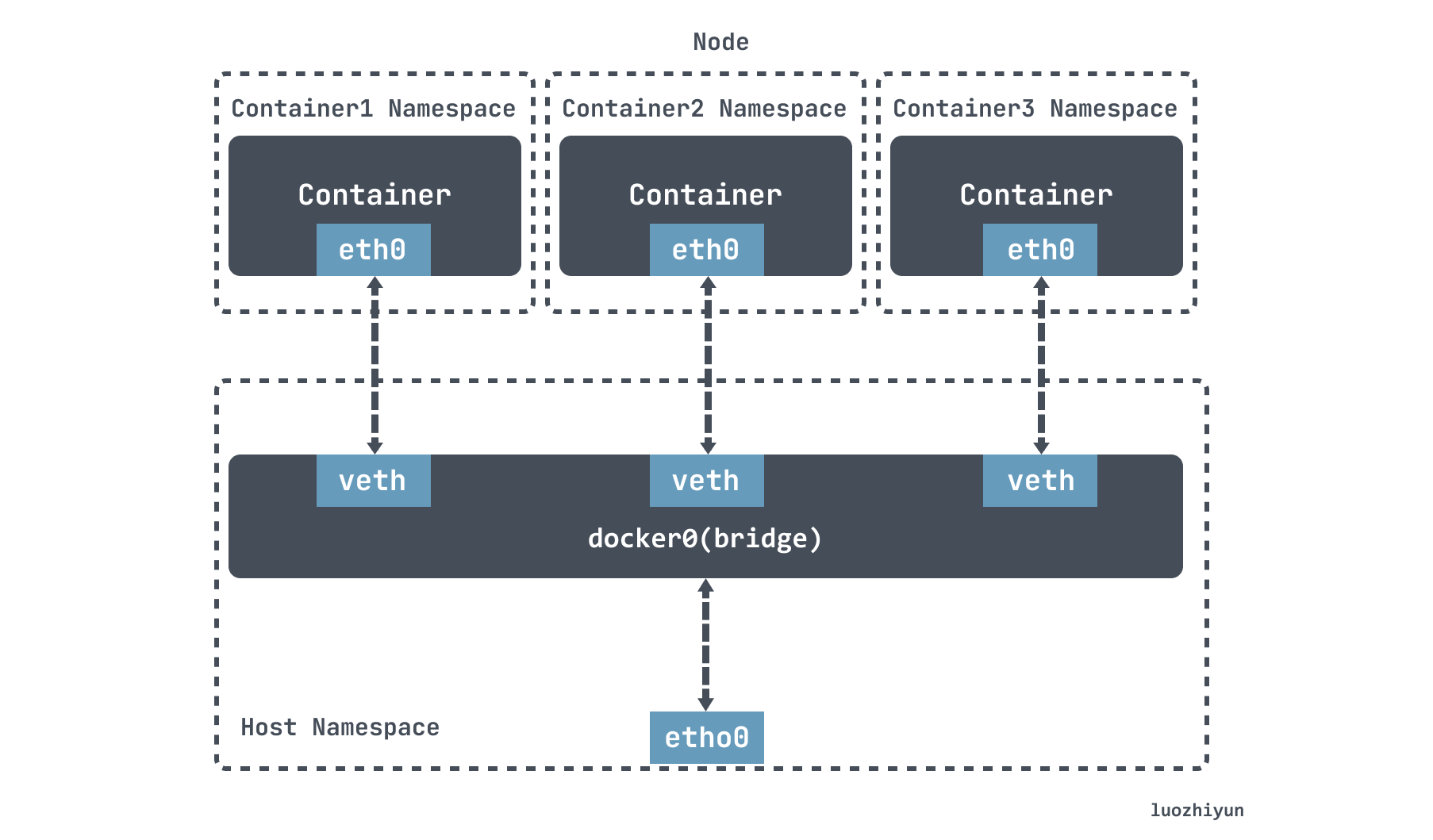

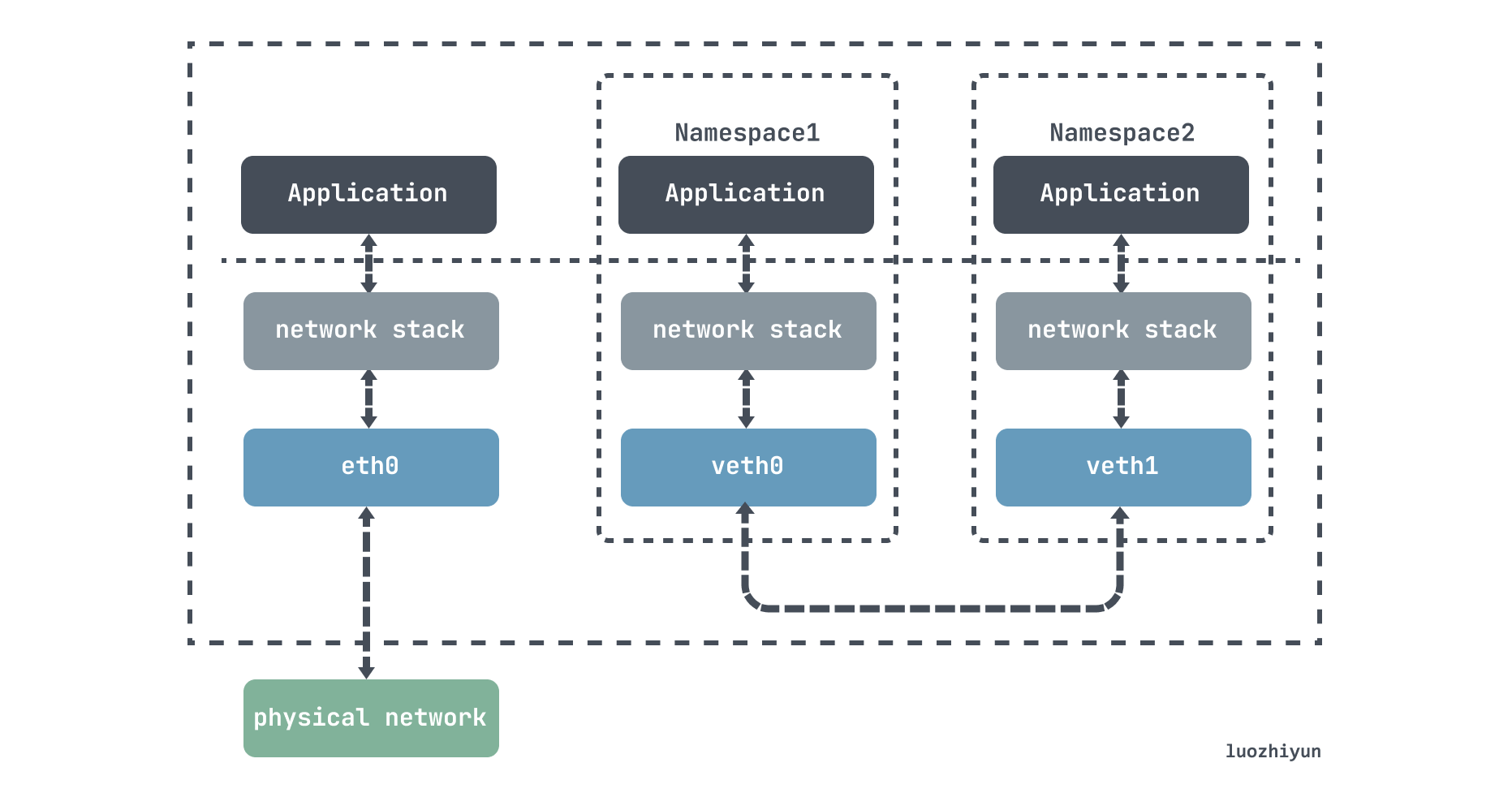

veth is another mainstream virtual NIC solution. In Linux Kernel version 2.6, Linux started to support network namespace isolation and also provided a special Virtual Ethernet device (commonly abbreviated as veth) that lets two isolated network namespaces communicate with each other. veth is not actually a single device but a pair of devices, and is therefore often referred to as a veth-pair.

The Bridge mode in Docker relies on veth-pairs to connect containers to the docker0 bridge, through which they communicate with the host and even with other machines in the outside world.

tun/tap

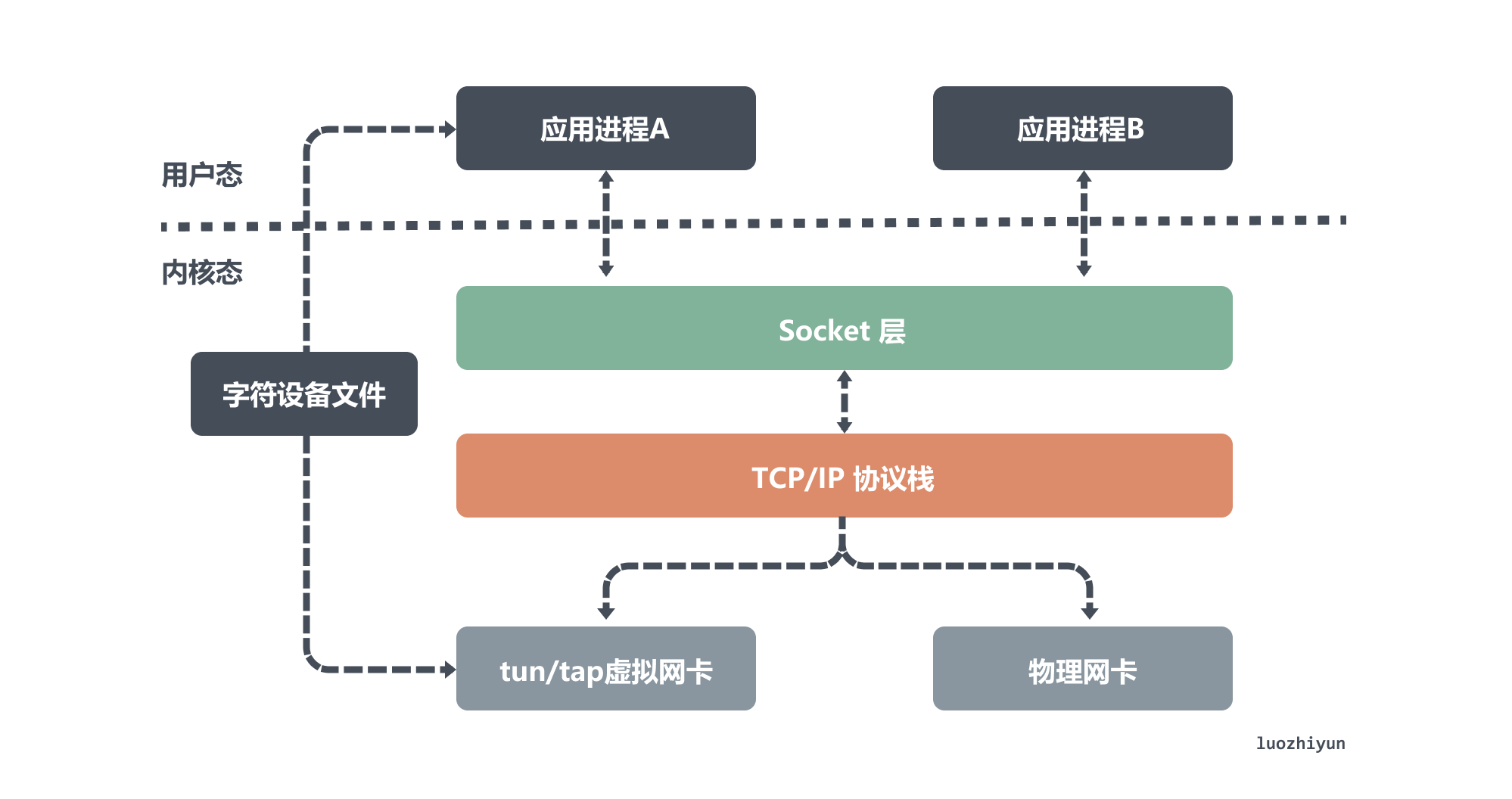

tun and tap are two relatively independent virtual network devices that act as virtual NICs. They function the same as physical NICs except that they have no hardware behind them. In addition, tun/tap is responsible for transferring data between the kernel network stack and user space.

- A tun device is a layer 3 (network layer) device. A program reads IP packets from it through the /dev/net/tun character device and may write only IP packets, so it is often used for point-to-point IP tunnels such as OpenVPN, IPSec, etc.

- A tap device is a layer 2 (link layer) device, equivalent to an Ethernet device. On Linux it is opened through the same /dev/net/tun character device, in tap mode. A program reads MAC layer data frames from it and may write only MAC layer data frames, so it is often used as the emulated NIC of a virtual machine.
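To make the character-device interface more concrete, here is a minimal sketch of how a user space program could attach to a tun or tap device on Linux. It assumes a 64-bit Linux host, where `struct ifreq` is 40 bytes, and uses the constants defined in `<linux/if_tun.h>`. The helper names `build_ifreq` and `open_tun_tap` are made up for this illustration, and actually opening the device requires root or CAP_NET_ADMIN.

```python
import fcntl
import os
import struct

# Constants from <linux/if_tun.h>
TUNSETIFF = 0x400454CA  # ioctl that binds the file descriptor to an interface
IFF_TUN = 0x0001        # layer 3 mode: reads and writes carry IP packets
IFF_TAP = 0x0002        # layer 2 mode: reads and writes carry Ethernet frames
IFF_NO_PI = 0x1000      # do not prepend the 4-byte packet-information header


def build_ifreq(name: str, flags: int) -> bytes:
    """Pack the name and flags fields of struct ifreq (hypothetical helper).

    Layout assumed here: 16-byte interface name, 2-byte flags,
    then padding up to 40 bytes (sizeof(struct ifreq) on 64-bit Linux).
    """
    return struct.pack("16sH22x", name.encode(), flags)


def open_tun_tap(name: str, tap: bool = False) -> int:
    """Attach to (or create) a tun/tap interface and return its file descriptor.

    Hypothetical helper; needs root or CAP_NET_ADMIN to succeed.
    """
    fd = os.open("/dev/net/tun", os.O_RDWR)
    mode = IFF_TAP if tap else IFF_TUN
    fcntl.ioctl(fd, TUNSETIFF, build_ifreq(name, mode | IFF_NO_PI))
    return fd
```

After `fd = open_tun_tap("tun0")`, each `os.read(fd, 2048)` would return one IP packet that the kernel routed to tun0, and each `os.write(fd, packet)` would inject one packet into the kernel network stack. With `tap=True` the same calls carry whole Ethernet frames instead.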

From the above diagram, we can see the difference between the physical NIC and the virtual NIC emulated by the tun/tap device. Although they are both connected to the network stack at one end, the physical NIC is connected to the physical network at the other end, while the tun/tap device is connected to a file as a transmission channel at the other end.

From the introduction above, we know that a virtual NIC serves two main purposes. One is to connect other devices (virtual or physical NICs) and a Bridge, which is the role of the tap device. The other is to let user space programs send and receive data through the virtual NIC, which is the role of the tun device.

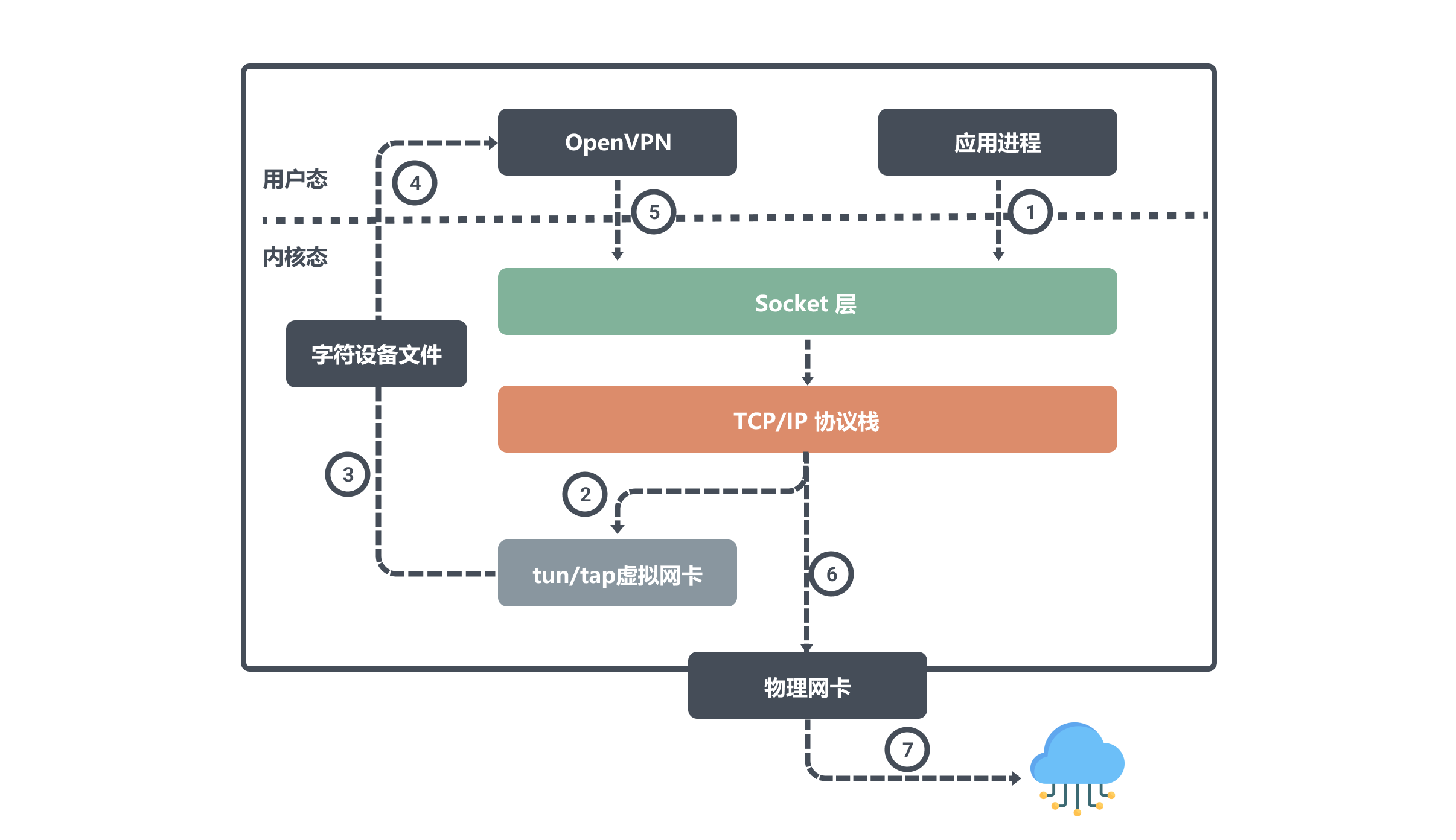

OpenVPN uses tun devices to send and receive data

OpenVPN is a common example of using a tun device, which makes it easy to build a LAN-like private network channel between different network access sites. The core mechanism is to create a tun device on both the OpenVPN server and the client’s computer so that they can reach each other through the tun devices’ virtual IPs.

For example, two hosts on the public network, nodes A and B, have physical NICs configured with the IPs ipA_eth0 and ipB_eth0, respectively. The OpenVPN client and server running on nodes A and B each create a tun device on their node, and both read from and write to those tun devices.

Assume that the virtual IPs of these two devices are ipA_tun0 and ipB_tun0. When an application on node B wants to communicate with node A through the virtual IP, the packets flow in the direction shown in the figure below.

The user process initiates a request to ipA_tun0, and after a routing decision the kernel writes the data from the network protocol stack to the tun0 device. OpenVPN then reads that data from the character device file and sends it out as a request to ipA_eth0. Based on its routing decision, the kernel network protocol stack sends this data out through the local eth0 interface toward ipA_eth0.
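What a program like OpenVPN reads from the tun0 character device is a raw IP packet. The sketch below shows how a user space program could decode the fixed part of an IPv4 header to learn where such a packet is headed. It is only an illustration of the idea, not OpenVPN's actual code, and the names `IPv4Header` and `parse_ipv4_header` as well as the sample addresses are assumptions.

```python
import socket
import struct
from typing import NamedTuple


class IPv4Header(NamedTuple):
    version: int
    header_len: int  # header length in bytes
    protocol: int    # 1 = ICMP, 6 = TCP, 17 = UDP
    src: str
    dst: str


def parse_ipv4_header(packet: bytes) -> IPv4Header:
    """Decode the fixed 20-byte part of an IPv4 header (hypothetical helper)."""
    if len(packet) < 20:
        raise ValueError("packet is shorter than an IPv4 header")
    (ver_ihl, _tos, _total_len, _ident, _frag,
     _ttl, protocol, _checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", packet[:20])
    version = ver_ihl >> 4
    if version != 4:
        raise ValueError("not an IPv4 packet")
    return IPv4Header(
        version=version,
        header_len=(ver_ihl & 0x0F) * 4,
        protocol=protocol,
        src=socket.inet_ntoa(src),
        dst=socket.inet_ntoa(dst),
    )


# A hand-built sample header standing in for data read from tun0.
# The addresses are made up for illustration.
sample = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 20, 0, 0, 64, 17, 0,
    socket.inet_aton("10.8.0.2"), socket.inet_aton("10.8.0.1"),
)
header = parse_ipv4_header(sample)
```

Because the program sees the whole packet, it is free to encrypt it, wrap it in another protocol, or pick a different peer before sending it out through a normal socket.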

Let’s also look at how Node A receives data.

When Node A receives data via the physical NIC eth0, the data is handed to the kernel network stack. Because the OpenVPN program is listening on the destination port, the network stack delivers the data to OpenVPN.

After the OpenVPN program gets the data, it finds that the destination IP belongs to the tun0 device, so it writes the data from user space to the character device file. The tun0 device then passes the data to the protocol stack, which finally forwards it to the application process.

From the above we can see that transferring data through a tun/tap device requires passing through the protocol stack twice, which inevitably costs some performance. Where conditions permit, direct container-to-container communication therefore does not use tun/tap as the preferred solution and is generally based on veth, which is introduced later. However, tun/tap does not have the constraints of veth, which requires devices to exist in pairs and transfers data unchanged. Once a packet reaches the user space program, the programmer has full control over how to modify it and where to send it, so the tun/tap solution has a broader scope of application than the veth solution.

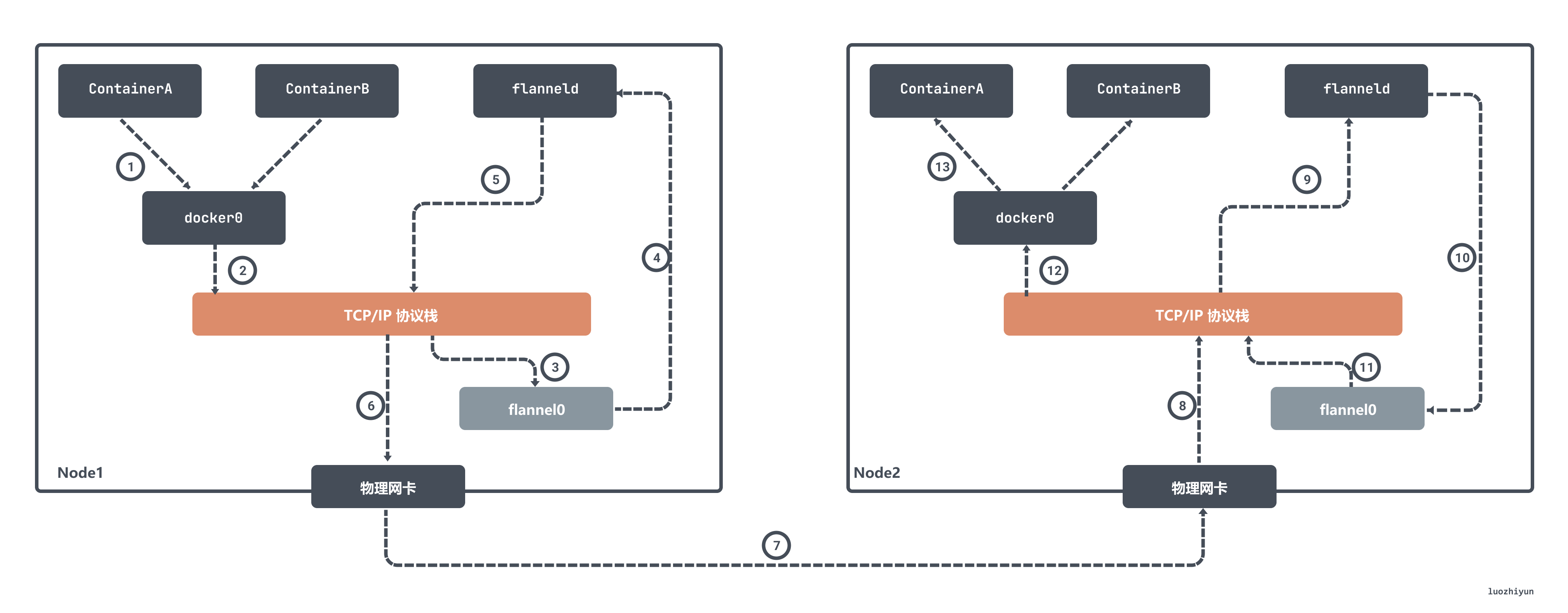

flannel UDP mode uses tun devices to send and receive data

Early on, flannel used tun devices to implement cross-host communication over UDP, in a way similar to OpenVPN above.

In flannel, flannel0 is a Layer 3 tun device that is used to pass IP packets between the OS kernel and the user application. When the operating system sends an IP packet to the flannel0 device, flannel0 hands the packet to the application that created the device, the flanneld process, which handles the packets coming from flannel0 and forwards them over UDP.

The flanneld process matches the destination IP address to the corresponding subnet, looks up in Etcd the IP address of the host (Node2) that owns this subnet, and then encapsulates the packet in a UDP packet and sends it to Node2. Since flanneld on each host listens on port 8285, the flanneld process on Node2 receives the incoming data on port 8285 and extracts the encapsulated IP packet addressed to ContainerA.

flanneld writes this IP packet directly to the TUN device it manages, which is the flannel0 device. The network stack then routes the packet to the docker0 bridge, which acts as a Layer 2 switch and sends the packet to the correct port, from which it enters ContainerA’s network namespace through the veth pair device.

The Flannel UDP mode described above is now deprecated because the data is copied three times between user space and kernel space. First, the container sends the packet through the docker0 bridge into the kernel. Second, the packet goes from the flannel0 device to the flanneld process. Third, after UDP encapsulation, flanneld re-enters the kernel and sends the UDP packet out through the host’s eth0.
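The subnet-matching step can be sketched in a few lines. This is only an illustration of the lookup logic, not flanneld's implementation: the lease table below is hard-coded with made-up subnets and host addresses, whereas flanneld would obtain this mapping from Etcd, and the function name `find_host_for` is hypothetical. Port 8285 is the one mentioned in the text.

```python
import ipaddress
from typing import Dict, Optional, Tuple

FLANNEL_UDP_PORT = 8285  # port flanneld listens on in UDP mode, per the text

# Hypothetical subnet leases (container subnet -> host IP).
# In flannel this mapping would come from Etcd.
SUBNET_TO_HOST: Dict[str, str] = {
    "10.244.1.0/24": "192.168.0.11",  # Node1
    "10.244.2.0/24": "192.168.0.12",  # Node2
}


def find_host_for(dst_ip: str, leases: Dict[str, str]) -> Optional[Tuple[str, int]]:
    """Return the (host, port) that should receive a packet for dst_ip, or None."""
    addr = ipaddress.ip_address(dst_ip)
    for subnet, host in leases.items():
        if addr in ipaddress.ip_network(subnet):
            return host, FLANNEL_UDP_PORT
    return None


target = find_host_for("10.244.2.7", SUBNET_TO_HOST)
```

With a target in hand, a forwarding process could pass the packet it read from the tun device to an ordinary UDP socket, for example `sock.sendto(ip_packet, target)`.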

tap device as a virtual machine NIC

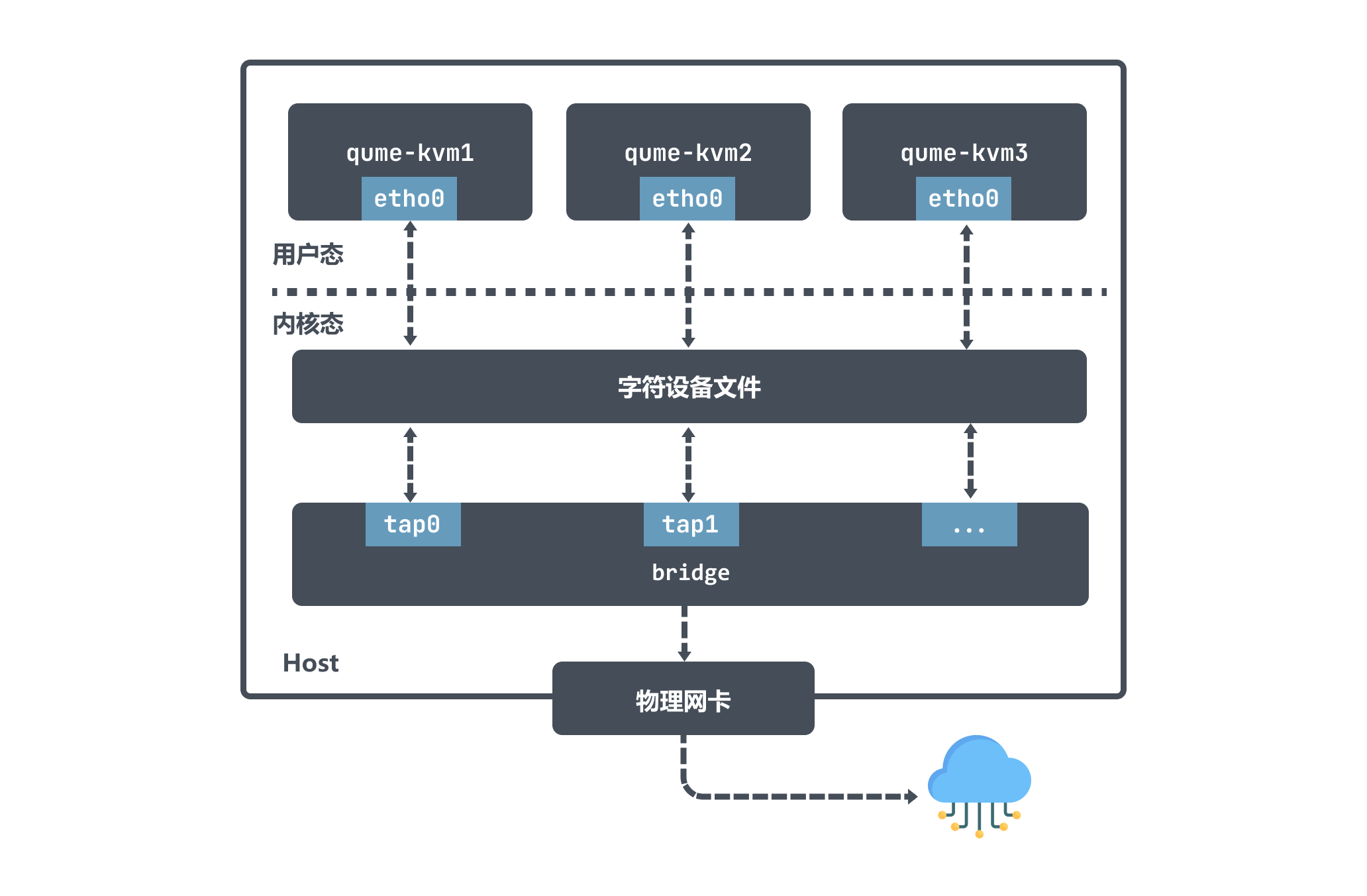

As we mentioned above, a tap device is a Layer 2 link layer device that is commonly used to implement virtual NICs. Take qemu-kvm as an example: it can flexibly combine tap devices and a Bridge to implement various network topologies.

When qemu-kvm runs in tap mode, it registers a tapx virtual NIC with the kernel at startup, with x representing an increasing number. The tapx virtual NIC is attached to the Bridge as one of its interfaces, and the data is eventually forwarded through the Bridge.

qemu-kvm sends data out through its NIC eth0. From the Host’s point of view, this means that the user-level qemu-kvm process writes data to the character device; the tapx device then receives the data and the Bridge decides how to forward the packets. If qemu-kvm wants to communicate with the outside world, the packets are forwarded to the physical NIC, which ultimately enables communication with the outside world.

The above diagram also shows that the packets sent by qemu-kvm first reach the Bridge through the tap device and then go to the physical network. The packets do not need to go through the host’s protocol stack, which makes this path more efficient.
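Because a tap device works at layer 2, what travels through the character device is a complete Ethernet frame rather than a bare IP packet. As a minimal sketch, the following code decodes the 14-byte Ethernet header of such a frame. The names `EthernetFrame`, `format_mac`, and `parse_ethernet_frame` and the sample MAC address are assumptions made for this illustration.

```python
import struct
from typing import NamedTuple


class EthernetFrame(NamedTuple):
    dst_mac: str
    src_mac: str
    ethertype: int  # 0x0800 = IPv4, 0x0806 = ARP
    payload: bytes


def format_mac(raw: bytes) -> str:
    """Render 6 raw bytes as a colon-separated MAC address."""
    return ":".join(f"{b:02x}" for b in raw)


def parse_ethernet_frame(frame: bytes) -> EthernetFrame:
    """Split an Ethernet II frame into header fields and payload."""
    if len(frame) < 14:
        raise ValueError("frame is shorter than an Ethernet header")
    dst, src, ethertype = struct.unpack("!6s6sH", frame[:14])
    return EthernetFrame(format_mac(dst), format_mac(src), ethertype, frame[14:])


# A hand-built broadcast ARP frame standing in for data read from a tap device.
sample_frame = bytes.fromhex("ffffffffffff" "525400123456" "0806") + b"\x00" * 28
parsed = parse_ethernet_frame(sample_frame)
```

The destination MAC in this header is what a Bridge uses to decide which port to forward the frame to.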

veth-pair

A veth-pair is a pair of virtual device interfaces that always come together. Each device is connected to a protocol stack on one side and to its peer on the other, so data entering one end of the veth device flows out of the other end unchanged.

It can be used to connect various virtual devices. For example, devices in two namespaces can exchange data through a veth-pair.

Let’s run an experiment that sets up communication between ns1 and ns2 over veth to see how veth sends and receives request packets.
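A minimal sketch of how such a setup could be scripted is shown below. It only builds the `ip` commands and prints them unless `dry_run=False` is passed, since running them requires root. The device names and the addresses 10.1.1.2 and 10.1.1.3 follow the walkthrough in this section; the /24 prefix length, the choice of which end goes into which namespace, and the helper names `veth_demo_commands` and `run` are assumptions.

```python
import subprocess
from typing import List


def veth_demo_commands(prefix_len: int = 24) -> List[List[str]]:
    """Return ip(8) commands that wire ns1 and ns2 together with a veth pair."""
    return [
        ["ip", "netns", "add", "ns1"],
        ["ip", "netns", "add", "ns2"],
        # Create both ends of the pair at once
        ["ip", "link", "add", "vethDemo0", "type", "veth", "peer", "name", "vethDemo1"],
        # Move one end into each namespace (assignment assumed)
        ["ip", "link", "set", "vethDemo0", "netns", "ns1"],
        ["ip", "link", "set", "vethDemo1", "netns", "ns2"],
        # Assign addresses and bring the interfaces up
        ["ip", "netns", "exec", "ns1", "ip", "addr", "add",
         f"10.1.1.2/{prefix_len}", "dev", "vethDemo0"],
        ["ip", "netns", "exec", "ns2", "ip", "addr", "add",
         f"10.1.1.3/{prefix_len}", "dev", "vethDemo1"],
        ["ip", "netns", "exec", "ns1", "ip", "link", "set", "vethDemo0", "up"],
        ["ip", "netns", "exec", "ns2", "ip", "link", "set", "vethDemo1", "up"],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False (needs root)."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


commands = veth_demo_commands()
run(commands)  # dry run: only prints the commands
```

Under these assumptions, the ping step would then be `ip netns exec ns1 ping 10.1.1.3`.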


Then we can see that the namespace is set up with the respective virtual NICs and the corresponding IPs.


Then we ping the IP of the vethDemo1 device.


Then we capture and analyze the packets on both NICs.


By analyzing the above packets and combining them with ping-related concepts, we can roughly conclude the following.

- The ping process constructs an ICMP echo request packet and sends it to the protocol stack via a socket.

- Based on the destination IP address and the system routing table, the protocol stack knows that a packet going to 10.1.1.3 should go out through the interface with address 10.1.1.2.

- Since this is the first time 10.1.1.3 is accessed, its MAC address is not yet known, so the protocol stack first sends out an ARP request asking for the MAC address of 10.1.1.3.

- The protocol stack hands the ARP packet to vethDemo0 to send out.

- Since the other end of vethDemo0 is connected to vethDemo1, the ARP request packet is forwarded to vethDemo1.

- vethDemo1 receives the ARP packet and passes it to the protocol stack at its end, which sends an ARP reply containing the MAC address.

- Once the MAC address of 10.1.1.3 is obtained, the ICMP echo request is sent to the destination, and the ping command reports success.
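To illustrate the first step, the sketch below builds the kind of ICMP echo request that ping constructs, including the standard Internet checksum (RFC 1071). It only assembles the bytes and sends nothing. The function names and the identifier, sequence number, and payload values are made up for illustration.

```python
import struct


def internet_checksum(data: bytes) -> int:
    """One's-complement sum of 16-bit words, as described in RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad to a whole number of 16-bit words
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold the carries back in
    return ~total & 0xFFFF


def build_echo_request(ident: int, seq: int, payload: bytes = b"") -> bytes:
    """Build an ICMP echo request (type 8, code 0) with a valid checksum."""
    # The checksum is computed with the checksum field set to zero
    header = struct.pack("!BBHHH", 8, 0, 0, ident, seq)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, ident, seq) + payload


packet = build_echo_request(ident=0x1234, seq=1, payload=b"ping")
```

A receiver can validate the packet by running the same checksum over the whole message: for an intact packet the result is 0.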

Summary

This article covers just two common virtual network devices. I wrote it because, while reading about flannel, I learned that flannel0 is a tun device, but I didn’t understand what a tun device was, so I couldn’t understand how flannel works.

After some research, I found that a tun/tap device is a virtual network device responsible for data forwarding, but it uses a file as its transmission channel. This is why data sent through a tun/tap device has to pass through the protocol stack twice, and why flannel performs poorly in UDP mode, which led to the mode being abandoned later.

Because of this performance cost, direct container-to-container communication does not use tun/tap as the preferred solution and is generally implemented with veth. veth is a layer 2 device that allows two isolated network namespaces to communicate with each other without repeatedly going through the network stack. Each end of a veth pair is connected to a protocol stack on one side and to its peer on the other, which makes transferring data between them very simple and gives veth better performance than tun/tap.