This test covers five common RPC frameworks:

- rpcx: one of the earliest microservices frameworks in the Go ecosystem, used by Sina, Good Future, and others.

- kitex: ByteDance's microservices framework.

- arpc: a high-performance RPC framework by lesismal.

- grpc: an open source RPC framework initiated by Google, with cross-language support and wide adoption. It uses HTTP/2 as its transport and Protocol Buffers as its interface description language.

- std_rpc: the rpc package that ships with the Go standard library, currently in maintenance mode.

The latest version of each framework is used for testing:

- rpcx: v1.7.8

- kitex: v0.3.4

- arpc: v1.2.9

- grpc: v1.48.0

- std_rpc: v1.8.4

To keep the tests as consistent as possible, all frameworks are tested in the same environment, with the same test logic and the same test message:

- Test environment (two machines, each about 6 years old: one for the server, one for the client):

  - CPU model: Intel(R) Xeon(R) CPU E5-2620 v2 @ 2.10GHz × 2

  - Physical cores: 6; logical cores: 12

  - Memory: 64G

  - NIC multi-queue enabled

- All frameworks use protobuf to encode the message body; the encoded message body is 581 bytes.

- The client records throughput and latency. The latency statistics include the maximum, average, median, and p99.9 values; the minimum is below 1 millisecond, so it is not reported.

- For latency, the long tail also matters, so pay attention to the median and p99.9. p99.9 means that 999 out of every 1,000 requests have a latency below the given value.
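The latency figures listed above can be computed from per-request samples roughly as follows. This is a minimal sketch, not the code used in rpcx-benchmark: the names `latencyStats`, `summarize`, `percentile`, and `syntheticLatencies` are hypothetical, and the nearest-rank percentile definition is an assumption (other definitions interpolate between samples).

```go
package main

import (
	"fmt"
	"sort"
	"time"
)

// latencyStats holds the client-side latency figures named in the text.
// The type and its field names are illustrative, not from rpcx-benchmark.
type latencyStats struct {
	Max, Mean, Median, P999 time.Duration
}

// percentile returns the nearest-rank percentile of an ascending-sorted,
// non-empty slice. permille is the percentile in thousandths:
// 500 is the median, 999 is p99.9.
func percentile(sorted []time.Duration, permille int) time.Duration {
	rank := (len(sorted)*permille + 999) / 1000 // ceil(n * permille / 1000)
	if rank < 1 {
		rank = 1
	}
	return sorted[rank-1]
}

// summarize computes the statistics from one latency sample per request.
func summarize(latencies []time.Duration) latencyStats {
	if len(latencies) == 0 {
		return latencyStats{}
	}
	// Sort a copy so the caller's slice is left untouched.
	sorted := append([]time.Duration(nil), latencies...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i] < sorted[j] })

	var sum time.Duration
	for _, d := range sorted {
		sum += d
	}
	return latencyStats{
		Max:    sorted[len(sorted)-1],
		Mean:   sum / time.Duration(len(sorted)),
		Median: percentile(sorted, 500),
		P999:   percentile(sorted, 999),
	}
}

// syntheticLatencies returns 1ms, 2ms, ..., n ms as stand-in measurements.
func syntheticLatencies(n int) []time.Duration {
	out := make([]time.Duration, n)
	for i := range out {
		out[i] = time.Duration(i+1) * time.Millisecond
	}
	return out
}

func main() {
	s := summarize(syntheticLatencies(1000))
	fmt.Println("max:", s.Max, "mean:", s.Mean, "median:", s.Median, "p99.9:", s.P999)
}
```

With 1,000 synthetic samples, p99.9 is the 999th smallest value, so exactly one request in a thousand is slower than it. That single slowest request still dominates the maximum, which is why the maximum alone says little about the long tail.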

The test is divided into three main scenarios:

- 10 persistent TCP connections, with concurrency of 100, 200, 500, 1000, 2000, and 5000. This mainly tests the maximum capacity of each framework under high concurrency, including throughput and latency.

- 10 persistent TCP connections, with concurrency of 200, measuring latency at throughputs of 100,000/sec, 150,000/sec, and 180,000/sec. This mainly measures the latency and long tail of each framework when throughput is held equal. Excessive latency or a long tail means the framework is not suited to that throughput.

- 1000 persistent TCP connections, with concurrency of 1000. This mainly tests the performance of each framework with a relatively large number of connections, in particular whether a framework with a self-developed netpoll, such as ByteDance's kitex, is better suited to this many connections.

Here, concurrency refers to the number of goroutines started by the client; multiple goroutines may share the same client (connection).
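To make the distinction between concurrency and connection count concrete, here is a minimal sketch in which many goroutines share a smaller pool of clients. `fakeClient`, `newClients`, and `runWorkers` are hypothetical stand-ins that only count calls; the round-robin assignment is an assumption and is not necessarily how rpcx-benchmark maps goroutines to connections.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// fakeClient stands in for one RPC client bound to one TCP connection.
// It is a hypothetical placeholder, not a type from any of the frameworks.
type fakeClient struct{ calls int64 }

// Call pretends to send a request; it only counts invocations.
func (c *fakeClient) Call() { atomic.AddInt64(&c.calls, 1) }

// Calls reports how many requests went through this client.
func (c *fakeClient) Calls() int64 { return atomic.LoadInt64(&c.calls) }

// newClients creates n independent clients, one per "connection".
func newClients(n int) []*fakeClient {
	clients := make([]*fakeClient, n)
	for i := range clients {
		clients[i] = &fakeClient{}
	}
	return clients
}

// runWorkers starts `concurrency` goroutines. Goroutine i reuses
// clients[i%len(clients)], so several goroutines share one connection.
func runWorkers(clients []*fakeClient, concurrency, perWorker int) {
	var wg sync.WaitGroup
	for i := 0; i < concurrency; i++ {
		wg.Add(1)
		go func(c *fakeClient) {
			defer wg.Done()
			for j := 0; j < perWorker; j++ {
				c.Call()
			}
		}(clients[i%len(clients)])
	}
	wg.Wait()
}

func main() {
	clients := newClients(10)    // 10 connections
	runWorkers(clients, 100, 50) // 100 goroutines, 50 requests each
	for i, c := range clients {
		fmt.Printf("client %d handled %d calls\n", i, c.Calls())
	}
}
```

With 10 clients and a concurrency of 100, each client is shared by 10 goroutines, so the client implementation must be safe for concurrent use.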

All the test code for each framework is in rpcx-benchmark. The test commands are very simple, as shown in the scenario descriptions below, so you can download the code to test and verify the results yourself. You are welcome to provide optimization patches, add more RPC frameworks, and so on.

Scenario 1

TCP connection count is 10, persistent connections. Concurrency is 100, 200, 500, 1000, 2000, and 5000.

- Server side start (xxx.xxx.xxx.xxx is the IP address that the server listens on):

./server -s xxx.xxx.xxx.xxx:8972

- Client test (here the concurrency is 2000, and a total of 1 million requests are sent):

./client -c 2000 -n 1000000 -s xxx.xxx.xxx.xxx:8972
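For readers who want to build a similar client, the following sketch shows one way the `-c`, `-n`, and `-s` flags could be parsed with the standard `flag` package. The flag names come from the commands above; the `config` type, the defaults, `parseArgs`, and the even split of requests across goroutines in `perWorker` are assumptions made for illustration, not a copy of the rpcx-benchmark client.

```go
package main

import (
	"errors"
	"flag"
	"fmt"
)

// config mirrors the three client flags shown in the commands above.
// The struct itself is a hypothetical helper.
type config struct {
	Concurrency int    // -c: number of client goroutines
	Total       int    // -n: total number of requests to send
	Addr        string // -s: server address, host:port
}

// parseArgs parses the flags from args (normally os.Args[1:]).
// The default values are illustrative assumptions.
func parseArgs(args []string) (config, error) {
	var cfg config
	fs := flag.NewFlagSet("client", flag.ContinueOnError)
	fs.IntVar(&cfg.Concurrency, "c", 1, "number of concurrent goroutines")
	fs.IntVar(&cfg.Total, "n", 1, "total number of requests")
	fs.StringVar(&cfg.Addr, "s", "127.0.0.1:8972", "server address")
	if err := fs.Parse(args); err != nil {
		return config{}, err
	}
	if cfg.Concurrency < 1 || cfg.Total < 1 {
		return config{}, errors.New("-c and -n must be positive")
	}
	return cfg, nil
}

// perWorker returns how many requests each goroutine sends when the
// total is split evenly (any remainder is dropped in this sketch).
func (c config) perWorker() int { return c.Total / c.Concurrency }

func main() {
	// A fixed argument list keeps the example deterministic; a real
	// client would pass os.Args[1:] instead.
	cfg, err := parseArgs([]string{"-c", "2000", "-n", "1000000", "-s", "127.0.0.1:8972"})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%d goroutines x %d requests each against %s\n",
		cfg.Concurrency, cfg.perWorker(), cfg.Addr)
}
```

Under this even split, a concurrency of 2000 and 1 million total requests means each goroutine sends 500 requests.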

Test raw data:

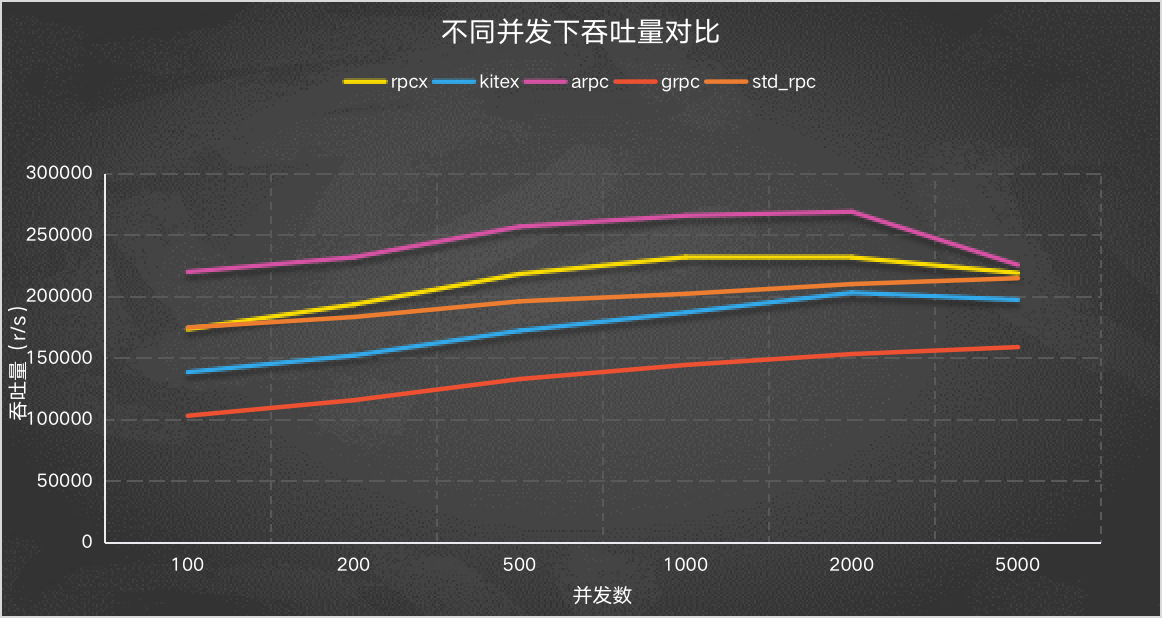

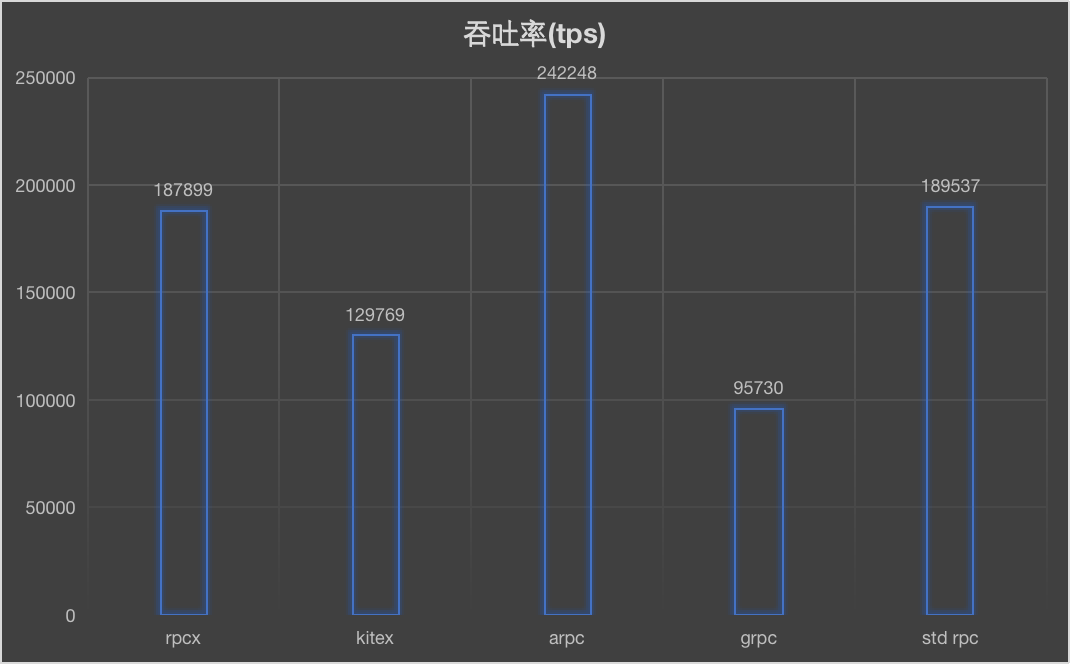

Throughput

The Y-axis is throughput per second; higher is better. You can see that arpc's throughput is very good, and arpc also performed best in last year's test. It is followed by rpcx, standard rpc, kitex, and grpc.

Under high concurrency, every framework except grpc can reach a throughput of 180,000 requests/sec.

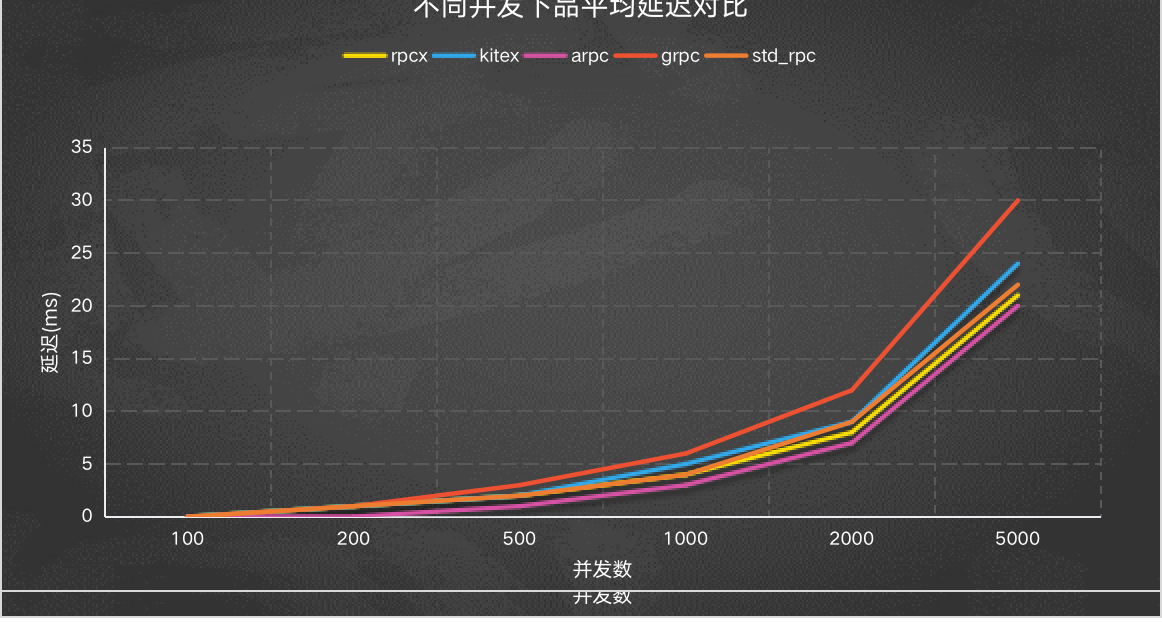

Average latency

The Y-axis is the average latency; lower is better. As you can see, grpc has the highest latency. The latency ranking is exactly the reverse of the throughput ranking above.
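The inverse relationship between throughput and average latency follows from Little's law when the client runs as a closed loop. The sketch below assumes that each goroutine keeps exactly one request in flight at all times, so average latency is roughly concurrency divided by throughput. The function name `expectedLatency` is hypothetical, and applying 180,000 requests/sec at every concurrency level is purely illustrative, not a measured result.

```go
package main

import (
	"fmt"
	"time"
)

// expectedLatency applies Little's law (in-flight = throughput x latency)
// to a closed-loop client: with `concurrency` requests always in flight
// and a sustained throughput of `throughputPerSec`, the average latency
// is concurrency / throughput. It returns 0 for a non-positive throughput.
func expectedLatency(concurrency, throughputPerSec int) time.Duration {
	if throughputPerSec <= 0 {
		return 0
	}
	return time.Duration(concurrency) * time.Second / time.Duration(throughputPerSec)
}

func main() {
	// Illustrative throughput only; real values differ per framework
	// and per concurrency level.
	const throughput = 180000
	for _, c := range []int{100, 200, 500, 1000, 2000, 5000} {
		fmt.Printf("concurrency %4d at %d req/s -> about %v average latency\n",
			c, throughput, expectedLatency(c, throughput))
	}
}
```

At a fixed concurrency, a framework with higher throughput must therefore show lower average latency under this model, which matches the mirrored ordering of the two charts.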

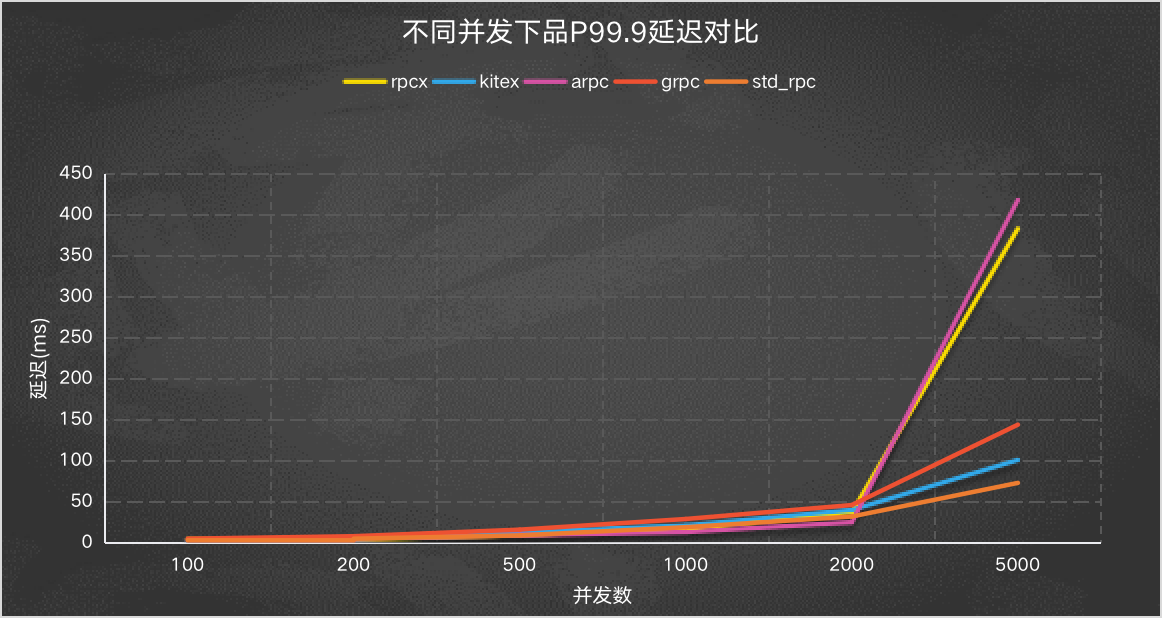

P99.9 latency

We may be more concerned about the long tail, which can be observed through the median or p99.9. Here is the p99.9 case.

Of course, comparing latency at a concurrency of 5000 is not meaningful, because each framework's throughput differs at that concurrency, and latency grows at high throughput. So, to observe the long tail under fair conditions, I designed the following test.

Scenario 2

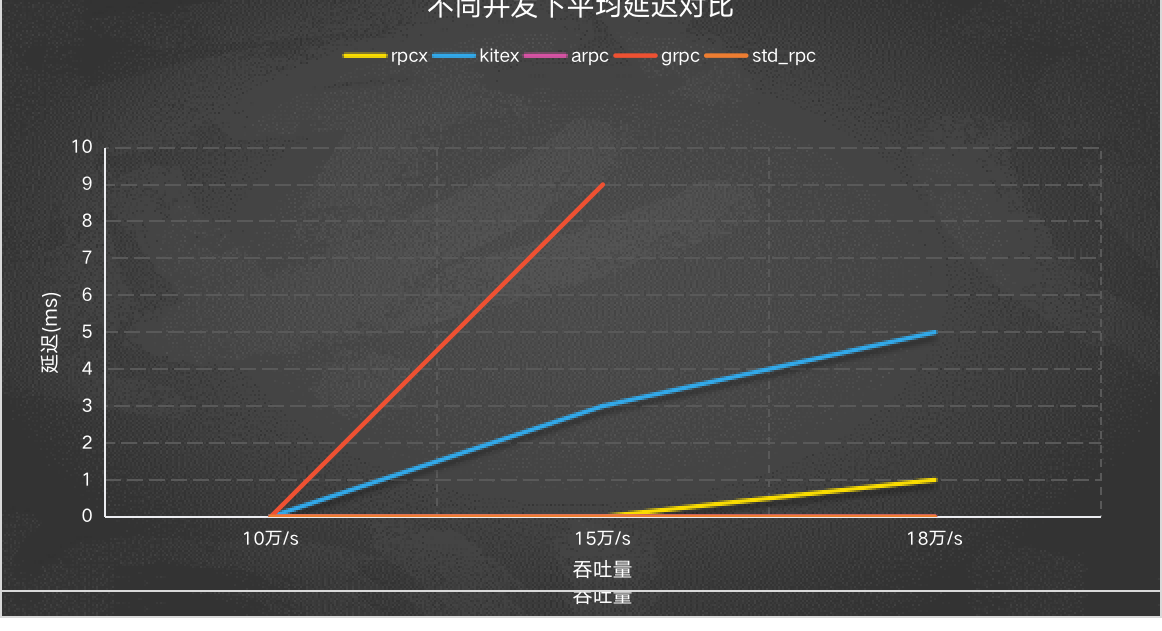

TCP connection count is 10, concurrency is 200, persistent connections. Latency is measured at throughputs of 100,000/sec, 150,000/sec, and 180,000/sec.

To compare latency fairly, the throughput of all frameworks needs to be as close to equal as possible; observing latency and the long tail at the same throughput is more rigorous.

So scenario 2 observes the average latency and P99.9 latency of each framework at three throughput levels, 100,000/sec, 150,000/sec, and 180,000/sec, with a concurrency of 200.
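One way to hold throughput at a fixed level is to pace each goroutine. The sketch below uses only the standard library: it divides the target rate evenly among the goroutines and uses a `time.Ticker` per worker. This is an assumption about how such pacing could be done, not the mechanism used in rpcx-benchmark (a real harness may use a token-bucket rate limiter instead), and `perWorkerInterval` and `pacedWorker` are hypothetical names.

```go
package main

import (
	"fmt"
	"time"
)

// perWorkerInterval spreads a target aggregate rate evenly across workers
// and returns how long each worker should wait between its own requests.
// It returns 0 for a non-positive target rate.
func perWorkerInterval(targetPerSec, concurrency int) time.Duration {
	if targetPerSec <= 0 {
		return 0
	}
	return time.Duration(concurrency) * time.Second / time.Duration(targetPerSec)
}

// pacedWorker calls send n times, at most once per tick, and returns the
// elapsed time. A non-positive interval disables pacing.
func pacedWorker(interval time.Duration, n int, send func()) time.Duration {
	start := time.Now()
	if interval <= 0 {
		for i := 0; i < n; i++ {
			send()
		}
		return time.Since(start)
	}
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for i := 0; i < n; i++ {
		<-ticker.C // wait for this worker's next slot
		send()
	}
	return time.Since(start)
}

func main() {
	for _, rate := range []int{100000, 150000, 180000} {
		fmt.Printf("target %d req/s with 200 goroutines -> one request every %v per goroutine\n",
			rate, perWorkerInterval(rate, 200))
	}

	sent := 0
	elapsed := pacedWorker(perWorkerInterval(100000, 200), 50, func() { sent++ })
	fmt.Printf("sent %d paced requests in %v\n", sent, elapsed)
}
```

With a concurrency of 200, a target of 100,000/sec works out to one request every 2ms per goroutine. If a request takes longer than that interval, the ticker drops ticks and the achieved rate falls below the target, which is one sign that the framework cannot sustain that throughput.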

Test raw data:

Average latency

The average latency of grpc looks higher, and because grpc cannot reach a throughput of 180,000/sec, its last data point is not available. The latency of kitex is also relatively high, though this may depend on whether kitex-specific tuning is applied. In general, a framework's latency is negatively correlated with the throughput it can achieve.

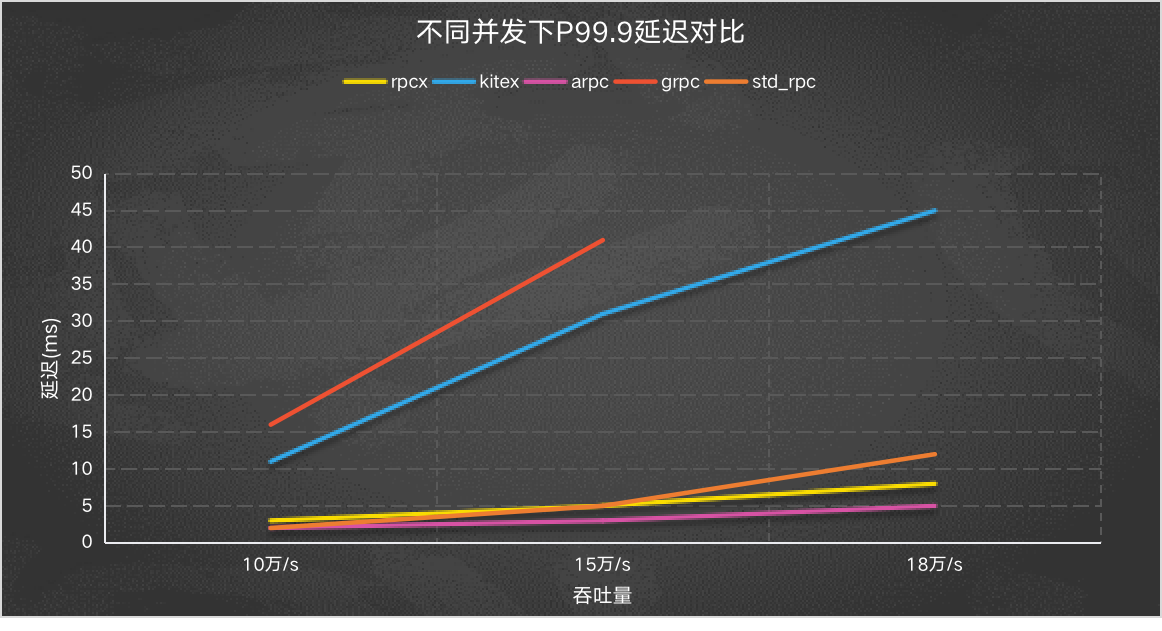

P99.9 latency

For the long tail, here is the p99.9 case.

The P99.9 latency of arpc, rpcx, and standard rpc is again better than that of the others.

Scenario 3

TCP connection count is 1000, concurrency is 1000, persistent connections.

This scenario observes the performance (throughput and latency) of each framework with a large number of connections. Instead of a huge number of connections, it uses a more common figure of 1000 persistent connections.

arpc is significantly better than the other frameworks with this many connections, followed by rpcx and the standard rpc framework.

The average latency and P99.9 latency are consistent with this ranking; see the raw data for details.

Overall, the performance of rpcx is still good, and I hope to optimize it further in the future by learning from its peer RPC frameworks.

Reference https://colobu.com/2022/07/31/2022-rpc-frameworks-benchmarks/