In the era of the mobile Internet, business systems that serve consumers (C-end users) directly usually run at a very large scale and consume considerable machine resources. Every CPU core and every gigabyte of memory a system uses costs the company real money. Minimizing the resource consumption of each service instance without lowering the service level, colloquially "eating less grass and producing more milk", has always been a goal of every company's operations team, and some companies can save hundreds of thousands of dollars a year by cutting their CPU core usage by just 1%.

For a given programming language, continuously reducing the resource consumption of services matters a great deal. The two most natural levers are developers, who keep polishing the performance of their code, and the language's compiler, which keeps improving its optimizations. The two levers are complementary: developers who understand the compiler's optimization scenarios and tools well can write code that is easier for the compiler to optimize, and thus obtain better performance.

The Go core team has invested steadily in compiler optimization and achieved good results, although the Go compiler still has considerable room for improvement compared with the optimization capabilities of mature compilers such as GCC and LLVM. The recent article "The Road of ByteDance Large-scale Microservice Language Development" mentions that ByteDance modified the inline optimization of its internal Go compiler (the single most profitable change), which gave the Go code of its internal services more optimization opportunities. The result was a 10-20% performance improvement for online services, lower memory usage, and savings of roughly 100,000 CPU cores.

With results this striking, you are probably eager to learn about inline optimization in the Go compiler. In this article, let's study and understand it together. I hope it will help you master the following:

- What is inline optimization and what are its benefits

- Where inline optimization is located in the Go compilation process and how it is implemented

- What code can be optimized inline and what cannot be optimized inline yet

- How to control the Go compiler’s inline optimization

- What are the disadvantages of inline optimization

Let’s start by understanding what inline optimization is.

1. What is compiler inlining optimization

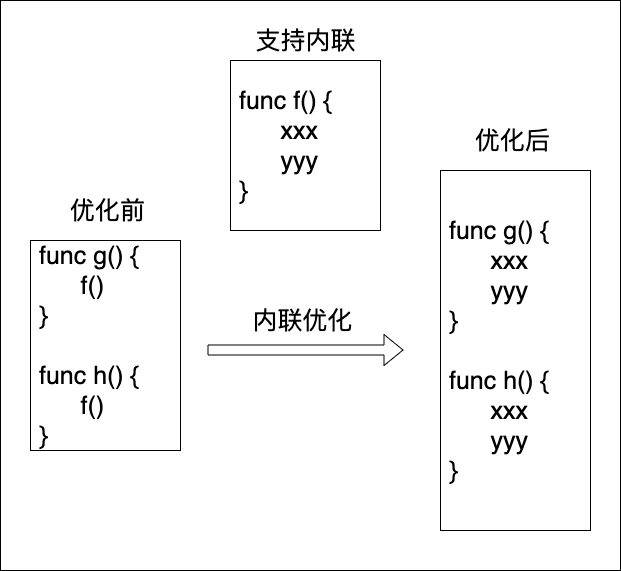

Inlining, also known as function inlining, is a common optimization that compilers apply to functions. If a function F can be inlined, the compiler may replace each call to F with F's function body, eliminating the extra overhead of the call itself, as illustrated in the figure above.
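To make the substitution concrete, here is a small hand-written illustration (the names square and sumOfSquares are hypothetical, not from the original article). The compiler performs this rewrite on its IR, not on your source file; the second function only shows, in Go syntax, what the caller amounts to after square's body has been substituted at both call sites.

```go
package main

import "fmt"

// square is a small leaf function: a typical inlining candidate.
func square(x int) int {
	return x * x
}

// sumOfSquares is the code as the programmer wrote it: two calls to square.
func sumOfSquares(a, b int) int {
	return square(a) + square(b)
}

// sumOfSquaresInlined pictures the same function after inlining:
// each call has been replaced by square's body, so no calls remain.
func sumOfSquaresInlined(a, b int) int {
	return a*a + b*b
}

func main() {
	fmt.Println(sumOfSquares(3, 4), sumOfSquaresInlined(3, 4)) // 25 25
}
```

Both functions compute the same result; the inlined form simply has no call overhead left to pay.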

Note that Go switched to a register-based calling convention only in version 1.17 (initially on amd64). Earlier versions passed arguments and return values on the stack, which made function calls considerably more expensive, so inlining had an even larger effect there.

In addition, inlining lets the compiler make optimization decisions in the context of a whole call chain rather than of each individual function (e.g., function g in the figure above), because the code becomes flatter after inline substitution. For example, once f is inlined into g, the subsequent optimization of g is no longer confined to g's own context: the compiler can decide what to do in the context of the g->f call chain. In other words, inlining lets the compiler see further and wider.

Let’s look at a simple example.

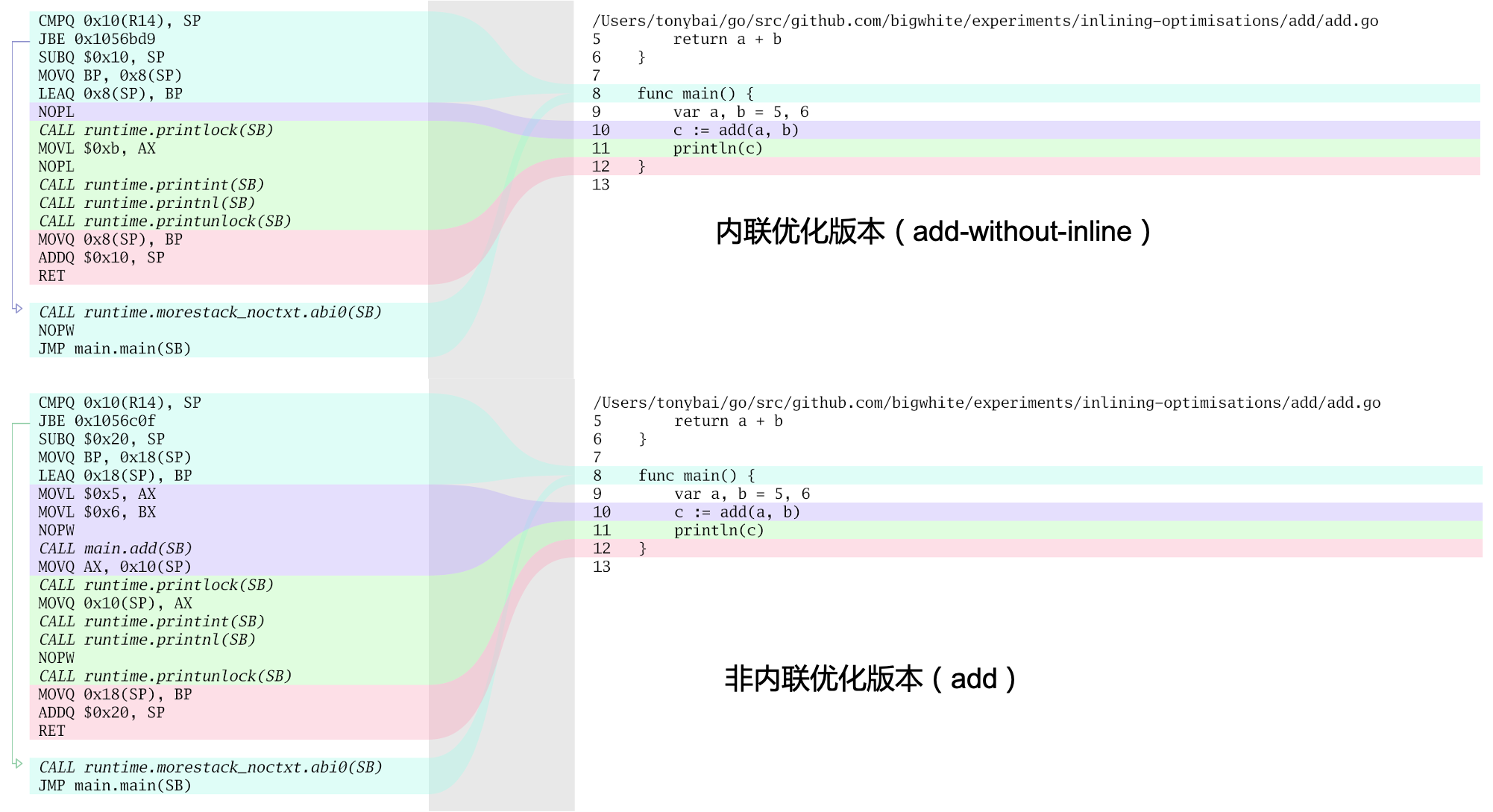

In this example, our focus is the add function. Above its definition we place //go:noinline, which tells the compiler not to inline add, and build the program into the executable add-without-inline. We then remove the //go:noinline line and build again, producing the executable add. Using the lensm tool to view the assembly code of the two executables graphically, we get the following comparison.
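The program itself appears only in the screenshot, so the following is a reconstruction under an assumption: that add.go looks roughly like this, with the operands 5 and 6 chosen so that the result is 11 (0xb), the constant that shows up in the inlined assembly discussed below. Building it as written (for example with go build -o add-without-inline add.go) keeps the call; deleting the directive line and rebuilding with go build -o add add.go gives the inlined variant.

```go
package main

// add is kept as a real, out-of-line function because of the directive
// below, so main reaches it through a CALL instruction. Delete the
// directive line to let the compiler inline it.
//
//go:noinline
func add(a, b int) int {
	return a + b
}

func main() {
	var a, b = 5, 6
	c := add(a, b)
	println(c) // prints 11; the built-in println writes to standard error
}
```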

In the non-inlined build (add-without-inline), main calls add with a CALL instruction, as expected. In the inlined build, however, we do not find add's function body at the call site in main either: the location where main used to call add corresponds to a NOPL instruction, a no-op that performs no operation. So where did the code of add go?

The answer: it has been optimized away! This is what we meant earlier when we said that inlining provides more opportunities for subsequent optimization. After the call to add is replaced with add's body, the Go compiler can determine at compile time that the result is 11, so it omits even the addition, replaces the result of add with the constant 11 (0xb), and passes that constant directly to the built-in println function (MOVL 0xb, AX).

A simple benchmark also shows the performance difference between inline and non-inline add.
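The benchmark code and its output are not reproduced in this text. As a rough sketch of how such a comparison could be set up, the program below uses testing.Benchmark from the standard library, which runs a benchmark function outside of go test. The function names and the package-level sink variable (which keeps the compiler from discarding the results) are my own choices, and the ratio you observe will depend on your machine and Go version.

```go
package main

import (
	"fmt"
	"testing"
)

// addNoInline is kept out of line by the directive, so each use is a real call.
//
//go:noinline
func addNoInline(a, b int) int {
	return a + b
}

// addInline is small enough that the compiler is free to inline it.
func addInline(a, b int) int {
	return a + b
}

// sink receives every result so the benchmark loops cannot be optimized away.
var sink int

func main() {
	withoutInline := testing.Benchmark(func(b *testing.B) {
		for i := 0; i < b.N; i++ {
			sink = addNoInline(i, 6)
		}
	})
	withInline := testing.Benchmark(func(b *testing.B) {
		for i := 0; i < b.N; i++ {
			sink = addInline(i, 6)
		}
	})
	fmt.Println("without inlining:", withoutInline)
	fmt.Println("with inlining:   ", withInline)
}
```

In a regular project you would more likely write BenchmarkXxx functions in a _test.go file and run go test -bench; testing.Benchmark just keeps this sketch in a single runnable file.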


In this measurement, the inlined version ran roughly five times faster than the non-inlined version.

At this point, many people may ask: since inlining works so well, why not inline every function in a Go program, so that the whole program becomes one big function with no calls in between and runs even faster? In theory that might be true, but inlining is not free, and its benefit varies with the complexity of the function being inlined. Let's start by looking at the overhead of inline optimization.

2. The “Overhead” of Inline Optimization

Before we can really understand the overhead of inline optimization, we need to see where inlining sits in the Go compilation process.

Go compilation process

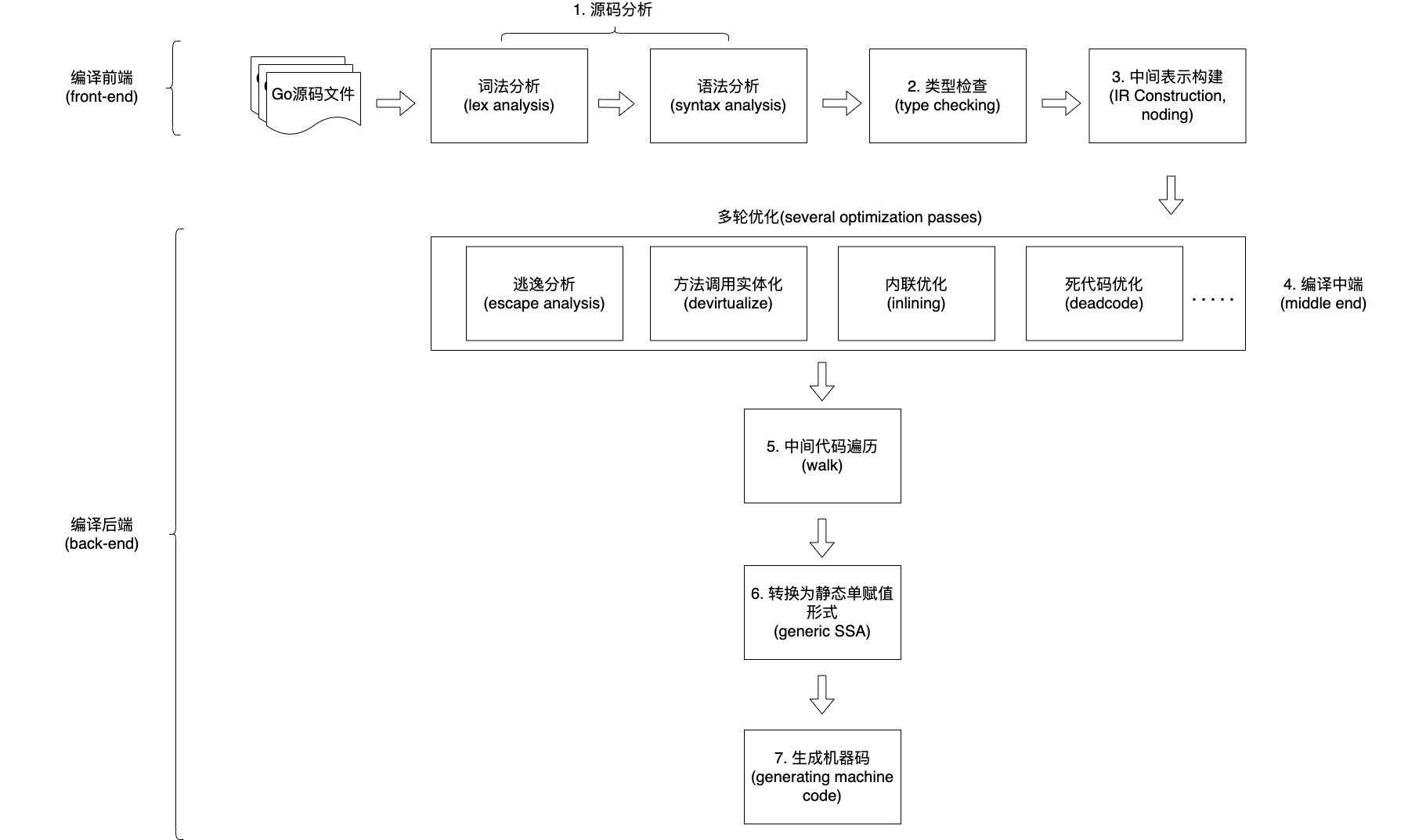

As with all static language compilers, the Go compilation process is roughly divided into the following stages.

- Compiler front end

The Go team does not deliberately split the Go compilation process into a front end and a back end in the conventional sense. If we had to, source code analysis (lexical and syntax analysis), type checking, and construction of the intermediate representation (IR) could be classified as the logical front end of compilation, and everything after that as the back end.

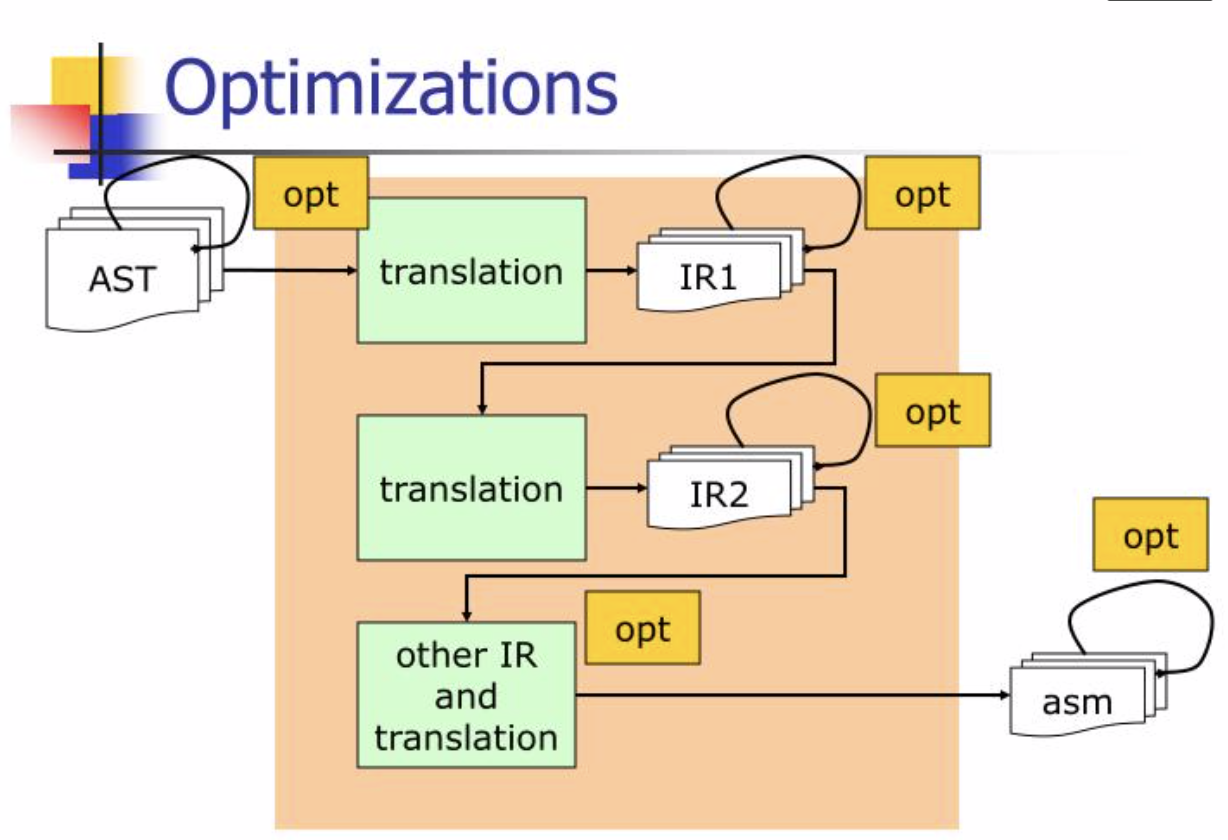

Parsing the source code produces an abstract syntax tree (AST), which is then type-checked. Once type checking passes, the Go compiler converts the AST into an intermediate representation that is independent of the target platform.

Go currently has two IR implementations. One is irgen (aka "-G=3" or "noder2"), the implementation used since Go 1.18, which is also an AST-like structure. The other is unified IR, which can be enabled in Go 1.19 with GOEXPERIMENT=unified; according to the latest news, unified IR will land in Go 1.20.

Note: Most modern compilers generate intermediate code (IR) several times; for example, the static single assignment (SSA) form mentioned below is also a form of IR. For each IR, the compiler performs some optimizations.

Ref: https://www.slideserve.com/heidi-farmer/ssa-static-single-assignment-form

- Compiler back end

The first step of the back end is a stage the Go team calls the middle end, in which the Go compiler runs multiple optimization passes over the IR described above, including dead code elimination, inline optimization, devirtualization, and escape analysis.

Note: Devirtualization means that when the compiler can determine the dynamic (concrete) type stored in an interface variable, it rewrites a method call made through the interface into a direct call of that concrete type's method, eliminating the method table lookup through the interface.
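A minimal sketch of a situation where devirtualization can apply, using a hypothetical Shape interface and Square type. In viaInterface the value stored in the interface is visible to the compiler, so the call through the interface can, in principle, be turned into the direct call written out in direct. Whether a particular call is actually devirtualized depends on the compiler version; building with -gcflags=-m prints the compiler's optimization decisions and is one way to check.

```go
package main

import "fmt"

// Shape is a hypothetical interface used only for this illustration.
type Shape interface {
	Area() float64
}

// Square is a concrete type that implements Shape.
type Square struct{ Side float64 }

func (s Square) Area() float64 { return s.Side * s.Side }

// viaInterface calls Area through an interface value whose dynamic type
// (Square) is known at compile time: a devirtualization candidate.
func viaInterface() float64 {
	var s Shape = Square{Side: 3}
	return s.Area()
}

// direct is what a devirtualized call amounts to: a static call of
// Square.Area with no method-table lookup.
func direct() float64 {
	s := Square{Side: 3}
	return s.Area()
}

func main() {
	fmt.Println(viaInterface(), direct()) // 9 9
}
```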

Next comes the walk of the IR, the last round of IR-based processing. It mainly decomposes complex statements into separate, simpler statements, introduces temporary variables, and fixes the order of evaluation. This stage also lowers some high-level Go constructs into lower-level, more basic operations: for example, switch statements are converted into binary searches or jump tables, and operations on maps and channels are replaced with runtime calls (e.g., mapaccess).
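To picture the switch lowering mentioned above, the sketch below pairs an ordinary switch with a hand-written function that compares against the sorted case values by splitting them in half first, the way a binary-search lowering does. It illustrates the idea only; the function names are hypothetical, and the compiler's actual thresholds and generated code (including when it chooses a jump table instead) are not modeled here.

```go
package main

import "fmt"

// classify is an ordinary switch over four integer cases.
func classify(x int) string {
	switch x {
	case 1:
		return "one"
	case 5:
		return "five"
	case 9:
		return "nine"
	case 13:
		return "thirteen"
	}
	return "other"
}

// classifyLowered is a hand-written analogue of a binary-search lowering:
// one comparison splits the sorted cases {1, 5, 9, 13} into two halves,
// then only the cases in the matching half are tested.
func classifyLowered(x int) string {
	if x <= 5 {
		if x == 1 {
			return "one"
		}
		if x == 5 {
			return "five"
		}
	} else {
		if x == 9 {
			return "nine"
		}
		if x == 13 {
			return "thirteen"
		}
	}
	return "other"
}

func main() {
	for _, x := range []int{1, 5, 9, 13, 7} {
		fmt.Println(x, classify(x), classifyLowered(x))
	}
}
```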

The last two stages of the back end first convert the IR to SSA (static single assignment) form and run multiple optimization passes on it, and then generate machine-dependent assembly instructions for the target architecture from the final SSA form; these are handed to the assembler, which produces relocatable object code.

Note: The relocatable object code produced by the Go compiler is eventually passed to the linker, which generates the executable file.

So Go inlining happens in the middle end. It is an IR-based optimization: at the IR level, the compiler decides whether each function is inlinable and, for inlinable functions, replaces the calls to them with the function body.

Once we understand where the Go inline is located, we can roughly determine the overhead that comes with Go inline optimization.

Overhead of Go Inline Optimization

Let's examine the overhead of Go inline optimization with an example. reviewdog is a code review tool written in pure Go that supports the major code hosting platforms such as GitHub and GitLab. It is about 12k lines of code (as counted by loccount).

We build reviewdog with inlining enabled and disabled, and record the build time and the size of each resulting binary.


In this measurement, building with inlining enabled took about 24% longer than building with it disabled, and the resulting binary was about 11% larger. That is the overhead of inline optimization: it slows down the compiler and makes the generated binary larger.

Note: For hello-world-sized programs, enabling or disabling inlining usually makes little visible difference in either compile time or binary size.

Knowing where inlining sits in the pipeline explains this overhead. By definition, once a function is judged inlinable, every call to it in the program is replaced with its body. This removes the runtime cost of those calls, but it also causes a certain amount of code "bloat" at the IR (intermediate code) level. As mentioned earlier, a "side effect" of this bloat is that the compiler can take a broader, farther-reaching view of the code and may therefore apply more optimizations, and the more optimizations it applies, the longer it runs. At the same time, the bloated code must be processed and turned into machine code in the later stages, which not only adds compile time but also increases the size of the final binary.

Go has always been sensitive to compilation speed and binary size, so it uses a relatively conservative inlining strategy. So how exactly does the Go compiler decide whether a function can be inlined? Let's take a brief look.

3. Decision Principle of Function Inline

As mentioned before, inline optimization is one of the multiple passes in the middle end, so its logic is relatively self-contained: it takes IR as input and modifies that IR. The main inlining code of the Go compiler lives in $GOROOT/src/cmd/compile/internal/inline/inl.go in the Go source tree.

Note: The location and logic of the Go compiler's inlining code may differ in earlier and later versions; this article refers to the Go 1.19.1 source code.

The inlining pass does two things. First, it walks all functions in the IR, uses CanInline to determine whether each one can be inlined, and, for inlinable functions, saves the information needed later, such as the function body. Second, it replaces the calls to inlinable functions inside each function with their bodies. Here we focus on CanInline, i.e., how the Go compiler decides whether a function can be inlined!

The “driving logic” for the inline optimization process is in the Main function in $GOROOT/src/cmd/compile/internal/gc/main.go.


In Main, unless inlining has been disabled globally (that is, as long as base.Flag.LowerL != 0), the compiler calls the InlinePackage function of the inline package to perform inline optimization.

InlinePackage works as follows.


InlinePackage iterates over the top-level function declarations. For each function that is not recursive, or whose recursion cycle spans more than one function, it calls CanInline to decide whether the function can be inlined. Regardless of the outcome, it then calls InlineCalls to replace the calls to inlinable functions inside that function's body.

VisitFuncsBottomUp walks the function call graph from the bottom up. Each time it calls analyze, it passes a group of functions such that every function in the group calls only functions in the same group or functions already handled by an earlier call (whose inlinable callees have therefore already been replaced by inline function bodies).
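The bottom-up grouping can be sketched with Tarjan's strongly connected components algorithm, which naturally emits callees before their callers. The sketch below is not the compiler's code: it works on a hypothetical call graph of function names, and it combines the grouping with the recursion rule from InlinePackage described above, where a group counts as recursive if it has several functions or a single function that calls itself.

```go
package main

import "fmt"

// callGraph maps a function name to the names of the functions it calls.
type callGraph map[string][]string

func minInt(a, b int) int {
	if a < b {
		return a
	}
	return b
}

// bottomUpSCCs groups functions into strongly connected components with
// Tarjan's algorithm. Each component is emitted only after every component
// reachable from it, so callees come before their callers. order fixes the
// visiting order, because Go map iteration order is random.
func bottomUpSCCs(g callGraph, order []string) [][]string {
	index, low := map[string]int{}, map[string]int{}
	onStack := map[string]bool{}
	var stack []string
	var sccs [][]string
	next := 0

	var visit func(v string)
	visit = func(v string) {
		index[v], low[v] = next, next
		next++
		stack = append(stack, v)
		onStack[v] = true
		for _, w := range g[v] {
			if _, seen := index[w]; !seen {
				visit(w)
				low[v] = minInt(low[v], low[w])
			} else if onStack[w] {
				low[v] = minInt(low[v], index[w])
			}
		}
		if low[v] == index[v] { // v is the root of a component
			var scc []string
			for {
				w := stack[len(stack)-1]
				stack = stack[:len(stack)-1]
				onStack[w] = false
				scc = append(scc, w)
				if w == v {
					break
				}
			}
			sccs = append(sccs, scc)
		}
	}
	for _, v := range order {
		if _, seen := index[v]; !seen {
			visit(v)
		}
	}
	return sccs
}

// inlineCandidates applies the rule described in the text: a function goes on
// to the CanInline checks unless its recursion cycle contains only itself.
func inlineCandidates(g callGraph, order []string) map[string]bool {
	result := map[string]bool{}
	for _, scc := range bottomUpSCCs(g, order) {
		recursive := len(scc) > 1
		for _, callee := range g[scc[0]] {
			if len(scc) == 1 && callee == scc[0] {
				recursive = true // direct self-call
			}
		}
		for _, fn := range scc {
			result[fn] = !recursive || len(scc) > 1
		}
	}
	return result
}

func main() {
	g := callGraph{
		"main": {"add", "f", "fact"},
		"f":    {"g"},
		"g":    {"h"},
		"h":    {"f"},    // f -> g -> h -> f: a three-function cycle
		"fact": {"fact"}, // direct self-recursion
	}
	order := []string{"main", "add", "f", "g", "h", "fact"}
	fmt.Println(bottomUpSCCs(g, order)) // [[add] [h g f] [fact] [main]]
	c := inlineCandidates(g, order)
	for _, name := range order {
		fmt.Printf("%s: candidate=%v\n", name, c[name])
	}
}
```

In this sketch only fact is excluded up front; the three-function cycle is still passed on, just as the text describes.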

What does "a recursion cycle that spans more than one function" mean? Suppose f is recursive, but instead of calling itself directly, it reaches itself again through the call chain g -> h.

Because the cycle f -> g -> h -> f contains more than one function (its length is > 1), the recursion check does not exclude f, so f is still eligible for inlining.
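Since the original example is not reproduced here, the following is a hypothetical version of such a cycle, with a countdown argument added so the recursion terminates. Passing the recursion check only means CanInline will examine these functions; whether any of them is actually inlined still depends on the checks described next.

```go
package main

import "fmt"

// f, g and h form a recursion cycle of length 3: f calls g, g calls h,
// and h calls f again. n decreases on every trip around the cycle.
func f(n int) int {
	if n <= 0 {
		return 0
	}
	return 1 + g(n-1)
}

func g(n int) int { return 1 + h(n) }

func h(n int) int { return f(n) }

func main() {
	fmt.Println(f(3)) // 6: each trip around the cycle adds 2
}
```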

The CanInline function has more than 100 lines of code and its main logic is divided into three parts.

First, CanInline checks for certain //go:xxx directives. A function marked with any of the following cannot be inlined:

- //go:noinline

- //go:norace, when building with the -race option

- //go:nocheckptr

- //go:cgo_unsafe_args

- //go:uintptrkeepalive

- //go:uintptrescapes

- … …

Second, it checks the state of the function: for example, a function whose body is empty cannot be inlined, and neither can a function that has not been type-checked.

Finally, it calls visitor.tooHairy to assess the complexity of the function. The visitor starts with a maximum budget, the constant inlineMaxBudget, whose current value is 80.

tooHairy then walks the individual syntax elements of the function body.

Different elements consume different amounts of the budget. For example, each call to append subtracts inlineExtraAppendCost from the visitor's budget, and each call to a function that cannot itself be inlined (i.e., the function being analyzed is not a leaf function) also subtracts v.extraCallCost, which is 57. And so on through the rest of the body. If the budget runs out (v.budget < 0), the function is too complex to be inlined; if budget remains after the whole body has been walked, the function is simple enough to be inlined.
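The accounting can be sketched as a toy model. Everything here except the two constants quoted in the text is a simplification made up for illustration: real IR is a tree, and tooHairy assigns different costs to many kinds of nodes. The model charges 1 per node, plus the recorded cost of the callee for a call to an inlinable function, or inlineExtraCallCost for any other call.

```go
package main

import "fmt"

// Constants quoted in the text (Go 1.19 values).
const (
	inlineMaxBudget     = 80
	inlineExtraCallCost = 57
)

// node is a hypothetical, heavily simplified stand-in for an IR node.
type node struct {
	isCall          bool // true if the node is a function call
	calleeInlinable bool // for calls: whether the callee is itself inlinable
	calleeCost      int  // for calls to inlinable callees: the callee's cost
}

// tooHairy is a toy version of the budget check. It reports whether the
// budget was exhausted and how much budget was left when it stopped.
func tooHairy(body []node) (hairy bool, remaining int) {
	budget := inlineMaxBudget
	for _, n := range body {
		budget-- // base cost of visiting any node
		if n.isCall {
			if n.calleeInlinable {
				budget -= n.calleeCost
			} else {
				budget -= inlineExtraCallCost
			}
		}
		if budget < 0 {
			return true, budget
		}
	}
	return false, budget
}

func main() {
	leaf := make([]node, 3) // three plain nodes, no calls
	oneCall := append(make([]node, 10), node{isCall: true})
	twoCalls := append(make([]node, 10), node{isCall: true}, node{isCall: true})

	for _, body := range [][]node{leaf, oneCall, twoCalls} {
		hairy, left := tooHairy(body)
		fmt.Printf("nodes=%d hairy=%v remaining=%d\n", len(body), hairy, left)
	}
}
```

Under this model, two calls to non-inlinable functions already cost 2 × (57 + 1) = 116 units, more than the entire budget of 80, so a function can afford at most one such call.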

Note: Why is the value of inlineExtraCallCost 57? This is an empirical value, obtained from a benchmark.

Once a function is judged inlinable, the Go compiler saves the relevant information in the Inl field of that function's node in the IR.


The Go compiler sets the budget to 80, evidently so that overly complex functions are not inlined. Why? Mainly to balance the benefit of inlining against its cost: inlining more complex functions increases the overhead, but the benefit may not grow much, so the return on investment is insufficient.

From the principle above, the best inlining candidates are small (low-complexity) functions that are called repeatedly. For complex functions, the cost of the call itself is already small or negligible relative to the work the function does, so inlining helps much less.

You might object: doesn't inlining create more optimization opportunities for the compiler? The problem is that it is impossible to predict in advance whether such opportunities exist or which additional optimizations will follow.

4. Intervening with the Go compiler’s inline optimization

Finally, let's look at how to intervene in the Go compiler's inline optimization. By default, the Go compiler enables inlining globally and follows the CanInline decision process in inl.go described above to determine whether each function can be inlined.

But Go also gives us some means of controlling inlining. For example, we can explicitly tell the compiler not to inline a particular function; take add.go from the example above.

With the //go:noinline indicator, we can disable the inlining of add.

Note: Marking a function as not inlinable only prevents that function from being inlined into its callers; InlineCalls still replaces the calls to inlinable functions inside its body.
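A small example of this note, with hypothetical functions: quadruple is marked //go:noinline, so its callers must really call it, yet the two calls to double inside its body remain inlining candidates. Building with go build -gcflags=-m prints the compiler's inlining decisions, which is a convenient way to check this on your own Go version.

```go
package main

import "fmt"

// double is tiny, so the compiler is free to inline it into its callers.
func double(x int) int { return 2 * x }

// quadruple is never inlined into its callers because of the directive,
// but the directive does not stop double from being inlined inside it.
//
//go:noinline
func quadruple(x int) int {
	return double(double(x))
}

func main() {
	fmt.Println(quadruple(5)) // 20
}
```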

We can also turn off inlining on a larger scale. The -gcflags '-l' option disables inlining for the packages it applies to: it makes base.Flag.LowerL == 0, so the Go compiler's InlinePackage is never executed.

Let’s verify this with the previously mentioned reviewdog.

After that we look at the generated binary file size.

Surprisingly, the noinline version is slightly larger than the inline version! Why? It comes down to how the -gcflags argument is passed. If you pass just -gcflags '-l', as in the command line above, inlining is disabled only for the package being built, i.e., cmd/reviewdog; none of its dependencies are affected. However, -gcflags accepts a package pattern in front of the flags, in the form pattern=flags.

By choosing a suitable pattern we can match more packages. For example, the pattern all matches the current package together with all of its dependencies. Let's try again.


This time we see that reviewdog-noinline-all is quite a bit smaller than reviewdog-inline, which is because all turns off inlining for each of the packages reviewdog depends on as well.

5. Summary

In this article, I have taken you through the concept of inlining, the role of inlining, the “overhead” of inlining optimization, and the principles of function inlining decisions made by the Go compiler, and finally I have given you the means to control inlining optimization by the Go compiler.

Inline optimization is an important optimization tool that will bring significant performance improvements to your system when used properly.

The Go compiler team keeps improving inlining as well, moving from inlining only leaf functions to also inlining non-leaf functions (mid-stack inlining), and I believe Go developers will keep reaping performance dividends from this work in the future.

The source code covered in this article can be downloaded here.
