Eviction is the termination of Pods running on a Node, usually to reclaim node resources or keep workloads available. Anyone operating Kubernetes needs to understand the eviction mechanism: Pods are normally evicted because of an underlying problem, and locating that problem quickly requires knowing how eviction works.

Reasons for Pod eviction

The Kubernetes documentation gives the following reasons for Pod eviction:

- Preemption and Eviction

- Node-pressure

- Taints

- API-initiated

- drain

- Evicted by controller-manager

Preemption and priority

When a newly created Pod cannot be scheduled because no node has enough resources for it, kube-scheduler looks for lower-priority Pods on a node and evicts them to free resources for the higher-priority Pod. This process is called preemption.
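To make the idea concrete, here is a much-simplified Python sketch of victim selection on a single node. The `Pod` type and the single CPU resource are assumptions for illustration; the real kube-scheduler also weighs PodDisruptionBudgets, tries to minimize the number and priority of victims, and compares candidate nodes, none of which is modeled here.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    priority: int
    cpu_milli: int  # CPU request in millicores


def select_victims(node_capacity_milli, running, pending):
    """Return the Pods to evict so `pending` fits, [] if it already fits, or None.

    Simplified model: only strictly lower-priority Pods are candidates, and they
    are evicted lowest-priority first until the pending Pod's request fits.
    """
    free = node_capacity_milli - sum(p.cpu_milli for p in running)
    if free >= pending.cpu_milli:
        return []  # fits without preemption
    candidates = sorted(
        (p for p in running if p.priority < pending.priority),
        key=lambda p: p.priority,
    )
    victims = []
    for p in candidates:
        victims.append(p)
        free += p.cpu_milli
        if free >= pending.cpu_milli:
            return victims
    return None  # even evicting every lower-priority Pod would not be enough


running = [Pod("batch", 0, 1500), Pod("web", 1000, 1500), Pod("cache", 500, 500)]
victims = select_victims(4000, running, Pod("critical", 2000, 1000))
print([p.name for p in victims])  # ['batch']
```

Evicting the lowest-priority Pod first mirrors the intent of preemption (protect higher-priority work), but the ordering rule here is a deliberate simplification.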

Node-pressure eviction

Node-pressure eviction is driven by the resources of the node where the Pod runs. Node resources fall into two kinds: compressible resources such as CPU, whose usage can be throttled, and incompressible resources such as memory, disk space, and inodes, which cannot be throttled. When an incompressible resource runs short, Pods are evicted. This kind of eviction is performed by the kubelet on each node, which monitors the node's resource usage using metrics collected from cAdvisor.
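The kubelet compares eviction signals such as memory.available and nodefs.available against eviction thresholds. The Python sketch below models only that comparison, using a subset of the kubelet's documented default hard thresholds on Linux (memory.available<100Mi, nodefs.available<10%, imagefs.available<15%, nodefs.inodesFree<5%). The observed numbers are made up; on a real node, thresholds are set with the kubelet's --eviction-hard flag or the evictionHard configuration field, and soft thresholds with grace periods are not modeled here.

```python
MI = 1024 ** 2
GI = 1024 ** 3

# A subset of the kubelet's documented default hard eviction thresholds on Linux.
# "abs" values are bytes; "pct" values are a fraction of the signal's capacity.
DEFAULT_HARD_THRESHOLDS = {
    "memory.available": ("abs", 100 * MI),
    "nodefs.available": ("pct", 0.10),
    "imagefs.available": ("pct", 0.15),
    "nodefs.inodesFree": ("pct", 0.05),
}


def crossed_thresholds(observed, capacity, thresholds=DEFAULT_HARD_THRESHOLDS):
    """Return the sorted signals whose available amount is below its threshold.

    observed/capacity map a signal name to the available amount and the total
    (bytes or inode counts). Signals missing from `observed` are skipped.
    """
    crossed = []
    for signal, (kind, value) in thresholds.items():
        if signal not in observed:
            continue
        limit = value if kind == "abs" else value * capacity[signal]
        if observed[signal] < limit:
            crossed.append(signal)
    return sorted(crossed)


observed = {"memory.available": 80 * MI, "nodefs.available": 5 * GI,
            "nodefs.inodesFree": 900_000}
capacity = {"memory.available": 8 * GI, "nodefs.available": 100 * GI,
            "nodefs.inodesFree": 1_000_000}
print(crossed_thresholds(observed, capacity))  # ['memory.available', 'nodefs.available']
```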

Evicted by controller-manager

kube-controller-manager periodically checks node status. When a node has been NotReady for longer than a configured timeout, or Pods have been failing for a long time, the control plane evicts the affected Pods, and their controllers create new Pods to replace them.

Initiating evictions via API

Kubernetes provides an Eviction API that users can call to trigger evictions themselves.

For Kubernetes 1.22 and later, evictions are initiated through the policy/v1 API.

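For example, to evict Pod netbox-85865d5556-hfg6v, send a POST request containing an Eviction object to the Pod's eviction subresource at /api/v1/namespaces/<namespace>/pods/netbox-85865d5556-hfg6v/eviction.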
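The request can be sketched in Python as follows. It only builds the path and the policy/v1 Eviction body (following the shape shown in the Kubernetes API-initiated eviction docs) and does not contact a cluster; the namespace "default" is an assumption, since the example above does not say which namespace the netbox Pod lives in.

```python
import json


def eviction_request(pod_name, namespace="default"):
    """Build the API path and JSON body for a policy/v1 Eviction of one Pod."""
    path = f"/api/v1/namespaces/{namespace}/pods/{pod_name}/eviction"
    body = {
        "apiVersion": "policy/v1",
        "kind": "Eviction",
        "metadata": {"name": pod_name, "namespace": namespace},
    }
    return path, json.dumps(body)


path, body = eviction_request("netbox-85865d5556-hfg6v")
print("POST", path)
print(body)
```

One way to send it is to run kubectl proxy, which listens on 127.0.0.1:8001 by default, and POST the body to that address plus the path with a Content-Type: application/json header.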

The result shows that the old Pod was evicted and a new Pod was created. Because the test environment has only a few nodes, the new Pod was not placed on a different node.

Eviction return status via API

- 200 OK or 201 Created: the eviction is allowed and the Pod is deleted, similar to sending a DELETE request to the Pod's URL.

- 429 Too Many Requests: the eviction is not allowed right now, either because of API rate limiting or because it would violate a PodDisruptionBudget (PDB, a protection mechanism that keeps a minimum number or percentage of Pods available during voluntary disruptions).

- 500 Internal Server Error: the eviction is not allowed because of a misconfiguration, such as multiple PDBs selecting the same Pod.
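Because a 429 is usually temporary, a client typically retries it while treating other errors as final. The sketch below encodes the status meanings listed above and a simple retry loop; `send_eviction` is a hypothetical callable that performs one Eviction request and returns the HTTP status code, and the 5-second delay is an arbitrary assumption.

```python
import time


def explain_eviction_status(code):
    """Map an Eviction API HTTP status code to its meaning."""
    if code in (200, 201):
        return "allowed: the Pod is being deleted"
    if code == 429:
        return "refused for now: rate limited or blocked by a PodDisruptionBudget"
    if code == 500:
        return "refused: misconfiguration, e.g. several PDBs select the same Pod"
    return f"unexpected status {code}"


def evict_with_retry(send_eviction, max_attempts=5, delay_seconds=5.0, sleep=time.sleep):
    """Call send_eviction() until it succeeds, retrying only on 429 responses."""
    for attempt in range(1, max_attempts + 1):
        code = send_eviction()
        if code in (200, 201):
            return True
        if code != 429 or attempt == max_attempts:
            return False  # non-retryable error, or out of attempts
        sleep(delay_seconds)
    return False  # max_attempts was 0


responses = iter([429, 429, 201])
print(evict_with_retry(lambda: next(responses), sleep=lambda s: None))  # True
```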

Draining Pods from a Node

drain is a maintenance command available since Kubernetes 1.5. Running kubectl drain <node_name> marks the node as unschedulable and evicts the Pods running on it, so that maintenance can be performed on the node's host (for example, a kernel upgrade or a reboot).

Note: the kubectl drain <node_name> command should be issued to only one node at a time. To drain several nodes, run separate kubectl drain commands in parallel; PodDisruptionBudgets are still respected.
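Before evicting anything, kubectl drain runs a few documented pre-checks. The Python sketch below models only those checks for deciding which Pods would be evicted; the `PodInfo` type is hypothetical, while the flags named in the messages (--ignore-daemonsets, --force, --delete-emptydir-data) are real kubectl drain options. As documented, drain deletes no Pods at all when one of these checks fails, and the sketch does the same.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class PodInfo:
    name: str
    owner_kind: Optional[str]  # e.g. "ReplicaSet", "DaemonSet"; None if unmanaged
    mirror: bool = False  # static Pod mirrored into the API server
    uses_empty_dir: bool = False


def plan_drain(pods, ignore_daemonsets=False, force=False,
               delete_emptydir_data=False) -> Tuple[List[str], List[str]]:
    """Return (pods_to_evict, errors); evict nothing if any check fails."""
    to_evict, errors = [], []
    for p in pods:
        if p.mirror:
            continue  # mirror Pods cannot be deleted through the API server
        if p.owner_kind == "DaemonSet":
            if not ignore_daemonsets:
                errors.append(f"{p.name}: managed by a DaemonSet (--ignore-daemonsets)")
            continue  # DaemonSet Pods are left running
        if p.owner_kind is None and not force:
            errors.append(f"{p.name}: not managed by a controller (--force)")
            continue
        if p.uses_empty_dir and not delete_emptydir_data:
            errors.append(f"{p.name}: uses emptyDir data (--delete-emptydir-data)")
            continue
        to_evict.append(p.name)
    if errors:
        return [], errors
    return to_evict, errors


pods = [PodInfo("web-1", "ReplicaSet"), PodInfo("fluentd", "DaemonSet"),
        PodInfo("kube-apiserver-node1", "Node", mirror=True)]
print(plan_drain(pods))                          # refused because of fluentd
print(plan_drain(pods, ignore_daemonsets=True))  # (['web-1'], [])
```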

Taint eviction

Taints are used together with tolerations: the scheduler does not place Pods on a tainted node unless the Pods carry a matching toleration.

Taint-based eviction has been generally available since Kubernetes 1.18: the node controller automatically adds taints to a node under certain conditions, and Pods that do not tolerate the resulting NoExecute taints are evicted from it.

Kubernetes has several built-in taints, which the control plane applies to a node automatically when the corresponding condition occurs:

- node.kubernetes.io/not-ready: the node is not ready; corresponds to the NodeCondition Ready being False.

- node.kubernetes.io/unreachable: the node controller cannot reach the node; corresponds to the NodeCondition Ready being Unknown.

- node.kubernetes.io/memory-pressure: the node is under memory pressure.

- node.kubernetes.io/disk-pressure: the node is under disk pressure.

- node.kubernetes.io/pid-pressure: the node is under PID pressure.

- node.kubernetes.io/network-unavailable: the node's network is unavailable.

- node.kubernetes.io/unschedulable: the node is unschedulable.
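Whether a Pod survives one of these taints depends on whether one of its tolerations matches. The Python sketch below applies the matching rules from the Kubernetes taints-and-tolerations documentation (keys and effects must match, Exists ignores the value, an empty effect matches every effect, an empty key with Exists matches everything); the `Taint` and `Toleration` classes are simplified stand-ins, not the real API types.

```python
from dataclasses import dataclass


@dataclass
class Taint:
    key: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"
    value: str = ""


@dataclass
class Toleration:
    key: str = ""
    operator: str = "Equal"  # "Equal" (the default) or "Exists"
    value: str = ""
    effect: str = ""  # empty matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Apply the taint/toleration matching rules to one pair."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        return tol.key == "" or tol.key == taint.key  # empty key + Exists matches all keys
    return tol.key == taint.key and tol.value == taint.value


def untolerated_noexecute(tolerations, taints):
    """Keys of NoExecute taints that none of the Pod's tolerations match."""
    return [t.key for t in taints
            if t.effect == "NoExecute" and not any(tolerates(x, t) for x in tolerations)]


node_taints = [Taint("node.kubernetes.io/not-ready", "NoExecute"),
               Taint("node.kubernetes.io/memory-pressure", "NoSchedule")]
print(untolerated_noexecute([], node_taints))  # ['node.kubernetes.io/not-ready']
```

A non-empty result means the Pod would be evicted from the node; NoSchedule taints only affect scheduling, so they are not reported.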

Example: Pod eviction troubleshooting process

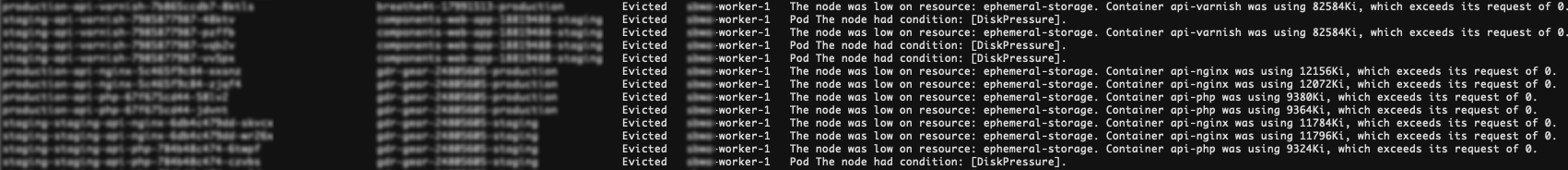

Consider a Kubernetes v1.19.0 cluster with three worker nodes, where some of the Pods running on worker 1 have been evicted.

Source: https://www.containiq.com/post/kubernetes-pod-evictions

The diagram above shows that many Pods have been evicted, and the error message makes the cause clear: the kubelet triggered the evictions because the node was running out of storage.

Method 1: Enable auto-scaler

- Add worker nodes to the cluster, or deploy cluster-autoscaler to scale the number of nodes up or down automatically based on configured conditions.

- Alternatively, expand only the workers' local storage. This involves resizing the VMs and makes the worker nodes temporarily unavailable.

Method 2: Protecting Critical Pods

Specify resource requests and limits in the Pod spec; together they determine the Pod's QoS (Quality of Service) class. When the kubelet triggers an eviction, BestEffort and Burstable Pods whose usage exceeds their requests are evicted first, while Guaranteed Pods and Pods whose usage stays below their requests are evicted last.

This policy protects critical Pods to a certain extent, but does not make them immune to eviction. If a node has a problem but its Pods are not evicted, additional steps are needed to locate the fault.
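The QoS class follows documented rules: Guaranteed when every container has CPU and memory limits equal to its requests, BestEffort when no container sets any CPU or memory request or limit, and Burstable otherwise. The Python sketch below applies those rules to plain dicts shaped like a container's resources field; it ignores init containers and compares quantities as strings, so "1" and "1000m" would wrongly count as different.

```python
def qos_class(containers):
    """Derive a Pod's QoS class from its containers' CPU/memory requests and limits.

    Each container is a dict such as
    {"requests": {"cpu": "500m"}, "limits": {"cpu": "500m", "memory": "256Mi"}}.
    """
    any_set = False
    guaranteed = True
    for c in containers:
        limits = c.get("limits", {})
        # An omitted request defaults to the limit when a limit is set.
        requests = {**limits, **c.get("requests", {})}
        for resource in ("cpu", "memory"):
            if resource in requests or resource in limits:
                any_set = True
            if resource not in limits or requests.get(resource) != limits[resource]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


critical = [{"requests": {"cpu": "500m", "memory": "256Mi"},
             "limits": {"cpu": "500m", "memory": "256Mi"}}]
print(qos_class(critical))                                # Guaranteed
print(qos_class([{"requests": {"memory": "128Mi"}}]))      # Burstable
print(qos_class([{}]))                                     # BestEffort
```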

Running kubectl get pods shows a number of Pods in the Evicted state. The evictions are recorded in the node's kubelet log, whose location depends on how the node was installed; on this cluster the relevant entries can be found with cat /var/paas/sys/log/kubernetes/kubelet.log | grep -i Evicted -C3.

Things to check

Check Pod tolerations

When a node becomes not ready or unreachable, the tolerationSeconds field of a Pod's toleration sets how long the Pod stays bound to that node before it is evicted.
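The timing rule for NoExecute taints is simple enough to write down directly: no matching toleration means immediate eviction, a matching toleration without tolerationSeconds means the Pod stays, and a matching toleration with tolerationSeconds delays eviction by that many seconds. The Python sketch below assumes the taint is not removed in the meantime; the 300-second constant reflects the toleration that Kubernetes adds by default (via the DefaultTolerationSeconds admission plugin) for not-ready and unreachable taints.

```python
from typing import Optional

# Default tolerationSeconds that Kubernetes adds for node.kubernetes.io/not-ready and
# node.kubernetes.io/unreachable unless the Pod sets those tolerations itself.
DEFAULT_TOLERATION_SECONDS = 300


def eviction_time(taint_added_at: float, tolerated: bool,
                  toleration_seconds: Optional[int] = None) -> Optional[float]:
    """Time at which a Pod is evicted after a NoExecute taint is added, or None if never.

    Assumes the taint stays on the node until then.
    """
    if not tolerated:
        return taint_added_at  # no matching toleration: evicted right away
    if toleration_seconds is None:
        return None  # tolerated indefinitely
    return taint_added_at + toleration_seconds


print(eviction_time(0, tolerated=True, toleration_seconds=DEFAULT_TOLERATION_SECONDS))  # 300
```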

Check the conditions that suppress Pod eviction

Pod eviction is suspended when at least 55% of the nodes in a zone are unhealthy and the cluster has 50 nodes or fewer (the kube-controller-manager defaults for --unhealthy-zone-threshold and --large-cluster-size-threshold). In that case Kubernetes stops evicting workloads (the applications running in Kubernetes) from the failed nodes; in larger clusters eviction continues at a reduced rate.
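These rules can be summarized as a per-zone eviction rate. The Python sketch below is a simplified reading of the node controller's documented behavior: the keyword defaults mirror the real kube-controller-manager flags --node-eviction-rate (0.1 nodes per second), --secondary-node-eviction-rate (0.01), --unhealthy-zone-threshold (0.55), and --large-cluster-size-threshold (50). It uses the zone's node count for the size check and takes a hypothetical all_zones_fully_unhealthy flag for the case where every zone is down.

```python
def zone_eviction_rate(zone_nodes, unhealthy_nodes, all_zones_fully_unhealthy=False,
                       node_eviction_rate=0.1, secondary_node_eviction_rate=0.01,
                       unhealthy_zone_threshold=0.55, large_cluster_size_threshold=50):
    """Nodes per second whose Pods the node controller may evict in one zone (simplified)."""
    if zone_nodes == 0:
        return 0.0  # no nodes, nothing to evict
    if all_zones_fully_unhealthy:
        return 0.0  # every zone is down: assume a control-plane connectivity problem
    if unhealthy_nodes == zone_nodes:
        return node_eviction_rate  # whole zone failed: shift workloads to other zones
    if unhealthy_nodes / zone_nodes < unhealthy_zone_threshold:
        return node_eviction_rate  # zone is healthy enough: normal rate
    if zone_nodes <= large_cluster_size_threshold:
        return 0.0  # small cluster with an unhealthy zone: evictions stop
    return secondary_node_eviction_rate  # large cluster: reduced rate


print(zone_eviction_rate(10, 6))    # 0.0: 60% unhealthy in a small cluster
print(zone_eviction_rate(100, 60))  # 0.01
```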

A healthy node reports a Ready condition whose status is True.

If the Ready condition stays Unknown or False for longer than pod-eviction-timeout (a kube-controller-manager argument, five minutes by default), the node controller triggers API-initiated eviction of all Pods assigned to that node.
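The check can be sketched in Python against a node's status.conditions. The healthy example mirrors the Ready condition shape shown in the Kubernetes Node documentation; measuring the outage from lastTransitionTime is a simplification of what the node controller actually tracks, and the timestamps are illustrative.

```python
from datetime import datetime, timedelta, timezone

# Default --pod-eviction-timeout of kube-controller-manager.
POD_EVICTION_TIMEOUT = timedelta(minutes=5)


def parse_time(value):
    """Parse an RFC 3339 timestamp such as '2019-06-05T11:41:27Z'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def should_evict_pods(node_status, now, timeout=POD_EVICTION_TIMEOUT):
    """True if the Ready condition has been False/Unknown for longer than timeout."""
    for cond in node_status.get("conditions", []):
        if cond.get("type") != "Ready":
            continue
        if cond["status"] == "True":
            return False
        return now - parse_time(cond["lastTransitionTime"]) > timeout
    return False  # no Ready condition reported: not handled in this sketch


healthy = {"conditions": [{
    "type": "Ready", "status": "True", "reason": "KubeletReady",
    "message": "kubelet is posting ready status",
    "lastHeartbeatTime": "2019-06-05T18:38:35Z",
    "lastTransitionTime": "2019-06-05T11:41:27Z",
}]}
now = datetime(2019, 6, 5, 18, 40, tzinfo=timezone.utc)
print(should_evict_pods(healthy, now))  # False
```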

Check the resources allocated to Pods

Pods are evicted based on the node's actual resource usage, but they are scheduled based on their resource requests. Because eviction and scheduling follow different rules, an evicted Pod may be rescheduled onto the same node. It is therefore important to give each container resource requests that reflect its real usage.
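The mismatch is easy to see with numbers. In the Python sketch below, which uses hypothetical dicts and treats capacity and allocatable memory as the same value for simplicity, the scheduler still accepts a new Pod because requests are small, while the kubelet's usage-based view already shows memory pressure.

```python
def scheduler_fits(allocatable_mi, pods, new_request_mi):
    """Scheduler's view: the sum of memory *requests* must fit in allocatable memory."""
    return sum(p["request_mi"] for p in pods) + new_request_mi <= allocatable_mi


def kubelet_under_pressure(capacity_mi, pods, threshold_mi=100):
    """Kubelet's view (simplified): memory available from actual *usage* is below a threshold."""
    used = sum(p["usage_mi"] for p in pods)
    return capacity_mi - used < threshold_mi


# Two Pods that request little memory but use a lot of it.
pods = [{"request_mi": 256, "usage_mi": 2000}, {"request_mi": 256, "usage_mi": 2000}]
print(scheduler_fits(4096, pods, new_request_mi=256))  # True: requests total only 768Mi
print(kubelet_under_pressure(4096, pods))              # True: only 96Mi is available
```

Raising request_mi toward the real usage would make scheduler_fits return False, which is the point of setting realistic requests.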

Check for repeated evictions

A Pod can be evicted more than once: if the node it is rescheduled to after an eviction also comes under pressure, the Pod is evicted again.

If kube-controller-manager triggered the eviction, the Pod is kept in the Terminating state and is deleted automatically once the node recovers. If the node has been deleted or otherwise cannot be recovered, the Pod can be force-deleted.

If the kubelet triggered the eviction, the Pod stays in the Evicted state. It is kept only to help with later fault diagnosis and can be deleted directly.

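An evicted Pod can be deleted with kubectl delete pod <pod_name> -n <namespace>. Because evicted Pods are in the Failed phase, kubectl delete pods --field-selector=status.phase=Failed -n <namespace> removes all failed Pods in a namespace at once, including the evicted ones.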
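To delete only Pods that were actually evicted (phase Failed with reason Evicted), the Pod list can be filtered first. The Python sketch below assumes the input is the JSON printed by kubectl get pods -A -o json; a small hand-written sample stands in for it here, and the generated commands are printed rather than executed.

```python
import json
import shlex


def evicted_pods(pod_list_json):
    """Return (namespace, name) pairs for Pods evicted by the kubelet."""
    result = []
    for pod in json.loads(pod_list_json).get("items", []):
        status = pod.get("status", {})
        if status.get("phase") == "Failed" and status.get("reason") == "Evicted":
            meta = pod["metadata"]
            result.append((meta.get("namespace", "default"), meta["name"]))
    return result


def delete_commands(pairs):
    """Build one kubectl delete command per evicted Pod."""
    return [f"kubectl delete pod {shlex.quote(name)} -n {shlex.quote(ns)}" for ns, name in pairs]


sample = json.dumps({"items": [
    {"metadata": {"name": "web-1", "namespace": "default"},
     "status": {"phase": "Failed", "reason": "Evicted"}},
    {"metadata": {"name": "web-2", "namespace": "default"},
     "status": {"phase": "Running"}},
]})
for cmd in delete_commands(evicted_pods(sample)):
    print(cmd)  # kubectl delete pod web-1 -n default
```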

Notes:

- An evicted Pod is not recreated on its own. A replacement is created only if the Pod is managed by a workload controller such as a ReplicationController, ReplicaSet, or Deployment, which are the Kubernetes workloads mentioned above.

- Workload controllers continuously reconcile a set of Pods toward a desired state, so an evicted Pod is replaced by a new one. This behavior follows from the Kubernetes declarative API.
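A single reconcile pass of a ReplicaSet-style controller can be sketched in Python as follows. This is a simplified model, not the real controller: Pods in a terminal phase (evicted Pods end up in phase Failed) do not count toward the desired replica count, so each eviction leads to one replacement, while the evicted Pod objects themselves are left for separate cleanup. Scale-down is omitted.

```python
TERMINAL_PHASES = {"Succeeded", "Failed"}  # evicted Pods end up in phase Failed


def pods_to_create(desired_replicas, pod_phases):
    """Return how many replacement Pods one reconcile pass would create.

    pod_phases maps Pod name -> status.phase.
    """
    active = [name for name, phase in pod_phases.items() if phase not in TERMINAL_PHASES]
    return max(desired_replicas - len(active), 0)


print(pods_to_create(3, {"web-a": "Running", "web-b": "Running", "web-c": "Failed"}))  # 1
```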

How to monitor evicted Pods

Using Prometheus

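One possible approach, assuming kube-state-metrics is installed and scraped by Prometheus, is to query its kube_pod_status_reason metric for the Evicted reason through the Prometheus HTTP API's instant-query endpoint. The Python sketch below only builds the query URL and parses a response in the documented vector format; the Prometheus address is hypothetical and no request is sent.

```python
import json
from urllib.parse import urlencode

# Assumes kube-state-metrics exposes kube_pod_status_reason with a reason label.
EVICTED_QUERY = 'kube_pod_status_reason{reason="Evicted"} > 0'


def query_url(prometheus_base_url, query=EVICTED_QUERY):
    """Build a Prometheus HTTP API instant-query URL."""
    return f"{prometheus_base_url.rstrip('/')}/api/v1/query?{urlencode({'query': query})}"


def evicted_from_response(body):
    """Extract sorted (namespace, pod) pairs from an instant-query JSON response."""
    data = json.loads(body)
    if data.get("status") != "success":
        raise RuntimeError(data.get("error", "query failed"))
    return sorted((r["metric"].get("namespace", ""), r["metric"].get("pod", ""))
                  for r in data["data"]["result"])


print(query_url("http://prometheus.example:9090"))
```

The URL could then be fetched with urllib.request.urlopen and its body passed to evicted_from_response, or the same expression could back an alerting rule.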

Using ContainIQ

ContainIQ is an observability tool built for Kubernetes. Its Kubernetes event dashboard includes Pod eviction events.

References

- https://kubernetes.io/docs/concepts/scheduling-eviction/

- https://kubernetes.io/docs/concepts/scheduling-eviction/node-pressure-eviction/

- https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/

- https://kubernetes.io/docs/concepts/scheduling-eviction/api-eviction/

- https://kubernetes.io/docs/tasks/administer-cluster/safely-drain-node/

- https://kubernetes.io/docs/tasks/administer-cluster/safely-drain-node/#draining-multiple-nodes-in-parallel

- https://www.containiq.com/post/kubernetes-pod-evictions

- https://sysdig.com/blog/kubernetes-pod-evicted/

- https://cylonchau.github.io/kubernetes-eviction.html