Compared with traditional virtualization, Kubernetes containers have shorter lifecycles, higher deployment density, and faster rates of cluster change. The container network must therefore support fast communication between cluster nodes. On an enterprise-class container cloud platform, it must also securely isolate resources between the workloads of many tenants.

Clearly, traditional physical network architectures cannot provide the flexibility that containers demand, so container networks need a new architecture. Kubernetes has developed and evolved rapidly, and networking is the area where it has changed fastest and where its ecosystem is richest.

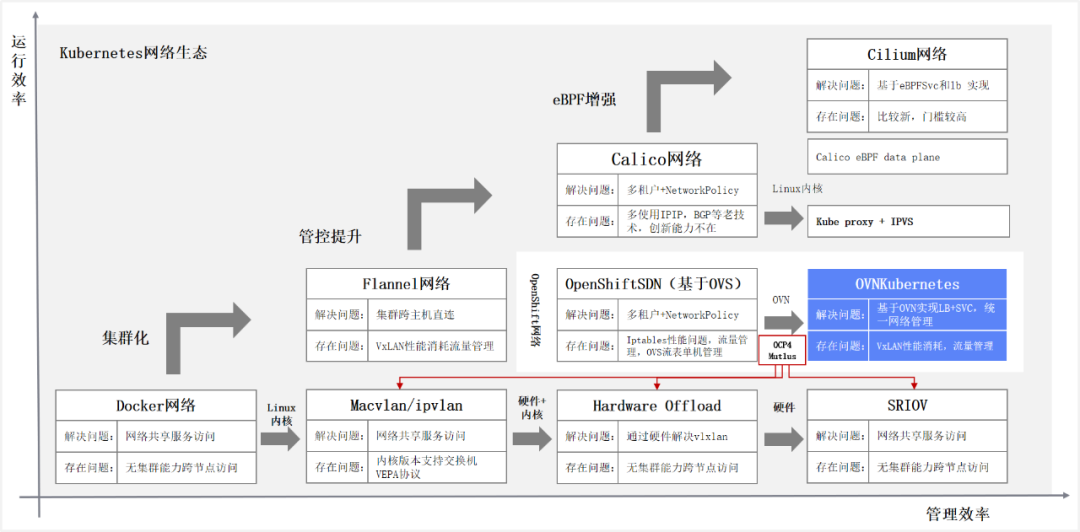

Container networking evolved from the Docker-led CNM (Container Network Model) to the CNI (Container Network Interface) model led by Google, CoreOS, and Kubernetes. Neither CNM nor CNI is a network implementation; both are specifications and frameworks for networking. CNI has become the de facto standard for container networking. Apart from the different camps behind each model in the open source community, the shift is mostly a matter of technical iteration, architectural optimization, and adjustment, much as Kubernetes has begun to decouple itself from Docker in recent versions. The following figure traces the development of container networking, driven by continuous optimization of both operational efficiency and management efficiency.

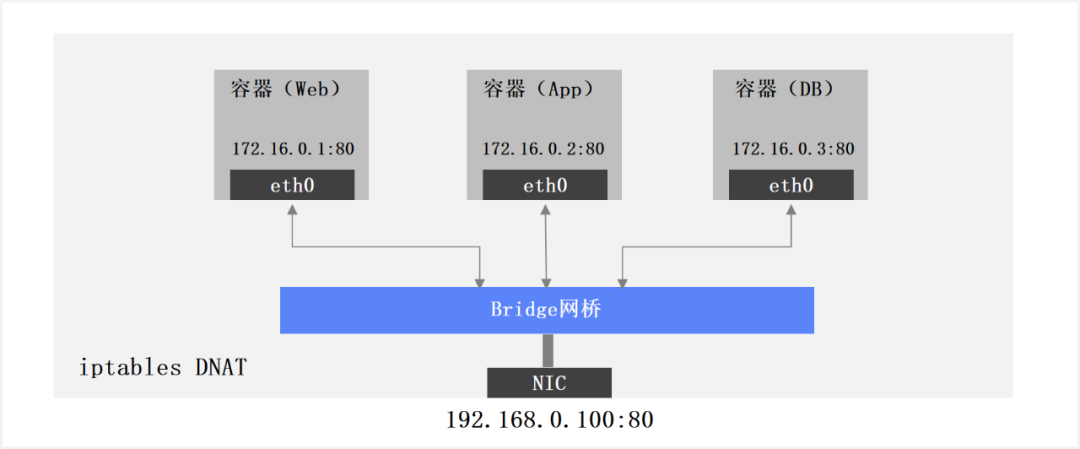
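To make the point that CNI is a specification rather than an implementation more concrete, here is a minimal sketch of a CNI network configuration list built in Python. It assumes the reference `bridge`, `host-local`, and `portmap` plugins are installed; the network name, bridge name, and subnet are illustrative values only. The container runtime reads this JSON and executes the plugin binary named by each `type` field; the plugin, not the spec, does the actual network setup.

```python
import json


def build_cni_conflist(name: str, bridge: str, subnet: str) -> dict:
    """Return a CNI network configuration list as a Python dict.

    The CNI spec defines only this JSON contract. The runtime invokes the
    plugin binary named by each "type" and passes it the matching object.
    Plugin choice and values here are assumptions for illustration.
    """
    return {
        "cniVersion": "1.0.0",
        "name": name,
        "plugins": [
            {
                "type": "bridge",          # attach containers to a Linux bridge
                "bridge": bridge,
                "isGateway": True,         # assign the gateway IP to the bridge
                "ipMasq": True,            # masquerade traffic leaving the host
                "ipam": {
                    "type": "host-local",  # allocate IPs from a node-local range
                    "ranges": [[{"subnet": subnet}]],
                    "routes": [{"dst": "0.0.0.0/0"}],
                },
            },
            {
                "type": "portmap",         # host port -> container port DNAT
                "capabilities": {"portMappings": True},
            },
        ],
    }


if __name__ == "__main__":
    conf = build_cni_conflist("mynet", "cni0", "10.22.0.0/16")
    print(json.dumps(conf, indent=2))
```

Swapping the `bridge` entry for another plugin (for example an overlay plugin) changes the implementation while the surrounding contract stays the same, which is the decoupling CNI was designed for.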

Single-node container networks

In a single-node container network, the containers on one host share the host's physical NIC through a bridge, Macvlan, or a similar mechanism; in the common bridge setup, multiple containers share the host's IP address through "port mapping". The problem is that this is hard to manage at large scale, and containers on different hosts cannot communicate directly with each other without going through NAT, as shown in the figure below.

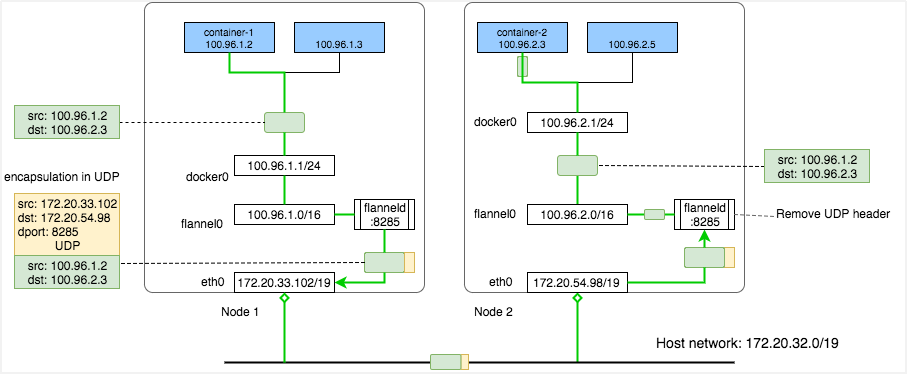
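The limitation of port mapping can be illustrated with a toy model of one host. This is a sketch under stated assumptions, not Docker's implementation: `Host`, `publish`, and `dnat` are hypothetical names, and the bridge subnet `172.17.0.0/16` (Docker's default) is assumed not to be routable from other hosts. Every container hides behind the single host IP, so each host port can be used only once, and a packet from the physical network addressed to a container IP has nowhere to go.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Host:
    """Toy model of one container host using bridge networking and port mapping.

    Assumption: containers get private addresses from a bridge subnet that
    other hosts cannot route to, so only host_ip:host_port is reachable.
    """
    ip: str
    bridge_subnet: str = "172.17.0.0/16"
    port_map: dict = field(default_factory=dict)  # host_port -> (ctr_ip, ctr_port)

    def publish(self, host_port: int, ctr_ip: str, ctr_port: int) -> None:
        # All containers share one host IP, so a host port can be mapped once.
        if host_port in self.port_map:
            raise ValueError(f"host port {host_port} already in use")
        if ipaddress.ip_address(ctr_ip) not in ipaddress.ip_network(self.bridge_subnet):
            raise ValueError("container IP must be on the bridge subnet")
        self.port_map[host_port] = (ctr_ip, ctr_port)

    def dnat(self, dst_ip: str, dst_port: int):
        """Rewrite the destination of a packet arriving from the physical network.

        Returns the container endpoint, or None when the packet cannot be
        delivered (for example, a remote host addressed a container IP directly).
        """
        if dst_ip == self.ip and dst_port in self.port_map:
            return self.port_map[dst_port]
        return None


if __name__ == "__main__":
    h = Host("192.168.1.10")
    h.publish(8080, "172.17.0.2", 80)
    h.publish(8081, "172.17.0.3", 80)   # second web container needs another host port
    print(h.dnat("192.168.1.10", 8080))  # reached only via the host IP and mapped port
    print(h.dnat("172.17.0.2", 80))      # direct container addressing fails
```

Two containers that both listen on port 80 must be published on different host ports, which is exactly the bookkeeping that becomes unmanageable across many hosts.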

Kubernetes Flannel Networks

The Kubernetes Flannel network connects the container hosts of a cluster so that Pods on different nodes can communicate directly. Each node is assigned its own subnet, from which the Pods on that node receive their addresses. The overlay network is implemented with VXLAN encapsulation: on the physical network, packets carry the host (node) IP addresses in their outer headers, while the Pods' real IP addresses are encapsulated inside the VXLAN payload. A flanneld agent runs on every node and sets up the encapsulation and decapsulation of traffic exchanged with other nodes. The problem is that although the Layer 2 overlay makes connectivity convenient, it provides no traffic control, access control, or tenant isolation, as shown in the figure below.

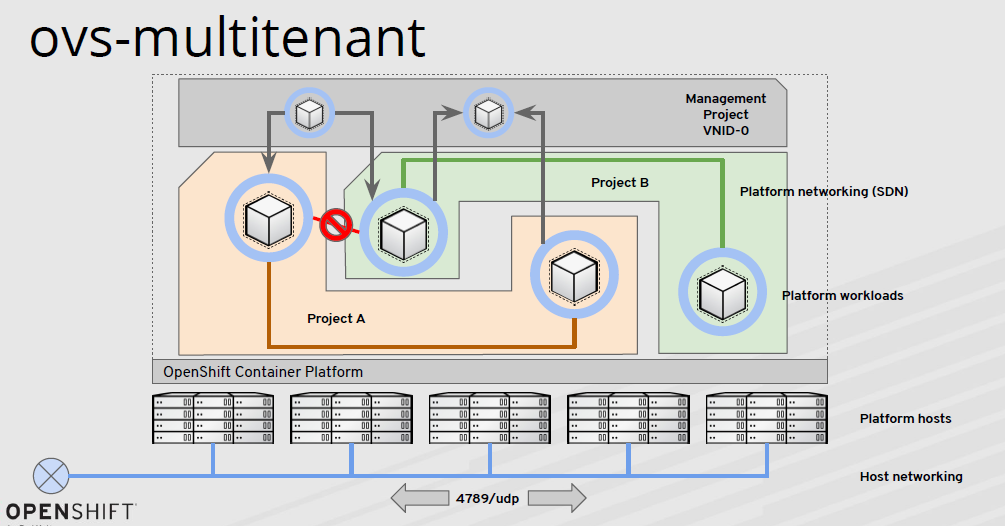
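The two mechanisms described for Flannel, a per-node Pod subnet and VXLAN encapsulation with host IPs in the outer header, can be sketched as follows. Assumptions: the cluster CIDR `10.244.0.0/16` and `/24` per node are example values; VNI 1 and UDP port 8472 are the defaults commonly used by Flannel's vxlan backend, but a real cluster may configure them differently. The header layout (I flag, 24-bit VNI, 8 bytes total) follows RFC 7348. The outer packet is modeled as a plain dict rather than real IP/UDP headers.

```python
import ipaddress
import struct

# Example values for this sketch, not read from any real Flannel config.
CLUSTER_CIDR = "10.244.0.0/16"  # cluster-wide Pod network
SUBNET_PREFIX = 24              # size of each node's Pod subnet
VXLAN_VNI = 1                   # VXLAN network identifier
VXLAN_UDP_PORT = 8472           # Linux kernel's default VXLAN port


def allocate_node_subnets(nodes, cluster_cidr=CLUSTER_CIDR, prefix=SUBNET_PREFIX):
    """Assign each node its own Pod subnet carved out of the cluster CIDR."""
    pool = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=prefix)
    return {node: next(pool) for node in nodes}


def vxlan_header(vni):
    """Build the 8-byte VXLAN header (RFC 7348): I flag set, 24-bit VNI."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


def encapsulate(inner_frame, src_node_ip, dst_node_ip, vni=VXLAN_VNI):
    """Wrap a Pod-to-Pod frame for transit between two nodes.

    The outer addresses are node IPs; Pod IPs stay inside inner_frame and
    are never seen by the physical network.
    """
    return {
        "outer_src": src_node_ip,
        "outer_dst": dst_node_ip,
        "udp_dst_port": VXLAN_UDP_PORT,
        "payload": vxlan_header(vni) + inner_frame,
    }


def decapsulate(packet):
    """Strip the VXLAN header on the receiving node; return (vni, inner_frame)."""
    payload = packet["payload"]
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if flags_word >> 24 != 0x08:
        raise ValueError("VXLAN I flag not set")
    return vni_word >> 8, payload[8:]


if __name__ == "__main__":
    subnets = allocate_node_subnets(["node-a", "node-b"])
    print(subnets)
    pkt = encapsulate(b"pod frame", "192.168.1.10", "192.168.1.11")
    print(decapsulate(pkt))
```

Note that nothing in this path inspects which tenant a frame belongs to: any Pod can reach any other Pod, which is the missing access control and isolation the paragraph above points out.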

Multi-tenancy + NetworkPolicy container network

The multi-tenant + NetworkPolicy container network is the approach implemented by the OVS-based network in OpenShift v3. VXLAN provides communication across the nodes of the cluster, and programmable OVS flow tables on each node control how container traffic is forwarded. NetworkPolicy enforces isolation between tenants, with control at the Namespace level as well as at the Pod and port level. The problem is that OVS and its flow tables are still managed independently on each node, which requires a great deal of flow-programming work, and exposing Services (SVC) and load balancers (LB) still relies on iptables, with a large performance overhead, as shown in the figure below.
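The combination of per-node flow tables, tenant isolation, and port-level policy can be sketched as a priority-ordered rule lookup. This is a simplified model, loosely inspired by OpenShift's multitenant mode, in which each project gets a VNID and VNID 0 is the global project; it does not use real OVS flow syntax. The namespaces, VNIDs, and the "only port 8080 into tenant-a" policy are hypothetical values. As in an OpenFlow table, the highest-priority matching rule wins; in this sketch a table miss drops the packet.

```python
from typing import Callable, List, NamedTuple


class Packet(NamedTuple):
    src_ns: str
    dst_ns: str
    dst_port: int


class Flow(NamedTuple):
    priority: int
    matches: Callable[[Packet], bool]
    action: str  # "forward" or "drop"


# Hypothetical tenant assignment; VNID 0 marks the global project.
VNID = {"default": 0, "tenant-a": 101, "tenant-b": 102}
# Hypothetical NetworkPolicy-like rule: only port 8080 is allowed into tenant-a.
ALLOWED_PORTS = {"tenant-a": {8080}}

FLOWS: List[Flow] = [
    # Port-level policy: drop anything but the allowed ports into a namespace.
    Flow(300, lambda p: p.dst_ns in ALLOWED_PORTS
         and p.dst_port not in ALLOWED_PORTS[p.dst_ns], "drop"),
    # The global project may reach, and be reached from, every tenant.
    Flow(200, lambda p: VNID[p.src_ns] == 0 or VNID[p.dst_ns] == 0, "forward"),
    # Tenant isolation: forward only between Pods with the same VNID.
    Flow(100, lambda p: VNID[p.src_ns] == VNID[p.dst_ns], "forward"),
]


def lookup(packet: Packet, flows: List[Flow] = FLOWS) -> str:
    """Return the action of the highest-priority matching flow (miss = drop)."""
    for flow in sorted(flows, key=lambda f: f.priority, reverse=True):
        if flow.matches(packet):
            return flow.action
    return "drop"


if __name__ == "__main__":
    print(lookup(Packet("tenant-a", "tenant-a", 8080)))  # same tenant, allowed port
    print(lookup(Packet("tenant-b", "tenant-a", 8080)))  # cross-tenant traffic
    print(lookup(Packet("default", "tenant-b", 80)))     # global project
```

Every node must hold and keep consistent its own copy of such a table, which hints at why the paragraph above describes the per-node flow programming as heavy.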

SDN Container Networks

With the rapid development of technology, and especially as the digital transformation of large state-owned enterprises has accelerated in recent years, the limitations of the OVS container network have become apparent now that a wide range of applications run in containers. First, there is no efficient unified control plane. Second, the OVS network relies on iptables on each node, which carries a high performance overhead for NAT and makes Kubernetes Service management complex. Finally, it is difficult to support new requirements such as IPv6 and Windows.

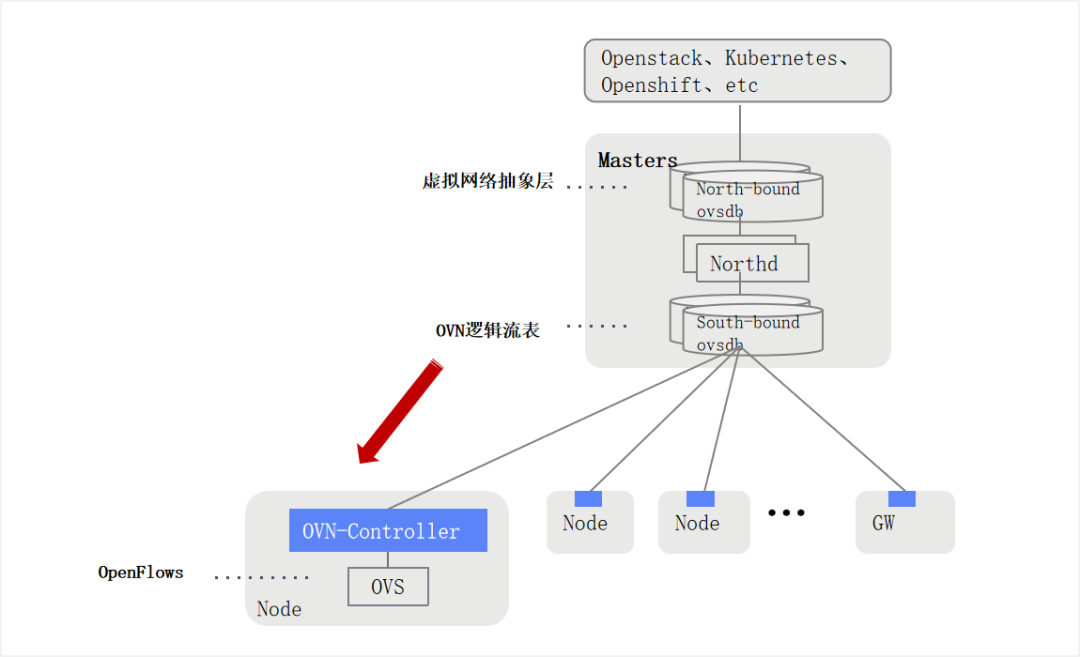
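One source of the iptables overhead mentioned above is how kube-proxy's iptables mode load-balances a Service: the endpoint rules in a Service's chain are evaluated one after another, and rule i of n (counting from 0) matches with probability 1/(n - i) through the `statistic` match in random mode, so every endpoint is equally likely overall. The sketch below reproduces that arithmetic with exact fractions and walks the rules in order; the function names are hypothetical and this is a model of the rule ordering, not kube-proxy code.

```python
import random
from fractions import Fraction
from typing import List, Tuple


def rule_probabilities(n: int) -> List[Fraction]:
    """Per-rule match probabilities for n endpoints: 1/n, 1/(n-1), ..., 1/1."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(n: int) -> List[Fraction]:
    """Probability that each endpoint is finally selected (all equal to 1/n)."""
    shares, remaining = [], Fraction(1)
    for p in rule_probabilities(n):
        shares.append(remaining * p)  # reach this rule, then match it
        remaining *= 1 - p            # or fall through to the next rule
    return shares


def pick_endpoint(endpoints: List[str], rng: random.Random) -> Tuple[str, int]:
    """Walk the rules in order, as iptables does.

    Returns the chosen endpoint and how many rules were evaluated, which
    grows linearly with the number of endpoints.
    """
    if not endpoints:
        raise ValueError("no endpoints")
    probs = rule_probabilities(len(endpoints))
    for evaluated, (ep, p) in enumerate(zip(endpoints, probs), start=1):
        if rng.random() < p:  # the last rule has p == 1 and always matches
            return ep, evaluated
    raise RuntimeError("unreachable: the last rule always matches")


if __name__ == "__main__":
    print(rule_probabilities(4))
    print(effective_share(4))
    print(pick_endpoint(["10.244.0.5", "10.244.1.6", "10.244.2.7"], random.Random(0)))
```

Because matching is a sequential scan rather than a hash lookup, the per-packet cost of connection setup rises as Services and endpoints are added, which is the overhead that motivates moving Service handling into the SDN layer.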

OVN (Open Virtual Network) evolved from OVS and is the native control layer of Open vSwitch. OVN acts as a centralized SDN controller: it orchestrates the network at the cluster level, provides high availability for its own components, programs each node through the OpenFlow protocol, and relies on OVS for the actual packet forwarding.
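The split between centralized orchestration and per-node forwarding can be sketched in two steps: a central step compiles the desired logical topology into node-independent logical flows, and each node turns those flows into local actions, delivering to a local port or tunneling to the node that hosts the destination. This is a heavily simplified model: the roles loosely correspond to ovn-northd and ovn-controller, the `eth.dst == ...` match strings mimic OVN's logical-flow match syntax, and `LogicalPort`, the `output:`/`tunnel:` action strings, and all port names and MACs are hypothetical.

```python
from typing import List, NamedTuple


class LogicalPort(NamedTuple):
    name: str
    switch: str
    mac: str
    chassis: str  # node where the port is currently bound


def compile_logical_flows(ports: List[LogicalPort]) -> List[dict]:
    """Central step: one L2 lookup flow per port, in purely logical terms."""
    return [
        {"switch": p.switch, "match": f"eth.dst == {p.mac}", "outport": p.name}
        for p in ports
    ]


def realize_on_chassis(chassis: str, flows: List[dict],
                       ports: List[LogicalPort]) -> List[str]:
    """Per-node step: map each logical flow to a local delivery or a tunnel."""
    location = {p.name: p.chassis for p in ports}
    actions = []
    for f in flows:
        target = location[f["outport"]]
        if target == chassis:
            actions.append(f"{f['match']} -> output:{f['outport']}")
        else:
            actions.append(f"{f['match']} -> tunnel:{target}")
    return actions


if __name__ == "__main__":
    ports = [
        LogicalPort("pod-a", "ls0", "0a:58:0a:f4:00:05", "node-1"),
        LogicalPort("pod-b", "ls0", "0a:58:0a:f4:01:06", "node-2"),
    ]
    flows = compile_logical_flows(ports)
    for node in ("node-1", "node-2"):
        print(node, realize_on_chassis(node, flows, ports))
```

The design point is that the topology is described once, centrally; when a Pod moves, only its `chassis` binding changes and each node recomputes its local actions, instead of an operator reprogramming flow tables node by node.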

Summary

OVN still relies on OVS for the underlying traffic forwarding behind many of its functions (load balancing, gateways, DNS, DHCP), but it dramatically improves the management capabilities available to upper-layer platforms such as OpenStack, Kubernetes, and OpenShift. Compared with other Kubernetes CNI network plugins, OVN is a true SDN!