Submariner is a fully open source project that enables network communication between Kubernetes clusters, whether they run on-premises or in the cloud. Submariner has the following features:

- L3 connectivity across clusters

- Service discovery across clusters

- Globalnet support for CIDR overlap

- A command-line tool, subctl, that simplifies deployment and management

- Compatible with various CNIs

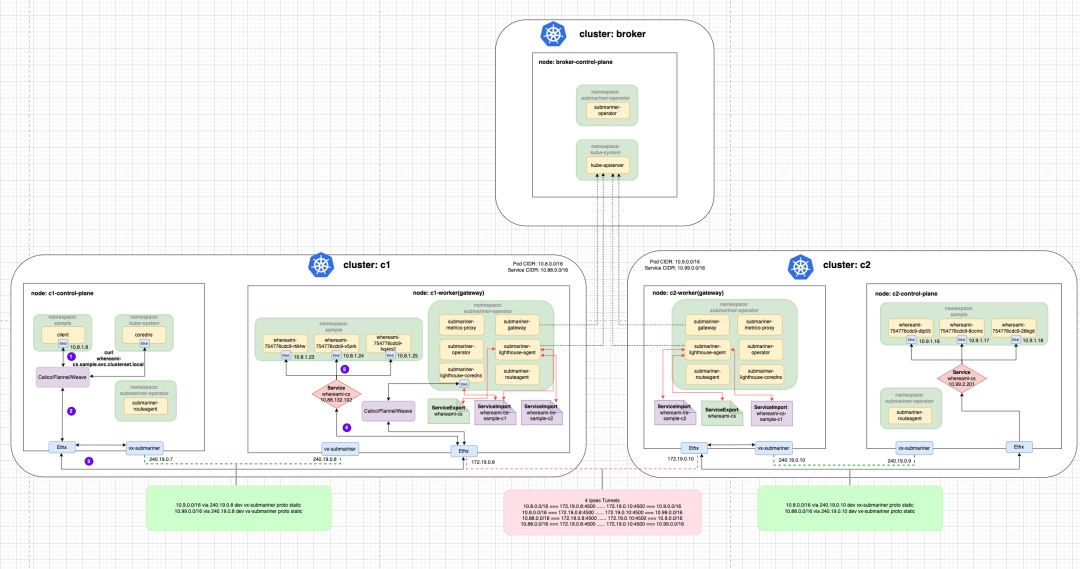

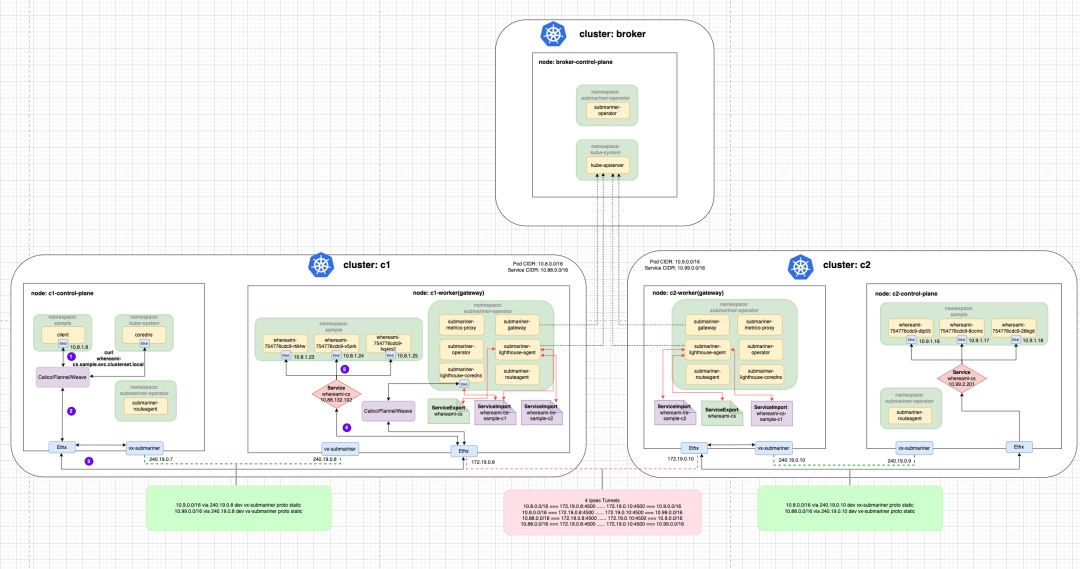

1. Submariner architecture

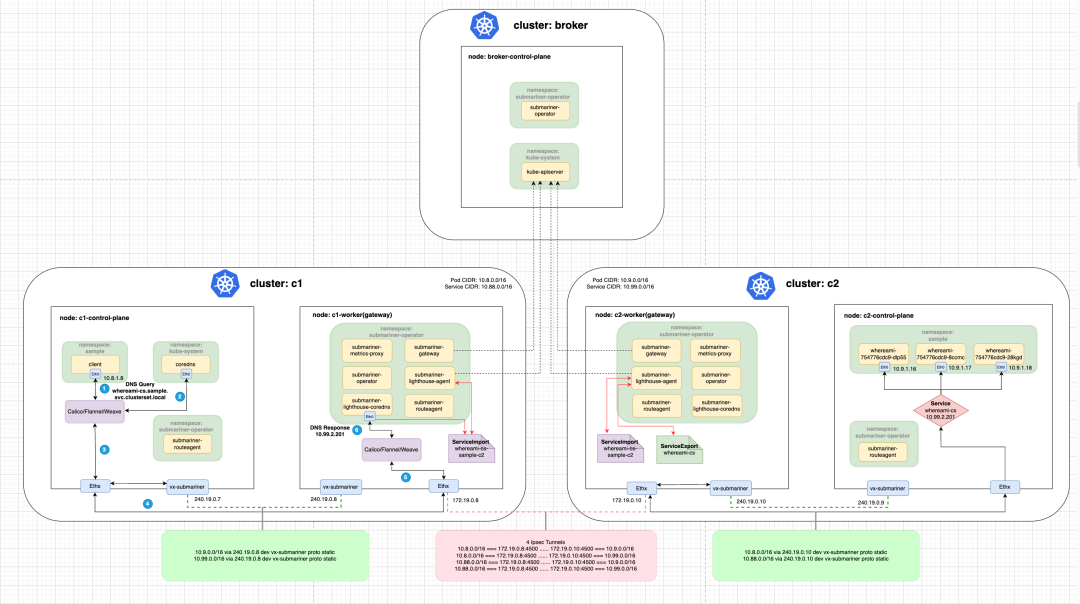

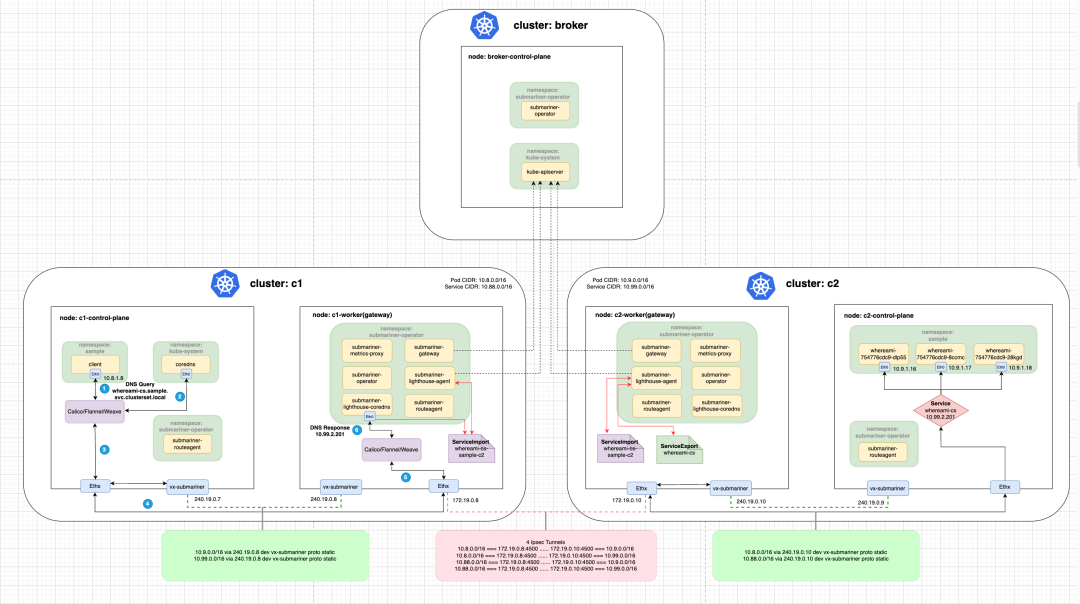

Submariner consists of several main parts:

- Broker: essentially two CRDs (Endpoint and Cluster) used to exchange cluster information. One cluster is selected as the Broker cluster, and the other clusters connect to its API Server to exchange cluster information:

  - Endpoint: contains the information the Gateway Engine needs to establish inter-cluster connections, such as the private IP, public IP, and NAT ports.

  - Cluster: contains static information about the originating cluster, such as its Service and Pod CIDRs.

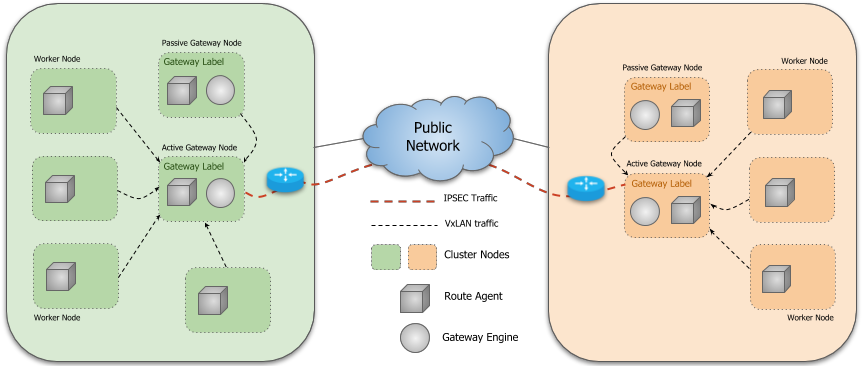

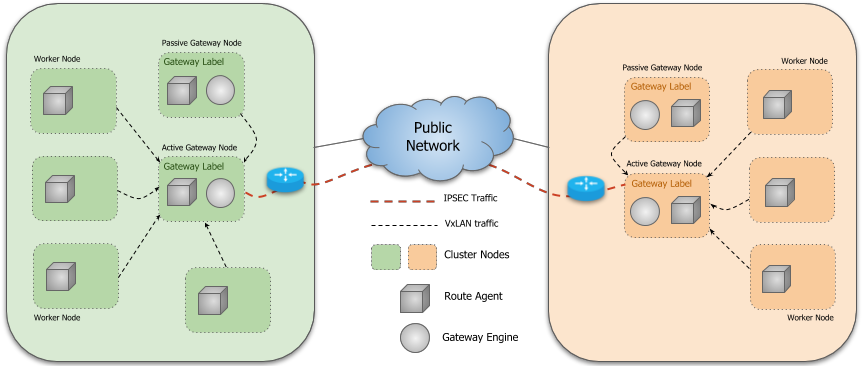

- Gateway Engine: manages the tunnels that connect to other clusters.

- Route Agent: routes cross-cluster traffic to the active Gateway Node.

- Service Discovery: Provides cross-cluster DNS service discovery.

- Globalnet (optional): Handles cluster interconnections with overlapping CIDRs.

- Submariner Operator: Responsible for installing Submariner components such as Broker, Gateway Engine, Route Agent, etc. in Kubernetes clusters.

1.1. Service Discovery

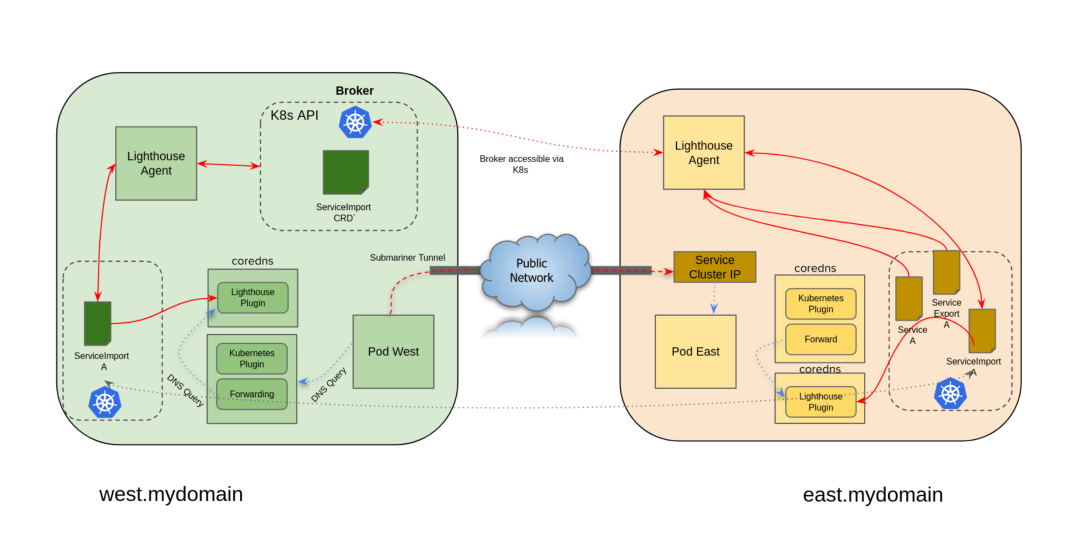

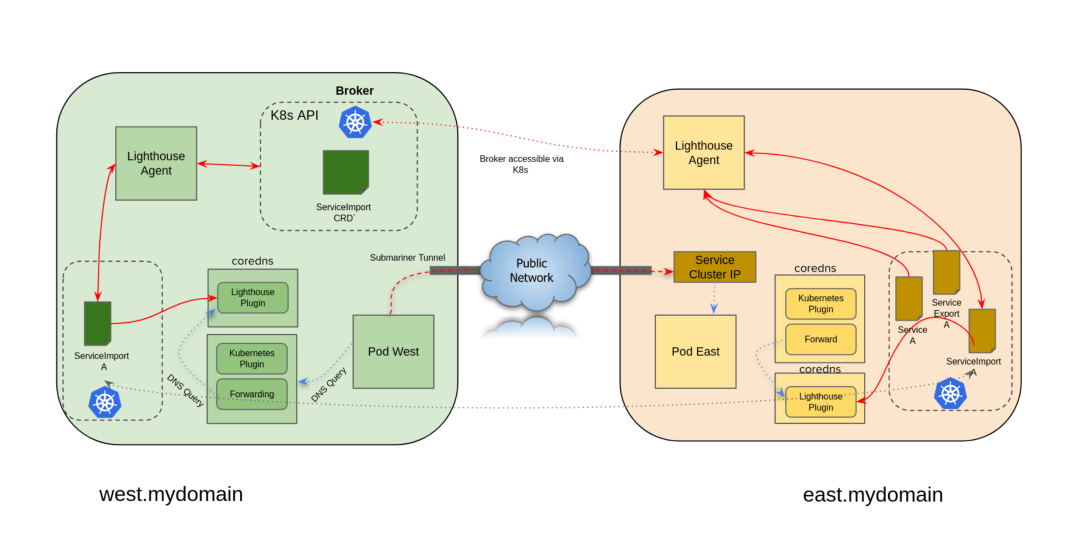

Cross-cluster DNS service discovery in Submariner is implemented by the following two components based on the specification of the Kubernetes Multi-Cluster Service APIs:

- Lighthouse Agent: accesses the API Server of the Broker cluster to exchange ServiceImport metadata with other clusters.

  - For each Service in the local cluster for which a ServiceExport has been created, the Agent creates the corresponding ServiceImport resource and exports it to the Broker for use by other clusters.

  - For ServiceImport resources exported to the Broker by other clusters, the Agent creates a copy in the local cluster.

- Lighthouse DNS Server:

  - Performs DNS resolution based on the ServiceImport resources.

  - CoreDNS is configured to send resolution requests for the clusterset.local domain to the Lighthouse DNS Server.

The MCS API is a specification defined by the Kubernetes community for cross-cluster service discovery and consists of two main CRDs, ServiceExport and ServiceImport.

- ServiceExport: defines the Service that is exposed (exported) to other clusters. The user creates it in the cluster where the Service to be exported is located, with the same name and Namespace as the Service.

```yaml
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  name: my-svc
  namespace: my-ns
```

- ServiceImport: when a service is exported, the controller implementing the MCS API automatically generates a corresponding ServiceImport resource in all clusters (including the cluster that exported the service).

```yaml
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceImport
metadata:
  name: my-svc
  namespace: my-ns
spec:
  ips:
  - 42.42.42.42 # IP address for cross-cluster access
  type: "ClusterSetIP"
  ports:
  - name: http
    protocol: TCP
    port: 80
  sessionAffinity: None
```
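A ServiceExport carries no spec of its own; it only has to match the name and Namespace of the Service it exports. As a minimal sketch of that rule, the Python helper below builds such a manifest as a dictionary. The function name `service_export_for` is hypothetical (it is not part of Submariner or any MCS API tooling), and the API group is assumed to be `multicluster.x-k8s.io/v1alpha1`, as in the cluster output shown later in this article.

```python
import json


def service_export_for(name, namespace):
    """Build a ServiceExport manifest that matches a Service's name and Namespace."""
    return {
        # Assumed API group/version; check the CRDs installed in your cluster.
        "apiVersion": "multicluster.x-k8s.io/v1alpha1",
        "kind": "ServiceExport",
        "metadata": {"name": name, "namespace": namespace},
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(service_export_for("my-svc", "my-ns"), indent=2))
```

The printed JSON could be piped to `kubectl apply -f -` in the cluster that owns the Service.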

1.2. Gateway Engine

The Gateway Engine is deployed in each cluster and is responsible for establishing tunnels to other clusters. The tunnels can be implemented by:

- IPsec, implemented using Libreswan. This is the current default setting.

- WireGuard, implemented using the wgctrl library.

- VXLAN, without encryption.

You can use the --cable-driver parameter of the subctl join command to set the tunnel type when joining a cluster.

The Gateway Engine is deployed as a DaemonSet and runs only on Nodes with the submariner.io/gateway=true label. When we join a cluster with the subctl join command and no Node has this label, we are prompted to select a Node as the Gateway Node.

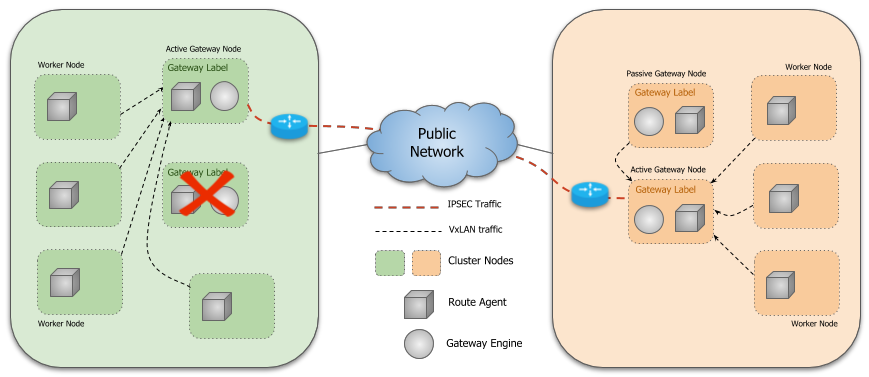

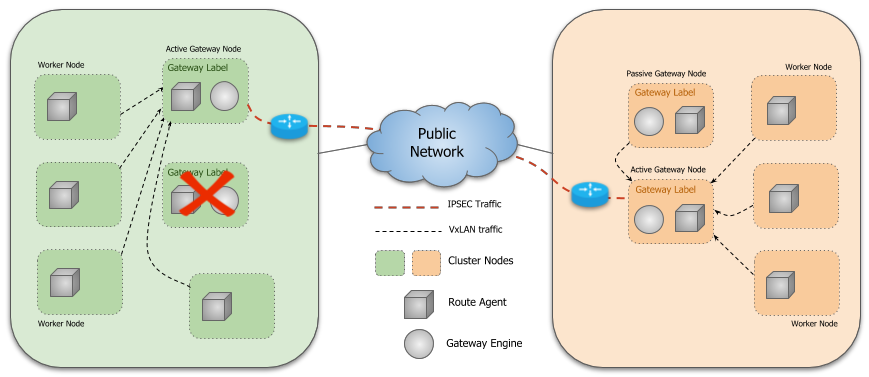

Submariner also supports running the Gateway Engine in active/passive high-availability mode, in which Gateway Engines are deployed on multiple Nodes. Only one Gateway Engine is active at a time to handle cross-cluster traffic. The active instance is determined by leader election, and the other instances wait in passive mode, ready to take over if the active instance fails.

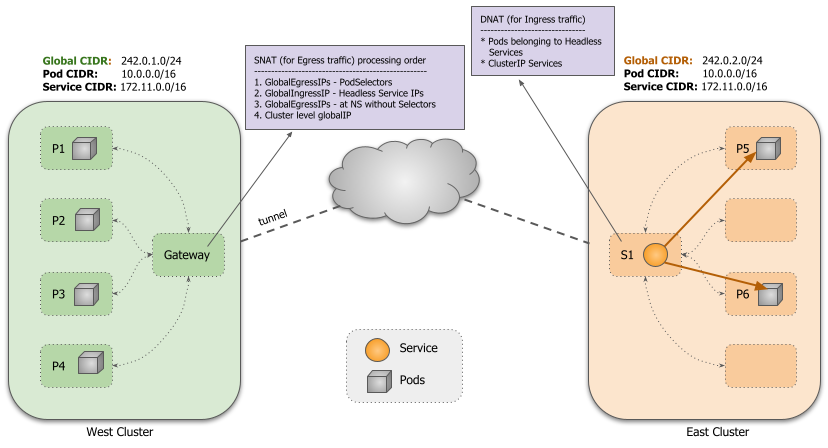

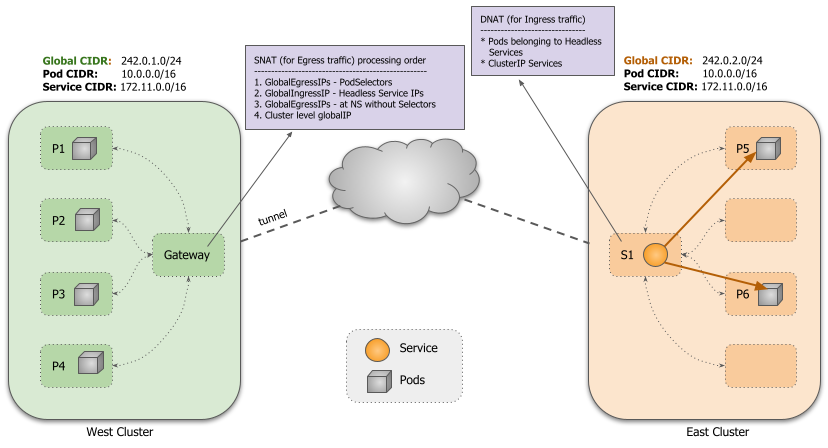

1.3. Globalnet

One of the highlights of Submariner is the support for CIDR overlap between clusters, which helps reduce the cost of network reconfiguration. For example, during deployment, some clusters may use default network segments, resulting in CIDR overlap. In this case, if subsequent changes to the cluster segments are required, there may be an impact on the operation of the cluster.

To support overlapping CIDRs between clusters, Submariner applies NAT to traffic entering and leaving each cluster, using addresses from a GlobalCIDR segment (242.0.0.0/8 by default); all address translation occurs on the active Gateway Node. You can specify the global GlobalCIDR range for all clusters with the --globalnet-cidr-range parameter when deploying the Broker with subctl deploy-broker, and you can specify the GlobalCIDR of an individual cluster with the --globalnet-cidr parameter when joining it with subctl join.

Exported ClusterIP type services are assigned a Global IP from GlobalCIDR for inbound traffic, and for Headless type services, a Global IP is assigned to each associated Pod for both inbound and outbound traffic.
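Whether Globalnet is needed depends on whether any cluster CIDRs actually overlap. The following is a small sketch, using only Python's standard `ipaddress` module, that reports overlapping pairs. The helper name `find_overlaps` is made up for illustration, and the sample CIDRs are the Pod subnets used for the test clusters in this article.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs):
    """Return sorted pairs of cluster names whose CIDRs overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


if __name__ == "__main__":
    # Distinct Pod subnets: Globalnet is not required.
    print(find_overlaps({"c1": "10.8.0.0/16", "c2": "10.9.0.0/16"}))
    # Identical Pod subnets: the clusters can only be connected with Globalnet.
    print(find_overlaps({"g1": "10.7.0.0/16", "g2": "10.7.0.0/16"}))
```

The same check applies to Service CIDRs; an empty result for both means the clusters can be connected without Globalnet.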

2. Environment Preparation

For this experiment, we used a virtual machine running Ubuntu 20.04 and started multiple Kubernetes clusters via Kind for testing.

```bash
root@seven-demo:~# hostnamectl
   Static hostname: seven-demo
         Icon name: computer-vm
           Chassis: vm
        Machine ID: f780bfec3c409135b11d1ceac73e2293
           Boot ID: e83e9a883800480f86d37189bdb09628
    Virtualization: kvm
  Operating System: Ubuntu 20.04.5 LTS
            Kernel: Linux 5.15.0-1030-gcp
      Architecture: x86-64
```

Install the relevant software and command line tools.

```bash
# Install Docker, depending on the operating system https://docs.docker.com/engine/install/
sudo apt-get update
sudo apt-get install -y \
    ca-certificates \
    curl \
    gnupg
sudo mkdir -m 0755 -p /etc/apt/keyrings
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg
echo \
  "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
  "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update
sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# Installing Kind
curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.18.0/kind-linux-amd64
chmod +x ./kind
sudo mv ./kind /usr/local/bin/kind

# Installing kubectl
curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
chmod +x kubectl
mv kubectl /usr/local/bin
apt install -y bash-completion
echo 'source <(kubectl completion bash)' >>~/.bashrc

# Installing subctl
curl -Lo subctl-release-0.14-linux-amd64.tar.xz https://github.com/submariner-io/subctl/releases/download/subctl-release-0.14/subctl-release-0.14-linux-amd64.tar.xz
tar -xf subctl-release-0.14-linux-amd64.tar.xz
mv subctl-release-0.14/subctl-release-0.14-linux-amd64 /usr/local/bin/subctl
chmod +x /usr/local/bin/subctl
```

3. Quick Start

3.1. Creating a cluster

Create three clusters using Kind, replacing SERVER_IP with your own server's IP. By default, Kind sets the Kubernetes API server address to the local loopback address (127.0.0.1) with a random port, which is fine for interacting with the cluster from the local machine. In this experiment, however, multiple Kind clusters need to communicate with each other, so we change Kind's apiServerAddress to the host's IP.

```bash
# Replace with server IP
export SERVER_IP="10.138.0.11"

kind create cluster --config - <<EOF
kind: Cluster
name: broker
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
networking:
  apiServerAddress: $SERVER_IP
  podSubnet: "10.7.0.0/16"
  serviceSubnet: "10.77.0.0/16"
EOF

kind create cluster --config - <<EOF
kind: Cluster
name: c1
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
networking:
  apiServerAddress: $SERVER_IP
  podSubnet: "10.8.0.0/16"
  serviceSubnet: "10.88.0.0/16"
EOF

kind create cluster --config - <<EOF
kind: Cluster
name: c2
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
networking:
  apiServerAddress: $SERVER_IP
  podSubnet: "10.9.0.0/16"
  serviceSubnet: "10.99.0.0/16"
EOF
```

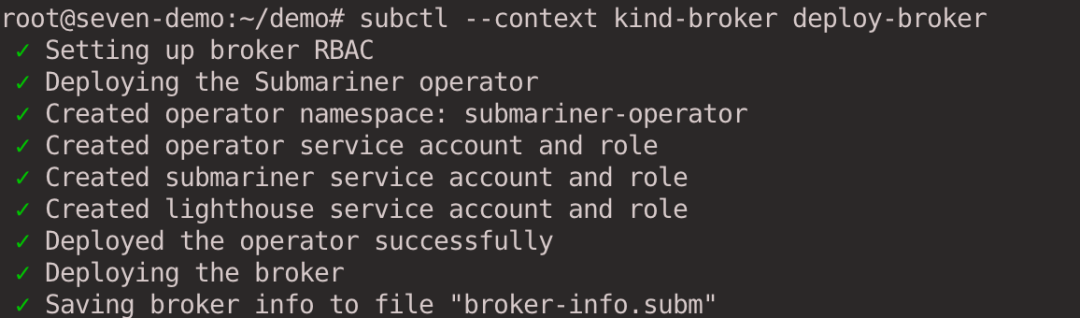

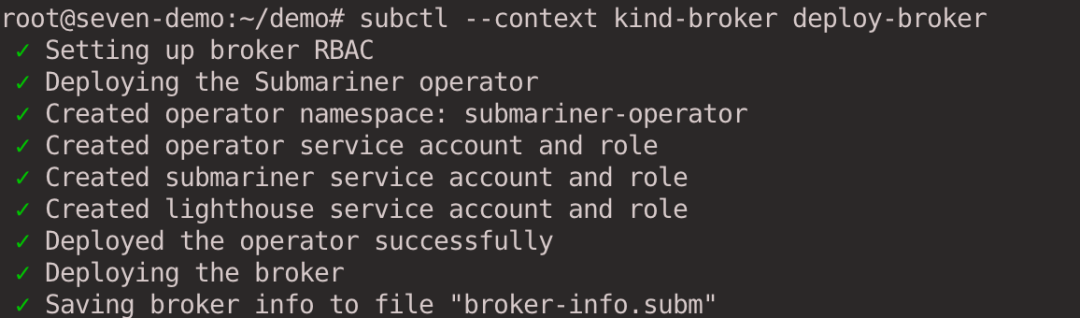

3.2. Deploying a Broker

In this experiment, we dedicate one cluster to the Broker role; the Broker can be either a dedicated cluster or one of the connected clusters. Execute the subctl deploy-broker command to deploy the Broker, which consists only of a set of CRDs; no Pods or Services are deployed.

```bash
subctl --context kind-broker deploy-broker
```

After deployment, a broker-info.subm file is generated. It is Base64-encoded and contains the address of the Broker cluster's API Server, along with certificate information and the IPsec pre-shared key. Decoded, the file looks like this:


{

"brokerURL": "https://10.138.0.11:45681",

"ClientToken": {

"metadata": {

"name": "submariner-k8s-broker-admin-token-f7b62",

"namespace": "submariner-k8s-broker",

"uid": "3f949d19-4f42-43d6-af1c-382b53f83d8a",

"resourceVersion": "688",

"creationTimestamp": "2023-04-05T02:50:02Z",

"annotations": {

"kubernetes.io/created-by": "subctl",

"kubernetes.io/service-account.name": "submariner-k8s-broker-admin",

"kubernetes.io/service-account.uid": "da6eeba1-b707-4d30-8e1e-e414e9eae817"

},

"managedFields": [

{

"manager": "kube-controller-manager",

"operation": "Update",

"apiVersion": "v1",

"time": "2023-04-05T02:50:02Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:data": {

".": {},

"f:ca.crt": {},

"f:namespace": {},

"f:token": {}

},

"f:metadata": {

"f:annotations": {

"f:kubernetes.io/service-account.uid": {}

}

}

}

},

{

"manager": "subctl",

"operation": "Update",

"apiVersion": "v1",

"time": "2023-04-05T02:50:02Z",

"fieldsType": "FieldsV1",

"fieldsV1": {

"f:metadata": {

"f:annotations": {

".": {},

"f:kubernetes.io/created-by": {},

"f:kubernetes.io/service-account.name": {}

}

},

"f:type": {}

}

}

]

},

"data": {

"ca.crt": "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJek1EUXdOVEF5TkRVMU1Wb1hEVE16TURRd01qQXlORFUxTVZvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBSytXCmIzb0h1ZEJlbU5tSWFBNXQrWmI3TFhKNXRLWDB6QVc5a0tudjQzaGpoTE84NHlSaEpyY3ZSK29QVnNaUUJIclkKc01tRmx3aVltbU5ORzA4c2NLMTlyLzV0VkdFR2hCckdML3VKcTIybXZtYi80aHdwdmRTQjN0UDlkU2RzYUFyRwpYYllwOE4vUmlheUJvbTBJVy9aQjNvZ0MwK0tNcWM0NE1MYnBkZXViWnNSckErN2pwTElYczE3OGgxb25kdGNrClIrYlRnNGpjeS92NTkrbGJjamZSeTczbUllMm9DbVFIbE1XUFpSTkMveDhaTktGekl6UHc4SmZSOERjWk5Xc1YKa1NBUVNVUkpnTEhBbjY5MlhDSEsybmJuN21pcjYvYVZzVVpyTGdVNC9zcWg3QVFBdDFGQkk3NDRpcithTjVxSwpJRnRJenkxU3p2ZEpwMThza3EwQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZFQUhFbndHditwTXNVcHVQRXNqbkQwTEgvSFpNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBQTFGckk1cGR1VTFsQzluVldNNwowYlc2VFRXdzYwUTlFVWdsRzc4bkRFZkNKb3ovY2xWclFNWGZrc2Zjc1VvcHZsaE5yWFlpbmd0UEE4aEMrTnRJCmdPZElDZDJGaWFOTjRCYkt3a1NmRkQvbmhjWDU1WmQ0UzN1SzZqb2JWVHIzaXVJRVhIdHg0WVIyS1ZuZitTMDUKQTFtbXdzSG1ZbkhtWEllOUEyL3hKdVhtSnNybWljWTlhMXhtSXVyYzhNalBsa1pZWVU1OFBvZHJFNi9XcnBaawpBbW9qcERIWWIrbnZxa0FuaG9hYUV3b2FEVGxYRjY0M3lVLy9MZE4wTmw5MWkvSHNwQ2tZdVFrQjJmQXNkSGNaCkMrdzQ4WVhYT21pSzZXcmJGYVJnaEVKdjB6UjdsZk50UEVZVWJHWEFxV0ZlSnFTdnM5aUYwbFV1NzZDNkt3YWIKbmdnPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==",

"namespace": "c3VibWFyaW5lci1rOHMtYnJva2Vy",

"token": "ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklqaHZWVnBuZVVoZk1uVTFjSEJxU1hOdE1UTk1NbUY0TFRaSlIyVlZVRGd4VjI1dmMyNXBNMjFYZFhjaWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUp6ZFdKdFlYSnBibVZ5TFdzNGN5MWljbTlyWlhJaUxDSnJkV0psY201bGRHVnpMbWx2TDNObGNuWnBZMlZoWTJOdmRXNTBMM05sWTNKbGRDNXVZVzFsSWpvaWMzVmliV0Z5YVc1bGNpMXJPSE10WW5KdmEyVnlMV0ZrYldsdUxYUnZhMlZ1TFdZM1lqWXlJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5elpYSjJhV05sTFdGalkyOTFiblF1Ym1GdFpTSTZJbk4xWW0xaGNtbHVaWEl0YXpoekxXSnliMnRsY2kxaFpHMXBiaUlzSW10MVltVnlibVYwWlhNdWFXOHZjMlZ5ZG1salpXRmpZMjkxYm5RdmMyVnlkbWxqWlMxaFkyTnZkVzUwTG5WcFpDSTZJbVJoTm1WbFltRXhMV0kzTURjdE5HUXpNQzA0WlRGbExXVTBNVFJsT1dWaFpUZ3hOeUlzSW5OMVlpSTZJbk41YzNSbGJUcHpaWEoyYVdObFlXTmpiM1Z1ZERwemRXSnRZWEpwYm1WeUxXczRjeTFpY205clpYSTZjM1ZpYldGeWFXNWxjaTFyT0hNdFluSnZhMlZ5TFdGa2JXbHVJbjAub1JHM2d6Wno4MGVYQXk5YlZ5b1V2NmoyTERvdFJiNlJyOTF4d0ZiTDMwdFNJY3dnS3FYd3NZbVV1THhtcFdBb2M5LWRSMldHY0ZLYklORlZmUUttdVJMY2JsenlTUFFVMlB3WVVwN1oyNnlxYXFOMG1UQ3ZNWWxSeHp6cWY3LXlXUm8yNE9pWS1nMnNmNmNrRzRPMkdwa2MwTlNoOWRTUGY4dXJTbjZSVGJwbjFtcFZjTy1IQjJWeU5hTE9EdmtWS3RLVFJfVS1ZRGc1NzVtczM0OXM0X2xMZjljZjlvcjFaQXVvXzcyN0E5U0VvZ0JkN3BaSndwb0FEUHZRb1NGR0VLQWZYYTFXXzJWVE5PYXE4cUQxOENVbXVFRUFxMmtoNElBN0d5LVRGdUV2Q0JYUVlzRHYzUFJQTjZpOGlKSFBLVUN1WVNONS1NT3lGX19aNS1WdlhR"

},

"type": "kubernetes.io/service-account-token"

},

"IPSecPSK": {

"metadata": {

"name": "submariner-ipsec-psk",

"creationTimestamp": null

},

"data": {

"psk": "NL7dUK+RagDKPQZZj+7Q7wComj0/wLFbfvnHe12hHxR8+d/FnkEqXfmh8JMzLo6h"

}

},

"ServiceDiscovery": true,

"Components": [

"service-discovery",

"connectivity"

],

"CustomDomains": null

}

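Because broker-info.subm is just Base64-encoded JSON, it can be inspected with a few lines of Python. The sketch below uses only the standard library; the helper names are hypothetical, and the sample input is a stand-in built in the script rather than a real file. The second helper decodes fields under `data`, which are Base64-encoded once more, as Kubernetes Secret values are.

```python
import base64
import json


def decode_broker_info(encoded):
    """Decode the Base64 text of a broker-info.subm file into a dictionary."""
    return json.loads(base64.b64decode(encoded))


def decode_secret_field(value):
    """Decode one Base64-encoded Secret data field (for example "namespace")."""
    return base64.b64decode(value).decode("utf-8")


if __name__ == "__main__":
    # Stand-in for the file contents; a real file would be read with
    # open("broker-info.subm").read().
    sample = base64.b64encode(
        json.dumps({"brokerURL": "https://10.138.0.11:45681"}).encode()
    ).decode()
    print(decode_broker_info(sample)["brokerURL"])
    # The "namespace" value taken from the JSON shown above.
    print(decode_secret_field("c3VibWFyaW5lci1rOHMtYnJva2Vy"))
```

Since the file embeds a service account token and the IPsec pre-shared key, it should be handled like any other credential.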

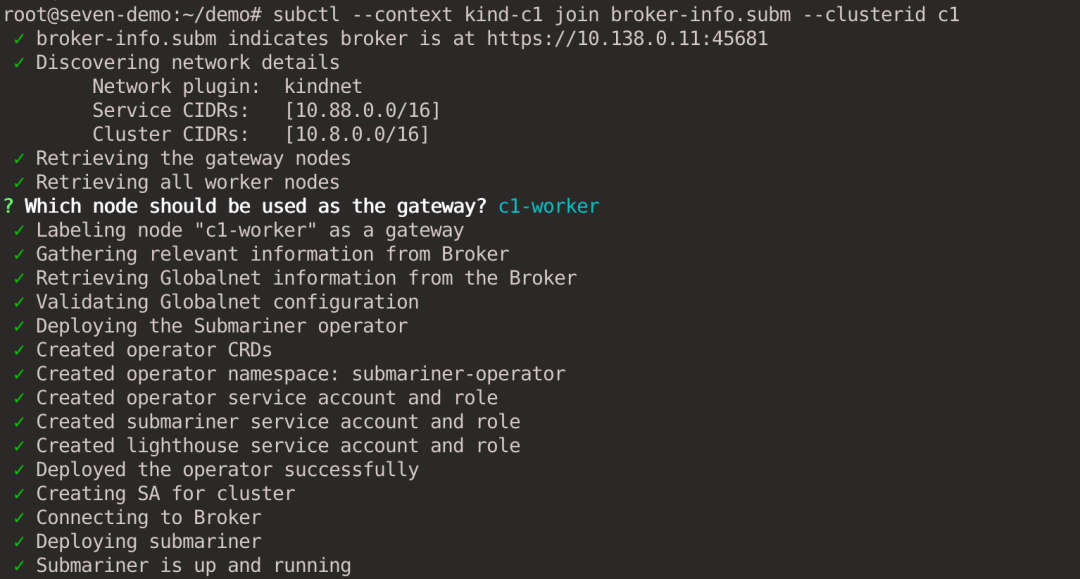

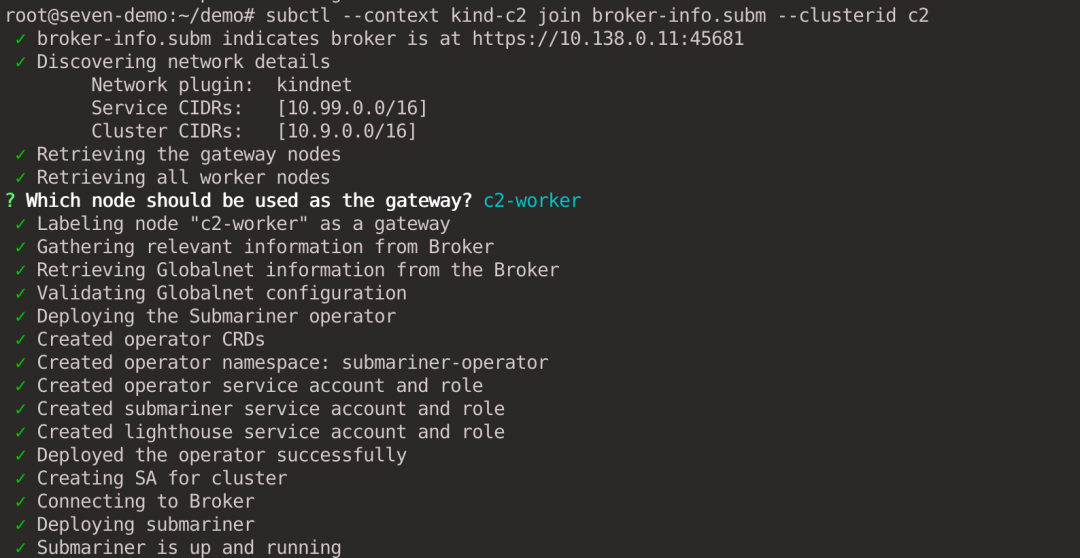

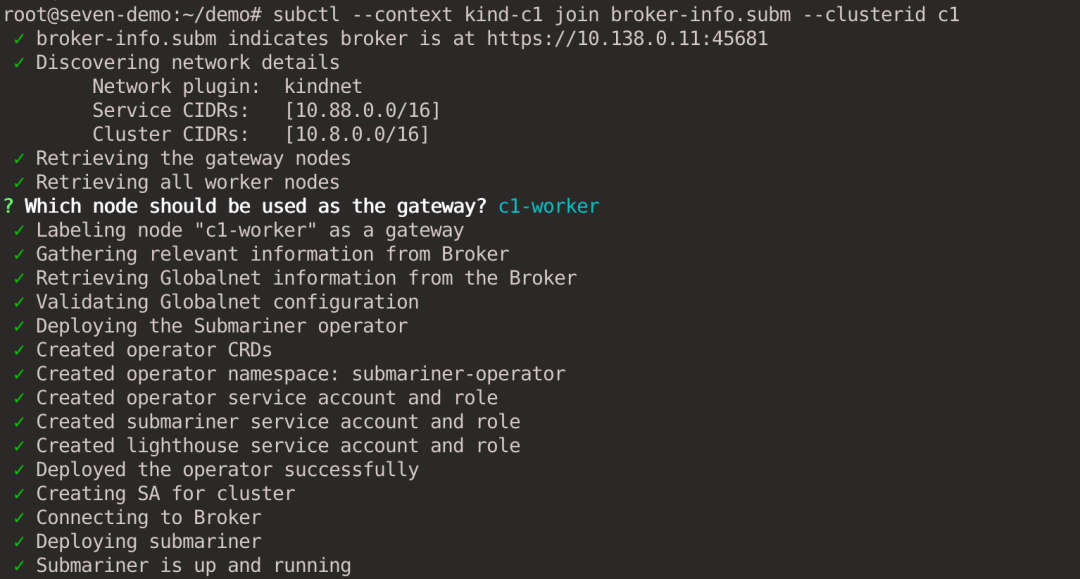

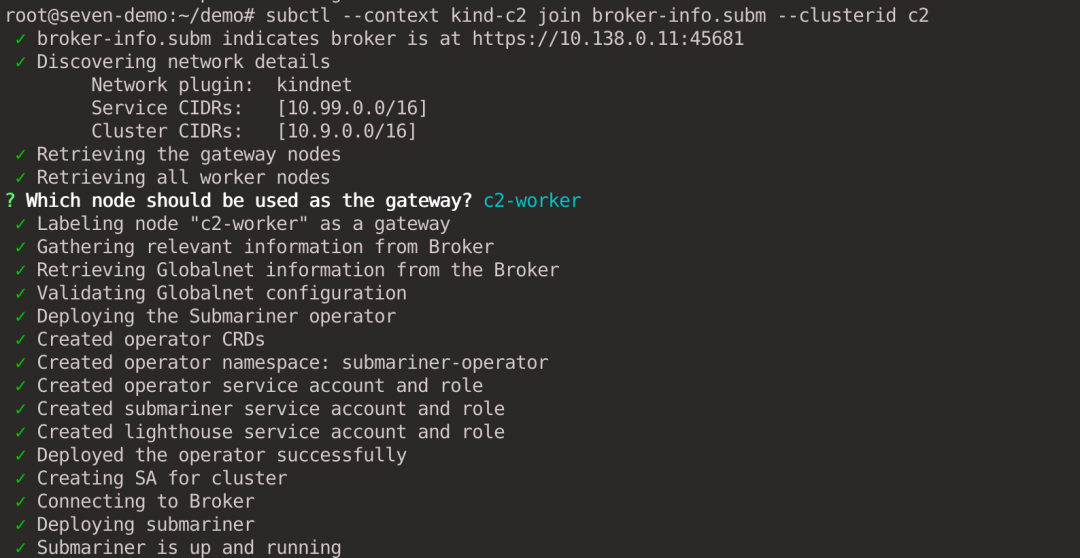

3.3. Joining clusters c1 and c2

Execute the subctl join command to join clusters c1 and c2 to the Broker cluster. Use the --clusterid parameter to specify the cluster ID, which must be unique for each cluster. Provide the broker-info.subm file generated in the previous step for cluster registration.

```bash
subctl --context kind-c1 join broker-info.subm --clusterid c1
subctl --context kind-c2 join broker-info.subm --clusterid c2
```

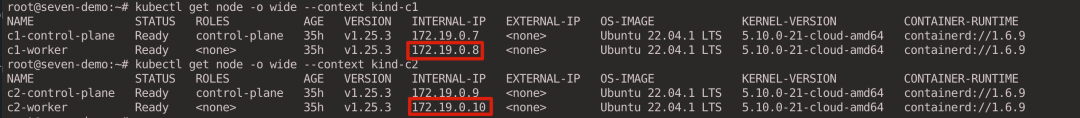

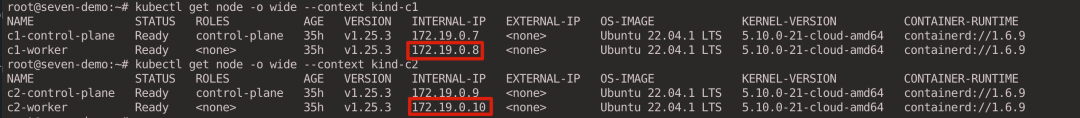

We are prompted to select a node as the Gateway Node. For the c1 cluster we select the c1-worker node as the Gateway, and for the c2 cluster the c2-worker node.

The IP addresses of the two Gateway Nodes are shown below; they are used to establish the tunnel between the two clusters.

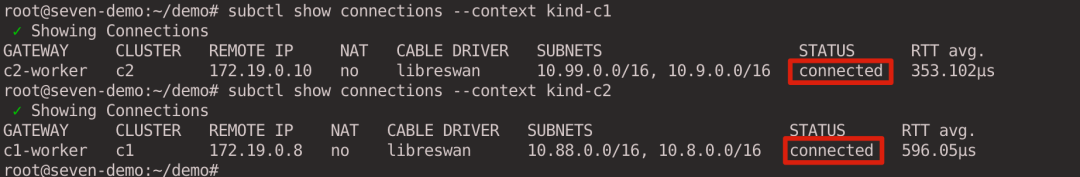

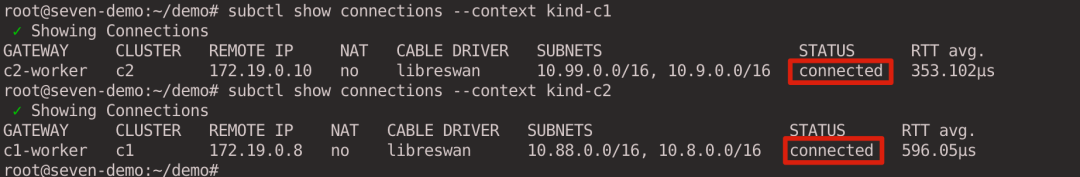

3.4. Viewing Cluster Connections

After waiting for the relevant components of Submariner in both c1 and c2 clusters to run successfully, execute the following command to view the inter-cluster connectivity.

```bash
subctl show connections --context kind-c1
subctl show connections --context kind-c2
```

You can see the connections established by cluster c1 and c2 with each other.

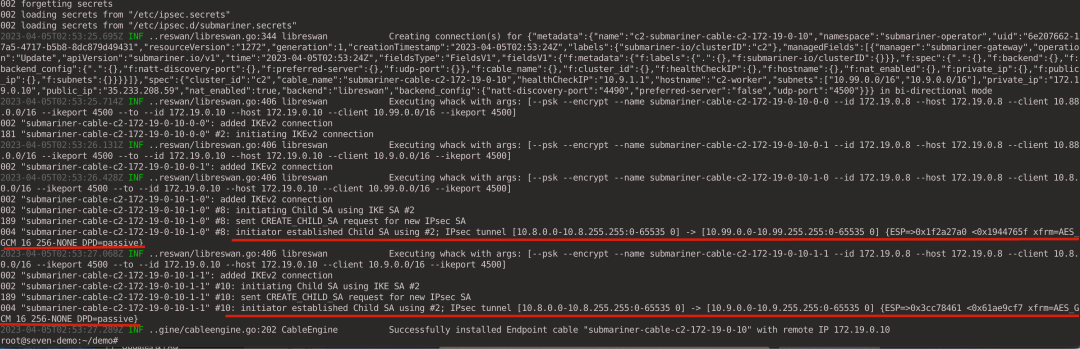

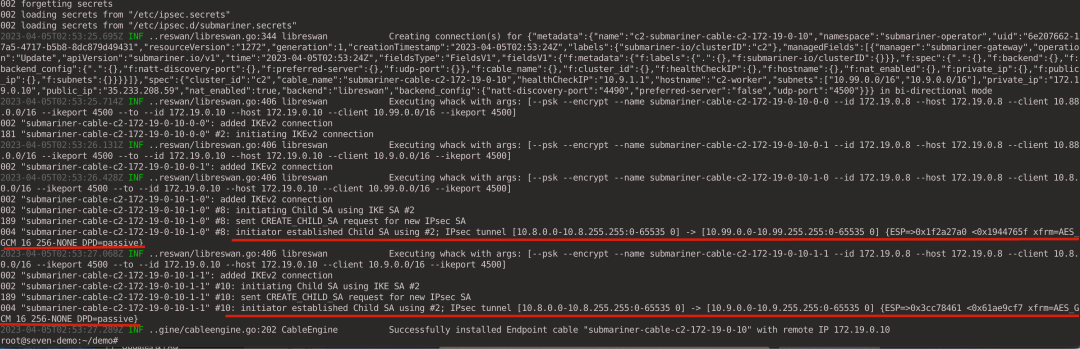

Looking at the c1 gateway logs, you can see that an IPsec tunnel was successfully established with the c2 cluster.

3.5. Testing cross-cluster communication

At this point, we have successfully established a cross-cluster connection between clusters c1 and c2. Next, we create a service and demonstrate how to export it so that other clusters can access it.

In the following example, we create the relevant resources in the sample Namespace. Note that the sample Namespace must exist in both clusters for service discovery to work properly.

```bash
kubectl --context kind-c2 create namespace sample
# The sample Namespace must also exist on the c1 cluster, otherwise the Lighthouse agent will fail to create EndpointSlices
kubectl --context kind-c1 create namespace sample
```

3.5.1. ClusterIP Service

First, test a ClusterIP Service. Execute the following command to create a service in the c2 cluster. In this experiment, whereami is an HTTP server written in Go. It receives Kubernetes-related information (Pod name, Pod Namespace, Node) through container environment variables injected via the Downward API and outputs it when a request is received. In addition, whereami prints the IP address and port of the requesting party.

```bash
kubectl --context kind-c2 apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whereami
  namespace: sample
spec:
  replicas: 3
  selector:
    matchLabels:
      app: whereami
  template:
    metadata:
      labels:
        app: whereami
    spec:
      containers:
      - name: whereami
        image: cr7258/whereami:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: whereami-cs
  namespace: sample
spec:
  selector:
    app: whereami
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF
```

View services in the c2 cluster.

```bash
root@seven-demo:~# kubectl --context kind-c2 get pod -n sample -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP          NODE               NOMINATED NODE   READINESS GATES
whereami-754776cdc9-28kgd   1/1     Running   0          19h   10.9.1.18   c2-control-plane   <none>           <none>
whereami-754776cdc9-8ccmc   1/1     Running   0          19h   10.9.1.17   c2-control-plane   <none>           <none>
whereami-754776cdc9-dlp55   1/1     Running   0          19h   10.9.1.16   c2-control-plane   <none>           <none>
root@seven-demo:~# kubectl --context kind-c2 get svc -n sample -o wide
NAME          TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE   SELECTOR
whereami-cs   ClusterIP   10.99.2.201   <none>        80/TCP    19h   app=whereami
```

Use the subctl export command in the c2 cluster to export the service.

```bash
subctl --context kind-c2 export service --namespace sample whereami-cs
```

This command creates a ServiceExport resource with the same name and Namespace as the Service.

```yaml
root@seven-demo:~# kubectl get serviceexports --context kind-c2 -n sample whereami-cs -o yaml
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  creationTimestamp: "2023-04-06T13:04:15Z"
  generation: 1
  name: whereami-cs
  namespace: sample
  resourceVersion: "327707"
  uid: d1da8953-3fa5-4635-a8bb-6de4cd3c45a9
status:
  conditions:
  - lastTransitionTime: "2023-04-06T13:04:15Z"
    message: ""
    reason: ""
    status: "True"
    type: Valid
  - lastTransitionTime: "2023-04-06T13:04:15Z"
    message: ServiceImport was successfully synced to the broker
    reason: ""
    status: "True"
    type: Synced
```

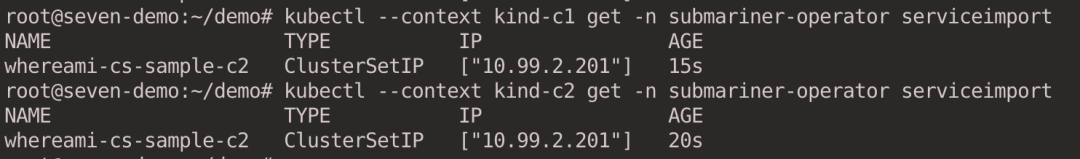

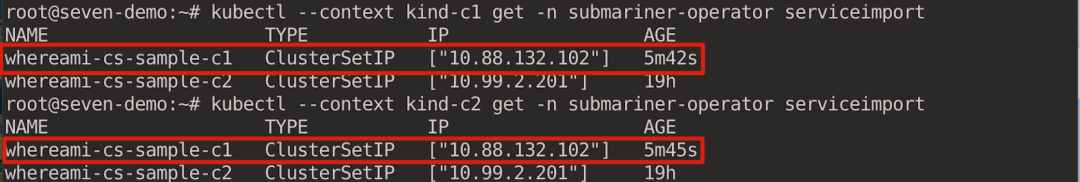

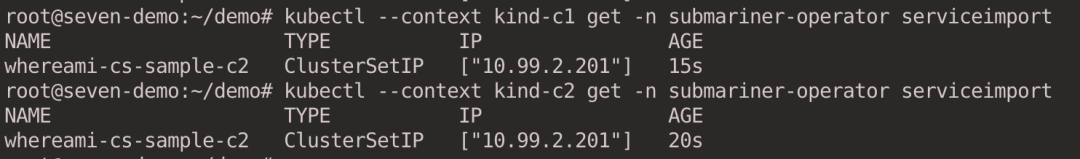

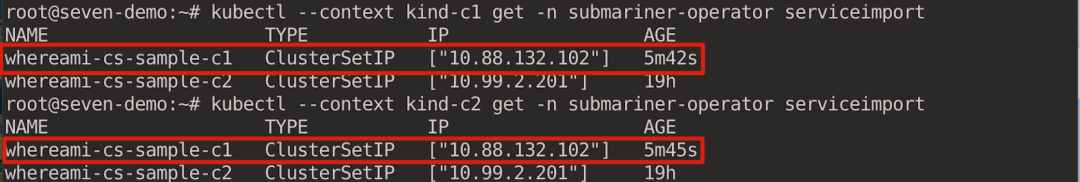

Submariner automatically creates ServiceImport resources in clusters c1 and c2, with the IP set to the Service's ClusterIP address.

```bash
kubectl --context kind-c1 get -n submariner-operator serviceimport
kubectl --context kind-c2 get -n submariner-operator serviceimport
```

Create a client pod in cluster c1 to access the whereami service in cluster c2.

```bash
kubectl --context kind-c1 run client --image=cr7258/nettool:v1
kubectl --context kind-c1 exec -it client -- bash
```

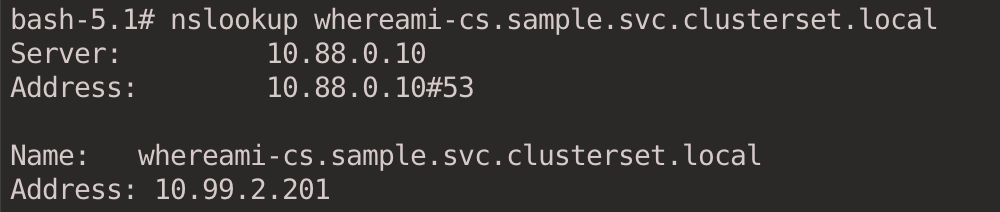

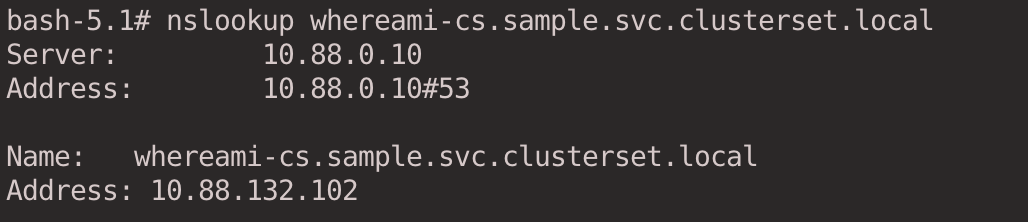

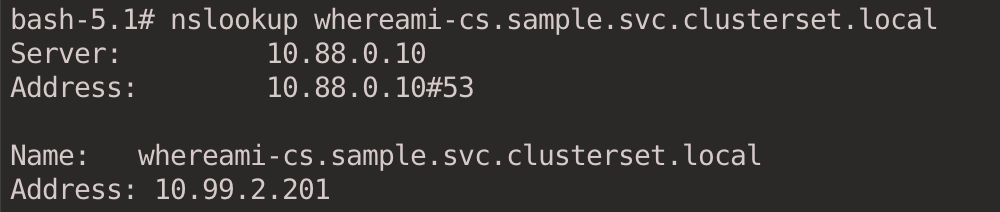

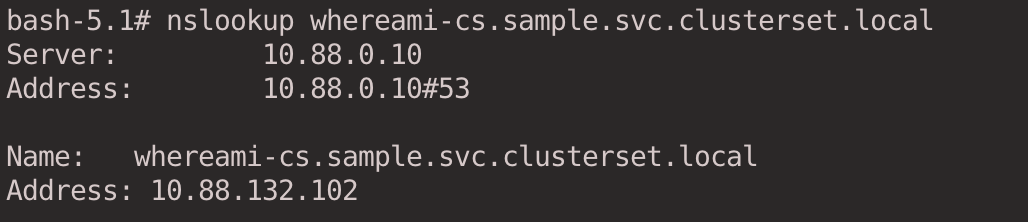

Try DNS resolution first. A ClusterIP Service can be accessed using a name of the form <svc-name>.<namespace>.svc.clusterset.local.

```bash
nslookup whereami-cs.sample.svc.clusterset.local
```

The returned IP is the ClusterIP of the Service in the c2 cluster.

Looking at the CoreDNS configuration, we can see that the Submariner Operator has modified it to forward the clusterset.local domain, which is used for cross-cluster communication, to the Lighthouse DNS server for resolution.

```yaml
root@seven-demo:~# kubectl get cm -n kube-system coredns -oyaml
apiVersion: v1
data:
  Corefile: |+
    #lighthouse-start AUTO-GENERATED SECTION. DO NOT EDIT
    clusterset.local:53 {
        forward . 10.88.78.89
    }
    #lighthouse-end
    .:53 {
        errors
        health {
           lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
           pods insecure
           fallthrough in-addr.arpa ip6.arpa
           ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
           max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }

kind: ConfigMap
metadata:
  creationTimestamp: "2023-04-05T02:47:34Z"
  name: coredns
  namespace: kube-system
  resourceVersion: "1211"
  uid: 698f20a5-83ea-4a3e-8a1e-8b9438a6b3f8
```
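The Corefile has two server blocks, and a query is handled by the block whose zone is the most specific match for the queried name. The function below is a simplified illustration of that selection, not CoreDNS code; `pick_zone` is a hypothetical name, and the zones are the two that appear in the configuration above.

```python
def pick_zone(qname, zones=("clusterset.local", ".")):
    """Return the most specific zone that contains qname, or None."""
    name = qname.rstrip(".").lower()
    best = None
    for zone in zones:
        suffix = zone.strip(".").lower()  # "" stands for the root zone "."
        # Match on whole labels so "notclusterset.local" is not confused
        # with "clusterset.local".
        matches = suffix == "" or name == suffix or name.endswith("." + suffix)
        if matches and (best is None or len(suffix) > len(best.strip("."))):
            best = zone
    return best


if __name__ == "__main__":
    for query in (
        "whereami-cs.sample.svc.clusterset.local",
        "kubernetes.default.svc.cluster.local",
    ):
        print(query, "->", pick_zone(query))
```

Names under clusterset.local therefore go to the forward target in the first block (the Lighthouse DNS server), while everything else, including cluster.local, stays with the default block.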

Submariner uses the following logic to perform service discovery across the cluster set:

- If the exported service is not available in the local cluster, Lighthouse DNS returns the IP address of the ClusterIP service from one of the remote clusters from which the service was exported.

- If the exported service is available in the local cluster, Lighthouse DNS always returns the IP address of the local ClusterIP service.

- If multiple clusters export services with the same name from the same namespace, Lighthouse DNS load-balances between the clusters in round-robin fashion.

- You can access the service in a specific cluster by prefixing the DNS query with the cluster ID: <cluster-id>.<svc-name>.<namespace>.svc.clusterset.local.
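The rules above can be sketched as a small resolver. This is a simplified model written for illustration, not Lighthouse's implementation: the class name `ClusterSetResolver` is hypothetical, and round-robin is modeled as cycling through the exporting clusters in name order. The IP addresses are the ClusterIPs of whereami-cs in c1 and c2 from this article.

```python
from itertools import count


class ClusterSetResolver:
    """Toy model of the resolution rules for one exported ClusterIP service."""

    def __init__(self, local_cluster, exports):
        self.local_cluster = local_cluster
        self.exports = dict(exports)  # cluster ID -> ClusterIP
        self._counter = count()

    def resolve(self, cluster_id=None):
        # A <cluster-id> prefix pins the answer to that cluster.
        if cluster_id is not None:
            return self.exports[cluster_id]
        # A local export always wins.
        if self.local_cluster in self.exports:
            return self.exports[self.local_cluster]
        # Otherwise rotate through the remote clusters that export the service.
        remotes = sorted(self.exports)
        if not remotes:
            raise LookupError("service is not exported by any cluster")
        chosen = remotes[next(self._counter) % len(remotes)]
        return self.exports[chosen]


if __name__ == "__main__":
    exports = {"c1": "10.88.132.102", "c2": "10.99.2.201"}
    print(ClusterSetResolver("c1", exports).resolve())      # query from c1
    print(ClusterSetResolver("c1", exports).resolve("c2"))  # c2.<svc>... from c1
```

A client in a cluster that exports the service itself never reaches a remote copy unless it uses the cluster ID prefix.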

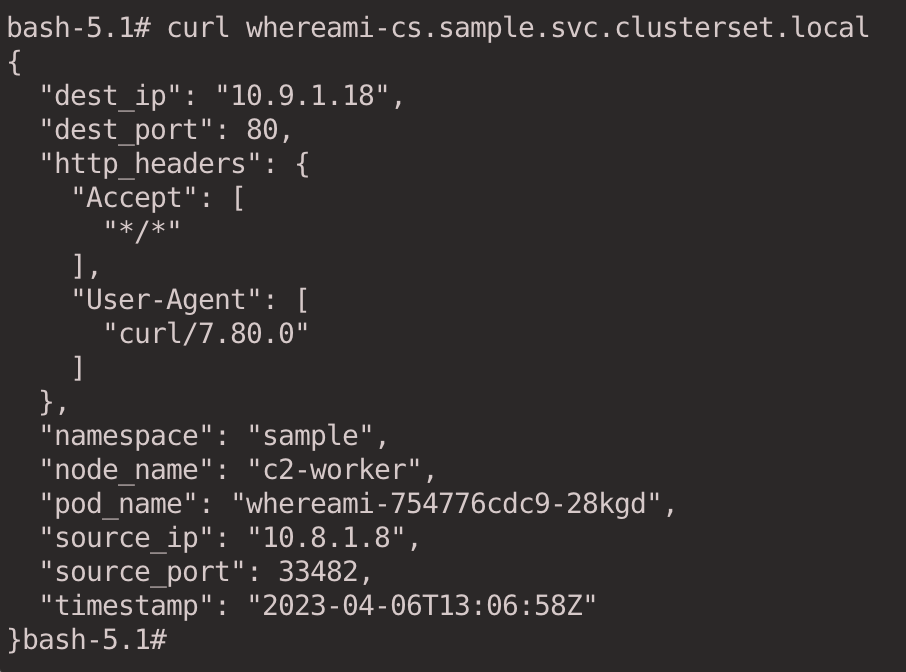

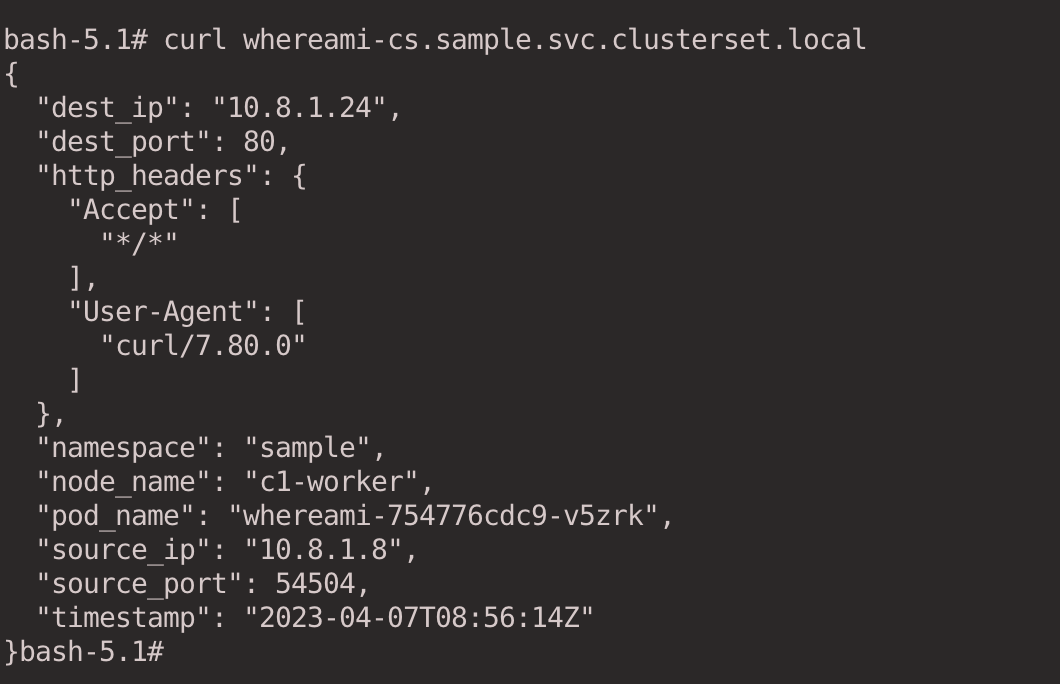

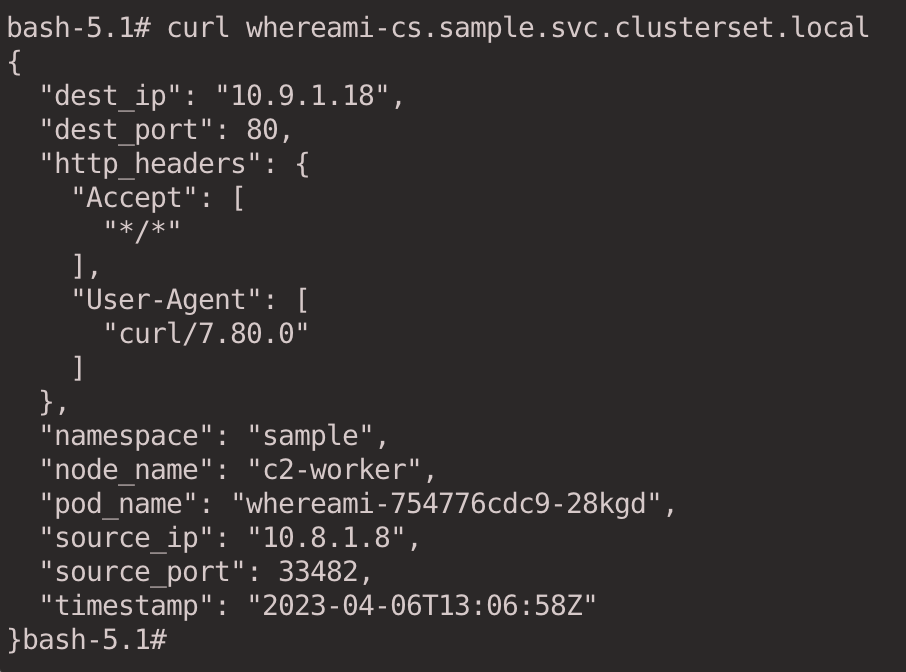

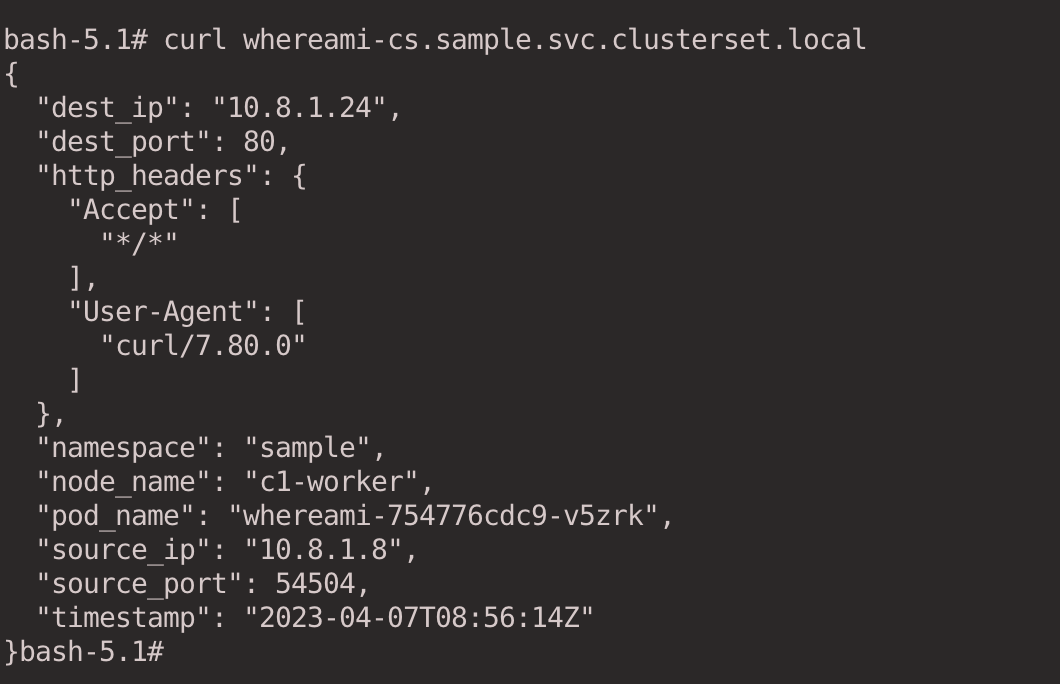

Initiate an HTTP request via the curl command.

```bash
curl whereami-cs.sample.svc.clusterset.local
```

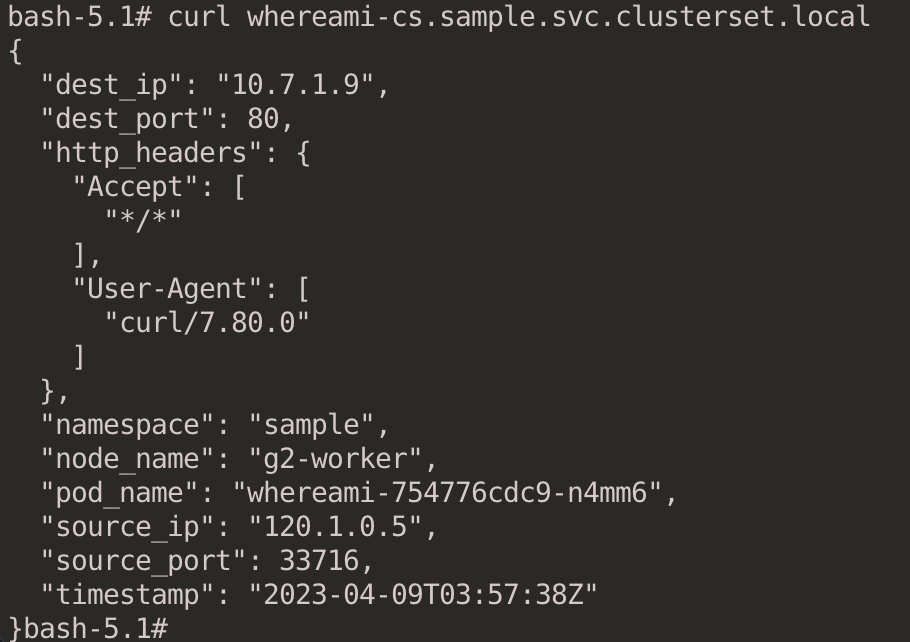

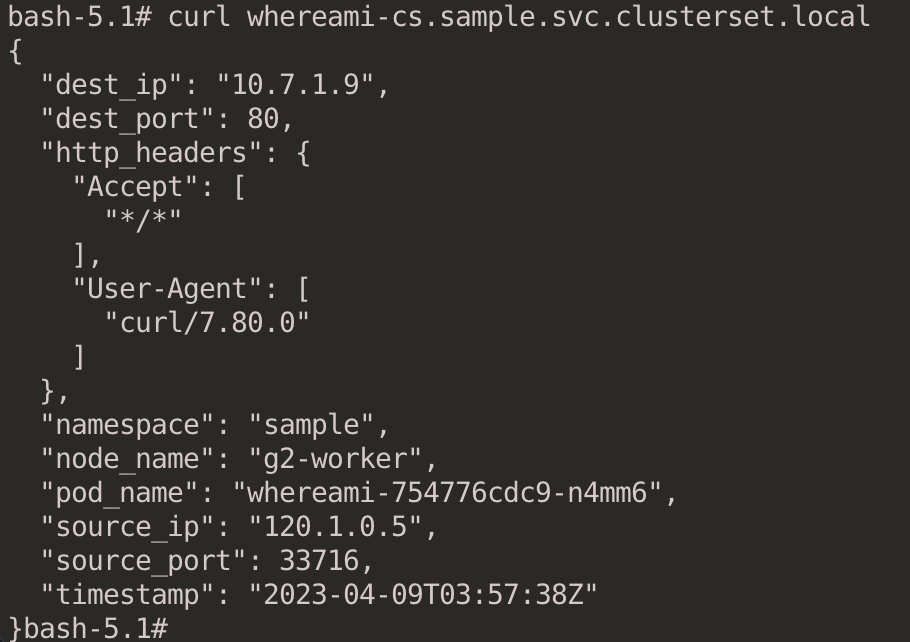

The result is as follows. We can confirm that the Pod is in the c2 cluster based on the node_name field in the output.

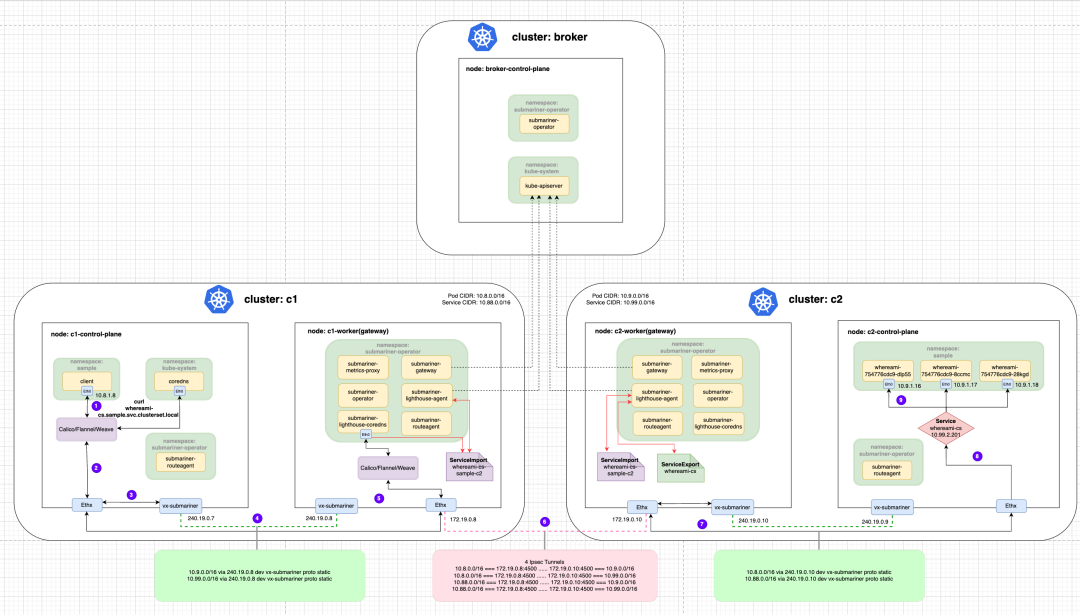

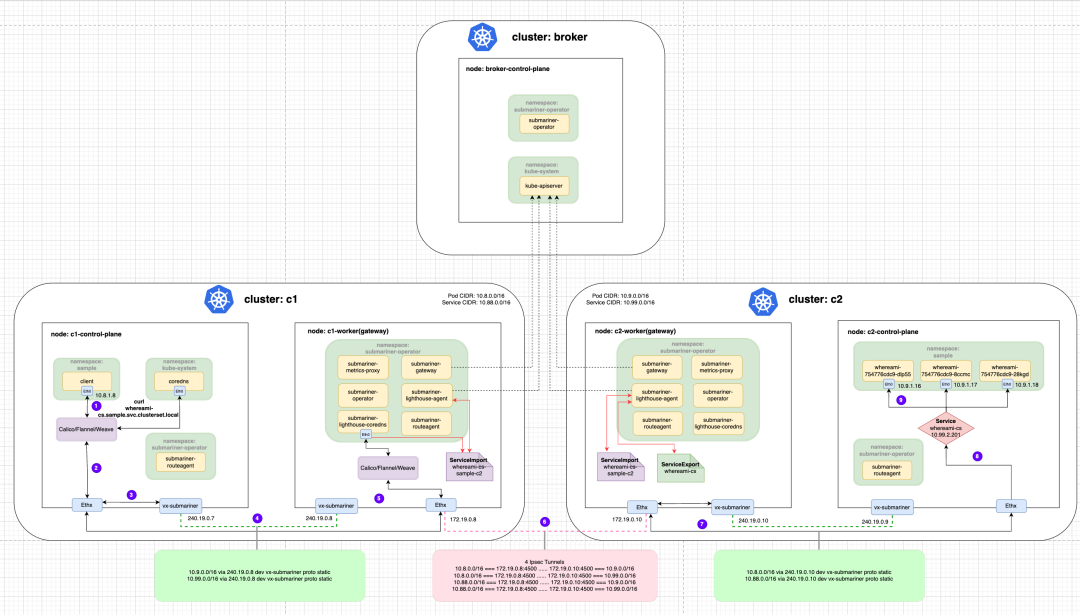

Here is a brief explanation of the traffic path, with reference to the diagram. Traffic from the client Pod in the c1 cluster first passes through the veth pair to the root network namespace of its Node, and is then sent to the Gateway Node (c1-worker) through the vx-submariner VXLAN tunnel set up by the Submariner Route Agent. From there it travels through the IPsec tunnel connecting the c1 and c2 clusters. The Gateway Node of the c2 cluster (c2-worker) receives the traffic and forwards it to a back-end whereami Pod through the iptables rules for the Service (DNAT based on the ClusterIP).

Next, we create the same service in the c1 cluster.

```bash
kubectl --context kind-c1 apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whereami
  namespace: sample
spec:
  replicas: 3
  selector:
    matchLabels:
      app: whereami
  template:
    metadata:
      labels:
        app: whereami
    spec:
      containers:
      - name: whereami
        image: cr7258/whereami:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: whereami-cs
  namespace: sample
spec:
  selector:
    app: whereami
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
EOF
```

View the service on the c1 cluster.

```bash
root@seven-demo:~# kubectl --context kind-c1 get pod -n sample -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP          NODE        NOMINATED NODE   READINESS GATES
whereami-754776cdc9-hq4m2   1/1     Running   0          45s   10.8.1.25   c1-worker   <none>           <none>
whereami-754776cdc9-rt84w   1/1     Running   0          45s   10.8.1.23   c1-worker   <none>           <none>
whereami-754776cdc9-v5zrk   1/1     Running   0          45s   10.8.1.24   c1-worker   <none>           <none>
root@seven-demo:~# kubectl --context kind-c1 get svc -n sample
NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
whereami-cs   ClusterIP   10.88.132.102   <none>        80/TCP    50s
```

Export services in the c1 cluster.

```bash
subctl --context kind-c1 export service --namespace sample whereami-cs
```

View ServiceImport.

```bash
kubectl --context kind-c1 get -n submariner-operator serviceimport
kubectl --context kind-c2 get -n submariner-operator serviceimport
```

Since the same service is available locally in cluster c1, this request will be sent to the service in cluster c1.

```bash
kubectl --context kind-c1 exec -it client -- bash
nslookup whereami-cs.sample.svc.clusterset.local
```

```bash
curl whereami-cs.sample.svc.clusterset.local
```

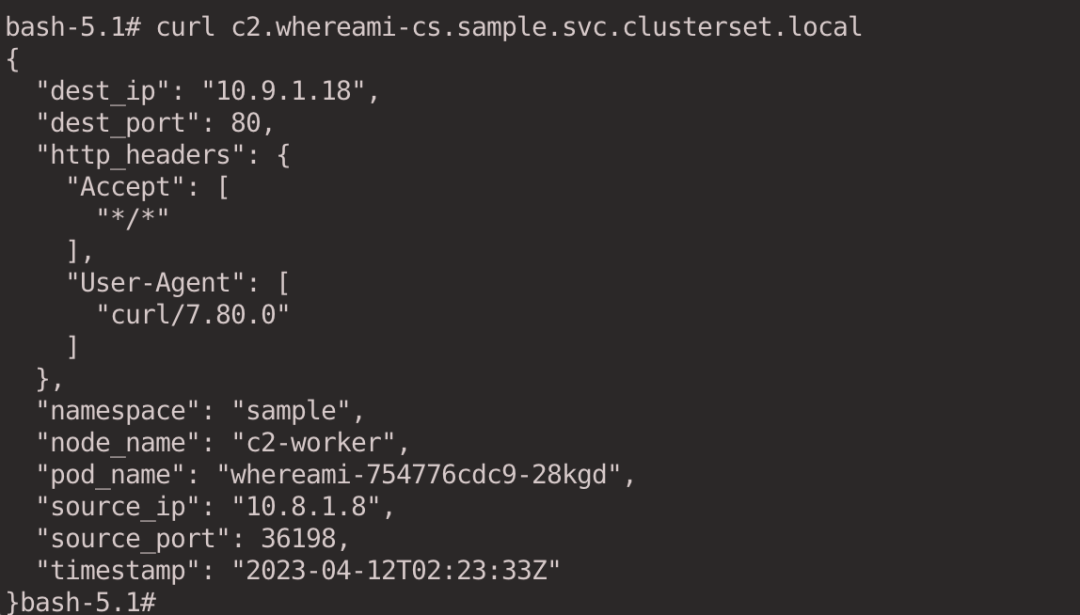

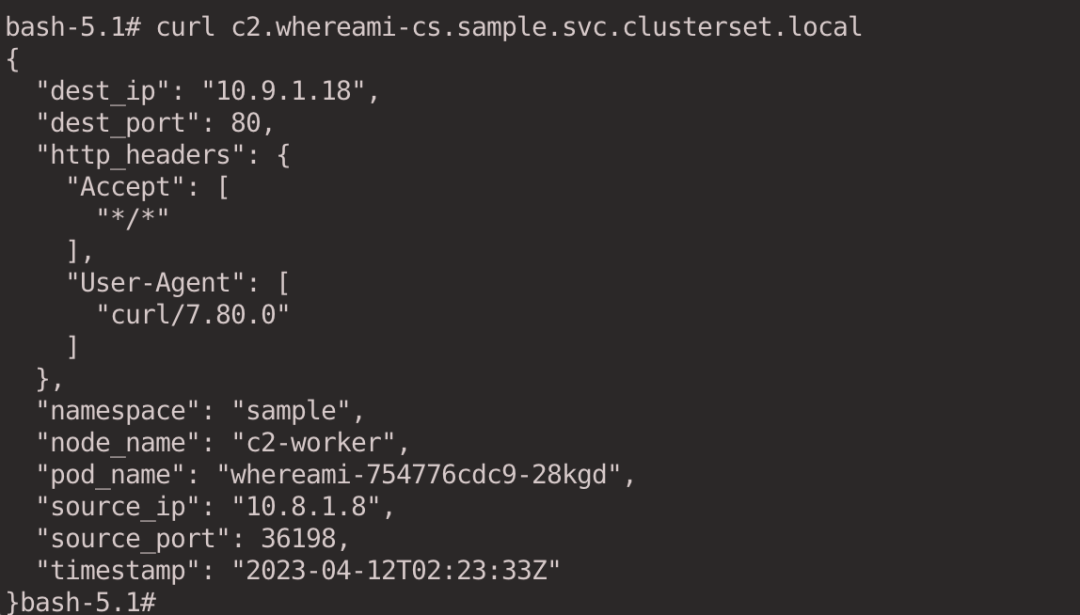

We can also use a name of the form <cluster-id>.<svc-name>.<namespace>.svc.clusterset.local to access the ClusterIP Service in a specific cluster. For example, to access the Service in the c2 cluster:

```bash
curl c2.whereami-cs.sample.svc.clusterset.local
```

3.5.2. Headless Service + StatefulSet

Submariner also supports Headless Services with StatefulSets, which allows individual Pods to be accessed via stable DNS names. Within a single cluster, Kubernetes supports this by giving StatefulSet Pods stable identities, so a Pod can be resolved with a name of the form <pod-name>.<svc-name>.<ns>.svc.cluster.local. In a cross-cluster scenario, Submariner resolves names of the form <pod-name>.<cluster-id>.<svc-name>.<ns>.svc.clusterset.local.
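To make the cross-cluster name formats concrete, here is a small sketch that assembles them from their parts. The helper `clusterset_name` is hypothetical and only concatenates labels in the order described in this article; it performs no DNS lookup.

```python
def clusterset_name(service, namespace, cluster_id=None, pod=None):
    """Build a clusterset.local DNS name for a service, a cluster, or a Pod."""
    if pod is not None and cluster_id is None:
        raise ValueError("a per-Pod name also needs the cluster ID")
    labels = [pod, cluster_id, service, namespace, "svc", "clusterset", "local"]
    # Skip the optional parts that were not supplied.
    return ".".join(label for label in labels if label)


if __name__ == "__main__":
    print(clusterset_name("whereami-cs", "sample"))
    print(clusterset_name("whereami-cs", "sample", cluster_id="c2"))
    print(clusterset_name("whereami-ss", "sample", cluster_id="c2", pod="whereami-0"))
```

The three calls correspond to the service-wide name, the cluster-specific name, and the per-Pod name of a Headless Service backed by a StatefulSet.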

Create Headless Service and StatefulSet in the c2 cluster.

```bash
kubectl --context kind-c2 apply -f - <<EOF
apiVersion: v1
kind: Service
metadata:
  name: whereami-ss
  namespace: sample
  labels:
    app: whereami-ss
spec:
  ports:
  - port: 80
    name: whereami
  clusterIP: None
  selector:
    app: whereami-ss
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: whereami
  namespace: sample
spec:
  serviceName: "whereami-ss"
  replicas: 3
  selector:
    matchLabels:
      app: whereami-ss
  template:
    metadata:
      labels:
        app: whereami-ss
    spec:
      containers:
      - name: whereami-ss
        image: cr7258/whereami:v1
        ports:
        - containerPort: 80
          name: whereami
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
EOF
```

View services in the c2 cluster.

```bash
root@seven-demo:~# kubectl get pod -n sample --context kind-c2 -o wide -l app=whereami-ss
NAME         READY   STATUS    RESTARTS   AGE   IP          NODE               NOMINATED NODE   READINESS GATES
whereami-0   1/1     Running   0          38s   10.9.1.20   c2-control-plane   <none>           <none>
whereami-1   1/1     Running   0          36s   10.9.1.21   c2-control-plane   <none>           <none>
whereami-2   1/1     Running   0          31s   10.9.1.22   c2-control-plane   <none>           <none>
root@seven-demo:~# kubectl get svc -n sample --context kind-c2 -l app=whereami-ss
NAME          TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
whereami-ss   ClusterIP   None         <none>        80/TCP    4m58s
```

Export services in the c2 cluster.

```bash
subctl --context kind-c2 export service whereami-ss --namespace sample
```

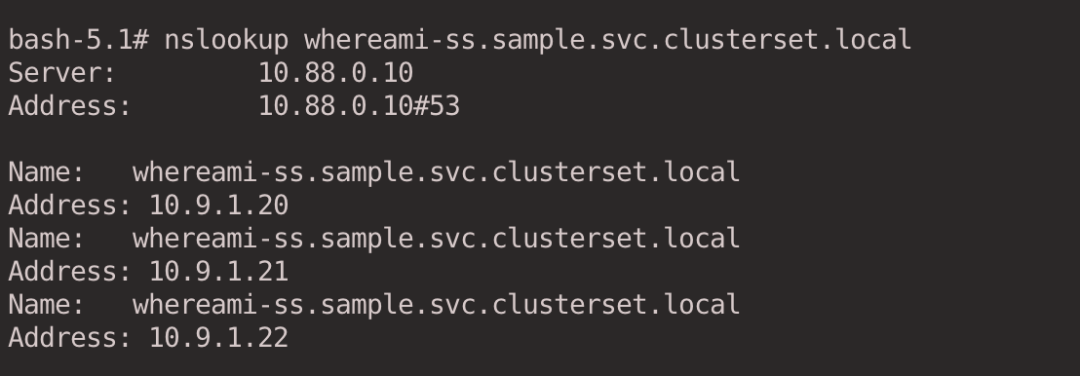

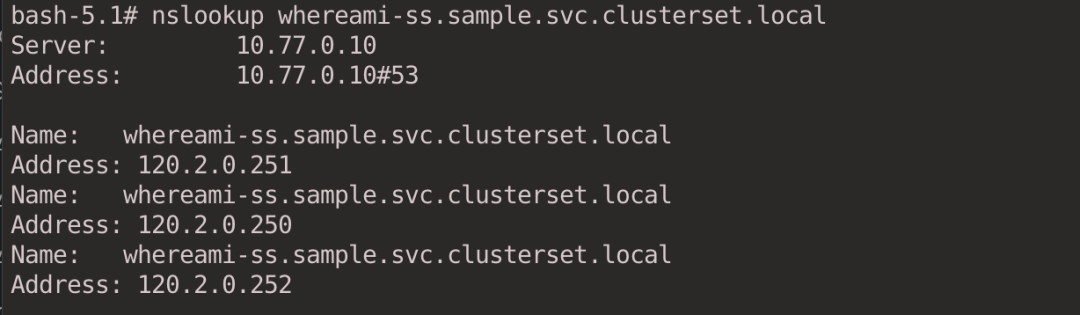

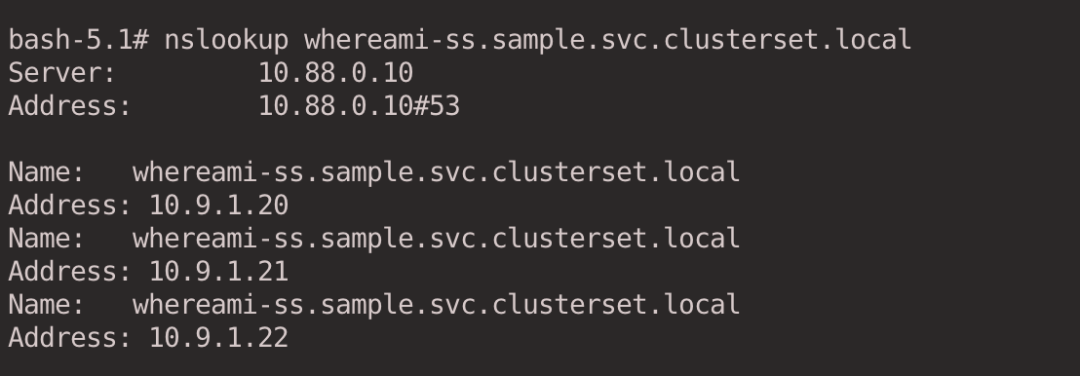

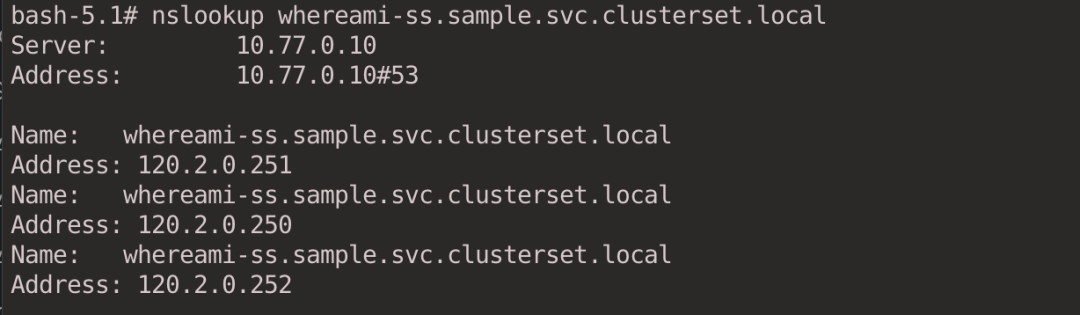

Resolve the domain name of the Headless Service to get the IPs of all its Pods.

```bash
kubectl --context kind-c1 exec -it client -- bash
nslookup whereami-ss.sample.svc.clusterset.local
```

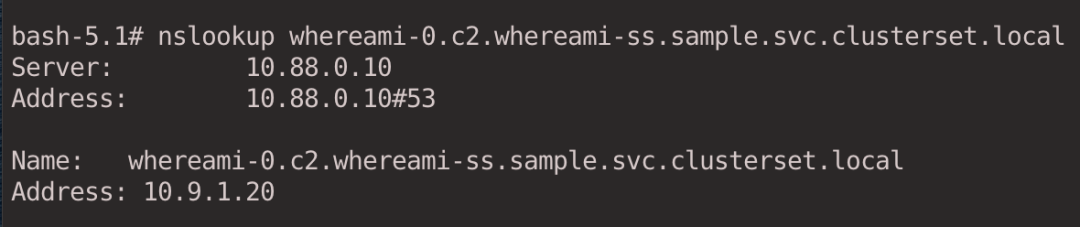

You can also specify individual Pods to be resolved.

```bash
nslookup whereami-0.c2.whereami-ss.sample.svc.clusterset.local
```

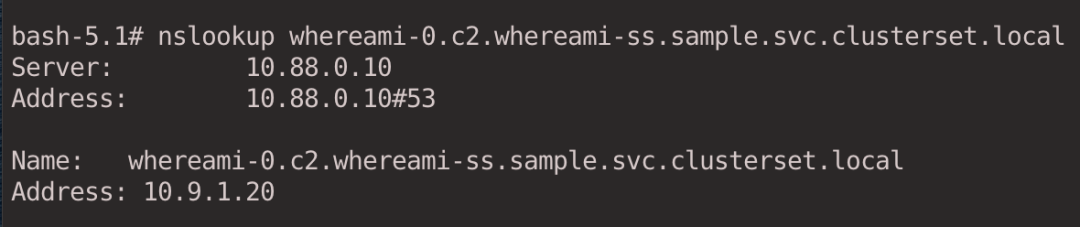

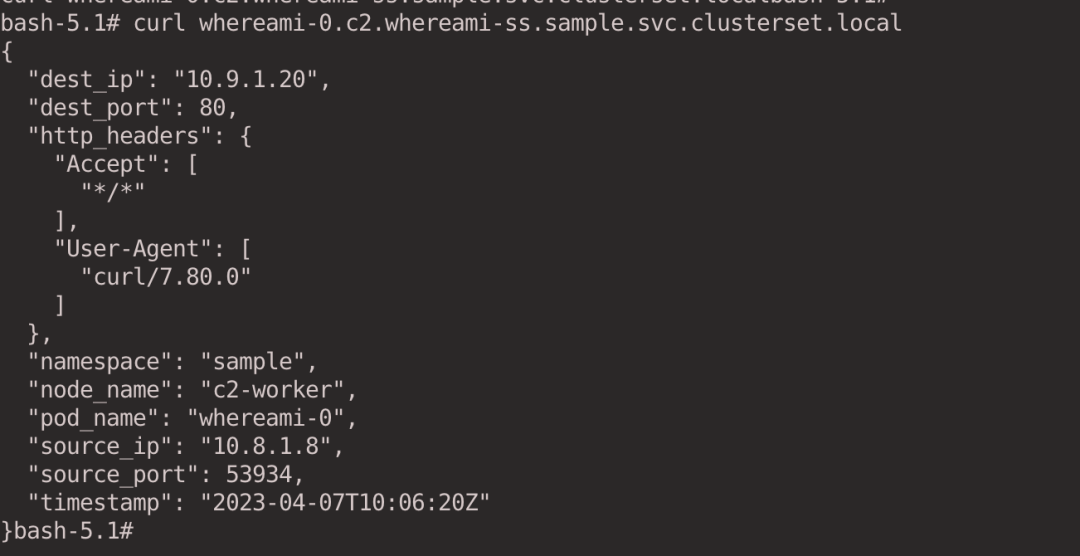

Access the specified Pod via the domain name.

```bash
curl whereami-0.c2.whereami-ss.sample.svc.clusterset.local
```

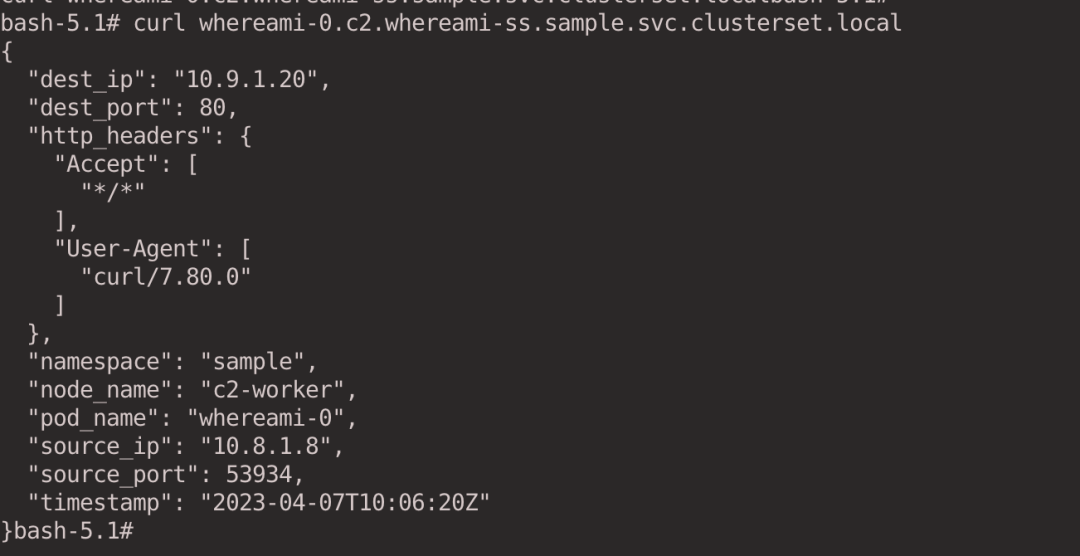

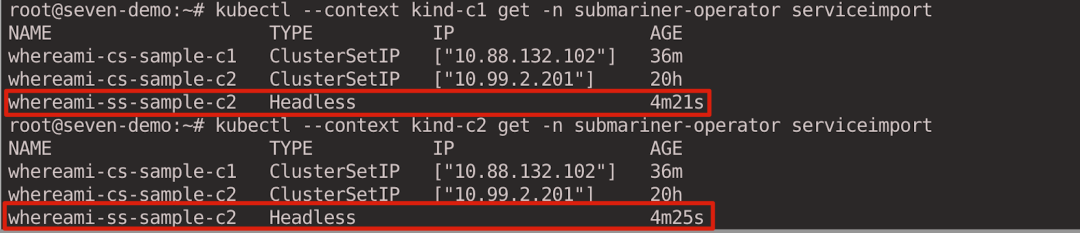

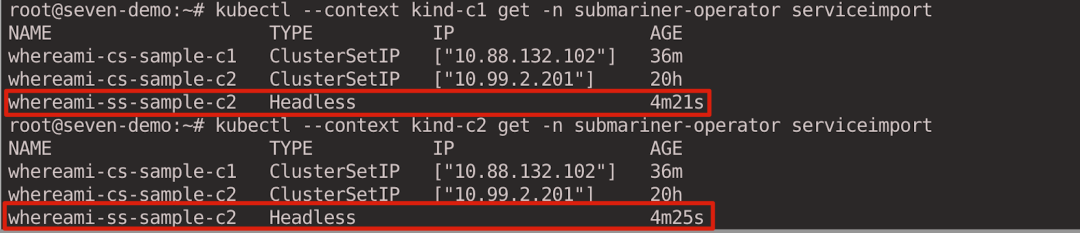

When you look at the ServiceImport, the IP address column is empty because the exported service is Headless.

```bash
kubectl --context kind-c1 get -n submariner-operator serviceimport
kubectl --context kind-c2 get -n submariner-operator serviceimport
```

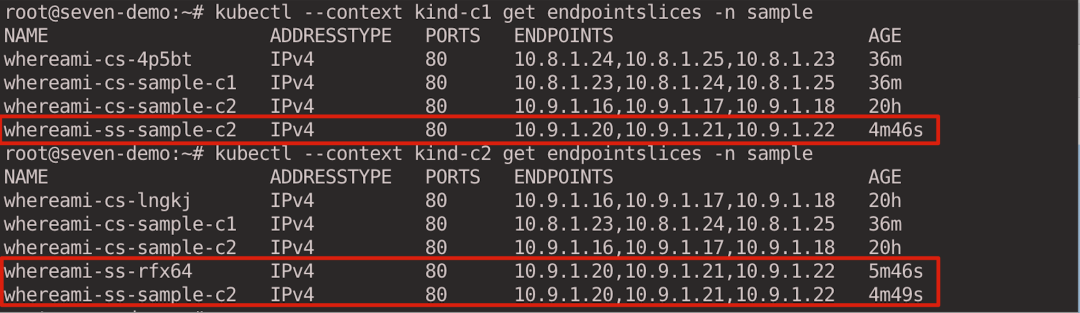

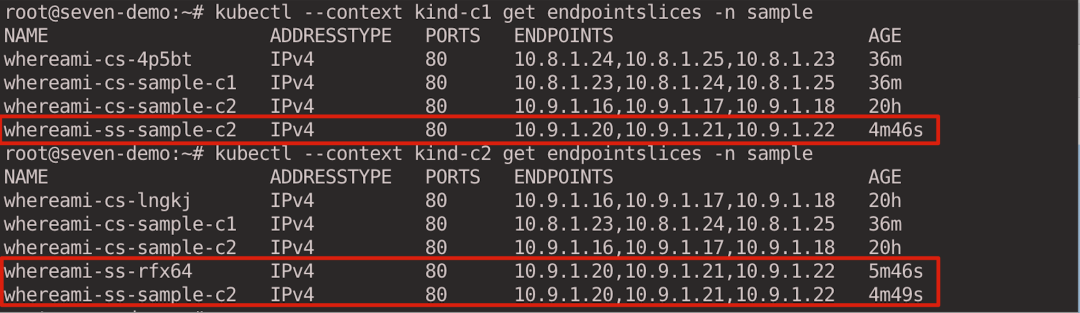

For a Headless Service, the Pod IPs are resolved from the EndpointSlice.

```bash
kubectl --context kind-c1 get endpointslices -n sample
kubectl --context kind-c2 get endpointslices -n sample
```

4. Resolving CIDR Overlap with Globalnet

The next step is to demonstrate how to solve CIDR overlap between multiple clusters using Submariner’s Globalnet feature. In this experiment, we will create 3 clusters and set the Service and Pod CIDRs of each cluster to be the same.

4.1. Creating a cluster

```bash
# Replace with server IP
export SERVER_IP="10.138.0.11"

kind create cluster --config - <<EOF
kind: Cluster
name: broker-globalnet
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
networking:
  apiServerAddress: $SERVER_IP
  podSubnet: "10.7.0.0/16"
  serviceSubnet: "10.77.0.0/16"
EOF

kind create cluster --config - <<EOF
kind: Cluster
name: g1
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
networking:
  apiServerAddress: $SERVER_IP
  podSubnet: "10.7.0.0/16"
  serviceSubnet: "10.77.0.0/16"
EOF

kind create cluster --config - <<EOF
kind: Cluster
name: g2
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
networking:
  apiServerAddress: $SERVER_IP
  podSubnet: "10.7.0.0/16"
  serviceSubnet: "10.77.0.0/16"
EOF
```

4.2. Deploying the Broker

Use the --globalnet=true parameter to enable the Globalnet feature and the --globalnet-cidr-range parameter to specify the global GlobalCIDR for all clusters (default 242.0.0.0/8).

```bash
subctl --context kind-broker-globalnet deploy-broker --globalnet=true --globalnet-cidr-range 120.0.0.0/8
```

4.3. Joining clusters g1 and g2

Use the --globalnet-cidr parameter to specify the GlobalCIDR for this cluster.

```bash
subctl --context kind-g1 join broker-info.subm --clusterid g1 --globalnet-cidr 120.1.0.0/24
subctl --context kind-g2 join broker-info.subm --clusterid g2 --globalnet-cidr 120.2.0.0/24
```

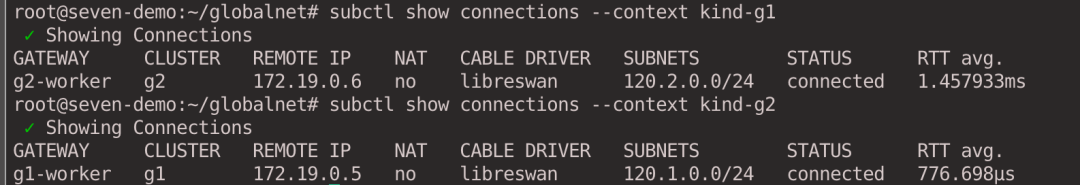

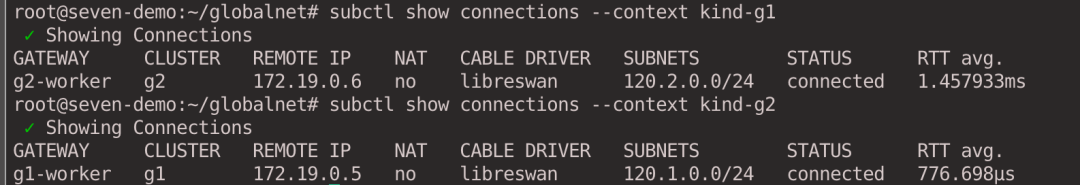
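As a sanity check on the values above, the following sketch verifies that each per-cluster GlobalCIDR lies inside the range given to deploy-broker and reports how many addresses it contains. It is pure bash with hypothetical helper names (ip_to_int, cidr_within, cidr_size), not a subctl feature.

```shell
#!/usr/bin/env bash

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed when CIDR $1 lies entirely inside CIDR $2.
cidr_within() {
  local ip1=${1%/*} p1=${1#*/} ip2=${2%/*} p2=${2#*/}
  # A shorter prefix means a larger network, which cannot fit inside $2.
  (( p1 >= p2 )) || return 1
  local mask=$(( (0xFFFFFFFF << (32 - p2)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip1") & mask) == ($(ip_to_int "$ip2") & mask) ))
}

# Number of addresses in a CIDR.
cidr_size() {
  echo $(( 1 << (32 - ${1#*/}) ))
}

range="120.0.0.0/8"   # value passed to --globalnet-cidr-range
for cidr in "120.1.0.0/24" "120.2.0.0/24"; do   # values passed to --globalnet-cidr
  if cidr_within "$cidr" "$range"; then
    echo "$cidr fits in $range and holds $(cidr_size "$cidr") addresses"
  else
    echo "$cidr is outside $range"
  fi
done
```

The two /24 blocks are disjoint, so Global IPs handed out in g1 can never collide with those handed out in g2 even though the Pod and Service CIDRs are identical.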

4.4. Viewing cluster connections

    subctl show connections --context kind-g1
    subctl show connections --context kind-g2

4.5. Testing cross-cluster communication

Create the sample namespace in both clusters.

    kubectl --context kind-g2 create namespace sample
    kubectl --context kind-g1 create namespace sample

4.5.1. ClusterIP Service

Create a service in the g2 cluster.

    kubectl --context kind-g2 apply -f - <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: whereami
      namespace: sample
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: whereami
      template:
        metadata:
          labels:
            app: whereami
        spec:
          containers:
          - name: whereami
            image: cr7258/whereami:v1
            imagePullPolicy: Always
            ports:
            - containerPort: 80
            env:
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: whereami-cs
      namespace: sample
    spec:
      selector:
        app: whereami
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
    EOF

View the service in the g2 cluster.

    root@seven-demo:~/globalnet# kubectl --context kind-g2 get pod -n sample -o wide
    NAME                        READY   STATUS    RESTARTS   AGE   IP         NODE               NOMINATED NODE   READINESS GATES
    whereami-754776cdc9-72qd4   1/1     Running   0          19s   10.7.1.8   g2-control-plane   <none>           <none>
    whereami-754776cdc9-jsnhk   1/1     Running   0          20s   10.7.1.7   g2-control-plane   <none>           <none>
    whereami-754776cdc9-n4mm6   1/1     Running   0          19s   10.7.1.9   g2-control-plane   <none>           <none>
    root@seven-demo:~/globalnet# kubectl --context kind-g2 get svc -n sample -o wide
    NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    whereami-cs   ClusterIP   10.77.153.172   <none>        80/TCP    26s   app=whereami

Export the service in the g2 cluster.

    subctl --context kind-g2 export service --namespace sample whereami-cs

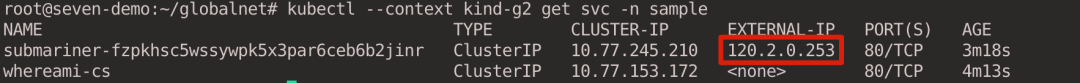

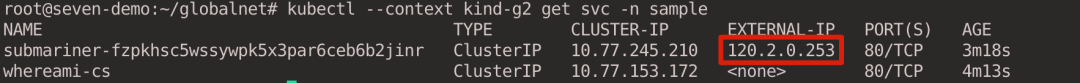

After exporting the service, list the Services in the g2 cluster. Submariner has automatically created an additional Service in the same namespace as the exported one, with externalIPs set to the Global IP assigned to that service.

    kubectl --context kind-g2 get svc -n sample

Access the g2 cluster's whereami service from the g1 cluster.

    kubectl --context kind-g1 run client --image=cr7258/nettool:v1
    kubectl --context kind-g1 exec -it client -- bash

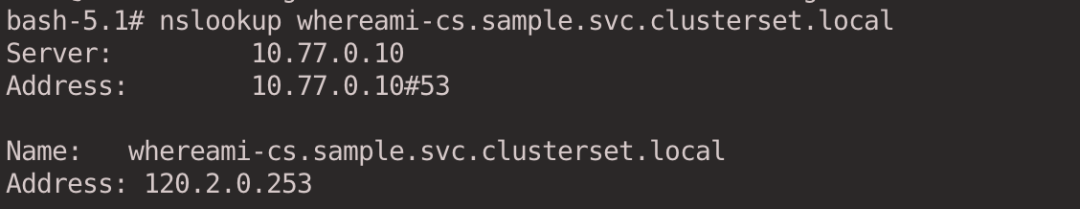

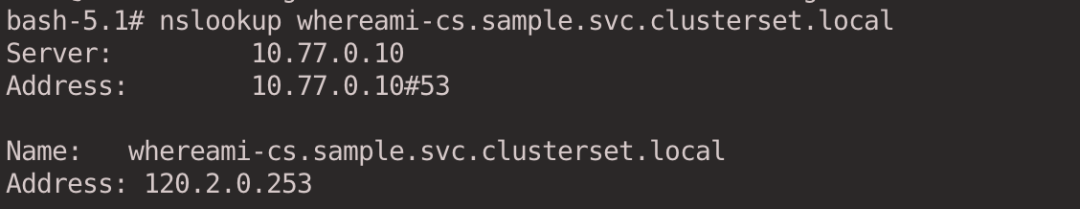

DNS resolves to the Global IP assigned to the g2 cluster's whereami service, not to the service's ClusterIP.

    nslookup whereami-cs.sample.svc.clusterset.local
    curl whereami-cs.sample.svc.clusterset.local

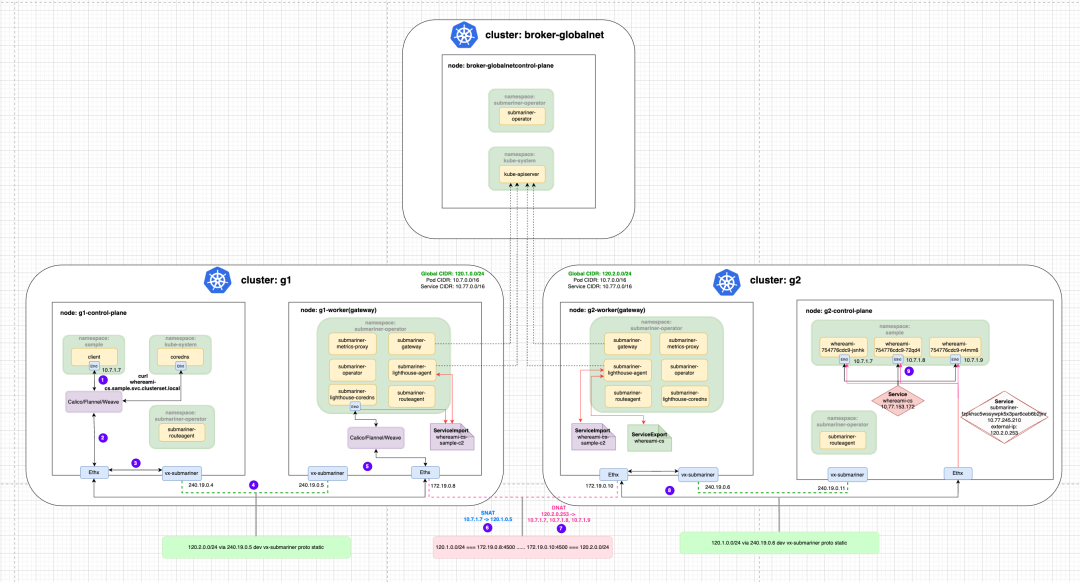

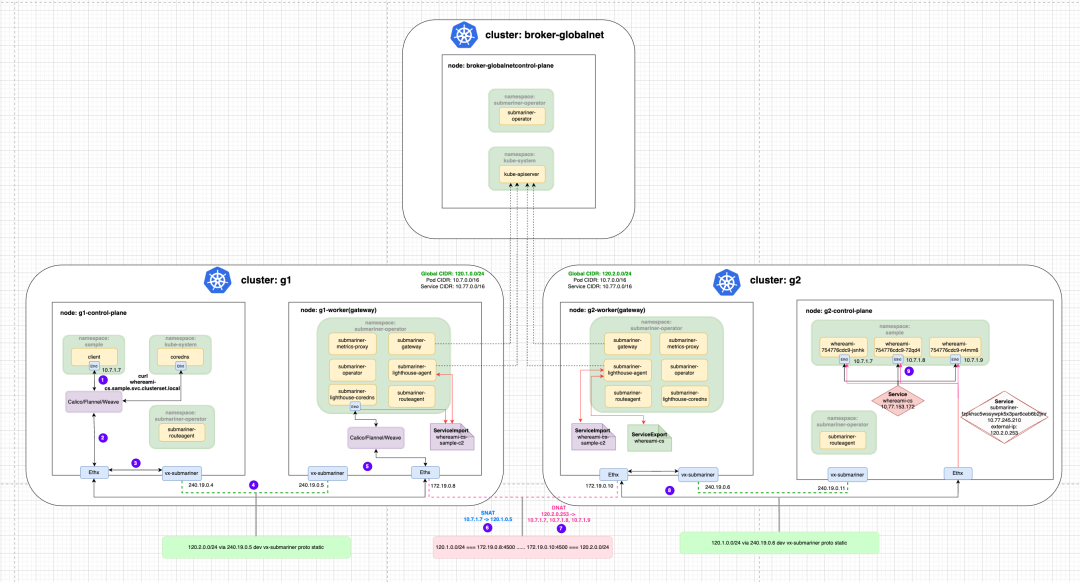

Here is a brief explanation of the traffic path shown in the diagram. The traffic originates from the client Pod in the g1 cluster and, after DNS resolution, is addressed to 120.2.0.253. It first crosses the veth pair into the root network namespace of the Node, then enters vx-submariner, the VXLAN tunnel set up by the Submariner Route Agent, which carries it to the Gateway Node (g1-worker).

On the Gateway Node the source IP 10.7.1.7 is translated to 120.1.0.5, and the traffic is sent to the other end through the IPsec tunnel between the g1 and g2 clusters. The Gateway Node of the g2 cluster (g2-worker) receives the traffic, passes it through the iptables reverse-proxy rules (which perform DNAT based on the Global IP), and finally delivers it to a backend whereami Pod.

To verify the NAT rules, we can inspect iptables on the Gateway Nodes of the g1 and g2 clusters. Run docker exec -it g1-worker bash and docker exec -it g2-worker bash to enter the two nodes, then run iptables-save to dump the iptables configuration. The relevant rules are filtered out below.

g1-worker node:

    # Convert the source IP to one of 120.1.0.1-120.1.0.8
    -A SM-GN-EGRESS-CLUSTER -s 10.7.0.0/16 -m mark --mark 0xc0000/0xc0000 -j SNAT --to-source 120.1.0.1-120.1.0.8

g2-worker node:

    # Traffic accessing 120.2.0.253:80 jumps to the KUBE-EXT-ZTP7SBVPSRVMWSUN chain
    -A KUBE-SERVICES -d 120.2.0.253/32 -p tcp -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr external IP" -m tcp --dport 80 -j KUBE-EXT-ZTP7SBVPSRVMWSUN

    # Jump to KUBE-SVC-ZTP7SBVPSRVMWSUN chain
    -A KUBE-EXT-ZTP7SBVPSRVMWSUN -j KUBE-SVC-ZTP7SBVPSRVMWSUN

    # Randomly select a Pod at the back end of whereami
    -A KUBE-SVC-ZTP7SBVPSRVMWSUN -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr -> 10.7.1.7:80" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-BB74OZOLBDYS7GHU
    -A KUBE-SVC-ZTP7SBVPSRVMWSUN -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr -> 10.7.1.8:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-MTZHPN36KRSHGEO6
    -A KUBE-SVC-ZTP7SBVPSRVMWSUN -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr -> 10.7.1.9:80" -j KUBE-SEP-UYVYXWJKZN2VHFJW

    # DNAT Address Conversion
    -A KUBE-SEP-BB74OZOLBDYS7GHU -p tcp -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr" -m tcp -j DNAT --to-destination 10.7.1.7:80
    -A KUBE-SEP-MTZHPN36KRSHGEO6 -p tcp -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr" -m tcp -j DNAT --to-destination 10.7.1.8:80
    -A KUBE-SEP-UYVYXWJKZN2VHFJW -p tcp -m comment --comment "sample/submariner-fzpkhsc5wssywpk5x3par6ceb6b2jinr" -m tcp -j DNAT --to-destination 10.7.1.9:80
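The --probability values in the KUBE-SVC chain (roughly 1/3, then 1/2, then no statistic match at all) may look uneven, but rules are evaluated in order and each one only sees the traffic that earlier rules let through. The sketch below works through that arithmetic with awk; the function name endpoint_shares is hypothetical, and the model simply assumes rule i (counting from 0) matches with probability 1/(N-i), which agrees with the three rules shown above.

```shell
#!/usr/bin/env bash

# Print the overall share of traffic each of N endpoints receives when the
# rules are evaluated in order and rule i (from 0) matches with 1/(N-i).
endpoint_shares() {
  awk -v n="$1" 'BEGIN {
    remaining = 1.0
    for (i = 0; i < n; i++) {
      p = 1.0 / (n - i)        # per-rule probability; the last rule is 1
      share = remaining * p    # fraction of all traffic taken by this rule
      remaining -= share       # traffic that falls through to the next rule
      printf "rule %d: probability %.4f, overall share %.4f\n", i + 1, p, share
    }
  }'
}

endpoint_shares 3
```

Under this model every backend ends up with the same overall share, so the three whereami Pods are selected uniformly.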

4.5.2. Headless Service + StatefulSet

Next, test Globalnet in a Headless Service + StatefulSet scenario. Create the service in the g2 cluster.

    kubectl --context kind-g2 apply -f - <<EOF
    apiVersion: v1
    kind: Service
    metadata:
      name: whereami-ss
      namespace: sample
      labels:
        app: whereami-ss
    spec:
      ports:
      - port: 80
        name: whereami
      clusterIP: None
      selector:
        app: whereami-ss
    ---
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: whereami
      namespace: sample
    spec:
      serviceName: "whereami-ss"
      replicas: 3
      selector:
        matchLabels:
          app: whereami-ss
      template:
        metadata:
          labels:
            app: whereami-ss
        spec:
          containers:
          - name: whereami-ss
            image: cr7258/whereami:v1
            ports:
            - containerPort: 80
              name: whereami
            env:
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
    EOF

View the service in the g2 cluster.

    root@seven-demo:~# kubectl get pod -n sample --context kind-g2 -o wide -l app=whereami-ss
    NAME         READY   STATUS    RESTARTS   AGE   IP          NODE        NOMINATED NODE   READINESS GATES
    whereami-0   1/1     Running   0          62s   10.7.1.10   g2-worker   <none>           <none>
    whereami-1   1/1     Running   0          56s   10.7.1.11   g2-worker   <none>           <none>
    whereami-2   1/1     Running   0          51s   10.7.1.12   g2-worker   <none>           <none>
    root@seven-demo:~# kubectl get svc -n sample --context kind-g2 -l app=whereami-ss
    NAME          TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    whereami-ss   ClusterIP   None         <none>        80/TCP    42h

Export the service in the g2 cluster.

    subctl --context kind-g2 export service whereami-ss --namespace sample

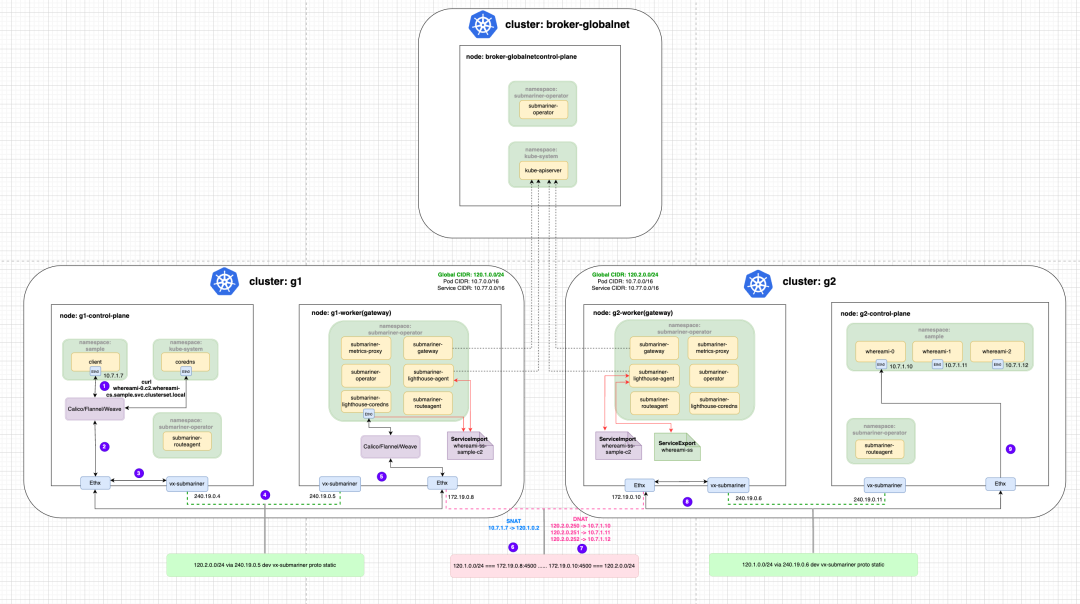

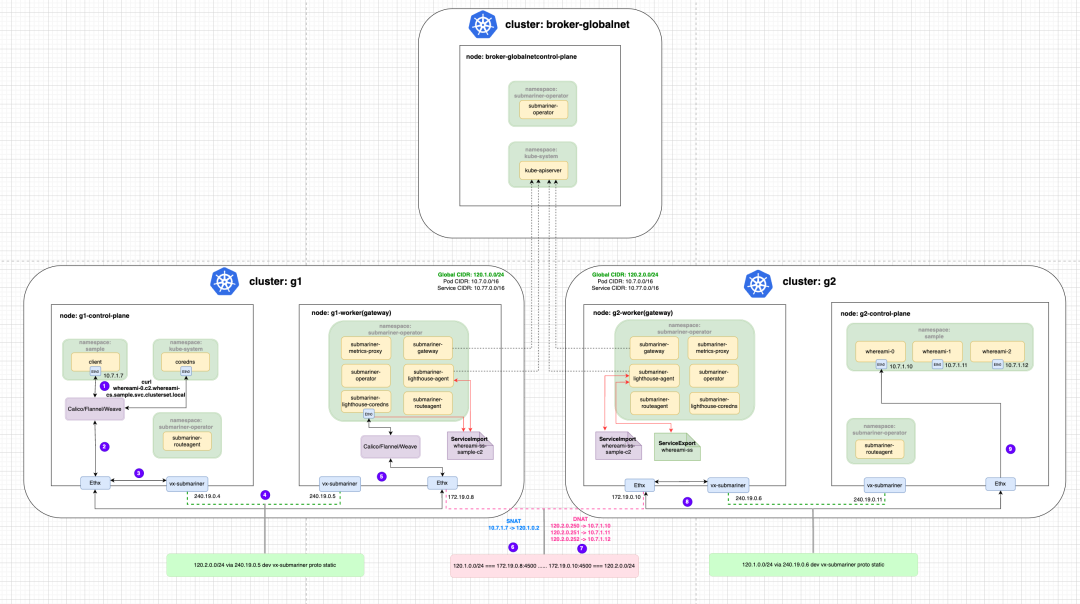

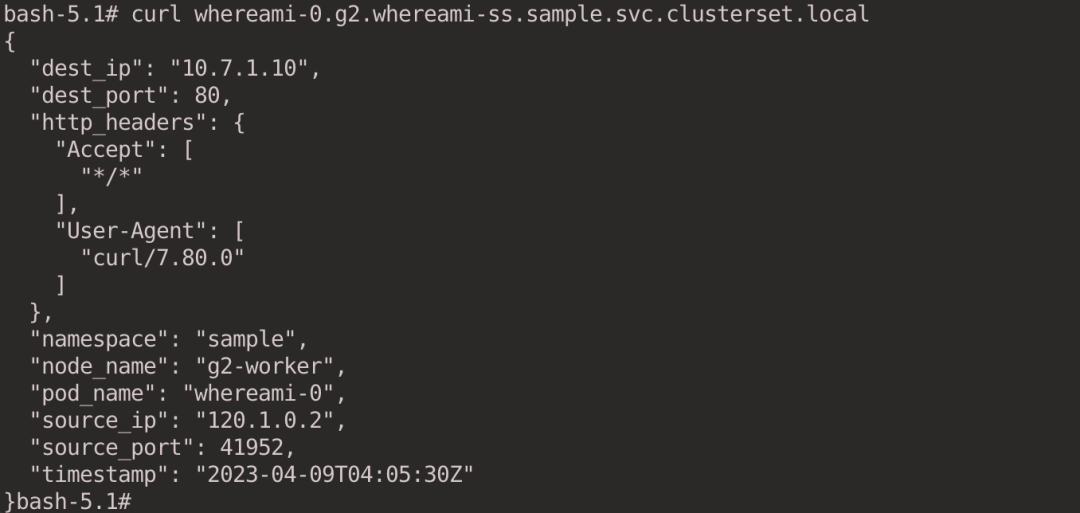

Access the g2 cluster's service from the g1 cluster. Globalnet assigns a Global IP to each Pod associated with the Headless Service, and that IP is used for both inbound and outbound traffic.

    kubectl --context kind-g1 exec -it client -- bash
    nslookup whereami-ss.sample.svc.clusterset.local

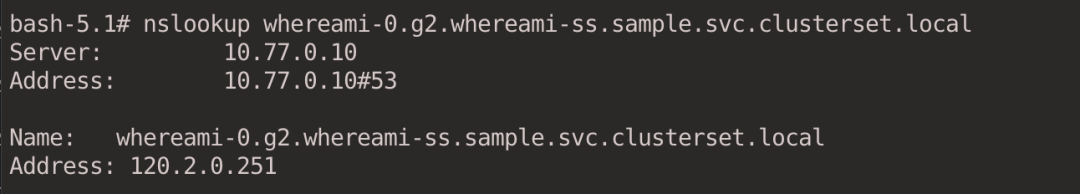

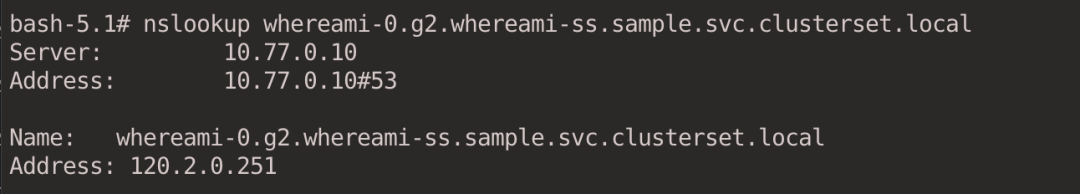

Resolve a specific Pod.

    nslookup whereami-0.g2.whereami-ss.sample.svc.clusterset.local

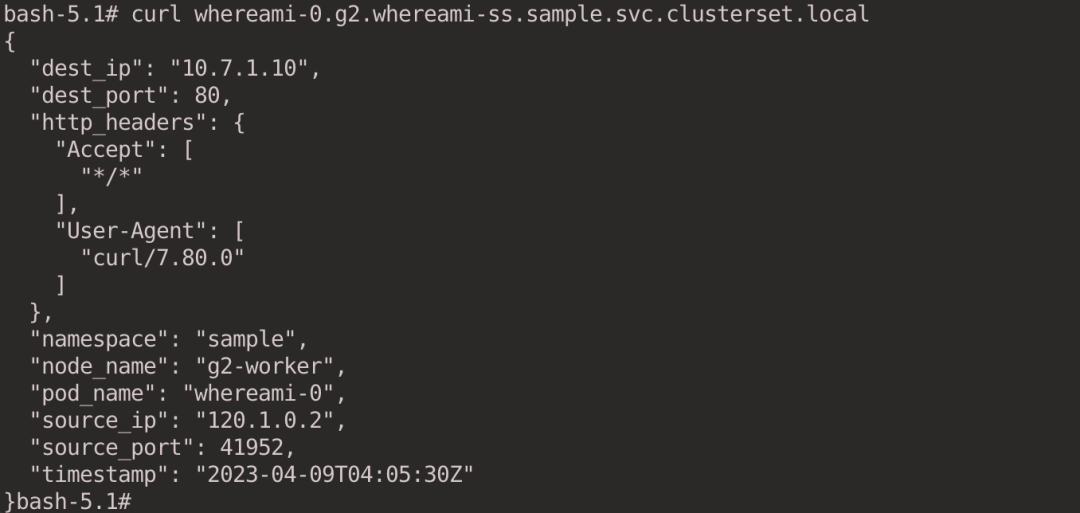

Access a specific Pod.

    curl whereami-0.g2.whereami-ss.sample.svc.clusterset.local

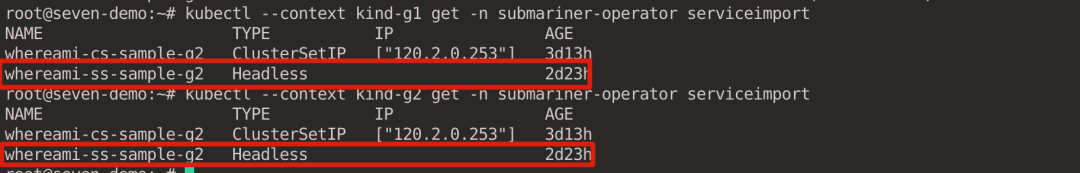

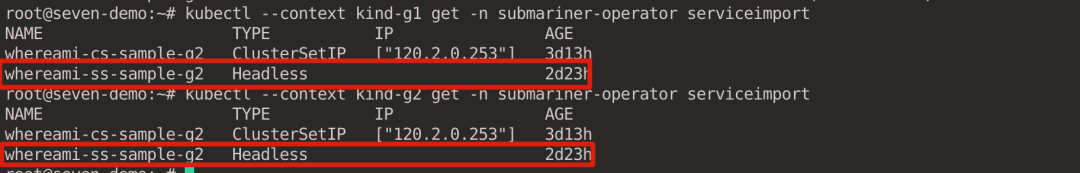
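The names used in the lookups above follow a regular pattern: service.namespace.svc.clusterset.local for the exported service, and pod.cluster-id.service.namespace.svc.clusterset.local for an individual Pod behind the Headless Service. The sketch below just assembles those strings for the three StatefulSet replicas; the function names are hypothetical helpers, and the pattern is inferred from the commands in this article rather than taken from a formal specification.

```shell
#!/usr/bin/env bash

# Build the clusterset DNS name of an exported service.
# Arguments: service namespace
clusterset_service_name() {
  printf '%s.%s.svc.clusterset.local\n' "$1" "$2"
}

# Build the per-Pod name for a Pod behind an exported Headless Service.
# Arguments: pod cluster-id service namespace
clusterset_pod_name() {
  printf '%s.%s.%s.%s.svc.clusterset.local\n' "$1" "$2" "$3" "$4"
}

clusterset_service_name whereami-ss sample
for i in 0 1 2; do
  clusterset_pod_name "whereami-$i" g2 whereami-ss sample
done
```

Inside the client Pod, each printed name can be passed to nslookup or curl in the same way as the commands above.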

In the ServiceImport, the IP column is empty because the exported service is Headless.

    kubectl --context kind-g1 get -n submariner-operator serviceimport
    kubectl --context kind-g2 get -n submariner-operator serviceimport

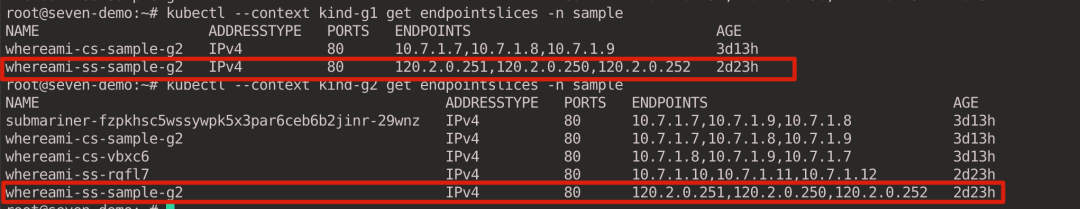

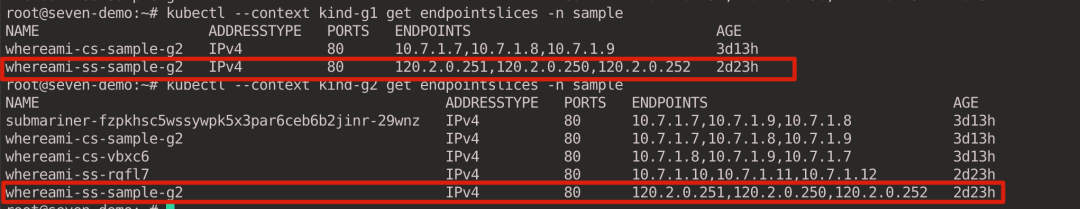

For a Headless Service, the Pod IPs are resolved from the EndpointSlice.

    kubectl --context kind-g1 get endpointslices -n sample
    kubectl --context kind-g2 get endpointslices -n sample

Run docker exec -it g2-worker bash to enter the g2-worker node, then run iptables-save to find the relevant iptables rules.

    # SNAT
    -A SM-GN-EGRESS-HDLS-PODS -s 10.7.1.12/32 -m mark --mark 0xc0000/0xc0000 -j SNAT --to-source 120.2.0.252
    -A SM-GN-EGRESS-HDLS-PODS -s 10.7.1.11/32 -m mark --mark 0xc0000/0xc0000 -j SNAT --to-source 120.2.0.251
    -A SM-GN-EGRESS-HDLS-PODS -s 10.7.1.10/32 -m mark --mark 0xc0000/0xc0000 -j SNAT --to-source 120.2.0.250

    # DNAT
    -A SUBMARINER-GN-INGRESS -d 120.2.0.252/32 -j DNAT --to-destination 10.7.1.12
    -A SUBMARINER-GN-INGRESS -d 120.2.0.251/32 -j DNAT --to-destination 10.7.1.11
    -A SUBMARINER-GN-INGRESS -d 120.2.0.250/32 -j DNAT --to-destination 10.7.1.10
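These rules form a one-to-one mapping between each Pod IP and its Global IP, applied as SNAT on the way out and DNAT on the way in. As a rough sketch, the hypothetical nat_pairs function below reads iptables-save text on stdin and prints that mapping in a compact form; it only looks at the two chain names shown above, and the sample input is copied from this article.

```shell
#!/usr/bin/env bash

# Read iptables-save output on stdin and summarize the per-Pod Globalnet
# SNAT (egress) and DNAT (ingress) rules as "<direction> <match> -> <target>".
nat_pairs() {
  awk '
    $2 == "SM-GN-EGRESS-HDLS-PODS" || $2 == "SUBMARINER-GN-INGRESS" {
      match_ip = ""; target = ""; action = ""
      for (i = 3; i < NF; i++) {
        if ($i == "-s" || $i == "-d") { match_ip = $(i + 1); sub(/\/32$/, "", match_ip) }
        if ($i == "-j") action = $(i + 1)
        if ($i == "--to-source" || $i == "--to-destination") target = $(i + 1)
      }
      if (action == "SNAT") printf "%-7s %s -> %s\n", "egress", match_ip, target
      if (action == "DNAT") printf "%-7s %s -> %s\n", "ingress", match_ip, target
    }'
}

# Sample input: the rules for whereami-0 and whereami-2 from the g2-worker node.
nat_pairs <<'RULES'
-A SM-GN-EGRESS-HDLS-PODS -s 10.7.1.12/32 -m mark --mark 0xc0000/0xc0000 -j SNAT --to-source 120.2.0.252
-A SM-GN-EGRESS-HDLS-PODS -s 10.7.1.10/32 -m mark --mark 0xc0000/0xc0000 -j SNAT --to-source 120.2.0.250
-A SUBMARINER-GN-INGRESS -d 120.2.0.252/32 -j DNAT --to-destination 10.7.1.12
-A SUBMARINER-GN-INGRESS -d 120.2.0.250/32 -j DNAT --to-destination 10.7.1.10
RULES
```

On a live environment the same function could be fed from the node directly, for example docker exec g2-worker iptables-save | nat_pairs, assuming the chain names match those shown here.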

5. Clean up the environment

Run the following command to delete the Kind clusters created for these experiments.

    kind delete clusters broker broker-globalnet c1 c2 g1 g2

6. Summary

This article first introduced the architecture of Submariner, including the Broker, Gateway Engine, Route Agent, Service Discovery, Globalnet, and the Submariner Operator. It then showed experimentally how Submariner handles ClusterIP and Headless Service traffic in a cross-cluster scenario. Finally, it demonstrated how Submariner's Globalnet supports CIDR overlap between clusters via GlobalCIDR.