Google recently released SoundStream, an end-to-end neural audio codec. According to Google, it is the first neural-network-based audio codec that handles different sound types such as speech, music and ambient sounds, and that can process them in real time on a smartphone’s processor.

Audio codecs compress audio so that files are smaller and take less time to transmit. They are therefore critical for services that depend on audio transmission, such as streaming media and online voice and video calls.

While audio codecs reduce the size of audio data and speed up delivery, the compression can also cost quality and detail, creating differences that users can hear. This is the gap SoundStream aims to close.

In February this year, Google released Lyra, a neural audio codec for low-bitrate speech, and open-sourced it in April. SoundStream not only covers Lyra’s low-bitrate speech use case, but also supports encoding more sound types, including clean speech, noisy speech, speech with echoes, music, and ambient sounds.

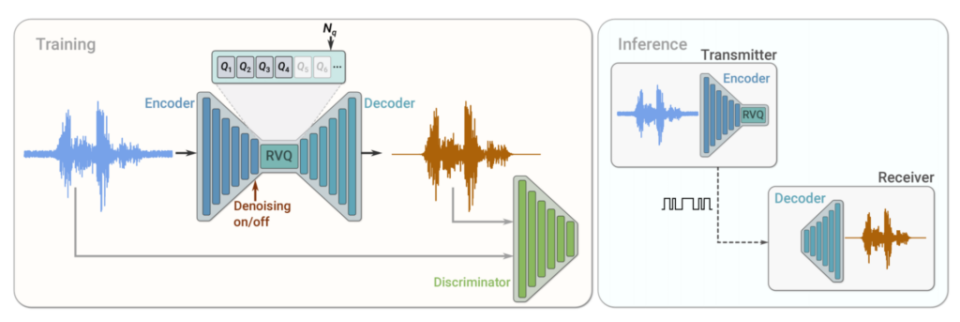

SoundStream is built around a neural network system consisting of an encoder, a quantizer and a decoder. The encoder converts the input audio into an encoded signal, the quantizer compresses that signal, and the decoder converts the compressed signal back into audio. Once the model is trained, the encoder and decoder can run on separate clients, so audio can be transmitted efficiently over a network while keeping its quality high.
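The encoder, quantizer and decoder split can be illustrated with a toy example. This is only a minimal sketch of the general idea, not SoundStream's actual model: the `encode` step here simply frames the samples instead of running a learned network, and `CODEBOOK`, `FRAME_SIZE` and all function names are hypothetical. The point it shows is that only small integer indices need to cross the network, and that the receiver rebuilds an approximation of the audio from them.

```python
import math

# Hypothetical 4-entry codebook of 2-sample frames.
# A real neural codec learns its codebook(s) during training.
CODEBOOK = [
    (0.0, 0.0),
    (0.5, 0.5),
    (-0.5, -0.5),
    (0.5, -0.5),
]
FRAME_SIZE = 2


def encode(samples):
    """Stand-in encoder: split the samples into fixed-size frames.

    A learned encoder would map audio to feature vectors instead.
    """
    usable = len(samples) - len(samples) % FRAME_SIZE
    return [tuple(samples[i:i + FRAME_SIZE])
            for i in range(0, usable, FRAME_SIZE)]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(frames):
    """Replace each frame with the index of its nearest codebook entry."""
    return [
        min(range(len(CODEBOOK)),
            key=lambda k: squared_distance(frame, CODEBOOK[k]))
        for frame in frames
    ]


def decode(indices):
    """Stand-in decoder: look up each index and concatenate the frames."""
    samples = []
    for k in indices:
        samples.extend(CODEBOOK[k])
    return samples


def bits_per_frame():
    """Bits needed to transmit one codebook index."""
    return math.ceil(math.log2(len(CODEBOOK)))


audio = [0.1, -0.1, 0.4, 0.6, -0.45, -0.55, 0.6, -0.4]
indices = quantize(encode(audio))   # this is what the sender transmits
reconstructed = decode(indices)     # this is what the receiver plays back
```

In this sketch the sender and receiver only have to share the codebook ahead of time. The reconstruction is an approximation of the input, which is why the quality of the learned encoder, codebook and decoder matters so much at low bitrates.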

Google has posted a comparison of different compressed audio samples against the original audio on its website. The comparison shows that SoundStream at 3 kbps sounds better than the Opus codec at 12 kbps, and comes very close to the EVS codec at 9.6 kbps.
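To put those bitrates in perspective, the arithmetic below estimates how much data one minute of audio takes at 3 kbps and at 12 kbps. It is a rough sketch that assumes a constant bitrate, takes 1 kbps as 1000 bits per second, and ignores any packet or container overhead; the function name `encoded_bytes` is made up for this illustration.

```python
def encoded_bytes(bitrate_kbps, seconds):
    """Payload size in bytes for audio at a constant bitrate."""
    bits = bitrate_kbps * 1000 * seconds
    return bits / 8


one_minute = 60
size_at_3_kbps = encoded_bytes(3, one_minute)    # 22500.0 bytes
size_at_12_kbps = encoded_bytes(12, one_minute)  # 90000.0 bytes
ratio = size_at_12_kbps / size_at_3_kbps         # 4.0
```

Under these assumptions, a codec that matches 12 kbps quality at 3 kbps sends a quarter of the data for the same listening time.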

Google’s own online meeting platform, Google Meet, and its video platform, YouTube, still use the Opus audio codec. As SoundStream matures, Google may soon start using its own technology in its own services.

Google says SoundStream is an important step in applying machine learning to audio codecs, and that it outperforms the current state-of-the-art codecs, Opus and EVS. SoundStream will be integrated into Lyra and released with the next version of Lyra, so developers can use the existing Lyra API and tools to deliver better sound quality.