Before learning containerd, it is worth briefly reviewing Docker’s development history. In practice it involves quite a few components. We hear many of their names often, yet it is not always clear what they are for: libcontainer, runc, containerd, CRI, OCI, and so on.

Docker

Since Docker 1.11, containers are no longer started by the Docker Daemon alone. Several components, including containerd and runc, work together to do it. Although the Docker Daemon has been refactored continuously, its basic function and positioning have not changed much. It has always been a client/server (C/S) architecture in which the daemon interacts with the Docker Client and manages Docker images and containers. In the current architecture, containerd is responsible for the lifecycle management of containers on a node and exposes a gRPC interface to the Docker Daemon.

When we want to create a container, the Docker Daemon no longer creates it directly. Instead it asks containerd to create the container. After receiving the request, containerd does not operate on the container directly either: it creates a process called containerd-shim and lets that process operate on the container. The reason is that a container process needs a parent process to collect its state and keep stdin and other file descriptors open. If that parent were containerd itself, then whenever containerd crashed, every container on the host would have to exit. Introducing containerd-shim avoids this problem.

Creating a container then requires configuring namespaces and cgroups, mounting the root filesystem, and so on. These operations follow a standard specification, OCI (Open Container Initiative), and runc is its reference implementation (Docker was forced to donate libcontainer, which was renamed runc). The standard is really a document that specifies the structure of container images and the commands a container must accept, such as create, start, stop, and delete. runc follows this document to create containers that conform to the specification. Since it is a standard, there are other OCI implementations as well, such as Kata and gVisor, and these container runtimes all conform to the OCI standard.

So a container is actually started by containerd-shim calling runc. runc exits as soon as it has started the container, and containerd-shim becomes the parent process of the container process. The shim collects the status of the container process and reports it to containerd. After the process with PID 1 in the container exits, the shim also takes over and cleans up the container’s child processes so that no zombie processes are left behind.
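The parent/child hand-off described above can be sketched with a toy process tree. This is only an illustration, not how containerd is implemented: the `Proc` class and `start_container` function are hypothetical names, and the `subreaper` flag stands in for the Linux "child subreaper" mechanism, under the assumption that an orphaned process is adopted by its nearest living subreaper ancestor, or by init otherwise.

```python
class Proc:
    """A toy process node; it models parentage only, not a real OS process."""

    def __init__(self, name, parent=None, subreaper=False):
        self.name = name
        self.parent = parent
        self.subreaper = subreaper  # stands in for a child-subreaper flag
        self.children = []
        self.alive = True
        if parent is not None:
            parent.children.append(self)

    def spawn(self, name, subreaper=False):
        return Proc(name, parent=self, subreaper=subreaper)

    def exit(self):
        """Exit and hand orphans to the nearest living subreaper, else to init."""
        self.alive = False
        heir = self.parent
        while heir.parent is not None and not (heir.alive and heir.subreaper):
            heir = heir.parent
        for child in self.children:
            child.parent = heir
            heir.children.append(child)
        self.children = []
        self.parent.children.remove(self)


def start_container():
    init = Proc("init")
    containerd = init.spawn("containerd")
    shim = containerd.spawn("containerd-shim", subreaper=True)
    runc = shim.spawn("runc")
    container = runc.spawn("container (PID 1 inside)")
    runc.exit()        # runc exits once the container has been started
    containerd.exit()  # simulate containerd crashing or being restarted
    return init, shim, container


init, shim, container = start_container()
print(container.parent.name, "|", shim.parent.name, "|", container.alive)
```

In this model the container ends up parented to the shim, and it survives the exit of containerd because containerd is no longer anywhere in its ancestry.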

Docker moved container operations into containerd because it was building Swarm at the time and wanted to enter the PaaS market. This architectural split let the Docker Daemon take charge of upper-layer packaging and orchestration. As we know, Swarm lost badly to Kubernetes, and Docker Inc. later donated the containerd project to the CNCF. The result is the Docker architecture we have today.

CRI

We know that Kubernetes provides a CRI container runtime interface, so what exactly is this CRI? This is actually closely related to the development of Docker.

In the early days of Kubernetes, Docker was extremely popular, so Kubernetes naturally supported Docker first and called the Docker API directly through hard-coded integration. To support more, and leaner, container runtimes, Google and Red Hat led the introduction of the CRI standard, which decouples the Kubernetes platform from any specific container runtime (mainly to kill off Docker, of course).

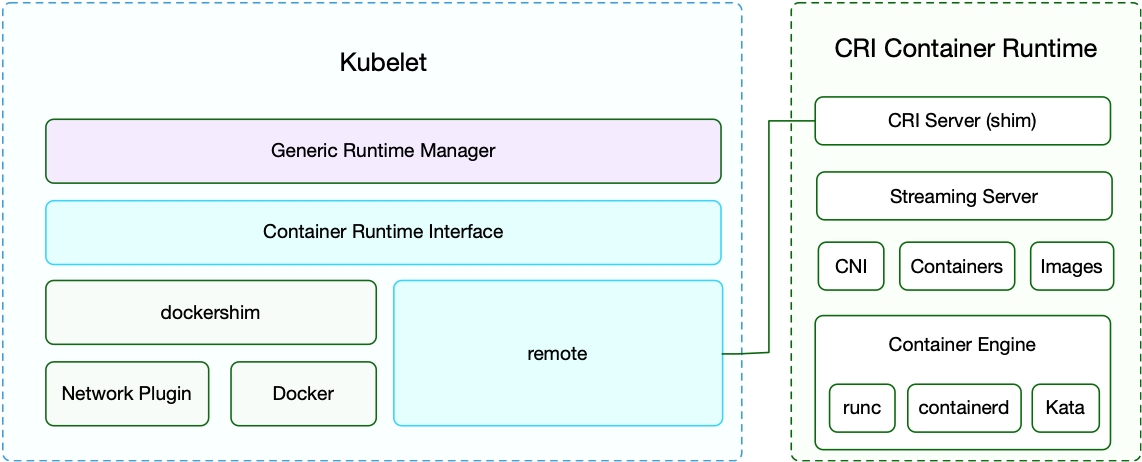

The CRI (Container Runtime Interface) is essentially a set of interfaces defined by Kubernetes for interacting with a container runtime, so any container runtime that implements these interfaces can plug into the Kubernetes platform. However, when Kubernetes introduced CRI it was not as dominant as it is now, so some container runtimes did not implement the CRI interface themselves. That is where a shim comes in: a shim is an adapter that maps a container runtime’s own interface onto the Kubernetes CRI interface. dockershim is the shim Kubernetes uses to adapt Docker to CRI.

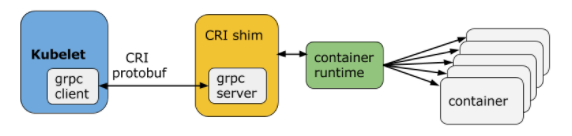

Kubelet communicates with the container runtime or shim through a gRPC framework, where kubelet acts as the client and CRI shim (and possibly the container runtime itself) acts as the server.

The CRI-defined API (https://github.com/kubernetes/kubernetes/blob/release-1.5/pkg/kubelet/api/v1alpha1/runtime/api.proto) consists mainly of two gRPC services, ImageService and RuntimeService. ImageService handles image operations such as pulling, inspecting, and deleting images. RuntimeService manages the lifecycle of Pods and containers and handles calls that interact with containers (exec/attach/port-forward). The sockets for these two services can be configured with the kubelet flags --container-runtime-endpoint and --image-service-endpoint.
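To make the split between the two services more concrete, here is a minimal sketch in Python. It is not the real gRPC API: the method names only mirror a small subset of CRI calls (PullImage, ListImages, RemoveImage, RunPodSandbox, CreateContainer, StartContainer, StopContainer), and `FakeRuntime` and `sync_pod` are hypothetical names for an in-memory stand-in and a kubelet-like caller.

```python
from abc import ABC, abstractmethod
from typing import List


class ImageService(ABC):
    """Image-side calls: pull, list, remove."""

    @abstractmethod
    def pull_image(self, ref: str) -> str: ...

    @abstractmethod
    def list_images(self) -> List[str]: ...

    @abstractmethod
    def remove_image(self, ref: str) -> None: ...


class RuntimeService(ABC):
    """Pod sandbox and container lifecycle calls."""

    @abstractmethod
    def run_pod_sandbox(self, pod_name: str) -> str: ...

    @abstractmethod
    def create_container(self, sandbox_id: str, name: str, image: str) -> str: ...

    @abstractmethod
    def start_container(self, container_id: str) -> None: ...

    @abstractmethod
    def stop_container(self, container_id: str) -> None: ...


class FakeRuntime(ImageService, RuntimeService):
    """Hypothetical in-memory runtime; a real one would serve these over gRPC."""

    def __init__(self):
        self.images = set()
        self.sandboxes = {}
        self.containers = {}

    def pull_image(self, ref):
        self.images.add(ref)
        return ref

    def list_images(self):
        return sorted(self.images)

    def remove_image(self, ref):
        self.images.discard(ref)

    def run_pod_sandbox(self, pod_name):
        sandbox_id = f"sandbox-{len(self.sandboxes) + 1}"
        self.sandboxes[sandbox_id] = pod_name
        return sandbox_id

    def create_container(self, sandbox_id, name, image):
        if sandbox_id not in self.sandboxes or image not in self.images:
            raise LookupError("sandbox or image is missing")
        container_id = f"container-{len(self.containers) + 1}"
        self.containers[container_id] = {"name": name, "state": "CREATED"}
        return container_id

    def start_container(self, container_id):
        self.containers[container_id]["state"] = "RUNNING"

    def stop_container(self, container_id):
        self.containers[container_id]["state"] = "EXITED"


def sync_pod(runtime, pod_name, image):
    """What a kubelet-like client roughly does for a one-container Pod."""
    runtime.pull_image(image)
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    container_id = runtime.create_container(sandbox_id, pod_name, image)
    runtime.start_container(container_id)
    return container_id
```

The caller only depends on the two interfaces, which is the point of CRI: any runtime that serves them can be swapped in without changing the kubelet side.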

There is one exception, however: Docker. Because Docker held such a dominant position, Kubernetes built dockershim directly into the kubelet, so if you use Docker as the container runtime you do not need to install or configure a separate adapter. Of course, this move also seems to have lulled Docker Inc. into complacency.

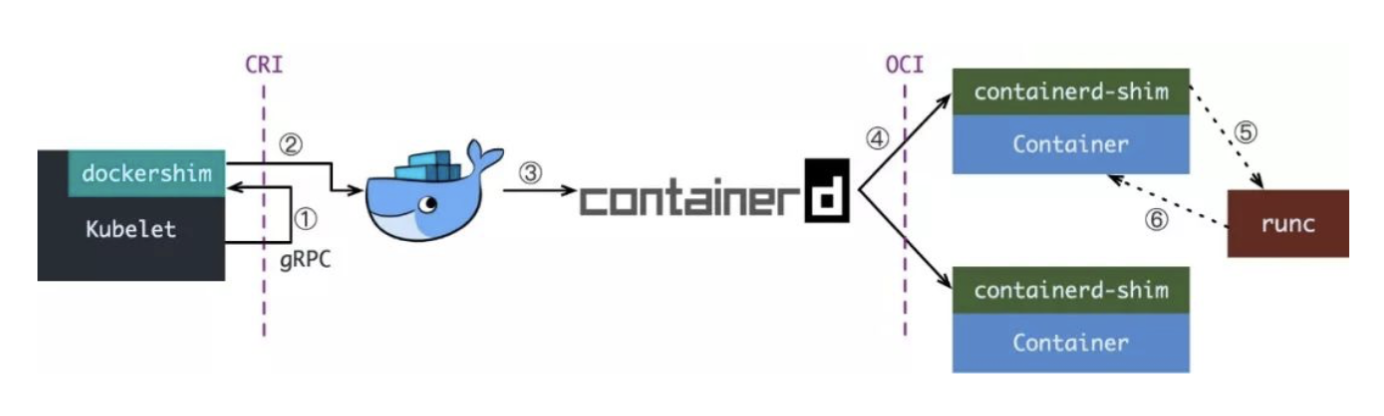

If we are using Docker and create a Pod in Kubernetes, the first thing that happens is that the kubelet calls dockershim through the CRI interface to request the creation of a container. Here the kubelet can be seen as a simple CRI client and dockershim as the server that receives the request, although both are built into the kubelet.

After dockershim receives the request, it converts it into a request the Docker Daemon understands and sends it on. From there the normal Docker container creation flow takes over: the Docker Daemon calls containerd, which creates a containerd-shim process, which in turn calls runc to actually create the container.

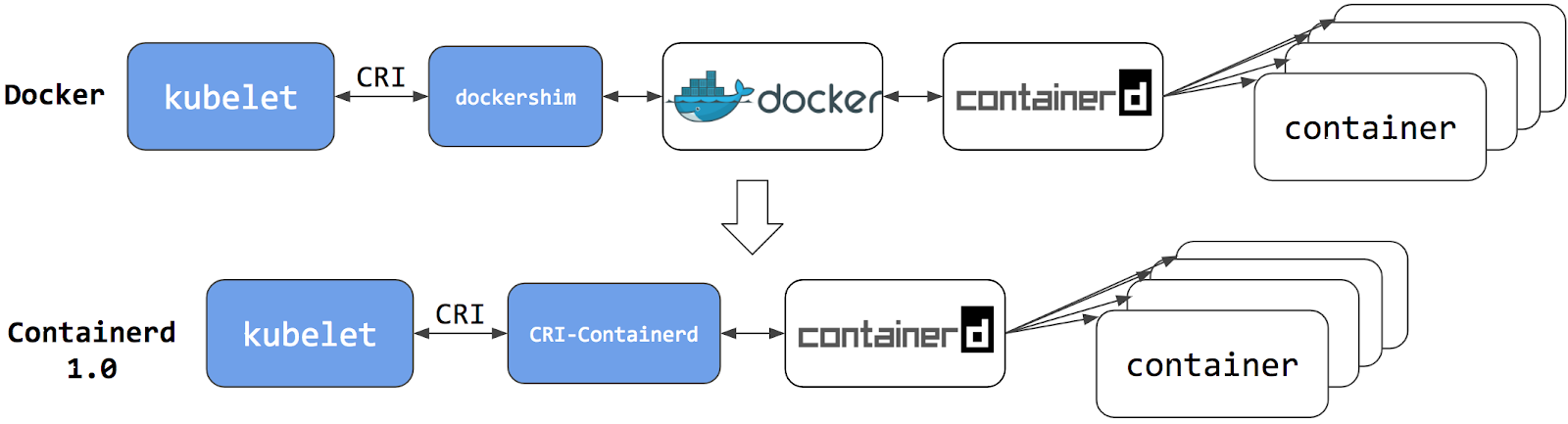

Looking closely, it is easy to see that going through Docker makes for a long call chain, even though containerd alone is entirely sufficient for the actual container operations. Docker is complex and heavy by comparison. A large part of Docker’s popularity comes from its many user-friendly features, but Kubernetes does not need them because it operates containers through an interface. So it is natural to switch the container runtime to containerd.

Switching to containerd cuts out the middleman, and the experience is the same as before. However, because containers are now scheduled directly by the container runtime, they are not visible to Docker, so the Docker tools you used to inspect these containers no longer work.

You can no longer use the docker ps or docker inspect commands to get container information. Since you can’t list containers, you also can’t get logs, stop containers, or even execute commands in containers with docker exec.

Of course, we can still pull images or build them with the docker build command, but images built or pulled with Docker are not visible to the container runtime or to Kubernetes. To use such an image in Kubernetes, it has to be pushed to an image registry.

As the figure above shows, in containerd 1.0 the CRI adaptation was done by a separate CRI-Containerd process. At the beginning containerd also had to work with other systems (such as Swarm), so it did not implement CRI directly and left that job to the CRI-Containerd shim.

From containerd 1.1 onward, the CRI-Containerd shim was removed and the adaptation logic was integrated directly into the main containerd process as a plugin, which makes the call chain much cleaner.
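The three call chains discussed so far can be lined up side by side. The sketch below simply encodes the hops as described in the text and counts them. Note that dockershim is listed as its own hop even though it is compiled into the kubelet, because it is still a separate logical step. `CALL_CHAINS` and `hops` are illustrative names.

```python
# Each list is the path a "create container" request travels, per the text.
CALL_CHAINS = {
    "dockershim": [
        "kubelet", "dockershim", "Docker Daemon",
        "containerd", "containerd-shim", "runc",
    ],
    "containerd 1.0": [
        "kubelet", "CRI-Containerd", "containerd", "containerd-shim", "runc",
    ],
    "containerd 1.1+": [
        "kubelet", "containerd (CRI plugin)", "containerd-shim", "runc",
    ],
}


def hops(chain):
    """Number of hand-offs between the kubelet and runc."""
    return len(chain) - 1


for name, chain in CALL_CHAINS.items():
    print(f"{name:16} {hops(chain)} hops: " + " -> ".join(chain))
```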

At the same time the Kubernetes community has made a CRI runtime CRI-O specifically for Kubernetes that is directly compatible with the CRI and OCI specifications.

This scheme and containerd’s scheme are obviously much simpler than the default dockershim, but since most users are more used to using Docker, people still prefer to use the dockershim scheme.

However, as CRI evolved and other container runtimes improved their CRI support, the Kubernetes community began the process of removing dockershim in July 2020 (see https://github.com/kubernetes/enhancements/tree/master/keps/sig-node/2221-remove-dockershim). The current plan is to separate the built-in dockershim code from the kubelet, with the goal of releasing 1.23/1.24 without dockershim (the code is still there, but to support Docker out of the box you need to build it yourself), and then to remove the built-in dockershim code from the kubelet after a grace period.

So does this mean that Kubernetes no longer supports Docker? Of course not. Only the built-in dockershim is being deprecated. Docker will be treated like any other container runtime, with no special built-in support. What if we still want to use Docker as the container runtime? One option is to extract the dockershim code and maintain it as a separate cri-dockerd, similar to the CRI-Containerd shim that containerd 1.0 provided. Another is for the Docker community to implement the CRI interface inside dockerd itself.

But we also know that dockerd calls containerd directly, and containerd has had a built-in CRI implementation since version 1.1, so there is little need for Docker to implement CRI separately. Once Kubernetes no longer supports Docker out of the box, the best approach is to use containerd directly as the container runtime. containerd has also been proven in production environments, so let’s learn how to use it.

Containerd

We know that containerd was part of Docker Engine for a long time. It has since been split out of Docker Engine as an independent open source project whose goal is to provide a more open and stable container runtime infrastructure. The standalone containerd has more features and covers all the needs of container runtime management.

containerd is an industry standard container runtime that emphasizes simplicity, robustness and portability. containerd can be responsible for doing the following.

- Managing the container lifecycle (from container creation to container destruction)

- Pulling/pushing container images

- Storage management (managing the storage of images and container data)

- Calling runc to run containers (interacting with container runtimes such as runc)

- Managing container network interfaces and networks

Architecture

containerd can be used as a daemon for Linux and Windows, managing the complete container lifecycle of its host system, from image transfer and storage to container execution and monitoring, to underlying storage to network attachments and more.

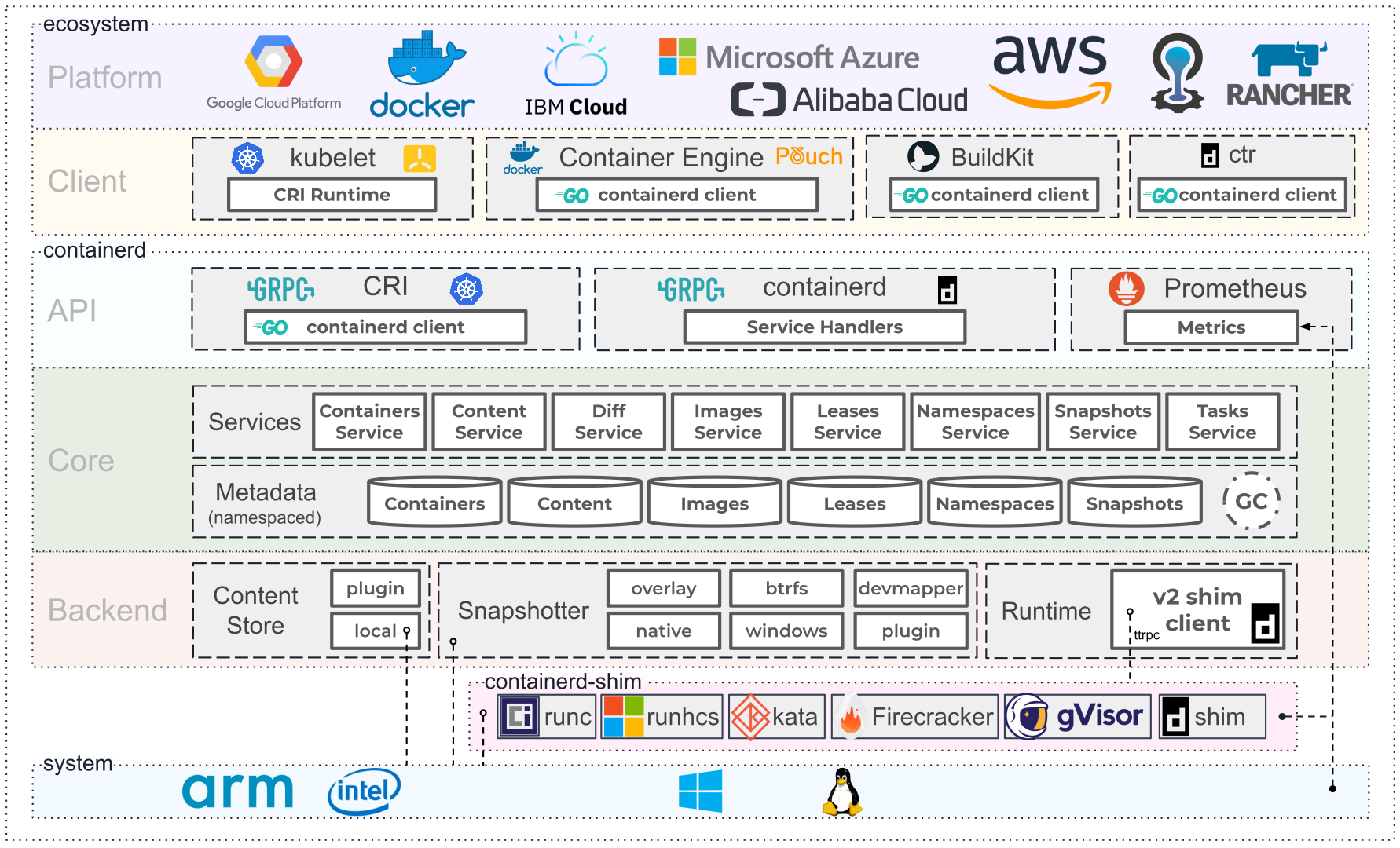

The diagram above is the official containerd architecture. containerd also uses a client/server (C/S) architecture: the server exposes a low-level gRPC API over a Unix domain socket, and clients use this API to manage the containers on the node. Each containerd instance is responsible for only one machine. Pulling images, operating on containers (start, stop, and so on), networking, and storage are all handled by containerd, while actually running the containers is the job of runc. In fact, any runtime that conforms to the OCI specification can be supported.

To keep things decoupled, containerd divides the system into components, each implemented by one or more modules working together (the Core part). Each type of module is integrated into containerd as a plugin, and plugins depend on one another. In the figure above, each long dashed box represents a type of plugin, and each small box inside it represents a specific plugin of that type. For example, the Metadata Plugin relies on the Containers Plugin, the Content Plugin, and others. Two examples:

- Content Plugin: Provides access to the addressable content in an image. All immutable content is stored here.

- Snapshot Plugin: Manages filesystem snapshots of container images. Each image layer is unpacked into a filesystem snapshot, similar to the graphdriver in Docker.

Overall containerd can be divided into three big blocks: Storage, Metadata and Runtime.

Installation

Here I am using Linux Mint 20.2 and first need to install the seccomp dependency.

Since containerd needs to call runc, we would also need to install runc first. However, containerd provides a tarball that bundles the relevant dependencies, cri-containerd-cni-${VERSION}-${OS}-${ARCH}.tar.gz, which can be used directly for installation. First download the latest version of the archive from the release page, which is currently 1.5.5.

You can see directly which files are contained in the tarball with the -t option.


Extract the archive directly into the corresponding system directories.


Of course, remember to append /usr/local/bin and /usr/local/sbin to the PATH environment variable in the ~/.bashrc file.


Then reload the file (for example with source ~/.bashrc) so that the change takes effect immediately.


The default configuration file for containerd is /etc/containerd/config.toml. We can generate a default configuration by running containerd config default and redirecting its output to that file.

Since the containerd archive we downloaded above contains an etc/systemd/system/containerd.service file, we can configure containerd to run as a daemon via systemd, as follows.


There are two important parameters here.

- Delegate: This option allows containerd and the runtime to manage the cgroups of the containers they create. If this option is not set, systemd will move the processes into its own cgroups, and containerd will not be able to get the containers’ resource usage correctly.

- KillMode: This option controls how containerd’s processes are killed. By default, systemd looks in the process’s cgroup and kills all of containerd’s child processes. The KillMode field accepts the following values:

  - control-group (default): All child processes in the current control group are killed.
  - process: Only the main process is killed.
  - mixed: The main process receives a SIGTERM signal and the child processes receive a SIGKILL signal.
  - none: No process is killed; only the service’s stop command is executed.

We need to set the KillMode value to process to ensure that upgrading or restarting containerd does not kill the existing containers.
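As a quick sanity check, the two settings can be verified programmatically. The sketch below is a minimal example that parses a unit file with Python’s `configparser`. The `UNIT_TEXT` fragment is an abbreviated, illustrative unit and not the exact file shipped in the archive, and `check_unit` is a hypothetical helper name. Real unit files may repeat keys, which this simple parser setup does not handle.

```python
import configparser

# Abbreviated, illustrative unit file; the shipped containerd.service has more keys.
UNIT_TEXT = """\
[Unit]
Description=containerd container runtime

[Service]
ExecStart=/usr/local/bin/containerd
Delegate=yes
KillMode=process
Restart=always
"""


def check_unit(text):
    """Return a list of problems with the Delegate and KillMode settings."""
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    parser.optionxform = str  # keep systemd's case-sensitive key names
    parser.read_string(text)
    service = parser["Service"]

    problems = []
    delegate = service.get("Delegate", fallback="no").strip().lower()
    if delegate not in {"yes", "true", "on", "1"}:
        problems.append("Delegate is not enabled")
    # systemd falls back to control-group when KillMode is absent.
    kill_mode = service.get("KillMode", fallback="control-group").strip()
    if kill_mode != "process":
        problems.append(f"KillMode is {kill_mode}, expected process")
    return problems


print(check_unit(UNIT_TEXT) or "unit looks fine")
```

To check an installed unit, the same function could be fed the contents of the containerd.service file instead of the inline string.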

Now we can start containerd, just execute the following command.


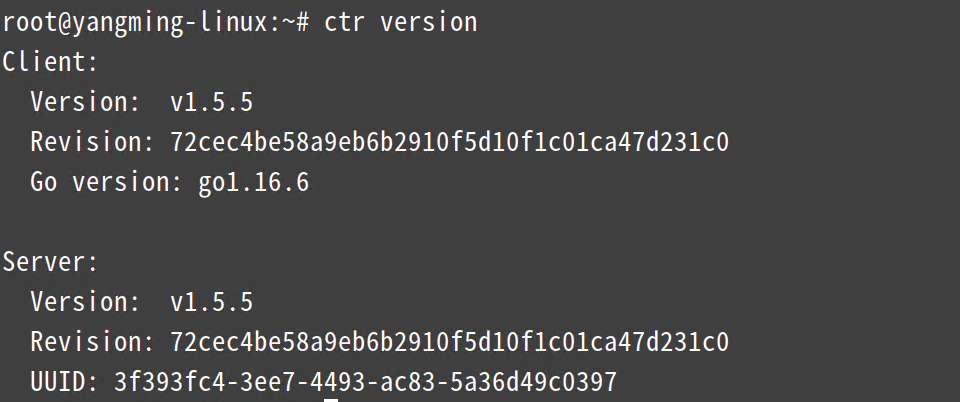

Once containerd is running, you can use its native CLI tool ctr, for example ctr version to view the version.

Configuration

Let’s first look at the configuration file /etc/containerd/config.toml generated by default above.


This configuration file is rather complicated, so we can focus on the plugins section. Looking carefully, each top-level configuration block is named in the form plugins."io.containerd.xxx.vx.xxx". Each of these blocks corresponds to one plugin: io.containerd.xxx.vx indicates the type of the plugin, and the xxx after vx is the ID of the plugin. We can view the list of plugins with ctr plugins ls.
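The naming rule is easy to express in code. Here is a minimal sketch that splits such a key into its type and ID, under the assumption that the plugin ID itself contains no dots (true of the examples used here). `split_plugin_key` is a hypothetical helper, not part of any containerd tooling.

```python
def split_plugin_key(key):
    """Split 'io.containerd.grpc.v1.cri' into its plugin type and plugin ID."""
    plugin_type, _, plugin_id = key.rpartition(".")
    if not plugin_type or not plugin_id:
        raise ValueError(f"not a plugin key: {key!r}")
    return plugin_type, plugin_id


for key in (
    "io.containerd.grpc.v1.cri",
    "io.containerd.snapshotter.v1.overlayfs",
    "io.containerd.runtime.v2.task",
):
    plugin_type, plugin_id = split_plugin_key(key)
    print(f"type={plugin_type:32} id={plugin_id}")
```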


The sub-blocks under each top-level block hold the plugin’s settings. For example, the cri plugin has sections for containerd, cni, and registry, and under containerd you can configure the available runtimes as well as the default runtime. For example, to configure a mirror (accelerator) for an image registry, add registry.mirrors under the registry block of the cri plugin.


- registry.mirrors."xxx": The image registry for which a mirror is being configured, e.g. registry.mirrors."docker.io" configures a mirror for docker.io.

- endpoint: The mirror service(s) to use, e.g. we can register an Aliyun image acceleration service as the mirror for docker.io.
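To show how these two keys fit together, the sketch below generates the corresponding TOML block. It assumes the containerd 1.5-style layout in which the cri plugin is configured under plugins."io.containerd.grpc.v1.cri". The endpoint URL is a placeholder rather than a real mirror, and `mirror_block` is a hypothetical helper.

```python
import json

# Assumed location of the cri plugin in a version 2 config file.
CRI_PLUGIN = 'plugins."io.containerd.grpc.v1.cri"'


def mirror_block(registry, endpoints):
    """Render a registry.mirrors block for the given registry host."""
    header = f'[{CRI_PLUGIN}.registry.mirrors."{registry}"]'
    # json.dumps yields a double-quoted string, which is valid TOML for plain URLs.
    endpoint_list = ", ".join(json.dumps(endpoint) for endpoint in endpoints)
    return f"{header}\n  endpoint = [{endpoint_list}]\n"


# "https://mirror.example.com" is a placeholder; substitute your own mirror URL.
print(mirror_block("docker.io", ["https://mirror.example.com"]))
```

The printed block can be pasted under the registry section of /etc/containerd/config.toml, after which containerd has to be restarted to pick it up.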

The default configuration also contains two storage-related paths, root and state.

root stores persistent data, including Snapshots, Content, Metadata, and the data of various plugins. Each plugin has its own directory. containerd itself does not store any data; all of its functionality comes from the plugins it loads.

state stores temporary runtime data, including sockets, PIDs, mount points, runtime state, and plugin data that does not need to be persisted.

Using

We know that the Docker CLI offers many features that improve the user experience. containerd provides a corresponding CLI tool, ctr. It is not as complete as docker, but the basic image and container features are there. Let’s start with a brief introduction to ctr.

Help

Running ctr without arguments prints the usage of all available commands.


Image operations

Pull an image

An image can be pulled with ctr image pull. For example, when pulling the official nginx:alpine image from Docker Hub, note that the image reference must be fully qualified and include the docker.io host, i.e. docker.io/library/nginx:alpine.
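Since ctr does not expand short names the way docker does, it can help to see what that expansion looks like. The sketch below follows the familiar Docker convention (default host docker.io, default repository prefix library/, default tag latest). It is a simplified illustration that ignores digest references (name@sha256:...), and `normalize_image_ref` is a hypothetical helper.

```python
def normalize_image_ref(ref):
    """Expand a Docker-style short name into a fully qualified reference."""
    name, tag = ref, "latest"
    # A ':' after the last '/' separates the tag; one before it is a port.
    if ref.rfind(":") > ref.rfind("/"):
        name, _, tag = ref.rpartition(":")

    first, sep, rest = name.partition("/")
    # The first path component is a host only if it looks like one.
    has_host = bool(sep) and ("." in first or ":" in first or first == "localhost")
    if has_host:
        host, path = first, rest
    else:
        host, path = "docker.io", name
        if "/" not in path:
            path = "library/" + path  # official images live under library/
    return f"{host}/{path}:{tag}"


for short in ("nginx:alpine", "nginx", "someuser/app:1.0"):
    print(f"{short:18} -> {normalize_image_ref(short)}")
```

The right-hand side of each printed line is the form that ctr image pull expects.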


You can also use the --platform option to pull the image for a specific platform. There is also ctr image push for pushing images. For a private registry, use --user to pass the username and password when pushing.

List local images


Use the -q (--quiet) option to print only the image names.

Check local images

The main thing to look for is STATUS, where complete indicates that the image is in a fully available state.

Re-tagging

Similarly, we can give a specified image a new tag.

Delete an image

Images that are no longer needed can be deleted with ctr image rm.

Adding the --sync option removes the image and all associated resources synchronously.

Mount the image to the host directory


Unmount the image from the host directory

Export the image as a tar archive


Import an image from a tar archive


Container operations

The container-related subcommands are listed by ctr container.

Create container


List containers

The list can also be streamlined by adding the -q option.

View detailed container configuration

Similar to the docker inspect function.


Delete container

In addition to the rm subcommand, you can also use delete or del to delete containers.

Task

The container we created above with the container create command is not in a running state, it is just a static container. A container object only contains the resources and related configuration data needed to run a container, which means that namespaces, rootfs and container configuration have been initialized successfully, but the user process has not been started yet.

A container actually runs through a Task. A Task can set up the NIC for the container and can also be configured with tools to monitor it.

The task-related subcommands are listed by ctr task. Below we start the container through a Task.

After starting the container, you can view the running containers with task ls.

Likewise, you can use the exec command to enter the container.

Note, however, that you must specify the --exec-id parameter. Its value can be anything you like, as long as it is unique.

Pause the container, similar to docker pause.


After the pause, the container status becomes PAUSED.

The container can also be restored using the resume command.

However, it should be noted that ctr does not have the ability to stop containers, it can only pause or kill them. To kill a container, you can use the task kill command:

After killing the container, you can see that its status has changed to STOPPED. You can also delete the task with the task rm command.
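The container/task lifecycle walked through above can be summarized as a small state machine. The sketch below is a simplified model: a container starts with no task at all, and the action names loosely follow the ctr task subcommands mentioned in the text (start, pause, resume, kill, rm). The `Container` class and `TRANSITIONS` table are illustrative, and real tasks have more states than shown here.

```python
# (current task status, action) -> next task status; None means "no task".
TRANSITIONS = {
    (None, "start"): "RUNNING",      # task start: create and run the user process
    ("RUNNING", "pause"): "PAUSED",  # task pause
    ("PAUSED", "resume"): "RUNNING", # task resume
    ("RUNNING", "kill"): "STOPPED",  # task kill
    ("STOPPED", "rm"): None,         # task rm: delete the task, keep the container
}


class Container:
    """A container is static configuration; its task is the running part."""

    def __init__(self, name, image):
        self.name = name
        self.image = image
        self.task_status = None  # created, but no user process yet

    def apply(self, action):
        key = (self.task_status, action)
        if key not in TRANSITIONS:
            raise RuntimeError(f"cannot {action} a task in state {self.task_status}")
        self.task_status = TRANSITIONS[key]
        return self.task_status


nginx = Container("nginx", "docker.io/library/nginx:alpine")
for action in ("start", "pause", "resume", "kill", "rm"):
    print(f"{action:7} -> {nginx.apply(action)}")
```

Note that the container object outlives its task: after rm the model is back to a static container with no task, which can be started again.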

In addition, we can use the task metrics command to get information from the container’s cgroup, such as the container’s memory, CPU, and PID limits and usage.


You can also use the task ps command to view the PIDs of all processes in the container on the host.

The first of these PIDs, 3984, is process 1 inside our container.

Namespace

containerd also supports the concept of namespaces, and ctr can list the existing ones.

If no namespace is specified, ctr uses the default namespace. A namespace can be created with the ns create command.

A namespace can be deleted with remove or rm.

With namespaces in place, you can specify one when operating on resources. For example, to view the images in the test namespace, add the -n test option to the command.

We know that Docker calls containerd under the hood. The containerd namespace that Docker uses is moby rather than default, so if we start a container with docker, we can use ctr -n moby to find that container.


Also the default namespace for containerd used under Kubernetes is k8s.io, so we can use ctr -n k8s.io to see the containers created under Kubernetes. We’ll cover how to switch the container runtime of a Kubernetes cluster to containerd later.
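The effect of namespaces can be illustrated with a toy metadata store in which every image record is keyed by namespace. This is only a model of the behavior described above, not containerd’s actual storage layer, and `MetadataStore` is a hypothetical name. The namespace names default, moby, and k8s.io come from the text.

```python
class MetadataStore:
    """Toy store: image records are only visible within their own namespace."""

    def __init__(self):
        self._images = {}

    def pull(self, ref, namespace="default"):
        self._images.setdefault(namespace, set()).add(ref)

    def images(self, namespace="default"):
        return sorted(self._images.get(namespace, set()))

    def namespaces(self):
        return sorted(self._images)


store = MetadataStore()
store.pull("docker.io/library/nginx:alpine")                      # like plain ctr
store.pull("docker.io/library/redis:latest", namespace="moby")    # like Docker
store.pull("docker.io/library/busybox:latest", namespace="k8s.io")  # like the kubelet

print(store.namespaces())
print(store.images())          # only what was pulled into "default"
print(store.images("k8s.io"))  # the equivalent of passing -n k8s.io
```

This is why an image pulled with plain ctr does not show up for Kubernetes unless it is pulled with -n k8s.io.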