Background

In the existing network environment, some businesses using Redis clusters often need to perform node expansion operations as their business volume rises.

I have previously learned that after some operations and maintenance students expanded Redis clusters with a large number of nodes, the business side reported a decrease in cluster performance, as evidenced by a significant increase in access latency.

Some businesses are sensitive to Redis cluster access latency, such as the existing network environment for real-time reading of models, or some businesses rely on synchronization processes for reading Redis clusters, which can affect the real-time process latency of the business. This may not be acceptable on the business side.

To find the root cause of this problem, we troubleshoot the cluster performance degradation after a particular Redis cluster migration operation.

Problem Description

The scenario for this specific Redis cluster problem is that a particular Redis cluster has undergone a scaling operation. The business side uses Hiredis-vip for Redis cluster access and performs MGET operations.

The business side perceives that the latency of accessing the Redis cluster becomes high.

Description of the current network environment

- Most of the Redis versions deployed in the current network environment are 3.x or 4.x;

- The business is using the client Hiredis-vip to access the Redis cluster;

- The number of nodes in the Redis cluster is relatively large, with a size of 100+;

- The cluster had a previous expansion operation.

Observe the phenomenon

Because latency becomes high, we troubleshoot in several ways.

- whether the bandwidth is hitting full capacity.

- whether the CPU is over-occupied.

- whether the OPS is high.

By simple monitoring and troubleshooting, the bandwidth load is not high. However, the CPU performance was found to be abnormal.

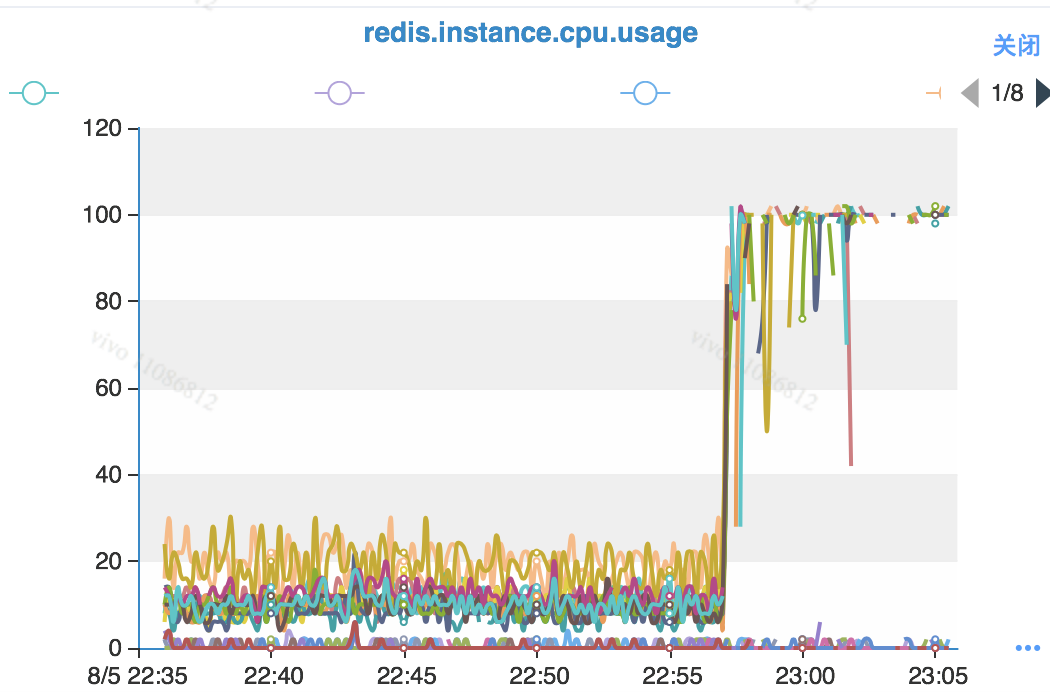

Comparing OPS and CPU load

Observing the MGET and CPU load used by the business feedback, we found the corresponding monitoring curve.

There is no direct correlation between MGET and high CPU load in terms of temporal analysis. The feedback from the business side is a general increase in MGET latency. Here we see that the OPS and CPU load of MGET are staggered.

Here we can temporarily determine that there is no direct relationship between business requests and CPU load for the time being, but it can be seen from the curve: there are staggered business requests and cpu load on the same timeline, and there should be an indirect relationship between the two.

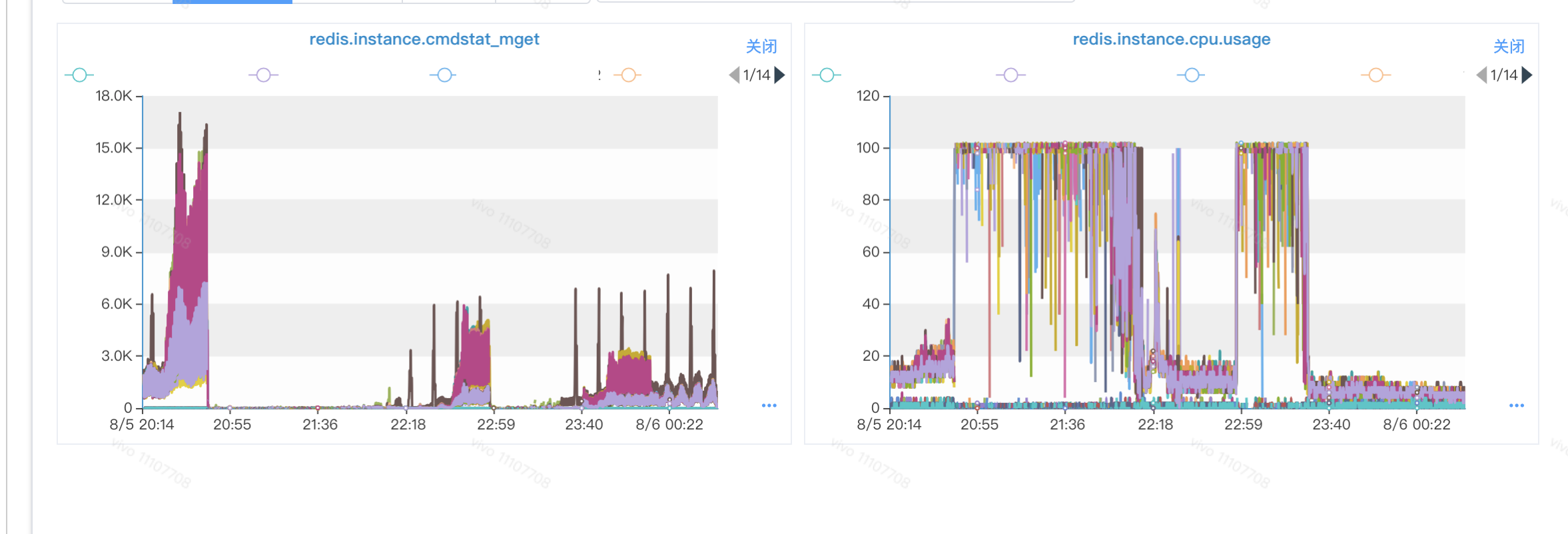

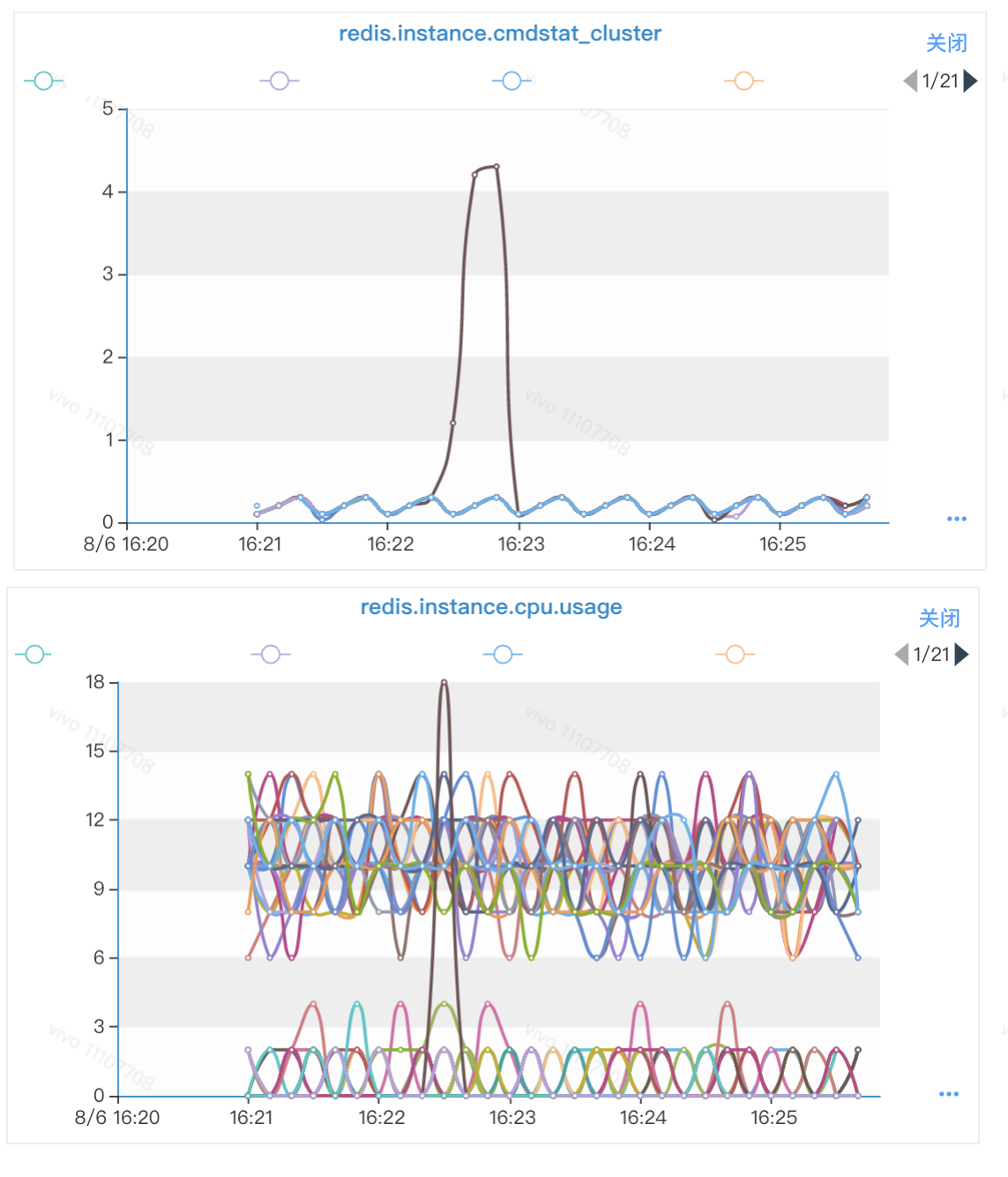

Compare Cluster instruction OPS and CPU load

Since some of my colleagues on the operations side had previously given feedback that the cluster had undergone expansion operations, there was bound to be a migration of slots.

Considering that business clients generally use caches to store slot topology information of Redis clusters, it was suspected that the Cluster directive would have some connection with CPU load.

We found that there was indeed some connection.

Here it is obvious: CPU usage rises significantly when a certain instance is executing Cluster instructions.

Based on the above phenomena, a simple focus can be roughly made on.

- MGET is executed on the business side, and Cluster instruction is executed for some reason.

- Cluster instruction is causing high CPU usage affecting other operations for some reasons.

- Cluster instructions are suspected to be a performance bottleneck.

Also, to elicit a few issues of concern.

Why are there more Cluster instructions being executed?

Why are CPU resources higher when Cluster instructions are executed?

Why are clusters with large node sizes prone to slot migration operations? Why is it easy to get “caught” in cluster migration slots with large node sizes?

Troubleshooting

Redis Hotspot Exclusion

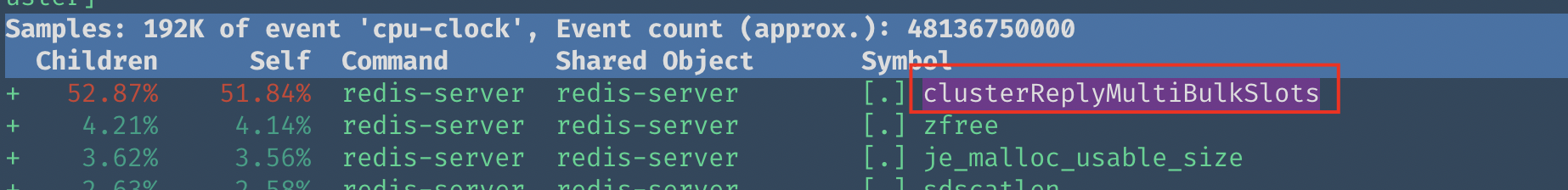

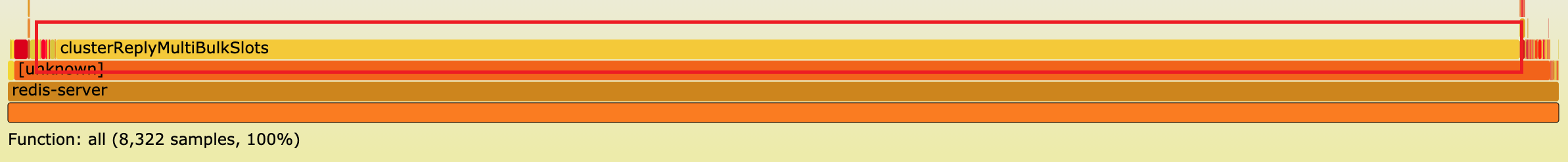

We performed a simple analysis using perf top on a Redis instance that appeared to have a high CPU load in the field.

As you can see from the above graph, the function (ClusterReplyMultiBulkSlots) takes up 51.84% of the CPU resources, which is abnormal.

ClusterReplyMultiBulkSlots implementation principle

We analyze the clusterReplyMultiBulkSlots function.

|

|

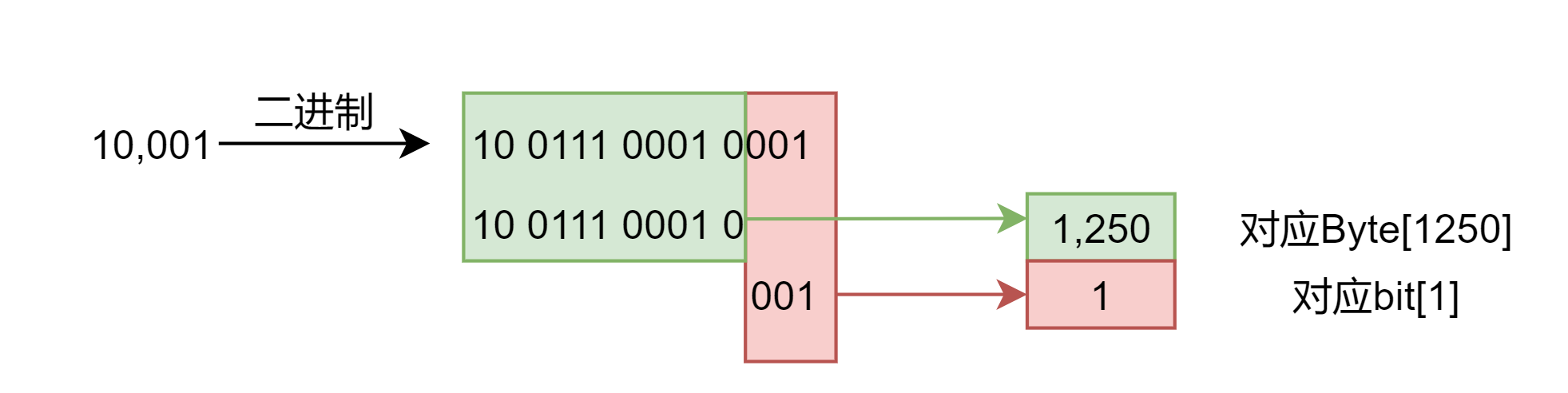

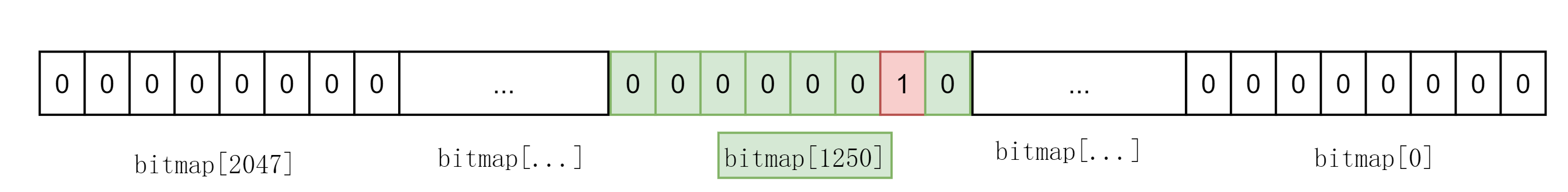

Each node (ClusterNode) uses a bitmap (char slots[CLUSTER_SLOTS/8]) to store information about the allocation of slots.

A brief description of the logic of BitmapTestBit: clusterNode->slots is an array of length CLUSTER_SLOTS/8. CLUSTER_SLOTS is a fixed value 16384. each bit of the array represents a slot. the subscript of the bitmap array here is 0 to 2047, and the range of slots is 0 to 16383. The slot range is 0 to 16383.

Because we have to determine whether the bit at the pos position is a 1 or not, therefore.

- off_t byte = pos/8: Get the information about which byte (Byte) on the bitmap holds this pos position. Since there are 8 bits in a byte, using pos/8 can guide which byte you need to find. Here the bitmap is treated as an array, and here it corresponds to the Byte with the corresponding subscript.

- int bit = pos&7: Get the information of which bit corresponds to the pos position on this byte. You can imagine grouping pos in groups of 8, and the last group (not satisfying 8) corresponds to the subscript position of the bit array on the corresponding byte of the bitmap.

(bitmap[byte] & (1<<bit)): determine whether the corresponding bit exists on the bitmap[byte].

Example with a slot of 10001.

Therefore, the slot 10001 corresponds to the byte with subscript 1250, and the bit to be verified is the subscript 1.

The corresponding position on ClusterNode->slots.

The green square shows bitmap[1250], which is the byte corresponding to slot 10001; the red box (bit[1]) corresponds to the position of 1<<bit. bitmap[byte] & (1<<bit), which is to confirm whether the position corresponding to the red box is 1. If yes, it means that 10001 on the bitmap has been marked. If yes, the bitmap is marked with 10001.

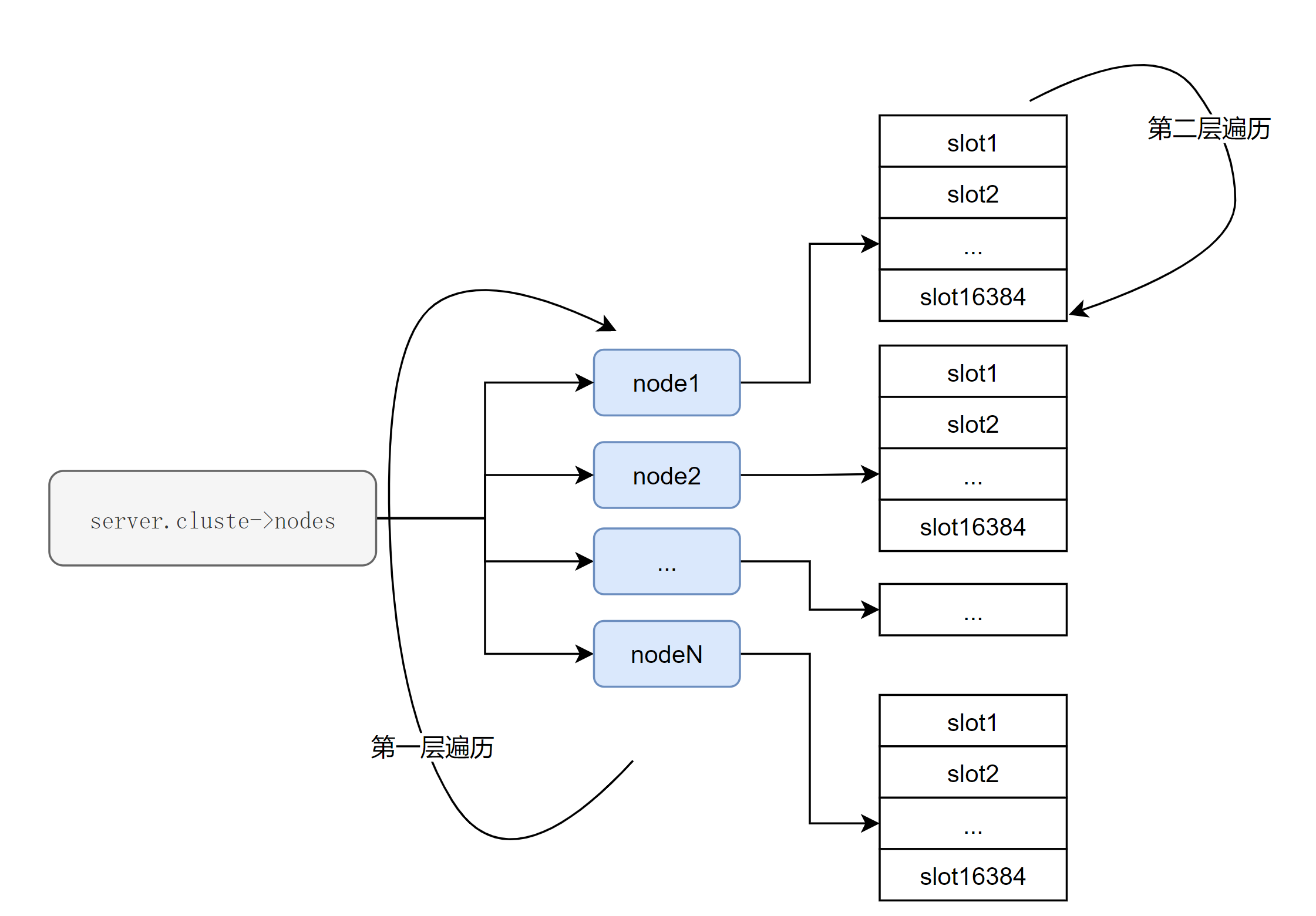

The summary logic of ClusterNodeGetSlotBit is: Determine whether the current slot is allocated on the current node . Therefore, the approximate logic of ClusterReplyMultiBulkSlots is as follows.

The approximate steps are as follows.

- Iterate over each node.

- For each node, traverse all slots and use ClusterNodeGetSlotBit to determine whether the slots in the traversal are assigned to the current node.

From the result of getting CLUSTER SLOTS command, we can see that the complexity is <number of cluster master nodes> * <total number of slots>. The total number of slots is 16384, a fixed value.

Redis Hotspot Exclusion Summary

As of now, the CLUSTER SLOTS instruction latency increases linearly with the number of master nodes in the Redis cluster. The larger number of master nodes in the cluster we are investigating explains the larger latency of the CLUSTER SLOTS instruction in the current network phenomenon.

Client troubleshooting

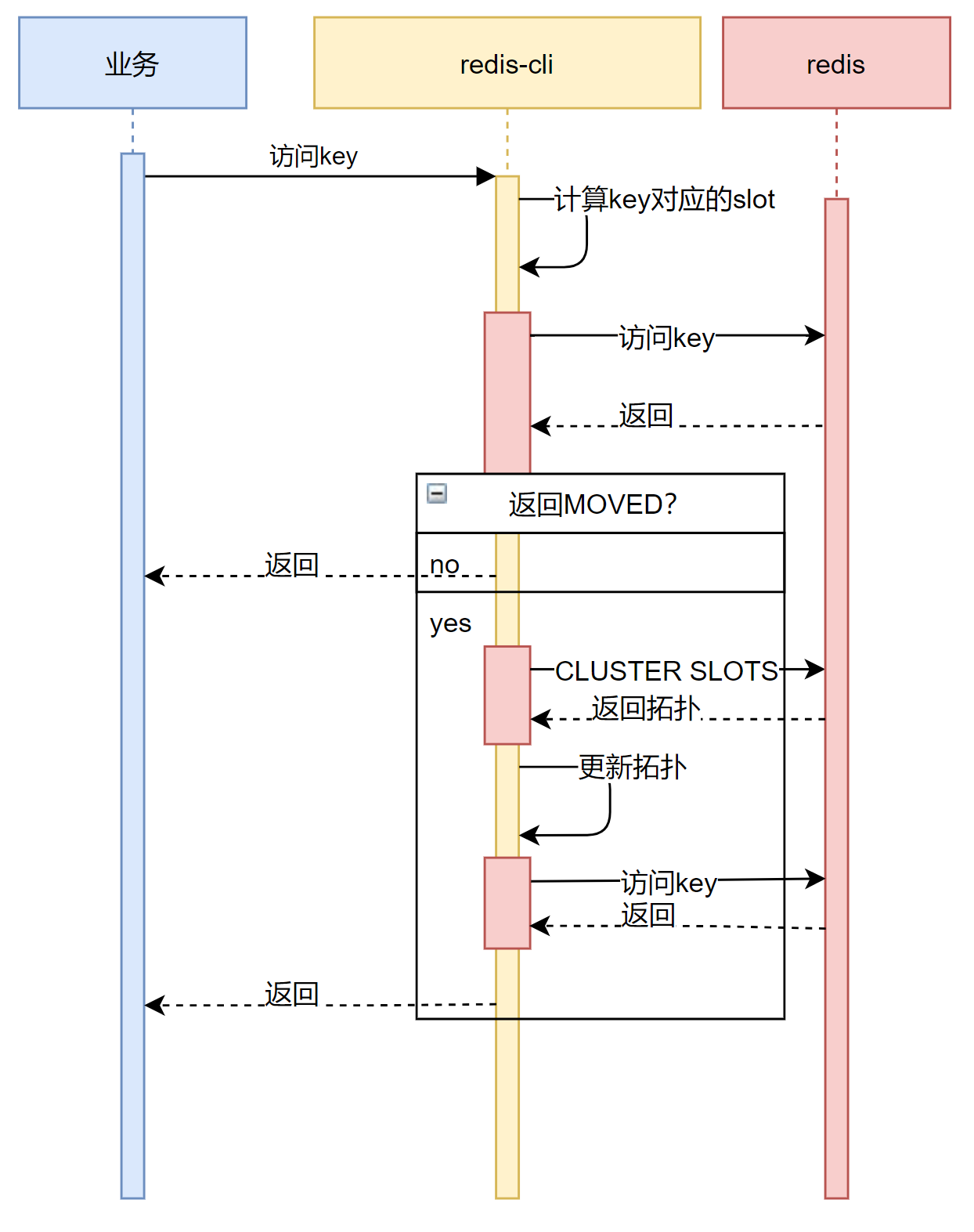

Understand that the operations and maintenance students exist expansion operations, expansion is bound to involve some key in the time of access there is a MOVED error.

The current use of Hiredis-vip client code for a simple browse, a brief analysis of the following current business use of Hiredis-vip client in the event of MOVED how to deal with. As most of the other business commonly used Jedis client, here is also a simple analysis of the corresponding process of the Jedis client.

Hiredis-vip on MOVED processing implementation principle

Hiredis-vip for MOVED operations.

To view the call procedure for Cluster_update_route.

Here the cluster_update_route_by_addr performs a CLUSTER SLOT operation. As you can see, when a MOVED error is obtained, Hiredis-vip re-updates the Redis cluster topology with the following characteristics.

- Because the nodes are hashed the same way through ip:port as the key, multiple clients can easily access the same node at the same time if the cluster topology is similar.

- If a node fails to access, it will find the next node through an iterator. Due to the above, multiple clients can easily access to the next node at the same time.

Jedis on MOVED processing implementation principle

A brief look at the Jedis client code shows that if there is a MOVED error, renewSlotCache will be called.

Looking at the renewSlotCache call, we can confirm that Jedis sends the Redis command CLUSTER SLOTS to re-pull the Redis cluster’s slot topology when it encounters a MOVED error in cluster mode.

Summary of client-side implementation principles

Since Jedis is the Redis client for Java and Hiredis-vip is the Redis client for C++, it can be simply assumed that this exception handling mechanism is a common operation.

The process for combing MOVED in client cluster mode is roughly as follows.

In summary.

-

access to the key using the client-side cached slot topology.

-

Redis node returns normal.

- Access is normal, continue with subsequent operations

-

Redis node returns MOVED.

- Perform CLUSTER SLOTS command execution on the Redis node to update the topology.

- Re-access to the key using the new topology.

Summary of client-side troubleshooting

The Redis cluster is expanding, which means that there are bound to be some Redis clients that are accessing the Redis cluster and encountering MOVED, executing the Redis command CLUSTER SLOTS for topology updates.

If the migration key hit rate is high, the CLUSTER SLOTS instruction will be executed more frequently. This results in the Redis cluster being continuously executed by the client during the migration process with the CLUSTER SLOTS instruction.

Summary of the survey

Here, combined with the Redis-side CLUSTER SLOTS mechanism and the client-side logic for MOVED, several questions can be answered.

Why are there more Cluster instructions being executed?

- Because of the migration operation, the business accessing some migrated keys will get the MOVED return, and the client will re-pull the slot topology information and execute CLUSTER SLOTS on the return.

Why are CPU resources higher when Cluster instructions are executed?

- Analyzing the Redis source code, I found that the time complexity of the CLUSTER SLOT instruction is proportional to the number of master nodes. The current Redis cluster has a high number of master nodes, which naturally consumes a high amount of time and CPU resources.

Why is it easy to have problems with migrating slot operations for clusters with large node sizes?

- The migration operation is bound to bring some clients to access the key when it returns MOVED.

- The client will execute CLUSTER SLOTS command for the return of MOVED.

- CLUSTER SLOTS instruction will increase the latency with the increase of the number of cluster master nodes.

- Business accesses rise during the migration of slots because of the CLUSTER SLOTS latency, which is perceived externally as elevated latency in executing instructions.

Optimization

Analysis of the current situation

According to the current situation, it is a normal process for clients to encounter MOVED for CLUSTER SLOTS execution, because the cluster’s slot topology needs to be updated to improve the efficiency of subsequent cluster access.

This process in addition to Jedis, Hiredis-vip, other clients should also be similar to the slot information cache optimization. There is not much room for optimization of this process, which is determined by the cluster access mechanism of Redis.

Therefore, the cluster information record of Redis is analyzed.

Redis Cluster Metadata Analysis

Each Redis node in the cluster will have some cluster metadata records, recorded in server.cluster, as follows.

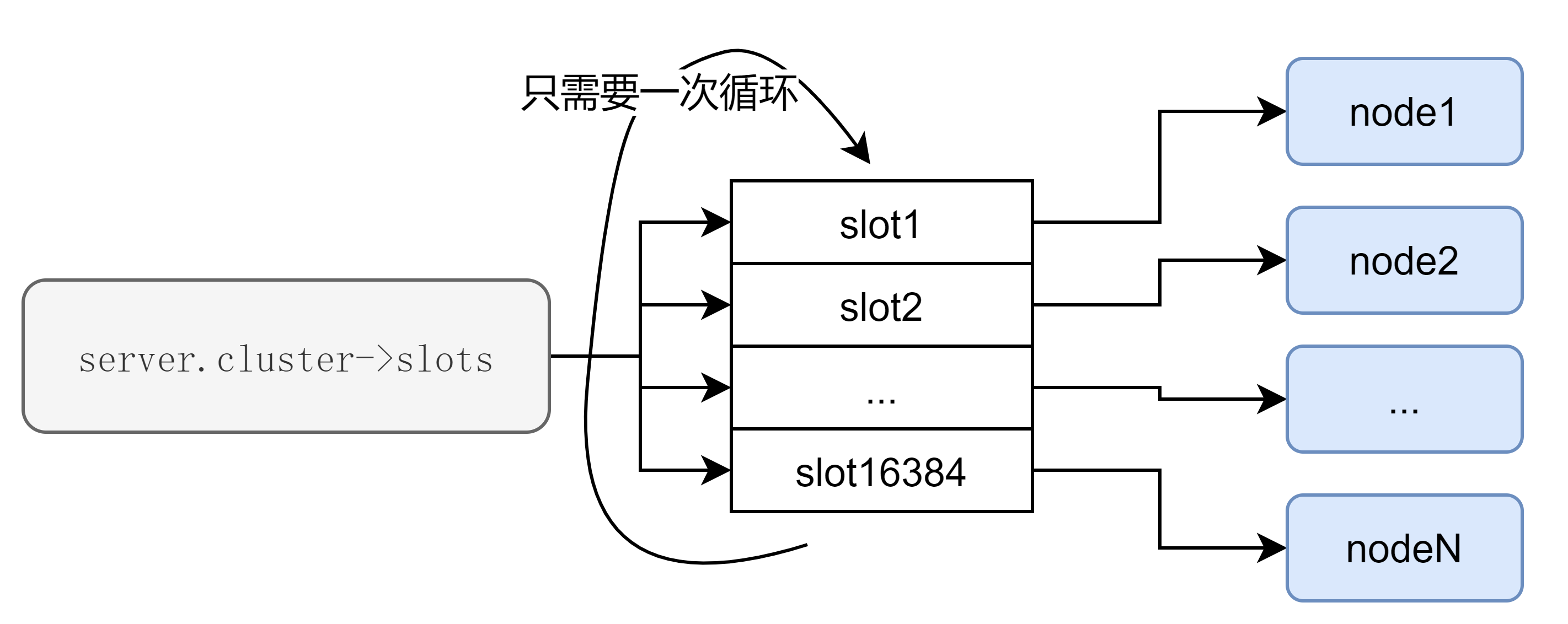

As described in 2.1, the original logic obtains the topology by traversing the slot information of each node.

Redis Cluster Metadata Analysis

Observe the results returned by CLUSTER SLOTS.

Combined with the cluster information stored in server.cluster, I think we can use server.cluster->slots for traversal here. Because server.cluster->slots has been updated at every topology change of the cluster, the node pointers are saved.

Optimization Solutions

A simple optimization idea is as follows.

- Iterate over the slot to find out which nodes in the slot are consecutive blocks.

- If the node of the currently traversed slot is the same as the previously traversed node, it means that the currently visited slot is under the same node as the previous one, i.e., it is in the “contiguous” slot region under a certain node.

- If the node of the currently traversed slot does not match the previously traversed node, it means that the currently visited slot is different from the previous one, and the previous “continuous” slot area can be output; and the current slot is used as the start of the next new “continuous” slot area.

Therefore, it is sufficient to iterate over server.cluster->slots to satisfy the requirement. A simple representation would be as follows.

This reduces the time complexity to <total number of slots>.

Realization

The optimization logic is as follows.

|

|

By traversing server.cluster->slots, we find the “contiguous” slot area under a node, and if it is not contiguous, we output the node information of the previous “contiguous” slot area and its backup nodes, and then continue to find the next “contiguous” slot area in the output. If the node is not contiguous, the node information of the previous “contiguous” slot area and its backup node information are output, and then the next “contiguous” slot area is found and output.

Comparison of optimization results

A side-by-side comparison of the CLUSTER SLOTS directive for both versions of Redis.

Test environment & pressure test scenarios

Operating system: manjaro 20.2

Hardware configuration:

- CPU:AMD Ryzen 7 4800H

- DRAM:DDR4 3200MHz 8G*2

Redis Cluster Information:

-

Persistence Configuration

- Turn off aof

- Turn off bgsave

-

Cluster node information.

- Number of nodes: 100

- All nodes are master nodes

Pressure testing scenarios:

- Using the benchmark tool to continuously send CLUSTER SLOTS commands to individual nodes of the cluster.

- After pressure testing one of the versions, recycle the cluster and redeploy it for the next round of pressure testing.

CPU resource usage comparison

perf export flame map. Original version.

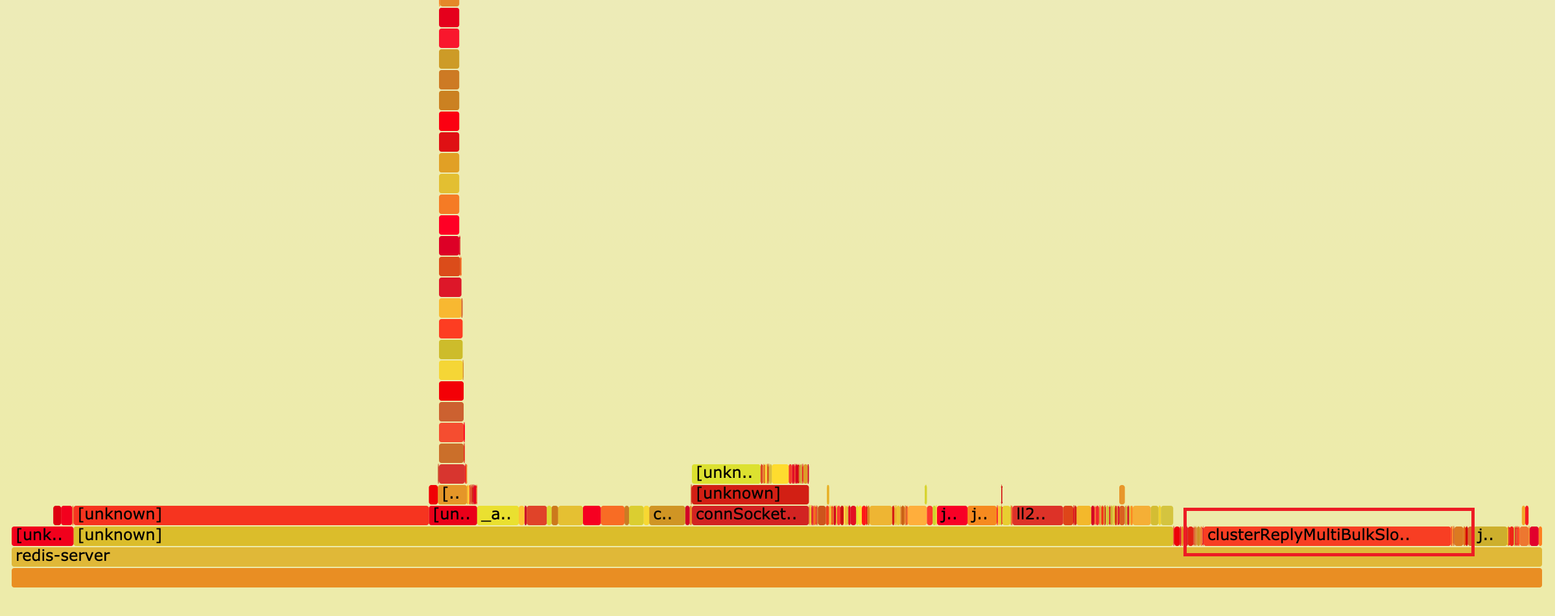

After optimization.

It can be clearly seen that the optimized share has dropped significantly. It is basically as expected.

Time consumption comparison

To test on, embed the time-consuming test code at

Input logs for comparison.

Original log output:

|

|

Optimized version log output:

|

|

The decrease in time consumption is obvious: from 2000+us to 200-us; the time consumption in a cluster of 100 master nodes is reduced to 8.2% of the original; the optimization results are basically as expected.

Summary

Here we can briefly describe the above actions of the article to draw such a conclusion: performance defects.

To briefly summarize the above troubleshooting and optimization process.

- A large Redis cluster was experiencing significant access latency on some nodes due to the CLUSTER command.

- Using the perf top command to troubleshoot Redis instances, I found that the clusterReplyMultiBulkSlots command was using abnormal CPU resources.

- Analysis of clusterReplyMultiBulkSlots revealed significant performance issues with this function.

- Optimization of clusterReplyMultiBulkSlots resulted in significant performance improvements.

From the above troubleshooting and optimization process, we can conclude that the current Redis has a performance defect in the CLUSTER SLOT instruction.

Because of Redis’ data slicing mechanism, the key access method in Redis cluster mode is to cache the topological information of the slot. The optimization point can only be started at CLUSTER SLOTS. The number of Redis cluster nodes is generally not so large, and the problem is not obvious.

In fact, the logic of Hiredis-vip also has some problems. As mentioned in 2.2.1, Hiredis-vip’s slot topology update method is to iterate through all nodes one by one to perform CLUSTER SLOTS, which can have a knock-on effect if the Redis cluster is large and the client size on the business side is large.

-

If the Redis cluster is large, CLUSTER SLOTS responds more slowly.

-

if a node does not respond or returns an error, the Hiredis-vip client will continue the request to the next node.

-

the same method of iterative traversal of the Redis cluster nodes in the Hiredis-vip client (since the information about the cluster is basically the same across clients), when the client size is large, there may be blocking at a Redis node, which causes the hiredis-vip client to traverse the next Redis node.

-

A large number of Hiredis-vip clients accessing a number of Redis nodes one by one, which can lead to Redis nodes being requested one by one under the “traversal” of a large number of Hiredis-vip clients if the Redis nodes cannot handle such requests.

In conjunction with point 3 above, imagine that there are 1w clients accessing the Redis cluster. Because there is a migration operation for a key with a high hit rate, all the clients need to update the slot topology. Since all clients have the same cluster node information cached, the order of traversing each node is the same. Redis nodes are accessed by most of the clients (e.g., 9k+ clients) in the same order of traversal, and the CLUSTER SLOTS command is executed. Executing the CLUSTER SLOTS command causes Redis nodes to block one by one.

-

The end result is that the CPU load on most Redis nodes spikes, and many Hiredis-vip clients continue to fail to update the slot topology.

The end result is that after a large-scale Redis cluster undergoes a slot migration operation, the business-side perception under large-scale Hiredis-vip client access is that the common command latency becomes high, while the Redis instance CPU resource usage rises high. This logic can be optimized to some extent.

The optimizations in subsection 3 above have now been committed and merged into Redis 6.2.2.