The Requests library is used to make standard HTTP requests in Python. It abstracts the complexity behind the request into a nice, simple API so you can focus on interacting with the service and using the data in your application.

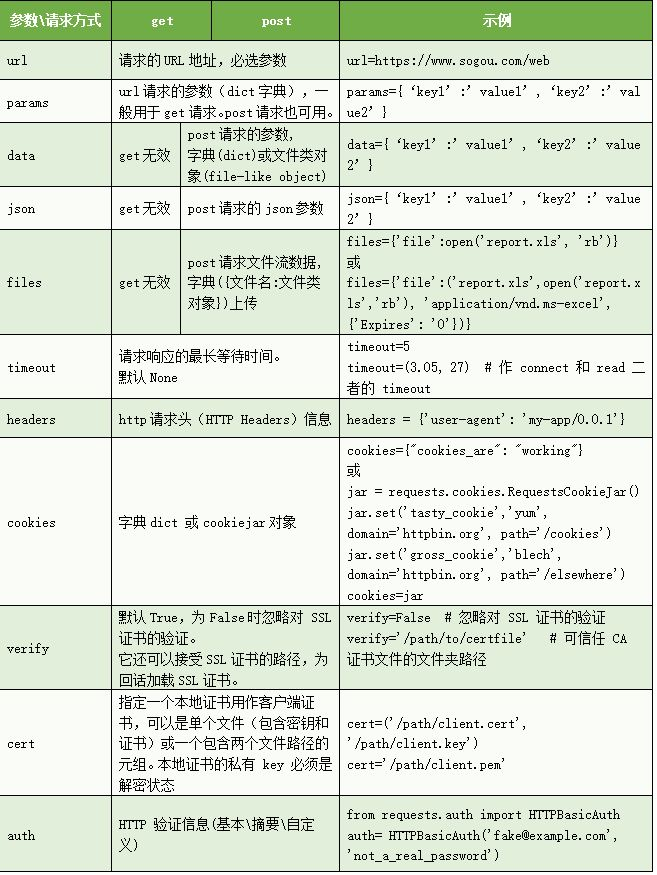

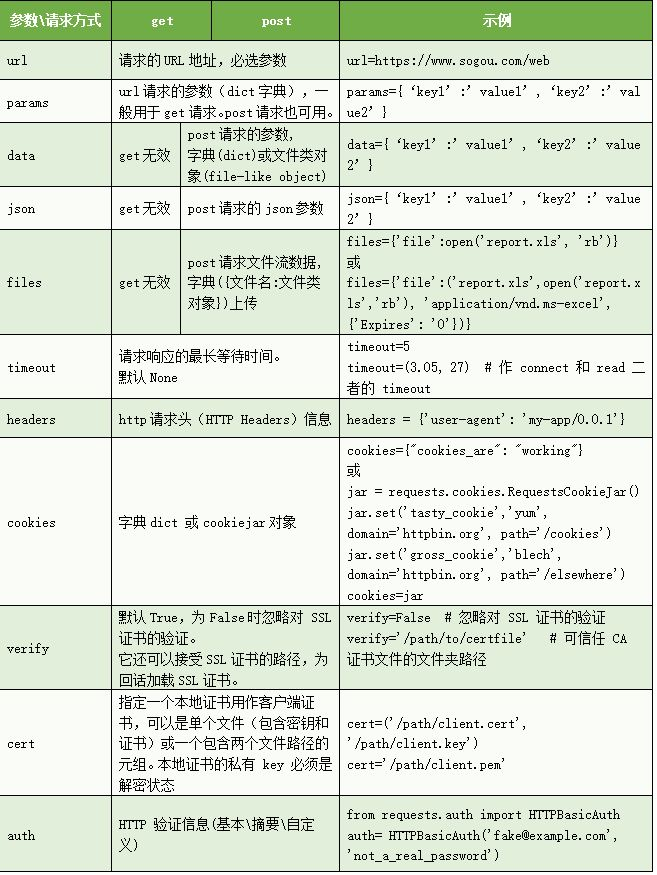

Requests POST/GET parameters

Commonly used parameters are listed in the following table.
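To make the effect of a few common parameters (`params`, `headers`, `json`) concrete, the sketch below builds a request with `requests.Request(...).prepare()` but never sends it, so the final URL, headers and body can be inspected offline. The URL and all values are placeholders chosen for this example.

```python
import requests

# Build (but do not send) a request so each parameter's effect is visible offline.
req = requests.Request(
    "POST",
    "https://example.com/api",                 # placeholder URL; nothing is sent
    params={"q": "python", "page": 2},         # appended to the query string
    headers={"User-Agent": "my-scraper/1.0"},  # extra request headers
    json={"name": "requests"},                 # JSON body, also sets Content-Type
)
prepared = req.prepare()

print(prepared.url)                      # https://example.com/api?q=python&page=2
print(prepared.headers["Content-Type"])  # application/json
print(prepared.body)                     # b'{"name": "requests"}'

# When actually sending, timeout is passed to the call itself, e.g.
# requests.post(url, params=..., headers=..., json=..., timeout=5)
```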

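Requests: common attributes and methods of the returned Response object

Common attributes and methods of the Response class:

| Attribute / method | Description |
| --- | --- |
| `Response.url` | The URL of the request [see Example 2.1] |
| `Response.status_code` | The response status code [see Example 2.1] |
| `Response.text` | The response body decoded as text [see Example 2.1] |
| `Response.json()` | The JSON content of the response, decoded [see Example 2.1] |
| `Response.ok` | Whether the request succeeded: `True` if `status_code < 400` |
| `Response.headers` | The response headers [see Example 2.1] |
| `Response.cookies` | The cookies set by the response [see Example 2.1] |
| `Response.elapsed` | The time between sending the request and receiving the response |
| `Response.links` | The parsed links from the response's `Link` header (`Response.headers.get('link')`) |
| `Response.raw` | The raw socket response; requires `stream=True` in the original request |
| `Response.content` | The response body as bytes, mostly used for non-text responses [see Example 2.2] |
| `Response.iter_content()` | Iterates over the response data in chunks [see Example 2.2] |
| `Response.history` | The redirect history of the request |
| `Response.reason` | The text reason for the status, e.g. "Not Found" or "OK" |
| `Response.close()` | Closes and releases the connection; the `raw` object cannot be accessed afterwards. Usually not called directly. |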
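A minimal sketch of most of these attributes, assuming only the standard library and Requests. It starts a throwaway local HTTP server so no external network is needed; the `JSONHandler` class and the `/demo` path are made up for this example.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class JSONHandler(BaseHTTPRequestHandler):
    """Hypothetical local endpoint that echoes the request path as JSON."""

    def do_GET(self):
        body = json.dumps({"path": self.path}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


server = HTTPServer(("127.0.0.1", 0), JSONHandler)  # port 0: pick a free port
threading.Thread(target=server.serve_forever, daemon=True).start()

r = requests.get(f"http://127.0.0.1:{server.server_port}/demo",
                 params={"q": "1"}, timeout=5)

print(r.url)                       # http://127.0.0.1:<port>/demo?q=1
print(r.status_code, r.reason)     # 200 OK
print(r.ok)                        # True, since status_code < 400
print(r.headers["Content-Type"])   # application/json
print(r.json())                    # {'path': '/demo?q=1'}
print(r.history)                   # [] (no redirects)
print(type(r.content), r.elapsed)  # bytes, and a datetime.timedelta

server.shutdown()
server.server_close()
```

In real code you would call `requests.get` against the target site directly; the local server only makes the example reproducible.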

Requests retry settings for failed requests

When scraping data with Requests we often run into connection timeouts, so to make the program more robust we need retry logic for failed requests.

```python
import requests
from requests.adapters import HTTPAdapter

s = requests.Session()
s.mount('http://', HTTPAdapter(max_retries=3))
s.mount('https://', HTTPAdapter(max_retries=3))

s.get('http://example.com', timeout=5)
```

Reading the source code gives an overview of how this is implemented:

```python
class HTTPAdapter(BaseAdapter):
    """The built-in HTTP Adapter for urllib3.

    :param max_retries: The maximum number of retries each connection
        should attempt. Note, this applies only to failed DNS lookups, socket
        connections and connection timeouts, never to requests where data has
        made it to the server. By default, Requests does not retry failed
        connections. If you need granular control over the conditions under
        which we retry a request, import urllib3's ``Retry`` class and pass
        that instead.

    Usage::

      >>> import requests
      >>> s = requests.Session()
      >>> a = requests.adapters.HTTPAdapter(max_retries=3)
      >>> s.mount('http://', a)
    """
    __attrs__ = ['max_retries', 'config', '_pool_connections', '_pool_maxsize',
                 '_pool_block']

    def __init__(self, pool_connections=DEFAULT_POOLSIZE,
                 pool_maxsize=DEFAULT_POOLSIZE, max_retries=DEFAULT_RETRIES,
                 pool_block=DEFAULT_POOLBLOCK):
        if max_retries == DEFAULT_RETRIES:
            self.max_retries = Retry(0, read=False)
        else:
            self.max_retries = Retry.from_int(max_retries)
```
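The constructor logic above can be checked directly. This sketch, assuming a current Requests/urllib3 install, inspects the `max_retries` object that `HTTPAdapter` builds for the default value, for a plain integer, and for a `Retry` instance:

```python
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

# Default: max_retries == DEFAULT_RETRIES (0), so Retry(0, read=False) is used.
default = HTTPAdapter()
print(default.max_retries.total, default.max_retries.read)  # 0 False

# An int goes through Retry.from_int().
three = HTTPAdapter(max_retries=3)
print(three.max_retries.total)  # 3

# A Retry object is passed through unchanged.
custom_retry = Retry(total=5, connect=2)
custom = HTTPAdapter(max_retries=custom_retry)
print(custom.max_retries is custom_retry)  # True
```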

```python
class Retry(object):
    """ Retry configuration.

    Each retry attempt will create a new Retry object with updated values, so
    they can be safely reused.

    Retries can be defined as a default for a pool::

        retries = Retry(connect=5, read=2, redirect=5)
        http = PoolManager(retries=retries)
        response = http.request('GET', 'http://example.com/')

    Or per-request (which overrides the default for the pool)::

        response = http.request('GET', 'http://example.com/', retries=Retry(10))

    Retries can be disabled by passing ``False``::

        response = http.request('GET', 'http://example.com/', retries=False)

    Errors will be wrapped in :class:`~urllib3.exceptions.MaxRetryError` unless
    retries are disabled, in which case the causing exception will be raised.

    :param int total:
        Total number of retries to allow. Takes precedence over other counts.

        Set to ``None`` to remove this constraint and fall back on other
        counts. It's a good idea to set this to some sensibly-high value to
        account for unexpected edge cases and avoid infinite retry loops.

        Set to ``0`` to fail on the first retry.

        Set to ``False`` to disable and imply ``raise_on_redirect=False``.

    ....

    :param iterable method_whitelist:
        Set of uppercased HTTP method verbs that we should retry on.

        By default, we only retry on methods which are considered to be
        idempotent (multiple requests with the same parameters end with the
        same state). See :attr:`Retry.DEFAULT_METHOD_WHITELIST`.

    :param iterable status_forcelist:
        A set of HTTP status codes that we should force a retry on.

        By default, this is disabled with ``None``.

    :param float backoff_factor:
        A backoff factor to apply between attempts. urllib3 will sleep for::

            {backoff factor} * (2 ^ ({number of total retries} - 1))

        seconds. If the backoff_factor is 0.1, then :func:`.sleep` will sleep
        for [0.1s, 0.2s, 0.4s, ...] between retries. It will never be longer
        than :attr:`Retry.BACKOFF_MAX`.

        By default, backoff is disabled (set to 0).
    """

    DEFAULT_METHOD_WHITELIST = frozenset([
        'HEAD', 'GET', 'PUT', 'DELETE', 'OPTIONS', 'TRACE'])

    #: Maximum backoff time.
    BACKOFF_MAX = 120

    def __init__(self, total=10, connect=None, read=None, redirect=None,
                 method_whitelist=DEFAULT_METHOD_WHITELIST, status_forcelist=None,
                 backoff_factor=0, raise_on_redirect=True, raise_on_status=True,
                 _observed_errors=0):

        self.total = total
        self.connect = connect
        self.read = read

        if redirect is False or total is False:
            redirect = 0
            raise_on_redirect = False

        self.redirect = redirect
        self.status_forcelist = status_forcelist or set()
        self.method_whitelist = method_whitelist
        self.backoff_factor = backoff_factor
        self.raise_on_redirect = raise_on_redirect
        self.raise_on_status = raise_on_status
        self._observed_errors = _observed_errors  # TODO: use .history instead?

    def get_backoff_time(self):
        """ Formula for computing the current backoff

        :rtype: float
        """
        if self._observed_errors <= 1:
            return 0
        # Backoff algorithm: _observed_errors is the number of the current retry;
        # it is incremented by 1 after every failure.
        # With backoff_factor = 0.1 the waits are 0 for the first retry, then
        # 0.2, 0.4, 0.8, ..., capped at BACKOFF_MAX (120).
        backoff_value = self.backoff_factor * (2 ** (self._observed_errors - 1))
        return min(self.BACKOFF_MAX, backoff_value)

    def sleep(self):
        """ Sleep between retry attempts using an exponential backoff.

        By default, the backoff factor is 0 and this method will return
        immediately.
        """
        backoff = self.get_backoff_time()
        if backoff <= 0:
            return
        time.sleep(backoff)

    def is_forced_retry(self, method, status_code):
        """ Is this method/status code retryable? (Based on method/codes whitelists)
        """
        if self.method_whitelist and method.upper() not in self.method_whitelist:
            return False

        return self.status_forcelist and status_code in self.status_forcelist


# For backwards compatibility (equivalent to pre-v1.9):
Retry.DEFAULT = Retry(3)
```

The retry design is fairly simple. Inside HTTPConnectionPool, urllib3 looks at the exception raised and the request method to decide which stage failed (connect or read) and decrements the corresponding counter. It then checks whether the retries have been used up and, if so, raises MaxRetryError. The interval between retries follows the simple backoff algorithm in get_backoff_time above.
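The source quoted above comes from an older urllib3. Current versions derive the error count from `Retry.history` instead of `_observed_errors` and renamed `method_whitelist` to `allowed_methods`, but the backoff formula is the same. This sketch configures a `Retry` with `backoff_factor` and `status_forcelist` for a session, then calls `Retry.increment()` by hand to simulate consecutive failures offline and print the resulting waits. The status codes and counts are example values.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

# Up to 5 retries, also retrying on these server-error status codes,
# with exponential backoff between attempts.
retry = Retry(total=5, backoff_factor=0.1, status_forcelist=[500, 502, 503, 504])

session = requests.Session()
adapter = HTTPAdapter(max_retries=retry)
session.mount("http://", adapter)
session.mount("https://", adapter)

# Simulate four consecutive failures: increment() returns a new Retry with one
# more history entry and one less in `total` (the original is not modified).
state = retry
delays = []
for _ in range(4):
    state = state.increment(method="GET", url="/")
    delays.append(state.get_backoff_time())

print(delays)       # [0, 0.2, 0.4, 0.8]
print(state.total)  # 1
```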

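There are a few points to note when using retries:

- With the simple form (e.g. `requests.get`), the default `HTTPAdapter` sets `max_retries` to `Retry(0, read=False)`, so failed requests are not retried at all. The 3-retry default (`Retry.DEFAULT = Retry(3)`) applies when urllib3 is used directly.
- According to the `HTTPAdapter` docstring, retries only apply to DNS resolution errors, connection errors, connection timeouts and similar exceptions; requests are not retried on read timeouts, write timeouts, HTTP protocol errors, etc.
- With retries enabled, the error you get wraps urllib3's `MaxRetryError` (Requests re-raises it as, for example, `requests.exceptions.ConnectionError`) instead of the original exception.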
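To see the last point in practice, this sketch sends a request that is bound to fail and inspects the exception. It assumes that nothing is listening on a local port that was just bound and released, so the connection is refused; the retry count is an example value.

```python
import socket

import requests
from requests.adapters import HTTPAdapter
from urllib3.exceptions import MaxRetryError
from urllib3.util.retry import Retry

# Grab a free local port, then release it so (almost certainly) nothing listens there.
with socket.socket() as sock:
    sock.bind(("127.0.0.1", 0))
    port = sock.getsockname()[1]

session = requests.Session()
session.mount("http://", HTTPAdapter(max_retries=Retry(total=2, backoff_factor=0)))

wrapped = None
try:
    session.get(f"http://127.0.0.1:{port}/", timeout=2)
except requests.exceptions.ConnectionError as exc:
    wrapped = exc.args[0]  # Requests keeps urllib3's MaxRetryError as the first arg

print(type(wrapped).__name__)         # MaxRetryError
print(type(wrapped.reason).__name__)  # the real cause, e.g. NewConnectionError
```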


Requests: garbled Chinese text (encoding problems)

When using Requests you sometimes get garbled text. This is mainly caused by the encoding being detected incorrectly; the most common case is that the encoding is detected as ISO-8859-1.

What is ISO-8859-1?

ISO 8859-1, officially ISO/IEC 8859-1:1998, also known as Latin-1 or “Western European”, is the first 8-bit character set in the ISO/IEC 8859 series published by the International Organization for Standardization. It is based on ASCII and adds 96 letters and symbols in the otherwise unused 0xA0-0xFF range, covering Latin-alphabet languages that need additional characters.

Why is the encoding detected as ISO-8859-1?

Looking at the pages that came out garbled, we found that the problem occurred even when the page declared its encoding in the HTML, like this:

```html
<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />
```

Reading the source code shows that Requests' default encoding detection is very simple: if no encoding information can be found in the server's response headers and the content type is text, it falls back to ISO-8859-1. The logic is in the Lib\site-packages\requests\utils.py file:

```python
def get_encoding_from_headers(headers):
    """Returns encodings from given HTTP Header Dict.

    :param headers: dictionary to extract encoding from.
    """

    content_type = headers.get('content-type')

    if not content_type:
        return None

    content_type, params = cgi.parse_header(content_type)

    if 'charset' in params:
        return params['charset'].strip("'\"")

    if 'text' in content_type:
        return 'ISO-8859-1'
```
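The three branches of this function can be exercised directly. `Response.headers` is a case-insensitive dict, so the sketch wraps its sample headers (made up for the example) in `CaseInsensitiveDict` to match:

```python
from requests.structures import CaseInsensitiveDict
from requests.utils import get_encoding_from_headers

cases = {
    "charset in header": {"Content-Type": "text/html; charset=utf-8"},
    "text without charset": {"Content-Type": "text/html"},
    "no Content-Type": {},
}

results = {
    label: get_encoding_from_headers(CaseInsensitiveDict(headers))
    for label, headers in cases.items()
}
for label, encoding in results.items():
    print(f"{label}: {encoding}")
# charset in header: utf-8
# text without charset: ISO-8859-1
# no Content-Type: None
```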

As the code shows, Requests first looks for a charset in the HTTP “Content-Type” header; if there is none and the content type is text, it defaults to “ISO-8859-1”. The code never looks at the encoding declared inside the HTML page. Many people have complained about this in the project's official issues, but the author replied that the library is written strictly to the HTTP standard: RFC 2616 states that if the Content-Type of an HTTP response does not specify a charset, text content defaults to ‘ISO-8859-1’.

When you receive a response, Requests makes a guess at the encoding to use for decoding the response when you access Response.text. Requests will first check for an encoding in the HTTP header, and if none is present, will use charade to attempt to guess the encoding.

The only time Requests will not do this is if no explicit charset is present in the HTTP headers and the Content-Type header contains text. In this situation, RFC 2616 specifies that the default charset must be ISO-8859-1. Requests follows the specification in this case. If you require a different encoding, you can manually set the Response.encoding property, or use the raw Response.content.

How do we fix the garbled text once the cause is known?

If you know the actual encoding, the fix is simple: set it explicitly.

```python
import requests

url = 'https://example.com'  # placeholder: the page to fetch

resp = requests.get(url)
resp.encoding = 'utf-8'
print(resp.text)
```

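Alternatively, re-encode the text with the wrongly detected encoding and decode it again as UTF-8:

```python
import requests

url = 'https://example.com'  # placeholder: the page to fetch
resp = requests.get(url)
print(resp.text.encode(resp.encoding).decode('utf-8'))
```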
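The re-encoding trick works because ISO-8859-1 maps each of the 256 byte values to exactly one character. Decoding UTF-8 bytes as ISO-8859-1 therefore never fails (it silently produces garbled text), and encoding the result back to ISO-8859-1 recovers the original bytes. A small offline illustration, using a sample string chosen for the example:

```python
text = "中文"
raw = text.encode("utf-8")          # the bytes the server actually sent
garbled = raw.decode("iso-8859-1")  # what resp.text gives when the encoding is ISO-8859-1
print(len(raw), len(garbled))       # 6 6: one character per byte

fixed = garbled.encode("iso-8859-1").decode("utf-8")
print(fixed == text)                # True
```

This only round-trips cleanly because the wrong encoding was ISO-8859-1; a lossy decode (for example with `errors="replace"`) could not be undone this way.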

How do we find the page encoding when we don't know it?

Requests actually provides a function that extracts the encoding from the HTML content; it just isn't used by default. See Lib\site-packages\requests\utils.py:

```python
def get_encodings_from_content(content):
    """Returns encodings from given content string.

    :param content: bytestring to extract encodings from.
    """
    warnings.warn((
        'In requests 3.0, get_encodings_from_content will be removed. For '
        'more information, please see the discussion on issue #2266. (This'
        ' warning should only appear once.)'),
        DeprecationWarning)

    charset_re = re.compile(r'<meta.*?charset=["\']*(.+?)["\'>]', flags=re.I)
    pragma_re = re.compile(r'<meta.*?content=["\']*;?charset=(.+?)["\'>]', flags=re.I)
    xml_re = re.compile(r'^<\?xml.*?encoding=["\']*(.+?)["\'>]')

    return (charset_re.findall(content) +
            pragma_re.findall(content) +
            xml_re.findall(content))
```
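A quick check of the three regular expressions on sample markup: the `<meta>` tag from the example above, an HTML5-style `<meta charset>` and an XML declaration (the last two are made up for this sketch). The function emits a `DeprecationWarning` (its message says it is slated for removal in Requests 3.0), which the sketch silences.

```python
import warnings

from requests.utils import get_encodings_from_content

samples = [
    '<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />',
    '<html><head><meta charset="gbk"></head></html>',
    '<?xml version="1.0" encoding="gb2312"?><root/>',
]

with warnings.catch_warnings():
    warnings.simplefilter("ignore", DeprecationWarning)
    found = [get_encodings_from_content(s) for s in samples]

print(found)  # [['utf-8'], ['gbk'], ['gb2312']]
```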

In addition, Requests provides encoding detection using chardet; see models.py:

```python
@property
def apparent_encoding(self):
    """The apparent encoding, provided by the chardet library."""
    return chardet.detect(self.content)['encoding']
```

These three approaches differ in how reliably they identify the encoding and in the resources they need:

```python
import cProfile

import requests

r = requests.get("https://www.biaodianfu.com")


def charset_type():
    # Encoding from the HTTP headers only
    char_type = requests.utils.get_encoding_from_headers(r.headers)
    return char_type


def charset_content():
    # Encoding declared in the HTML (<meta> tags / XML declaration)
    charset_content = requests.utils.get_encodings_from_content(r.text)
    return charset_content[0]


def charset_det():
    # Encoding guessed from the raw bytes (chardet)
    charset_det = r.apparent_encoding
    return charset_det


if __name__ == '__main__':
    cProfile.run("charset_type()")
    cProfile.run("charset_content()")
    cProfile.run("charset_det()")
```

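Results:

```
charset_type() 25 function calls in 0.000 seconds
charset_content() 2137 function calls (2095 primitive calls) in 0.007 seconds
charset_det() 292729 function calls (292710 primitive calls) in 0.551 seconds
```

Reading the header is essentially free, scanning the HTML with regular expressions is cheap, and chardet detection is by far the most expensive.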

The final solution is to create a requests_patch.py file and import it before the rest of the code runs; this solves the problem:

```python
# The following hack only works on Python 2
import requests


def monkey_patch():
    prop = requests.models.Response.content

    def content(self):
        _content = prop.fget(self)
        if self.encoding == 'ISO-8859-1':
            encodings = requests.utils.get_encodings_from_content(_content)
            if encodings:
                self.encoding = encodings[0]
            else:
                self.encoding = self.apparent_encoding
            _content = _content.decode(self.encoding, 'replace').encode('utf8', 'replace')
            self._content = _content
        return _content

    requests.models.Response.content = property(content)


monkey_patch()
```

Under Python 3 the code above raises TypeError: cannot use a string pattern on a bytes-like object, mainly because .content returns bytes rather than a string in Python 3. To get the correct encoding under Python 3, use the following code:

```python
# This hack targets Python 3
import requests


def charsets(res):
    _charset = requests.utils.get_encoding_from_headers(res.headers)
    if _charset == 'ISO-8859-1':
        __charset = requests.utils.get_encodings_from_content(res.text)
        if __charset:
            _charset = __charset[0]
        else:
            _charset = res.apparent_encoding
    return _charset
```
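One way to apply `charsets()` is to assign its result to `res.encoding` before reading `res.text`. The sketch below checks this end to end against a hypothetical local server (`GBKHandler`, written only for this example) that sends a GBK page with `Content-Type: text/html` and no charset, which is exactly the case that falls back to ISO-8859-1. The function is repeated so the block runs on its own.

```python
import threading
import warnings
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests

# get_encodings_from_content emits a DeprecationWarning; silence it for the demo.
warnings.simplefilter("ignore", DeprecationWarning)

PAGE = '<html><head><meta charset="gbk"></head><body>中文测试</body></html>'


class GBKHandler(BaseHTTPRequestHandler):
    """Hypothetical server: GBK body, but no charset in Content-Type."""

    def do_GET(self):
        body = PAGE.encode("gbk")
        self.send_response(200)
        self.send_header("Content-Type", "text/html")  # no charset on purpose
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass


def charsets(res):
    # Same logic as the Python 3 function above.
    _charset = requests.utils.get_encoding_from_headers(res.headers)
    if _charset == 'ISO-8859-1':
        __charset = requests.utils.get_encodings_from_content(res.text)
        if __charset:
            _charset = __charset[0]
        else:
            _charset = res.apparent_encoding
    return _charset


server = HTTPServer(("127.0.0.1", 0), GBKHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

res = requests.get(f"http://127.0.0.1:{server.server_port}/", timeout=5)
header_guess = res.encoding   # 'ISO-8859-1' (header fallback)
before = res.text             # garbled
res.encoding = charsets(res)  # 'gbk', taken from the <meta> tag
print(header_guess, "->", res.encoding)
print("中文测试" in res.text)  # True

server.shutdown()
server.server_close()
```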

Downloading images or files using Requests

When scraping data with Python you sometimes need to save files, images and so on. Python offers several ways to do this.

Downloading images or files using requests

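```python
import os

import requests

url = 'https://www.baidu.com/img/superlogo_c4d7df0a003d3db9b65e9ef0fe6da1ec.png'
r = requests.get(url)

os.makedirs('./image', exist_ok=True)  # make sure the target directory exists
with open('./image/logo.png', 'wb') as f:
    f.write(r.content)

# Retrieve HTTP meta-data
print(r.status_code)
print(r.headers['content-type'])
print(r.encoding)
```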

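```python
from io import BytesIO

import requests
from PIL import Image

url = 'https://example.com/picture.jpg'  # placeholder: address of the image

# Request the image
r = requests.get(url)
i = Image.open(BytesIO(r.content))
# i.show()  # view the image

# Save the image, writing 8 KB chunks instead of the default 1 byte per chunk
with open('img.jpg', 'wb') as fd:
    for chunk in r.iter_content(chunk_size=8192):
        fd.write(chunk)
```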
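For large files, `iter_content()` is normally combined with `stream=True` so the body is written to disk chunk by chunk instead of being loaded into memory first. The `download()` helper below is a hypothetical sketch of that pattern; the demo serves a temporary directory with `SimpleHTTPRequestHandler` so it runs without external network access.

```python
import functools
import os
import tempfile
import threading
from http.server import HTTPServer, SimpleHTTPRequestHandler

import requests


def download(url, path, chunk_size=8192, timeout=10):
    """Stream `url` to `path` without holding the whole body in memory."""
    with requests.get(url, stream=True, timeout=timeout) as r:
        r.raise_for_status()
        with open(path, "wb") as f:
            for chunk in r.iter_content(chunk_size=chunk_size):
                f.write(chunk)
    return path


class QuietHandler(SimpleHTTPRequestHandler):
    def log_message(self, *args):
        pass


# Demo: serve a temporary directory containing a random "logo.png".
src_dir = tempfile.mkdtemp()
payload = os.urandom(100_000)
with open(os.path.join(src_dir, "logo.png"), "wb") as f:
    f.write(payload)

handler = functools.partial(QuietHandler, directory=src_dir)
server = HTTPServer(("127.0.0.1", 0), handler)
threading.Thread(target=server.serve_forever, daemon=True).start()

dest = os.path.join(tempfile.mkdtemp(), "logo.png")
download(f"http://127.0.0.1:{server.server_port}/logo.png", dest)
server.shutdown()
server.server_close()

with open(dest, "rb") as f:
    downloaded = f.read()
print(downloaded == payload)  # True
```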

Use the urlretrieve method to download an image or file

```python
# Legacy interface (outdated); not recommended
import urllib.request

url = 'https://www.baidu.com/img/superlogo_c4d7df0a003d3db9b65e9ef0fe6da1ec.png'
urllib.request.urlretrieve(url, './image/logo.png')
```

Download images or files using wget

```python
# wget is a command-line download tool on Linux; in Python it can be used
# after installing the corresponding package (pip install wget).
import wget

url = 'https://www.baidu.com/img/superlogo_c4d7df0a003d3db9b65e9ef0fe6da1ec.png'
wget.download(url, './image/logo.png')
```