I believe that many software engineers today use Linux or macOS, where, unlike on Windows, the concept of disk defragmentation rarely comes up. Speaking from personal experience, I have not defragmented a disk on macOS in the past seven or eight years, and today's disk tools offer no such operation; the closest thing is a diskutil command that turns defragmentation on or off for a disk.

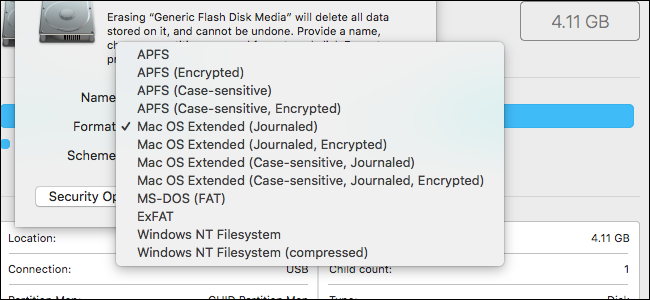

Windows systems may need their disks defragmented every so often, and there are two reasons behind this. First, early versions of Windows used a very simple file system called FAT, whose design allows the pieces of a single file to end up scattered across different locations on the disk. Second, solid-state drives were not yet common in those days, and mechanical hard drives have poor random read and write performance.

Linux and macOS do not need defragmentation for exactly the opposite reasons:

- Linux and macOS use file systems that either reduce the probability of fragmentation or implement automatic defragmentation features.

- Solid-state drives have different characteristics from mechanical drives: defragmentation does little to improve their read and write performance, and it can also shorten the life of the hardware.

File systems

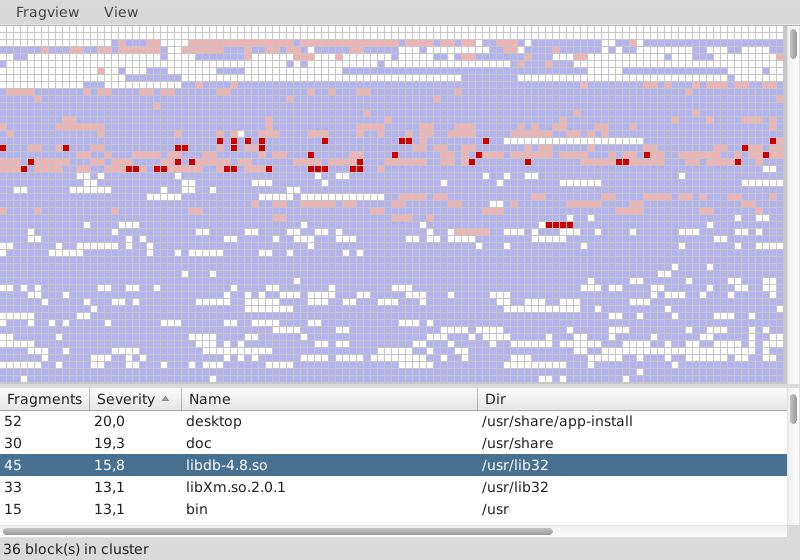

Linux generally uses the Ext2, Ext3, and Ext4 file systems, and most Linux distributions today choose Ext4. Unlike Windows, which stores files back to back, Linux spreads files across different parts of the disk and leaves some free space between them, so that a file can grow in place without becoming fragmented when it is modified or updated.
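To make the effect of leaving space between files concrete, here is a minimal sketch. It is a toy model, not how Ext4 actually allocates blocks; the function names `place_files` and `append_blocks` and the fixed `gap` parameter are assumptions made for illustration.

```python
def place_files(sizes, gap):
    """Lay files out in order, leaving `gap` free blocks after each one.

    Returns (layout, next_free). Each layout entry holds the file's extents
    as [start, length] pairs plus the free slack reserved right after it.
    """
    layout, cursor = {}, 0
    for name, size in sizes.items():
        layout[name] = {"extents": [[cursor, size]], "slack": gap}
        cursor += size + gap
    return layout, cursor


def append_blocks(layout, next_free, name, extra):
    """Grow a file by `extra` blocks and return the new end-of-disk cursor."""
    entry = layout[name]
    # First use the slack reserved directly behind the file, if any.
    in_place = min(extra, entry["slack"])
    entry["extents"][-1][1] += in_place
    entry["slack"] -= in_place
    remaining = extra - in_place
    if remaining:
        last = entry["extents"][-1]
        if last[0] + last[1] == next_free:
            # The file already ends at the free area: extend it in place.
            last[1] += remaining
        else:
            # Otherwise the new data lands elsewhere: one more fragment.
            entry["extents"].append([next_free, remaining])
        next_free += remaining
    return next_free


sizes = {"a": 4, "b": 4}

packed, end = place_files(sizes, gap=0)   # files stored back to back
append_blocks(packed, end, "a", 2)

spaced, end = place_files(sizes, gap=2)   # free space left between files
append_blocks(spaced, end, "a", 2)

print(len(packed["a"]["extents"]), len(spaced["a"]["extents"]))
```

In the packed layout, file `a` has to continue behind `b` and ends up in two pieces, while in the spaced layout it grows into its own slack and stays contiguous.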

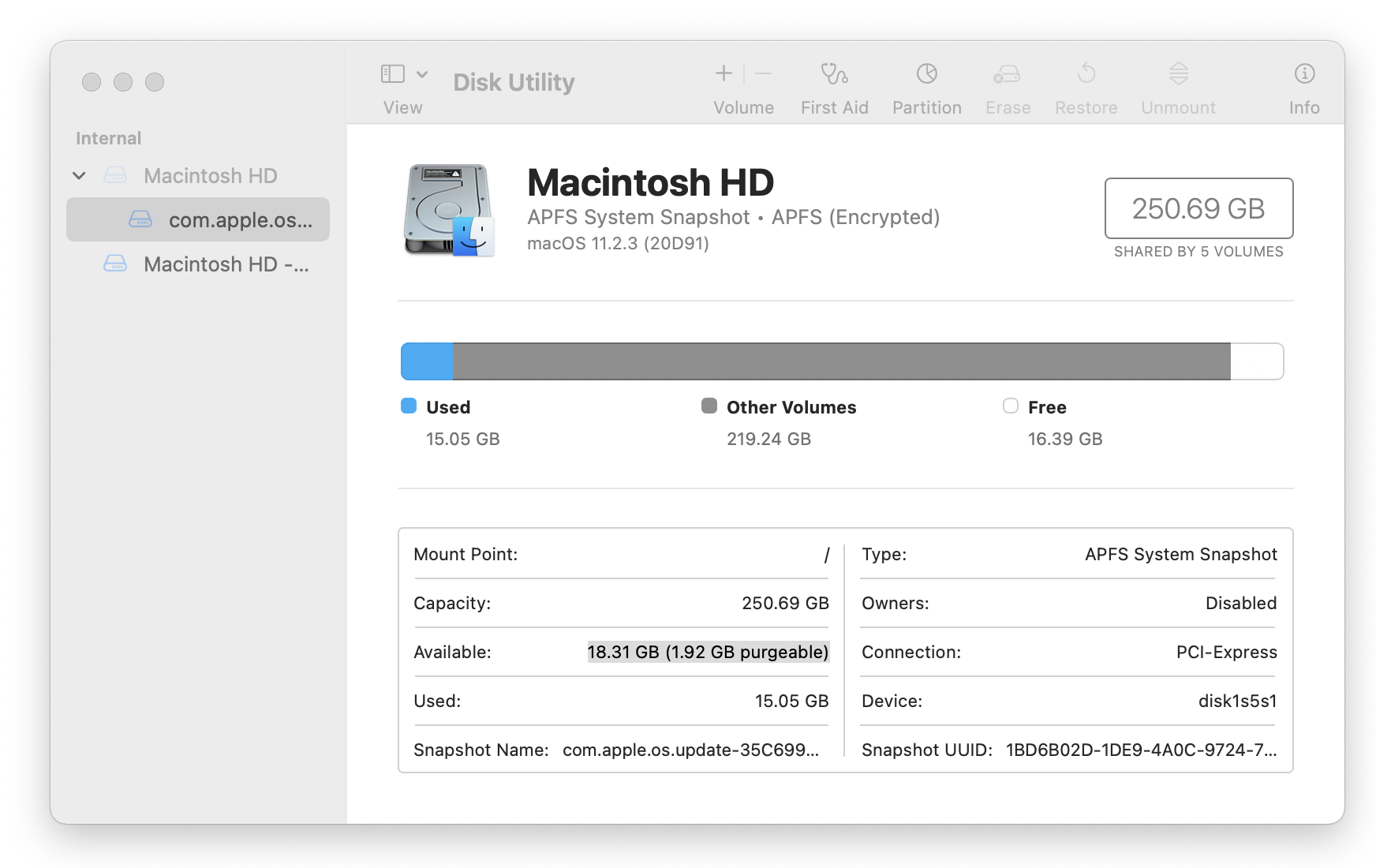

Most Macs today use APFS, Apple's file system optimized for devices such as solid-state drives. The earlier HFS and HFS+ both used an extent-based design, in which each extent consists of a starting block number and a contiguous segment of storage space; when allocating, the file system looks for runs of contiguous blocks that together provide the space needed.

Both Linux and macOS use block-based file systems with relatively sensible space allocation, so they do not suffer from the disk fragmentation seen on Windows systems.
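The extent idea can be sketched in a few lines. This is an illustrative model only, not the on-disk format of HFS+ or any other file system; the `Extent` class and `physical_block` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Extent:
    start: int   # first physical block of the run
    length: int  # number of contiguous blocks in the run


def physical_block(extents, logical):
    """Map a block index inside a file to its physical block on disk."""
    if logical < 0:
        raise IndexError("logical block must be non-negative")
    for ext in extents:
        if logical < ext.length:
            return ext.start + logical
        logical -= ext.length
    raise IndexError("logical block beyond end of file")


# A six-block file stored as two extents, i.e. in two fragments.
file_extents = [Extent(start=100, length=4), Extent(start=300, length=2)]
print([physical_block(file_extents, i) for i in range(6)])
```

A file described by a single extent is fully contiguous, so the number of extents is a direct measure of how fragmented the file is.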

In addition to file systems designed to avoid fragmentation, both Linux and macOS use a delayed allocation strategy: they hold writes in buffers and postpone writing to disk for as long as possible. This not only reduces the number of flushes to disk, but also increases the probability that a file's data is written to adjacent blocks. The mechanism is not without side effects, however: more data may be lost if the system loses power or crashes.
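The following toy simulation shows why buffering helps. It assumes a deliberately naive disk that hands out blocks in order (`Disk.allocate`), and the names `write_immediately` and `write_delayed` are made up for this sketch; real kernels are far more sophisticated.

```python
class Disk:
    """Naive allocator: hands out blocks strictly in increasing order."""

    def __init__(self):
        self.next_free = 0

    def allocate(self, blocks):
        start = self.next_free
        self.next_free += blocks
        return (start, blocks)


def add_extent(extents, new):
    """Append (start, length), merging with the previous extent if adjacent."""
    if extents and extents[-1][0] + extents[-1][1] == new[0]:
        prev = extents.pop()
        new = (prev[0], prev[1] + new[1])
    extents.append(new)


def write_immediately(disk, writes):
    """Allocate disk space for every write as soon as it arrives."""
    files = {}
    for name, blocks in writes:
        add_extent(files.setdefault(name, []), disk.allocate(blocks))
    return files


def write_delayed(disk, writes):
    """Buffer writes in memory, then allocate once per file at flush time."""
    pending = {}
    for name, blocks in writes:
        pending[name] = pending.get(name, 0) + blocks
    # Anything still in `pending` would be lost if the system crashed here.
    return {name: [disk.allocate(total)] for name, total in pending.items()}


# Two files written in alternating one-block chunks.
writes = [("a", 1), ("b", 1), ("a", 1), ("b", 1)]
print(write_immediately(Disk(), writes))
print(write_delayed(Disk(), writes))
```

With immediate allocation the two files interleave and each ends up in two fragments; with delayed allocation each file receives one contiguous run.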

If fragmentation does occur on the disk, the Linux and macOS file systems try to relocate the fragmented files themselves, with no need for a separate defragmentation tool, which is a much better user experience than manually triggering a time-consuming defragmentation. HFS+ on macOS also supports on-the-fly defragmentation, which is triggered when all of the following conditions are met:

- the file is smaller than 20 MB;

- the file is stored in more than 8 separate pieces (extents);

- the file has not been updated in the last minute;

- the system has been up for at least three minutes.
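The conditions above can be read as a simple predicate. The sketch below is not the actual HFS+ implementation; the function name, the parameter names, the interpretation of 20 MB as 20 × 1024 × 1024 bytes, and the exact boundary handling are all assumptions.

```python
MAX_SIZE_BYTES = 20 * 1024 * 1024   # "less than 20 MB" (binary MB assumed)
MIN_FRAGMENTS = 8                   # must be stored in more than 8 pieces
MIN_IDLE_SECONDS = 60               # not updated in the last minute
MIN_UPTIME_SECONDS = 3 * 60         # system booted for three minutes


def eligible_for_defrag(size_bytes, fragments, seconds_since_update, uptime_seconds):
    """Return True only when every listed condition holds at once."""
    return (
        size_bytes < MAX_SIZE_BYTES
        and fragments > MIN_FRAGMENTS
        and seconds_since_update >= MIN_IDLE_SECONDS
        and uptime_seconds >= MIN_UPTIME_SECONDS
    )


# A 5 MB file in 12 pieces, untouched for 10 minutes, on a machine up for an hour.
print(eligible_for_defrag(5 * 1024 * 1024, 12, 600, 3600))
# The same file right after being modified is left alone for now.
print(eligible_for_defrag(5 * 1024 * 1024, 12, 5, 3600))
```

Restricting the work to small, idle, noticeably fragmented files keeps the cost of each relocation low, which is presumably why it can run in the background without the user noticing.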

In most cases, the share of fragmented data on these operating systems is very low, and fragmentation only sets in when the disk is running low on space. At that point what we actually need is a bigger disk or a newer computer, not a defragmentation run.

Solid State Drives

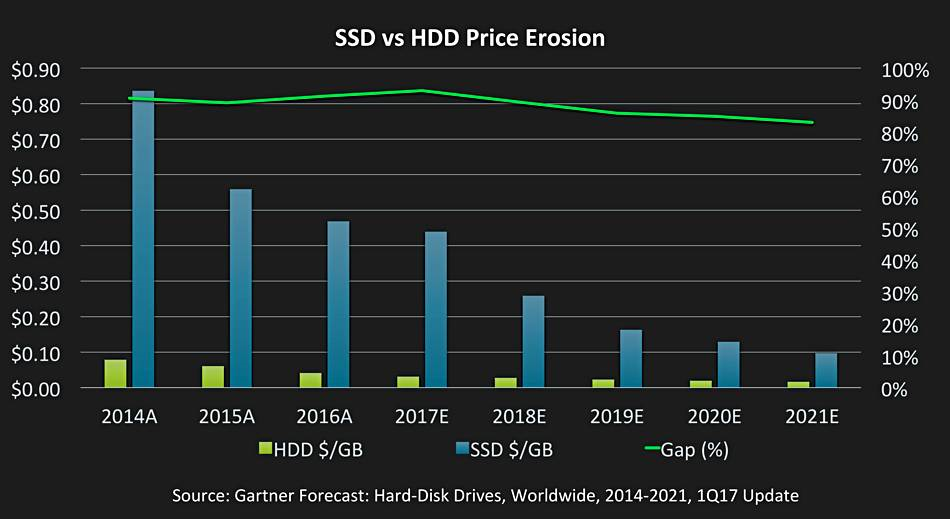

Solid-state drives have actually existed as a storage medium for three decades, but for a long time they were not common in data centers or personal computers because they were very expensive. Even today, mechanical disks hold a significant price advantage over solid-state drives.

New storage media bring new characteristics and performance. As we explained in a previous article, the mechanical structure of a hard disk drive means that its random I/O and sequential I/O performance can differ by a factor of hundreds. Defragmentation gathers data scattered across the disk into one place, and the resulting reduction in random I/O naturally improves the performance of reading and writing files.

SSDs also show a gap between sequential and random I/O, but it is on the order of ten to a few dozen times, and the random I/O latency of an SSD is tens to thousands of times lower than that of a mechanical disk. As a result, the benefit of defragmenting an SSD is far more limited.
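A back-of-the-envelope model makes the contrast visible. The latency and throughput figures below are hypothetical round numbers chosen for illustration, not measurements, and the model (one fixed positioning cost per fragment plus sequential transfer time) is a simplifying assumption.

```python
def read_time_ms(size_mb, fragments, per_fragment_latency_ms, throughput_mb_s):
    """Estimated time to read a file: one positioning cost per fragment
    plus the time to stream the data sequentially."""
    transfer_ms = size_mb / throughput_mb_s * 1000
    return fragments * per_fragment_latency_ms + transfer_ms


def fragmentation_slowdown(device, size_mb=100, fragments=1000):
    """How many times slower a fragmented read is than a contiguous one."""
    fragmented = read_time_ms(size_mb, fragments, **device)
    contiguous = read_time_ms(size_mb, 1, **device)
    return fragmented / contiguous


# Hypothetical device profiles (assumed values, for illustration only).
HDD = {"per_fragment_latency_ms": 10.0, "throughput_mb_s": 150.0}
SSD = {"per_fragment_latency_ms": 0.1, "throughput_mb_s": 500.0}

print(f"HDD slowdown: {fragmentation_slowdown(HDD):.1f}x")
print(f"SSD slowdown: {fragmentation_slowdown(SSD):.1f}x")
```

Under these assumptions, splitting a 100 MB file into 1000 pieces makes the mechanical disk more than ten times slower, while the SSD slows down by well under a factor of two, so there is much less for defragmentation to win back.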

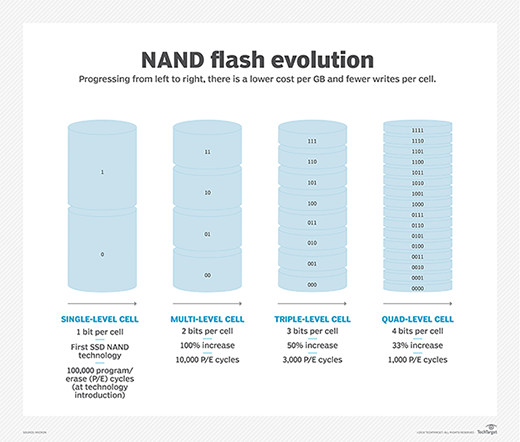

Although solid-state drives, as electronic components, offer better performance, they can only endure a limited number of program/erase cycles, also known as P/E cycles, so their lifespan is relatively limited compared with mechanical drives. Suppose a 512 GB solid-state drive is rated for 1000 P/E cycles: every time the full capacity of the drive is written, one cycle is used up, and the drive is worn out once 1000 cycles have been consumed. Defragmentation actively moves data around on the drive, so it naturally eats into the life of the hardware.
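The arithmetic behind this example can be written out as follows. It is a simplified sketch that assumes writes are spread evenly over the drive and ignores write amplification; the function names are made up for illustration.

```python
def total_write_budget_gb(capacity_gb, pe_cycles):
    """Total data that can be written before the rated cycles are used up."""
    return capacity_gb * pe_cycles


def cycles_consumed(written_gb, capacity_gb):
    """Average P/E cycles used by writing `written_gb` to the drive."""
    return written_gb / capacity_gb


capacity_gb, pe_cycles = 512, 1000

budget = total_write_budget_gb(capacity_gb, pe_cycles)
print(budget)  # 512000 GB, i.e. 512 TB of writes over the drive's life

# A defragmentation pass that rewrites 256 GB of data:
used = cycles_consumed(256, capacity_gb)
print(used, used / pe_cycles)  # 0.5 cycle, or 0.05% of the rated lifetime
```

A single pass is cheap, but the cost is paid on every pass, and on an SSD it buys very little performance in return.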

Summary

There is a very interesting phenomenon in software engineering: hardware and infrastructure engineers work desperately to squeeze more performance out of the system, while application-layer engineers often do not care about small differences in performance. This is a consequence of differing job responsibilities; different positions have different priorities.

The evolution and innovation of hardware deeply affects the design of the software above it. It is extremely difficult to design a universal file system that ignores the characteristics of the underlying hardware, because such a design cannot take full advantage of the performance the hardware provides or deliver the desired results. Here is a brief summary of the two reasons why Linux and macOS do not need defragmentation:

- The block-allocation design of their file systems makes fragmentation on the disk very unlikely, and delayed allocation together with automatic defragmentation means that users rarely have to think about defragmenting a disk.

- The random read and write performance of SSDs is far better than that of mechanical drives. There is still a gap between random and sequential access, but it is much smaller than on mechanical drives, and frequent defragmentation shortens the life of an SSD.