We often expose private information such as application passwords or API tokens directly in source code, which is obviously a poor way to handle it. Kubernetes provides the Secret object for storing private data, but Secret values are only Base64 encoded. That is better than hard-coding them, but it is still not enough for applications with high security requirements. This article introduces how to use HashiCorp Vault for secrets management in a Kubernetes cluster.
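To see why Base64 encoding alone gives no confidentiality, here is a minimal Python sketch. The password is a made-up value for illustration, not one taken from this article; it mimics how a value is stored under the data field of a Secret manifest.

```python
import base64

# Hypothetical password, used only for illustration.
password = "example-password"

# A Secret manifest stores the value Base64 encoded in its data field.
encoded = base64.b64encode(password.encode("utf-8")).decode("ascii")

# Anyone who can read the manifest can reverse the encoding; no key is needed.
decoded = base64.b64decode(encoded).decode("utf-8")

print(encoded)
print(decoded)
```

The round trip needs nothing but the encoded text, which is why a Secret on its own protects the value only from casual viewing.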

Introduction to Vault

Vault is a central management service for storing and encrypting secrets across your infrastructure. Vault manages all secrets through secrets engines, and it ships with a set of engines to choose from.

Using Vault has many advantages:

- A secrets management service can be thought of as 1Password for the back end. First of all, it secures the storage of secrets: whoever obtains the service’s on-disk data files still cannot decrypt them without the key.

- To read passwords, keys, and other sensitive data stored in Vault, a client needs a token assigned by an administrator. The administrator can apply security policies to these tokens, including expiration, revocation, renewal, and permission management.

- Vault is secure enough to be exposed on the public network, so you can provide a Vault for the development environment, which makes it convenient for developers to work from home or off-site.

- Administrators can update the passwords or keys of individual data services at any time through Vault, and can revoke or modify the permissions of specific tokens at any time. This is useful when rolling out updates.

- Using Vault forces code to obtain connection passwords and keys through the Vault interface, which prevents developers from inadvertently obtaining and hard-coding secrets. Because of how Vault is managed, we can also run separate Vaults for different development stages even though there is only one copy of the code. It is even possible for a single person to hold Vault management privileges in production without being overwhelmed by maintenance.

- All secret access and modification is logged, which can serve as evidence and as a lead when investigating an intrusion after the fact.

- Database credentials and API keys are no longer scattered throughout the code.

Installation

Again for convenience, we will use Helm 3 to install Vault on the Kubernetes cluster. The environment versions are shown below.

You can install Vault using the official chart provided by HashiCorp: https://github.com/hashicorp/vault-helm. The chart is not published to a chart repository, so we can clone the repository to the client where Helm 3 is installed and install from there, or install directly from a release package with the following command:

The above command installs a Helm release named vault in the kube-system namespace.

Once the two Vault-related Pods above are running, the installation has succeeded. Installation is easy, so let’s focus on how to use Vault.

Usage

Suppose the application needs Vault to store a database username and password under the internal/database/config path. To create the secret, first enable the kv secrets engine, then put the username and password at that path.

First, open an interactive shell in the vault-0 container.

Enable the kv-v2 secrets engine at the internal path.

Then add a username and password secret at the internal/database/config path.

Once created, the secret can be verified with the following command.
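As a rough sketch of the same write and read done over Vault's HTTP API rather than the CLI, the Python below builds the two requests without sending them. The Vault address, token, and credential values are placeholders chosen for illustration. The point to notice is that a kv-v2 mount serves secret data under an extra data/ segment in the API path.

```python
import json
import urllib.request


def kv2_url(vault_addr: str, mount: str, secret_path: str) -> str:
    """Return the KV v2 HTTP API URL for a secret (note the data/ segment)."""
    return f"{vault_addr.rstrip('/')}/v1/{mount}/data/{secret_path}"


def build_write_request(vault_addr, token, mount, secret_path, values):
    """Build (but do not send) the request that stores values at a KV v2 path."""
    body = json.dumps({"data": values}).encode("utf-8")
    return urllib.request.Request(
        kv2_url(vault_addr, mount, secret_path),
        data=body,
        method="POST",
        headers={"X-Vault-Token": token, "Content-Type": "application/json"},
    )


def build_read_request(vault_addr, token, mount, secret_path):
    """Build (but do not send) the request that reads a KV v2 secret back."""
    return urllib.request.Request(
        kv2_url(vault_addr, mount, secret_path),
        method="GET",
        headers={"X-Vault-Token": token},
    )


# Placeholder address, token, and credentials, for illustration only.
write_req = build_write_request(
    "http://127.0.0.1:8200", "example-token", "internal", "database/config",
    {"username": "example-user", "password": "example-password"},
)
read_req = build_read_request(
    "http://127.0.0.1:8200", "example-token", "internal", "database/config"
)
print(write_req.get_method(), write_req.full_url)
print(read_req.get_method(), read_req.full_url)
```

Passing either request to urllib.request.urlopen would send it to a reachable Vault server; the stored key/value pairs come back nested under data.data in the JSON response.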

The username and password are now stored in Vault. Vault provides a Kubernetes authentication method that lets clients authenticate with a Kubernetes ServiceAccount.

Enable the Kubernetes authentication method.

Vault accepts ServiceAccount tokens from any client in the Kubernetes cluster. During authentication, Vault verifies the ServiceAccount token against the configured Kubernetes address. Configure the Kubernetes authentication method with the ServiceAccount token, the Kubernetes address, and the CA certificate.

The values for both token_reviewer_jwt and kubernetes_ca_cert are injected into the Pod by Kubernetes by default, and the built-in environment variable KUBERNETES_PORT_443_TCP_ADDR holds the in-cluster address of the Kubernetes API server. For the client to read the secret data defined in the previous step under the internal/database/config path, you also need to grant read permission on that path.
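The three configuration values can be gathered from inside a Pod roughly as follows. This is a sketch that assumes the default ServiceAccount mount directory, /var/run/secrets/kubernetes.io/serviceaccount, and that the API server listens on port 443; the function name is hypothetical.

```python
import os
from pathlib import Path

# Default mount point of the ServiceAccount credentials inside a Pod (assumed).
SA_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def kubernetes_auth_config(env=os.environ, sa_dir=SA_DIR):
    """Collect the values the Kubernetes auth method is configured with."""
    host = env["KUBERNETES_PORT_443_TCP_ADDR"]
    return {
        # Address Vault uses to reach the Kubernetes API server.
        "kubernetes_host": f"https://{host}:443",
        # ServiceAccount token Vault presents when verifying client tokens.
        "token_reviewer_jwt": (Path(sa_dir) / "token").read_text().strip(),
        # CA certificate used to verify the API server's TLS certificate.
        "kubernetes_ca_cert": (Path(sa_dir) / "ca.crt").read_text(),
    }
```

The resulting dictionary has the same field names as the auth/kubernetes/config endpoint, so it could be written there as a JSON body or passed as key=value arguments on the CLI.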

Here we create a policy named internal-app that grants read access to the secret at the path internal/database/config.
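A policy of this kind is a short HCL document. The sketch below renders one in Python and wraps it in the JSON body accepted by the sys/policies/acl endpoint. One assumption is worth stating: because the mount is kv-v2, the policy path is written with the data/ segment (internal/data/database/config), which is the path the API actually serves. The helper name is hypothetical.

```python
import json


def read_policy_hcl(mount: str, secret_path: str) -> str:
    """Render an ACL policy that grants read on a single KV v2 secret."""
    # KV v2 serves secret data under <mount>/data/<path>, so the policy
    # names that API path rather than the shorter CLI-style path.
    return (
        f'path "{mount}/data/{secret_path}" {{\n'
        '  capabilities = ["read"]\n'
        "}\n"
    )


policy = read_policy_hcl("internal", "database/config")

# JSON body for PUT /v1/sys/policies/acl/internal-app
payload = json.dumps({"policy": policy})

print(policy)
```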

Then create a Kubernetes authentication role named internal-app.

This role binds a ServiceAccount named internal-app in the Kubernetes default namespace to Vault’s internal-app policy, and the token returned after authentication is valid for 24 hours. Finally, exit the vault-0 container.
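The binding described above can be pictured as the following parameter set for the auth/kubernetes/role/internal-app endpoint. This is a sketch, not the author's exact command: it uses the token_policies and token_ttl parameter names from current Vault releases, and the helper name is hypothetical.

```python
def kubernetes_role(service_account: str, namespace: str, policy: str, ttl: str = "24h") -> dict:
    """Describe a Kubernetes auth role binding one ServiceAccount to one policy."""
    return {
        # Only this ServiceAccount, in this namespace, may log in with the role.
        "bound_service_account_names": [service_account],
        "bound_service_account_namespaces": [namespace],
        # Policies attached to the token that Vault issues on login.
        "token_policies": [policy],
        # Lifetime of the issued token.
        "token_ttl": ttl,
    }


role = kubernetes_role("internal-app", "default", "internal-app")
print(role)
```

A login attempt from any other ServiceAccount or namespace is rejected, which is what keeps the read policy scoped to this one application.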

With the Vault-side preparation done, the next step is to read the secret data from Kubernetes. The role above refers to a ServiceAccount named internal-app in the default namespace, which does not exist yet, so create it first: (vault-sa.yaml)

Create it directly.

Then use the ServiceAccount created above in our application: (vault-demo.yaml)

The important part is that the spec.template.spec.serviceAccountName field must reference the internal-app ServiceAccount created above. Again, create it directly.

Normally, the vault-agent-injector deployed with Vault looks at the annotations of applications deployed in the Kubernetes cluster and processes them. Our Deployment has no such annotations yet, so the Pod whose name ends in -7fb8449d7b-x8bft does not receive any secret data, which can be verified with the following command.

You can see that there is no secret data in the container. We now need to add annotations that tell the injector how to fetch the secret data: (vault-inject.yaml)

The annotations above define the Vault-related settings, all starting with the vault.hashicorp.com prefix.

- agent-inject indicates that the Vault Agent injection service is enabled.

- role indicates the Vault Kubernetes authentication role.

- agent-inject-secret-FILEPATH sets the name of the file, here database-config.txt, that is written to /vault/secrets; its value is the storage path of the secret data defined in Vault.
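The three annotations just described can be assembled into a Deployment patch as in this Python sketch. The secret path value internal/data/database/config is an assumption: it is the kv-v2 API form of the path used in this article. The helper name is hypothetical.

```python
import json

PREFIX = "vault.hashicorp.com"


def injection_patch(role: str, file_name: str, secret_path: str) -> dict:
    """Build a Deployment patch that adds the Vault Agent injector annotations."""
    annotations = {
        # Annotation values must be strings, hence "true" rather than True.
        f"{PREFIX}/agent-inject": "true",
        f"{PREFIX}/role": role,
        # The suffix after agent-inject-secret- becomes the file name
        # under /vault/secrets; the value is the secret's path in Vault.
        f"{PREFIX}/agent-inject-secret-{file_name}": secret_path,
    }
    # Annotations go on the Pod template, not on the Deployment itself.
    return {"spec": {"template": {"metadata": {"annotations": annotations}}}}


patch = injection_patch(
    "internal-app", "database-config.txt", "internal/data/database/config"
)
print(json.dumps(patch, indent=2))
```

Because the annotations sit on the Pod template, applying the patch changes the template and triggers a rollout, so new Pods are created and pass through the injector.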

Use the annotations defined above to patch the Deployment.

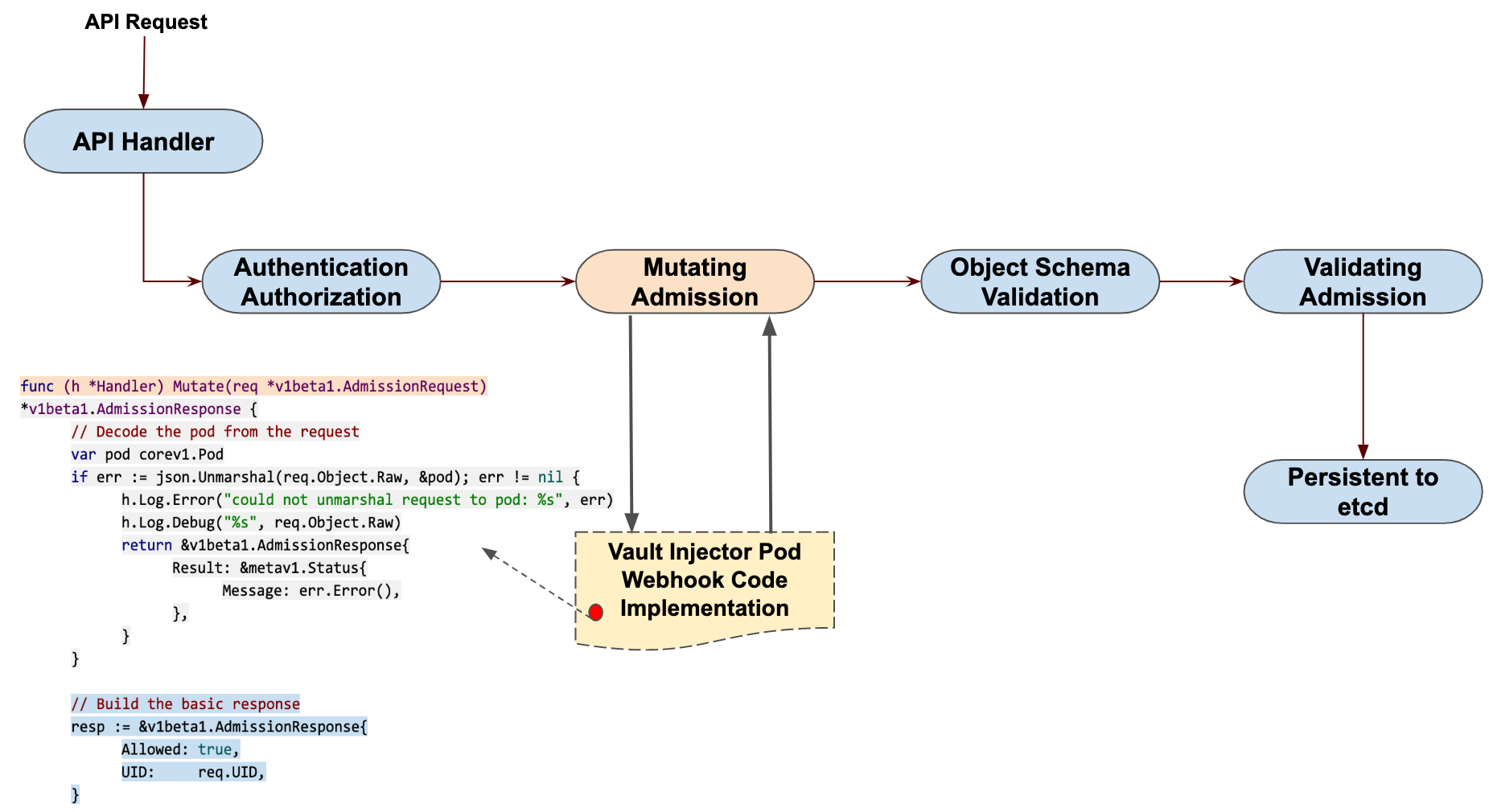

The new Pod now contains two containers: the vault-demo container we defined and a Vault Agent container named vault-agent. The vault-agent sidecar is added to the Pod automatically by a Mutating Admission Webhook, the same mechanism Istio uses to inject its sidecar.

We can now view the logs of the vault-agent container.

The vault-agent container manages the entire token lifecycle and the retrieval of secret data, and the secret we defined is written into the application container at /vault/secrets/database-config.txt.

The secret data is now available inside our application container. In practice, an application can also read secret data directly through an SDK provided by Vault. For example, the following reads dynamic authentication data through the Vault SDK.
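As an illustration of direct access, the following Python sketch uses Vault's HTTP API with only the standard library instead of an SDK, and reads the static kv secret from this article rather than dynamic credentials. The Vault address is a parameter the caller must supply, the ServiceAccount token path is the Kubernetes default, and the function names are hypothetical.

```python
import json
import urllib.request

# Default location of the ServiceAccount token inside a Pod (assumed).
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def login_request(vault_addr: str, role: str, jwt: str) -> urllib.request.Request:
    """Build the Kubernetes auth login request for the given role."""
    body = json.dumps({"role": role, "jwt": jwt}).encode("utf-8")
    return urllib.request.Request(
        f"{vault_addr}/v1/auth/kubernetes/login",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def client_token(login_response: dict) -> str:
    """Extract the Vault token from a login response."""
    return login_response["auth"]["client_token"]


def secret_values(read_response: dict) -> dict:
    """Extract the stored key/value pairs from a KV v2 read response."""
    # KV v2 nests the pairs under data.data, next to data.metadata.
    return read_response["data"]["data"]


def fetch_database_config(vault_addr: str, role: str = "internal-app") -> dict:
    """Log in with the Pod's ServiceAccount token, then read the secret."""
    with open(SA_TOKEN_PATH) as f:
        jwt = f.read().strip()
    with urllib.request.urlopen(login_request(vault_addr, role, jwt)) as resp:
        token = client_token(json.load(resp))
    read_req = urllib.request.Request(
        f"{vault_addr}/v1/internal/data/database/config",
        headers={"X-Vault-Token": token},
    )
    with urllib.request.urlopen(read_req) as resp:
        return secret_values(json.load(resp))
```

Calling fetch_database_config only works from a Pod that runs under the internal-app ServiceAccount and can reach the Vault server; the two parsing helpers can be exercised on their own with sample responses.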

Also note that tokens issued through the authentication role defined above expire after 24 hours, so perform a renew operation before a token expires.
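For an application that logs in to Vault itself rather than relying on the vault-agent sidecar, renewal can be scheduled from the lease duration returned at login. The sketch below assumes renewing halfway through the lifetime, which is an arbitrary choice for illustration, and builds a request for the auth/token/renew-self endpoint without sending it. The function names are hypothetical.

```python
import json
import urllib.request


def seconds_until_renewal(lease_duration: int, fraction: float = 0.5) -> float:
    """Return how long to wait after login before renewing the token."""
    if lease_duration <= 0:
        raise ValueError("lease_duration must be positive")
    if not 0 < fraction < 1:
        raise ValueError("fraction must be between 0 and 1")
    return lease_duration * fraction


def renew_self_request(vault_addr: str, token: str, increment: str = "24h") -> urllib.request.Request:
    """Build (but do not send) a request that renews the caller's own token."""
    body = json.dumps({"increment": increment}).encode("utf-8")
    return urllib.request.Request(
        f"{vault_addr}/v1/auth/token/renew-self",
        data=body,
        method="POST",
        headers={"X-Vault-Token": token, "Content-Type": "application/json"},
    )


# A 24-hour token (86400 seconds) would be renewed after 12 hours.
wait = seconds_until_renewal(24 * 60 * 60)
print(wait)
```

Renewing well before expiry leaves room to retry if Vault is briefly unreachable; once a token has expired it cannot be renewed and the client has to log in again.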

For more information on the use of Vault in conjunction with Kubernetes, check out the official documentation at https://learn.hashicorp.com/vault/getting-started-k8s/k8s-intro.