In almost all modern programming languages, the garbage collector is a complex system that requires a lot of effort in order to reclaim the deprecated memory without affecting the user program.

Java’s garbage collection mechanism is a good example. Java 8 contains four garbage collectors, linear, concurrent, parallel marker removal, and G1. It takes a lot of effort to understand how they work and the details of their implementation.

In this section, we will not only discuss the common garbage collection mechanisms and analyze their evolution from the v1.0 version of the Go language, but also dive into the source code to analyze how the garbage collectors work.

Design Principles

Today’s programming languages typically use both manual and automatic memory management. Programming languages such as C, C++, and Rust use manual memory management where engineers need to actively request or release memory, while languages such as Python, Ruby, Java, and Go use automatic memory management systems, generally garbage collection mechanisms, although Objective-C chooses automatic reference counting. Although reference counting is also an automatic memory management mechanism, we will not go into detail about it here; the focus of this section is still on garbage collection.

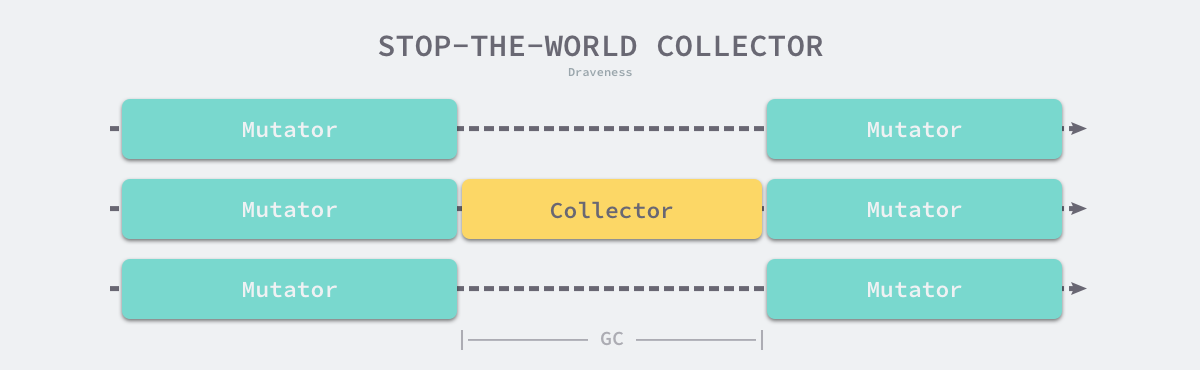

I believe that many people have the impression that the garbage collector is a suspended program (Stop the world, STW). As the user program requests more and more memory, the garbage in the system gradually increases; when the memory occupation of the program reaches a certain threshold, the entire application will be suspended and the garbage collector will scan all the objects that have been allocated and reclaim the memory space that is no longer in use.

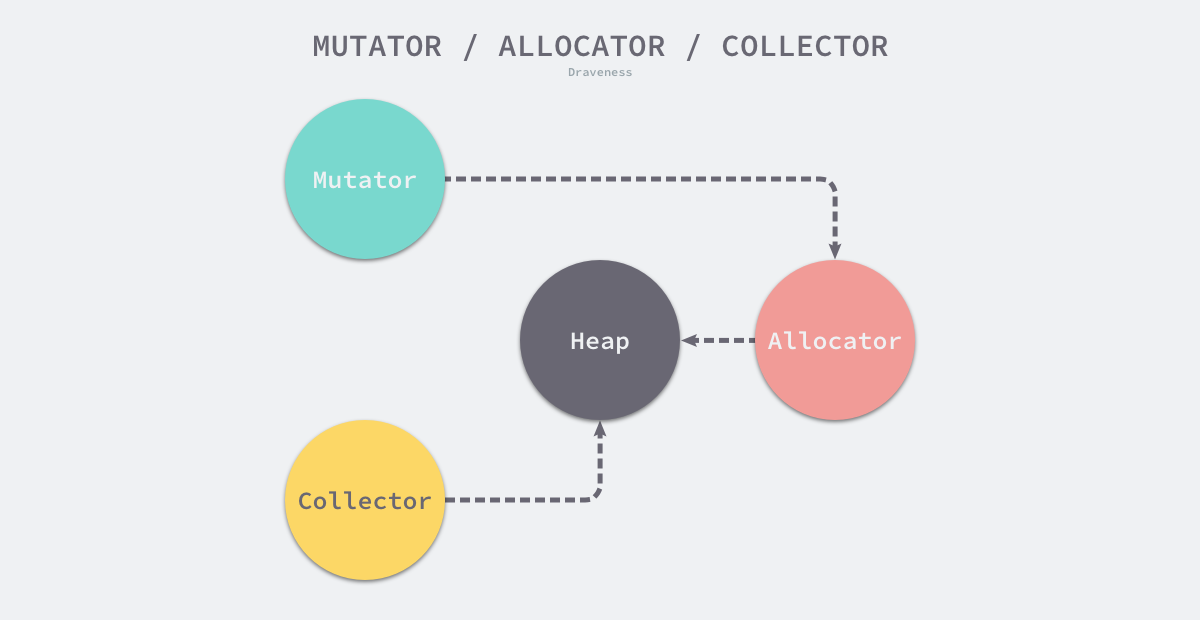

In the above diagram, the user program (Mutator) requests memory on the heap through the memory allocator (Allocator) and the garbage collector (Collector) reclaims the memory space on the heap. The memory allocator and the garbage collector together manage the heap memory space in the program. In this section, we will go over the key theories involved in Go language garbage collection to help us better understand the rest of this section.

Mark-Sweep

The Mark-Sweep algorithm is the most common garbage collection algorithm. The Mark-Sweep collector is a trace-based garbage collector whose execution can be divided into two phases: Mark and Sweep.

- marking phase - finding and marking all surviving objects in the heap from the root object.

- the cleanup phase - iterates through all objects in the heap, reclaiming unmarked garbage objects and adding the reclaimed memory to the free chain.

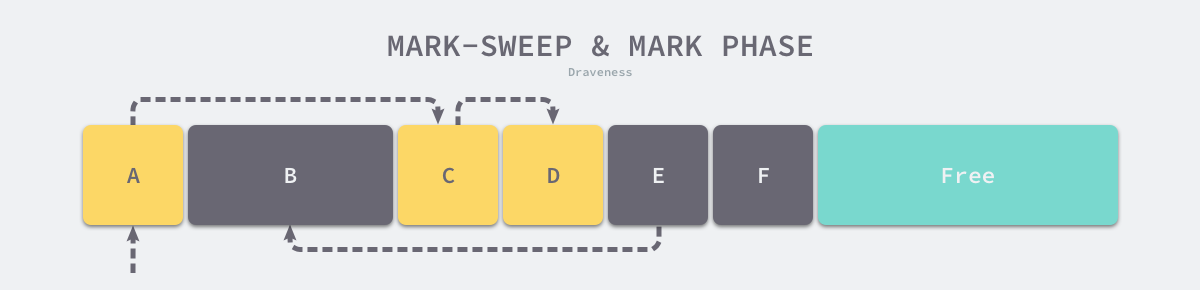

As shown in the figure below, the memory space contains multiple objects, we start from the root object and iterate through the object’s children and mark the objects reachable from the root node as alive, i.e., A, C and D. The remaining three objects, B, E and F, are treated as garbage because they are not reachable from the root node.

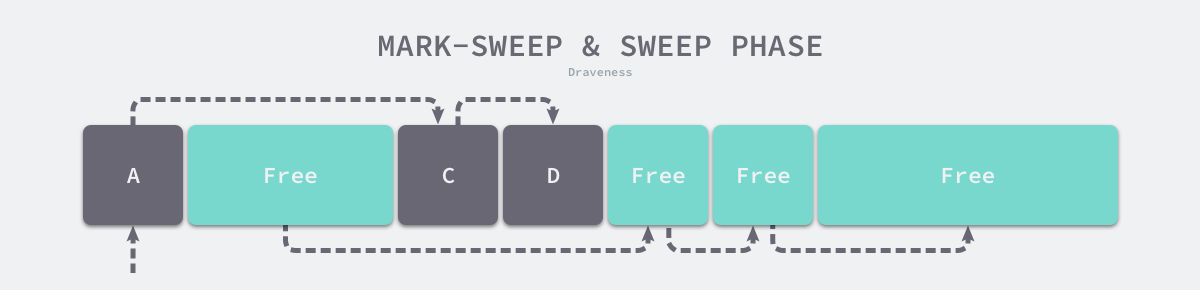

After the tagging phase, it enters the cleanup phase, in which the collector traverses all objects in the heap in turn, releasing the untagged B, E, and F objects and chaining the new free memory space in a chain structure for the memory allocator to use.

Here is the most traditional marker removal algorithm, the garbage collector starts from the root object of garbage collection, recursively iterates through the sub-objects pointed to by these objects and marks all the reachable objects as alive; after the marking phase, the garbage collector will sequentially iterate through the objects in the heap and remove the garbage from them, the whole process needs to mark the alive status of the objects, and the user program cannot be executed during the garbage collection process, we need to use a more complex mechanism to solve the problem of STW.

Three-color abstraction

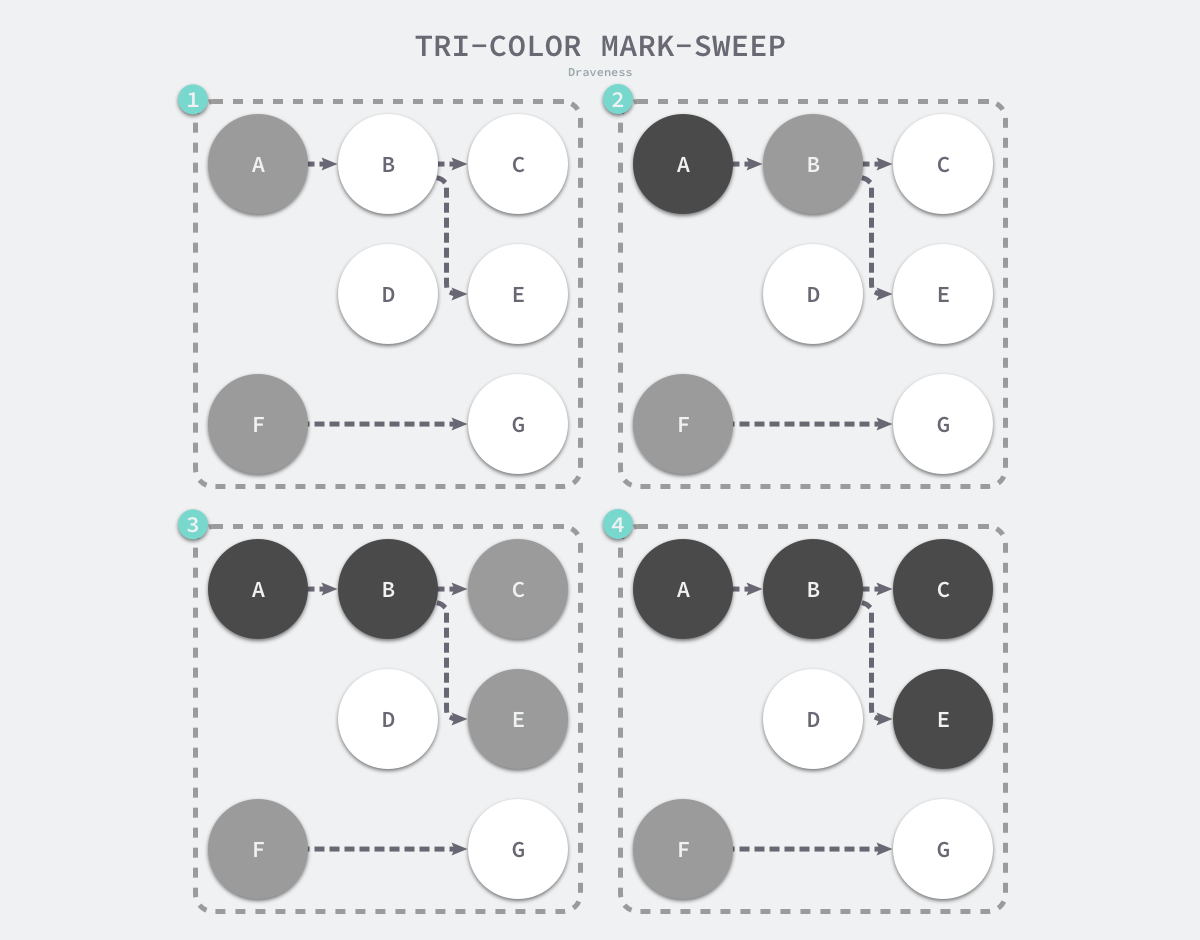

To address the long STW caused by the original marker removal algorithm, most modern trace garbage collectors implement a variant of the three-color marker algorithm to reduce the STW time. The three-color tagging algorithm classifies objects in the program into three categories: white, black, and gray.

1 white objects - potentially garbage whose memory may be reclaimed by the garbage collector. 2. black objects - active objects, including objects without any reference to external pointers and objects reachable from the root object. 3. gray objects - active objects, because of the presence of external pointers to white objects, whose children are scanned by the garbage collector.

When the garbage collector starts working, there are no black objects in the program, the root object of garbage collection will be marked as gray, the garbage collector will only take objects from the gray object collection and start scanning, the marking phase will end when there are no objects in the gray collection.

The working principle of the three-colored marker garbage collector is simple and we can summarize it in the following steps.

- select a gray object from the set of gray objects and mark it as black.

- mark all objects pointed to by the black object as gray, ensuring that neither the object nor the objects referenced by it will be reclaimed.

- repeat the above two steps until there are no gray objects in the object graph.

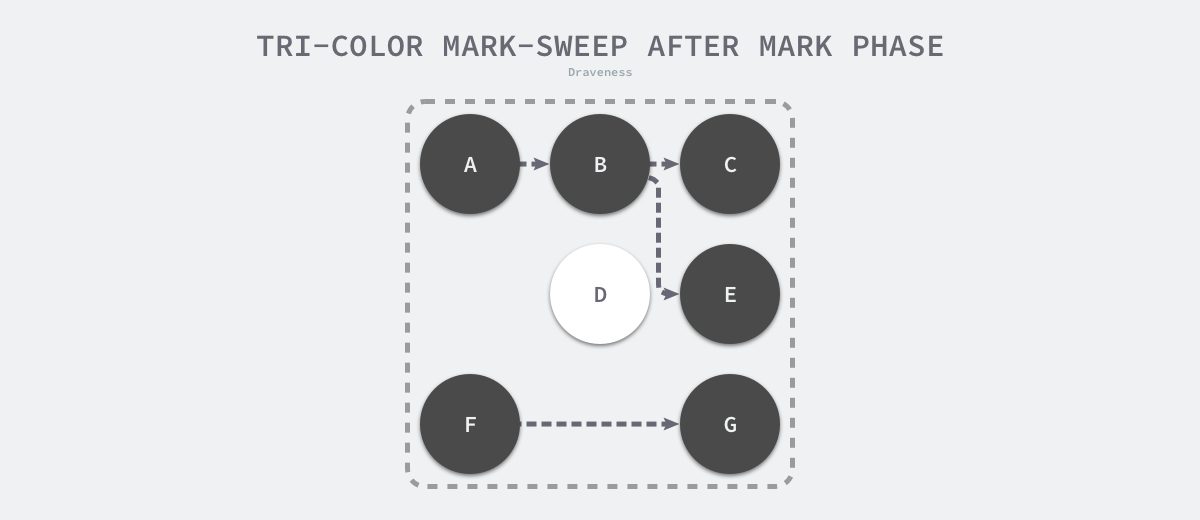

When the marking phase of the three-color marker cleanup is over, there are no gray objects in the heap of the application, we can only see black surviving objects and white garbage objects, which can be recycled by the garbage collector.

Because the user program may modify the object’s pointer during the marker execution, the three-color marker removal algorithm itself cannot be executed concurrently or incrementally; it still requires STW. In the three-color marker process shown below, the user program creates a reference from object A to object D, but since there is no longer a gray object in the program, object D is incorrectly reclaimed by the garbage collector.

Objects that should not be reclaimed but are reclaimed are very serious errors in memory management. We call such errors hanging pointers, i.e., pointers that do not point to a legal object of a specific type, affecting memory security. To mark objects concurrently or incrementally still requires the use of barrier techniques.

Barrier techniques

The memory barrier technique is a barrier instruction that allows the CPU or compiler to follow specific constraints when executing memory-related operations. Most modern processors today execute instructions out of order to maximize performance, but the technique ensures that memory operations are sequential, and that operations executed before the memory barrier must precede those executed after the memory barrier.

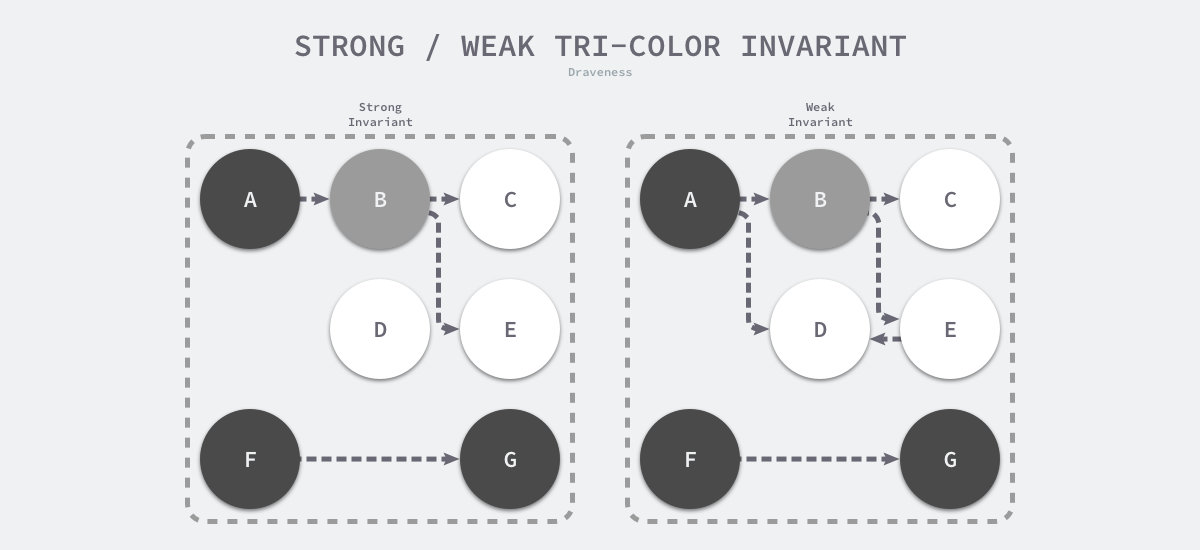

To guarantee correctness in concurrent or incremental marking algorithms, we need to reach one of the following two types of Tri-color invariant (TCI).

- strong tricolor invariance - black objects do not point to white objects, only to gray objects or black objects.

- weak tricolor invariant - the white object pointed to by the black object must contain a reachable path from the gray object through multiple white objects

The above diagram shows the heap memory with strong and weak tricolor invariance. By following either of the two invariants, we can guarantee the correctness of the garbage collection algorithm, and the barrier technique is an important technique to guarantee tricolor invariance during concurrent or incremental marking.

The barrier technique in garbage collection is more like a hook method, which is a piece of code that is executed when the user program reads an object, creates a new object, and updates the object pointer.

Depending on the type of operation, we can divide them into Read barriers and Write barriers. Since Read barriers require code fragments to be added to the read operation, which has a significant impact on the performance of the user program, programming languages tend to use Write barriers to ensure tri-color invariance.

Here we would like to introduce two write barrier techniques used in Go, namely, the insertion write barrier proposed by Dijkstra and the deletion write barrier proposed by Yuasa, and analyze how they guarantee tri-color invariance and correctness of the garbage collector.

Inserting Write Barriers

Dijkstra proposed in 1978 the insertion of a write barrier, by which the user program and the garbage collector can guarantee correct program execution while working alternately as follows.

The above pseudo-code for inserting the write barrier is pretty self-explanatory. Whenever we execute an expression like *slot = ptr, we execute the above write barrier to try to change the color of the pointer via the shade function. If the ptr pointer is white, then this function will set the object to gray, otherwise it will stay the same.

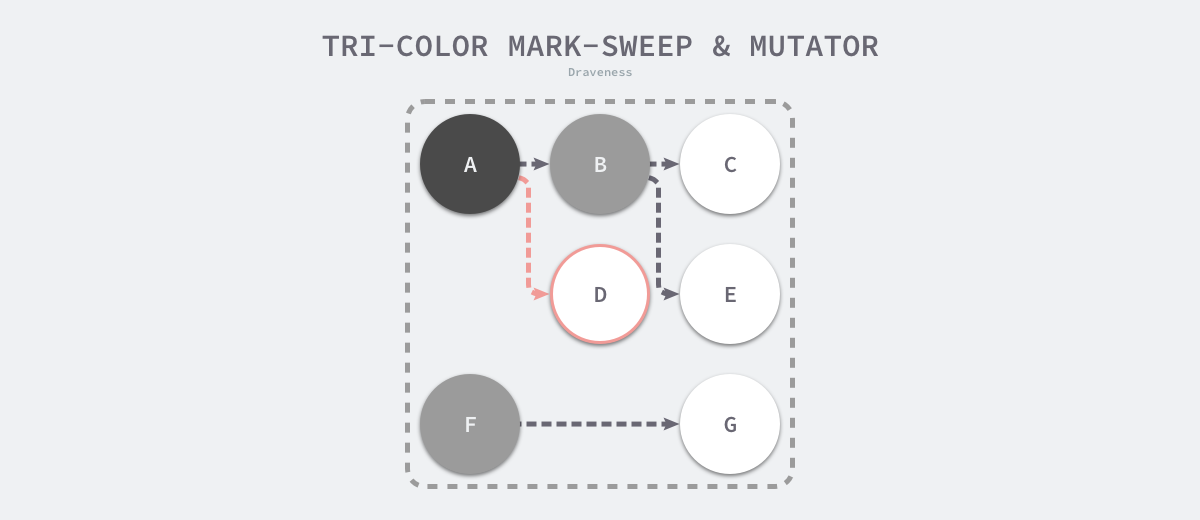

Assuming that we use Dijkstra’s proposed insertion-write barrier in our application, a scenario in which the garbage collector and the user program run alternately would result in a marking process as shown above.

- the garbage collector marks the root object pointing to object A as black and marks object B pointed to by object A as gray.

- the user program modifies the pointer to object A and points the pointer to object B to object C, which triggers the write barrier to mark object C as gray.

- the garbage collector iterates through the other gray objects in the program and marks each of them as black.

Dijkstra’s insertion-write barrier is a relatively conservative barrier technique that marks all potentially viable objects as gray to satisfy strong tricolor invariance. In the garbage collection process shown above, object B, which is no longer alive, is not recovered; if we change the pointer to object C back to B between the second and third steps, the garbage collector still considers object C alive, and these incorrectly marked garbage objects are recovered only in the next loop.

Although the plug-in Dijkstra write barrier is very simple to implement and guarantees strong tricolor invariance, it also has significant drawbacks. Because objects on the stack are also considered root objects in garbage collection, Dijkstra must either add a write barrier to objects on the stack or rescan the stack at the end of the marking phase in order to ensure memory safety, each of which has its own disadvantages. The designer of the algorithm needs to make a trade-off between the two.

Removing the write barrier

In his 1990 paper Real-time garbage collection on general-purpose machines, Yuasa proposed removing the write barrier because once it starts working, it guarantees the reachability of all objects on the heap when the write barrier is turned on. This is why it is also called Snapshot GC.

This guarantees that no objects will become unreachable to the garbage collector traversal all objects which are live at the beginning of garbage collection will be reached even if the pointers to them are overwritten.

The algorithm will guarantee the correctness of the program when garbage collection is performed incrementally or concurrently using a write barrier as shown below.

The above code will paint the old object in white gray when the reference to the old object is removed, so that removing the write barrier will ensure weak tricolor invariance and the downstream object referenced by the old object can definitely be referenced by the gray object.

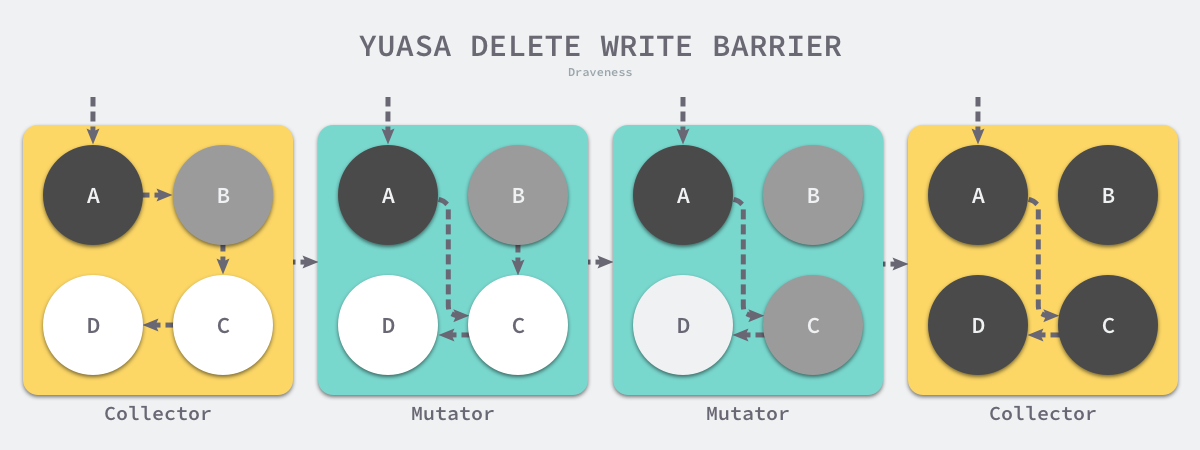

Assuming that we use Yuasa’s proposed removal of the write barrier in our application, a scenario in which the garbage collector and the user program run alternately would result in a marking process as shown above.

- the garbage collector marks the root object pointing to object A as black and marks object B pointed to by object A as gray.

- the user program points the pointer of object A originally pointing to B to C, triggering the removal of the write barrier, but leaves the object B unchanged because it is already gray.

- the user program removes the pointer to object B originally pointing to C, triggering the removal of the write barrier, and the white C object is grayed out. the garbage collector iterates through the other gray objects in the program in turn, marking them each as black.

The third step in the above procedure triggers Yuasa to remove the coloring of the write barrier because the user program removes the pointer of B to the C object, so the two objects C and D will violate strong and weak trichromatic invariance, respectively.

- strong tricolor invariant - the black A object points directly to the white C object.

- weak tricolor invariance - the garbage collector cannot access both white C and D objects from some gray object through several consecutive white objects.

Yuasa removes the write barrier by coloring the C object to ensure that the C object and the downstream D object survive this garbage collection loop and avoid hanging pointers to ensure the correctness of the user program.

Incremental and concurrent

Traditional garbage collection algorithms suspend the application during the execution of garbage collection. Once garbage collection is triggered, the garbage collector will seize CPU usage to occupy a large amount of computational resources to complete the marking and purging work, however, many applications pursuing real-time cannot accept a long STW.

Computational resources were not as abundant in ancient times as they are today, and today’s computers tend to have multi-core processors, and the garbage collector wastes a large amount of computational resources once it starts executing. In order to reduce the maximum time that an application can pause and the total pause time for garbage collection, we would optimize the modern garbage collector using the following strategy.

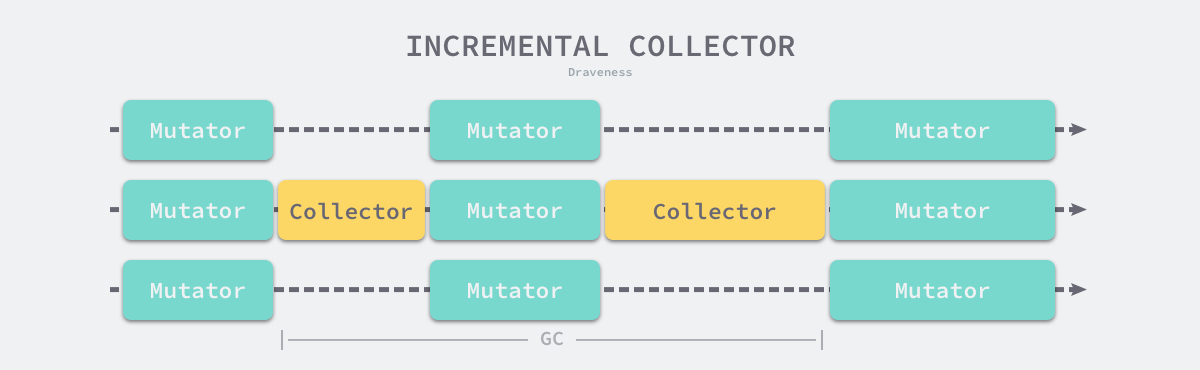

- Incremental garbage collection - incrementally marking and removing garbage, reducing the maximum amount of time an application can be suspended.

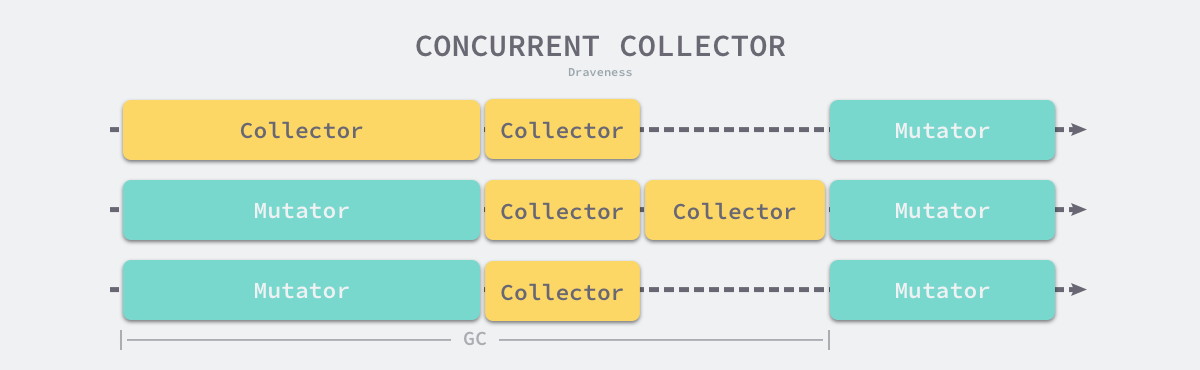

- Concurrent garbage collection - using the computing resources of multiple cores to mark and remove garbage concurrently while the user program is executing.

Because both incremental and concurrent can run alternately with the user program, we need to use barrier techniques to ensure correct garbage collection; at the same time, the application cannot wait until memory overflows to trigger garbage collection, because when memory is low, the application can no longer allocate memory, which is no different from directly suspending the program. Incremental and concurrent garbage collection needs to be triggered in advance and complete the whole loop before memory runs out, avoiding a long suspension of the program.

Incremental Collector

Incremental garbage collection is a solution to reduce the maximum amount of time a program can be paused by slicing an otherwise long pause into multiple smaller GC time slices, which reduces the maximum amount of time an application can be paused, even though it takes longer from the start of garbage collection to the end.

It should be noted that incremental garbage collection needs to be used with the tri-color marking method. To ensure correct garbage collection, we need to turn on the write barrier before garbage collection starts, so that user programs modifying memory will go through the write barrier first, ensuring strong tri-color invariance or weak tri-color invariance of object relationships in heap memory. Although incremental garbage collection can reduce the maximum program pause time, incremental collection also increases the total time of a GC loop, and during garbage collection, the user program also needs to bear additional computational overhead because of the write barrier, so incremental garbage collection does not bring only benefits, but the overall benefits still outweigh the disadvantages.

Concurrent Collector

Concurrent garbage collection reduces not only the maximum suspension time of the program, but also the entire garbage collection phase. By turning on the read/write barrier, taking advantage of multi-core parallel execution with the user program , concurrent garbage collectors can indeed reduce the impact of garbage collection on the application by.

Although the concurrent collector can run with the user program, not all phases can run with the user program, and some phases still need to suspend the user program, but compared with the traditional algorithm, concurrent garbage collection can execute the work that can be executed concurrently as much as possible; of course, because of the introduction of the read-write barrier, concurrent garbage collector must also bring extra overhead, which will not only increase the total time of garbage collection, but also affect the user program, which is something we must pay attention to when designing the garbage collection strategy.

Evolutionary Process

The Go language garbage collector has been evolving since day one, except for a few versions without major updates, almost every minor release will improve the performance of garbage collection, and along with the performance improvement is the complexity of the garbage collector code, this section will analyze the evolution of the garbage collector from the Go language v1.0.

- v1.0 - a fully serialized tagging and purging process, requiring a pause of the entire process.

- v1.1 - parallel execution of the mark and purge phases of garbage collection on multicore hosts.

- v1.3 - runtime added support for accurate scanning of stack memory based on the assumption that only values of pointer types contain pointers, enabling truly accurate garbage collection.

- the conversion of unsafe.Pointer types to values of integer types identified as illegitimate, which could cause serious problems such as hanging pointers.

- v1.5 - Implemented a concurrent garbage collector based on a three-color marker sweep.

- a significant reduction in garbage collection latency from several hundred ms to less than 10ms.

- calculating the appropriate time for garbage collection to start and accelerating the process by concurrency.

- v1.6 - implementation of a decentralized garbage collection coordinator.

- explicitly based state machine enabling any Goroutine to trigger state migration for garbage collection.

- using dense bitmaps instead of heap memory represented by idle chains to reduce CPU usage during the purge phase.

- v1.7 - reducing garbage collection time to less than 2ms by parallel stack shrinkage.

- v1.8 - reducing garbage collection time to within 0.5ms using a hybrid write barrier.

- v1.9 - completely removed the process of rescanning the stack for suspended procedures.

- v1.10 - updated the implementation of the garbage collection modulator (Pacer) to separate soft and hard heap size targets.

- v1.12 - simplifying several phases of the garbage collector with a new marked termination algorithm.

- v1.13 - solving the problem of returning memory to the operating system for applications with excessive transient memory usage with a new Scavenger.

- v1.14 - optimizing the speed of memory allocation with a new page allocator.

We can see from the evolution of the Go language garbage collector that the implementation and algorithms of the component have become more and more complex. The initial garbage collector was an imprecise single-threaded STW collector, but the latest version of the garbage collector supports concurrent garbage collection, decentralized coordination, and other features, and we will introduce the components and features associated with the latest version of the garbage collector here.

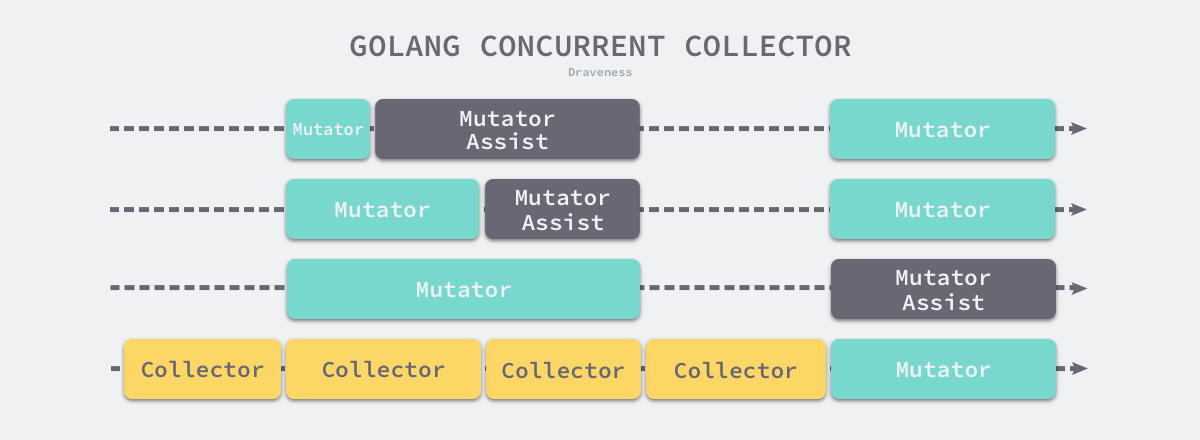

Concurrent Garbage Collection

The Go language introduced concurrent garbage collector in v1.5. The garbage collector uses the three-color abstraction and write barrier techniques we mentioned above to ensure the correctness of the garbage collector execution.

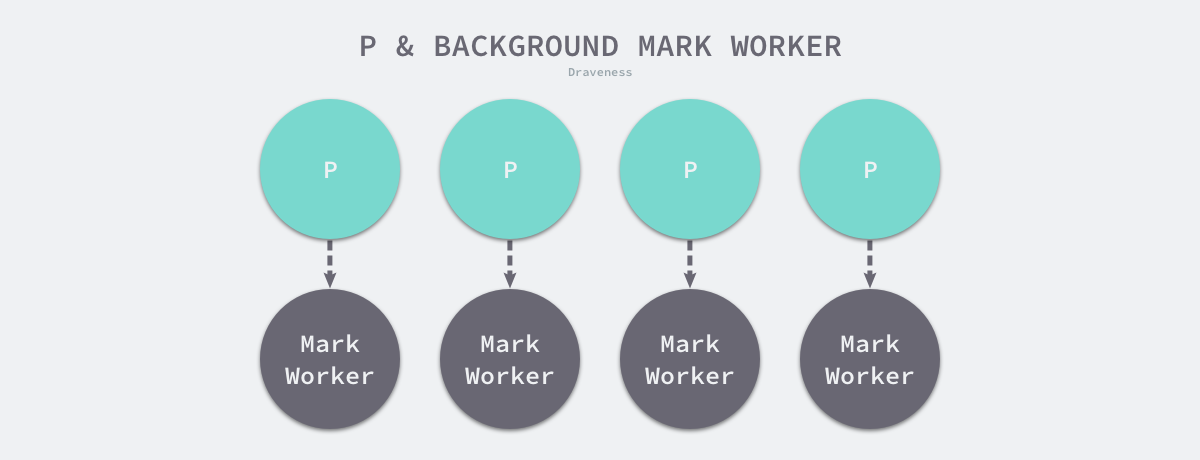

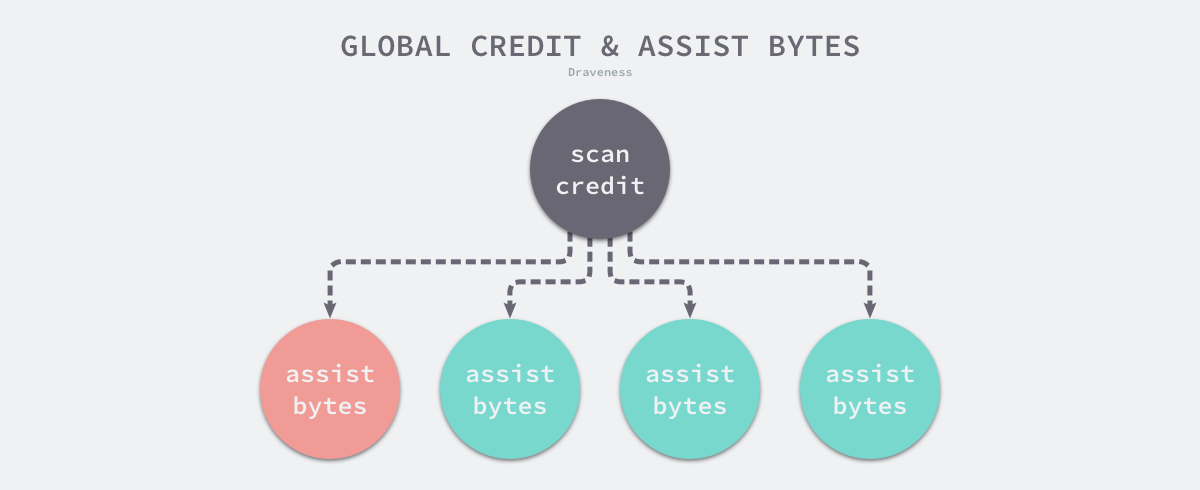

First, the concurrent garbage collector must trigger the garbage collection loop at the right point in time. Assuming our Go language program is running on a 4-core physical machine, then after garbage collection starts, the collector takes 25% of the computational resources in the background to scan and mark objects in memory.

The Go language’s concurrent garbage collector pauses the program to do some preparatory work for marking objects before scanning them, including starting the background marked garbage collector and turning on the write barrier. If the garbage collector executing in the background is not fast enough and the application requests memory faster than expected, the runtime will let the application requesting memory assist in completing the scanning phase of garbage collection, and after the marking and marking termination phases are over it will enter an asynchronous cleanup phase to reclaim the unused memory increments.

The v1.5 implementation of the concurrent garbage collection strategy has a dedicated Goroutine responsible for synchronizing and coordinating the state of garbage collection among processors. When other Goroutines discover that garbage collection needs to be triggered, they need to notify the main Goroutine responsible for modifying the state, however this notification process introduces a certain delay, the time window of this delay is likely to be uncontrollable, and the user program will continue to allocate memory during this time.

v1.6 introduces a decentralized garbage collection coordination mechanism that turns the garbage collector into an explicit state machine, and any Goroutine can call methods to trigger the migration of state.

- runtime.gcStart - switches from _GCoff to _GCmark phase, enters the concurrent mark phase and opens the write barrier.

- runtime.gcMarkDone - call * runtime.gcMarkTermination if all reachable objects have finished scanning.

- runtime.gcMarkTermination - converts from _GCmark to _GCmarktermination phase, enters mark termination phase and enters _GCoff upon completion.

The above three methods were introduced in a commit related to the runtime: replace GC coordinator with state machine issue, and they remove what used to be a centralized state migration process.

Recycle heap target

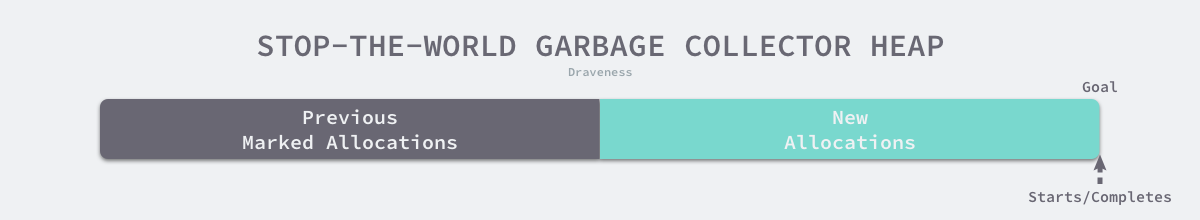

STW’s garbage collector, although it needs to pause the program, can effectively control the size of the heap memory. The default configuration of the Go language runtime triggers a new round of garbage collection when the heap memory reaches twice the size of the previous one, a behavior that can be adjusted by the environment variable GOGC, which by default has a value of 100, i.e. it takes a 100% growth of the heap memory to trigger a GC.

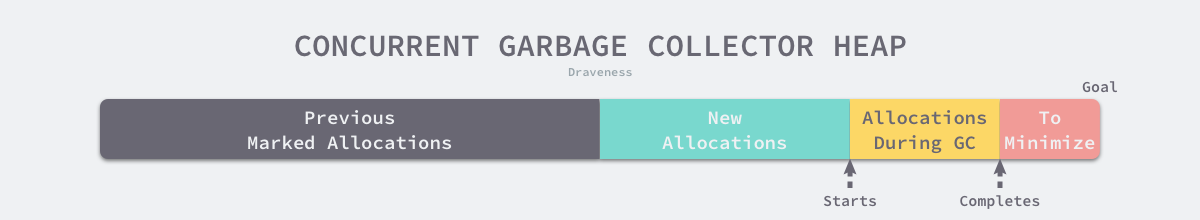

Because the concurrent garbage collector will run with the program, it cannot accurately control the size of the heap memory. The concurrent collector needs to trigger garbage collection before reaching the target so that the memory size can be controlled, and the concurrent collector needs to ensure that the heap memory at the end of garbage collection is as consistent as possible with the user-configured GOGC.

The Go language v1.5 introduces concurrent garbage collector while using the garbage collection pacing algorithm to calculate the optimal time to trigger garbage collection, ensuring that the trigger time neither wastes computational resources nor exceeds the expected heap size. As shown above, the black part is the heap size marked after the last garbage collection, the green part is the newly allocated memory since the last garbage collection ended, and since we use concurrent garbage collection, the yellow part is the memory allocated during the garbage collection, and the last red part is the difference between the end of garbage collection and the target, we want to reduce the red part of memory as much as possible to reduce the We want to reduce as much as possible the red part of the memory to reduce the extra overhead and the suspension time of the program.

The garbage collection pacing algorithm was introduced along with v1.5, and the goal of the algorithm is to optimize the heap growth rate and the CPU utilization of the garbage collector.23 In v1.10, the algorithm was optimized, and the original destination heap size was split into two goals: hard and soft.24 Because adjusting the execution frequency of garbage collection involves more complex formulas, which are of limited help in understanding the principle of garbage collection, this section does not include the following more limited, this section will not be introduced, interested readers can read for themselves.

Mixed write barriers

Prior to Go v1.7, the runtime used Dijkstra to insert write barriers to ensure strong tricolor invariance, but the runtime did not turn on inserting write barriers on all garbage collection root objects. Because applications can contain hundreds or thousands of Goroutines, and the root object of garbage collection generally includes global variables and stack objects, it would be a huge additional overhead to turn on write barriers on the stacks of hundreds of Goroutines at runtime, so the Go team chose to pause the program at the end of the marking phase, mark all stack objects as gray, and rescan In a program with a lot of active Goroutines, the rescan process takes 10-100ms.

The Go language in v1.8 combines the Dijkstra insertion write barrier and the Yuasa deletion write barrier to form a hybrid write barrier that marks overwritten objects as gray and marks new objects as gray when the current stack is not scanned as follows.

To remove the stack rescanning process, in addition to introducing a mixed write barrier, we need to mark all new objects created as black during the mark phase of garbage collection to prevent objects in newly allocated stack memory and heap memory from being reclaimed incorrectly, since the stack memory exists mark phase will eventually turn black, so there is no longer any need to rescan the stack space.

Implementation Principles

Before describing the evolution of the garbage collector, we need a preliminary understanding of the execution cycle of the latest garbage collector, which will be more helpful in understanding its global design. garbage collection in Go language can be divided into four different phases of purge termination, mark, mark termination and purge, which accomplish different jobs.

- the cleanup termination phase.

- pause the program , at which point all processors go to the Safe point (Safe point).

- if the current garbage collection loop is forcibly triggered, we also need to deal with the memory management units that have not yet been cleaned up.

- the marking phase.

- switch the state to

_GCmark, turn on the write barrier, user program assists (Mutator Assists) and queue the root object. - resuming execution of the program, the marker process and the user program used to assist will start concurrently marking objects in memory, the write barrier will mark both the overwritten pointer and the new pointer in gray, and all newly created objects will be marked directly in black.

- starts scanning the root object, including the stack of all Goroutines, global objects, and runtime data structures not in the heap, suspending the current processor during the scan of the Goroutine stack.

- processes the objects in the gray queue in turn, marking the objects black and marking the objects they point to gray.

- checking the remaining work using a distributed termination algorithm and entering the mark termination phase when the mark phase is found to be complete.

- switch the state to

- marking the termination phase.

- pause the program, switch the state to

_GCmarkterminationand close the auxiliary marker user program. - clearing the thread cache on the processor.

- pause the program, switch the state to

- the cleanup phase.

- switch state to

_GCoffto start the cleanup phase, initialize the cleanup state and turn off the write barrier. - resuming the user program, with all newly created objects marked white.

- clean up all memory management units concurrently in the background, triggered when a Goroutine requests a new memory management unit.

- switch state to

Although the runtime will only use _GCoff, _GCmark and _GCmarktermination states to represent all phases of garbage collection, the implementation is much more complicated, and this section will analyze the implementation principles in detail according to the different phases of garbage collection.

Global variables

There are some relatively important global variables in garbage collection. Before analyzing their process, we will introduce each of these important variables, which recur in the various phases of garbage collection, so it is very important to understand their functions, and we will start with some of the simpler ones.

- runtime.gcphase is the current phase of the garbage collector, which may be in _GCoff, _GCmark and _GCmarktermination, and Goroutine needs to ensure atomicity when reading or modifying this phase.

- runtime.gcBlackenEnabled is a boolean variable that is set to 1 when garbage collection is in the marking phase, where user programs that assist in garbage collection and background marking tasks can black out objects.

- runtime.gcController implements the pacing algorithm for garbage collection, which can determine when parallel garbage collection is triggered and the jobs to be processed.

- runtime.gcpercent is the memory growth percentage to trigger garbage collection, by default 100, i.e. the GC should be triggered when the heap memory grows by 100% compared to the last garbage collection, and the parallel garbage collector will finish garbage collection before reaching that target; * runtime.writeBarrier is the memory growth percentage to trigger garbage collection.

- runtime.writeBarrier is a structure containing the status of the write barrier, where the enabled field indicates whether the write barrier is on or off.

- runtime.worldsema is a global semaphore, and the thread that gets it has the right to suspend the current application.

In addition to the global variables mentioned above, we need to take a brief look at the runtime.work variable here.

|

|

This structure contains a number of fields related to garbage collection, such as the number of garbage collection cycles completed, the current cycle time and CPU utilization, the mode of garbage collection, etc. We will see more fields in this structure in later subsections.

Trigger timing

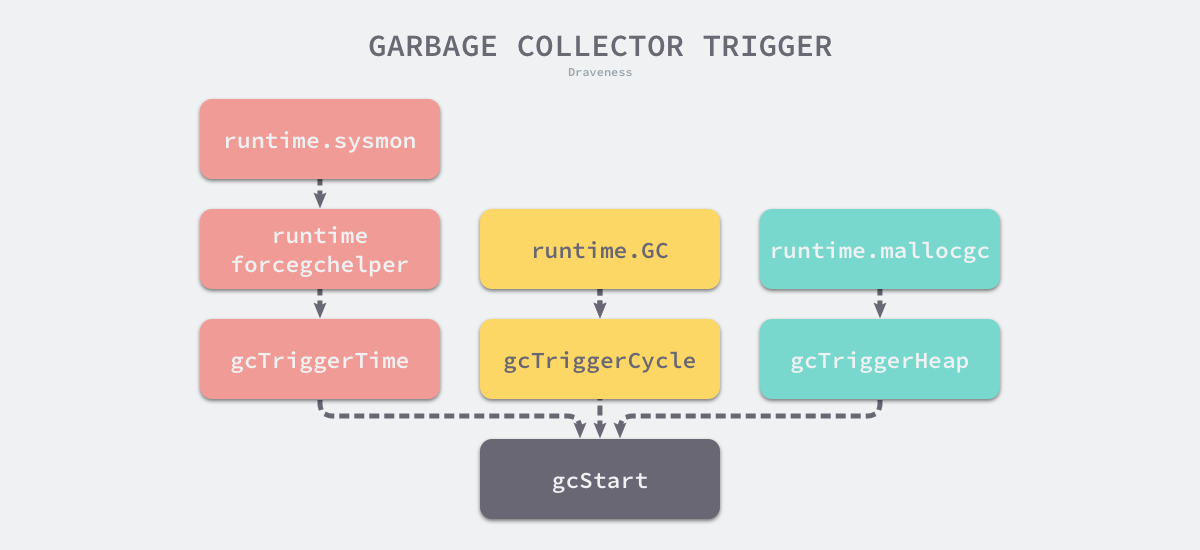

The runtime determines whether garbage collection should be triggered by the runtime.gcTrigger.test method as follows. When the basic conditions for triggering garbage collection are met - garbage collection is allowed, the program does not crash, and it is not in a garbage collection loop - the method is triggered in three different ways with different checks.

|

|

- gcTriggerHeap - the allocation of heap memory up to the trigger heap size calculated by the controller.

- gcTriggerTime - a new cycle is triggered if it is not triggered within a certain time, the trigger condition is controlled by the runtime.forcegcperiod variable and defaults to 2 minutes.

- gcTriggerCycle - Triggers a new cycle if garbage collection is not currently enabled

The method runtime.gcStart used to start garbage collection receives a predicate of type runtime.gcTrigger, and all occurrences of the runtime.gcTrigger structure are code that triggers garbage collection.

- runtime.sysmon and runtime.forcegchelper - background running of timing checks and garbage collection.

- runtime.gc - garbage collection triggered manually by the user program.

- runtime.mallocgc - triggers garbage collection based on heap size when requesting memory.

In addition to using the system monitor running in the background and the forced garbage collection helper to trigger garbage collection, the other two methods will trigger garbage collection from any processor, this does not require the central component coordination is introduced in the v1.6 version, we will then expand on the three different trigger timing.

background trigger

The runtime opens a Goroutine in the background at application startup to force garbage collection. The duty of the Goroutine is very simple - it calls runtime.gcStart to try to start a new round of garbage collection.

To reduce the use of computational resources, the Goroutine calls runtime.goparkunlock in a loop to actively hibernate and wait for other Goroutines to wake up. runtime.forcegchelper is hibernated most of the time, but it is woken up by the system monitor runtime. sysmon wakes up when the garbage collection conditions are met.

The system monitor actively builds a runtime.gcTrigger in each loop and checks if the trigger condition for garbage collection is met. If the condition is met, the system monitor adds the Goroutine held in the runtime.forcegc state to the global queue waiting to be dispatched by the scheduler.

manually triggered

The user program is actively notified of runtime execution during program runtime via the runtime.GC function, which when called blocks the caller until the current garbage collection loop is complete, and may also pause the entire program during garbage collection via STW.

|

|

- the runtime needs to wait for the completion of the mark termination, mark and clear termination phases of the previous loop via runtime.gcWaitOnMark before formally starting garbage collection.

- calling runtime.gcStart to trigger a new round of garbage collection and waiting for the mark termination phase of that round to finish properly via runtime.gcWaitOnMark.

- continuously call runtime.sweepone to clean up all pending memory management units and wait for all cleanup to complete, while waiting for runtime.Gosched to let the processor out.

- after completing the current round of garbage collection cleanup, publish a snapshot of the heap memory state for that phase via runtime.mProf_PostSweep, which allows us to obtain the memory state at that point.

Manually triggering garbage collection is not particularly common and generally only occurs in runtime test code, although we can call the method directly if we think it is necessary to trigger active garbage collection, but the authors do not consider this a recommended practice.

requesting memory

The last thing that may trigger garbage collection is runtime.mallocgc. As we described in the previous section on memory allocators, the runtime will divide the objects on the heap into three categories by size: micro-objects, small objects, and large objects, the creation of which may trigger a new garbage collection loop.

|

|

- when there is no free space in the memory management unit of the current thread, creating micro- and small objects requires calling runtime.mcache.nextFree to get a new management unit from the central cache or page heap, at which point garbage collection may be triggered; and

- the runtime.gcTrigger struct must be constructed to try to trigger garbage collection when a user program requests the allocation of a large object of 32KB or more.

Triggering garbage collection from heap memory requires comparing two fields in runtime.mstats - heap_live, which indicates the number of bytes of live objects in garbage collection, and gc_trigger, which indicates the size of the heap memory that triggered the token; when the number of bytes of live objects in memory is greater than the size of the heap that triggered the garbage collection, a new round of garbage collection starts when the number of bytes of objects alive in memory is larger than the heap size that triggers garbage collection. Here, we will describe the calculation of these two values separately.

- heap_live - to reduce lock contention, the runtime will only update when the central cache allocates or frees memory management units and when large objects are allocated on the heap.

- gc_trigger - call runtime.gcSetTriggerRatio during the mark termination phase to update the heap size for triggering the next garbage collection.

runtime.gcController calculates the trigger ratio at the end of each loop and sets gc_trigger via runtime.gcSetTriggerRatio, which determines when to trigger garbage collection and how many flagged tasks are processed by the user program and the background, using a feedback-controlled algorithm to determine the timing of triggering garbage collection based on heap growth and garbage The timing of triggering garbage collection is determined by a feedback-controlled algorithm based on heap growth and garbage collection CPU utilization.

You can find the garbage collection pacing algorithm proposed in v1.5 in runtime.gcControllerState.endCycle, and the soft and hard heap target separation algorithm introduced in v1.10 in runtime.gcControllerState.revise.

Garbage collection start

Garbage collection in the startup process will definitely call runtime.gcStart, although the implementation of this function is more complex, its main responsibility is to modify the global garbage collection state to _GCmark and do some preparatory work, we will introduce the implementation of this function in the following stages.

- two calls to runtime.gcTrigger.test to check whether the garbage collection conditions are met.

- suspending the program, starting the work Goroutine in the background for the tagging task, making sure that all memory management units are cleaned up, and other preparations before the tagging phase begins.

- entering the tagging phase, preparing the tagging job in the background, the tagging job for the root object and the microobject, resuming the user program, and entering the concurrent scanning and tagging phase.

While verifying the garbage collection condition, this method also calls runtime.sweepone in the loop to clean up the memory cells that have been marked, completing the last garbage collection loop.

After verifying the garbage collection conditions and completing the wrap-up, the method obtains the global worldsema semaphore via semacquire, calls runtime.gcBgMarkStartWorkers to start background marker tasks, calls runtime.stopTheWorldWithSema on the system stack to pause the program and call runtime.finishsweep_m to ensure that the last memory cell is reclaimed properly.

|

|

In addition, the above procedure modifies the state held by the global variable runtime.work, including the number of Goroutines needed for garbage collection and the number of completed loops.

After all the preparatory work is done, the method enters the final stage of execution. In this phase, we modify the global garbage collection state to _GCmark and perform the following steps in sequence.

- call runtime.gcBgMarkPrepare to initialize the state needed for background scanning.

- calling runtime.gcMarkRootPrepare to scan for root objects on the stack, global variables, etc. and add them to the queue.

- setting the global variable runtime.gcBlackenEnabled, where user programs and marker tasks can blacken objects.

- call runtime.startTheWorldWithSema to start the program and the background task will also start marking objects in the heap.

|

|

In analyzing the garbage collection startup process, we have omitted several key processes, including suspending and resuming the application and background task startup, and we will analyze the implementation principles of these processes in detail below.

Suspend and Resume Programs

runtime.stopTheWorldWithSema and runtime.startTheWorldWithSema are a pair of core functions used to pause and resume programs. They have exactly the opposite function, but the program pause will be more complicated than resume, let’s look at the implementation of the former principle: the

|

|

preemptall, which calls runtime.preemptone, which we introduced earlier. Since the maximum number of active processors in the program is gomaxprocs, runtime.stopTheWorldWithSema will subtract one from this variable each time it finds a stopped processor. will decrement this variable by one each time a stopped processor is found, until all processors have stopped running. The function stops the current processor, waits for a processor on a system call, and fetches and grabs an idle processor in turn, and the status of the processor is updated to _Pgcstop when the function returns, pending a re-wake from the garbage collector.

The program resumes using runtime.startTheWorldWithSema, which is also relatively simple to implement.

- call runtime.netpoll to fetch pending tasks from the network poller and add them to the global queue.

- calling runtime.procresize to expand or reduce the global processor capacity.

- call runtime.notewakeup or runtime.newm to wake up the processor or create a new thread for the processor in turn.

- creating additional processors to assist in completing tasks if there are too many Goroutines currently waiting to be processed.

|

|

The process of suspending and starting a program is relatively simple. Suspending a program will use runtime.preemptall to preempt all processors, and resuming a program will use runtime.notewakeup or runtime.newm to wake up the processors in the program.

Background Marking Mode

During garbage collection startup, the runtime will call runtime.gcBgMarkStartWorkers to create Goroutines for each processor globally to perform background marking tasks, each Goroutine will run runtime.gcBgMarkWorker, all Goroutines running runtime. All Goroutines running runtime.gcBgMarkWorker fall into hibernation after startup and wait for the scheduler to wake them up: gcBgMarkWorker

These Goroutines have a one-to-one relationship with the processor, and runtime.findrunnable executes the Goroutine’s auxiliary concurrent object tagging on the current processor when garbage collection is in the tagging phase and the current processor does not need to do any tasks.

The scheduler can also obtain and execute tasks for background marking in the scheduling loop runtime.schedule via the garbage collection controller’s runtime.gcControllerState.findRunnabledGCWorker.

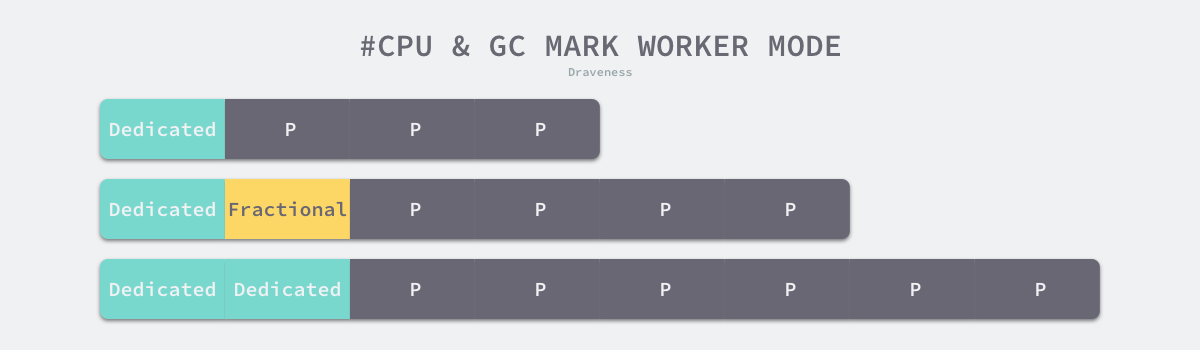

There are three different modes of Goroutine for concurrently scanning objects runtime.gcMarkWorkerMode, and the three different modes of Goroutine use completely different strategies for marking objects, and the garbage collection controller executes different types of work co-workers as needed.

- gcMarkWorkerDedicatedMode - the processor is dedicated to marking objects that will not be preempted by the scheduler.

- gcMarkWorkerFractionalMode - when the garbage collection’s background CPU usage does not reach the expected rate (default is 25%), a worker concurrent of this type is started to help the garbage collection reach the utilization target, as it takes only part of the resources of the same CPU and therefore can be scheduled.

- gcMarkWorkerIdleMode - when the processor has no Goroutine available for execution, it runs the garbage collection’s marker task until it is preempted.

runtime.gcControllerState.startCycle calculates the above dedicatedMarkWorkersNeeded and fractionalUtilizationGoal based on the number of global processors and the CPU utilization of garbage collection to determine the number of worker co-processes in different modes of different modes.

Since the CPU utilization of the background marker task is 25%, if the host is 4 or 8 cores, then garbage collection requires 1 or 2 Goroutines dedicated to the task in question; however, if the host is 3 or 6 cores, since it is not divisible by 4, then it requires 0 or 1 Goroutine dedicated to garbage collection, which will take some The CPU utilization is guaranteed by using the gcMarkWorkerFractionalMode co-processing.

The garbage collection controller will set the processor’s gcMarkWorkerMode in the runtime.gcControllerState.findRunnabledGCWorker method.

|

|

The implementation of the above approach is relatively clear, as the controller determines the number of Goroutines dedicated to the marker task through dedicatedMarkWorkersNeeded and decides whether to start a Goroutine in gcMarkWorkerFractionalMode based on the time spent performing the marker task and the total time; in addition to these In addition to these two controller-required worker co-processes, the scheduler also performs garbage collection in runtime.findrunnable using idle processors to speed up the process.

|

|

The three different modes of worker co-processing work in concert with each other to ensure that the CPU utilization of garbage collection reaches the desired threshold and that the marking task is completed before reaching the target heap size.

Concurrent scanning with tagging assistance

runtime.gcBgMarkWorker is a background function for the execution of the marker task. The loop of this function performs the scanning and marking of the object graph in memory, and we present the principle of the implementation of this function in three parts.

- getting the current processor and the Goroutine wrapped into a structure of type runtime.gcBgMarkWorkerNode and actively falling into hibernation waiting to be woken up.

- determine the strategy for scanning tasks based on the gcMarkWorkerMode mode on the processor.

- calling the runtime.gcMarkDone method to complete the marking phase after all marking tasks have been completed.

First let’s look at the preparation of the background marker task, where the runtime creates runtime.gcBgMarkWorkerNode, a structure that will pre-store the processor and the current Goroutine, and when we call runtime.gopark to trigger a hibernation, the runtime will safely establish the binding between the processor and the background marker task in the system stack relationship.

|

|

A dormant Goroutine via runtime.gopark does not enter the run queue, it only waits for a direct wakeup from the garbage collection controller or scheduler; upon wakeup, we choose a different marker execution strategy depending on the processor gcMarkWorkerMode, and the different execution strategies call runtime.gcDrain Scan work buffer runtime.gcWork.

|

|

Note that tasks in gcMarkWorkerDedicatedMode cannot be preempted. To reduce additional overhead, preemption is allowed on the first call to runtime.gcDrain, but once the processor is preempted, the current Goroutine will transfer all runnable Goroutines on the processor to the global queue to ensure garbage collection of occupied CPU resources.

When all background tasks are waiting and there is no work left, we consider the marking phase of the garbage collection round to be over and call runtime.gcMarkDone at that point.

runtime.gcDrain is the core method for scanning and marking objects in heap memory. In addition to this method, we will also cover the implementation principles of work pools, write barriers, and marking assistance.

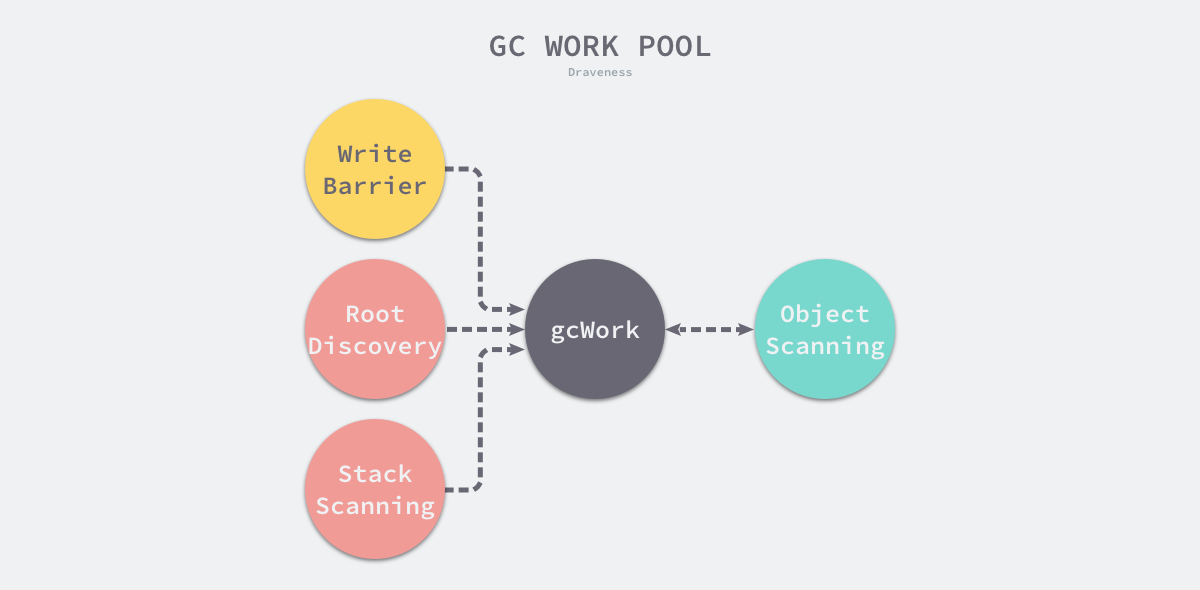

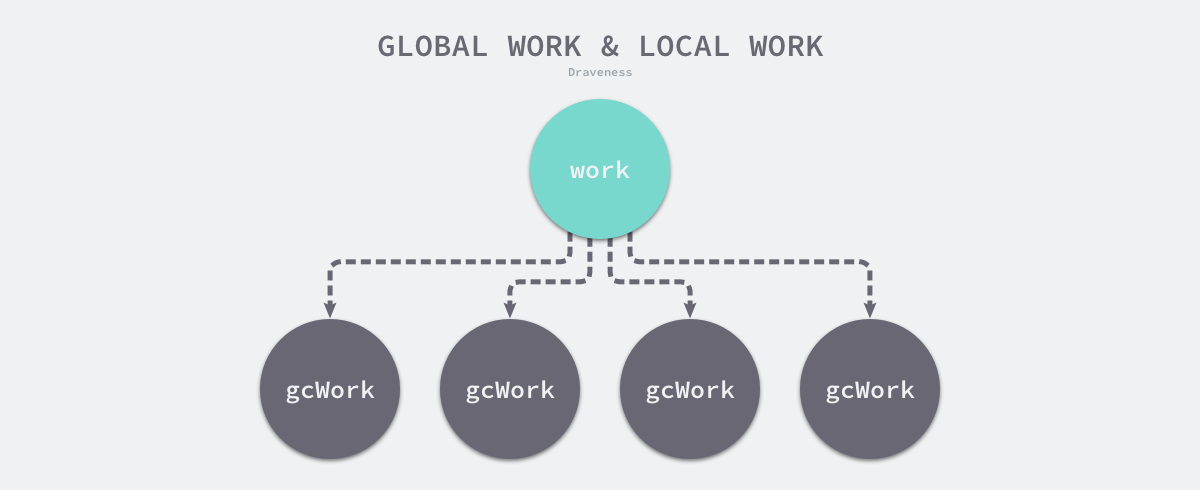

Work pools

When calling runtime.gcDrain, the runtime will pass in runtime.gcWork on the processor. This structure is an abstraction of the work pool in the garbage collector, which implements a producer and consumer model, and we can use this structure as a starting point for understanding tagged work as a whole:

Write barriers, root object scans, and stack scans all add additional gray objects to the work pool waiting to be processed, while the object scanning process marks gray objects as black and may also find new gray objects, and the whole scanning process ends when the work queue does not contain gray objects.

To reduce lock contention, the runtime keeps separate pending scans on each processor, however this runs into the same problem as the scheduler - uneven resources across processors, leaving some processors with nothing to do. The scheduler introduces work stealing to solve this problem, and the garbage collector uses a similar mechanism to balance the pending tasks across processors.

runtime.gcWork.balance puts a portion of the processor’s local work back into the global queue for other processors to handle, ensuring load balancing across processors.

runtime.gcWork provides an abstraction of production and consumption tasks for the garbage collector. This structure holds two important work buffers, wbuf1 and wbuf2, which are the primary and backup buffers, respectively.

When we add or delete objects to this structure, it will always operate the main buffer first, once the main buffer space is insufficient or there is no object, it will trigger the switch between the main and backup buffers; and when both buffers are insufficient or empty, it will insert or get the object from the global working buffer, the implementation of the related methods of this structure are very simple, so we won’t start the analysis here.

Scanning objects

The runtime will use runtime.gcDrain to scan the working buffer for gray objects, and it will choose a different strategy depending on the gcDrainFlags passed in.

|

|

- gcDrainUntilPreempt - returned when the preempt field of the Goroutine is set to true.

- gcDrainIdle - call runtime.pollWork, returned when the processor contains other pending Goroutines.

- gcDrainFractional - call runtime.pollFractionalWorkerExit, returned when CPU usage exceeds 20% of fractionalUtilizationGoal; * gcDrainFractional - call runtime.pollFractionalWorkerExit, returned when CPU usage exceeds 20% of fractionalUtilizationGoal.

- gcDrainFlushBgCredit - call runtime.gcFlushBgCredit to calculate the amount of tagging tasks completed in the background to reduce the workload of user programs that assist in garbage collection during concurrent tagging.

The runtime will use the check in the local variable to check if the tagging task should currently be exited and the processor given up. Once we are done with the preparation, we can start scanning the global variables for the root object, which is the first task that needs to be executed in the markup phase

|

|

Scanning the root object requires runtime.markroot, which scans the cache, data segments, BSS segments holding global and static variables, and the Goroutine’s stack memory; once the scan of the root object is complete, the current Goroutine starts fetching pending tasks from the local and global work cache pools:

|

|

Scanning for objects will use runtime.scanobject, which will start scanning from the location passed in and during the scan will call runtime.greyobject to color the active objects found.

When the current scan is interrupted due to a change in external conditions, this function records the number of memory bytes scanned to reduce the workload of auxiliary markers via runtime.gcFlushBgCredit.

The process of scanning and marking objects in memory involves many bit operations and pointer operations, and the related code implementation is rather complicated, so we will not go into the related contents here, and interested readers can use runtime.gcDrain as an entry point to study the specific process of three-color marking.

Write Barriers

Writebarrier is an indispensable technique for securing concurrent markup in Go. We need to use hybrid writebarrier to maintain weak tricolor invariance of the object graph, however, the implementation of writebarrier requires the collaboration of compiler and runtime. In the SSA intermediate code generation phase, the compiler adds write barriers to the Store, Move, and Zero operations using cmd/compile/internal/ssa.writebarrier to generate code as follows.

When Go enters the garbage collection phase, the enabled field in the global variable runtime.writeBarrier is set to on and all write operations call runtime.gcWriteBarrier.

|

|

In the above assembly function, the DI register is the destination address of the write operation, and the AX register stores the overwritten value. The function will overwrite the original value and notify the garbage collector via runtime.wbBufFlush to add the original value and the new value to the current processor’s work queue, because the implementation of this write barrier is relatively complex, so the write barrier still has a relatively large impact on the performance of the program The write barrier has a significant impact on the performance of the program, as it now requires dozens of instructions for work that previously required only one instruction to complete.

We mentioned above that the hybrid write barrier consisting of Dijkstra and Yuasa write barriers requires all newly created objects to be painted directly black when they are turned on, where the marking process is done by runtime.gcmarknewobject

|

|

runtime.mallocgc will call this function after garbage collection starts, get the memory cell corresponding to the object and the marker bit runtime.markBits and call runtime.markBits.setMarked to directly paint the new object black.

Mark Assist

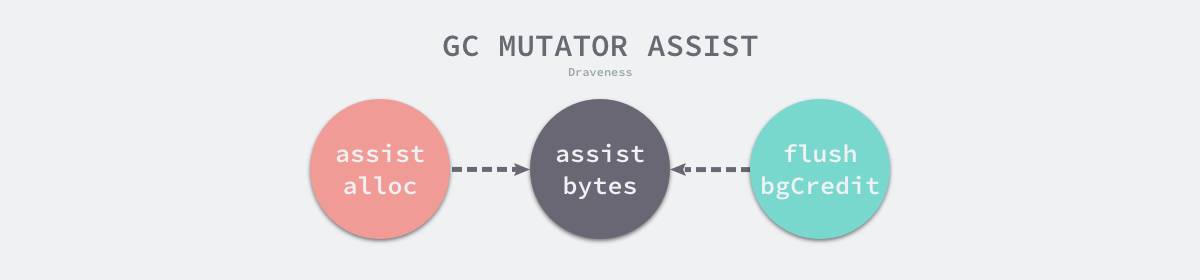

To ensure that the user program does not allocate memory faster than the background task can tag, the runtime also introduces tagging assistance, which follows a very simple and uncomplicated principle of allocating as much memory as is needed to complete the tagging task. Each Goroutine holds the gcAssistBytes field, which stores the number of bytes of objects that the Goroutine is currently assisting in tagging. During the concurrent tagging phase, when a Goroutine calls runtime.mallocgc to allocate a new object, this function checks to see if the Goroutine requesting memory is in a state of overrunning.

|

|

gcAssistAlloc, called when requesting memory, and runtime.gcFlushBgCredit, called when scanning memory, are responsible for borrowing and repaying debt, respectively, and through this debt management system, we can ensure that Goroutine runs properly without putting too much pressure on garbage collection and that the heap size target is reached when The marking phase is completed when the heap size target is reached.

The gcAssistBytes held by each Goroutine indicates the number of bytes of the current concurrent auxiliary token, and the bgScanCredit held by the global garbage collection controller indicates the number of bytes of the background concurrent auxiliary token, which can be reimbursed using the common credit bgScanCredit when the local Goroutine has allocated a larger number of objects. Let’s start by analyzing the runtime.gcAssistAlloc implementation.

|

|

This function will first calculate the number of marker tasks to be completed based on the Goroutine’s gcAssistBytes and the garbage collection controller’s configuration. If there are available points in the global credit bgScanCredit, then that number of points will be subtracted, because the concurrent execution is not locked, so the global credit may be updated to a negative value, however this is not a more important issue in the long run.

If the global credit is not sufficient to cover the local debt, the runtime will call runtime.gcAssistAlloc1 on the system stack to perform the marker task, which will directly call runtime.gcDrainN to complete the specified number of marker tasks and return.

If the current Goroutine is still underrun and the Goroutine is not preempted after completing the marker assist task, the runtime will execute runtime.gcParkAssist; if the global credit is still underrun, the runtime will put the current Goroutine into hibernation via runtime.gcParkAssist and adds it to the global queue of helper markers and waits for the background marker task to wake up.

The implementation of runtime.gcFlushBgCredit for debt repayment is relatively simple, as the current credit is added directly to the global credit bgScanCredit if there is no waiting Goroutine in the auxiliary queue.

|

|

If the auxiliary queue is not empty, the above function decides whether to wake up the dormant Goroutines based on the number of debts and completed work for each Goroutine; if there are still marker tasks left after waking up all Goroutines, these marker tasks are added to the global credit.

The core purpose of user program assisted tagging is to avoid user program memory allocation from affecting the expected time for the garbage collector to complete the tagging job. It ensures that the user program does not overburden the garbage collection by maintaining the account system, and once the user program allocates a large amount of memory, that user program balances the book by assisted tagging, a process that will reach relative balance at the end, ensuring that the tagging task completes when the desired heap size is reached.

Marker termination

When all the processor’s local tasks are completed and there are no remaining working Goroutines, the background concurrent tasks or the user program that assisted in marking them will call runtime.gcMarkDone to notify the garbage collector. When all reachable objects are marked, this function switches the state of garbage collection to _GCmarktermination; if there are still pending tasks in the local queue, the current method adds all tasks to the global queue and waits for other Goroutines to finish processing.

|

|

If the runtime contains no global tasks and there are no local tasks in the processor, then all gray objects in the current garbage collection loop are also marked black and we can start triggering the garbage collection phase migration:

The above function ends by closing the hybrid write barrier, waking up all user programs assisting in garbage collection, resuming scheduling of the user Goroutine and calling runtime.gcMarkTermination to enter the mark termination phase.

|

|

We omitted a lot of statistics in this function, including the size of the memory being used, the pause time of the current round of garbage collection, CPU utilization, etc. These data can help the controller decide the heap size for the next round of triggered garbage collection, and in addition to the statistics, this function also calls runtime.gcSweep to reset the relevant state of the cleanup phase and block if needed. The _GCmarktermination state does not last long in garbage collection, it quickly transitions to _GCoff and resumes the application, where the full process of garbage collection is essentially over and the user program is inert to reclaim memory when it requests it.

Memory cleanup

The garbage collection cleanup includes object reclaimers (Reclaimers) and memory cell reclaimers, which use different algorithms to clean up heap memory:

- The object reclaimers look for and release untagged objects in the memory management unit, but if all objects in runtime.mspan are untagged, the entire unit is reclaimed directly, which is triggered asynchronously by runtime.mcentral.cacheSpan or runtime.sweepone.

- the memory unit reclaimer looks for runtime.mspan in memory where all objects are untagged, the process is triggered by runtime.mheap.reclaim.

runtime.sweepone is a function we often see during garbage collection, which looks in heap memory for memory management units to be cleaned up.

|

|

When looking for a memory management unit, the state and sweepgen fields are used to determine if the current unit needs to be processed. If the sweepgen of the memory cell is equal to mheap.sweepgen - 2, then the current cell needs to be cleaned up, and if it is equal to mheap.sweepgen - 1, then the current management cell is being cleaned up.

All recycling is ultimately done by runtime.mspan.sweep, which recovers the garbage in the memory cell based on the concurrent marker phase and clears the marker to avoid affecting the next round of garbage collection.

Summary

Go language garbage collector implementation is very complex, the authors believe that this is the most complex module in the programming language, the complexity of the scheduler and garbage collector is not at all a level, we had to omit many implementation details in the analysis of the garbage collector, including the process of concurrently marking objects, the specific implementation of clearing garbage, these processes design a large number of underlying bit operations and pointer operations, this section contains links to all the relevant code, interested readers can explore on their own.

Garbage collection is a very old technology, its execution speed and utilization largely determines the speed of the program, Go language in order to achieve a high-performance concurrent garbage collector, the use of three-color abstraction, concurrent incremental recycling, mixed write barriers, step adjustment algorithm and user program assistance and other mechanisms to optimize the garbage collection pause time to less than milliseconds, from the early version to see today, we can appreciate the engineering design and evolution, the authors feel that the study of garbage collection is the principle of implementation is still very worthwhile.