The memory of an application is generally divided into heap and stack areas, and the program can actively request memory space from the heap area during runtime.

This memory is allocated by the memory allocator and reclaimed by the garbage collector. We have analyzed the process of requesting and releasing heap memory in detail in the last two sections, and this section will introduce the management of Go language stack memory.

Design Principles

The memory in the stack area is generally allocated and released automatically by the compiler, which stores the function entry parameters and local variables. These parameters are created with the creation of the function and die out when the function returns, and generally do not exist in the program for a long time. This linear memory allocation strategy has a very high efficiency, but the engineer also often has no control over the allocation of the stack memory, and this part of the work is basically done by the compiler.

Registers

Registers are a scarce resource in the central processing unit (CPU). They have very limited storage capacity, but provide the fastest read and write speeds, and taking full advantage of register speed can build high-performance applications. Registers are very limited on physical machines, yet operations in the stack area will use more than two registers, which is a good indication of the importance of the presence of applications within the stack.

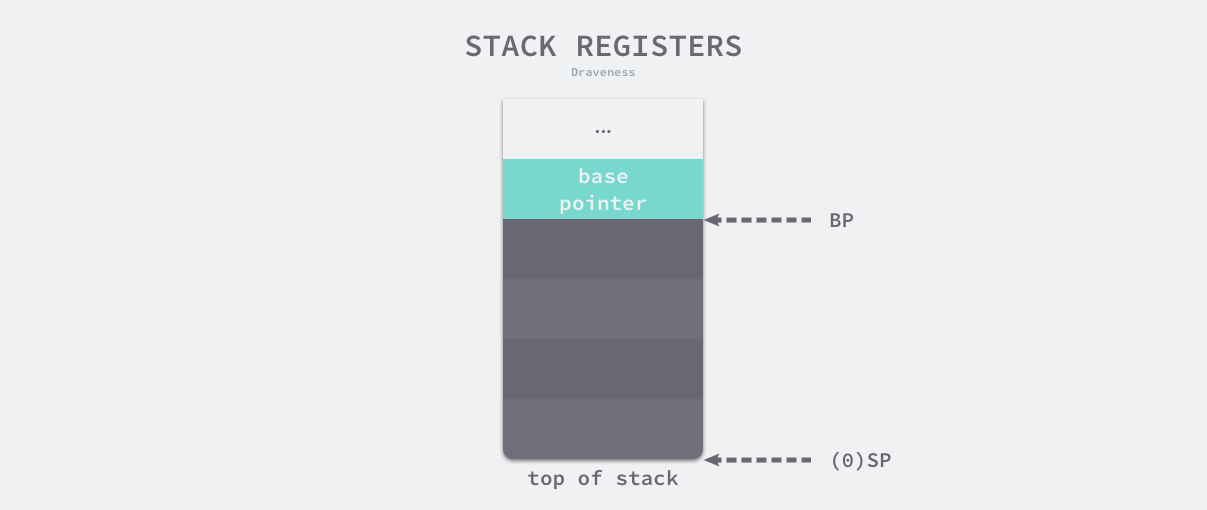

The stack register is one of the CPU registers, and its main role is to keep track of the function call stack. The Go language assembly code contains two stack registers, BP and SP, which store the base address pointer of the stack and the address of the top of the stack, respectively. The stack memory is very closely related to the function calls, and we have introduced the stack area in the section on function calls, and the memory between BP and SP is the current function call stack.

For historical reasons, stack memory is expanded from high addresses to low addresses, and applications only need to modify the SP register value when requesting or releasing stack memory.

Thread stack

If we execute the pthread_create system call in the Linux OS, the process starts a new thread and if the user does not specify the size of the thread stack through the soft resource limit RLIMIT_STACK, the OS chooses a different default stack size depending on the architecture.

| architecture | default stack size |

|---|---|

| i386 | 2 MB |

| IA-64 | 32 MB |

| PowerPC | 4 MB |

| … | … |

| x86_64 | 2 MB |

Most architectures have a default stack size of about 2-4 MB, and very few architectures use a 32 MB stack on which user programs can store function parameters and local variables. However, this fixed stack size is not the right value in some scenarios. If the program needs to run hundreds or even thousands of threads at the same time, most of these threads will only use a small amount of stack space, and when the function call stack is very deep, the fixed stack size will not meet the needs of the user program.

Threads and processes are both contexts for code execution, but if an application contains hundreds or thousands of execution contexts and each context is a thread, it can take up a lot of memory space and incur other additional overhead.

Escape Analysis

In programming languages such as C and C++, which require manual memory management, the allocation of objects or structures to the stack or heap is at the discretion of the engineer, and this poses a challenge for the engineer’s work. If the engineer can accurately allocate a reasonable amount of space to each variable, then the overall program must run most efficiently and use memory efficiently, but manually allocating memory leads to two problems as follows.

- objects allocated to the heap that do not need to be allocated to the heap are allocated to the heap - wasting memory space.

- objects that need to be allocated to the heap are allocated to the stack - hanging pointers, compromising memory safety.

Compared to hanging pointers, wasting memory space is a minor problem. In C, it is a common error for a variable on the stack to be returned to the caller as the return value of a function. In the code shown below, the variable i on the stack is incorrectly returned.

When the dangling_pointer function returns, its local variable is reclaimed by the compiler and the caller gets a dangerously hanging pointer, a problem that is more difficult to detect and locate in large projects when we are not sure if the value the current pointer is pointing to is legal.

In compiler optimization, escape analysis is the method used to determine the dynamic scope of a pointer. The Go language compiler uses escape analysis to determine which variables should be allocated on the stack and which variables should be allocated on the heap, which includes memory implicitly allocated using methods such as new, make, and literals, and the Go language escape analysis follows two invariants.

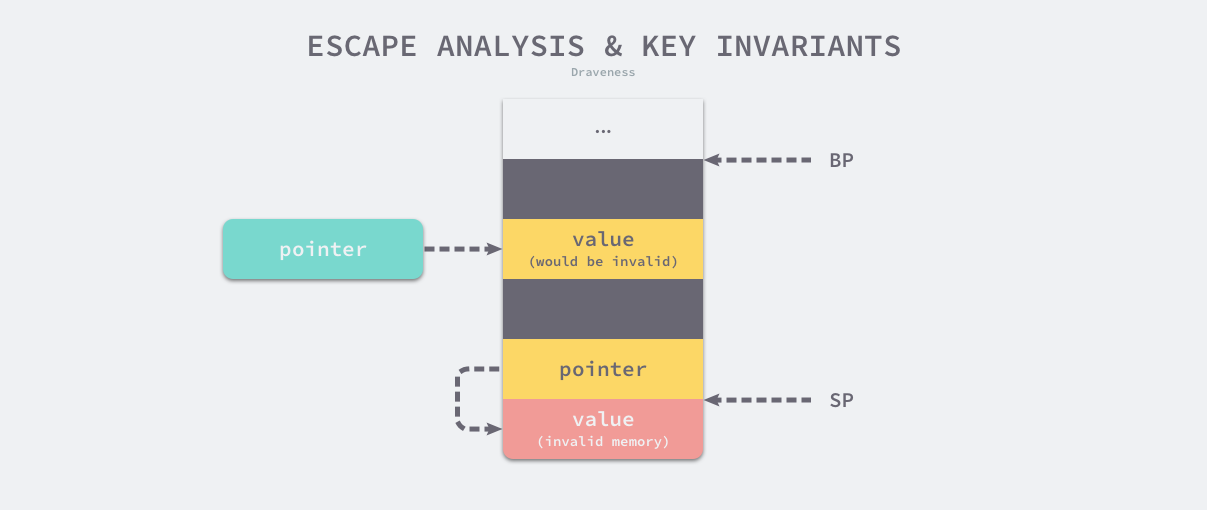

- a pointer to a stack object cannot exist in the heap.

- a pointer to a stack object cannot survive the recycling of the stack object.

When we violate the first invariant, the green pointer on the heap points to the yellow memory on the stack. Once the function returns, the function stack will be recycled and the value pointed to by the green pointer is no longer legal; if we violate the second invariant, the memory pointed to by the yellow pointer is no longer legal because the memory under the register SP has been freed due to the function return.

Escape analysis is a kind of static analysis, after the compiler parses the Go language source file, it can get the abstract syntax tree (AST) of the whole program, the compiler can analyze the static data flow according to the abstract syntax tree, we will realize the whole process of static analysis by the following steps.

- construct a directed graph with weights, where the vertex cmd/compile/internal/gc.EscLocation indicates the assigned variable, the edge cmd/compile/internal/gc.EscEdge indicates the assignment relationship between variables, and the weights indicate the number of addressing and fetching addresses.

- traverse the object assignment graph and look for variable assignment relationships that violate two invariants, if a variable on the heap points to a variable on the stack, then the variable needs to be assigned on the heap.

- recording the flow of data from the call parameters of a function to the heap and to the return value to enhance the escape analysis of function parameters.

Deciding whether a variable is on the stack or the heap, although important, is a relatively well-defined problem, and we can make decisions uniformly through the compiler. To ensure absolute memory safety, the compiler may incorrectly assign some variables to the heap, but because the heap is also scanned by the garbage collector, it does not cause memory leaks and safety issues such as hanging pointers, freeing up the engineer’s productivity.

Stack memory space

The Go language uses the user-state thread Goroutine as the execution context, which has much less additional overhead and default stack size than threads, however the stack memory space and stack structure of Goroutine has also undergone some changes in earlier versions.

- v1.0 ~ v1.1 - minimum stack memory space of 4KB.

- v1.2 - raised the minimum stack memory to 8KB7.

- v1.3 - replaced the segmented stack of the previous version with a contiguous stack8.

- v1.4 - reduced the minimum stack memory to 2KB9.

The initial stack memory of Goroutine was modified several times in the first few versions, and the increase from 4KB to 8KB was a temporary solution to mitigate the performance impact of stack splitting in segmented stacks; after the introduction of continuous stacks in v1.3, the initial stack size of Goroutine was reduced to 2KB, further reducing the amount of memory occupied by Goroutine. memory space.

segmented stack

The segmented stack is a pre v1.3 implementation of the Go language, where all Goroutines are initialized by calling `runtime.stackalloc:go1.2 to allocate a fixed size block of memory, denoted by runtime.StackMin:go1.2, which is 8KB in v1.2.

|

|

If the memory requested by this method is a fixed 8KB in size or if other conditions are met, the runtime will find a free block of memory in the global stack cache chain and return it as the stack space for the new Goroutine; in the rest of the cases, the stack memory space will request a suitable block of memory from the heap.

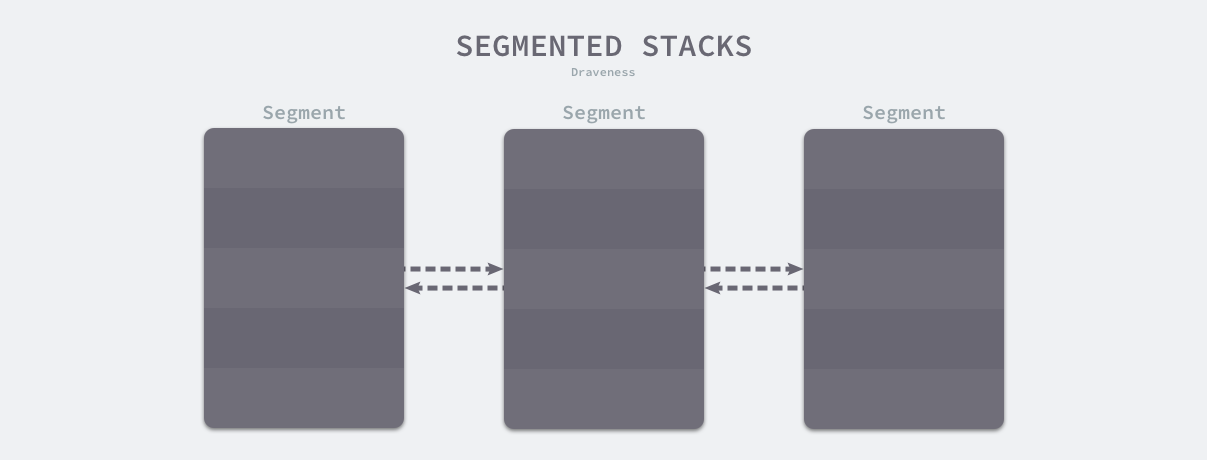

As the Goroutine calls more and more function levels or local variables, the runtime calls runtime.morestack:go1.2 and runtime.newstack:go1.2 to create a new stack space that is not contiguous, but multiple stack spaces of the current Goroutine are linked in a chain These stack spaces are not contiguous, but the multiple stack spaces of the current Goroutine are linked together in a chain, and the runtime finds the contiguous stack fragments by means of pointers to

Once the stack space requested by a Goroutine is no longer needed, the runtime calls runtime.lessstack:go1.2 and runtime.oldstack:go1.2 to free up the memory space that is no longer in use.

While the segmented stack mechanism can allocate memory for the current Goroutine on demand and reduce the memory footprint in a timely manner, it also has two major problems.

- if the current Goroutine’s stack is almost full, then any function call will trigger stack expansion, which in turn will trigger stack shrinkage when the function returns, and if the function is called in a loop, the allocation and release of the stack will cause significant additional overhead, which is known as the hot split problem.

- once the memory used by the Goroutine crosses the expansion and contraction threshold of the segmented stack, the runtime triggers the expansion and contraction of the stack, resulting in additional workload.

Continuous Stack

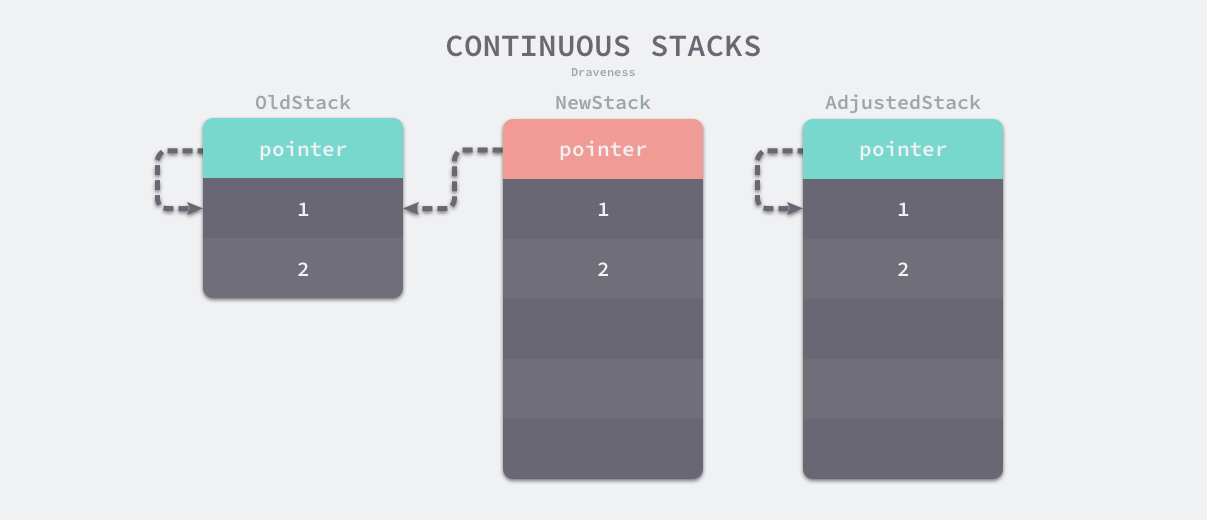

The core principle is that whenever a program runs out of stack space, a larger stack is initialized and all the values in the original stack are migrated to the new stack, so that new local variables or function calls have sufficient memory space. When using the continuous stack mechanism, the expansion due to lack of stack space goes through the following steps.

- allocating a larger amount of stack memory space in the memory space.

- copying all the contents of the old stack to the new stack.

- redirecting the pointers to the variables corresponding to the old stack to the new stack.

- destroying and reclaiming the memory space of the old stack.

The most important step in the expansion process is the third step of adjusting the pointers, which ensures the correctness of the pointers to the stack, because the memory of all the variables in the stack changes, so the pointers that were pointing to the variables in the stack need to be adjusted as well. We mentioned earlier that Go language programs that undergo escape analysis follow the following invariant – pointers to stack objects cannot exist in the heap, so pointers to variables on the stack can only be on the stack, and we only need to adjust all variables on the stack to ensure memory safety.

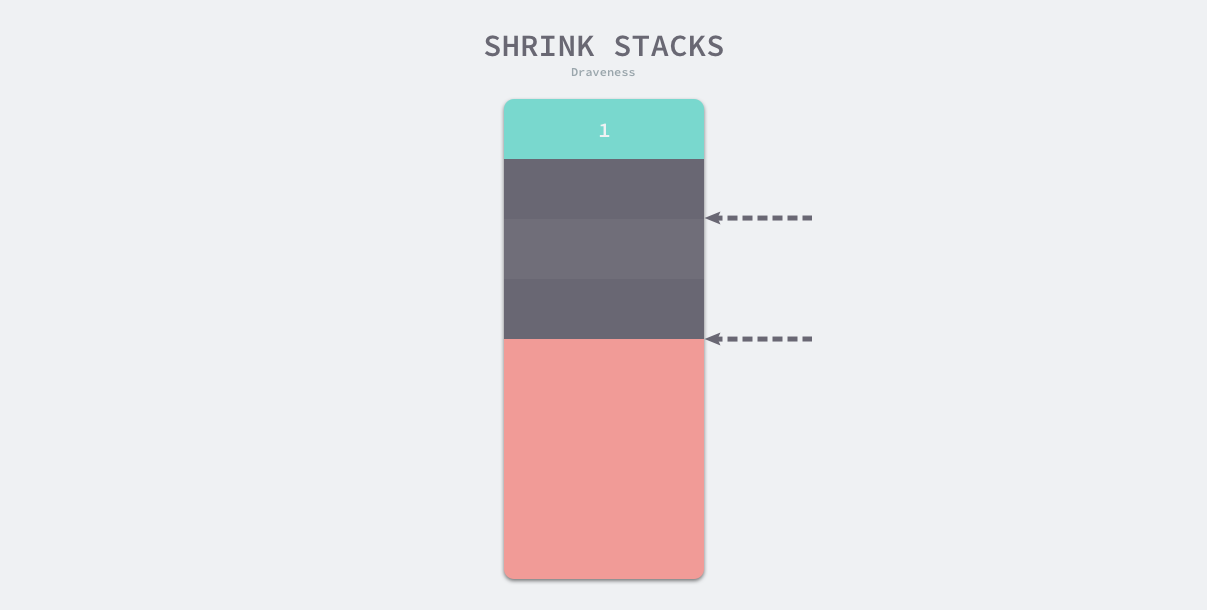

Because of the need to copy variables and adjust pointers, the contiguous stack adds additional overhead to the stack expansion, but the performance problems caused by hot splits can be avoided by a reasonable stack shrinkage mechanism. If the Goroutine uses a quarter of the stack memory during GC, it will be reduced by half, so that it will only be expanded once when the stack memory is almost full and will not be expanded and shrunk as often as function calls.

Stack operations

The execution stack in Go is represented by runtime.stack, which contains only two fields, representing the top of the stack and the bottom of the stack, each representing a memory space in the range [lo, hi).

The structure of the stack is very simple, but to understand how the Goroutine stack is implemented, we need to start with two phases, during compilation and at runtime.

- the compiler will insert the runtime.morestack or runtime.morestack_noctxt function before calling the function at the compilation stage via cmd/internal/obj/x86.stacksplit.

- the runtime will call runtime.stackalloc in runtime.malg when creating a new Goroutine to request new stack memory and check for sufficient stack space in runtime.morestack inserted by the compiler.

Note that the Go compiler does not insert runtime.morestack for all functions, it only inserts instructions when necessary to reduce additional runtime overhead. The compiler instruction nosplit can skip the stack overflow check, which reduces some of the overhead, but there is also a risk of overflow with a fixed size stack. This section analyzes the initialization of the stack, the allocation of the stack when the Goroutine is created, the expansion of the stack by the compiler and the runtime, and the shrinking process when the stack space is underutilized.

Stack initialization

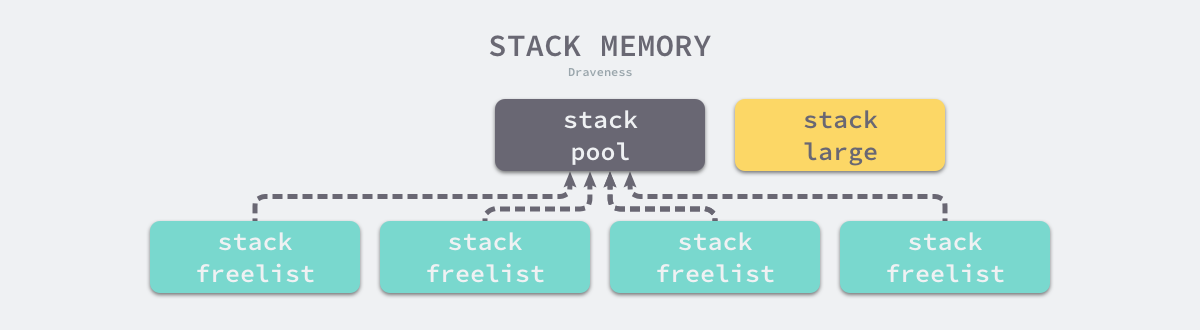

The stack space contains two important global variables in the runtime, runtime.stackpool and runtime.stackLarge, which represent the global stack cache, which can allocate less than 32KB of memory, and the large stack cache, which is used to allocate stack space larger than 32KB.

|

|

Both of these global variables for allocating space are related to the memory management unit runtime.mspan, and we can assume that Go’s stack memory is allocated on the heap, and that runtime initialization calls runtime.stackinit to initialize these global variables.

From the experience of scheduler and memory allocation, if only global variables are used to allocate memory at runtime, it will inevitably cause lock competition between threads and thus affect the execution efficiency of the program. stack memory is more closely related to threads, so we add a stack cache to each thread cache runtime.mcache to reduce the impact of lock competition.

The runtime allocates less than 32KB of stack memory using the global runtime.stackpool and the free chain table in the thread cache, and more than 32KB using the global runtime.stackLarge and heap memory to improve the performance of locally allocated stack memory.

Stack allocation

The runtime.stackalloc is called in the Goroutine’s initialization function runtime.malg to allocate a stack memory space of sufficient size. Depending on the size of the thread cache and the requested stack, the function allocates the stack space in three different ways.

- if the stack space is small, allocate memory using the global stack cache or a fixed-size free chain table on the thread cache.

- if the stack space is large, obtain memory space from the global large stack cache runtime.stackLarge.

- if the stack space is large and runtime.stackLarge space is insufficient, request a slice of memory on the heap of sufficient size.

We will describe here the runtime allocation of stack space in two parts according to the size of the stack. On Linux, _FixedStack = 2048, _NumStackOrders = 4, _StackCacheSize = 32768, i.e. if the requested stack space is less than 32KB, we initialize the memory in the global stack cache pool or in the thread’s stack cache at.

|

|

runtime.stackpoolalloc will fetch new memory in the global stack cache pool runtime.stackpool, and if the stack cache pool does not contain any remaining memory, the runtime will request a piece of memory from the heap; if the thread cache contains enough space, we can fetch memory from the thread’s local cache, and once we find that there is not enough space will call runtime.stackcacherefill to get new memory from the heap.

If the Goroutine requests too much memory space, the runtime will see if there is any space left in runtime.stackLarge, and if there is no space left, it will also request new memory from the heap.

|

|

Note that because OpenBSD 6.4+ has special requirements for stack memory, we need to call runtime.osStackAlloc to do some extra processing whenever we request stack memory from the heap, however, other operating systems do not have this limitation.

Stack expansion

The compiler inserts a runtime.morestack runtime check for function calls in cmd/internal/obj/x86.stacksplit, which will check if the current Goroutine stack memory is sufficient before almost all function calls, and if the current stack needs to be expanded, we will save some information about the stack and call runtime.newstack to create a new stack.

|

|

runtime.newstack will first do some preparation and check if the current Goroutine has issued a preemption request, and if it has issued a preemption request.

- call runtime.gogo directly to trigger scheduling of the scheduler when the current thread is available for seizure.

- call runtime.shrinkstack if the current Goroutine has been marked by runtime.scanstack as needing to shrink the stack during garbage collection.

- if the current Goroutine is hung by the runtime.suspendG function, call runtime.preemptPark to passively relinquish control of the current processor and change the Goroutine’s state to _Gpreempted.

- call runtime.gopreempt_m to actively relinquish control of the current processor.

If the current Goroutine does not need to be preempted, meaning we need new stack space to support function calls and the initialization of local variables, the runtime will first check if the target size stack will overflow.

|

|

If the size of the target stack does not exceed the program’s limits, we switch the Goroutine to the _Gcopystack state and call runtime.copystack to start the stack copy. Before copying the stack memory, the runtime will allocate new stack space via runtime.stackalloc.

The initialization of the new stack and the copying of the data is a relatively simple process, but this is not the most complicated part of the whole process. We also need to point the memory in the source stack to the new stack, during which we need to adjust the following pointers separately.

- call runtime.adjustsudogs or runtime.syncadjustsudogs to adjust the pointer to the runtime.sudog structure.

- call runtime.memmove to copy the entire slice of memory from the source stack to the new stack.

- calling runtime.adjustctxt, runtime.adjustdefers and runtime.adjustpanics to adjust pointers to the remaining Goroutine-related data structures.

|

|

Adjusting pointers to stack memory calls runtime.adjustpointer, which adjusts the pointers using the memory address difference between the new stack and the old stack calculated by runtime.adjustinfo. Once all the pointers have been adjusted, we can update several variables in Goroutine and free the original stack memory space with runtime.stackfree.

Stack shrinkstack

runtime.shrinkstack The function called when the stack is shrunk, the principle of this function is very simple, most of which is the code to check whether the preconditions for shrinkage are met, the core logic is only the following lines.

If a stack reduction is to be triggered, the new stack will be half the size of the original stack, although the reduction process will stop if the new stack size is below the program’s minimum limit of 2KB.

The runtime will only shrink the stack when it is under 1/4 of its memory usage, and the shrink will also call the runtime.copystack used in the expansion to open up new stack space.

Summary

Stack memory is an important memory space in an application that can support local variables and function calls. Variables in the stack space are created and destroyed along with the stack, and this part of the memory space does not require much intervention and management by engineers. Modern programming languages reduce our workload through escape analysis, and understanding the allocation of the stack space is a great help in understanding the runtime of the Go language.