Most of us are already familiar with the states of PVs (PersistentVolumes) and PVCs (PersistentVolumeClaims). Questions still come up in day-to-day use, though. Why has a PV ended up in the Failed state? How can a newly created PVC bind to a previous PV? Can a previous PV be restored? Here we walk through the state changes of PVs and PVCs once more.

The following sections illustrate how the states of PVs and PVCs change in different situations.

Create PV

Under normal circumstances, a newly created PV is in the Available state.

Running `kubectl get pv` right after creating the PV object shows its STATUS as Available. This means it can be bound by a PVC.
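The sketch below builds a hostPath PV as a plain Python dict and prints it as JSON. `kubectl apply -f` accepts JSON as well as YAML. The name `pv-demo`, the `1Gi` size, the `/tmp/pv-demo` path and the `manual` storage class are illustrative assumptions, not values from the original setup.

```python
import json


def make_hostpath_pv(name: str, size: str, path: str) -> dict:
    """Build a minimal hostPath PersistentVolume manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteOnce"],
            # Retain keeps the PV after its PVC is deleted (it becomes Released).
            "persistentVolumeReclaimPolicy": "Retain",
            # Hypothetical class name; a PVC must request the same one to bind.
            "storageClassName": "manual",
            # hostPath is only suitable for single-node test clusters.
            "hostPath": {"path": path},
        },
    }


if __name__ == "__main__":
    # Usage: python pv.py > pv.json && kubectl apply -f pv.json
    print(json.dumps(make_hostpath_pv("pv-demo", "1Gi", "/tmp/pv-demo"), indent=2))
```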

New PVC

A newly created PVC is in the Pending state. If a suitable PV exists, this changes to Bound almost immediately, and the corresponding PV also changes to Bound: the PVC and the PV are now bound to each other. To be sure of observing the Pending state, we can create the PVC first and the PV afterwards.

When it is first created, the new PVC resource object is in the Pending state.
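Here is a minimal PVC manifest in the same dict-to-JSON style. The name `pvc-demo`, the `default` namespace and the `manual` storage class are assumptions. The storage class only has to match whatever the PV declares.

```python
import json


def make_pvc(name: str, namespace: str, request: str) -> dict:
    """Build a minimal PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Must equal the PV's storageClassName for the two to bind.
            "storageClassName": "manual",
            "resources": {"requests": {"storage": request}},
        },
    }


if __name__ == "__main__":
    # Apply this before any matching PV exists to observe the Pending state.
    print(json.dumps(make_pvc("pvc-demo", "default", "1Gi"), indent=2))
```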

As soon as the PVC finds a suitable PV, the two are bound: the PVC becomes Bound, and the PV changes from Available to Bound.
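What counts as a "suitable" PV? The sketch below is a simplified model of static matching, assuming only three checks: storage class, access modes and capacity. The real PV controller also considers label selectors, volumeMode and node affinity, and it prefers the smallest PV that fits. The helper names are hypothetical.

```python
# Simplified static PV/PVC matching; not the real controller logic.
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse quantities like '500Mi' or '1Gi' (binary suffixes only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain byte count


def pv_fits_claim(pv_spec: dict, pvc_spec: dict) -> bool:
    """True if an unreserved PV could satisfy the claim."""
    if pv_spec.get("claimRef") is not None:
        return False  # this sketch ignores PVs already reserved for a claim
    if pv_spec.get("storageClassName", "") != pvc_spec.get("storageClassName", ""):
        return False
    # Every access mode the claim asks for must be offered by the PV.
    if not set(pvc_spec["accessModes"]) <= set(pv_spec["accessModes"]):
        return False
    have = to_bytes(pv_spec["capacity"]["storage"])
    want = to_bytes(pvc_spec["resources"]["requests"]["storage"])
    return have >= want


if __name__ == "__main__":
    pv = {"capacity": {"storage": "1Gi"}, "accessModes": ["ReadWriteOnce"],
          "storageClassName": "manual"}
    pvc = {"accessModes": ["ReadWriteOnce"], "storageClassName": "manual",
           "resources": {"requests": {"storage": "500Mi"}}}
    print(pv_fits_claim(pv, pvc))
```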

Delete PV

Now that the PVC and PV are bound, what happens if we accidentally delete the PV?


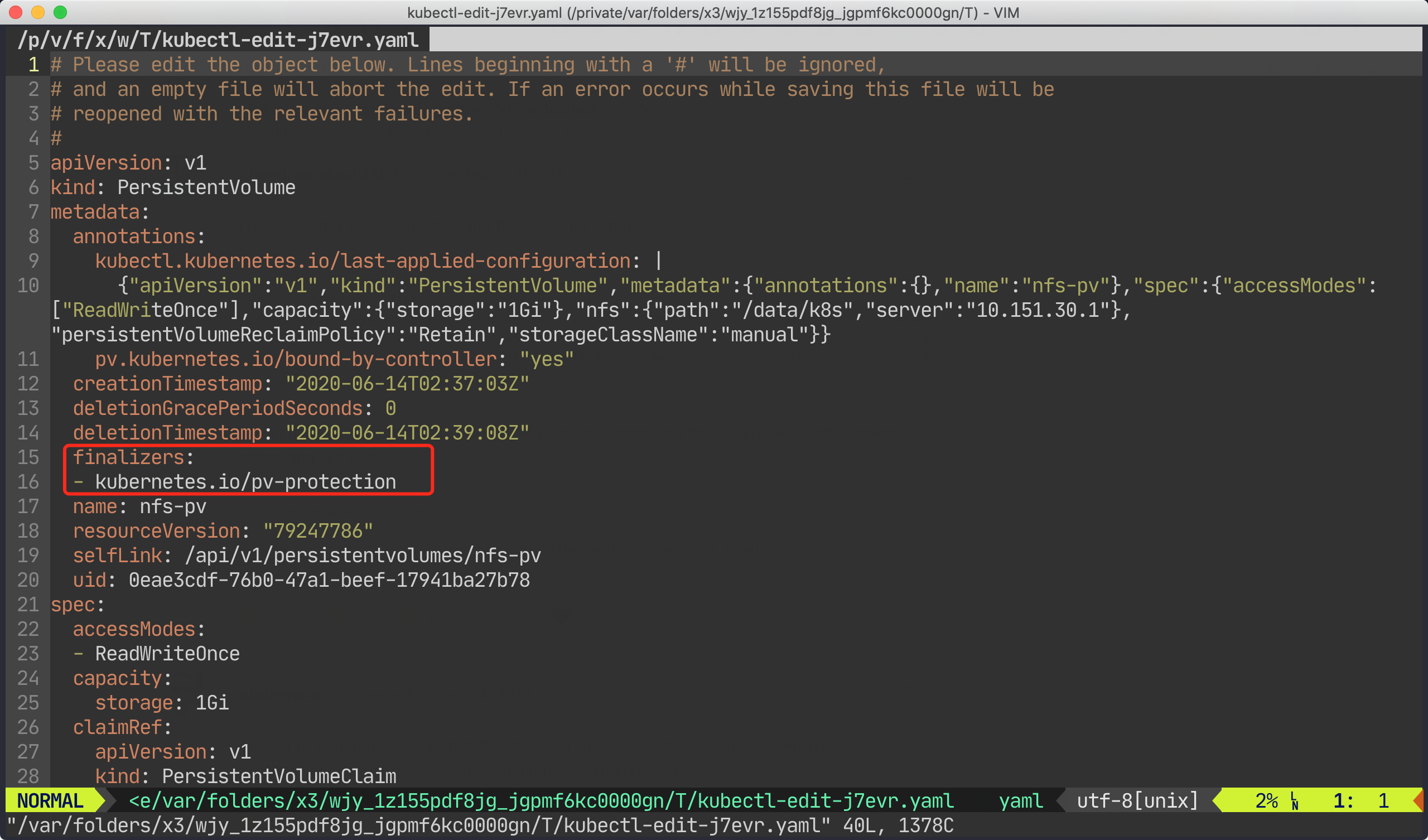

In fact, the deletion hangs and the PV is not actually removed. Instead, the PV enters the Terminating state, while the corresponding PVC stays Bound. This happens because Kubernetes adds the `kubernetes.io/pv-protection` finalizer to PVs, which blocks deletion while the PV is still bound to a PVC. So the PV cannot be deleted first, and its Terminating state has no effect on the PVC. How should we deal with this situation?
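The Terminating state comes from finalizers. Below is a toy model of how the API server treats a delete request on an object that still carries a finalizer. `ToyObject` is a hypothetical class, not a Kubernetes client type. In a real cluster, the pv-protection controller removes `kubernetes.io/pv-protection` by itself once the PV is no longer bound. Removing it by hand is the forcing shortcut.

```python
from dataclasses import dataclass, field


@dataclass
class ToyObject:
    """Hypothetical stand-in for an API object; not a real client type."""
    name: str
    finalizers: list = field(default_factory=list)
    deletion_requested: bool = False  # models metadata.deletionTimestamp
    deleted: bool = False

    def request_delete(self) -> None:
        # A delete only marks the object; it is removed once no finalizers remain.
        self.deletion_requested = True
        self._maybe_remove()

    def remove_finalizer(self, finalizer: str) -> None:
        if finalizer in self.finalizers:
            self.finalizers.remove(finalizer)
        self._maybe_remove()

    def _maybe_remove(self) -> None:
        if self.deletion_requested and not self.finalizers:
            self.deleted = True

    @property
    def terminating(self) -> bool:
        # Deletion was requested, but a finalizer is still holding the object.
        return self.deletion_requested and not self.deleted


if __name__ == "__main__":
    pv = ToyObject("pv-demo", ["kubernetes.io/pv-protection"])
    pv.request_delete()
    print(pv.terminating)  # True: the finalizer blocks removal
    pv.remove_finalizer("kubernetes.io/pv-protection")
    print(pv.deleted)  # True
```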

We can force the deletion by editing the PV (for example with `kubectl edit pv <pv-name>`) and removing its `finalizers` field.
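The same change can also be made non-interactively with `kubectl patch`. The sketch below only assembles the command as an argument list, using a JSON merge patch in which `null` deletes a field. The PV name `pv-demo` is hypothetical. Running the command requires kubectl and cluster access. Clearing finalizers bypasses the protection, so do it only when you really intend to delete the PV.

```python
import json
import shlex


def clear_finalizers_cmd(pv_name: str) -> list:
    """Build a kubectl command that removes all finalizers from a PV.

    In a JSON merge patch, setting a field to null deletes that field.
    """
    patch = json.dumps({"metadata": {"finalizers": None}})
    return ["kubectl", "patch", "pv", pv_name, "--type=merge", "-p", patch]


if __name__ == "__main__":
    cmd = clear_finalizers_cmd("pv-demo")
    print(shlex.join(cmd))
    # To actually run it (needs kubectl and cluster access):
    #   import subprocess; subprocess.run(cmd, check=True)
```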

Once the edit is saved, the PV is actually deleted and the PVC changes to the Lost state.

Re-create PV

A PVC in the Lost state is nothing to worry about. The PV it was bound to no longer exists, but the PVC still holds the binding information for that PV (the PV name in its `spec.volumeName` field).

The fix is simple: recreate the previous PV with the same name, since the PVC refers to it by name.

Once the PV has been created, both the PVC and the PV return to the Bound state.
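The Lost-and-back-to-Bound cycle can be summarised with a simplified rule. `claim_phase` is a hypothetical helper that mirrors the behaviour described here and is not part of any Kubernetes client. It assumes the claim is judged only by whether the PV named in its `spec.volumeName` exists. When that PV reappears, the real controller also re-sets the PV's `claimRef` so both sides show Bound.

```python
def claim_phase(volume_name: str, existing_pv_names: set) -> str:
    """Simplified phase of a PVC given its spec.volumeName and the PVs present."""
    if not volume_name:
        return "Pending"  # never bound to any PV yet
    if volume_name not in existing_pv_names:
        return "Lost"  # still names a PV, but that PV is gone
    return "Bound"  # the named PV exists again; the controller rebinds it


if __name__ == "__main__":
    print(claim_phase("pv-demo", set()))        # after the PV is force-deleted
    print(claim_phase("pv-demo", {"pv-demo"}))  # after recreating the same name
```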


Delete PVC

That covers deleting the PV first. What happens if we delete the PVC first instead?

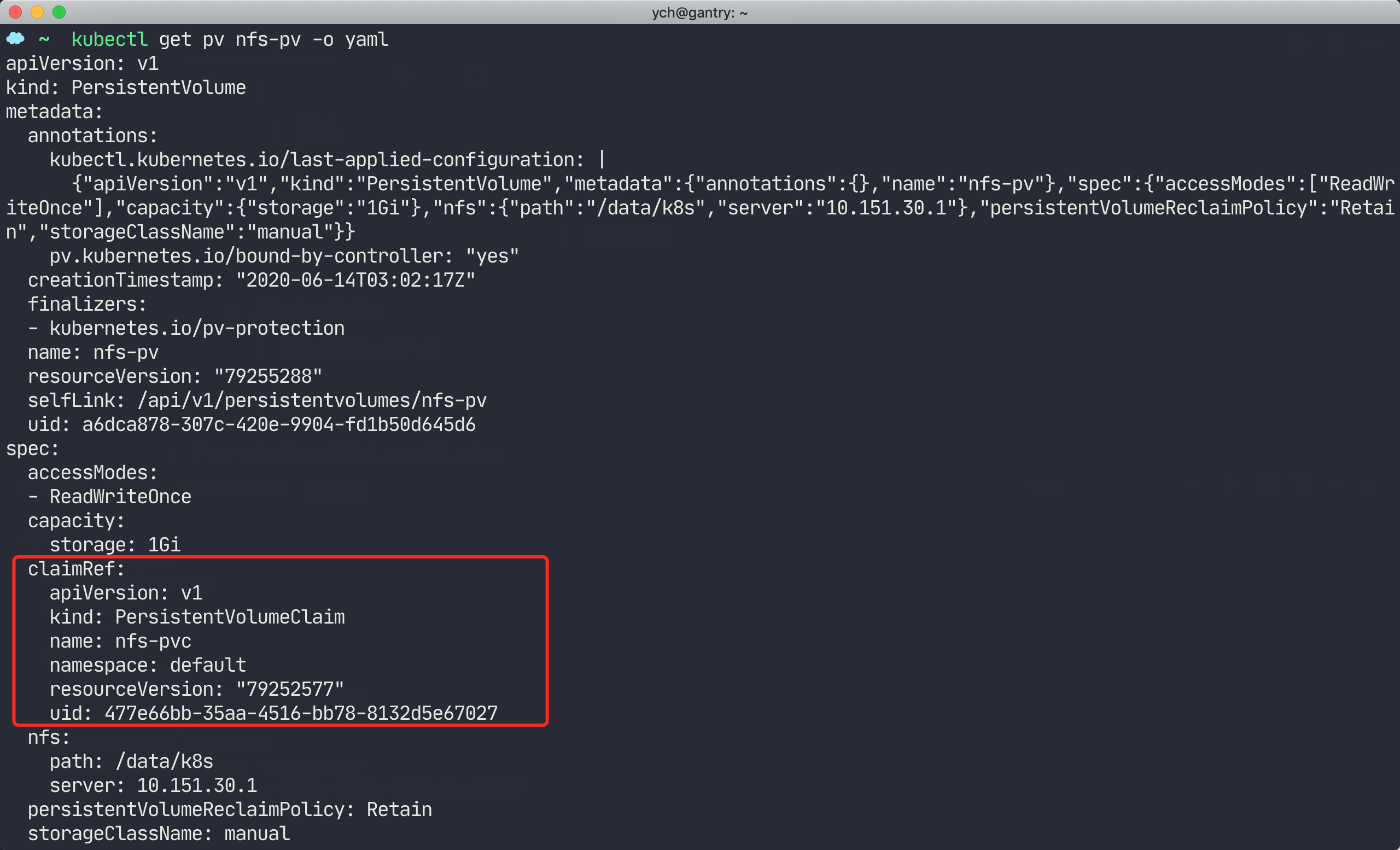

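After the PVC is deleted, the PV becomes Released (this assumes the PV's reclaim policy is `Retain`; with `Delete`, the PV itself would be removed). Looking closely at the CLAIM column, however, we can see that the PV still retains the binding information of the deleted PVC. The full PV object, including this binding in its `spec.claimRef` field, can be exported with a command such as `kubectl get pv <pv-name> -o yaml`.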
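To make the retained binding concrete, here is a hypothetical excerpt of such an export, written as a Python dict. The namespace, name and uid values are invented for illustration. The helper `claim_column` reproduces how `kubectl get pv` renders the CLAIM column as `<namespace>/<name>`.

```python
# Hypothetical excerpt of a Released PV; the field values are made up.
released_pv = {
    "metadata": {"name": "pv-demo"},
    "spec": {
        "persistentVolumeReclaimPolicy": "Retain",
        "claimRef": {
            "kind": "PersistentVolumeClaim",
            "namespace": "default",
            "name": "pvc-demo",
            "uid": "old-uid",  # UID of the PVC that was deleted
        },
    },
    "status": {"phase": "Released"},
}


def claim_column(pv: dict) -> str:
    """Mimic the CLAIM column of `kubectl get pv`: '<namespace>/<name>'."""
    ref = pv["spec"].get("claimRef")
    return f"{ref['namespace']}/{ref['name']}" if ref else ""


print(released_pv["status"]["phase"], claim_column(released_pv))
```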

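At this point, you might expect that, with the PVC deleted and the PV Released, simply recreating the previous PVC would bind it again. This is not the case. The PV's `claimRef` records the UID of the deleted PVC, and a recreated PVC gets a new UID, so the PV no longer matches it.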
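The UID check can be sketched as follows. `claim_ref_allows` is a hypothetical helper that compares a PV's `claimRef` with a claim's name, namespace and uid. An empty uid in `claimRef` represents a PV pre-bound to a claim by name only.

```python
def claim_ref_allows(pv: dict, pvc: dict) -> bool:
    """Can this PV bind this PVC, judging by spec.claimRef alone?"""
    ref = pv["spec"].get("claimRef")
    if ref is None:
        return True  # PV is not reserved for any claim
    meta = pvc["metadata"]
    if ref.get("name") != meta["name"] or ref.get("namespace") != meta["namespace"]:
        return False
    # An empty uid means the PV was pre-bound by name only.
    return not ref.get("uid") or ref["uid"] == meta["uid"]


if __name__ == "__main__":
    pv = {"spec": {"claimRef": {"namespace": "default", "name": "pvc-demo",
                                "uid": "old-uid"}}}
    recreated = {"metadata": {"namespace": "default", "name": "pvc-demo",
                              "uid": "new-uid"}}
    print(claim_ref_allows(pv, recreated))  # same name, new UID
```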

This is where manual intervention is needed. In a real production environment, the administrator first backs up or migrates the data, then edits the PV to remove its `claimRef` reference to the PVC. When the Kubernetes PV controller observes this change, it sets the PV to Available, and an Available PV can of course be bound by other PVCs.
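The controller's decision can be sketched as a simplified phase function. `volume_phase` is a hypothetical helper. It assumes the controller only needs the PV's `claimRef` and the set of UIDs of claims that still exist. The real controller reads claims from its cache and handles more cases, such as reclaim policies other than `Retain`.

```python
def volume_phase(pv_spec: dict, live_claim_uids: set) -> str:
    """Simplified phase the PV controller assigns to a Retain-policy PV."""
    ref = pv_spec.get("claimRef")
    if ref is None:
        return "Available"  # no claim reference: free to bind
    if not ref.get("uid"):
        return "Available"  # pre-bound by name, waiting for that claim
    if ref["uid"] in live_claim_uids:
        return "Bound"
    return "Released"  # referenced claim is gone; Retain keeps the PV


if __name__ == "__main__":
    spec = {"claimRef": {"namespace": "default", "name": "pvc-demo", "uid": "old-uid"}}
    print(volume_phase(spec, set()))       # PVC deleted
    spec.pop("claimRef")                  # administrator removes claimRef
    print(volume_phase(spec, set()))
```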

You can edit the PV directly (for example with `kubectl edit pv <pv-name>`) and delete its `claimRef` field.
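As a non-interactive alternative, the sketch below assembles a `kubectl patch` command that uses a JSON Patch `remove` operation on `/spec/claimRef`. The PV name `pv-demo` is hypothetical. The command only builds the argument list. A JSON Patch `remove` fails if the field is already absent, which is a useful guard against patching the wrong PV.

```python
import json
import shlex


def clear_claim_ref_cmd(pv_name: str) -> list:
    """Build a kubectl command that removes spec.claimRef from a PV."""
    patch = json.dumps([{"op": "remove", "path": "/spec/claimRef"}])
    return ["kubectl", "patch", "pv", pv_name, "--type=json", "-p", patch]


if __name__ == "__main__":
    cmd = clear_claim_ref_cmd("pv-demo")
    print(shlex.join(cmd))
    # To actually run it (needs kubectl and cluster access):
    #   import subprocess; subprocess.run(cmd, check=True)
```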

Once the field is removed, the PV returns to the normal Available state, and the previous PVC can be recreated and will bind to it as usual.

Newer versions of Kubernetes have also added various enhancements for PVs, such as volume cloning and snapshots, which are very useful. We will cover these features in a later article.