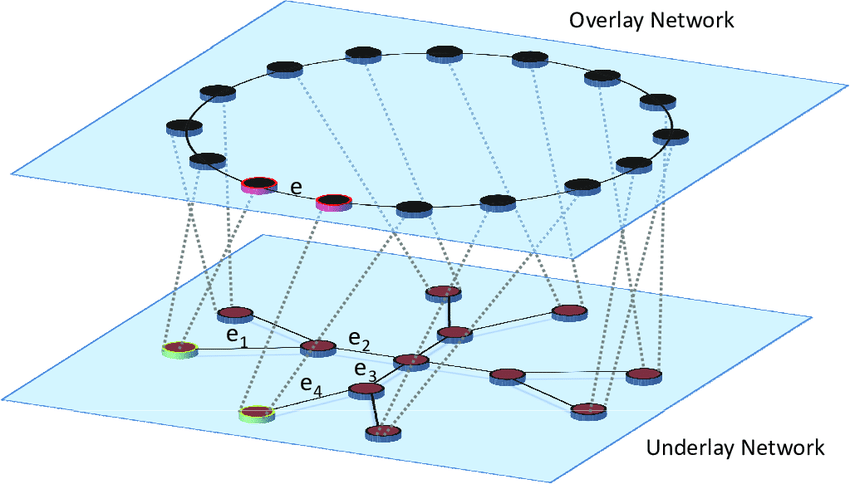

Engineers who know a little about computer networks or Kubernetes networking have probably heard of the Overlay network. It is not a new technology: it is a computer network built on top of another network, a form of network virtualization whose adoption has been driven by the evolution of cloud computing and virtualization in recent years.

Because an Overlay network is a virtual network built on top of another computer network, it cannot stand alone. The network it depends on is called the Underlay network, and the two concepts usually appear as a pair.

The Underlay network is the infrastructure layer that actually carries user IP traffic. Its relationship to the Overlay network resembles that between a physical machine and a virtual machine: Overlay networks and virtual machines are both virtualized layers, implemented in software, that rely on a lower-level entity.

Before analyzing what Overlay networks are for, we need a general understanding of how they are commonly implemented. In practice, we usually use Virtual Extensible LAN (VxLAN) to build an Overlay network. In the following figure, two physical machines can reach each other over a Layer 3 IP network.

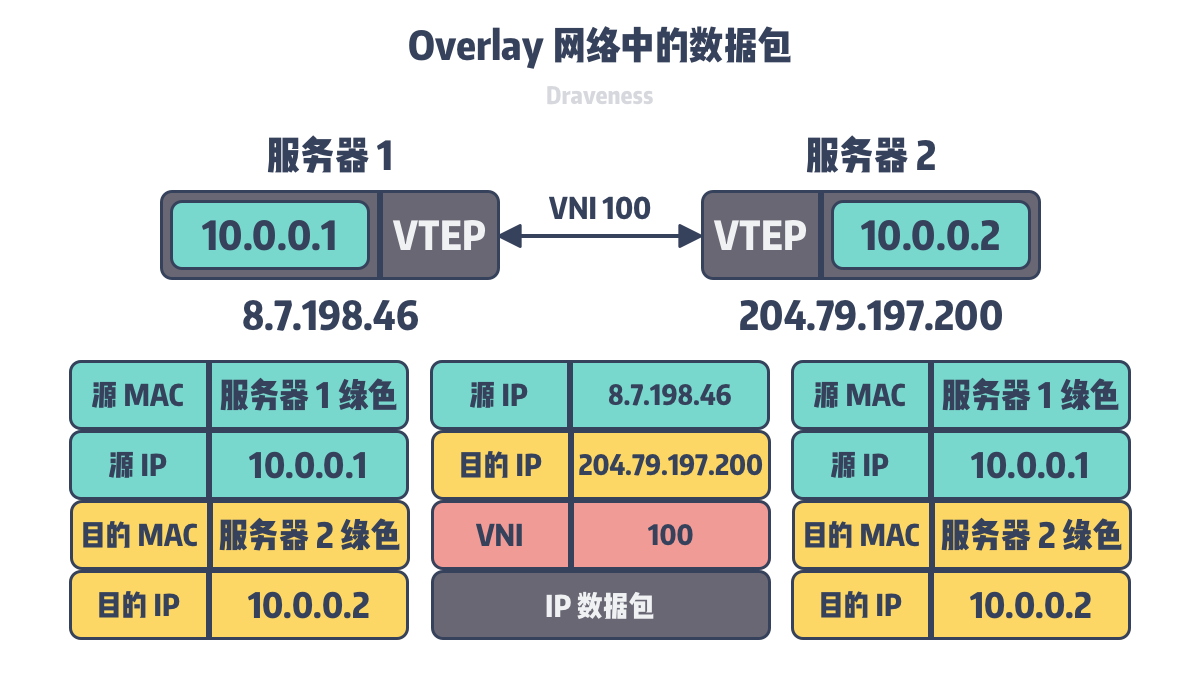

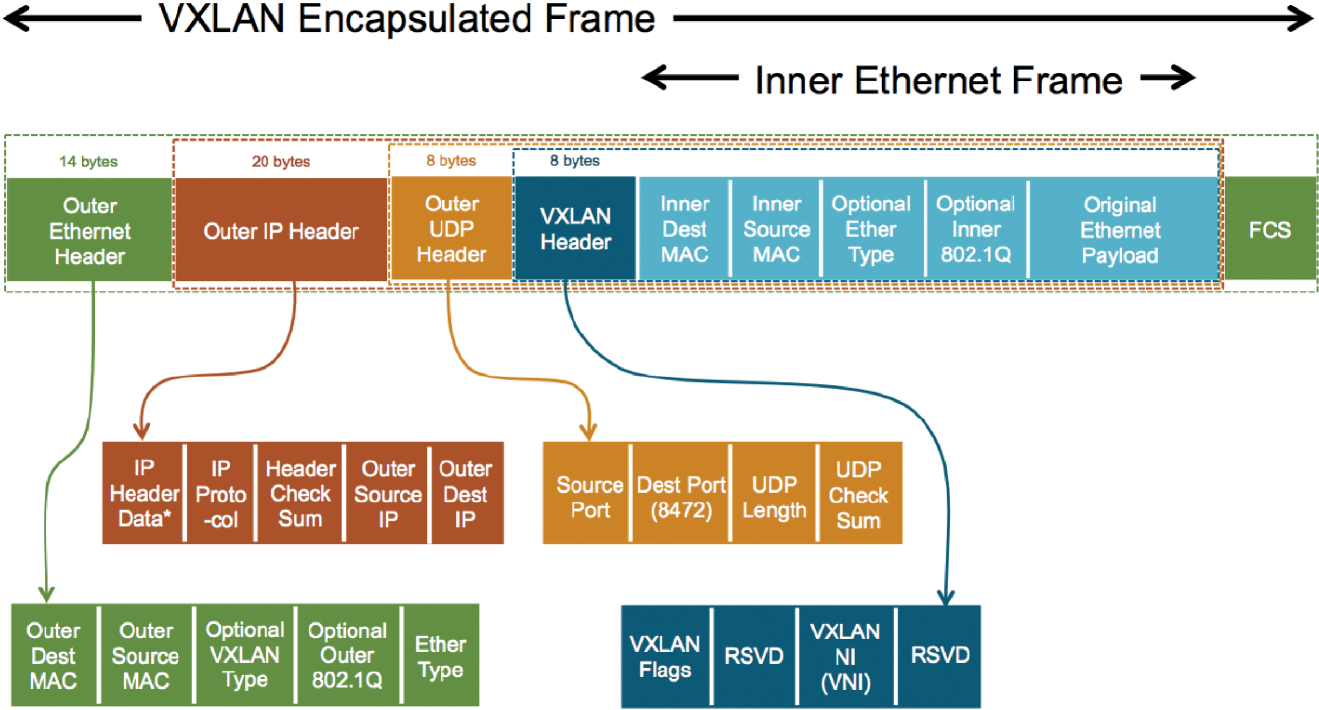

VxLAN uses VxLAN Tunnel End Point (VTEP) devices to encapsulate and decapsulate the packets that a server sends and receives.

For example, the VTEP in Server 1 needs to know that to reach the 10.0.0.2 virtual machine in the green network, it must go through Server 2, whose IP address is 204.79.197.200. These mappings can be configured manually by the network administrator, learned automatically, or set by an upper-level manager. When the green 10.0.0.1 virtual machine wants to send data to the green 10.0.0.2, the packet goes through the following steps:

- The green 10.0.0.1 sends an IP packet to the VTEP.

- The VTEP of Server 1 receives the packet sent by 10.0.0.1 and then:

  - obtains the MAC address of the destination virtual machine from the received packet;

  - looks up in its local forwarding table the IP address of the server where this MAC address is located, i.e. 204.79.197.200;

  - constructs a new UDP packet that carries the VxLAN Network Identifier (VNI) of the virtual network where the green VM is located, with the original packet as the payload;

  - sends the new UDP packet to the network.

- The VTEP on Server 2 receives the UDP packet and then:

  - removes the protocol headers from the UDP packet;

  - checks the VNI in the packet;

  - forwards the original packet to the target green virtual machine 10.0.0.2.

- The green 10.0.0.2 receives the packet sent by the green 10.0.0.1.
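The encapsulation and decapsulation steps above can be sketched in a few lines of Python. This is a minimal illustration, not a real VTEP: the 8-byte header layout and UDP port 4789 follow RFC 7348, while the VNI value `100`, the MAC address, and the function names `encapsulate` and `decapsulate` are assumptions made up for this example. The outer Ethernet, IP, and UDP headers that the operating system or NIC would add are left out.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value

# Hypothetical values for the green network in the figure.
GREEN_VNI = 100
FORWARDING_TABLE = {
    # (VNI, destination VM MAC) -> underlay IP of the server hosting that VM
    (GREEN_VNI, "02:00:00:00:00:02"): "204.79.197.200",
}


def encapsulate(vni, dst_mac, inner_packet):
    """Look up the remote server and prepend the 8-byte VXLAN header.

    Returns (remote IP, UDP destination port, UDP payload).
    """
    remote_ip = FORWARDING_TABLE[(vni, dst_mac)]
    # Word 0: flags (8 bits) + reserved (24 bits).
    # Word 1: VNI (24 bits) + reserved (8 bits).
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return remote_ip, VXLAN_UDP_PORT, header + inner_packet


def decapsulate(udp_payload):
    """Strip the VXLAN header and return (VNI, original packet)."""
    word0, word1 = struct.unpack("!II", udp_payload[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VXLAN header does not carry a valid VNI")
    return word1 >> 8, udp_payload[8:]


remote_ip, port, payload = encapsulate(GREEN_VNI, "02:00:00:00:00:02", b"original packet")
vni, inner = decapsulate(payload)
```

The receiving side uses the recovered `vni` to decide which virtual network the inner packet belongs to before handing it to the local virtual machine.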

During transmission, neither communicating party is aware of the transformations made by the underlying network. They believe they can reach each other over a Layer 2 network, but in fact the traffic is relayed over the Layer 3 IP network through the tunnel established between the VTEPs. Overlay networks can use the underlying network to form a Layer 2 network that spans multiple data centers, but encapsulation and decapsulation also add overhead, so why do our clusters need Overlay networks? Overlay networks solve three problems:

- Migrating VMs and instances within a cluster, across clusters, or between data centers is common in cloud computing.

- A single cluster can contain a very large number of VMs, and the resulting volume of MAC addresses and ARP requests puts tremendous pressure on network devices.

- VLAN, the traditional network isolation technology, can only create 4096 virtual networks, while public clouds and large-scale virtualized clusters need more virtual networks to meet the demand for network isolation.

Virtual Machine Migration

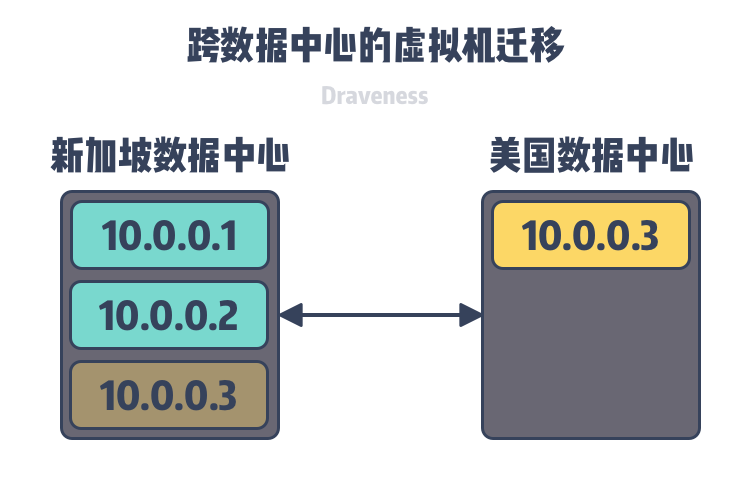

Kubernetes is now the de facto standard in container orchestration, and while many traditional industries still deploy services on physical machines, more and more computing tasks will run on virtual machines in the future. VM migration is the process of moving a VM from one physical host to another. Large-scale VM migration is fairly common in clusters because of routine maintenance and updates. Large clusters of thousands of physical machines make it easier to schedule resources, and VM migration lets us improve resource utilization, tolerate VM failures, and improve node portability.

When the host running a virtual machine goes down for maintenance or other reasons, the instance must be migrated to another host. To keep the business uninterrupted, the IP address must remain unchanged during migration. Because the Overlay implements a Layer 2 network on top of the network layer, multiple physical machines can form a virtual LAN as long as they can reach each other at the network layer. The virtual machines or containers are still in the same Layer 2 network after migration, so their IP addresses do not need to change.

As shown in the figure above, although the migrated VMs and the other VMs are located in different data centers, the two data centers are connected over IP, so the migrated VMs can still form a Layer 2 network with the VMs in the original cluster through the Overlay network. The hosts inside the cluster are not aware of, and do not care about, the underlying network architecture; they only know that different VMs can reach each other.
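To make the migration argument concrete, here is a small sketch under simplifying assumptions: each VTEP forwarding table is modeled as a plain dictionary from VM MAC address to the underlay IP of the hosting server. The `VM` class, the `migrate` function, the MAC address, and the new host address `198.51.100.7` are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class VM:
    ip: str       # overlay IP address, visible to other VMs
    mac: str      # overlay MAC address
    host_ip: str  # underlay IP of the physical server currently hosting the VM


def migrate(vm, new_host_ip, forwarding_table):
    """Move the VM to another server; only the underlay mapping changes."""
    vm.host_ip = new_host_ip
    forwarding_table[vm.mac] = new_host_ip


vm = VM(ip="10.0.0.2", mac="02:00:00:00:00:02", host_ip="204.79.197.200")
table = {vm.mac: vm.host_ip}

# Migrate to a server in another data center (hypothetical address).
migrate(vm, "198.51.100.7", table)
```

After the call, `vm.ip` and `vm.mac` are untouched, so peers in the same virtual network keep using the same addresses, while the tunnel endpoint behind them has moved.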

Virtual Machine Scale

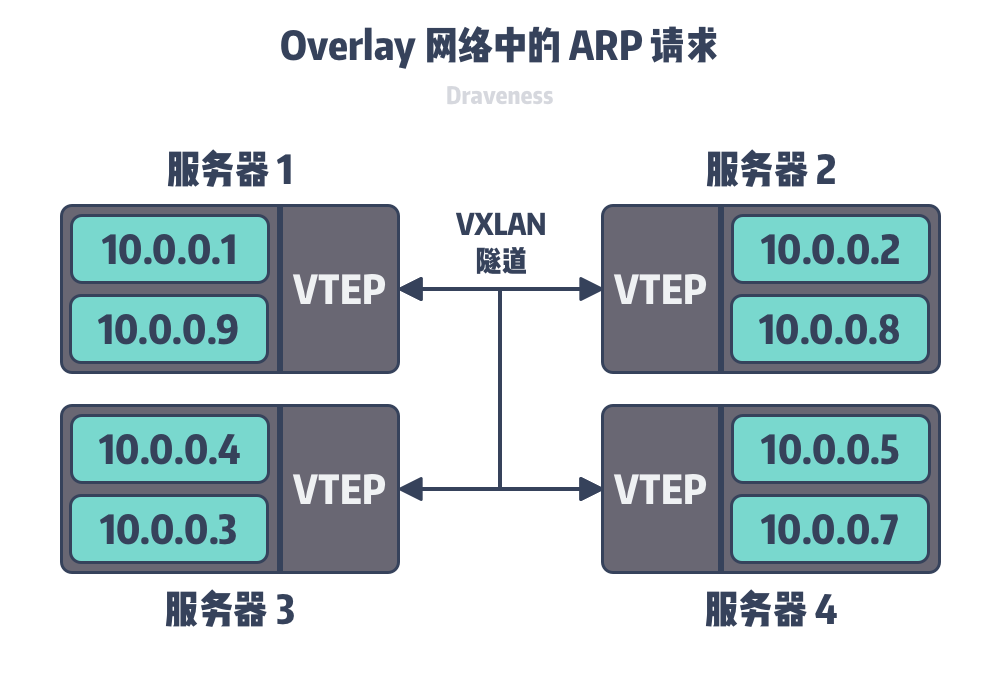

A traditional Layer 2 network relies on MAC addresses for communication, so its network devices must store tables that map IP addresses to MAC addresses.

Kubernetes officially supports clusters of up to 5000 nodes. If each of these 5000 nodes ran only one container, the pressure on the internal network equipment would be modest. In reality, however, a 5000-node cluster contains tens or even hundreds of thousands of containers, and when one container sends an ARP request, every container in the cluster receives it, which creates a very high network load.

In an Overlay network built with VxLAN, the data sent by virtual machines is re-encapsulated into IP packets, so the network only needs to know the MAC addresses of the VTEPs. This reduces the MAC address table from hundreds of thousands of entries to a few thousand. ARP requests spread only among the VTEPs in the cluster, and a remote VTEP broadcasts the data only locally after decapsulating it, so the rest of the network is unaffected. Although this still places high demands on the network equipment in the cluster, it greatly reduces the pressure on the core network equipment.
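A rough back-of-the-envelope calculation shows the size of the reduction. The figure of 30 containers per node is an assumption picked only to land in the "hundreds of thousands" range mentioned above, and the model of one VTEP per node is a simplification.

```python
def mac_entries(nodes, endpoints_per_node, overlay):
    """Approximate number of MAC addresses the physical network must track."""
    if overlay:
        # With VxLAN, the underlay only sees one VTEP per node.
        return nodes
    # In a flat Layer 2 network, every container or VM is visible.
    return nodes * endpoints_per_node


NODES = 5000
CONTAINERS_PER_NODE = 30  # hypothetical density

flat = mac_entries(NODES, CONTAINERS_PER_NODE, overlay=False)
with_overlay = mac_entries(NODES, CONTAINERS_PER_NODE, overlay=True)
print(flat, with_overlay, flat // with_overlay)
```

Under these assumptions the physical network tracks 5,000 addresses instead of 150,000, a 30-fold reduction that grows with container density.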

Overlay networks are closely related to software-defined networking (SDN), which introduces a data plane and a control plane: the data plane forwards data, and the control plane computes and distributes forwarding tables. A network built this way can learn MAC and ARP table entries through traditional self-learning, but in a large-scale cluster we still need a control plane to distribute the forwarding tables.
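The idea of a control plane that distributes forwarding entries, instead of relying on flooding and self-learning, can be sketched as follows. This is a toy in-memory model, not the protocol of any particular SDN controller; the `Vtep` and `ControlPlane` classes and all addresses are hypothetical.

```python
class Vtep:
    """Data plane: holds the forwarding table it is given."""

    def __init__(self, ip):
        self.ip = ip
        self.table = {}  # VM MAC -> underlay IP of the VTEP hosting it


class ControlPlane:
    """Control plane: knows every endpoint and pushes entries to all VTEPs."""

    def __init__(self):
        self.vteps = []
        self.endpoints = {}

    def register(self, vtep):
        # A newly joined VTEP receives the full current table.
        self.vteps.append(vtep)
        vtep.table.update(self.endpoints)

    def announce(self, mac, vtep_ip):
        # A new or migrated VM is pushed to every VTEP, with no ARP flooding.
        self.endpoints[mac] = vtep_ip
        for vtep in self.vteps:
            vtep.table[mac] = vtep_ip


cp = ControlPlane()
a, b = Vtep("192.0.2.1"), Vtep("192.0.2.2")
cp.register(a)
cp.announce("02:00:00:00:00:01", a.ip)
cp.register(b)  # b learns about the first VM on joining
cp.announce("02:00:00:00:00:02", b.ip)
```

Both VTEPs end up with identical tables without any VM having broadcast a request across the cluster.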

Network Isolation

Large-scale data centers often provide cloud computing services to external customers, and the same physical cluster may be split into many small pieces assigned to different tenants. Because Layer 2 data frames may be broadcast, these tenants must be isolated from one another for security reasons, so that one tenant's traffic cannot affect, or be used to attack, another. Traditional network isolation uses virtual LAN (VLAN) technology, which represents the virtual network ID with 12 bits, for a maximum of 4096 virtual networks.

4096 virtual networks are not enough for a large-scale data center. VxLAN uses a 24-bit VNI to identify the virtual network, for a total of 16,777,216 virtual networks, which is enough to meet the multi-tenant isolation needs of a data center.
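The two limits follow directly from the width of the identifier fields, as this short check shows. The helper name `id_space` is an assumption introduced for the example.

```python
def id_space(bits):
    """Number of distinct identifiers that fit in a field of the given width."""
    return 2 ** bits


vlan_networks = id_space(12)   # 12-bit VLAN ID
vxlan_networks = id_space(24)  # 24-bit VNI
print(vlan_networks, vxlan_networks, vxlan_networks // vlan_networks)
```

The 12 extra bits multiply the number of available virtual networks by 4096.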

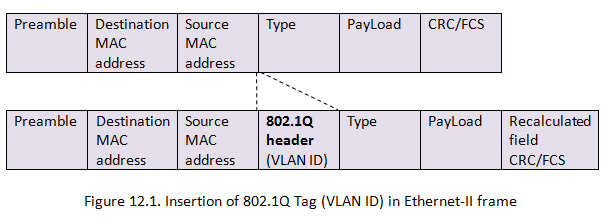

A larger number of virtual networks is really a side benefit of VxLAN, and it should not be the decisive factor in adopting it. IEEE 802.1ad, an extension of the VLAN protocol, allows two 802.1Q headers in one Ethernet frame, and the two VLAN IDs together provide 24 bits that can also represent 16,777,216 virtual networks. Network isolation alone is therefore not a sufficient reason to use VxLAN or Overlay networks.
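The double-tagging scheme can be illustrated by packing the two tags by hand. The tag layout (a 16-bit TPID followed by a 16-bit TCI holding a 3-bit priority, a 1-bit DEI, and the 12-bit VLAN ID) and the TPID values `0x88A8` and `0x8100` come from the IEEE standards; the function names are assumptions for this sketch. For simplicity it accepts the full 12-bit range, although VLAN IDs 0 and 4095 are reserved in practice, so the usable count is slightly below 16,777,216.

```python
import struct

TPID_S_TAG = 0x88A8  # outer (service) tag defined by IEEE 802.1ad
TPID_C_TAG = 0x8100  # inner (customer) tag defined by IEEE 802.1Q


def vlan_tag(tpid, vid, pcp=0, dei=0):
    """Build one 4-byte VLAN tag: TPID followed by PCP/DEI/VID."""
    if not 0 <= vid < 4096:
        raise ValueError("VLAN ID must fit in 12 bits")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", tpid, tci)


def qinq_tags(outer_vid, inner_vid):
    """Stack an outer S-tag and an inner C-tag, as in a Q-in-Q frame."""
    return vlan_tag(TPID_S_TAG, outer_vid) + vlan_tag(TPID_C_TAG, inner_vid)


def combined_id(outer_vid, inner_vid):
    """Treat the two 12-bit IDs as a single 24-bit network identifier."""
    return (outer_vid << 12) | inner_vid


tags = qinq_tags(1, 2)
largest = combined_id(4095, 4095)
```

Here `largest` equals `2**24 - 1`, the same upper bound as a 24-bit VNI.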

Summary

Today’s data centers contain multiple clusters and a large number of physical machines. The Overlay network is an intermediate layer between the virtual machines and the underlying network devices. With it, we can solve the VM migration problem, reduce the pressure on Layer 2 core network devices, and provide a larger number of virtual networks.

- In a Layer 2 network built with VxLAN, VMs remain reachable at Layer 2 after migrating across clusters, availability zones, and data centers, which helps us ensure the availability of online services, improve cluster resource utilization, and tolerate VM and node failures.

- A cluster may contain tens of times more virtual machines than physical machines, so it may hold one or two orders of magnitude more MAC addresses than a traditional cluster of physical machines, and network equipment struggles to handle Layer 2 traffic at that scale. Overlay networks reduce the MAC address table entries and ARP requests in a cluster through IP encapsulation and a control plane.

- The VxLAN protocol header uses a 24-bit VNI to identify virtual networks, which allows about 16 million virtual networks in total, and we can allocate network bandwidth separately to different virtual networks to meet the isolation needs of multiple tenants.

It should be noted that an Overlay network is only a virtual network on top of the physical network; using it does not directly solve problems such as scale within the cluster. VxLAN is also not the only way to build an Overlay network, and different technologies, such as NVGRE and GRE, may suit different scenarios. Finally, here are some more open-ended questions for interested readers to think about:

- VxLAN encapsulates the original packets in UDP for delivery across the network. What methods do NVGRE and STT use to transmit data, respectively?

- What technologies or software should be used to deploy Overlay networks in Kubernetes?