Our group maintains a Kubernetes webhook that intercepts pod creation requests and makes some changes (e.g. adding environment variables, adding an init container, etc.).

The business logic itself is simple, but errors raised along the way are hard to handle. Either you block the pod from being created, at the risk that the application will not start, or you skip the business logic, which leads to a silent failure that no one knows about.

So the obvious idea is to hook into the alerting system, but this couples the component to one specific alerting system.

Kubernetes has an Event mechanism that can record warnings, errors, and other information, which makes it a better fit for this scenario.

What is an Event in Kubernetes?

An Event is one of the many resource objects in Kubernetes that are typically used to record state changes that occur within a cluster, ranging from cluster node exceptions to Pod starts, successful scheduling, and so on.

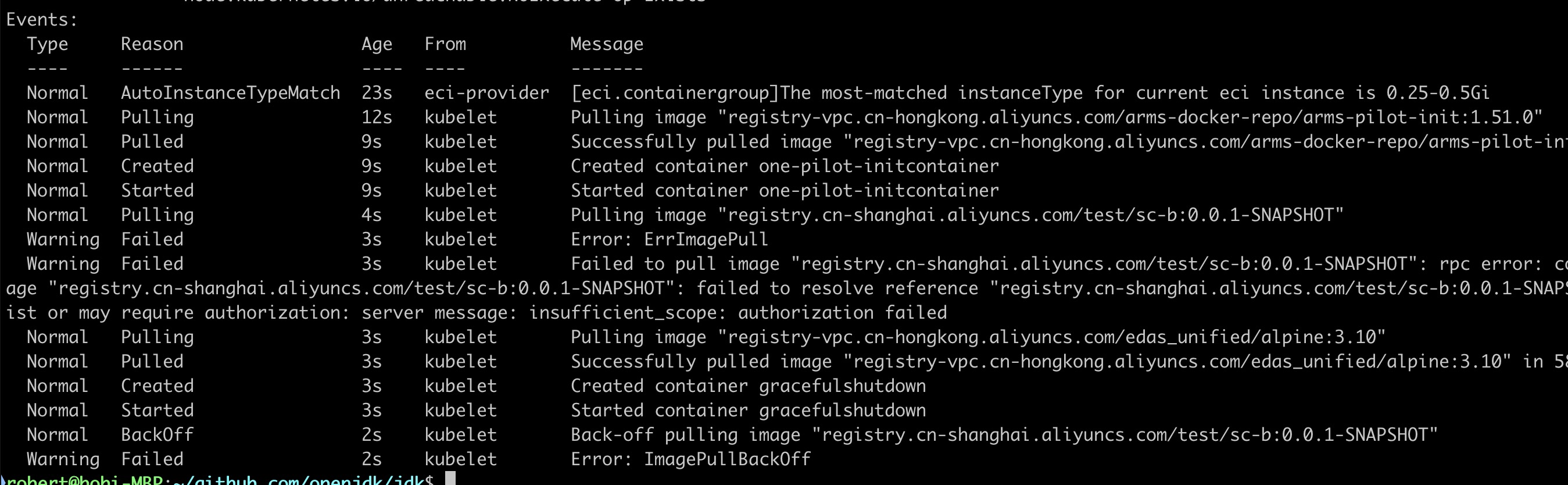

For example, if we describe a pod, we can see the events corresponding to that pod:

kubectl describe pod sc-b-68867c5dcb-sf9hn

As you can see, everything from scheduling, to starting, to the eventual failure of this pod to pull the image is recorded by way of an Event.

Let’s look at the structure of an Event.

kubectl get events -o json | jq '.items[10]'


As you can see, the more important fields of an Event are:

- type - the type of the event, currently either Normal or Warning

- reason - the reason for the event, can be Failed, Scheduled, Started, Completed, etc.

- message - the description of the event

- involvedObject - the resource object for the event, could be Pod, Node, etc.

- source - the source of the event, can be kubelet, kube-apiserver, etc.

- firstTimestamp, lastTimestamp - the first and last time the event occurred
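To make the field list concrete, here is a minimal Go sketch that decodes those fields from an Event's JSON. The struct covers only the fields listed above, not the full Event schema, and `sampleEvent` is hand-written illustrative data (the namespace, message, and timestamps are assumptions), not output captured from a real cluster.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ObjectRef mirrors the part of involvedObject used in this sketch.
type ObjectRef struct {
	Kind      string `json:"kind"`
	Namespace string `json:"namespace"`
	Name      string `json:"name"`
}

// EventSource mirrors the part of source used in this sketch.
type EventSource struct {
	Component string `json:"component"`
}

// Event holds only the fields discussed above.
type Event struct {
	Type           string      `json:"type"`
	Reason         string      `json:"reason"`
	Message        string      `json:"message"`
	InvolvedObject ObjectRef   `json:"involvedObject"`
	Source         EventSource `json:"source"`
	FirstTimestamp string      `json:"firstTimestamp"`
	LastTimestamp  string      `json:"lastTimestamp"`
}

// sampleEvent is hand-written for illustration; it is not real cluster output.
const sampleEvent = `{
  "type": "Warning",
  "reason": "Failed",
  "message": "illustrative message: image pull failed",
  "involvedObject": {"kind": "Pod", "namespace": "default", "name": "sc-b-68867c5dcb-sf9hn"},
  "source": {"component": "kubelet"},
  "firstTimestamp": "2023-01-01T00:00:00Z",
  "lastTimestamp": "2023-01-01T00:05:00Z"
}`

// parseEvent decodes one Event object from JSON.
func parseEvent(data []byte) (Event, error) {
	var ev Event
	err := json.Unmarshal(data, &ev)
	return ev, err
}

func main() {
	ev, err := parseEvent([]byte(sampleEvent))
	if err != nil {
		panic(err)
	}
	fmt.Printf("%s %s on %s/%s (from %s): %s\n",
		ev.Type, ev.Reason, ev.InvolvedObject.Kind, ev.InvolvedObject.Name,
		ev.Source.Component, ev.Message)
}
```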

Based on this information, we can do cluster-level monitoring and alerting. For example, Aliyun’s ACK sends Events to SLS and then alerts according to the configured rules.
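As a rough illustration of rule-based alerting on Events, the sketch below filters a list of events by type and, optionally, reason. The `Rule` type and `matching` function are hypothetical names made up for this example; they do not reflect how ACK or SLS actually define rules.

```go
package main

import "fmt"

// Event keeps just the fields a simple rule needs.
type Event struct {
	Type    string
	Reason  string
	Message string
}

// Rule is a hypothetical alert rule: match on type, and on reason if one is set.
type Rule struct {
	Type   string
	Reason string // empty matches any reason
}

// Matches reports whether the event satisfies the rule.
func (r Rule) Matches(ev Event) bool {
	return ev.Type == r.Type && (r.Reason == "" || ev.Reason == r.Reason)
}

// matching returns the events that satisfy the rule, in their original order.
func matching(events []Event, r Rule) []Event {
	var out []Event
	for _, ev := range events {
		if r.Matches(ev) {
			out = append(out, ev)
		}
	}
	return out
}

func main() {
	// Illustrative events, not taken from a real cluster.
	events := []Event{
		{Type: "Normal", Reason: "Scheduled", Message: "pod assigned to a node"},
		{Type: "Warning", Reason: "Failed", Message: "image pull failed"},
	}
	for _, ev := range matching(events, Rule{Type: "Warning"}) {
		fmt.Printf("ALERT %s: %s\n", ev.Reason, ev.Message)
	}
}
```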

How to report events

The previous section covered what an Event is in Kubernetes, but we still have to report the event so that the Kubernetes cluster knows it happened and subsequent monitoring and alerting can act on it.

How to access the Kubernetes API

The first step in reporting events is to access the Kubernetes API. The API is RESTful, and Kubernetes wraps it in an SDK that can be used directly.

To connect to the Kubernetes API through the SDK, there are two ways.

The first is through a kubeconfig file (from outside the cluster), and the second is through a ServiceAccount (from inside a Pod).
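The two modes can be told apart at runtime. The sketch below relies on conventions of a standard setup: inside a Pod, Kubernetes sets the `KUBERNETES_SERVICE_HOST` and `KUBERNETES_SERVICE_PORT` environment variables and mounts the ServiceAccount token under `/var/run/secrets/kubernetes.io/serviceaccount/`; outside, the kubeconfig comes from `$KUBECONFIG` or `~/.kube/config`. The function names are made up for this example, and `$KUBECONFIG` is treated as a single path for simplicity even though it may hold a list of paths.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// tokenPath is where the ServiceAccount token is mounted in a Pod by default.
const tokenPath = "/var/run/secrets/kubernetes.io/serviceaccount/token"

// inCluster reports whether the process appears to be running inside a Pod.
// getenv is injected so the check can be exercised without touching the real environment.
func inCluster(getenv func(string) string) bool {
	return getenv("KUBERNETES_SERVICE_HOST") != "" && getenv("KUBERNETES_SERVICE_PORT") != ""
}

// kubeconfigPath returns the kubeconfig file an out-of-cluster client would load.
func kubeconfigPath(getenv func(string) string, home string) string {
	if p := getenv("KUBECONFIG"); p != "" {
		return p
	}
	return filepath.Join(home, ".kube", "config")
}

func main() {
	if inCluster(os.Getenv) {
		fmt.Println("in-cluster: read the ServiceAccount token from", tokenPath)
		return
	}
	home, _ := os.UserHomeDir() // falls back to a relative path if the home directory is unknown
	fmt.Println("out-of-cluster: load kubeconfig from", kubeconfigPath(os.Getenv, home))
}
```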

For the sake of simplicity, we use the first way as an example.


By running this code, you can connect to the cluster and get the Kubernetes Server version.
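For readers without the SDK at hand, the same check can be sketched against the REST API directly: the API server reports its version at the `/version` endpoint. This is a dependency-free stand-in using only `net/http`, not the SDK-based code the article refers to. The `fakeAPIServer` helper and the version string it returns are made up so the sketch runs without a cluster; a real cluster additionally requires TLS configuration and valid credentials.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// versionInfo holds a subset of the fields returned by GET /version.
type versionInfo struct {
	Major      string `json:"major"`
	Minor      string `json:"minor"`
	GitVersion string `json:"gitVersion"`
}

// serverVersion queries the API server's /version endpoint.
// token is sent as a bearer token when non-empty.
func serverVersion(client *http.Client, baseURL, token string) (versionInfo, error) {
	var v versionInfo
	req, err := http.NewRequest(http.MethodGet, baseURL+"/version", nil)
	if err != nil {
		return v, err
	}
	if token != "" {
		req.Header.Set("Authorization", "Bearer "+token)
	}
	resp, err := client.Do(req)
	if err != nil {
		return v, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return v, fmt.Errorf("unexpected status %s", resp.Status)
	}
	err = json.NewDecoder(resp.Body).Decode(&v)
	return v, err
}

// fakeAPIServer is a local stand-in for a real API server; the version it
// reports is invented for this example.
func fakeAPIServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/version" {
			http.NotFound(w, r)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"major":"1","minor":"25","gitVersion":"v1.25.0"}`)
	}))
}

func main() {
	srv := fakeAPIServer()
	defer srv.Close()

	v, err := serverVersion(srv.Client(), srv.URL, "")
	if err != nil {
		panic(err)
	}
	fmt.Println("server version:", v.GitVersion)
}
```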


How to create and report events

With the clientset object from the above example, we can now create an event in the Kubernetes cluster.


In the above example, we created an Event in the default namespace whose name starts with test- and whose type is Warning.
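The same request can be sketched without the SDK: creating an Event amounts to a POST to `/api/v1/namespaces/<namespace>/events`, with `metadata.generateName` set to `test-` so that the server picks the rest of the name. This is a dependency-free illustration using only `net/http`, not the clientset code described above. The reason `InjectionFailed`, the component name `pod-webhook`, the `fakeAPIServer` helper, and the name suffix it appends are all hypothetical; authentication and TLS are omitted.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// obj is shorthand for a JSON object.
type obj = map[string]interface{}

// newWarningEvent builds a Warning Event about a Pod. With generateName set,
// the API server appends a random suffix to "test-" to form the final name.
func newWarningEvent(namespace, pod, reason, message string) obj {
	now := time.Now().UTC().Format(time.RFC3339)
	return obj{
		"apiVersion":     "v1",
		"kind":           "Event",
		"metadata":       obj{"generateName": "test-", "namespace": namespace},
		"involvedObject": obj{"kind": "Pod", "namespace": namespace, "name": pod},
		"type":           "Warning",
		"reason":         reason,
		"message":        message,
		"source":         obj{"component": "pod-webhook"}, // hypothetical component name
		"firstTimestamp": now,
		"lastTimestamp":  now,
	}
}

// createEvent POSTs the Event to the core/v1 events endpoint and returns the
// name assigned by the server.
func createEvent(client *http.Client, baseURL, namespace string, ev obj) (string, error) {
	body, err := json.Marshal(ev)
	if err != nil {
		return "", err
	}
	url := baseURL + "/api/v1/namespaces/" + namespace + "/events"
	resp, err := client.Post(url, "application/json", bytes.NewReader(body))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusCreated {
		return "", fmt.Errorf("unexpected status %s", resp.Status)
	}
	var created struct {
		Metadata struct {
			Name string `json:"name"`
		} `json:"metadata"`
	}
	err = json.NewDecoder(resp.Body).Decode(&created)
	return created.Metadata.Name, err
}

// fakeAPIServer is a local stand-in for a real API server. It mimics name
// generation by appending a fixed, made-up suffix to generateName.
func fakeAPIServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		var ev obj
		if r.Method != http.MethodPost || r.URL.Path != "/api/v1/namespaces/default/events" ||
			json.NewDecoder(r.Body).Decode(&ev) != nil {
			http.Error(w, "bad request", http.StatusBadRequest)
			return
		}
		meta, ok := ev["metadata"].(obj)
		if !ok {
			http.Error(w, "missing metadata", http.StatusBadRequest)
			return
		}
		prefix, _ := meta["generateName"].(string)
		meta["name"] = prefix + "x7k2p"
		w.Header().Set("Content-Type", "application/json")
		w.WriteHeader(http.StatusCreated)
		json.NewEncoder(w).Encode(ev)
	}))
}

func main() {
	srv := fakeAPIServer()
	defer srv.Close()

	ev := newWarningEvent("default", "sc-b-68867c5dcb-sf9hn", "InjectionFailed", "failed to add init container")
	name, err := createEvent(srv.Client(), srv.URL, "default", ev)
	if err != nil {
		panic(err)
	}
	fmt.Println("created event:", name)
}
```

Against a real cluster, the identity used also needs RBAC permission to create events in the target namespace.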

We can also look at the final Event that is generated.

kubectl get events -o json | jq '.items[353]'


In this way, people who care about these events, such as operations and maintenance staff, can do monitoring and alerting based on this information.

Usage Scenarios

Unlike business events, Kubernetes events are resources in the cluster, and the people who care about them are mostly the cluster maintainers.

Therefore, this event reporting mechanism is more suitable for some basic components to use, so that cluster maintainers can understand the current state of the cluster.

If you need more flexible alerting and monitoring, you can use an event and alerting system that is closer to the business and has richer rules.