1. What this article is about

Growth in business volume and the evolution of business models require solid, robust IT systems to support them. What a business does is visible to the whole market, but IT systems cannot be built overnight. Future competition between companies will largely be competition between their IT systems, and the ability to respond quickly to business needs will decide the winner.

IT systems are constantly evolving, and building efficient, intelligent ones is very expensive. At first a system only needs to be good enough; then it needs to be good; eventually it becomes a core competence.

Change is not scary; heavy historical baggage is. For engineers, new requirements are not the hard part. The hard part is replacing components in mid-flight: the existing functions must keep working, the new requirements must be met, and the IT infrastructure must be replaced, all at the same time.

During the move to containers and Kubernetes, this article focuses on one question: how to distribute files directly to virtual machines (VMs) and execute scripts on them. Manipulating VMs directly does not fit the cloud-native definition of immutable infrastructure, but legacy business scenarios still require the IT platform team to provide a solution. This article offers one.

2. Why You Need a PaaS Platform

When an IT operations team starts building a PaaS, it is truly starting to stand on its own feet.

In the current environment, business models and patterns are no longer trade secrets. The rapid flow of information and people has put companies in open, head-to-head competition. I can offer the business you have, and you can add the features I have. The era of short-term explosive growth has passed, and we are now in an era of refined operations and data-driven decision making.

The new era places more demands on IT systems, and the traditional model cannot meet them. The traditional model is to develop SaaS services for specific scenarios, encapsulating skills in fixed processes to reduce costs, increase efficiency, and control risk. This is sufficient early on, but as the business grows, operations and maintenance staff get caught in endless overtime changing and adding features.

The purpose of a PaaS is to abstract common functionality. A middle platform is built the same way: the stable domain logic is implemented in the platform and exposed through service interfaces, while the front end is bound directly to the business so it can cope with rapid market changes.

Once there is a PaaS platform, IT expertise has a place to accumulate. IT staff are freed from repetitive, tedious tasks to think about the business, and new business needs can be supported quickly by composing platform capabilities.

3. How to implement file distribution, script execution

3.1 In a traditional PaaS platform

If you ask an operations engineer to distribute a file or execute a script in bulk, they can do it quickly using Ansible.
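As a rough illustration of that ad-hoc approach, the sketch below prints the two Ansible commands an operator might run: one using the `copy` module and one using the `script` module. The inventory file `hosts.ini`, the file name, and the destination `/tmp/` are assumptions made for this example. The commands are only printed, so they can be reviewed before being piped to `sh`.

```shell
# Hypothetical inputs: inventory file, file to distribute, script to run.
inventory="hosts.ini"
file="./ui-autotest.pdf"
script="./start.sh"

# Print the ad-hoc commands instead of running them (dry run).
ansible_commands() {
  # copy module: push a local file to every host in the inventory.
  printf 'ansible all -i %s -m copy -a "src=%s dest=/tmp/"\n' "$1" "$2"
  # script module: transfer a local script and execute it on every host.
  printf 'ansible all -i %s -m script -a "%s"\n' "$1" "$3"
}

ansible_commands "$inventory" "$file" "$script"
```

This works well for one-off tasks, but every run depends on a person with SSH access and an up-to-date inventory.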

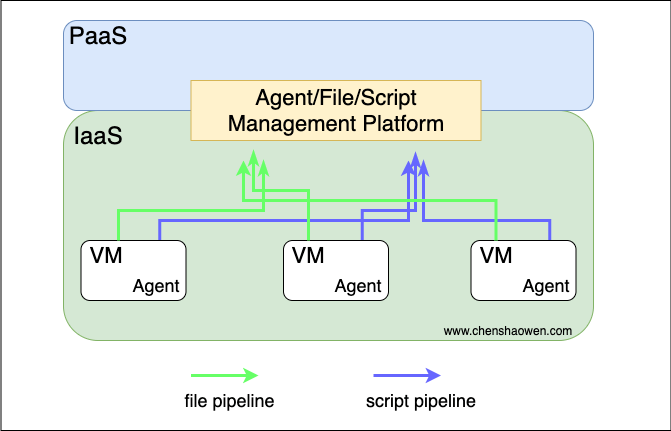

But the above mentions the need to free up your hands and build a PaaS platform. Here is a diagram of a traditional IT facility architecture.

In the traditional IT process, each machine purchased needs to be registered in CMDB, and then the Agent is installed for management. Through the file and script pipeline provided by Agent, the upper platform can realize the function of file distribution and script execution.

However, the development cost of Agent is very high. In some open source solutions, Agent is not open source as the core of company IT.

3.2 Under Kubernetes

In a cloud-native context, directly modifying the state of IaaS-layer VMs is discouraged; this principle is called immutable infrastructure. Some practices even disable sshd in containers and make the container exit immediately as soon as an SSH login occurs.

Distributing files to nodes and executing scripts on them directly is not advocated under Kubernetes.

Immutable infrastructure, usually managed through Infrastructure as Code (IaC), is designed to ensure that state is reproducible, consistent with declarative semantics. Directly modifying the infrastructure is a procedural operation: the infrastructure is running, its state carries a lot of uncertainty, and it cannot be described accurately.

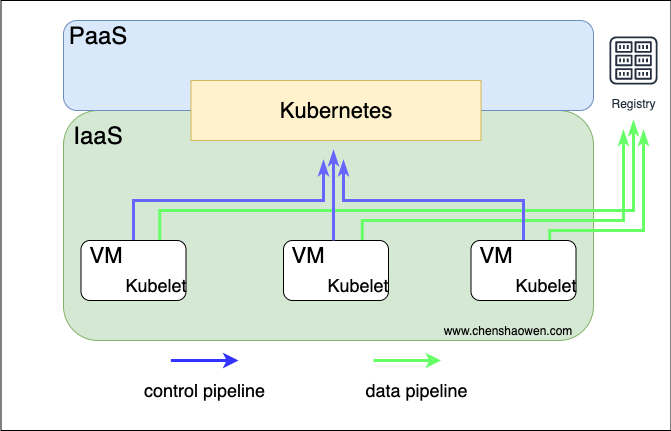

Below is a diagram of the IT facility architecture under cloud-native.

Kubernetes takes over the resources of the IaaS layer and controls the operation of the whole system. Business services are distributed mainly through the image registry, while log collection and monitoring for the business rely on other open source components.

4. Plan for distributing files and executing scripts with Kubernetes

4.1 Preparing for the walkthrough

The following are required:

- A Kubernetes cluster on which you can run kubectl commands

- The target VMs have already been added to the cluster as nodes

- A Docker environment and a Docker Hub account

4.2 Content of the exercise

The walkthrough is divided into the following steps:

- Prepare the script and file for distribution

- Build and push the images

- Create Kubernetes Jobs for distribution

4.3 Objectives of the walkthrough

The goals of the walkthrough are as follows:

- Run a web service on a virtual machine that provides file downloading capabilities

- Distribute a file to the virtual machine and add it to the download service

5. Kubernetes distributes files, executes scripts

5.1 Cluster description

Due to budget constraints, a multi-node environment is not set up here. To stay close to a real scenario, however, nodeSelector is used during Job execution to select specific nodes, so that the distribution cannot spread to nodes it was not meant for.

5.2 Preparing distribution files and executing scripts

- File directory structure

  - demo
    - Dockerfile
    - start.sh

  The following image build commands are executed in the demo directory.

- Contents of the script start.sh

  The Kubernetes cluster nodes run CentOS 7, which ships with a Python 2 interpreter. For simplicity, `SimpleHTTPServer` is used here to provide the download service.
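A minimal sketch of what `start.sh` could contain follows, written out with a heredoc from the parent of the demo directory. The served directory `/tmp/dist` and port `8000` are assumptions for illustration, not values taken from the original script.

```shell
# Create demo/start.sh; the directory and port below are assumed values.
mkdir -p demo
cat > demo/start.sh <<'EOF'
#!/bin/sh
# Serve the contents of /tmp/dist over HTTP using Python 2's built-in module.
mkdir -p /tmp/dist
cd /tmp/dist || exit 1
# nohup plus & lets the server keep running after this script returns.
nohup python -m SimpleHTTPServer 8000 >/dev/null 2>&1 &
EOF
chmod +x demo/start.sh
```

On a host where `python` is Python 3, the equivalent module would be `http.server`.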

- Contents of the Dockerfile
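One possible Dockerfile that matches the `--build-arg file=...` usage in the build step is sketched below. The base image `alpine:3.19` and the destination `/data/` are assumptions for this example. `ADD` is used because it accepts both a local path inside the build context and a remote URL.

```shell
# Create demo/Dockerfile; base image and target path are assumed values.
mkdir -p demo
cat > demo/Dockerfile <<'EOF'
# Any small image with a shell and cp would do; alpine is an assumption.
FROM alpine:3.19
# Path or URL of the payload, passed with --build-arg file=...
ARG file
# Copy the payload into the image; a URL source is downloaded at build time.
ADD ${file} /data/
EOF
```

Because the payload is the only layer added on top of a small base image, the resulting image stays close to the size of the file itself.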

- Contents of the file to be distributed

  The file can be a local file in the build environment or any file URL. Here I chose a link to a PDF file:

https://www.chenshaowen.com/static/file/ui-autotest.pdf

5.3 Building images

OCI images are the standard unit of distribution in Kubernetes, so the file and the script need to be packaged into images and distributed through the image registry.

-

Package the files to be distributed into a image

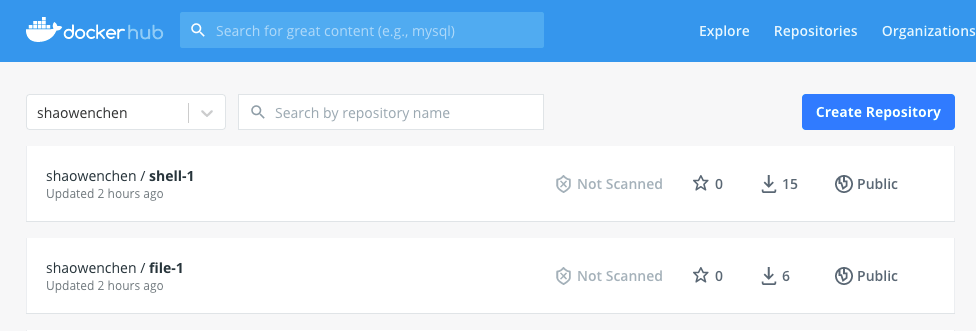

1docker build --build-arg file=https://www.chenshaowen.com/static/file/ui-autotest.pdf -t shaowenchen/file-1:latest ./Push image.

1docker push shaowenchen/file-1:latest -

Package the script to be executed into the image

1docker build --build-arg file=./start.sh -t shaowenchen/shell-1:latest ./Push

1docker push shaowenchen/shell-1:latest -

View images in Dockerhub

5.4 Kubernetes Node Preprocessing

Besides adding the target nodes to the Kubernetes cluster, the other important preparation is preprocessing the nodes.

Node preprocessing mainly means adding labels to nodes so that distribution can target them precisely. In production, the network is usually partitioned, so two labeling dimensions are introduced: zone and ip.

- Label the nodes with zone and ip

zone indicates the partition, here labeled a. ip indicates the IP address of the virtual machine in this partition. In practice, this can be handled in bulk when installing the Kubernetes cluster.

- View the applied labels
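The two steps above can be sketched as follows. The helper reads a small inventory and prints one `kubectl label` command per node, which suits the bulk case. The node names and IP addresses are hypothetical, and `--overwrite` is added so that re-running the batch does not fail on nodes that are already labeled.

```shell
# Hypothetical inventory: node name, zone, IP (one node per line).
inventory='node1 a 192.168.1.10
node2 a 192.168.1.11'

# Print one "kubectl label" command per node so the batch can be reviewed first.
label_commands() {
  while read -r node zone ip; do
    # Skip blank lines in the inventory.
    [ -n "$node" ] || continue
    printf 'kubectl label node %s zone=%s ip=%s --overwrite\n' "$node" "$zone" "$ip"
  done
}

printf '%s\n' "$inventory" | label_commands
```

Piping the output to `sh` applies the labels. Afterwards, `kubectl get nodes --show-labels` lists every label on each node, and `kubectl get nodes -l zone=a` filters by partition.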


5.5 Distribute scripts to specified nodes and execute them

Here we mainly use a hostPath volume to copy the file from the container onto the host, and then use nsenter to enter the host's namespaces and run it there.
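A Job along these lines could be sketched as below. The function prints a manifest for a given image, zone, and ip. It assumes the image stores the script at `/data/start.sh`, that the image provides `nsenter` (BusyBox-based images such as alpine usually do), and that `/tmp/dist` is an acceptable host directory. The Job name, those paths, and the IP `192.168.1.10` are all hypothetical.

```shell
# Print a Job manifest that copies start.sh to the host and runs it there.
# Arguments: image, zone label value, ip label value.
script_job() {
  image=$1
  zone=$2
  ip=$3
  cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: run-script
spec:
  backoffLimit: 0
  template:
    spec:
      restartPolicy: Never
      hostPID: true              # lets nsenter target the host's PID 1
      nodeSelector:
        zone: "$zone"
        ip: "$ip"
      containers:
        - name: runner
          image: $image
          securityContext:
            privileged: true     # needed to enter the host's namespaces
          command: ["sh", "-c"]
          args:
            - cp /data/start.sh /host-dist/ && nsenter -t 1 -m -u -i -n -p sh /tmp/dist/start.sh
          volumeMounts:
            - name: dist
              mountPath: /host-dist
      volumes:
        - name: dist
          hostPath:
            path: /tmp/dist      # assumed host directory
            type: DirectoryOrCreate
EOF
}

script_job shaowenchen/shell-1:latest a 192.168.1.10
```

The output can be piped to `kubectl apply -f -`. The nodeSelector combines both labels, so the Pod can only be scheduled onto the one node whose zone and ip match.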


Since the image is small, the script is executed very quickly. Log on to the VM and check for the related service process.

If the process is listed, the SimpleHTTPServer service has started successfully on the virtual machine.

5.6 Distribute files to the specified node
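A distribution Job for this step could look like the following sketch. It only copies the image's payload into a hostPath directory, so it needs neither hostPID nor nsenter. It assumes the image stores the file under `/data/` and that the download service serves `/tmp/dist` on the host; both paths, the Job name, and the IP are hypothetical. Depending on the node's security settings (SELinux, for example), the container may need extra privileges to write to the host directory.

```shell
# Print a Job manifest that copies the image payload into a host directory.
# Arguments: image, zone label value, ip label value.
file_job() {
  image=$1
  zone=$2
  ip=$3
  cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: distribute-file
spec:
  backoffLimit: 0
  template:
    spec:
      restartPolicy: Never
      nodeSelector:
        zone: "$zone"
        ip: "$ip"
      containers:
        - name: copier
          image: $image
          command: ["sh", "-c"]
          args:
            - cp -r /data/. /host-dist/
          volumeMounts:
            - name: dist
              mountPath: /host-dist
      volumes:
        - name: dist
          hostPath:
            path: /tmp/dist      # assumed directory served by the download service
            type: DirectoryOrCreate
EOF
}

file_job shaowenchen/file-1:latest a 192.168.1.10
```

As with the script Job, the manifest can be piped to `kubectl apply -f -`, and `kubectl get jobs` shows whether it has completed.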

|

|

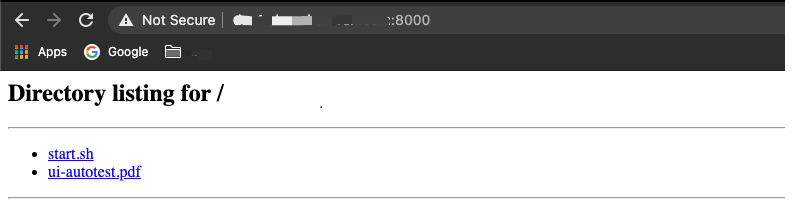

The download page provided can be viewed by visiting the page.

View the distributed files on the virtual machine.

6. Summary

This article demonstrates how to distribute files and execute scripts for virtual machines under Kubernetes, and gives you some ideas on how to design a PaaS platform.

- Kubelet can play the role of the traditional Agent. Kubelet manages Pods and the Agent manages IaaS resources, so the two have much in common. In addition, Kubernetes supports up to 5,000 nodes in a single cluster, which covers most scenarios; beyond that, multiple clusters can be used.

- Binary distribution from more sources can be supported. The example uses an HTTPS URL, but you can also use local files, or download files from S3 and package them locally. Also, the final image is only a few MB larger than the original file.

- Script execution can be further optimized. When the Job finishes, the script it started finishes with it. In practice, the script should register the process as a managed service on the host. For simplicity, this demonstration does not go further.

- Using a Pod with hostIPC/hostPID directly instead of a service process on a traditional VM is also an option.
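For the managed-service point above, one option on CentOS 7 is a systemd unit that the distributed script installs on the host. The sketch below prints such a unit. The description, working directory, and port are assumptions for illustration, and `/usr/bin/python` is assumed to be the Python 2 interpreter that CentOS 7 ships.

```shell
# Print a systemd unit for the download service.
# Arguments: directory to serve, port to listen on (both assumed values).
download_unit() {
  dir=$1
  port=$2
  cat <<EOF
[Unit]
Description=Demo file download service
After=network.target

[Service]
WorkingDirectory=$dir
ExecStart=/usr/bin/python -m SimpleHTTPServer $port
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

download_unit /tmp/dist 8000
```

Saved under `/etc/systemd/system/` with a name of your choice, the unit is picked up by `systemctl daemon-reload` and then managed with `systemctl enable` and `systemctl start`, so the service no longer depends on the lifetime of the Job.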