The previous article, “Kubernetes Multi-Cluster Management: Kubefed (Federation v2)”, briefly introduced the basic concepts and working principles of Federation v2. This article focuses on how to use KubeFed.

The experiments in this article use KubeFed v0.1.0-rc6.

    $ kubefedctl version
    kubefedctl version: version.Info{Version:"v0.1.0-rc6", GitCommit:"7586b42f4f477f1912caf28287fa2e0a7f68f407", GitTreeState:"clean", BuildDate:"2019-08-17T03:55:05Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

Installation

Installing Federation v2 involves two parts: the control plane and kubefedctl.

Control Plane

The control plane can be deployed with Helm (Helm v2 at the time of writing); see the official installation documentation: https://github.com/kubernetes-sigs/kubefed/blob/master/charts/kubefed/README.md

Add the Helm repo:

    $ helm repo add kubefed-charts https://raw.githubusercontent.com/kubernetes-sigs/kubefed/master/charts
    $ helm repo list
    NAME            URL
    kubefed-charts  https://raw.githubusercontent.com/kubernetes-sigs/kubefed/master/charts

Find the current version.

    $ helm search kubefed
    NAME                          CHART VERSION  APP VERSION  DESCRIPTION
    kubefed-charts/kubefed        0.1.0-rc6                   KubeFed helm chart
    kubefed-charts/federation-v2  0.0.10                      Kubernetes Federation V2 helm chart

Then use helm to install the latest version directly.

    $ helm install kubefed-charts/kubefed --name kubefed --version=0.1.0-rc6 --namespace kube-federation-system

kubefedctl

kubefedctl is a standalone binary; the latest version can be downloaded from the GitHub releases page: https://github.com/kubernetes-sigs/kubefed/releases

    $ wget https://github.com/kubernetes-sigs/kubefed/releases/download/v0.1.0-rc6/kubefedctl-0.1.0-rc6-linux-amd64.tgz
    $ tar -zxvf kubefedctl-0.1.0-rc6-linux-amd64.tgz
    $ mv kubefedctl /usr/local/bin/

kubefedctl provides a lot of convenient operations, such as cluster registration, resource registration, etc.

Multicluster Management

Use the kubefedctl join command to add new clusters to the federation. Before joining them, configure the contexts of all the clusters in your local kubeconfig.

The basic usage is.

    kubefedctl join <cluster name> --cluster-context <context of the cluster to join> --host-cluster-context <context of the host cluster>

For example.

    kubefedctl join cluster1 --cluster-context cluster1 \
        --host-cluster-context cluster1 --v=2
    kubefedctl join cluster2 --cluster-context cluster2 \
        --host-cluster-context cluster1 --v=2

KubeFed stores the data it needs in custom resources (CRs), so after running kubefedctl join you can see the cluster information in the host cluster.

    $ kubectl -n kube-federation-system get kubefedclusters
    NAME       READY   AGE
    cluster1   True    3d22h
    cluster2   True    3d22h
    cluster3   True    3d22h

The kubefedctl join command simply translates the configuration in Kubeconfig into a KubeFedCluster custom resource stored in the kube-federation-system namespace.

    $ kubectl -n kube-federation-system get kubefedclusters cluster1 -o yaml
    apiVersion: core.kubefed.io/v1beta1
    kind: KubeFedCluster
    metadata:
      creationTimestamp: "2019-10-24T08:05:38Z"
      generation: 1
      name: cluster1
      namespace: kube-federation-system
      resourceVersion: "647452"
      selfLink: /apis/core.kubefed.io/v1beta1/namespaces/kube-federation-system/kubefedclusters/cluster1
      uid: 4c5eb57f-5ed4-4cec-89f3-cfc062492ae0
    spec:
      apiEndpoint: https://172.16.200.1:6443
      caBundle: LS....Qo=
      secretRef:
        name: cluster1-shb2x
    status:
      conditions:
      - lastProbeTime: "2019-10-28T06:25:58Z"
        lastTransitionTime: "2019-10-28T05:13:47Z"
        message: /healthz responded with ok
        reason: ClusterReady
        status: "True"
        type: Ready
      region: ""
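To make the mapping from kubeconfig to KubeFedCluster more concrete, here is a minimal Python sketch. It is only an illustration of the field mapping visible in the YAML above, not kubefedctl's actual implementation: the function name, the placeholder CA value, and the secret name `cluster1-example` are all hypothetical, and the real join presumably also has to create the credentials that the referenced secret holds, which this sketch skips.

```python
def kubefed_cluster_from_kubeconfig(kubeconfig, context_name, cluster_name,
                                    secret_name,
                                    namespace="kube-federation-system"):
    """Build a KubeFedCluster-shaped dict from a parsed kubeconfig (illustrative only)."""
    # Resolve the context to the kubeconfig cluster entry it points at.
    context = next(c["context"] for c in kubeconfig["contexts"]
                   if c["name"] == context_name)
    cluster = next(c["cluster"] for c in kubeconfig["clusters"]
                   if c["name"] == context["cluster"])
    return {
        "apiVersion": "core.kubefed.io/v1beta1",
        "kind": "KubeFedCluster",
        "metadata": {"name": cluster_name, "namespace": namespace},
        "spec": {
            # API server address and CA bundle come straight from the kubeconfig.
            "apiEndpoint": cluster["server"],
            "caBundle": cluster.get("certificate-authority-data", ""),
            # Hypothetical secret name; the real one is generated at join time.
            "secretRef": {"name": secret_name},
        },
    }


# A parsed kubeconfig, reduced to the fields this sketch reads.
example_kubeconfig = {
    "clusters": [
        {"name": "cluster1",
         "cluster": {"server": "https://172.16.200.1:6443",
                     "certificate-authority-data": "PLACEHOLDER_CA"}},
    ],
    "contexts": [
        {"name": "cluster1",
         "context": {"cluster": "cluster1", "user": "cluster1-admin"}},
    ],
}

manifest = kubefed_cluster_from_kubeconfig(
    example_kubeconfig, "cluster1", "cluster1", "cluster1-example")
print(manifest["spec"]["apiEndpoint"])
```

The point of the sketch is that a KubeFedCluster carries little more than an endpoint, a CA bundle, and a reference to a secret; everything else in the YAML above is metadata and status.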

Resources

Federation v1 was deprecated partly because extending it to new resource types was rigid (it required extending the API server) and because it did not anticipate the widespread adoption of CRDs. Federation v2 is therefore much more flexible in how it manages resources.

For KubeFed, there are two types of resource management, one is resource type management, and the other is federated resource management.

For resource types, kubefedctl provides the enable command, which makes a new resource type available for federation.

    kubefedctl enable <target kubernetes API type>

The target type can be given in any of these forms:

- Kind (e.g. Deployment)

- Plural name (e.g. deployments)

- Group-qualified plural name (e.g. deployment.apps)

- Short name (e.g. deploy)

For example, to let the federation manage Istio VirtualService resources, run:

    kubefedctl enable VirtualService

Because KubeFed manages resources through CRDs, running enable adds a new CRD named federatedvirtualservices to the host cluster.

    $ kubectl get crd | grep virtualservice
    federatedvirtualservices.types.kubefed.io   2019-10-24T13:12:46Z
    virtualservices.networking.istio.io         2019-10-24T08:06:01Z

This CRD defines the fields of the federatedvirtualservices type, such as placement and overrides.
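The naming pattern seen in the CRD listing can be written down as a tiny Python helper. This is only a sketch of the pattern observed in this article (a `Federated` prefix on the kind, a `federated` prefix on the plural name, and the `types.kubefed.io` group); the function name is hypothetical, and it assumes the default group is in use.

```python
def federated_type_names(kind, plural, group="types.kubefed.io"):
    """Derive the federated kind and CRD name for an enabled type (observed pattern only)."""
    return {
        "kind": "Federated" + kind,
        "crd": "federated" + plural.lower() + "." + group,
    }


names = federated_type_names("VirtualService", "virtualservices")
print(names["kind"], names["crd"])
```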

kubefedctl enable takes care of the resource type; the federated resources themselves are then created and edited as instances of the newly created CRD. Before deploying resources, however, you need to create federatednamespaces, because resources are only propagated to namespaces that KubeFed manages.

    $ kubectl get federatednamespaces
    NAME      AGE
    default   3d21h

Here is an attempt to create a resource of type federatedvirtualservices.

    $ kubectl get federatedvirtualservices
    NAME            AGE
    service-route   3d4h

The complete YAML:

    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedVirtualService
    metadata:
      name: service-route
      namespace: default
    spec:
      placement:
        clusters:
        - name: cluster1
        - name: cluster2
        - name: cluster3
      template:
        metadata:
          name: service-route
        spec:
          gateways:
          - service-gateway
          hosts:
          - '*'
          http:
          - match:
            - uri:
                prefix: /
            route:
            - destination:
                host: service-a-1
                port:
                  number: 3000

At this point, KubeFed creates the corresponding virtualservice resource in each target cluster, based on the description in the template.

    $ kubectl get virtualservices
    NAME            GATEWAYS            HOSTS   AGE
    service-route   [service-gateway]   [*]     3d4h
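Conceptually, the propagation step above turns one federated object into one plain object per placed cluster. The Python sketch below models that idea under simplifying assumptions: the function name is hypothetical, the target apiVersion is passed in by the caller (the article does not show it, so `networking.istio.io/v1alpha3` here is an assumed value), and overrides and clusterSelector are ignored. It is not how the KubeFed sync controller is actually written.

```python
import copy


def render_member_objects(federated, target_api_version):
    """Return {cluster name: object to create} for a federated resource (simplified model)."""
    kind = federated["kind"]
    if not kind.startswith("Federated"):
        raise ValueError("expected a Federated<Kind> resource, got " + kind)
    target_kind = kind[len("Federated"):]

    spec = federated["spec"]
    objects = {}
    for entry in spec["placement"]["clusters"]:
        # Every placed cluster starts from its own copy of the same template.
        obj = copy.deepcopy(spec["template"])
        obj["apiVersion"] = target_api_version
        obj["kind"] = target_kind
        meta = obj.setdefault("metadata", {})
        meta.setdefault("name", federated["metadata"]["name"])
        meta.setdefault("namespace", federated["metadata"]["namespace"])
        objects[entry["name"]] = obj
    return objects


federated_vs = {
    "apiVersion": "types.kubefed.io/v1beta1",
    "kind": "FederatedVirtualService",
    "metadata": {"name": "service-route", "namespace": "default"},
    "spec": {
        "placement": {"clusters": [{"name": "cluster1"},
                                   {"name": "cluster2"},
                                   {"name": "cluster3"}]},
        "template": {
            "metadata": {"name": "service-route"},
            "spec": {"gateways": ["service-gateway"], "hosts": ["*"]},
        },
    },
}

# The apiVersion below is an assumption made for this example.
member_objects = render_member_objects(federated_vs, "networking.istio.io/v1alpha3")
print(sorted(member_objects))
```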

Scheduling

Kubefed can currently only do some simple inter-cluster scheduling, i.e., manual assignment.

Manual scheduling has two main parts: specifying the target clusters directly in the resource, and allocating replicas proportionally via ReplicaSchedulingPreference.

Each federated resource has a placement field that describes which clusters it will be deployed to. The CRD schema shows how the field is defined:

    placement:
      properties:
        clusterSelector:
          properties:
            matchExpressions:
              items:
                properties:
                  key:
                    type: string
                  operator:
                    type: string
                  values:
                    items:
                      type: string
                    type: array
                required:
                - key
                - operator
                type: object
              type: array
            matchLabels:
              additionalProperties:
                type: string
              type: object
          type: object
        clusters:
          items:
            properties:
              name:
                type: string
            required:
            - name
            type: object
          type: array
      type: object

In practice, you either list the target clusters explicitly via clusters, or select them by cluster label via clusterSelector:

    spec:
      placement:
        clusters:
        - name: cluster2
        - name: cluster1
        clusterSelector:
          matchLabels:
            foo: bar

However, there are two things to note:

- If the clusters field is specified, clusterSelector is ignored.

- The selected clusters are treated equally: the resource is deployed as an identical copy in each selected cluster.
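The precedence rule in the first note can be sketched in a few lines of Python. This is a simplified model, not KubeFed's code: the function name and the `cluster_labels` mapping are hypothetical, only matchLabels is handled (matchExpressions is left out), and treating a placement with neither field as "select nothing" is an assumption of this sketch.

```python
def select_clusters(placement, cluster_labels):
    """Pick target clusters from a placement block (simplified model).

    cluster_labels maps each registered cluster name to its labels.
    """
    # An explicit cluster list wins; clusterSelector is not consulted at all.
    if "clusters" in placement:
        wanted = [c["name"] for c in placement["clusters"]]
        return [name for name in wanted if name in cluster_labels]

    selector = placement.get("clusterSelector")
    if selector is None:
        # Assumption: with neither field set, nothing is selected.
        return []

    match = selector.get("matchLabels", {})
    return sorted(name for name, labels in cluster_labels.items()
                  if all(labels.get(k) == v for k, v in match.items()))


registered = {"cluster1": {}, "cluster2": {}, "cluster3": {"foo": "bar"}}

# Same placement as in the example above: both fields are set.
both = {"clusters": [{"name": "cluster2"}, {"name": "cluster1"}],
        "clusterSelector": {"matchLabels": {"foo": "bar"}}}
selector_only = {"clusterSelector": {"matchLabels": {"foo": "bar"}}}

print(select_clusters(both, registered))
print(select_clusters(selector_only, registered))
```

With both fields set, only cluster2 and cluster1 are chosen even though cluster3 carries the matching label; the selector only takes effect once the clusters list is removed.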

If you need to distribute replicas unevenly across clusters, use ReplicaSchedulingPreference for proportional scheduling.

ReplicaSchedulingPreference defines a scheduling policy that includes several scheduling-related fields.

    apiVersion: scheduling.kubefed.io/v1alpha1
    kind: ReplicaSchedulingPreference
    metadata:
      name: test-deployment
      namespace: test-ns
    spec:
      targetKind: FederatedDeployment
      totalReplicas: 9
      clusters:
        A:
          minReplicas: 4
          maxReplicas: 6
          weight: 1
        B:
          minReplicas: 4
          maxReplicas: 8
          weight: 2

- totalReplicas defines the total number of replicas

- clusters describes the maximum and minimum replicas of different clusters and their weights

Kubefed maintains the number of replicas for different clusters according to the scheduling policy definition, as detailed in the documentation: (https://github.com/kubernetes-sigs/kubefed/blob/master/docs/userguide.md#replicaschedulingpreference).
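To get a feel for how totalReplicas, minReplicas, maxReplicas, and weight interact, here is a small Python model. It is a deliberately simple sketch and not KubeFed's actual planner: it first gives every cluster its minimum, then hands out the remaining replicas one at a time to the cluster that is furthest below its weighted share, never exceeding a cluster's maximum. The function name is hypothetical.

```python
def distribute(total, prefs):
    """Split `total` replicas across clusters by weight within min/max bounds (toy model)."""
    # Step 1: every cluster gets its minimum.
    alloc = {name: p.get("minReplicas", 0) for name, p in prefs.items()}
    remaining = total - sum(alloc.values())

    # Step 2: hand out what is left, one replica at a time.
    # If the minimums already exceed the total, nothing more is handed out.
    while remaining > 0:
        candidates = [name for name, p in prefs.items()
                      if p.get("weight", 0) > 0
                      and alloc[name] < p.get("maxReplicas", total)]
        if not candidates:
            break  # every cluster is at its maximum
        # The cluster with the lowest replicas-per-weight ratio is the most
        # under-served; ties are broken by name to keep the result stable.
        best = min(candidates, key=lambda n: (alloc[n] / prefs[n]["weight"], n))
        alloc[best] += 1
        remaining -= 1
    return alloc


prefs = {
    "A": {"minReplicas": 4, "maxReplicas": 6, "weight": 1},
    "B": {"minReplicas": 4, "maxReplicas": 8, "weight": 2},
}
plan = distribute(9, prefs)
print(plan)  # {'A': 4, 'B': 5}
```

With the values from the example above, the two minimums already account for 8 of the 9 replicas, so the weights only decide where the last replica goes. The real controller may resolve such cases differently, so treat the numbers as an illustration of the fields rather than a prediction.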

Networking

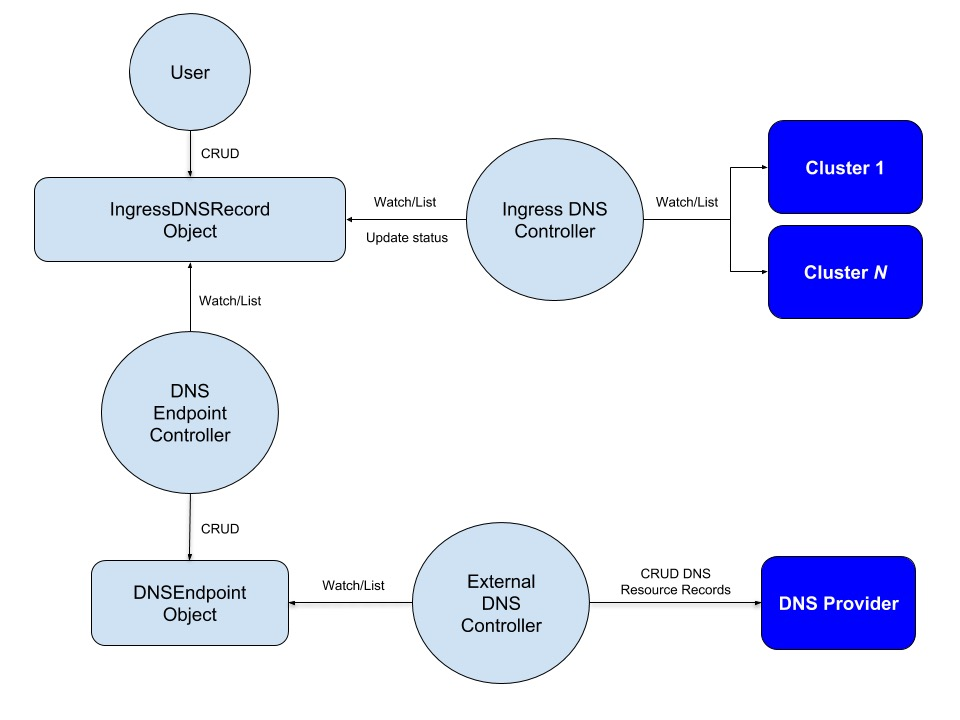

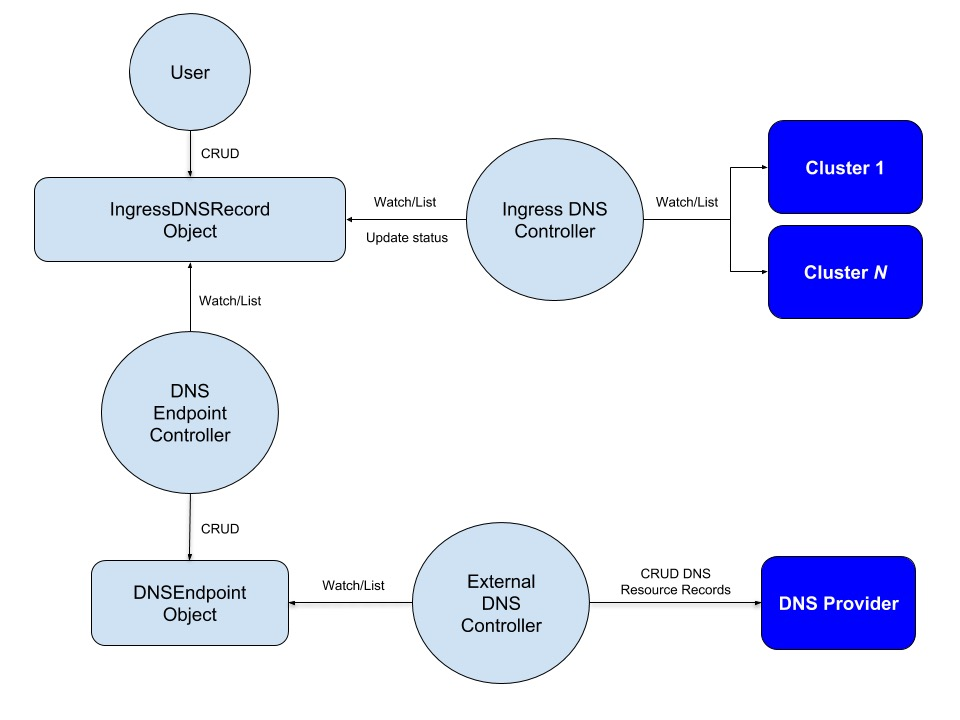

Another highlight of KubeFed is cross-cluster network access. KubeFed makes cross-cluster traffic configurable by combining an external DNS with the Ingress controller and an external load balancer such as MetalLB.

In the case of Ingress, for example, users can create a resource of type IngressDNSRecord and specify domain names; KubeFed then generates the corresponding DNS records from the IngressDNSRecord and applies them to the external DNS server.

Create a resource of type IngressDNSRecord:

    apiVersion: multiclusterdns.kubefed.io/v1alpha1
    kind: IngressDNSRecord
    metadata:
      name: test-ingress
      namespace: test-namespace
    spec:
      hosts:
      - ingress.example.com
      recordTTL: 300

The DNS Endpoint controller will generate the associated DNSEndpoint.

    $ kubectl -n test-namespace get dnsendpoints -o yaml
    apiVersion: v1
    items:
    - apiVersion: multiclusterdns.kubefed.io/v1alpha1
      kind: DNSEndpoint
      metadata:
        creationTimestamp: 2018-10-10T20:37:38Z
        generation: 1
        name: ingress-test-ingress
        namespace: test-namespace
        resourceVersion: "251874"
        selfLink: /apis/multiclusterdns.kubefed.io/v1alpha1/namespaces/test-namespace/dnsendpoints/ingress-test-ingress
        uid: 538d1063-cccc-11e8-bebb-42010a8a00b8
      spec:
        endpoints:
        - dnsName: ingress.example.com
          recordTTL: 300
          recordType: A
          targets:
          - $CLUSTER1_INGRESS_IP
          - $CLUSTER2_INGRESS_IP
      status: {}
    kind: List
    metadata:
      resourceVersion: ""
      selfLink: ""

The ExternalDNS controller watches DNSEndpoint resources and applies the records to the DNS server when it receives an event. If the internal DNS server of a member cluster uses the external DNS server as its upstream, workloads in that cluster can resolve the domain name directly, which enables cross-cluster access.
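The relationship between the IngressDNSRecord and the generated DNSEndpoint can be sketched as a plain data transformation. The Python below is only a model of the two YAML documents shown above, not the DNS Endpoint controller itself: the function name is hypothetical, the `ingress-` name prefix is taken from the sample output, and the IP addresses are placeholder values from the documentation range.

```python
def build_dns_endpoint(record, ingress_ips, record_type="A"):
    """Build a DNSEndpoint-shaped dict from an IngressDNSRecord (illustrative model).

    ingress_ips maps each member cluster name to the IP of its ingress.
    """
    meta = record["metadata"]
    # One target per member cluster, in a stable order.
    targets = [ingress_ips[name] for name in sorted(ingress_ips)]
    return {
        "apiVersion": "multiclusterdns.kubefed.io/v1alpha1",
        "kind": "DNSEndpoint",
        "metadata": {"name": "ingress-" + meta["name"],
                     "namespace": meta["namespace"]},
        "spec": {"endpoints": [
            {"dnsName": host,
             "recordTTL": record["spec"]["recordTTL"],
             "recordType": record_type,
             "targets": list(targets)}
            for host in record["spec"]["hosts"]
        ]},
    }


ingress_dns_record = {
    "apiVersion": "multiclusterdns.kubefed.io/v1alpha1",
    "kind": "IngressDNSRecord",
    "metadata": {"name": "test-ingress", "namespace": "test-namespace"},
    "spec": {"hosts": ["ingress.example.com"], "recordTTL": 300},
}

# Placeholder ingress IPs (documentation address range), one per cluster.
endpoint = build_dns_endpoint(
    ingress_dns_record,
    {"cluster1": "192.0.2.10", "cluster2": "192.0.2.20"})
print(endpoint["spec"]["endpoints"][0]["targets"])
```

Each host in the record becomes one DNS entry whose targets are the ingress addresses of all member clusters, which is what lets a single domain name resolve to any of them.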

Deployment

There is a full example in the official repository for experimentation, see: https://github.com/kubernetes-sigs/kubefed/tree/master/example

In addition to scheduling, Kubefed enables differential deployment between clusters via the overrides field.

    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedDeployment
    metadata:
      name: test-deployment
      namespace: test-namespace
    spec:
      template:
        metadata:
          labels:
            app: nginx
        spec:
          replicas: 3
          selector:
            matchLabels:
              app: nginx
          template:
            metadata:
              labels:
                app: nginx
            spec:
              containers:
              - image: nginx
                name: nginx
      placement:
        clusters:
        - name: cluster2
        - name: cluster1
      overrides:
      - clusterName: cluster2
        clusterOverrides:
        - path: "/spec/replicas"
          value: 5
        - path: "/spec/template/spec/containers/0/image"
          value: "nginx:1.17.0-alpine"
        - path: "/metadata/annotations"
          op: "add"
          value:
            foo: bar
        - path: "/metadata/annotations/foo"
          op: "remove"
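The overrides in this manifest read like a list of JSON-Patch-style operations applied to the template for one cluster. The Python sketch below models that reading under stated assumptions: the function names are hypothetical, an entry without `op` is treated as a replace (an assumption of this sketch), and list handling is simplified, so it should not be taken as KubeFed's implementation.

```python
import copy


def _walk(doc, tokens):
    """Follow path tokens through nested dicts and lists."""
    node = doc
    for tok in tokens:
        node = node[int(tok)] if isinstance(node, list) else node[tok]
    return node


def apply_overrides(template, cluster_overrides):
    """Return a copy of `template` with the overrides applied in order (toy model)."""
    result = copy.deepcopy(template)
    for item in cluster_overrides:
        tokens = item["path"].lstrip("/").split("/")
        parent = _walk(result, tokens[:-1])
        key = int(tokens[-1]) if isinstance(parent, list) else tokens[-1]
        op = item.get("op", "replace")  # assumption: missing op means replace
        if op == "remove":
            del parent[key]
        elif op in ("add", "replace"):
            # Simplification: on a list this overwrites the element instead
            # of inserting, unlike a full JSON Patch implementation.
            parent[key] = copy.deepcopy(item["value"])
        else:
            raise ValueError("unsupported op: " + op)
    return result


template = {
    "metadata": {"labels": {"app": "nginx"}},
    "spec": {
        "replicas": 3,
        "template": {"spec": {"containers": [{"image": "nginx", "name": "nginx"}]}},
    },
}
cluster2_overrides = [
    {"path": "/spec/replicas", "value": 5},
    {"path": "/spec/template/spec/containers/0/image", "value": "nginx:1.17.0-alpine"},
    {"path": "/metadata/annotations", "op": "add", "value": {"foo": "bar"}},
    {"path": "/metadata/annotations/foo", "op": "remove"},
]

cluster2_object = apply_overrides(template, cluster2_overrides)
print(cluster2_object["spec"]["replicas"])
```

Applied in order, the last two entries add an annotations map and then remove its only key, so in this model cluster2 ends up with an empty annotations map, while the template used for cluster1 is left untouched.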

After deploying the Deployment, you can check the deployment status via kubectl describe.

    $ kubectl describe federateddeployment.types.kubefed.io/test-deployment
    Name:         test-deployment
    Namespace:    default
    Labels:       <none>
    Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                    {"apiVersion":"types.kubefed.io/v1beta1","kind":"FederatedDeployment","metadata":{"annotations":{},"name":"test-deployment","namespace":"d...
    API Version:  types.kubefed.io/v1beta1
    Kind:         FederatedDeployment
    Metadata:
      Creation Timestamp:  2019-10-28T07:55:34Z
      Finalizers:
        kubefed.io/sync-controller
      Generation:        1
      Resource Version:  657714
      Self Link:         /apis/types.kubefed.io/v1beta1/namespaces/default/federateddeployments/test-deployment
      UID:               6016a3eb-7e7f-4756-ba40-b655581f06ad
    Spec:
      Overrides:
        Cluster Name:  cluster2
        Cluster Overrides:
          Path:   /spec/replicas
          Value:  5
          Path:   /spec/template/spec/containers/0/image
          Value:  nginx:1.17.0-alpine
          Op:     add
          Path:   /metadata/annotations
          Value:
            Foo:  bar
          Op:     remove
          Path:   /metadata/annotations/foo
      Placement:
        Clusters:
          Name:  cluster2
          Name:  cluster1
      Template:
        Metadata:
          Labels:
            App:  nginx
        Spec:
          Replicas:  3
          Selector:
            Match Labels:
              App:  nginx
          Template:
            Metadata:
              Labels:
                App:  nginx
            Spec:
              Containers:
                Image:  nginx
                Name:   nginx
    Status:
      Clusters:
        Name:  cluster1
        Name:  cluster2
      Conditions:
        Last Transition Time:  2019-10-28T07:55:35Z
        Last Update Time:      2019-10-28T07:55:49Z
        Status:                True
        Type:                  Propagation
    Events:
      Type    Reason           Age                From                            Message
      ----    ------           ----               ----                            -------
      Normal  CreateInCluster  14s                federateddeployment-controller  Creating Deployment "default/test-deployment" in cluster "cluster2"
      Normal  CreateInCluster  14s                federateddeployment-controller  Creating Deployment "default/test-deployment" in cluster "cluster1"
      Normal  UpdateInCluster  0s (x10 over 12s)  federateddeployment-controller  Updating Deployment "default/test-deployment" in cluster "cluster2"

The differences between the clusters are also visible:

    $ kubectl --context=cluster1 get deploy | grep test
    test-deployment   3/3   3   3   98s
    $ kubectl --context=cluster2 get deploy | grep test
    test-deployment   5/5   5   5   105s