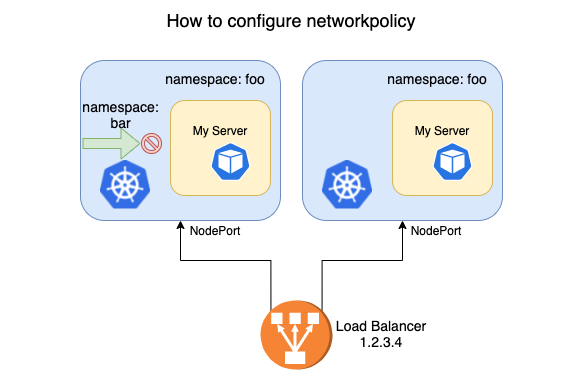

1. Background of requirements

As shown above, the business side needs to isolate the services in a namespace: workloads in the bar namespace must be denied access, while users must still be able to reach the service from the Load Balancer (LB) via NodePort. Writing a network policy for this looks straightforward.
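A first attempt might look like the sketch below. It is not the original manifest, so every concrete value is an assumption: the policy name, the `tekton-pipelines` namespace (borrowed from the service tested later in this article), and the LB address `192.0.2.10/32`, which is only a documentation placeholder.

```yaml
# Hypothetical sketch of a first-attempt policy, not the original manifest.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-lb-only            # assumed name
  namespace: tekton-pipelines    # assumed: namespace of the service under test
spec:
  podSelector: {}                # select every Pod in the namespace
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 192.0.2.10/32  # placeholder for the LB address
```

Once a Pod is selected by a policy with an Ingress rule, any source not listed is denied, so Pods in the bar namespace are blocked without a separate deny rule.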


However, with this policy in place, traffic arriving from the LB is blocked entirely, which is not the intended result. The usual answer found in the technical community is that Kubernetes NetworkPolicy mainly targets in-cluster traffic, and that external traffic no longer matches the policy once SNAT has rewritten its source IP.

The configuration varies between network plugins and the modes they run in. This article only offers one approach, using the common Calico IPIP mode as an example to configure an access policy for NodePort traffic.

2. Pre-requisite knowledge points

2.1 NetworkPolicy in Kubernetes

NetworkPolicy is a network isolation object in Kubernetes that describes network isolation policies and depends on network plugins for implementation. Currently, network plugins such as Calico, Cilium, and Weave Net support network isolation functionality.

2.2 Several modes of operation for Calico

- BGP mode

In BGP mode, the BGP clients in a cluster peer with each other pairwise (a full mesh) to synchronize routing information.

- Route Reflector mode

In BGP mode, each of the N nodes connects to the other N - 1 nodes, so the number of client connections grows on the order of N * (N - 1). This quadratic growth limits the size of the cluster, and the community recommends no more than 100 nodes.

In Route Reflector mode, BGP clients no longer synchronize routing information pairwise. Instead, they send it to a small number of designated Route Reflectors. Every BGP client only needs to connect to the Route Reflectors, so the number of connections grows linearly with the number of nodes.
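In Calico, this is typically set up by turning off the full mesh and adding a peering rule that points every node at the reflectors. The following is a minimal sketch rather than a complete setup: the resource name and the `route-reflector == 'true'` label are assumptions, and the reflector nodes themselves still need that label and a `routeReflectorClusterID`, which is not shown here.

```yaml
# Sketch only: apply with calicoctl; names and labels are assumed.
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  nodeToNodeMeshEnabled: false   # disable the pairwise full mesh
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: peer-with-route-reflectors   # assumed name
spec:
  nodeSelector: all()                        # every node ...
  peerSelector: route-reflector == 'true'    # ... peers only with nodes carrying this (assumed) label
```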

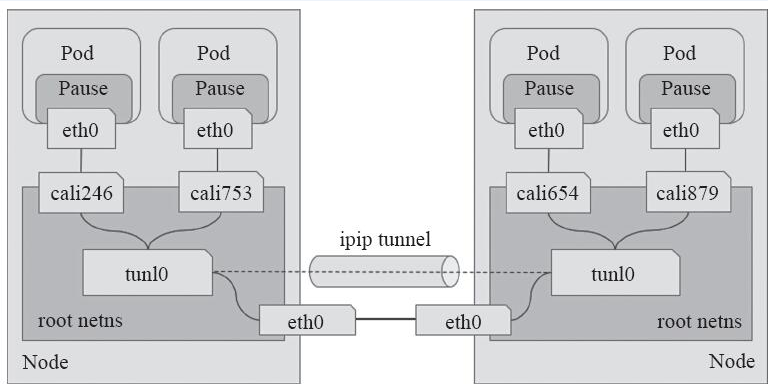

- IPIP Mode

Unlike BGP mode, IPIP mode establishes tunnels between nodes and carries Pod traffic through them. The following diagram depicts the traffic between Pods in IPIP mode.

3. Why the network policy does not work

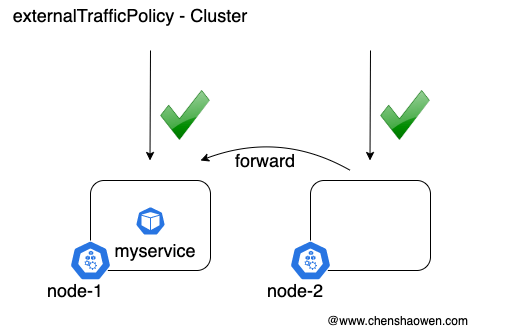

In Cluster mode (externalTrafficPolicy: Cluster), if node-2:nodeport is accessed, the traffic is forwarded to node-1, the node running the service Pod.

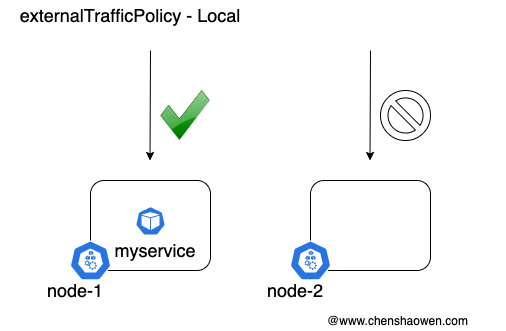

In Local mode (externalTrafficPolicy: Local), if node-2:nodeport is accessed, the traffic is not forwarded to another node, so the request gets no response.

Cluster mode is the default, and it performs SNAT (source address rewriting) when forwarding traffic. As a result, the request no longer matches the source rules in the network policy, which can be mistaken for the policy not working.

Here we try two solutions.

- Add the source address after SNAT to the access whitelist.

- Use Local mode. Since the LB performs health checks, it forwards traffic only to nodes that run the service Pod, which preserves the source address.
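The mode in the second solution is a field on the Service itself. A minimal sketch, assuming the `tekton-dashboard` service and the ports that appear in the test section of this article. The selector label is hypothetical and must match the real Pod labels.

```yaml
# Sketch only: the selector label is assumed; ports follow the test environment below.
apiVersion: v1
kind: Service
metadata:
  name: tekton-dashboard
  namespace: tekton-pipelines
spec:
  type: NodePort
  externalTrafficPolicy: Local   # the default is Cluster, which SNATs forwarded traffic
  selector:
    app: tekton-dashboard        # assumed label
  ports:
    - protocol: TCP
      port: 9097
      targetPort: 9097
      nodePort: 31602
```

With `Local`, a node without a ready service Pod does not answer on the NodePort, so this setting relies on the LB health check to steer traffic away from such nodes.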

4. NetworkPolicy configuration under NodePort

4.1 Test environment

- Kubernetes version

v1.19.8

- kube-proxy forwarding mode

IPVS

- Node information


- Test workload

The workload runs on node2

- The tested service

4.2 How NodePort traffic is forwarded to Pods

There are two main cases to consider here.

- Accessing node1, where no service Pod is running

- Service forwarding rules

```bash
ipvsadm -L

TCP  node1:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  node1:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  node1.cluster.local:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  node1:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  localhost:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
```

You can see that traffic accessing node1:31602 is forwarded to 10.233.96.32:9097, which is the IP address and port of the service Pod.

- IP routing rules

Next, look at the routing rules. Traffic to the 10.233.96.0/24 network segment is routed to tunl0, tunneled to node2, and then delivered to the service Pod.

- Accessing node2, where the service Pod is running

- Service forwarding rules

```bash
ipvsadm -L

TCP  node2:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  node2:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  node2.cluster.local:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          1
TCP  node2:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
TCP  localhost:31602 rr
  -> 10.233.96.32:9097            Masq    1      0          0
```

As with node1, access to the NodePort service on node2 is forwarded to the IP address and port of the service Pod.

- IP routing rules

But the routing rules are different. Packets destined for 10.233.96.32 are sent to cali73daeaf4b12, which forms a veth pair with the NIC inside the Pod, so the traffic goes directly to the service Pod.

The output above shows that when the accessed node runs no service Pod, the traffic is forwarded through tunl0; when the accessed node does run the Pod, the traffic is routed directly to the Pod without going through tunl0.

4.3 Option 1, add tunl0 to the network policy whitelist

- Check the tunl0 information of each node

node1

node2

node3

- Network policy configuration


- Test verification

Does not meet expectations. All traffic passing through tunl0 is allowed. Workloads in the bar namespace (other than those running on node2) can still reach the service through node1:31602, node3:31602, or tekton-dashboard.tekton-pipelines.svc:9097, so this traffic cannot be restricted.
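For reference, a whitelist of this kind could be sketched as follows. The three addresses are placeholders, not the values from the test cluster; replace them with whatever `ip addr show tunl0` reports on each node. The policy name and the Pod label are also assumptions.

```yaml
# Sketch of the Option 1 whitelist; all addresses below are hypothetical.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-tunl0         # assumed name
  namespace: tekton-pipelines
spec:
  podSelector:
    matchLabels:
      app: tekton-dashboard      # assumed label
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 10.233.90.0/32   # placeholder: tunl0 address of node1
        - ipBlock:
            cidr: 10.233.96.0/32   # placeholder: tunl0 address of node2
        - ipBlock:
            cidr: 10.233.92.0/32   # placeholder: tunl0 address of node3
```

The weakness is visible in the rule itself: any request that crosses a node boundary arrives with a tunl0 source address, regardless of which namespace or external client sent it.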

4.4 Option 2, using Local mode

- Set the Service’s externalTrafficPolicy to Local

- Deny all ingress traffic

- Add an access whitelist

- Test verification

Meets expectations. With the network policy above, access from the bar namespace is blocked while external access to the NodePort through the LB is allowed, which satisfies the business requirements.
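The deny-then-whitelist steps of this option can be sketched as two policies. This is an illustration under stated assumptions, not the exact manifests used in the test: the policy names, the Pod label, and the LB address `192.0.2.10/32` are all hypothetical.

```yaml
# Step 1: deny all ingress to every Pod in the namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress     # assumed name
  namespace: tekton-pipelines
spec:
  podSelector: {}
  policyTypes:
    - Ingress
---
# Step 2: whitelist the LB for the service port only.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-from-lb            # assumed name
  namespace: tekton-pipelines
spec:
  podSelector:
    matchLabels:
      app: tekton-dashboard      # assumed label
  policyTypes:
    - Ingress
  ingress:
    - from:
        - ipBlock:
            cidr: 192.0.2.10/32  # placeholder for the LB address
      ports:
        - protocol: TCP
          port: 9097             # Pod port, not the NodePort
```

Because Local mode keeps the original source address, the `ipBlock` rule now matches the traffic arriving from the LB, while Pods in the bar namespace match no rule and stay blocked.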

5. Summary

Networking is a relatively difficult part of Kubernetes to master, yet it is one of the aspects with the largest and most far-reaching impact on the business. It is therefore worthwhile to spend a little more time on networking.

This article starts from a business requirement and walks through Calico’s network modes to address the problem that SNAT changes the source IP, which causes the NetworkPolicy to behave unexpectedly.

In Calico’s IPIP mode, an access policy for NodePort requires the externalTrafficPolicy: Local forwarding mode, combined with the network policy best practice of denying all traffic first and then adding whitelist rules.