1. The advantages of Tekton for multi-cluster builds

Because it runs on Kubernetes, Tekton is already highly resilient and can support large-scale builds. At the same time, its tasks are developed mainly in YAML and Shell, which makes Tekton adaptable to a wide range of scenarios.

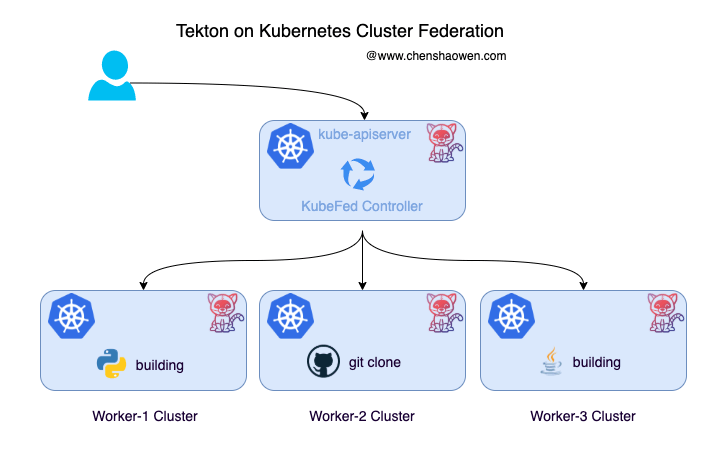

The diagram above shows Tekton running across multiple clusters. Why does Tekton need to execute pipelines across multiple clusters?

- Clusters can be changed at any time. A single Kubernetes cluster cannot meet operations and maintenance requirements, because it cannot be taken down or changed whenever needed. With multiple clusters, some of them can be taken down for maintenance while the rest keep running builds.

- CI consumes a lot of CPU, memory and IO, and can easily overwhelm nodes or even whole clusters. Multiple clusters spread the load and improve availability.

- Business isolation. Different businesses have different requirements for code security level, build speed and build environment. Multiple clusters can provide isolated environments and customized pipeline services.

2. Kubernetes Cluster Federation

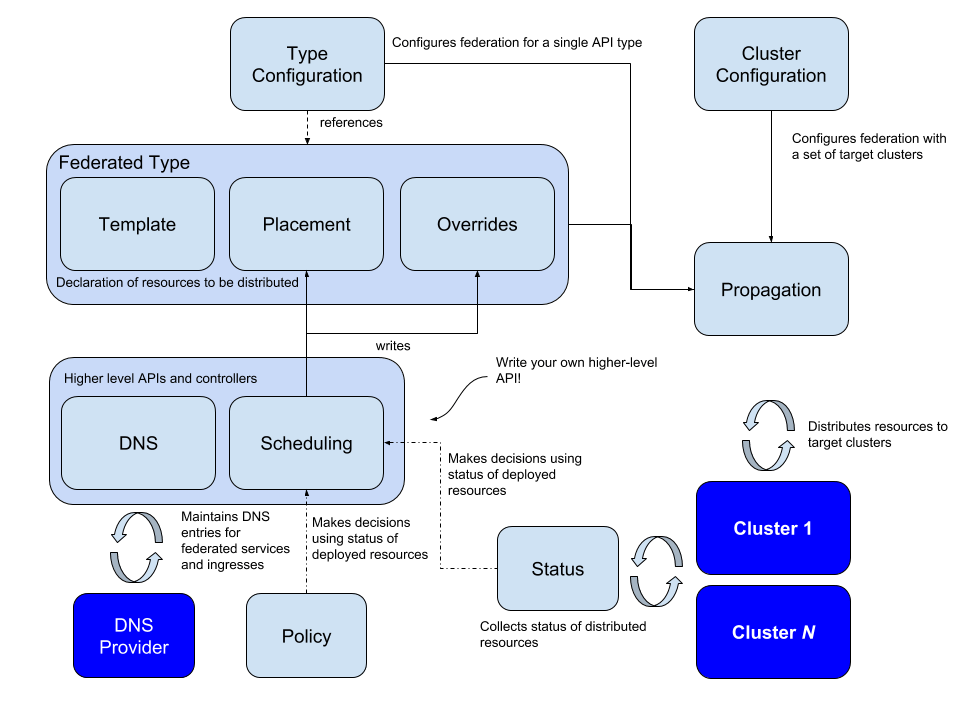

Kubernetes Cluster Federation, KubeFed for short, extends Kubernetes with a set of CRDs. The KubeFed controller manages these CRDs and implements features such as synchronizing and orchestrating resources across clusters, in a modular and customizable way. The diagram above shows the community’s architecture.

KubeFed is configured with two types of information:

- Type configuration, which declares which API types KubeFed handles

- Cluster configuration, which declares which clusters KubeFed manages

Type configuration is built on three basic concepts:

- Template, which defines the resource as it should be created in each cluster

- Placement, which defines the clusters the resource is distributed to

- Overrides, which define per-cluster field values that override the Template

More advanced functionality is provided by Status, Policy and Scheduling:

- Status collects the status of the distributed resources from each cluster

- Policy controls which clusters resources may be allocated to

- Scheduling allows resource replicas to be moved across clusters

KubeFed also provides MultiClusterDNS, which can be used for service discovery across multiple clusters.

3. Federating Kubernetes Clusters

3.1 Preparing the clusters and configuring the contexts

Two clusters are deployed here: dev1 is the main cluster, which acts as Tekton’s control plane and does not run pipeline tasks; dev2 is a subcluster that executes Tekton pipeline tasks.

- Prepare the two clusters: the main cluster dev1 and the subcluster dev2.

- On the main cluster, configure the contexts of all clusters; these contexts are used to add the subclusters. This requires that the API server of every cluster is reachable directly over the network from the main cluster.

Context names must not contain special characters such as `@`, otherwise joining the cluster fails with an error: the name is used to create a Secret, so it must conform to Kubernetes naming conventions.

Put the kubeconfig of the main cluster dev1 in `~/.kube/config-1` and rename its cluster, user and context entries to dev1. Put the kubeconfig of the subcluster dev2 in `~/.kube/config-2` and rename its entries to dev2 in the same way.
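As a minimal sketch, assuming the cluster, user and context entries are all renamed to dev2, `~/.kube/config-2` could look like the following. The server address and the base64 placeholders are hypothetical and stand in for the values of your own cluster.

```yaml
# ~/.kube/config-2 for the subcluster (server address and credentials are placeholders)
apiVersion: v1
kind: Config
clusters:
- name: dev2
  cluster:
    server: https://dev2.example.com:6443
    certificate-authority-data: <base64-encoded-ca>
users:
- name: dev2
  user:
    client-certificate-data: <base64-encoded-client-cert>
    client-key-data: <base64-encoded-client-key>
contexts:
- name: dev2            # plain name without "@", because it ends up in a Secret name
  context:
    cluster: dev2
    user: dev2
current-context: dev2
```

Giving the cluster and user entries unique names matters as well: when kubeconfig files are merged, entries with the same name collide and only one of them is kept.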

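- Merge the kubeconfig files, for example with `KUBECONFIG=$HOME/.kube/config-1:$HOME/.kube/config-2 kubectl config view --flatten > $HOME/.kube/config`.

- View the added cluster contexts, for example with `kubectl config get-contexts`.

- Switch to the main cluster dev1 with `kubectl config use-context dev1`.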

3.2 Installing KubeFed on the main cluster

Install KubeFed with Helm into the `kube-federation-system` namespace.

Check that the KubeFed workloads are running:

```shell
kubectl get deploy,pod -n kube-federation-system
NAME                                         READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kubefed-admission-webhook    1/1     1            1           95s
deployment.apps/kubefed-controller-manager   2/2     2            2           95s

NAME                                              READY   STATUS    RESTARTS   AGE
pod/kubefed-admission-webhook-598bd776c6-gv4qh    1/1     Running   0          95s
pod/kubefed-controller-manager-6d9bf98d74-n8kjz   1/1     Running   0          17s
pod/kubefed-controller-manager-6d9bf98d74-nmb2j   1/1     Running   0          14s
```

3.3 Installing kubefedctl on the main cluster

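Download the `kubefedctl` binary from the KubeFed GitHub releases page, choosing the release that matches the installed KubeFed version, and put it in a directory on the `PATH` of the main cluster.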

3.4 Adding a cluster

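On the main cluster, add both dev1 and dev2 to the federation hosted by dev1 by running `kubefedctl join` once per cluster, for example `kubefedctl join dev2 --cluster-context dev2 --host-cluster-context dev1 --v=2`.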

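Then view the list of member clusters with `kubectl -n kube-federation-system get kubefedclusters`; both dev1 and dev2 should be listed as ready.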
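`kubefedctl join` records each member cluster as a KubeFedCluster object in the `kube-federation-system` namespace of the host cluster, which can be inspected with `kubectl -n kube-federation-system get kubefedcluster dev2 -o yaml`. A rough sketch of that object for dev2 follows; the API endpoint, CA bundle and Secret name are placeholders, and `status` is written by the KubeFed controller rather than by hand.

```yaml
apiVersion: core.kubefed.io/v1beta1
kind: KubeFedCluster
metadata:
  name: dev2                                   # the name passed to kubefedctl join
  namespace: kube-federation-system
spec:
  apiEndpoint: https://dev2.example.com:6443   # placeholder API server address
  caBundle: <base64-encoded-ca>                # placeholder CA bundle
  secretRef:
    name: dev2-xxxxx                           # placeholder; Secret with the member cluster's credentials
status:
  conditions:
  - type: Ready                                # reported by the controller
    status: "True"
```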

3.5 Testing whether the clusters are federated successfully

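- View federated resources

After KubeFed is installed, many common resource types are already federated. Their federated counterparts appear among the CRDs in the `types.kubefed.io` group, for example `federateddeployments.types.kubefed.io`.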

The `federatedtypeconfigs` in the `kube-federation-system` namespace also show which resource types have been federated.

- Create a federated Namespace

Namespaced resources must be placed in a federated Namespace; otherwise the KubeFed controller reports an error when distributing them.
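A minimal sketch, assuming a hypothetical namespace named `testing-fed`: the ordinary Namespace is created on the main cluster, and a FederatedNamespace with the same name, placed inside that namespace, asks KubeFed to create it on the listed member clusters.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: testing-fed          # hypothetical namespace name
---
apiVersion: types.kubefed.io/v1beta1
kind: FederatedNamespace
metadata:
  name: testing-fed
  namespace: testing-fed     # a FederatedNamespace lives in the namespace it federates
spec:
  placement:
    clusters:                # member clusters that should get this namespace
    - name: dev1
    - name: dev2
```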

- Create a federated Deployment on the main cluster

An ordinary Deployment looks like this.
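For illustration, assume a hypothetical nginx Deployment in a hypothetical `testing-fed` namespace:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx                # hypothetical example workload
  namespace: testing-fed     # hypothetical namespace
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
```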

The corresponding federated Deployment looks like this.
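A sketch of a federated version of a hypothetical nginx Deployment in a hypothetical `testing-fed` namespace: `template` carries the Deployment’s metadata and spec, `placement` lists dev1 and dev2, and an override raises the replica count on dev2 only. The names, namespace and replica values are illustrative assumptions.

```yaml
apiVersion: types.kubefed.io/v1beta1
kind: FederatedDeployment
metadata:
  name: nginx
  namespace: testing-fed
spec:
  template:                  # what each member cluster receives as a Deployment
    metadata:
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
  placement:                 # which member clusters get the Deployment
    clusters:
    - name: dev1
    - name: dev2
  overrides:                 # per-cluster changes applied on top of template
  - clusterName: dev2
    clusterOverrides:
    - path: "/spec/replicas"
      value: 2
```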

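A FederatedDeployment is built around three fields: `template`, which holds the Deployment to distribute; `placement`, which selects the member clusters; and `overrides`, which changes individual fields per cluster.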

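- Verify that the resources are distributed successfully

Check on both the dev1 cluster and the dev2 cluster that the Deployment has been created, for example with `kubectl --context dev2 get deployments -n <namespace>`.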

4. Federating Tekton’s CRD resources

4.1 Installing Tekton

Tekton needs to be installed on all clusters.

Because the Tekton community publishes its images on gcr.io, some hosts may not be able to pull them. I mirrored the images to Docker Hub; the corresponding YAML is available at https://github.com/shaowenchen/image-syncer/tree/main/tekton.

4.2 Federating Tekton’s CRDs

Installing KubeFed federates common types such as Deployment and Secret by default, but user-defined CRDs have to be enabled manually.

Enable federation for the Tekton CRDs with `kubefedctl enable`, once per CRD.

Taking taskruns as an example, `kubefedctl enable taskruns.tekton.dev` automatically creates two resources:

- `customresourcedefinition.apiextensions.k8s.io/federatedtaskruns.types.kubefed.io`: the federated CRD, `federatedtaskruns`

- `federatedtypeconfig.core.kubefed.io/taskruns.tekton.dev`: a FederatedTypeConfig named `taskruns.tekton.dev` in the `kube-federation-system` namespace, which enables distribution of TaskRun resources
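The FederatedTypeConfig created for taskruns should look roughly like the sketch below. It assumes Tekton 0.24.1, where TaskRun is served as `tekton.dev/v1beta1`, and the default `types.kubefed.io/v1beta1` federated API; compare it with the real object from `kubectl -n kube-federation-system get federatedtypeconfig taskruns.tekton.dev -o yaml`.

```yaml
apiVersion: core.kubefed.io/v1beta1
kind: FederatedTypeConfig
metadata:
  name: taskruns.tekton.dev
  namespace: kube-federation-system
spec:
  federatedType:             # the new federated CRD created on the main cluster
    group: types.kubefed.io
    kind: FederatedTaskRun
    pluralName: federatedtaskruns
    scope: Namespaced
    version: v1beta1
  propagation: Enabled       # lets the controller push TaskRuns to member clusters
  targetType:                # the Tekton type created on the member clusters
    group: tekton.dev
    kind: TaskRun
    pluralName: taskruns
    scope: Namespaced
    version: v1beta1
```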

4.3 Editing the newly created federated CRDs to add fields

If this step is skipped, the CRs synchronized to the subcluster have empty content. This is because the federated CRD generated by `kubefedctl enable` does not define the `template` field in its schema, so the template content is not preserved.

Edit each federated Tekton CRD, for example with `kubectl edit crd federatedtaskruns.types.kubefed.io`.

In the schema, add a `template` property at the same level as `overrides` and `placement`.
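A minimal sketch of the change, assuming the federated CRD is served as `apiextensions.k8s.io/v1` with a structural schema. Marking `template` with `x-kubernetes-preserve-unknown-fields: true` keeps the API server from pruning the TaskRun fields inside it. Only the relevant part of the CRD is shown, and it is not necessarily identical to the modified CRDs in the demo repository.

```yaml
# Fragment of federatedtaskruns.types.kubefed.io; everything not shown stays as generated
spec:
  versions:
  - name: v1beta1
    schema:
      openAPIV3Schema:
        type: object
        properties:
          spec:
            type: object
            properties:
              # overrides and placement are generated by kubefedctl enable and
              # stay unchanged; template is added next to them.
              template:
                type: object
                x-kubernetes-preserve-unknown-fields: true
```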

If this is not clear enough, refer to the modified CRDs at https://github.com/shaowenchen/demo/tree/master/tekton-0.24.1-kubefed. If you are also using Tekton 0.24.1, you can apply those CRDs directly with `kubectl apply`.

4.4 Testing the distribution of Tekton objects across clusters

To avoid pasting a lot of YAML, the Task is created directly on the subcluster in advance instead of being distributed with a FederatedTask.

- Create the Task on the subcluster dev2
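A minimal Task for the test, assuming the hypothetical name `echo-hello` and a hypothetical federated namespace `testing-fed`; it is applied directly on dev2, for example with `kubectl --context dev2 apply -f task.yaml`.

```yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: echo-hello           # hypothetical Task name
  namespace: testing-fed     # hypothetical; must be a federated namespace present on dev2
spec:
  steps:
  - name: echo
    image: alpine            # any image with /bin/sh works
    script: |
      #!/bin/sh
      echo "Hello from the subcluster"
```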

- Create a FederatedTaskRun on the main cluster dev1 to distribute the TaskRun to the subcluster dev2
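A sketch of the FederatedTaskRun, assuming the `types.kubefed.io/v1beta1` API generated by `kubefedctl enable`, the `template` field added in 4.3, and a hypothetical Task named `echo-hello` in a hypothetical `testing-fed` namespace on dev2. Placement lists only dev2, so dev1 keeps its role as the control plane and runs no pipelines.

```yaml
apiVersion: types.kubefed.io/v1beta1
kind: FederatedTaskRun
metadata:
  name: echo-hello-run       # hypothetical name; the TaskRun on dev2 gets the same name
  namespace: testing-fed
spec:
  template:                  # becomes the TaskRun created on the member cluster
    spec:
      taskRef:
        name: echo-hello     # hypothetical Task created on dev2 beforehand
  placement:
    clusters:
    - name: dev2             # only the subcluster executes the run
```

After this is applied on dev1, a TaskRun with the same name should appear on dev2, for example in the output of `kubectl --context dev2 -n testing-fed get taskruns`.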

- View the resulting TaskRun on the subcluster dev2

5. Summary

This article introduced KubeFed for managing multiple clusters and put it into practice by federating Tekton’s CRD resources.

Running Tekton across multiple clusters, with the main cluster managing resources and the subclusters executing pipelines, balances the load effectively, increases the number of pipelines that can run concurrently, and improves the maintainability of the CI/CD system.

Here KubeFed is mainly used to store and distribute Tekton objects, and it is well suited to this kind of cross-cluster resource distribution.