In the previous note, while introducing network devices, we covered the basic principle of a VPN built on TUN/TAP devices, but we did not try out how TUN/TAP virtual network devices actually work in Linux. In this note we look at how the IPIP tunnel, a very typical building block in cloud computing, is implemented through TUN devices.

IPIP Tunneling

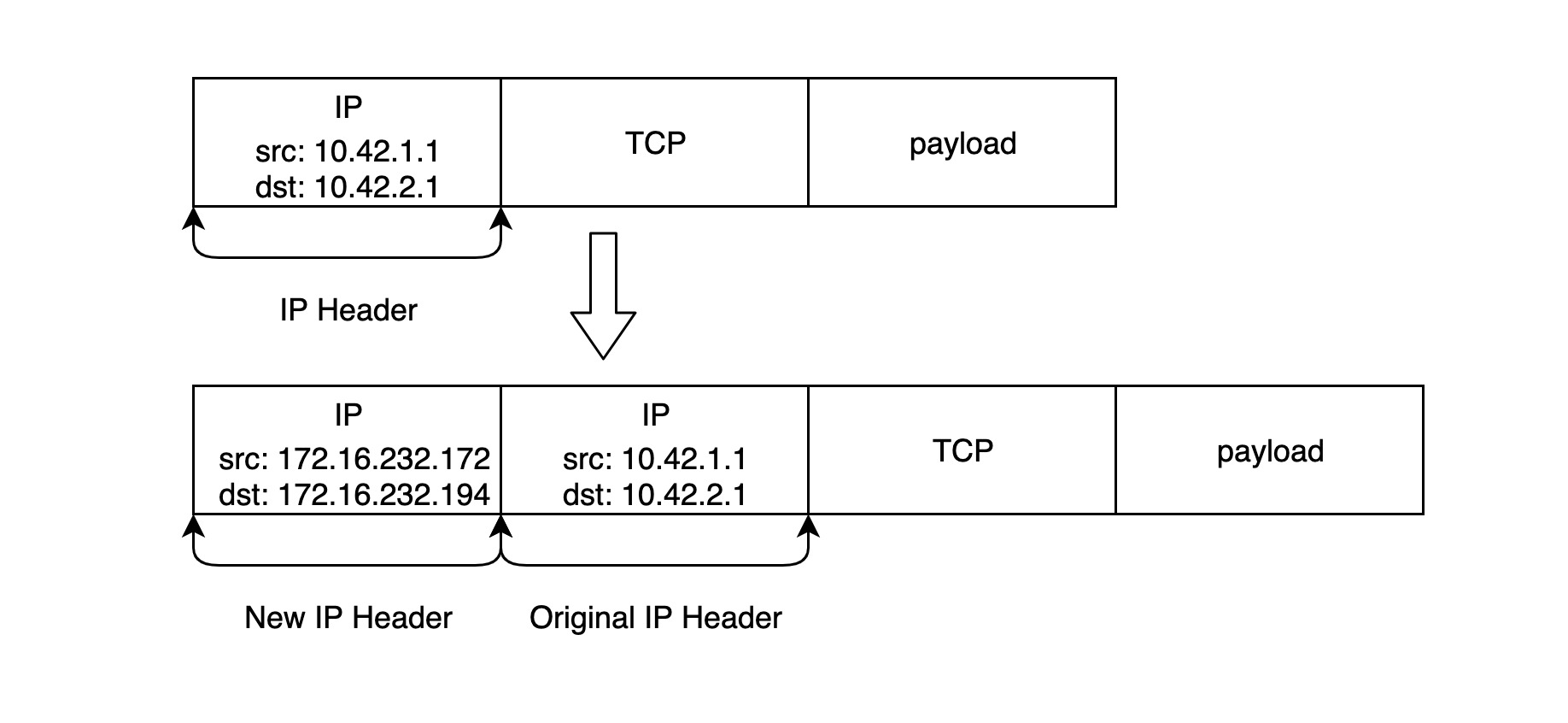

As mentioned in the previous note, a TUN network device can encapsulate one Layer 3 (IP) packet inside another Layer 3 packet, so a packet sent out through the TUN device carries an outer IP header wrapped around the original inner IP packet.

This is the structure of a typical IPIP tunnel packet. Linux natively supports several types of IPIP tunnels, all of which rely on TUN network devices; the command ip tunnel help lists the tunnel types and the operations available on them.

In the help output, mode selects the tunnel type. Linux natively supports five types of IPIP tunnels:

- ipip: Common IPIP tunneling, which encapsulates an IPv4 packet inside another IPv4 packet

- gre: Generic Routing Encapsulation, which defines a mechanism for encapsulating one network layer protocol inside another, so it works for both IPv4 and IPv6

- sit: mainly used to carry IPv6 packets inside IPv4 packets, i.e. IPv6 over IPv4

- isatap: Intra-Site Automatic Tunnel Addressing Protocol, similar to sit, is also used for IPv6 tunnel encapsulation

- vti: Virtual Tunnel Interface, an IPsec tunneling technology

Some other useful parameters:

- ttl N: sets the TTL of tunneled packets to N (N is a number between 1 and 255; 0 is a special value meaning the TTL is inherited). The default is inherit.

- tos T / dsfield T: sets the TOS field of tunneled packets. The default is inherit.

- [no]pmtudisc: disables or enables Path MTU Discovery on this tunnel. The default is enabled.

Note: The nopmtudisc option is not compatible with a fixed ttl; if a fixed ttl is used, the system turns Path MTU Discovery on.
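
To see how these options fit onto one command line, here is a minimal sketch that only prints an `ip tunnel add` invocation instead of running it. The helper name `tunnel_add_cmd`, the device name `ipip-demo` and both 172.16.0.x addresses are hypothetical placeholders, not values from this note.

```shell
#!/bin/sh
# Print (do not execute) an `ip tunnel add` command that uses the options above.
# The device name and addresses passed in below are hypothetical placeholders.
tunnel_add_cmd() {
  name=$1 local_ip=$2 remote_ip=$3 ttl=${4:-inherit}
  if [ "$ttl" = inherit ]; then
    # With an inherited TTL, Path MTU Discovery can be switched off explicitly.
    pmtu=nopmtudisc
  else
    # A fixed TTL is not compatible with nopmtudisc, so keep discovery on.
    pmtu=pmtudisc
  fi
  echo "ip tunnel add $name mode ipip local $local_ip remote $remote_ip ttl $ttl tos inherit $pmtu"
}

tunnel_add_cmd ipip-demo 172.16.0.10 172.16.0.11      # inherited TTL
tunnel_add_cmd ipip-demo 172.16.0.10 172.16.0.11 64   # fixed TTL of 64
```

The printed lines can be reviewed and then run as root; the branch on the TTL mirrors the compatibility note above.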

one-to-one

Let’s start with the most basic mode, a one-to-one IPIP tunnel, to show how to build IPIP tunnels in Linux so that two different subnets can communicate.

Before you start, note that not all Linux distributions load the ipip.ko module by default. You can check whether the module is loaded with lsmod | grep ipip; if it is not, load it first with modprobe ipip. If everything is fine, the output should look like this.

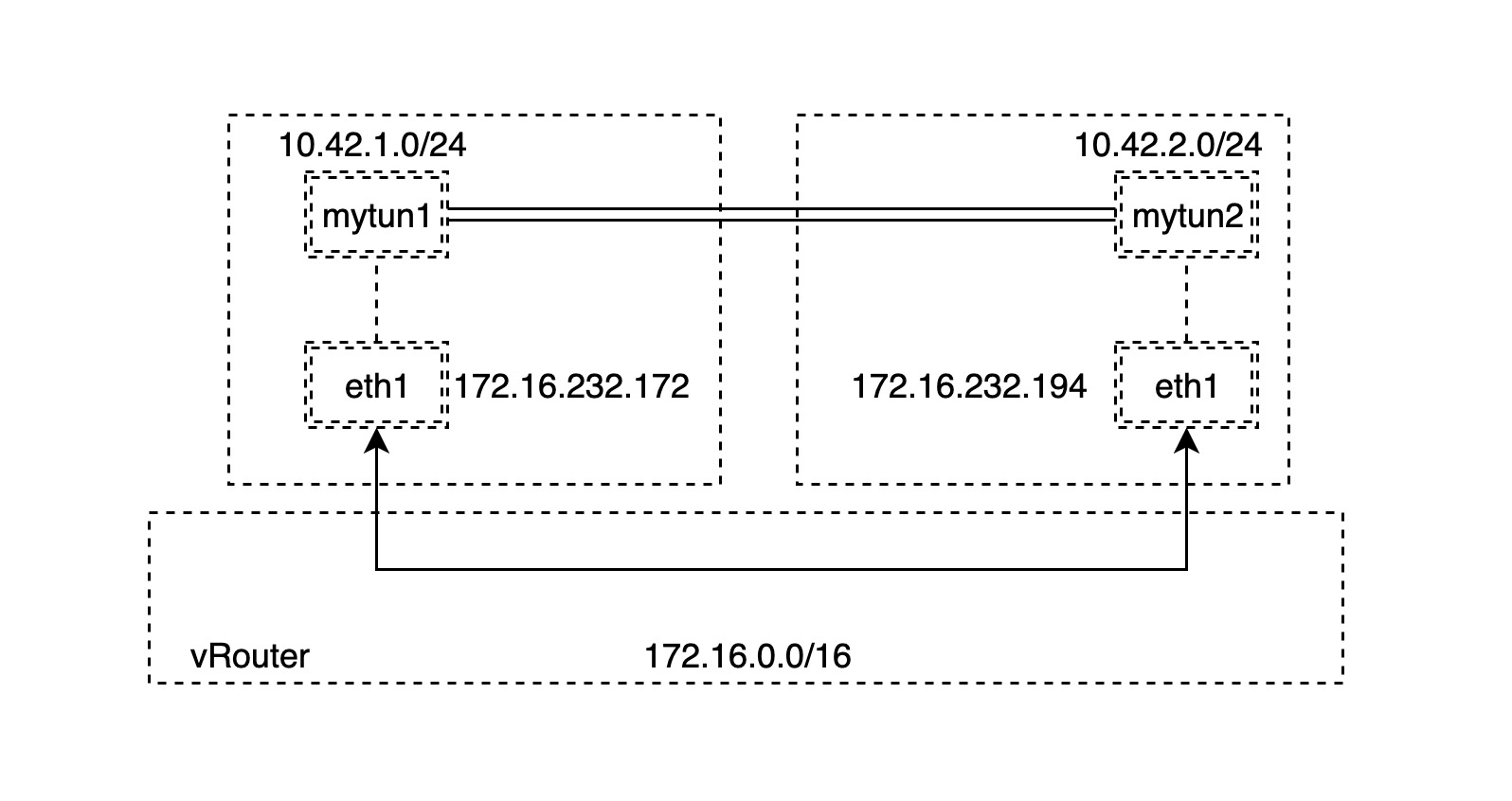
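
The check can also be scripted. The sketch below reads a /proc/modules-style listing and prints the modprobe command rather than executing it, since loading a module needs root. The helper name `module_loaded` is our own, and a kernel with ipip built in (not built as a module) would not appear in the listing.

```shell
#!/bin/sh
# Report whether a kernel module appears in a /proc/modules-style listing.
# $1 = module name, $2 = listing file (defaults to /proc/modules).
module_loaded() {
  grep -q "^$1 " "${2:-/proc/modules}" 2>/dev/null
}

if module_loaded ipip; then
  echo "ipip is already loaded"
else
  # Loading needs root, so the command is printed rather than executed.
  echo "ipip not loaded; run as root: modprobe ipip"
fi
```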

Now it’s time to start building the IPIP tunnel. Our network topology is shown in the following figure.

Hosts A and B are in the same network segment, 172.16.0.0/16, so they can reach each other directly. What we need to do is create a different subnet on each of the two hosts.

Note: Hosts A and B do not actually need to be in the same subnet. It is enough that they are in the same Layer 3 network, that is, that they can route to each other, for the IPIP tunnel to work.

To simplify things, we first create the bridge network device mybr0 on node A and set the IP address to the gateway address of the 10.42.1.0/24 subnet, then enable mybr0.

A similar operation is then performed on node B.

B:

Next, on each of nodes A and B, we:

- create the corresponding TUN network device

- set its local and remote addresses to the routable addresses of the two nodes

- assign the subnet's gateway address to the TUN network device we just created

- enable the TUN network device, which brings up the IPIP tunnel

Note: Step 3 assigns the gateway address directly to the TUN device, so we do not need to create an additional network device for the subnet.

A:

The above command creates a new tunnel device tunl0 and sets the remote and local IP addresses of the tunnel, which are the outer addresses of the IPIP packets; for the inner addresses, we set two separate subnet addresses, so that the IPIP packets will look as shown below.

B:
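
As a rough sketch of the four steps for both nodes, the script below prints the commands rather than running them. The underlay addresses 172.16.0.10 (A) and 172.16.0.11 (B) are hypothetical picks inside 172.16.0.0/16, and 10.42.2.0/24 as B's subnet and .1 as each gateway are assumptions; only 10.42.1.0/24 for A is stated in this note. If the ipip module has already registered its fallback device under the name tunl0, `ip tunnel add` may refuse that name, in which case a different device name is needed.

```shell
#!/bin/sh
# Print the tunnel setup commands for one node; review, then run them as root.
# $1 = local underlay IP, $2 = remote underlay IP, $3 = gateway address/prefix.
ipip_node_cmds() {
  cat <<EOF
ip tunnel add tunl0 mode ipip remote $2 local $1
ip addr add $3 dev tunl0
ip link set tunl0 up
EOF
}

# Hypothetical underlay addresses; B's subnet 10.42.2.0/24 is an assumption.
echo "# node A"; ipip_node_cmds 172.16.0.10 172.16.0.11 10.42.1.1/24
echo "# node B"; ipip_node_cmds 172.16.0.11 172.16.0.10 10.42.2.1/24
```

The local and remote arguments swap between the two nodes, which is what makes the pair of devices form one tunnel.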

To make sure that traffic between the subnets on the two hosts goes through the IPIP tunnel we created, we need to add the following static routes manually.

A:

B:
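
A minimal sketch of what such routes could look like, printed rather than applied. It assumes B's subnet is 10.42.2.0/24 (this note only states 10.42.1.0/24 for A) and that the tunnel device is tunl0; `tunnel_route_cmd` is a helper name of our own.

```shell
#!/bin/sh
# Print the static route that sends a peer subnet into the tunnel device.
# $1 = peer subnet in CIDR form, $2 = tunnel device (defaults to tunl0).
tunnel_route_cmd() {
  echo "ip route add $1 dev ${2:-tunl0}"
}

echo "# node A"; tunnel_route_cmd 10.42.2.0/24   # B's subnet (assumed)
echo "# node B"; tunnel_route_cmd 10.42.1.0/24   # A's subnet
```

After applying them as root, `ip route show` on each host should list the peer subnet with tunl0 as its device.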

The routing tables on hosts A and B now look like this.

A:

B:

At this point we can start verifying that the IPIP tunnel is working properly:

A:

B:

Yes, the pings get through. We can also capture the traffic on the TUN device with tcpdump.
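
The verification could be repeated along these lines. The sketch prints a ping toward the peer's gateway and a capture on the tunnel device, meant to run in two terminals; the peer gateway 10.42.2.1 is an assumption and `verify_cmds` is a helper name of our own.

```shell
#!/bin/sh
# Print a ping toward the peer gateway and a capture on the tunnel device.
# $1 = peer gateway address, $2 = tunnel device (defaults to tunl0).
verify_cmds() {
  cat <<EOF
ping -c 3 $1
tcpdump -n -i ${2:-tunl0} icmp
EOF
}

echo "# on node A, peer gateway 10.42.2.1 (assumed)"
verify_cmds 10.42.2.1
```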

At this point the experiment has succeeded. Note, however, that in gre mode we may need to configure the firewall to allow the two subnets to reach each other, which is more common when building IPv6 tunnels.

one-to-many

In the previous section, we created a one-to-one IPIP tunnel by specifying the local and remote addresses of the TUN device. In fact, an IPIP tunnel can be created without specifying the remote address at all: we only need to add the corresponding routes on the TUN device, and the tunnel then knows from each route's next hop how to encapsulate a packet and where to deliver it.

To illustrate, suppose we now have three nodes in the same Layer 3 network.

Each of the three nodes also hosts its own subnet.

Unlike the previous subsection, instead of directly setting the gateway address of the subnet to the IP address of the TUN device, we create an additional bridge network device to simulate the actual common container network model. We create the bridge network device mybr0 on node A and set the IP address to the gateway address of the 10.42.1.0/24 subnet, and then enable mybr0.

A similar operation is then performed on nodes B and C.

B:

C:
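
The bridge setup on the three nodes can be sketched as follows, again printing the commands instead of running them. Only 10.42.1.0/24 for node A comes from this note; 10.42.2.0/24 for B, 10.42.3.0/24 for C and .1 as each gateway are assumptions, and `bridge_cmds` is a helper name of our own.

```shell
#!/bin/sh
# Print the bridge setup for node number $1, using 10.42.<n>.1/24 as the gateway.
# Subnets 10.42.2.0/24 (B) and 10.42.3.0/24 (C) are assumptions.
bridge_cmds() {
  cat <<EOF
ip link add name mybr0 type bridge
ip addr add 10.42.$1.1/24 dev mybr0
ip link set dev mybr0 up
EOF
}

n=1
for node in A B C; do
  echo "# node $node"
  bridge_cmds "$n"
  n=$((n + 1))
done
```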

Our ultimate goal is to build IPIP tunnels between each pair of the three nodes so that the three subnets can communicate directly with each other, so the next step is to create the TUN network device and set up the routing information. On each of nodes A, B and C, we:

- create the corresponding TUN network device and enable it

- set the IP address of the TUN network device

- set the routes to the other subnets and specify the next hop address

Note: The IP address of the TUN network device is the subnet address of the corresponding node, but with a 32-bit mask. For example, the subnet on node A is 10.42.1.0/24, so the IP address of the TUN network device on node A is 10.42.1.0/32. The reason is that addresses in the same subnet (e.g. 10.42.1.0/24) are sometimes assigned the same MAC address and therefore cannot communicate directly at the Layer 2 link layer; if the TUN device's address is guaranteed not to share a subnet with any other address, no direct link-layer communication is attempted. On this point, see how calico is implemented, where every container has the same MAC address; we may explore it in depth later.

Note: When adding the routes through the TUN network device, onlink is specified. This ensures that the next hop is treated as directly attached to the TUN network device, so that IPIP tunnels can be built even if the nodes are not in the same subnet.

A:

B:

C:
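
A minimal sketch of the per-node configuration, printed rather than executed. It assumes the device is the fallback tunl0 that appears once the ipip module is loaded, that the three nodes use the hypothetical underlay addresses 172.16.0.11 to 172.16.0.13, and that the subnets are 10.42.1.0/24, 10.42.2.0/24 and 10.42.3.0/24 (the last two are assumptions). The helper names are our own.

```shell
#!/bin/sh
# Hypothetical underlay addresses for nodes 1..3 (A, B, C); replace with real ones.
underlay_ip() { echo "172.16.0.1$1"; }

# Print the tunnel setup for node $1: load ipip, bring up tunl0, give it a /32
# address, and add one onlink route per peer subnet via that peer's underlay IP.
one_to_many_cmds() {
  self=$1
  echo "modprobe ipip"
  echo "ip link set tunl0 up"
  echo "ip addr add 10.42.$self.0/32 dev tunl0"
  for peer in 1 2 3; do
    [ "$peer" -eq "$self" ] && continue
    echo "ip route add 10.42.$peer.0/24 via $(underlay_ip "$peer") dev tunl0 onlink"
  done
}

for n in 1 2 3; do
  echo "# node $n"
  one_to_many_cmds "$n"
done
```

No remote address is set on the device; each route's next hop plays that role, which is what lets one device serve several peers.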

At this point we can start verifying that the IPIP tunnel we built is working properly.

A:

Everything seems to work fine, and if we reverse the process and ping the other subnets from node B or C, those pings get through as well. This shows that we can indeed create one-to-many IPIP tunnels, a pattern that is very useful for building overlay networks across multiple nodes.

under the hood

We then grab the data via tcpdump on the TUN devices in B and C respectively.

B:

C:
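
Such captures could be taken roughly as follows. The sketch prints two tcpdump commands: one on tunl0, and one on the underlay interface filtered on IP protocol number 4 (IP-in-IP) to see the encapsulated packets. The interface name eth0 is a hypothetical default and `capture_cmds` is a helper name of our own.

```shell
#!/bin/sh
# Print two captures: ICMP seen on the tunnel device, and the encapsulated
# packets (IP protocol 4, IP-in-IP) seen on the underlay interface.
# $1 = underlay interface name; eth0 is a hypothetical default.
capture_cmds() {
  cat <<EOF
tcpdump -n -i tunl0 icmp
tcpdump -n -i ${1:-eth0} ip proto 4
EOF
}

capture_cmds
```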

In fact, from the process of creating one-to-many IPIP tunnels we can roughly guess that the Linux ipip module uses the routing information to decide where the inner IP packet should go and then wraps it in an outer IP header to form a new IP packet. As for how IPIP packets are unpacked, let’s look at how the kernel receives packets for the ipip module.

From https://github.com/torvalds/linux/blob/master/net/ipv4/ip_input.c#L187-L224

As you can see, the ipip module decapsulates the packet according to its protocol type and then passes the decapsulated skb through the receive path again. This is only a very superficial analysis; if you are interested, we recommend reading more of the ipip module's source code.

Reference https://houmin.cc/posts/cc24de6a/