To make testing easier, I am going to configure a LoadBalancer-type Service for the Ingress controller. Since this is a local, private environment, I need to deploy a load balancer that supports this Service type. The most popular one in the community is probably MetalLB. This project, which is now a CNCF sandbox project, was launched at the end of 2017 and has been widely adopted after 4 years of development. In my own testing and use, however, it has been unstable, and I often had to restart the controller for changes to take effect. So I turned my attention to another load balancer, OpenELB, which was recently open sourced by China's QingCloud.

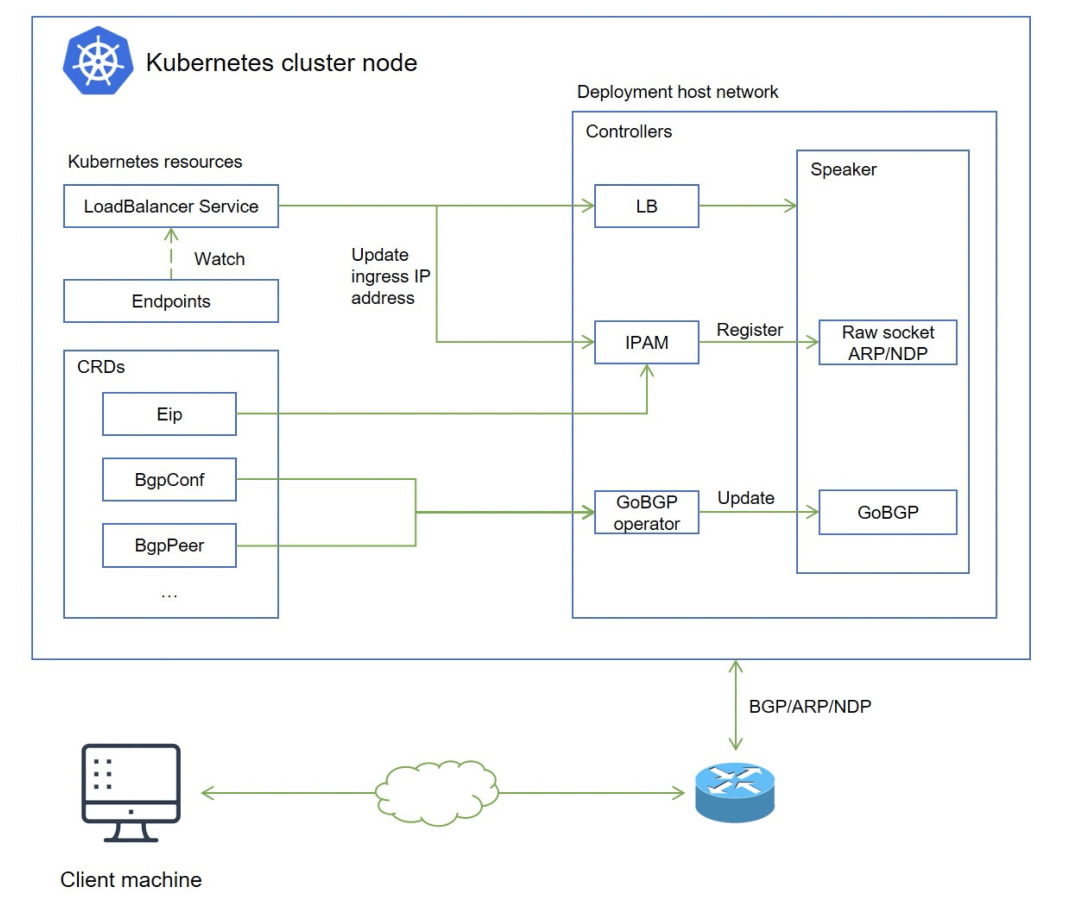

OpenELB, previously called PorterLB, is a load balancer plug-in designed for bare-metal, edge, and private environments. It can be used as an LB plug-in for Kubernetes, K3s, and KubeSphere to expose LoadBalancer-type Services outside the cluster, and is now a CNCF sandbox project. Its core features include:

- Load balancing based on BGP and Layer 2 mode

- Load balancing based on router ECMP

- IP address pool management

- BGP configuration using CRD

Comparison with MetalLB

OpenELB, as a latecomer, adopts a more Kubernetes-native implementation and can be configured and managed directly through CRDs. Here is a brief comparison of OpenELB and MetalLB.

Cloud Native Architecture

In OpenELB, both address management and BGP configuration are handled through CRDs, which is very friendly for users who are used to kubectl. In MetalLB, they are configured via a ConfigMap, and their status has to be inferred from monitoring or logs.

Flexible Address Management

OpenELB manages addresses through a custom resource object called Eip, which defines a Status subresource to store the address assignment status, so that replicas do not conflict with each other when assigning addresses.

Publishing routes using gobgp

Unlike MetalLB, which implements the BGP protocol itself, OpenELB uses the standard gobgp to publish routes, which has the following benefits:

- Low development cost and gobgp community support

- The ability to take advantage of gobgp’s rich features

- Dynamic configuration of gobgp via BgpConf/BgpPeer CRD, allowing users to dynamically load the latest configuration information without restarting OpenELB

- The BgpConf/BgpPeer CRDs use the same API that gobgp exposes when it is used as a library, and are compatible with it

- OpenELB also exposes status for viewing the BGP neighbor configuration, with rich status information

Simple architecture, low resource consumption

OpenELB currently runs only as a Deployment and achieves high availability through multiple replicas. If some of the replicas crash, connections that are already established are not affected.

In BGP mode, each replica of the Deployment connects to the router and advertises equal-cost routes, so two replicas are normally enough. In Layer 2 mode, the replicas elect a leader through the Leader Election mechanism provided by Kubernetes, and the leader answers ARP/NDP requests.

Installation

In a Kubernetes cluster, you only need to install OpenELB once. Once the installation is complete, an openelb-manager Deployment is installed in the cluster, which contains an openelb-manager Pod. The openelb-manager Pod implements the OpenELB functionality for the entire Kubernetes cluster. After installation, you can scale the openelb-manager Deployment to distribute multiple replicas of OpenELB (openelb-manager Pods) across multiple cluster nodes to ensure high availability. For more information, see Configuring Multiple OpenELB Replicas.

Installing OpenELB is very simple: a single kubectl command that applies the project's resource manifest is enough.
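
As a sketch, the one-line install typically looks like the following. The manifest URL is an assumption based on the upstream openelb/openelb repository layout and may differ between releases, so check the official installation docs for the URL that matches your version.

```shell
# Assumption: manifest path in the upstream openelb/openelb repository.
# Verify the URL against the official docs for your release before applying.
kubectl apply -f https://raw.githubusercontent.com/openelb/openelb/master/deploy/openelb.yaml
```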

The resource manifest deploys a Deployment resource object named openelb-manager. The openelb-manager Pod implements OpenELB functionality for the entire Kubernetes cluster, and the controller can be scaled to two replicas to ensure high availability. The first installation also configures HTTPS certificates for the admission webhook. After the installation is complete, check the status of the Pods.

In addition, several CRDs used for OpenELB configuration are installed.
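
A quick way to verify both points is sketched below. It assumes the manifest installs everything into a namespace called openelb-system and that the CRDs belong to a kubesphere.io API group; adjust both if your installation differs.

```shell
# Assumption: OpenELB is installed in the openelb-system namespace.
kubectl get pods -n openelb-system

# Assumption: the OpenELB CRDs (Eip, BgpConf, BgpPeer) are registered
# under a kubesphere.io API group, so a simple grep finds them.
kubectl get crd | grep kubesphere
```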

Configuration

Next we will demonstrate how to use OpenELB in Layer 2 mode. First, make sure that all Kubernetes cluster nodes are in the same Layer 2 network (under the same router). My test environment has 3 nodes in total.

The IP addresses of the 3 nodes are 192.168.0.109, 192.168.0.110, and 192.168.0.111.

First you need to enable strictARP for kube-proxy so that all NICs in the Kubernetes cluster stop responding to ARP requests from other NICs, and OpenELB handles the ARP requests instead.
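
One way to flip the setting without opening an editor is to pipe the kube-proxy ConfigMap through sed. This is only a sketch: it assumes kube-proxy keeps its configuration in a ConfigMap named kube-proxy in kube-system (as on kubeadm clusters) and that the current value is literally strictARP: false. The helper name enable_strict_arp is made up for illustration.

```shell
# Hypothetical helper: rewrites "strictARP: false" to "strictARP: true"
# in whatever kube-proxy configuration is piped through it.
enable_strict_arp() {
  sed -e 's/strictARP: false/strictARP: true/'
}

# Dry run on a sample fragment to see the effect of the rewrite.
printf 'ipvs:\n  strictARP: false\n' | enable_strict_arp

# Against a live cluster (assumes a kubeadm-style kube-proxy ConfigMap):
#   kubectl get configmap kube-proxy -n kube-system -o yaml \
#     | enable_strict_arp \
#     | kubectl apply -f - -n kube-system
```

Editing the ConfigMap interactively with kubectl edit configmap kube-proxy -n kube-system and setting strictARP to true by hand achieves the same thing.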

Then restart the kube-proxy component.
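
Assuming kube-proxy runs as a DaemonSet named kube-proxy in the kube-system namespace (the kubeadm default), a rollout restart is one way to do this:

```shell
# Assumption: kube-proxy is a DaemonSet called kube-proxy in kube-system.
kubectl rollout restart daemonset kube-proxy -n kube-system
```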

If the node where OpenELB is installed has more than one NIC, you need to specify the NIC that OpenELB uses in Layer 2 mode. If the node has only one NIC, you can skip this step. For example, assuming that the master1 node where OpenELB is installed has two NICs (eth0 192.168.0.2 and ens33 192.168.0.111) and eth0 192.168.0.2 is to be used for OpenELB, you need to add an annotation to the master1 node to specify the NIC.
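
For the master1 example, the annotation could be added as sketched below. The annotation key is an assumption based on the OpenELB Layer 2 documentation and should be verified against the version you run; the value is the IP address of the chosen NIC.

```shell
# Assumption: annotation key as documented for OpenELB Layer 2 mode.
# The value is the IP of the NIC OpenELB should use (eth0 in the example).
kubectl annotate nodes master1 layer2.openelb.kubesphere.io/v1alpha1="192.168.0.2"
```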

Next, create an Eip object to act as an IP address pool for OpenELB.

The address attribute specifies the pool of IP addresses that OpenELB can use. It can hold one or more IP addresses (take care that the IP ranges in different Eip objects do not overlap). The value can take one of the following formats:
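
A minimal sketch of such an object is shown here. The name eip-pool and the ens33 interface match the rest of this walkthrough, but the address range and the file name eip.yaml are illustrative assumptions; pick free addresses from your own node subnet.

```shell
# Write the Eip manifest to a file (the file name is arbitrary).
# Assumption: the address range is illustrative only; it must not
# collide with node IPs or with any other Eip object.
cat > eip.yaml <<'EOF'
apiVersion: network.kubesphere.io/v1alpha2
kind: Eip
metadata:
  name: eip-pool
spec:
  address: 192.168.0.100-192.168.0.108
  protocol: layer2
  interface: ens33
  disable: false
EOF

# Apply it to the cluster with:
#   kubectl apply -f eip.yaml
```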

- IP address, e.g. 192.168.0.100

- IP address/subnet mask, e.g. 192.168.0.0/24

- IP address 1 - IP address 2, e.g. 192.168.0.91 - 192.168.0.100

The remaining attributes are as follows.

- protocol: specifies which OpenELB mode the Eip object is used for. It can be set to layer2 or bgp, and the default is bgp. We want to use Layer 2 mode here, so it has to be set explicitly

- interface: specifies the NIC on which OpenELB listens for ARP or NDP requests. This field is only valid when protocol is set to layer2. In my environment it is the ens33 NIC

- disable: indicates whether the Eip object is disabled

After creating the Eip object, you can check the status of the IP pool via Status.
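
Assuming the pool is named eip-pool as in the annotations used later, the status can be inspected like this:

```shell
# List all Eip objects in the cluster.
kubectl get eip

# Dump one pool in full; the status section reports address usage.
# Assumption: the pool is called eip-pool.
kubectl get eip eip-pool -o yaml
```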

The address pool for the LB is now ready. Next, we create a simple service to be exposed through the LB.

A simple nginx service is deployed here.
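
A minimal Deployment for this could look like the sketch below. The app: nginx label, the single replica, the unpinned nginx image, and the file name are all assumptions chosen for illustration.

```shell
# Write a minimal nginx Deployment manifest (names and labels are illustrative).
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
EOF

# Apply it to the cluster with:
#   kubectl apply -f nginx-deployment.yaml
```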

Then create a Service of type LoadBalancer to expose our nginx service.
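
A sketch of such a Service follows. It assumes the nginx Pods carry the label app: nginx and listen on port 80, and that the Eip pool is named eip-pool; the file name is arbitrary.

```shell
# Write the LoadBalancer Service manifest with the three OpenELB annotations.
# Assumption: the backing Pods are labelled app: nginx and serve on port 80.
cat > nginx-service.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx
  annotations:
    lb.kubesphere.io/v1alpha1: openelb
    protocol.openelb.kubesphere.io/v1alpha1: layer2
    eip.openelb.kubesphere.io/v1alpha2: eip-pool
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - name: http
      port: 80
      targetPort: 80
EOF

# Apply it to the cluster with:
#   kubectl apply -f nginx-service.yaml
```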

Note that we have added several annotations to the Service here.

- lb.kubesphere.io/v1alpha1: openelb specifies that the Service uses OpenELB

- protocol.openelb.kubesphere.io/v1alpha1: layer2 specifies that OpenELB is used in Layer 2 mode

- eip.openelb.kubesphere.io/v1alpha2: eip-pool specifies the Eip object used by OpenELB. If this annotation is not configured, OpenELB automatically uses the first available Eip object matching the protocol. Alternatively, you can remove this annotation and add the spec:loadBalancerIP field (e.g. spec:loadBalancerIP: 192.168.0.108) to assign a specific IP address to the Service
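
The fixed-address alternative can be sketched as a variant of the same Service. It assumes 192.168.0.108 lies inside an existing layer2 Eip pool and that the Pods are labelled app: nginx; the Service name nginx-fixed-ip and the file name are hypothetical.

```shell
# Variant without the eip annotation: request a specific address instead.
# Assumption: 192.168.0.108 belongs to an existing layer2 Eip pool.
cat > nginx-service-fixed-ip.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-fixed-ip
  annotations:
    lb.kubesphere.io/v1alpha1: openelb
    protocol.openelb.kubesphere.io/v1alpha1: layer2
spec:
  type: LoadBalancer
  loadBalancerIP: 192.168.0.108
  selector:
    app: nginx
  ports:
    - name: http
      port: 80
      targetPort: 80
EOF

# Apply it to the cluster with:
#   kubectl apply -f nginx-service-fixed-ip.yaml
```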

Then create the Service in the cluster as well.

Once created, you can see that the Service has been assigned an EXTERNAL-IP, and we can access the nginx service from that address.
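
A quick check might look like the following sketch, assuming the Service is named nginx in the current namespace and serves plain HTTP on port 80:

```shell
# Show the Service; the EXTERNAL-IP column should hold an address from the pool.
kubectl get svc nginx

# Read the assigned address from the Service status and request the page headers.
# Assumption: the Service is called nginx and exposes HTTP on port 80.
EXTERNAL_IP="$(kubectl get svc nginx -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
curl -I "http://${EXTERNAL_IP}"
```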

In addition, OpenELB supports BGP mode and multi-router cluster scenarios; see the official documentation at https://openelb.github.io/docs/ for more information on how to use them.