Concepts

epoll is an I/O event notification mechanism, which is an implementation of IO multiplexing in the linux kernel. IO multiplexing means listening to multiple input and output sources at the same time in a single operation, returning when one or more of them are available, and then performing read and write operations on them.

I/O

The input/output objects can be files, sockets, and pipes between processes. In linux systems, these are represented by file descriptors (fd).

Events

- A readable event is triggered when the kernel read buffer associated with the file descriptor is readable. (readable: the kernel buffer is not empty and there is data to read)

- Writable event, triggered when the kernel write buffer associated with the file descriptor is writable. (writable: kernel buffer is not empty, there is free space to write)

Notification mechanism

The notification mechanism, which is when an event occurs, then proactively notifies. The flip side of the notification mechanism is the polling mechanism.

epoll in layman’s terms

Combined with the above three items, epoll is a mechanism that notifies the kernel when the kernel buffer of a file descriptor is not empty by sending a readable signal, and notifies the kernel when the write buffer is full by sending a writable signal

APIs of epoll

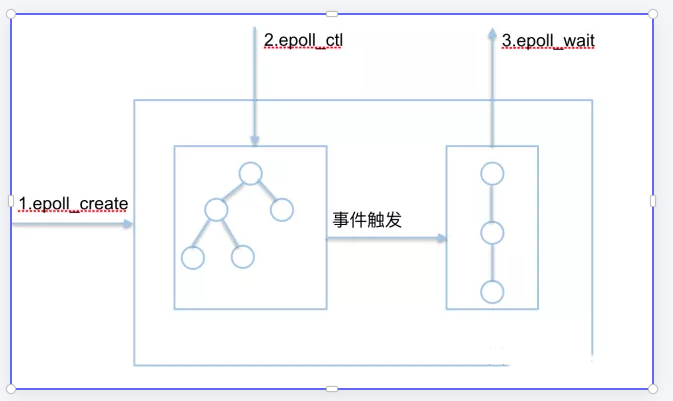

The core of epoll is three APIs, and the core data structures are: a red-black tree and a linked list.

1. int epoll_create(int size)

Function.

- The kernel generates an epoll instance data structure and returns a file descriptor. This particular descriptor is the handle to the epoll instance, and the two interfaces that follow center on it (i.e., the epfd formal parameter).

The size parameter indicates the maximum value of the file descriptor to be monitored, but has been deprecated in later versions of Linux (also, don’t pass 0 for size, it will report an invalid argument error)

2. int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event)

Function.

- Add the descriptor being listened to or remove it from the red-black tree or modify the maximum value of the file descriptor to be monitored for listening events, though this has been deprecated in later versions of Linux (also, do not pass 0 for size, an invalid argument error will be reported)

For the set of file descriptors to be monitored, epoll_ctl manages the red-black tree, in which each member consists of the descriptor value and the reference to the file table entry pointed to by the file descriptor to be monitored, etc.

The op parameter describes the type of operation.

- EPOLL_CTL_ADD: Adds a descriptor to the interest list that needs to be monitored

- EPOLL_CTL_DEL: removes a descriptor from the interest list

- EPOLL_CTL_MOD: modify a descriptor in the interest list

The struct epoll_event describes the epoll behavior of a file descriptor. When using the epoll_wait function to return a list of descriptors in the ready state, the

- the data field is the only field that gives information about the descriptor, so when calling epoll_ctl to add a descriptor that needs to be monitored, make sure to write information about the descriptor in this field

- events field is a bit mask that describes a set of epoll events, which is interpreted in the epoll_ctl call as: the epoll events expected by the descriptor, which can be multi-selected.

Commonly used epoll events are described as follows.

- EPOLLIN: descriptor is in readable state

- EPOLLOUT: Descriptor is in writable state

- EPOLLET: set the epoll event notification mode to edge triggered

- EPOLLONESHOT: the first time to notify, no more monitoring afterwards

- EPOLLHUP: the local descriptor generates a hang-up event, the default monitoring event

- EPOLLRDHUP: the opposite descriptor generates a pending event

- EPOLLPRI: triggered by out-of-band data

- EPOLLERR: Triggered when the descriptor generates an error, default detection event

3. int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout)

Function.

- blocking wait for registered events to occur, return the number of events, and write the triggered events to the events array.

- events: used to record the triggered events, its size should be the same as maxevents

- maxevents: the maximum number of events to be returned

The file descriptors in the ready state are copied into the ready list, and epoll_wait is used to return the ready list to the user process. events and maxevents arguments describe a user-allocated struct epoll event array, and when the call returns, the kernel copies the ready list to this array and returns it. When the call returns, the kernel copies the ready list into this array and returns the actual number of copies as the return value. Note that if the ready list is longer than maxevents, only the first maxevents members can be copied; conversely, the ready list can be copied completely. Also, the events field in the struct epoll event structure is interpreted here as the actual events that occurred on the file descriptor being monitored. The parameter timeout describes the upper limit of blocking time in ms in a function call.

- timeout = -1 means that the call will keep blocking until a file descriptor enters the ready state or a signal is caught before returning.

- timeout = 0 is used for non-blocking to detect if a descriptor is in ready state, and the call returns immediately regardless of the result.

- timeout > 0 means the call will last for at most timeout time, and return if a detected object changes to ready state or a signal is captured during that time, otherwise until the timeout.

The two triggering methods of epoll

epoll monitors I/O events for multiple file descriptors. epoll supports edge trigger (ET) or level trigger (LT), which waits for I/O events via epoll_wait and blocks the calling thread if no events are currently available.

select and poll only support LT working mode, and the default working mode of epoll is LT mode.

1. Timing of horizontal trigger

- for read operation, LT mode returns read ready as long as the buffer is not empty. 2. for write operation, LT mode returns read ready as long as the buffer is not empty.

- For write operations, LT mode returns write ready as long as the buffer is still full.

When a read/write event occurs on the monitored file descriptor, epoll_wait() notifies the handler to read or write. If you don’t read or write all the data at once (e.g., the read/write buffer is too small), then the next time epoll_wait() is called, it will notify you to continue reading or writing on the file descriptor you didn’t finish reading or writing on, but of course if you never read or write, it will keep notifying you. If the system has a large number of ready file descriptors that you don’t need to read or write, and they return every time, this can greatly reduce the efficiency of the handler retrieving the ready file descriptors it cares about.

2. Timing of edge trigger

-

For read operation

- When the buffer changes from unreadable to readable, i.e. when the buffer changes from empty to not empty.

- When new data arrives, i.e. when the buffer becomes full of data to be read.

- when there is data readable in the buffer and the application process performs EPOLL_CTL_MOD to modify the EPOLLIN event for the corresponding descriptor.

-

For write operations

- When the buffer is changed from unwritable to writable.

- When there is old data being sent away, i.e. the buffer becomes less content.

- when there is space in the buffer to be written and the application process performs an EPOLL_CTL_MOD modify EPOLLOUT event on the corresponding descriptor.

When a read-write event occurs on the monitored file descriptor, epoll_wait() notifies the handler to read and write. If it does not read or write all the data this time (e.g., the read/write buffer is too small), then the next time epoll_wait() is called, it will not notify you, i.e., it will only notify you once until a second read/write event occurs on that file descriptor. This mode is more efficient than horizontal triggering, and the system is not flooded with a lot of ready file descriptors that you don’t care about.

In ET mode, the buffer goes from unreadable to readable, which wakes up the application process, and does not wake up the application process again if the buffer data becomes low.

Example 1.

- the read buffer is empty at the beginning

- 2KB of data is written to the read buffer

- Both horizontal trigger and edge trigger modes will send out a readable signal at this time

- After receiving the signal notification, 1KB of data is read and 1KB of data remains in the read buffer

- Horizontal trigger will notify again, while edge trigger will not notify again

Example 2: (Take the high and low level of pulse as an example)

- Horizontal trigger: 0 is no data, 1 is data. If there is data in the buffer, it will always be 1, then it will always trigger.

- Edge triggered hair: 0 for no data, 1 for data, triggered as long as the rising edge of 0 changes to 1.

JDK does not implement edge triggering, Netty re-implemented the epoll mechanism, using edge triggering; also like Nginx also uses edge triggering.

The JDK already uses epoll by default in Linux, but JDK epoll uses horizontal triggering, while Netty re-implemented the epoll mechanism, using edge triggering. netty epoll transport exposes more configuration parameters that nio does not, such as TCP_CORK, SO_REUSEADDR and so on; others like Nginx also use edge triggering.

Epoll vs. select and poll

1. User state way to pass file descriptors into the kernel

- select: Create 3 sets of file descriptors and copy them to the kernel, listening for read, write, and exception actions respectively. This is limited by the number of fd’s a single process can open, default is 1024.

- poll: Copy the incoming array of struct pollfd structures to the kernel to listen.

- epoll: executing epoll_create creates a red-black tree in the kernel’s high-speed cache area and a readiness linkedlist (which stores the file descriptors that are already ready). Then the epoll_ctl function executed by the user to add file descriptors will add the corresponding nodes to the red-black tree.

2. Kernel state detection of file descriptor read/write status

- select: polling, iterating through all fd’s, and returning a mask of whether the descriptor is ready for read/write operations, and assigning a value to fd_set based on this mask.

- poll: also polling, querying the status of each fd, adding an item to the waiting queue if it is ready, and continuing to iterate.

- epoll: Uses a callback mechanism. When executing the add operation of epoll_ctl, it not only puts the file descriptor in the red-black tree, but also registers a callback function. The kernel will call the callback function when it detects that a file descriptor is readable/writable, and the callback function will place the file descriptor in the ready linkedlist.

3. Find the ready file descriptors and pass them to the user state

- select: Passes a copy of the previously passed fd_set out to the user state and returns the total number of file descriptors that are ready. The user state does not know which file descriptors are in the ready state and needs to iterate through them.

- poll: Passes a copy of the previously passed in fd array out to the user state and returns the total number of file descriptors that are ready. The user state does not know which file descriptors are in the ready state and needs to be traversed to determine this.

- epoll: epoll_wait simply watches for data in the ready linkedlist and finally returns the linkedlist to the array and the number of ready files. The kernel puts the ready file descriptors in the incoming array, so you can just iterate through them in order. The file descriptors returned here are passed by mmap allowing the kernel and user space to share the same block of memory, reducing unnecessary copies.

4. Handling of duplicate listeners

- select: Pass a copy of the new set of listen file descriptors into the kernel and continue with the above steps.

- poll: pass a copy of the new struct pollfd array into the kernel, and continue with the above steps.

- epoll: no need to reconstruct the red-black tree, just follow the existing one.

The reasons why epoll is more efficient

- select and poll have basically the same action, except that poll uses a linkedlist for file descriptor storage, while select uses fd labeled bits for storage, so select is limited by the maximum number of connections, while poll is not.

- select, poll, and epoll all return the number of ready file descriptors. But select and poll do not explicitly indicate which file descriptors are ready, while epoll does. The difference is that after the system call returns, the program calling select and poll needs to iterate through the entire listener file descriptors to find out who is ready, while epoll can handle it directly.

- select and poll both need to copy the data structure of the file descriptor into the kernel and then copy it out. The data structure of the file descriptor created by epoll is itself stored in the kernel state, and the system call returns with mmap() file mapped memory to speed up message passing to the kernel space: that is, epoll uses mmap to reduce the copy overhead.

- select and poll use polling to check if the file descriptor is in the ready state, while epoll uses a callback mechanism. The result is that the efficiency of select and poll decreases linearly as the fd increases, while epoll is not affected too much unless there are many active sockets.

- epoll’s edge-triggered mode is efficient and the system is not flooded with uncaring ready file descriptors

Although epoll has the best performance, select and poll may perform better than epoll when the number of connections is small and the connections are all very active, after all epoll’s notification mechanism requires many function callbacks.