Although entrepreneurship in the container field has gradually gone quiet, with CoreOS acquired and Docker's enterprise business sold off, the rise of the Rust language and projects such as Firecracker and youki have stirred new ripples in the field. For cloud-native practitioners, the history and technical background of containers still come up, more or less, in interviews and other settings.

Background

In the days of Docker, the term container runtime was well defined: the software that runs and manages containers. However, as Docker grew to encompass more and more functionality, and as multiple container orchestration tools were introduced, the definition became increasingly vague.

When you run a Docker container, the general steps are:

- Download the image

- Unpack the image into a bundle, i.e., flatten the layers into a single filesystem.

- Run the container
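The unpacking step is where image layers are merged. As a rough sketch (not how any particular runtime implements it), the Python below models each layer as a dictionary mapping relative paths to file contents and applies the layers from bottom to top. It follows the whiteout convention of the OCI image-spec: an entry named .wh.<name> deletes <name> from lower layers, and .wh..wh..opq hides everything lower layers placed in that directory. Real unpackers work on tar archives and also handle permissions, links, and device files, which this simplified model ignores.

```python
from typing import Dict, List

# OCI image-spec whiteout naming conventions.
WHITEOUT_PREFIX = ".wh."
OPAQUE_WHITEOUT = ".wh..wh..opq"

Layer = Dict[str, str]  # relative path -> file contents (simplified model)


def _remove_tree(rootfs: Layer, target: str) -> None:
    """Delete `target` and, if it was a directory, everything beneath it."""
    for path in list(rootfs):
        if path == target or path.startswith(target + "/"):
            del rootfs[path]


def flatten_layers(layers: List[Layer]) -> Layer:
    """Apply image layers from bottom to top and return the merged filesystem."""
    rootfs: Layer = {}
    for layer in layers:
        # Pass 1: whiteouts only hide entries from *lower* layers, so apply
        # them before this layer's own files are added.
        for path in layer:
            parent, _, name = path.rpartition("/")
            if name == OPAQUE_WHITEOUT:
                children = [p for p in rootfs if p.startswith(parent + "/")]
                for p in children:
                    del rootfs[p]
            elif name.startswith(WHITEOUT_PREFIX):
                hidden = name[len(WHITEOUT_PREFIX):]
                _remove_tree(rootfs, f"{parent}/{hidden}" if parent else hidden)
        # Pass 2: regular entries override anything from lower layers.
        for path, content in layer.items():
            if not path.rpartition("/")[2].startswith(WHITEOUT_PREFIX):
                rootfs[path] = content
    return rootfs


if __name__ == "__main__":
    base = {"bin/sh": "busybox", "etc/motd": "hello", "tmp/a": "1"}
    upper = {"etc/.wh.motd": "", "etc/hostname": "demo"}
    print(flatten_layers([base, upper]))
    # prints {'bin/sh': 'busybox', 'tmp/a': '1', 'etc/hostname': 'demo'}
```

Applying whiteouts in a separate first pass matters: a layer may both clear a directory and add new files to it, and those new files must survive.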

By the original definition, only the last step, running the container, is the job of a container runtime. However, most users regard all three steps as part of what it takes to run a container, which makes the definition of a container runtime a confusing topic.

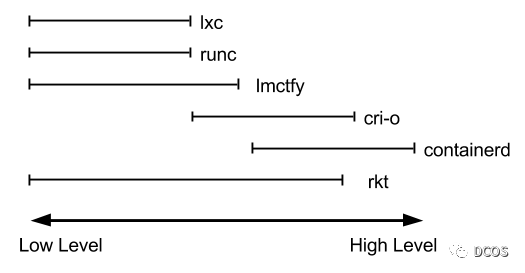

When one thinks of container runtimes, a series of related names may come to mind: runc, runv, lxc, lmctfy, Docker (containerd), rkt, and cri-o. Each was built for different scenarios and implements different functionality. For example, containerd and cri-o can both use runc to run containers, but they also implement features such as image management and a container API, which can be seen as more advanced than what runc offers.

As you can see, container runtimes are quite complex. Each runtime covers a different part of the range from low level to high level, as shown in the following figure.

By functional scope, container runtimes are divided into low-level container runtimes and high-level container runtimes. Runtimes that focus only on running the container itself are usually called low-level container runtimes, while runtimes that support more advanced features, such as image management and gRPC/Web APIs, are usually called high-level container runtimes. Note that low-level and high-level runtimes are fundamentally different, and each solves a different problem.

Low-level container runtime

The low-level runtime has limited functionality and typically performs only the low-level tasks of running containers; most developers do not use it directly in their daily work. The term generally refers to an implementation that receives a runnable rootfs filesystem and a configuration file and runs isolated processes according to the OCI specification. Such a runtime is only responsible for running processes in a relatively isolated resource space and provides neither a storage implementation nor a network implementation. However, other components can pre-create the relevant resources on the system, and the low-level runtime can then load them through declarations in config.json. Low-level runtimes are lightweight, and their limitations are clear at a glance:

- Only knows rootfs and config.json; no image capabilities

- No network implementation

- No persistent storage implementation

- No cross-platform capability, etc.
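Because a low-level runtime only understands a bundle (a directory holding config.json plus the root filesystem it points to), checking that input is simple. The function below is a hypothetical helper, not part of runc or any other runtime. It only verifies the two things a low-level runtime knows about. Per the OCI runtime-spec, root.path may be either absolute or relative to the bundle directory, and the helper accepts both.

```python
import json
import os


def check_bundle(bundle_dir: str) -> str:
    """Return the absolute rootfs path of an OCI bundle, or raise ValueError."""
    config_path = os.path.join(bundle_dir, "config.json")
    if not os.path.isfile(config_path):
        raise ValueError(f"{bundle_dir}: missing config.json")
    with open(config_path) as f:
        config = json.load(f)
    root = (config.get("root") or {}).get("path")
    if not root:
        raise ValueError("config.json does not set root.path")
    # root.path may be absolute or relative to the bundle directory.
    rootfs = root if os.path.isabs(root) else os.path.join(bundle_dir, root)
    if not os.path.isdir(rootfs):
        raise ValueError(f"root filesystem {rootfs} is not a directory")
    return os.path.abspath(rootfs)
```

Anything beyond this (images, networks, volumes) has to be prepared by some other component before the runtime is invoked.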

Low-level runtime demos

A simple container can be built as root with the Linux cgcreate, cgset, cgexec, chroot, and unshare commands.

First, set up a root filesystem based on the busybox container image: create a temporary directory and extract the busybox filesystem into it.

Next, generate a UUID to use as the name of a new cgroup, and set memory and CPU limits on it. The memory limit is specified in bytes; here it is set to 100 MB.

CPU limits are expressed as a quota of CPU time per period. For example, to limit the container to two CPU cores, set a period of one second and a quota of two seconds (1 s = 1,000,000 µs). This lets the processes in the cgroup use up to two seconds of CPU time in every one-second period, i.e., the equivalent of two cores running continuously.
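The arithmetic behind these two limits is easy to get wrong, so here is a small helper (the function name is made up for this example) that converts human-friendly values into cgroup v1 parameter values. It assumes 100 MB means 100,000,000 bytes; use 100 * 1024 * 1024 if you mean 100 MiB. On cgroup v2 hosts the equivalent files are memory.max and cpu.max, the latter written as "<quota> <period>".

```python
def cgroup_v1_limits(memory_mb: int, cpus: float, period_us: int = 1_000_000) -> dict:
    """Map a memory limit (MB) and a CPU count to cgroup v1 parameters."""
    if cpus <= 0 or period_us <= 0:
        raise ValueError("cpus and period_us must be positive")
    return {
        # memory.limit_in_bytes takes bytes; decimal megabytes are assumed here.
        "memory.limit_in_bytes": memory_mb * 1_000_000,
        # CFS bandwidth control: the group may use `quota` microseconds of CPU
        # time per `period` microseconds, so quota / period = number of CPUs.
        "cpu.cfs_period_us": period_us,
        "cpu.cfs_quota_us": int(cpus * period_us),
    }


if __name__ == "__main__":
    for key, value in cgroup_v1_limits(100, 2).items():
        print(f"{key}={value}")  # the name=value form that cgset -r expects
```

For the values in the text this prints memory.limit_in_bytes=100000000, cpu.cfs_period_us=1000000, and cpu.cfs_quota_us=2000000. A fractional CPU count such as 0.5 simply yields a quota of half the period.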

Next, execute the command in the container.

Finally, delete the cgroup and temporary directory created earlier.
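Putting the steps together, the Python sketch below only builds and prints the command lines (a dry run) so they can be reviewed before being run as root. It assumes a cgroup v1 host with the libcgroup tools (cgcreate, cgset, cgexec, cgdelete) and util-linux unshare installed, and a busybox filesystem already extracted into the rootfs directory. The function name is hypothetical. Giving --mount-proc a mount point inside the rootfs mounts a fresh /proc there, so tools such as ps work after the chroot.

```python
import shlex
import uuid
from typing import List


def container_demo_plan(rootfs: str, command: str = "/bin/sh", cg_id: str = "") -> List[List[str]]:
    """Return, in order, the argv lists for the cgroup + namespaces + chroot demo."""
    cg_id = cg_id or str(uuid.uuid4())  # cgroup name, as in the UUID step
    group = f"cpu,memory:{cg_id}"       # controllers:path syntax used by libcgroup
    return [
        ["cgcreate", "-g", group],
        ["cgset", "-r", "memory.limit_in_bytes=100000000", cg_id],  # 100 MB
        ["cgset", "-r", "cpu.cfs_period_us=1000000", cg_id],        # 1 s period
        ["cgset", "-r", "cpu.cfs_quota_us=2000000", cg_id],         # 2 s quota = 2 CPUs
        # Run inside the cgroup with new UTS (-u), IPC (-i), mount (-m), PID (-p)
        # and network (-n) namespaces; -f forks so the child is PID 1 of the new
        # PID namespace, and /proc is mounted inside the rootfs before chroot.
        ["cgexec", "-g", group,
         "unshare", "-u", "-i", "-m", "-p", "-n", "-f", f"--mount-proc={rootfs}/proc",
         "chroot", rootfs, command],
        ["cgdelete", "-r", "-g", group],  # clean up the cgroup...
        ["rm", "-r", rootfs],             # ...and the temporary rootfs
    ]


if __name__ == "__main__":
    for argv in container_demo_plan("/tmp/busybox-rootfs"):
        print(shlex.join(argv))
```

Building argv lists instead of shell strings keeps quoting explicit. The same lists could be passed to subprocess.run once you are satisfied they are correct.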

To better understand low-level container runtimes, several representative ones are listed below, each implementing different functionality.

runC

runC is currently the most widely used container runtime. It was originally integrated inside Docker and later extracted as a separate tool and as a public library.


In 2015, Docker donated its container format and the runC runtime to the Open Container Initiative (OCI), the organization responsible for developing container standards. Based on them, OCI developed two standards: the Runtime Specification (runtime-spec) and the Image Specification (image-spec). Here is a brief introduction to runC with an example.

First create the root filesystem. Here we will use busybox again.

Next, create a config.json file by running the runc spec command in the directory that contains rootfs.

This command creates a template config.json for the container.

By default, the template runs the sh command inside the root filesystem located in the ./rootfs directory.
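For illustration, the Python sketch below writes a heavily trimmed config.json containing a few of the fields found in the runc spec template: process.args set to sh, root.path set to rootfs, a hostname, and the namespaces to create. This is an assumption-laden subset. The real template also defines mounts, capabilities, rlimits, masked paths, and more, and its ociVersion depends on the runc release, so in practice generate the file with runc spec and edit it.

```python
import json
import os
from typing import Sequence


def write_minimal_config(bundle_dir: str, args: Sequence[str] = ("sh",)) -> dict:
    """Write a trimmed OCI config.json into bundle_dir and return it as a dict."""
    config = {
        "ociVersion": "1.0.2",  # assumed version string; runc spec emits its own
        "process": {
            "terminal": True,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # the command run inside the container
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # Relative root paths are resolved against the bundle directory.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": "runc",
        "linux": {
            # Entries without a "path" ask the runtime to create new namespaces.
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
        },
    }
    with open(os.path.join(bundle_dir, "config.json"), "w") as f:
        json.dump(config, f, indent=2)
    return config
```

Changing args (for example to ["sh", "-c", "echo hello"]) is the usual way to run a different command than the template's default sh.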

rkt(deprecated)

rkt is a runtime with both low-level and high-level functionality. Much like Docker, for example, rkt allows you to build container images, fetch and manage container images in your local repository, and run them with a single command.

runV

runV is a hypervisor-based runtime compatible with the OCI specification. As a virtual container runtime engine, runV is now obsolete: the runV team and Intel went on to create the Kata Containers project under the OpenInfra Foundation.

youki

Rust is one of the most popular programming languages today, and container development is a trendy application area, so using Rust to build container tooling is a worthwhile experience. youki is an implementation of the OCI runtime specification in Rust, similar to runc.

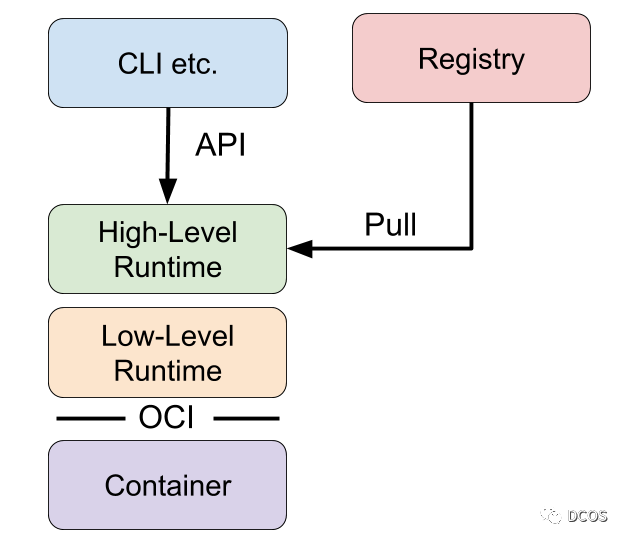

High-level container runtime

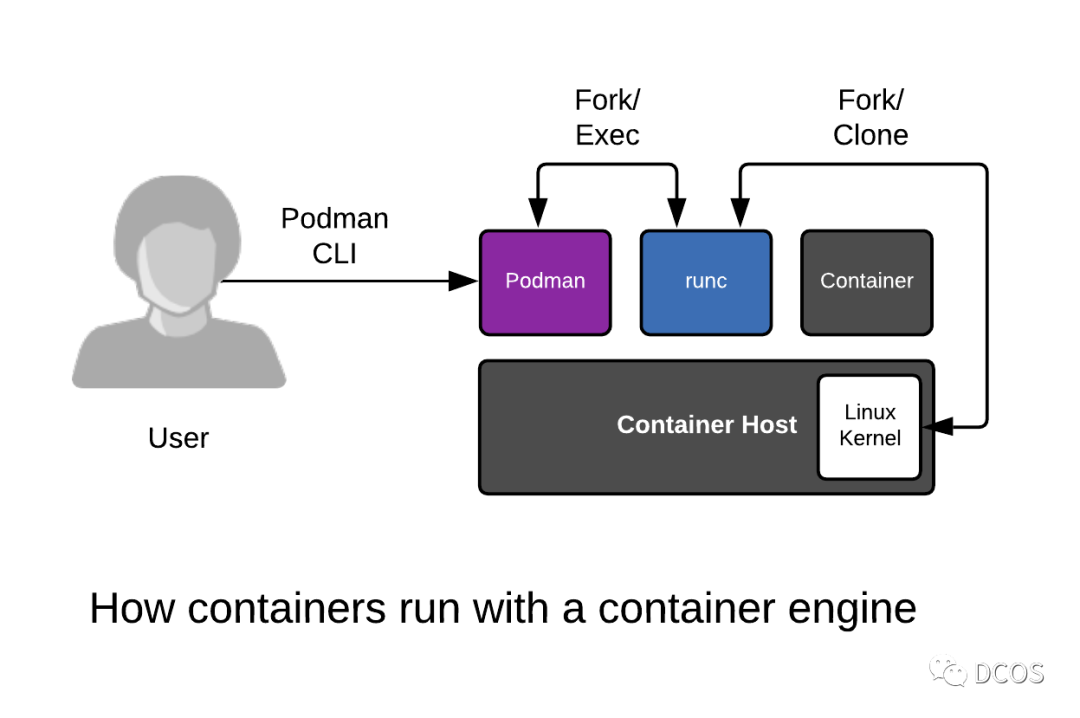

The high-level runtime is responsible for transferring and managing container images, unpacking them, and passing them to the low-level runtime to run containers. High-level runtimes typically provide a daemon and an API that remote applications can use to run and monitor containers, and they sit on top of low-level runtimes or other high-level runtimes.

High-level runtimes also provide some seemingly low-level functionality, such as managing network namespaces and allowing a container to join another container’s network namespace.
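In OCI terms, joining another container's network namespace is expressed in config.json: a linux.namespaces entry of type network that carries a path tells the low-level runtime to enter that existing namespace instead of creating a new one. This is roughly how containers in a pod can share one network stack. The helper below is a hypothetical sketch that rewrites a config dictionary accordingly; the /proc/<pid>/ns/net path assumes you know the PID of a process already inside the target namespace.

```python
import copy


def join_network_namespace(config: dict, pid: int) -> dict:
    """Return a copy of an OCI config whose container joins the netns of `pid`."""
    new = copy.deepcopy(config)  # leave the caller's config untouched
    namespaces = new.setdefault("linux", {}).setdefault("namespaces", [])
    # Remove any request for a *new* network namespace...
    namespaces[:] = [ns for ns in namespaces if ns.get("type") != "network"]
    # ...and point at an existing one; the runtime enters it with setns(2).
    namespaces.append({"type": "network", "path": f"/proc/{pid}/ns/net"})
    return new


if __name__ == "__main__":
    base = {"linux": {"namespaces": [{"type": "pid"}, {"type": "network"}]}}
    print(join_network_namespace(base, 4242)["linux"]["namespaces"])
    # prints [{'type': 'pid'}, {'type': 'network', 'path': '/proc/4242/ns/net'}]
```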

Here is a similar logical hierarchy diagram that helps show how these components work together.

High-level runtime representatives

Docker

Docker is one of the first open source container runtimes. It was developed by dotCloud, a platform-as-a-service company, to run users’ applications in containers.

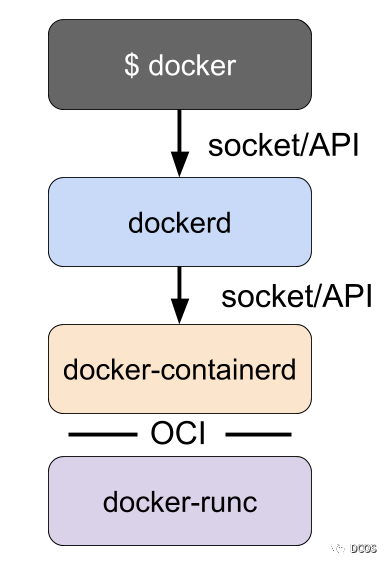

Docker is a container runtime that covers building, packaging, sharing, and running containers. Docker uses a client/server architecture and originally consisted of a daemon, dockerd, and the docker client application. The daemon provides most of the logic for building containers, managing images, and running containers, as well as an API; the command-line client sends commands to the daemon and retrieves information from it.

It was the first runtime to catch on, and it is no overstatement to say that Docker has contributed greatly to the spread of containers.

Docker initially implemented both high-level and low-level runtime features, but these were later split out into separate projects such as runc and containerd. The earlier architecture of Docker is shown in the figure below; in the current architecture, docker-containerd has become containerd and docker-runc has become runc.

dockerd itself provides features such as building images, and relies on containerd for features such as image management and running containers. For example, Docker’s build step is essentially logic that interprets the Dockerfile, uses containerd to run the necessary commands in a container, and saves the resulting container filesystem as an image.

Containerd

containerd is a high-level runtime that was split out of Docker. containerd downloads images, manages them, and runs containers from them. When a container needs to be run, containerd unpacks the image into an OCI runtime bundle and invokes runc to run it.

Containerd also provides APIs that can be used to interact with it. containerd’s command line clients are ctr and nerdctl.

Using ctr, you can:

- Pull a container image.

- List all images.

- Run a container.

- List running containers.

- Stop a container.

These operations are similar to the way users interact with Docker.
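As a hedged illustration of these operations, the Python below lays out one plausible ctr command sequence. It is a dry run that only prints commands and does not talk to containerd. The image reference and container ID are hypothetical. ctr expects fully qualified image references, and the commands generally need root.

```python
import shlex
from typing import List

IMAGE = "docker.io/library/redis:alpine"  # hypothetical example image
CONTAINER_ID = "redis-demo"               # hypothetical container ID


def ctr_walkthrough(image: str = IMAGE, cid: str = CONTAINER_ID) -> List[List[str]]:
    """argv lists for pull / list / run / list tasks / stop with containerd's ctr."""
    return [
        ["ctr", "images", "pull", image],      # pull the image
        ["ctr", "images", "ls"],               # list all images
        ["ctr", "run", "-d", image, cid],      # create and start a container in the background
        ["ctr", "tasks", "ls"],                # list running tasks (container processes)
        ["ctr", "tasks", "kill", cid],         # stop it (SIGTERM by default)
        ["ctr", "tasks", "delete", cid],       # remove the task once it has exited
        ["ctr", "containers", "delete", cid],  # remove the container metadata
    ]


if __name__ == "__main__":
    for argv in ctr_walkthrough():
        print("sudo " + shlex.join(argv))
```

Note that ctr works in the "default" containerd namespace unless told otherwise; containers created by Kubernetes live in the k8s.io namespace and are visible with ctr -n k8s.io.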

rkt(deprecated)

rkt is a runtime with both low-level and high-level functionality. Much like Docker, for example, rkt allows you to build container images, fetch and manage container images in your local repository, and run them with a single command.

Kubernetes CRI

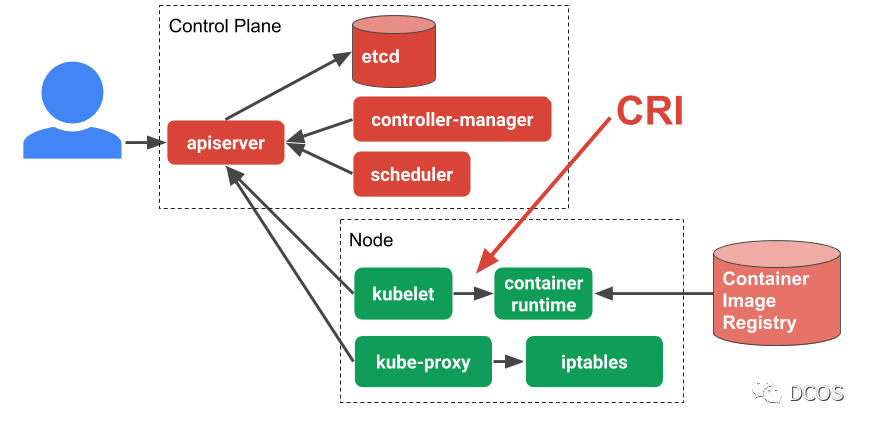

CRI was introduced in Kubernetes 1.5 as a bridge between the kubelet and container runtimes. High-level container runtimes that integrate with Kubernetes are expected to implement CRI. Such a runtime manages images, supports Kubernetes pods, and manages containers, so by definition a runtime that supports CRI must be a high-level runtime; low-level runtimes lack these features.

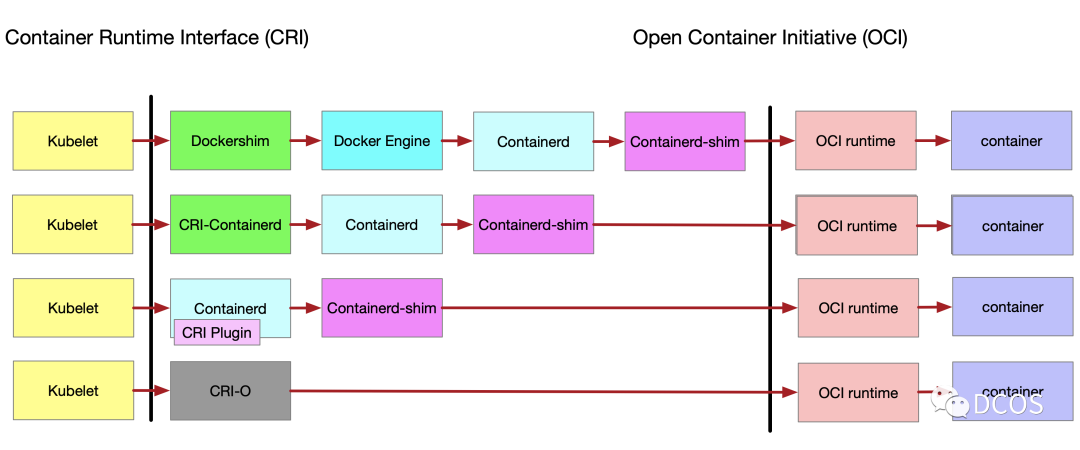

To understand CRI further, take a look at the overall Kubernetes architecture. The kubelet is the agent that runs on every worker node of a Kubernetes cluster and is responsible for managing that node’s workloads. When a workload needs to run, the kubelet communicates with the runtime through CRI. As you can see, CRI is just an abstraction layer that makes it possible to switch between different container runtimes.

CRI Specification

CRI defines a gRPC API, which is specified in the cri-api directory of the Kubernetes repository. It defines several remote procedure calls (RPCs) and message types. These RPCs are used to manage workloads, for example “pull image” (ImageService.PullImage), “create pod” (RuntimeService.RunPodSandbox), “create container” (RuntimeService.CreateContainer), “start container” (RuntimeService.StartContainer), “stop container” (RuntimeService.StopContainer), and so on.

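For example, to start a new Pod via CRI (simplified here for space), the kubelet pulls the images it needs (ImageService.PullImage), creates the pod sandbox (RuntimeService.RunPodSandbox), creates each container in the sandbox (RuntimeService.CreateContainer), and then starts the containers (RuntimeService.StartContainer). The RunPodSandbox and CreateContainer RPCs return IDs in their responses, which are used in subsequent requests.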
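To make the ID hand-off concrete, the Python sketch below replaces the gRPC services with a hypothetical in-memory class (FakeCRI is not part of any Kubernetes library). Method names mirror the RPCs listed earlier, and the state names follow CRI's CONTAINER_CREATED, CONTAINER_RUNNING, and CONTAINER_EXITED states. Real requests and responses are protobuf messages with many more fields.

```python
import itertools
from typing import Dict, List


class FakeCRI:
    """In-memory stand-in for CRI's ImageService and RuntimeService."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.images: List[str] = []
        self.pods: Dict[str, str] = {}         # pod sandbox ID -> pod name
        self.containers: Dict[str, dict] = {}  # container ID -> record

    def pull_image(self, image: str) -> str:  # ImageService.PullImage
        if image not in self.images:
            self.images.append(image)
        return image

    def run_pod_sandbox(self, name: str) -> str:  # RuntimeService.RunPodSandbox
        pod_id = f"pod-{next(self._ids)}"
        self.pods[pod_id] = name
        return pod_id  # the caller must keep this ID for later requests

    def create_container(self, pod_id: str, name: str, image: str) -> str:  # RuntimeService.CreateContainer
        if pod_id not in self.pods:
            raise KeyError(f"unknown pod sandbox {pod_id}")
        if image not in self.images:
            raise KeyError(f"image {image} has not been pulled")
        cid = f"ctr-{next(self._ids)}"
        self.containers[cid] = {"pod": pod_id, "name": name, "image": image,
                                "state": "CONTAINER_CREATED"}
        return cid

    def start_container(self, cid: str) -> None:  # RuntimeService.StartContainer
        self.containers[cid]["state"] = "CONTAINER_RUNNING"

    def stop_container(self, cid: str) -> None:  # RuntimeService.StopContainer
        self.containers[cid]["state"] = "CONTAINER_EXITED"


if __name__ == "__main__":
    cri = FakeCRI()
    cri.pull_image("image1")
    cri.pull_image("image2")
    pod_id = cri.run_pod_sandbox("mypod")
    id1 = cri.create_container(pod_id, "container1", "image1")
    id2 = cri.create_container(pod_id, "container2", "image2")
    cri.start_container(id1)
    cri.start_container(id2)
    print(pod_id, id1, id2)  # prints pod-1 ctr-2 ctr-3
```

The point of the sketch is the ordering constraint: CreateContainer cannot be called until RunPodSandbox has returned a sandbox ID, and StartContainer needs the container ID returned by CreateContainer.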

The crictl tool can interact directly with a CRI runtime, which makes it useful for debugging and testing CRI implementations. The CRI endpoint that crictl connects to can be set in its configuration file (/etc/crictl.yaml by default) or specified on the command line.
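As a sketch assuming containerd's default socket, the helpers below render a minimal /etc/crictl.yaml and build the equivalent command line. The keys (runtime-endpoint, image-endpoint, timeout, debug) and the --runtime-endpoint and --image-endpoint flags belong to crictl; the helper functions themselves are hypothetical. For CRI-O, substitute unix:///var/run/crio/crio.sock.

```python
from typing import List

CONTAINERD_ENDPOINT = "unix:///run/containerd/containerd.sock"


def crictl_config(endpoint: str = CONTAINERD_ENDPOINT, timeout: int = 10, debug: bool = False) -> str:
    """Contents for /etc/crictl.yaml pointing crictl at a single CRI endpoint."""
    return "\n".join([
        f"runtime-endpoint: {endpoint}",  # RuntimeService calls go here
        f"image-endpoint: {endpoint}",    # ImageService calls go here
        f"timeout: {timeout}",            # seconds to wait when connecting
        f"debug: {'true' if debug else 'false'}",
    ]) + "\n"


def crictl_argv(endpoint: str, *subcommand: str) -> List[str]:
    """The same setting given on the command line, e.g. crictl_argv(ep, "ps")."""
    return ["crictl", "--runtime-endpoint", endpoint, "--image-endpoint", endpoint, *subcommand]


if __name__ == "__main__":
    print(crictl_config(), end="")
    print(" ".join(crictl_argv(CONTAINERD_ENDPOINT, "ps")))
```

The configuration file is convenient on a node that runs a single runtime; the flags are handier when switching between runtimes for a one-off debugging session.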

See the official documentation for more details on using crictl.

The following runtimes support CRI.

Containerd

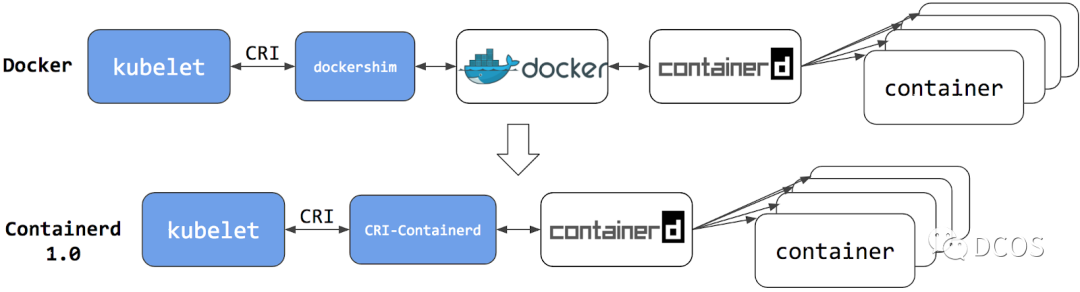

containerd is arguably the most popular CRI runtime available. It implements CRI as a plugin that is enabled by default, and it listens for requests on a Unix socket (/run/containerd/containerd.sock by default).

Starting with version 1.2, containerd supports a variety of low-level runtimes via runtime handlers. The runtime handler is passed in a field of the CRI request, and based on that handler, containerd runs the corresponding shim to start the container. This can be used to run containers with runc as well as with other low-level runtimes such as gVisor and Kata Containers. The runtime is selected in the Kubernetes API via RuntimeClass.
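Wiring this up touches two places. The Python sketch below (helper names are hypothetical) builds a RuntimeClass object whose handler names a runtime registered in containerd's CRI plugin configuration, plus the matching TOML section. The section path shown is the one used by containerd 1.x with configuration version 2; containerd 2.x moved the CRI runtime settings under a different plugin name, so check the documentation for your version. The shim types are those documented for runc, gVisor, and Kata Containers.

```python
# Handler name -> containerd shim (runtime_type); the handler names are our choice.
SHIM_TYPES = {
    "runc": "io.containerd.runc.v2",
    "runsc": "io.containerd.runsc.v1",  # gVisor
    "kata": "io.containerd.kata.v2",    # Kata Containers
}


def runtime_class(name: str, handler: str) -> dict:
    """Kubernetes RuntimeClass object; `handler` must match a containerd runtime name."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def containerd_runtime_section(handler: str) -> str:
    """containerd 1.x (config version 2) CRI plugin entry registering `handler`."""
    return (
        f'[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.{handler}]\n'
        f'  runtime_type = "{SHIM_TYPES[handler]}"\n'
    )


if __name__ == "__main__":
    print(runtime_class("gvisor", "runsc"))
    print(containerd_runtime_section("runsc"), end="")
```

A pod then opts in by setting spec.runtimeClassName to gvisor; the kubelet passes the handler (runsc) to containerd with the RunPodSandbox request.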

The following diagram shows the history of Containerd.

Docker

dockershim was originally developed by the Kubernetes community as a shim between the kubelet and Docker. Since Docker has split many of its features out into containerd, CRI is now supported through containerd, which is installed along with any modern version of Docker. With dockershim officially deprecated (and removed in Kubernetes 1.24), it is time to consider the related migration work; Kubernetes has done a lot of work in this area, as described in the official documentation.

CRI-O

CRI-O is a lightweight CRI runtime that supports OCI-compatible runtimes and provides image management, container process management, monitoring, logging, and resource isolation.

By default, CRI-O listens on the Unix socket /var/run/crio/crio.sock.
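Since each CRI runtime listens on its own Unix socket, one quick way to see which runtime is present on a node is to probe well-known paths. The sketch below is hypothetical (crictl has its own built-in list of default endpoints). The candidate paths are the containerd and CRI-O defaults mentioned in this article, and distributions may configure others. On most systems /var/run is a symlink to /run, so either spelling works.

```python
import os
import stat
from typing import Iterable, Optional

# Default sockets mentioned above; other setups may use different paths.
DEFAULT_ENDPOINTS = (
    "/run/containerd/containerd.sock",
    "/var/run/crio/crio.sock",
)


def find_cri_socket(candidates: Iterable[str] = DEFAULT_ENDPOINTS) -> Optional[str]:
    """Return the first candidate that exists and is a Unix socket, as a unix:// URL."""
    for path in candidates:
        try:
            mode = os.stat(path).st_mode
        except OSError:
            continue  # missing or unreadable: try the next candidate
        if stat.S_ISSOCK(mode):
            return "unix://" + path
    return None


if __name__ == "__main__":
    print(find_cri_socket() or "no CRI socket found")
```

The returned URL has the same form that crictl's runtime-endpoint setting expects.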

The following chart shows the evolution of the CRI plug-in.