After Knative is deployed, the created components can be seen under the knative-serving namespace.


Create a Knative service

First, create a Knative Service for testing from a YAML manifest.
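A minimal sketch of such a manifest, built as a Python dict and printed as JSON (which kubectl accepts as well as YAML). The API version `serving.knative.dev/v1`, the sample image name, and the `TARGET` environment variable are assumptions for illustration, not values taken from the cluster described here.

```python
import json


def helloworld_service(name="helloworld-go", namespace="default",
                       image="gcr.io/knative-samples/helloworld-go"):
    """Build a minimal Knative Service manifest as a plain dict."""
    return {
        # Assumption: the cluster serves the v1 Serving API.
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "template": {
                "spec": {
                    "containers": [
                        {
                            # Assumption: sample image, replace with your own.
                            "image": image,
                            "env": [{"name": "TARGET", "value": "Go Sample v1"}],
                        }
                    ]
                }
            }
        },
    }


if __name__ == "__main__":
    # Example: python service.py | kubectl apply -f -
    print(json.dumps(helloworld_service(), indent=2))
```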


After creation, verify Knative's KPA (Knative Pod Autoscaler) behavior. Creating the helloworld-go service starts one pod instance; if the pod receives no requests for a long time, it is destroyed. First, verify the cold-start scenario: once all instances of the service have been destroyed, requesting the helloworld-go URL causes an instance to be started before the request is served.

The k8s cluster built with minikube does not have a LoadBalancer, so the service is accessed through a NodePort, whose port 31046 is forwarded to port 8080 of the backend instance. When traffic reaches the helloworld-go service while it has no instances, the activator detects the request and triggers a scale-up of the helloworld-go Deployment, setting its replicas to 1. The Deployment then starts a pod instance, which is slow because the pod is starting for the first time.

In a production environment, it is usually unacceptable to start the pod only after traffic has arrived. In general, an identical pod instance or a lower-configured instance is kept running to avoid slow responses or lost traffic during cold starts.
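One way to keep an instance warm in Knative is a minimum-scale annotation on the revision template. The sketch below assumes the annotation key `autoscaling.knative.dev/minScale` (some Knative versions spell it `min-scale`) and uses a hypothetical, stripped-down manifest; it only shows where the annotation goes.

```python
import copy

# Assumption: annotation key as used by the Knative version in this setup.
MIN_SCALE_ANNOTATION = "autoscaling.knative.dev/minScale"


def with_min_scale(service, min_scale):
    """Return a copy of a Knative Service manifest that keeps at least
    `min_scale` pods running, leaving the input untouched."""
    patched = copy.deepcopy(service)
    template = patched["spec"]["template"]
    annotations = template.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[MIN_SCALE_ANNOTATION] = str(min_scale)  # annotation values are strings
    return patched


# Hypothetical manifest used only for illustration.
service = {
    "apiVersion": "serving.knative.dev/v1",
    "kind": "Service",
    "metadata": {"name": "helloworld-go"},
    "spec": {"template": {"spec": {"containers": [{"image": "example/helloworld-go"}]}}},
}
warm_service = with_min_scale(service, 1)
```

With a minimum of 1, the service would no longer scale to zero, trading idle resource usage for the absence of cold starts.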

Once an instance exists, the whole request completes very quickly when the service is accessed again.
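The difference between the first (cold) and second (warm) request can be measured with a small script. This is a sketch under assumptions: the node IP is hypothetical, the host name assumes the default `example.com` domain and the `default` namespace, and the ingress is assumed to route on the HTTP `Host` header.

```python
import time
import urllib.request


def build_request(node_ip, node_port, host):
    """Build a request to the ingress NodePort; the Host header selects the service."""
    url = f"http://{node_ip}:{node_port}/"
    return urllib.request.Request(url, headers={"Host": host})


def timed_get(request, timeout=60):
    """Send the request and return (HTTP status, elapsed seconds)."""
    start = time.monotonic()
    with urllib.request.urlopen(request, timeout=timeout) as response:
        response.read()
        status = response.status
    return status, time.monotonic() - start


def compare_cold_and_warm(request):
    """Issue the same request twice and print how long each call took."""
    for label in ("cold", "warm"):
        status, seconds = timed_get(request)
        print(f"{label}: HTTP {status} in {seconds:.2f}s")
```

For example, `compare_cold_and_warm(build_request("192.168.64.2", 31046, "helloworld-go.default.example.com"))`, where `192.168.64.2` stands in for the minikube node IP.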

Knative Serving components

There are 6 main Serving components. Five of them run in the knative-serving namespace: controller, webhook, autoscaler, autoscaler-hpa, and activator. The sixth is the queue (the queue-proxy container), which runs in every application pod as a sidecar.

- Controller: Manages the entire lifecycle of the workload, including CRUD operations on Configuration, Route, Revision, and related objects. It is a controller that updates the state of the cluster based on user input.

- Webhook: Mainly responsible for validating parameters when resources are created and updated.

- Activator: Handles requests for applications that have been scaled to 0. It intercepts the user request, notifies the autoscaler to start an instance of the application, and forwards the request once the instance is up.

- Autoscaler: Scales the application up and down according to its request concurrency.

- Queue: Intercepts requests forwarded to the Pod and records metrics such as the Pod's request concurrency. The autoscaler reads this data from the queue to scale the application up and down.

- Autoscaler-hpa: Handles scaling for revisions that are configured to use the Kubernetes Horizontal Pod Autoscaler (HPA) instead of the KPA.

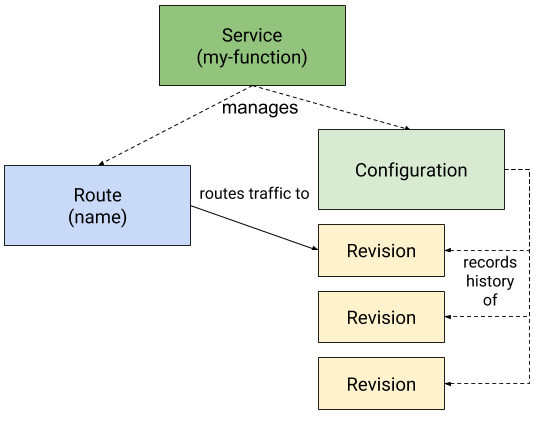

Knative manages all of an application's capabilities through a single CRD resource, Service. This is not the native Kubernetes Service that users access; it is a Knative custom resource that manages the entire lifecycle of the Knative application.

- Service: The service.serving.knative.dev resource manages the entire lifecycle of the workload. It controls the creation of the other objects (Route, Configuration, Revision) and ensures that every update to the Service is applied to them.

- Route: The route.serving.knative.dev resource maps a network endpoint to one or more Revisions. Configuring a Route enables a variety of traffic management methods, including fractional traffic splits and named routes.

- Configuration: The configuration.serving.knative.dev resource maintains the state required for deployment. It provides a clear separation between code and configuration and follows the Twelve-Factor App methodology. Modifying a Configuration creates a new Revision.

- Revision: The revision.serving.knative.dev resource is a point-in-time snapshot of the code and configuration for each modification made to the workload. Revisions are immutable objects that can be retained for as long as they are useful. A Revision can be scaled up or down automatically based on incoming traffic.
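To illustrate how a Route can split traffic across Revisions by percentage, here is a simplified sketch. The revision names and the 90/10 split are hypothetical, and the real routing is done by the networking layer, not by code like this.

```python
import bisect
import itertools
import random


def pick_revision(traffic, roll):
    """Pick the revision that should receive one request.

    traffic: list of (revision_name, percent) pairs whose percents sum to 100.
    roll:    a number in [0, 100), for example random.random() * 100.
    """
    names = [name for name, _ in traffic]
    # Cumulative upper bounds, e.g. [90, 100] for a 90/10 split.
    bounds = list(itertools.accumulate(percent for _, percent in traffic))
    if bounds[-1] != 100:
        raise ValueError("traffic percentages must sum to 100")
    return names[bisect.bisect_right(bounds, roll)]


# Hypothetical split: 90% to the old revision, 10% to the new one.
traffic = [("helloworld-go-00001", 90), ("helloworld-go-00002", 10)]
chosen = pick_revision(traffic, random.random() * 100)
```

Passing the roll in as an argument keeps the selection deterministic and easy to check; only the caller decides where the randomness comes from.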

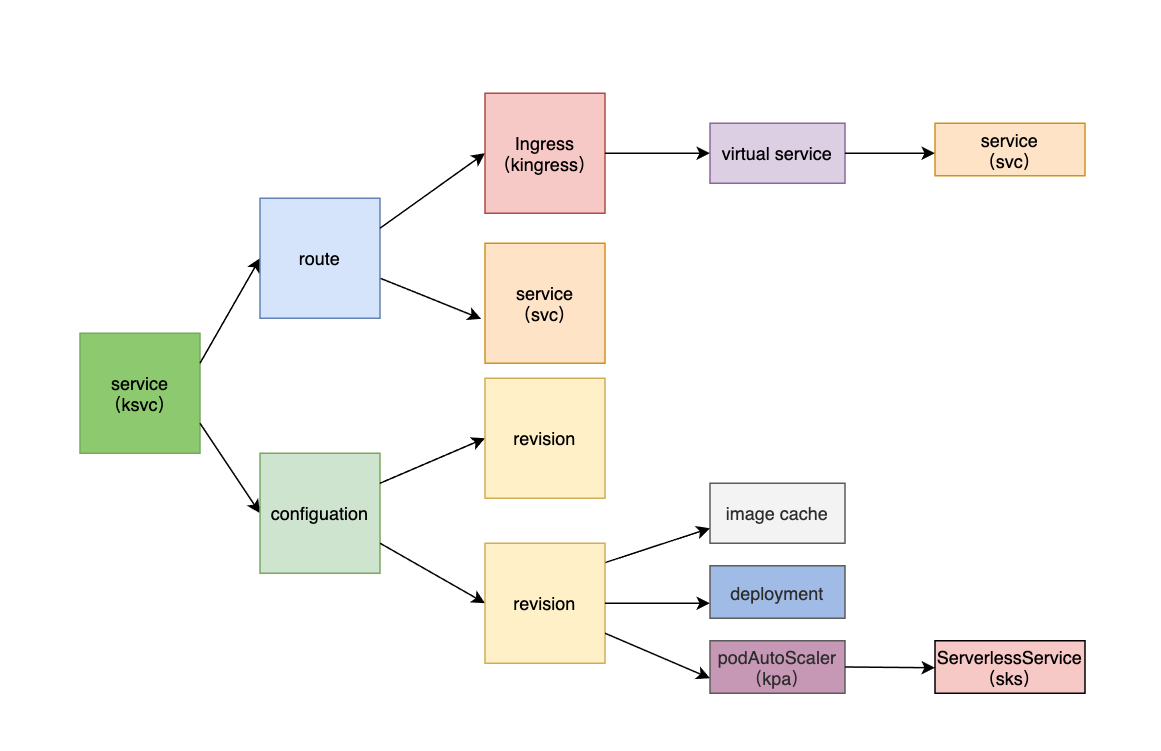

All resources associated with Serving are shown in the figure below.

- A Revision creates imageCache, Deployment, KPA, and SKS (ServerlessService) resources. The Deployment runs the service, the KPA scales that Deployment according to request concurrency, and the ServerlessService provides a service through which the revision can be reached from both inside and outside the cluster. The image cache mainly addresses slow image pulls during cold starts.

- A Route creates Service (svc), KIngress, and VirtualService resources, which provide access between services and from outside the cluster.

Automatic scaling up and down

1->n: Every request to the application is intercepted by the Queue after it enters the Pod. The Queue counts the Pod's current concurrency and exposes it through a metrics endpoint. The autoscaler reads these metrics, and when scaling is needed it changes the number of instances of the Deployment under the Revision.
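The core of concurrency-based scaling can be sketched as a single calculation: divide the total observed concurrency by a per-pod target and round up. This is a simplification; the real autoscaler averages metrics over time windows, and the parameter names and target values here are hypothetical.

```python
import math


def desired_pods(pod_concurrency, target_per_pod, min_pods=0, max_pods=None):
    """Compute how many pods are needed for the observed load.

    pod_concurrency: in-flight request counts, one entry per pod,
                     as reported by each pod's queue sidecar.
    target_per_pod:  concurrency each pod is expected to handle.
    """
    total = sum(pod_concurrency)
    wanted = math.ceil(total / target_per_pod)
    wanted = max(wanted, min_pods)
    if max_pods is not None:
        wanted = min(wanted, max_pods)
    return wanted


# Two pods handling 8 and 12 requests with a target of 10 per pod: stay at 2.
steady = desired_pods([8, 12], target_per_pod=10)
# Three pods each handling 30 requests: scale out to 9.
overloaded = desired_pods([30, 30, 30], target_per_pod=10)
```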

0->1: When the application is not accessed for a long time, its instances are reduced to 0. From then on, requests to the application are forwarded to the activator, tagged with the Revision they target (the controller implements this by modifying the VirtualService). The activator receives the request, increments the Revision's concurrency by 1, and pushes the metric to the autoscaler, which starts the Pod. Meanwhile, the activator monitors the Revision's startup status and forwards the request to the corresponding Pod once the Revision has started normally.

After the Revision has started normally, requests to the application are no longer sent to the activator but go directly to the application's Pods (again implemented by the controller modifying the VirtualService).
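The 0->1 flow described above can be sketched as a toy simulation. All class and function names are hypothetical, pod startup is treated as instantaneous, and `route` stands in for the VirtualService that decides whether traffic goes to the activator or straight to the Pods.

```python
from collections import deque


class Revision:
    def __init__(self, name):
        self.name = name
        self.replicas = 0
        self.handled = []  # requests served by this revision's pods

    @property
    def ready(self):
        return self.replicas > 0

    def serve(self, request):
        self.handled.append(request)
        return f"{self.name} served {request}"


class Autoscaler:
    def report_concurrency(self, revision, concurrency):
        # A non-zero metric for a revision with no pods triggers a scale-up to 1.
        if concurrency > 0 and revision.replicas == 0:
            revision.replicas = 1


class Activator:
    def __init__(self, autoscaler):
        self.autoscaler = autoscaler
        self.pending = deque()  # requests buffered while the revision starts

    def handle(self, revision, request):
        self.pending.append(request)
        self.autoscaler.report_concurrency(revision, len(self.pending))
        responses = []
        # Forward buffered requests once the revision is ready.
        while revision.ready and self.pending:
            responses.append(revision.serve(self.pending.popleft()))
        return responses


def route(revision, activator, request):
    """Send traffic to the activator only while the revision has no pods."""
    if revision.ready:
        return [revision.serve(request)]
    return activator.handle(revision, request)
```

The first call to `route` goes through the activator and scales the revision to one replica; later calls bypass the activator entirely.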

A load test with hey on macOS shows that the number of instances of the service increases as the number of requests grows.


Knative network mode

Knative currently uses Istio as its default networking layer, but it does not depend strongly on Istio; Ambassador, Contour, Gloo, Kourier, and others can be used instead. The network model has two parts: access between services and external access.

Access between services:

- Istio resolves the VirtualService of the Knative service and pushes the resulting configuration to the Envoy sidecar of each pod. When applications call each other by domain name, Envoy intercepts the request and forwards it directly to the corresponding pod.

External access:

- If the request comes from outside the cluster, the default entry point is the ingressgateway, which forwards the request to the application based on the requested domain. In the example above, on the locally built k8s cluster, the NodePort of the ingressgateway is accessed and the ingressgateway forwards the request to the backend service.

- If the request comes from a cluster node, each Knative service corresponds to a k8s Service whose backends are all the ingressgateway, which forwards the request to the application based on the requested domain.

Knative generates a domain name for each revision for external access. The default primary domain is example.com, and every domain generated for a Knative service is a subdomain of it. The default domain can be changed by modifying the config-domain ConfigMap in the knative-serving namespace.
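As a sketch of the naming scheme, assuming the default template of name, namespace, and domain joined by dots, the host for a service and the data needed to switch the default domain could be computed like this. The function names and the domain `mydomain.test` are hypothetical.

```python
import json


def service_host(name, namespace, domain="example.com"):
    """Host name under which a Knative service is exposed.

    Assumes the default domain template: <name>.<namespace>.<domain>.
    """
    return f"{name}.{namespace}.{domain}"


def config_domain_patch(domain):
    """Merge-patch body that makes `domain` the default domain.

    Assumes the config-domain ConfigMap layout, where each key is a domain
    and an empty value means "use for all services".
    """
    return {"data": {domain: ""}}


default_host = service_host("helloworld-go", "default")
custom_host = service_host("helloworld-go", "default", domain="mydomain.test")
patch_body = json.dumps(config_domain_patch("mydomain.test"))
```

The JSON in `patch_body` has the shape expected by a merge patch against the config-domain ConfigMap in the knative-serving namespace.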
