In our daily work, applications are usually deployed as containers on Kubernetes, but problems keep coming up. A typical one: the JVM heap is set smaller than the memory limit of the Docker container and of the Kubernetes Pod, yet the container is still OOMKilled.

Exit Code 137

- Indicates that the container received a SIGKILL signal and the process was killed, the equivalent of kill -9. The SIGKILL can come from docker kill, issued either manually by a user or by the Docker daemon.
- 137 is most commonly seen when the Pod's memory limit is set too small: the process runs out of memory and is OOMKilled. In that case the “OOMKilled” field in the container state is true, and the OOM log can be seen with dmesg -T on the host.
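Exit codes above 128 conventionally mean "killed by signal (code - 128)", so 137 = 128 + 9, and signal 9 is SIGKILL. A minimal Java sketch of that decoding (the class and method names are illustrative, not part of any API):

```java
// Sketch: decode a container exit code using the usual Unix convention
// that a process killed by a signal reports 128 + signal number.
public class ExitCodeDecoder {
    static final int SIGKILL = 9;

    /** Returns the signal number if the exit code encodes a signal death, else -1. */
    public static int signalOf(int exitCode) {
        return (exitCode > 128 && exitCode < 256) ? exitCode - 128 : -1;
    }

    public static void main(String[] args) {
        int code = 137;
        int sig = signalOf(code);
        System.out.println("exit " + code + " -> signal " + sig
                + (sig == SIGKILL ? " (SIGKILL, i.e. kill -9)" : ""));
    }
}
```

Running it prints exit 137 -> signal 9 (SIGKILL, i.e. kill -9).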

Why am I OOMKilled even though I have set the size relationship correctly?

Why do I get OOMKilled even though my heap size is definitely smaller than the memory limit of the Docker container and the Pod?

Cause Analysis

This problem typically occurs when the JVM runs in a container with a JDK older than 8u131 or JDK 9, or without the container-awareness flags described below: by default, the JVM sizes its memory areas (heap, direct memory and thread stacks) based on the host node's memory rather than on the container's limit.

For example, on my machine:

The output above looks contradictory: the container was started with a 100MB memory limit, yet -XshowSettings:vm reports that the JVM's maximum heap size will be 444M. If the JVM actually allocates memory up to that size, it will exceed the container limit and is likely to be killed at some point.
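The same discrepancy can also be checked from inside a Java program. The sketch below (class name illustrative) prints the maximum heap from Runtime.maxMemory() and the physical memory reported by the HotSpot-specific com.sun.management.OperatingSystemMXBean. On a JVM without container awareness, the second figure is expected to be the host's RAM rather than the container limit.

```java
import java.lang.management.ManagementFactory;

// Sketch: print the memory budget the JVM believes it has.
public class JvmMemoryView {
    public static long maxHeapBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    /** Physical memory as seen by the JVM, or -1 if the HotSpot extension is unavailable. */
    public static long physicalMemoryBytes() {
        java.lang.management.OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        if (os instanceof com.sun.management.OperatingSystemMXBean) {
            // HotSpot-specific; deprecated in newer JDKs in favor of getTotalMemorySize()
            return ((com.sun.management.OperatingSystemMXBean) os).getTotalPhysicalMemorySize();
        }
        return -1;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        System.out.println("Max heap (MB):        " + maxHeapBytes() / mb);
        System.out.println("Physical memory (MB): " + physicalMemoryBytes() / mb);
    }
}
```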

How to fix this problem

JVM sensing cgroup limits

One way to solve the JVM memory overrun problem is to let the JVM automatically detect the cgroup limits of the Docker container and size the heap accordingly. JDK 8u131 and JDK 9 added a feature that lets the JVM detect how much memory is available when running in a Docker container. For the JVM to size its heap according to the container limit, the flags -XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap must be set.

Note: what does the JVM do if these two flags are set together with -Xms and -Xmx? The -Xmx flag overrides the -XX:+UseCGroupMemoryLimitForHeap flag.

Summary

- The -XX:+UseCGroupMemoryLimitForHeap flag lets the JVM derive the maximum heap size from the container's memory limit.
- The -Xmx flag sets the maximum heap size to a fixed value.
- Besides the heap, the JVM uses additional memory for non-heap areas and its own internal structures.
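To check whether the heap ceiling of a running JVM comes from -Xmx or from the container-aware flag, a program can inspect its own launch flags. This sketch assumes the flags are visible through RuntimeMXBean.getInputArguments() and treats -XX:MaxHeapSize= as equivalent to -Xmx; the class and method names are illustrative.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Sketch: report whether the heap ceiling was pinned with -Xmx
// (which takes precedence over -XX:+UseCGroupMemoryLimitForHeap).
public class HeapSizingSource {
    public static boolean hasExplicitXmx(List<String> jvmArgs) {
        for (String arg : jvmArgs) {
            // -XX:MaxHeapSize=... is the long form of -Xmx
            if (arg.startsWith("-Xmx") || arg.startsWith("-XX:MaxHeapSize=")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("JVM args: " + jvmArgs);
        System.out.println(hasExplicitXmx(jvmArgs)
                ? "Heap ceiling fixed by -Xmx"
                : "Heap ceiling chosen by the JVM (ergonomics / container support)");
    }
}
```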

Experimenting with JDK9’s container-aware mechanism

With container awareness enabled, the JVM detects that the container has only 100MB and sets the maximum heap to 44M. Let's change the memory limit to see whether the heap size follows it, as shown below.

Here the container has 1GB of memory and the JVM uses 228M as its maximum heap. Since no process other than the JVM runs in the container, can we give the heap a larger share?

On older versions you can use the -XX:MaxRAMFraction parameter, which tells the JVM to use 1/MaxRAMFraction of the available memory as the maximum heap (the default is 4, i.e. one quarter). With -XX:MaxRAMFraction=1, almost all available memory is used as the maximum heap; as the results above show, the maximum heap can then reach 910.50M.
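The arithmetic behind MaxRAMFraction is a simple division. The sketch below (illustrative names) computes the theoretical ceiling for a 1GB container; the figures the JVM actually reports, such as the 228M and 910.50M above, come out lower because the JVM applies further adjustments of its own.

```java
// Sketch: theoretical heap ceiling for -XX:MaxRAMFraction=n,
// i.e. available memory / n, before any JVM-internal adjustments.
public class RamFraction {
    static final long MB = 1024L * 1024L;

    public static long approxMaxHeap(long availableBytes, int maxRamFraction) {
        if (maxRamFraction < 1) {
            throw new IllegalArgumentException("MaxRAMFraction must be >= 1");
        }
        return availableBytes / maxRamFraction;
    }

    public static void main(String[] args) {
        long container = 1024 * MB; // 1GB container limit from the example
        for (int f : new int[] {4, 2, 1}) {
            System.out.println("MaxRAMFraction=" + f + " -> ~" + approxMaxHeap(container, f) / MB + "M");
        }
    }
}
```

For a 1GB container this prints ~256M, ~512M and ~1024M for fractions 4, 2 and 1.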

Problem Analysis

- If the maximum heap takes up all of the container memory, can the process still be killed because of other memory areas (e.g. “metaspace”)?

Answer: MaxRAMFraction=1 will still leave some space for other non-heap memory.

However, this is risky if the application uses off-heap memory, since almost all container memory is given to the heap. In that case you should set -XX:MaxRAMFraction=2, so that the heap uses only 50% of the container memory, or set -Xmx explicitly.

Container internal awareness of CGroup resource limits

Starting with Docker 1.7, the container's cgroup information is mounted into the container, so applications can read memory, CPU and other limits from files such as /sys/fs/cgroup/memory/memory.limit_in_bytes and use them to set parameters like -Xmx and -XX:ParallelGCThreads correctly in the container's startup command.
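A launcher can read that file itself. The sketch below is written in Java to match the rest of this article (in practice this is often done in a shell entrypoint). It reads the cgroup v1 path quoted above and additionally tries /sys/fs/cgroup/memory.max, the cgroup v2 equivalent. The "very large value means unlimited" threshold and the 75% heap policy are assumptions for illustration.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Sketch: read the container memory limit and derive a suggested -Xmx.
public class CgroupMemoryLimit {
    // Assumption: cgroup v1 reports "no limit" as a number close to Long.MAX_VALUE
    static final long UNLIMITED_THRESHOLD = Long.MAX_VALUE / 2;

    /** Parses the content of a cgroup limit file; returns -1 if no limit is set. */
    public static long parseLimit(String content) {
        String s = content.trim();
        if (s.equals("max")) {
            return -1; // cgroup v2 spelling of "unlimited"
        }
        long value = Long.parseLong(s);
        return value >= UNLIMITED_THRESHOLD ? -1 : value;
    }

    /** Returns the limit in bytes, or -1 if no limit file is found or no limit is set. */
    public static long readLimit() throws IOException {
        Path[] candidates = {
            Paths.get("/sys/fs/cgroup/memory/memory.limit_in_bytes"), // cgroup v1
            Paths.get("/sys/fs/cgroup/memory.max")                    // cgroup v2
        };
        for (Path p : candidates) {
            if (Files.isReadable(p)) {
                return parseLimit(new String(Files.readAllBytes(p), StandardCharsets.UTF_8));
            }
        }
        return -1;
    }

    public static void main(String[] args) throws IOException {
        long limit = readLimit();
        if (limit < 0) {
            System.out.println("No cgroup memory limit detected");
        } else {
            // Example policy (an assumption, not a rule): give the heap 75% of the limit
            long xmxMb = limit * 75 / 100 / (1024 * 1024);
            System.out.println("Container limit: " + limit + " bytes, suggested -Xmx" + xmxMb + "m");
        }
    }
}
```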

Improved container integration in Java10

- Java 10 improved container integration: the JVM detects the container memory limit correctly without any additional flags and by default uses 1/4 of the container memory for the heap. The older -XX:MaxRAMFraction flag is deprecated in Java 10+.

- Instead of the old MaxRAMFraction, Java 10+ offers the new flag MaxRAMPercentage (e.g. -XX:MaxRAMPercentage=75) to size the heap more precisely relative to the rest of the process (stacks, native memory, ...).
- The UseContainerSupport option exists on Java 10+ and is enabled by default, so there is no need to set it. UseCGroupMemoryLimitForHeap is deprecated and should no longer be used. The parameters -XX:InitialRAMPercentage, -XX:MaxRAMPercentage and -XX:MinRAMPercentage give finer-grained control over the share of memory the JVM uses.
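On HotSpot, the effective values of these flags can be read at runtime through com.sun.management.HotSpotDiagnosticMXBean. A minimal sketch (class name illustrative): getVMOption throws IllegalArgumentException for a flag the running JVM does not recognize, which the sketch reports as not supported.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Sketch: print container-related HotSpot flags of the running JVM.
public class ContainerFlags {
    /** Returns the flag's current value, or null if this JVM does not know the flag. */
    public static String optionValue(String name) {
        HotSpotDiagnosticMXBean bean = ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        try {
            return bean.getVMOption(name).getValue();
        } catch (IllegalArgumentException unknownFlag) {
            return null;
        }
    }

    public static void main(String[] args) {
        String[] flags = {"UseContainerSupport", "MaxRAMPercentage",
                          "InitialRAMPercentage", "MinRAMPercentage"};
        for (String f : flags) {
            String v = optionValue(f);
            System.out.println(f + " = " + (v == null ? "(not supported by this JVM)" : v));
        }
    }
}
```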

A running Java program may also launch external processes or request native memory. So even when a Java program runs in a container, you still have to reserve some memory for the rest of the process and the system, which means -XX:MaxRAMPercentage should not be set too high. It is of course still possible to use -XX:MaxRAMFraction=1 to give almost all of the container's memory to the heap, with the risks described above.
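To see how much room a given MaxRAMPercentage leaves for everything outside the heap, the arithmetic can be sketched as follows. It ignores the JVM's own rounding and alignment, and the 1GB container size is only an example.

```java
// Sketch: heap size for a given -XX:MaxRAMPercentage and the headroom left
// for non-heap memory (metaspace, thread stacks, direct buffers, native code).
public class RamPercentage {
    static final long MB = 1024L * 1024L;

    public static long heapBytes(long containerBytes, double maxRamPercentage) {
        return (long) (containerBytes * (maxRamPercentage / 100.0));
    }

    public static void main(String[] args) {
        long container = 1024 * MB; // 1GB container limit
        for (double pct : new double[] {25.0, 50.0, 75.0, 100.0}) {
            long heap = heapBytes(container, pct);
            System.out.printf("MaxRAMPercentage=%.0f -> heap ~%dM, non-heap headroom ~%dM%n",
                    pct, heap / MB, (container - heap) / MB);
        }
    }
}
```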

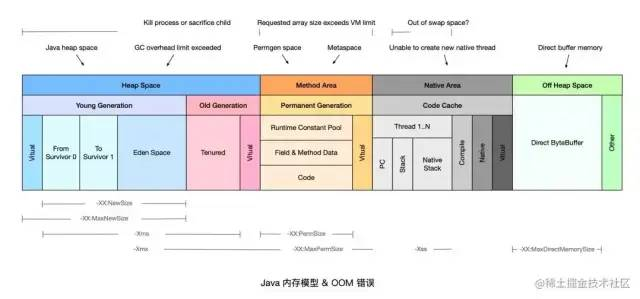

So far we have seen how to control the relationship between heap size and container memory, so that the JVM heap does not exceed the container limit and get the container OOMKilled. However, a JVM process contains more than heap memory: other memory areas can also push the container over its limit, and we need to account for them as well, as the diagram illustrates.

Next, we analyse the memory outside the heap (off-heap space), in particular direct buffer memory. It is requested mainly through Unsafe, and in most cases via DirectByteBuffer. So without further ado, let’s get to the point.

JVM parameter MaxDirectMemorySize

Let’s first examine the JVM’s -XX:MaxDirectMemorySize, which limits the space that DirectByteBuffer can allocate. If this parameter is not explicitly specified when starting the JVM, the default corresponds to -Xmx (in older versions, minus the size of one survivor space).

A DirectByteBuffer is a typical “iceberg object”: only a small object sits in the heap, but it is attached to a much larger block of off-heap memory. If such objects leak, a large amount of memory can easily be held without being freed.


-XX:MaxDirectMemorySize=size sets the maximum total size of New I/O (java.nio) direct-buffer allocations. The size may carry a k/K, m/M or g/G unit suffix. If the parameter is not set, it defaults to 0, meaning that the JVM selects the maximum size for NIO direct-buffer allocations automatically.
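The unit suffixes are binary multiples (1k = 1024 bytes). The illustrative helper below, which is not the JVM's own parser, shows how a value such as 64m translates into bytes:

```java
// Sketch: convert a JVM-style size value (optional k/K, m/M, g/G suffix) to bytes.
public class JvmSize {
    public static long parse(String size) {
        String s = size.trim();
        if (s.isEmpty()) {
            throw new IllegalArgumentException("empty size");
        }
        char unit = Character.toLowerCase(s.charAt(s.length() - 1));
        long multiplier;
        switch (unit) {
            case 'k': multiplier = 1024L; break;
            case 'm': multiplier = 1024L * 1024L; break;
            case 'g': multiplier = 1024L * 1024L * 1024L; break;
            default:  multiplier = 1L; // no suffix: plain bytes
        }
        String digits = multiplier == 1L ? s : s.substring(0, s.length() - 1);
        // multiplyExact throws instead of silently overflowing
        return Math.multiplyExact(Long.parseLong(digits), multiplier);
    }

    public static void main(String[] args) {
        System.out.println(parse("64m")); // 67108864
        System.out.println(parse("1G"));  // 1073741824
    }
}
```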

What is the default value of -XX:MaxDirectMemorySize?

In sun.misc.VM, it is Runtime.getRuntime().maxMemory(), i.e. the value configured with -Xmx. How is the JVM flag passed down to the Java side? Mainly through hotspot/share/prims/jvm.cpp. Let’s look at the source of jvm.cpp.


jvm.cpp contains a section of code that converts the -XX:MaxDirectMemorySize command-line flag into a property with the key sun.nio.MaxDirectMemorySize. The direct memory limit is then set and initialized from this property.


As you can see above, the field is initially set to an arbitrary 64MB, which is replaced during VM initialization. -XX:MaxDirectMemorySize is the VM parameter that configures the NIO direct memory limit, as this line of JVM code shows.

But what is the maximum amount of direct memory that can be requested by default if the flag is not configured? In that case the property value is -1, which is obviously not a valid limit, so the real default value must come from somewhere else.


As the source code above shows, the sun.nio.MaxDirectMemorySize property is read; if it is null, empty or -1, the limit is set to Runtime.getRuntime().maxMemory(). If MaxDirectMemorySize is set to a value greater than -1, that value is used as directMemory. The VM's maxDirectMemory() method then returns directMemory.
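The resolution rule just described can be restated as a small, testable function. This is a simplified re-implementation for illustration, not the JDK's code (the real logic lives in an internal VM class and runs once at startup); the 64MB fallback mirrors the arbitrary initial value mentioned above.

```java
// Sketch: how the direct-memory limit is resolved from the
// sun.nio.MaxDirectMemorySize property, per the description above.
public class DirectMemoryDefault {
    // The arbitrary initial value of the limit before initialization
    static final long INITIAL_DIRECT_MEMORY = 64L * 1024L * 1024L;

    /**
     * @param property  value of sun.nio.MaxDirectMemorySize (null if absent)
     * @param maxMemory value of Runtime.getRuntime().maxMemory()
     */
    public static long resolve(String property, long maxMemory) {
        if (property == null || property.isEmpty() || property.equals("-1")) {
            // Flag not given on the command line: fall back to the max heap size
            return maxMemory;
        }
        long value = Long.parseLong(property);
        // Only values greater than -1 replace the initial value
        return value > -1 ? value : INITIAL_DIRECT_MEMORY;
    }

    public static void main(String[] args) {
        long maxMemory = Runtime.getRuntime().maxMemory();
        System.out.println("flag unset  -> " + resolve("-1", maxMemory));
        System.out.println("flag = 64MB -> " + resolve("67108864", maxMemory));
    }
}
```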

Since the property is -1 when MaxDirectMemorySize is not explicitly set, the limit is derived from Runtime.getRuntime().maxMemory() while the Java class library is being initialized, as part of the initialization of java.lang.System, with the following logic.

And the implementation of Runtime.maxMemory() in the HotSpot VM is as follows.

This max_capacity() actually returns -Xmx minus the reserved size of a survivor space.

Conclusion

When MaxDirectMemorySize is not explicitly configured, the maximum amount of NIO direct memory that can be requested is -Xmx minus one survivor space. For example, if you configure -Xmx5g without -XX:MaxDirectMemorySize, the “default” MaxDirectMemorySize is also 5GB minus one survivor space, and the combined heap + direct memory usage of the application may grow to about 5 + 5 = 10 GB.
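Writing X_mx for the -Xmx value and S for one survivor space, the worst case in this example is as follows (metaspace, thread stacks and other native memory come on top):

$$
\text{MaxDirectMemorySize}_{\text{default}} = X_{mx} - S
$$

$$
M_{\text{heap}} + M_{\text{direct}} \le X_{mx} + (X_{mx} - S) = 2X_{mx} - S \approx 10\,\text{GB} \quad (X_{mx} = 5\,\text{GB})
$$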

Other API methods for getting the value of maxDirectMemory

BufferPoolMXBean, and the getMemoryUsed method of JavaNioAccess.BufferPool (obtained via SharedSecrets), can both report how much direct memory is in use. Since the module system was introduced in Java 9, SharedSecrets is no longer sun.misc.SharedSecrets but an internal class in the java.base module (jdk.internal.access.SharedSecrets in recent JDKs); use --add-exports java.base/jdk.internal.access=ALL-UNNAMED to export it to the unnamed module so that the code can run.
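The public route needs no --add-exports: java.lang.management exposes a BufferPoolMXBean named "direct". A minimal sketch (class name illustrative) that allocates one 8MB direct buffer and prints the pool's statistics:

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

// Sketch: read direct-buffer usage through the public BufferPoolMXBean API.
public class DirectBufferStats {
    public static BufferPoolMXBean directPool() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool;
            }
        }
        throw new IllegalStateException("no 'direct' buffer pool found");
    }

    public static void main(String[] args) {
        // Keep a reference so the buffer is not collected while we measure
        ByteBuffer buffer = ByteBuffer.allocateDirect(8 * 1024 * 1024);
        BufferPoolMXBean pool = directPool();
        System.out.println("direct buffers: count=" + pool.getCount()
                + ", capacity=" + pool.getTotalCapacity()
                + ", used=" + pool.getMemoryUsed()
                + " (holding " + buffer.capacity() + " bytes)");
    }
}
```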


Analysis of memory problems

-XX:+DisableExplicitGC vs. direct memory for NIO

- With the -XX:+DisableExplicitGC parameter, a call to System.gc() becomes a no-op and does not trigger any GC at all (although the overhead of the method call itself remains).
- This is the biggest problem we usually run into: young GCs reclaim unreachable DirectByteBuffer objects in the young generation together with their off-heap memory, but not DirectByteBuffer objects that have been promoted to the old generation. If many DirectByteBuffer objects are promoted to the old generation and no CMS GC or full GC ever runs, only young GCs, physical memory can slowly be exhausted while we have no idea what is happening, because the heap clearly still has plenty of free memory (given that System.gc() has been disabled).
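The effect can be sketched with a small allocation loop. The sketch relies on the fact that, in OpenJDK, java.nio.Bits.reserveMemory() calls System.gc() when the direct-memory budget is exhausted, so that unreachable buffers can be reclaimed; with -XX:+DisableExplicitGC that fallback does nothing. Whether a given run actually fails depends on GC timing, so the command line in the comment is an illustration, not a guaranteed reproduction; the class name is hypothetical.

```java
import java.nio.ByteBuffer;

// Sketch: allocate and immediately drop direct buffers in a loop.
// Each discarded DirectByteBuffer is a small heap object whose native memory
// is released only after a GC finds it unreachable. Example run:
//   java -XX:MaxDirectMemorySize=64m -XX:+DisableExplicitGC DirectChurn
// may fail with "OutOfMemoryError: Direct buffer memory" even though the heap
// is nearly empty, because the System.gc() fallback has been disabled.
public class DirectChurn {
    public static int churn(int iterations, int bufferBytes) {
        int completed = 0;
        for (int i = 0; i < iterations; i++) {
            ByteBuffer buf = ByteBuffer.allocateDirect(bufferBytes);
            buf.put(0, (byte) 1); // touch the buffer; the reference is dropped after this iteration
            completed++;
        }
        return completed;
    }

    public static void main(String[] args) {
        int done = churn(1_000, 1024 * 1024); // 1,000 x 1MB of short-lived direct allocations
        System.out.println("completed " + done + " allocations");
    }
}
```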