This article will start with the basics and teach you how to use Logging to record program logs with simple examples.

1. logging Quick start

1.1. Why not use Print?

Logging is an extremely important part of deploying a program to a production environment, and logging is useful for programs in three ways

- Status logging: for each point in time, it is important to record the status of the business operation at that time, and often you need to rely on this information to determine business problems

- Bug reproduction: Production environments are not equipped for single-step debugging, and bugs in production environments are extremely difficult to reproduce, so logging is the only and most effective means of reproducing bugs.

- Set levels: set different output levels for different modules to make the log files look more concise

logging is Python’s own logging library, which combines the power of logging with the flexibility to write to a file, print to the console or to a remote HTTP/FTP server, whether you want to do it all!

1.2. logging usage examples

To start with, you can see an official example, which will be used as the basis for the rest of the explanation, with some modifications to make it easier to run.

- demo

- main.py

- auxiliary_module.py

main.py

|

|

auxiliary_module.py

|

|

Run the Main.py file directly and get the following output.

|

|

It is recommended to take a closer look at the demo code, which actually covers the basics of logging, which has several built-in objects

- Logger: Logger object, if not actively initialised, will be a default Logger object when calling logging.info(‘hello world’)

- LogRecord, the logger, receives the logs passed by the Logger object and sends them to the corresponding Handler processor

- Handler: the core processor that outputs the generated logs to the specified location (i.e. determines whether the logs go to the output desk/HTTP server/local)

- Filter: filter, providing better granularity to control the filtering of some defined output content

- Formatter: a formatter that works with the Handler to implement different log output formats

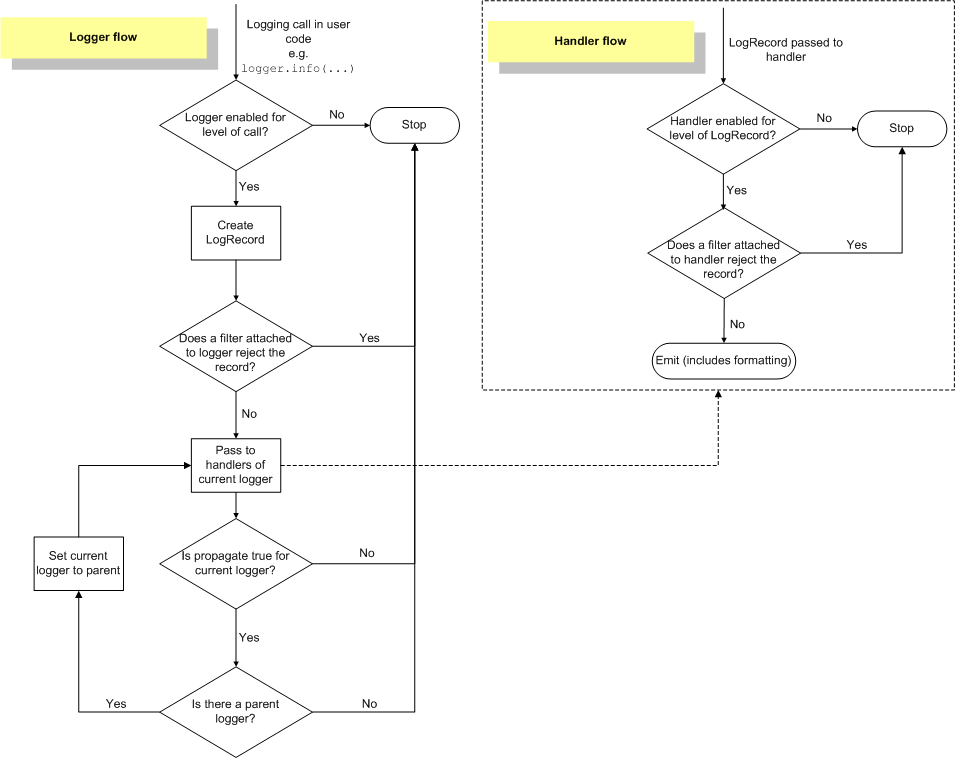

The official example diagram of the logging workflow (don’t worry if you don’t understand it, keep reading and come back to this diagram later to see that it’s not difficult).

For simple use, then we only need to focus on three parts, namely Logger/Handler/Formatter.

1.3. Just need to master Logger/Handler/Formatter

Logger is the basic logging object, e.g. the example code uses logging.getLogger(‘spam_application’) to get a logging object called spam_application

Then, by adjusting some properties of this logging object, we can get a customised logging object, which will be used again in other modules, so that the customisation done before will take effect

Next, analyse each sentence of the example code in turn to see what is being achieved

|

|

Set this logging object to output logs at the Debug level and above. The official documentation for the logging level is extracted below, if the Debug level is set, then all logs with values > DEBUG level will be output.

| Level | value |

|---|---|

| CRITICAL | 50 |

| FATAL | 50 |

| ERROR | 40 |

| WARNING | 30 |

| WARN | 30 |

| INFO | 20 |

| DEBUG | 10 |

| NOTSET | 0 |

|

|

A handler named FH is created, specifying the output format of the DEBUG log output target, and the same for the ch object, where FileHandler indicates that this is a file-based output handler that will output the log to a file, different handlers have different parameters, and the list of common handlers is as follows.

| Handler | Description |

|---|---|

| StreamHandler | Log output to a stream, either sys.stderr, sys.stdout or a custom file |

| FileHandler | Outputting logs to file |

| BaseRotatingHandler | Rolling logs |

| RotatingHandler | A rolling log output that allows you to control the number of logs. |

| TimeRotatingHandler | Support for scrolling log files by time. |

| SocketHandler | Output logs by sockets protocol to the specified remote machine |

| SMTPHandler | Output logs to smtp server (email) |

| SysLogHandler | Output to sys log file |

| HTTPHandler | Output to HTTP server |

|

|

A formatter is created. The parameters in it are customisable and the list of parameters is as follows.

| Parameters | Description |

|---|---|

| %(levelno)s | Log level values |

| %(levelname)s | Name of the log level |

| %(pathname)s | The path to the currently executing program, which is actually sys.argv[0] |

| %(filename)s | Name of currently executing program |

| %(funcName)s | Name of the function |

| %(lineno)d | Code line number |

| %(asctime)s | Time of printing |

| %(thread)d | Thread ID |

| %(threadName)s | Thread Name |

| %(process)d | Process ID |

| %(processName)s | Process Name |

| %(module)s | Module name |

| %(message)s | Log messages |

The remaining four lines set the corresponding formatting object/processor object to the specified object to complete the output, and with this understanding, Logging can be used for basic usage without any problems.

If you are interested in exception catching/custom configuration files you can read on in the next section.

2. Flexible logging

2.1. Exception output

For exception output, I’m sure the following output will come to mind the first time you write it.

The fact that printing e directly shows the stack information is not obvious. It is more recommended to use logging.error('Error as',exc_info=True)/logging.exception('Error') to print the stack information in both ways

2.2. Custom log file configuration

logging supports custom logging configuration files, simply use the following code to read the logging configuration file.

The configuration files support a wide range of formats, including config/ini/yaml/json etc. which can all be used as logging configuration files

Below is a yaml configuration file that automatically scrolls by date.

|

|

The relevant code can be found here.

For more complex usage it is recommended to refer to the official documentation.