Introduction to the concept

QEMU

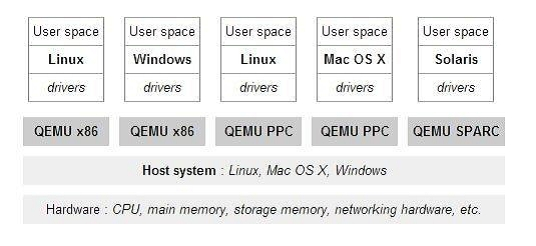

QEMU is an emulator that presents a virtual CPU and other virtual hardware to the guest OS. The guest OS believes it is talking directly to the hardware, but it is actually talking to hardware emulated by QEMU, which translates the guest's instructions into operations on the real hardware.

Because every guest instruction has to be translated by QEMU in software, performance is poor.

KVM

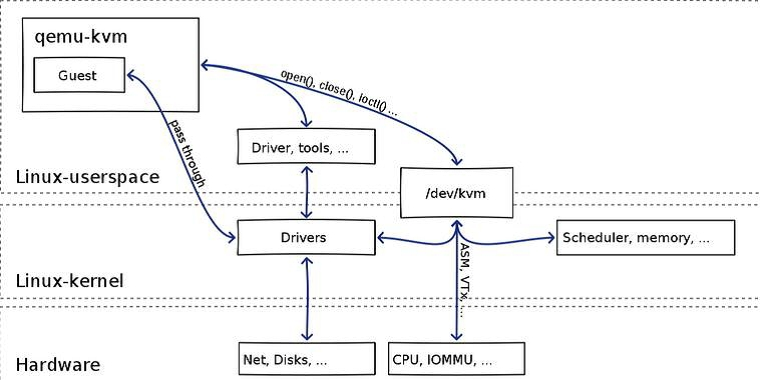

KVM is a Linux kernel module that relies on hardware-assisted virtualization: Intel VT-x or AMD-V for the CPU, and Intel EPT or AMD RVI for memory. With it, the guest OS's CPU instructions run directly on the processor instead of being translated by QEMU, which greatly increases speed. KVM exposes its interface through /dev/kvm, and user-space programs drive it with the ioctl function.

The KVM kernel module by itself only virtualizes the CPU and memory, so it has to be combined with QEMU to form a complete virtualization solution. The combination is called qemu-kvm.
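The ioctl interface can be probed from user space in a few lines. The sketch below uses Python rather than C and only asks KVM for its API version. The request numbers follow `<linux/kvm.h>` and assume an architecture such as x86-64 or arm64, where the kernel's `_IO` macro adds no direction bits. The helper names `kvm_io` and `kvm_api_version` are illustrative, not part of any library.

```python
import fcntl
import os

KVMIO = 0xAE  # ioctl "type" byte used by KVM in <linux/kvm.h>


def kvm_io(nr):
    """Mimic the kernel's _IO(KVMIO, nr) macro (x86-64/arm64 encoding assumed)."""
    return (KVMIO << 8) | nr


KVM_GET_API_VERSION = kvm_io(0x00)  # issued on the /dev/kvm fd
KVM_CREATE_VM = kvm_io(0x01)        # issued on the /dev/kvm fd, returns a VM fd
KVM_CREATE_VCPU = kvm_io(0x41)      # issued on a VM fd, returns a vCPU fd
KVM_RUN = kvm_io(0x80)              # issued on a vCPU fd, enters guest mode


def kvm_api_version(path="/dev/kvm"):
    """Return the KVM API version, or None if KVM is not usable at `path`."""
    try:
        fd = os.open(path, os.O_RDWR | os.O_CLOEXEC)
    except OSError:
        return None  # module not loaded, no permission, or not Linux/KVM
    try:
        return fcntl.ioctl(fd, KVM_GET_API_VERSION, 0)
    except OSError:
        return None
    finally:
        os.close(fd)


print("KVM API version:", kvm_api_version())
```

A hypervisor such as QEMU continues from here with `KVM_CREATE_VM`, `KVM_CREATE_VCPU` and a loop around `KVM_RUN`; those steps need guest memory and register setup and are left out of this sketch.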

qemu-kvm

QEMU integrates KVM: it calls the /dev/kvm interface via ioctl and hands the guest's CPU instructions to the kernel module. KVM handles CPU and memory virtualization but cannot emulate other devices, so QEMU emulates the I/O devices (network cards, disks, etc.). Together they provide full server virtualization, and the combination is called qemu-kvm because it uses both.

Because QEMU still emulates other hardware such as network cards and disks, the performance of those devices suffers. Paravirtualized devices such as virtio_blk and virtio_net were created to improve it.
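As an illustration of how these pieces appear on a QEMU command line, the following sketch builds an argument list that enables KVM and attaches a virtio disk and a virtio network card. The function name `qemu_kvm_argv`, the image name `guest.qcow2` and the default sizes are placeholders chosen for the example.

```python
import shlex


def qemu_kvm_argv(disk_image, memory_mib=2048, vcpus=2):
    """Build a qemu-system-x86_64 command line using KVM and virtio devices."""
    return [
        "qemu-system-x86_64",
        "-enable-kvm",            # run guest CPU code through /dev/kvm
        "-m", str(memory_mib),    # guest RAM in MiB
        "-smp", str(vcpus),       # number of virtual CPUs
        # Paravirtualized block device (virtio_blk driver in the guest).
        "-drive", f"file={disk_image},if=virtio,format=qcow2",
        # Paravirtualized NIC (virtio_net driver in the guest) on a user-mode backend.
        "-netdev", "user,id=net0",
        "-device", "virtio-net-pci,netdev=net0",
    ]


argv = qemu_kvm_argv("guest.qcow2")  # placeholder image name
print(shlex.join(argv))
```

The list can be passed to `subprocess.run(argv)` once a real disk image exists. The number of options needed even for this small guest is part of what motivates a management layer on top.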

From UCSB CS290B

Libvirt

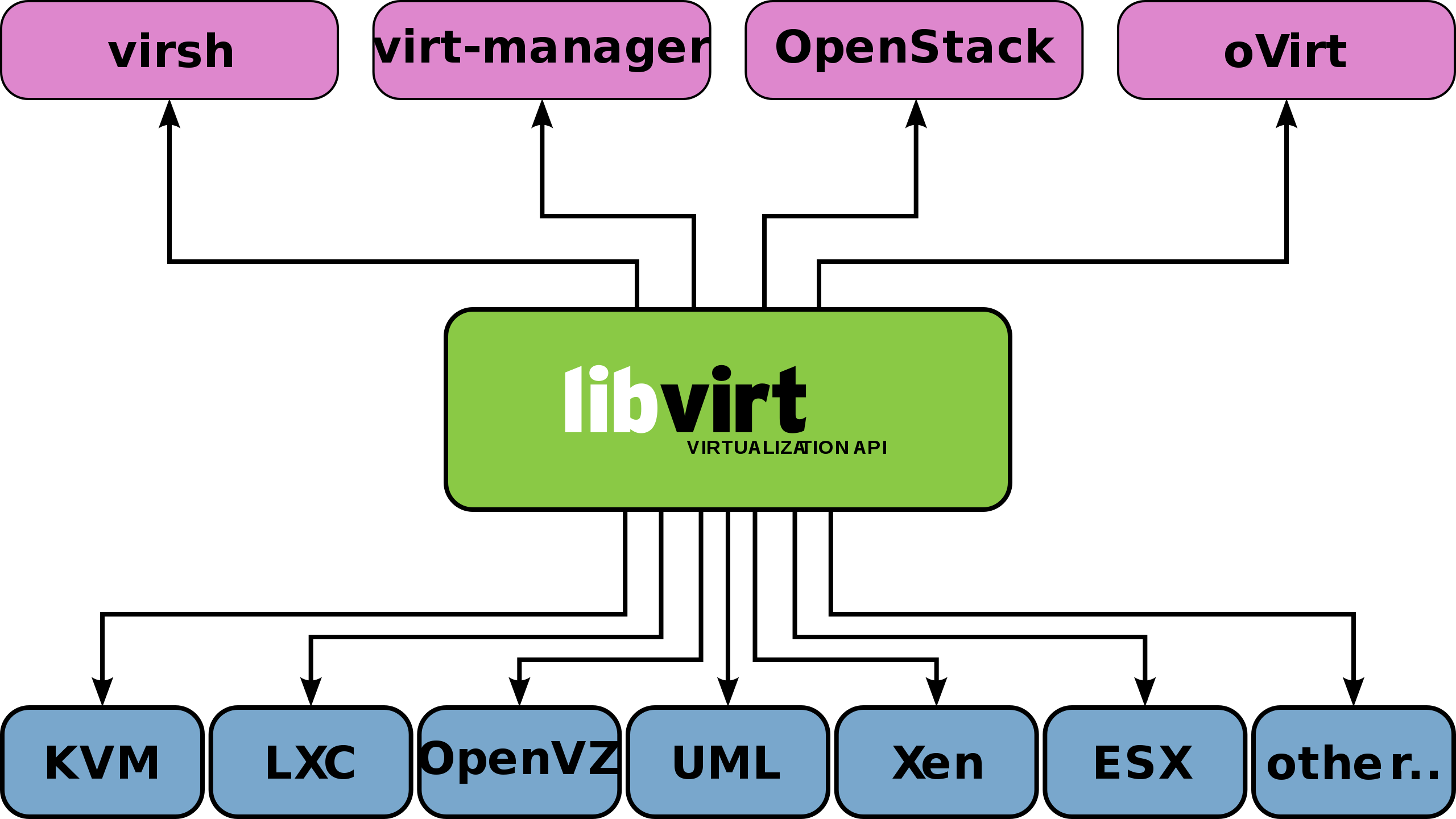

Why do you need Libvirt?

- Hypervisors such as qemu-kvm come with numerous command-line tools for managing virtual machines, and their many parameters make them difficult to use.

- There are many hypervisors and no unified programming interface to manage them, which matters a great deal in cloud environments.

- There is no unified way to easily define the various manageable objects associated with a VM.

What does Libvirt offer?

- It provides a unified, stable, open source application programming interface (API), a daemon (libvirtd) and a default command line management tool (virsh).

- It manages virtualized guests along with their virtual devices, networks and storage.

- Its core API is written in C, and bindings are available for many other popular programming languages, including Python, Perl, Java, Ruby, PHP and OCaml.

- It supports many different hypervisors through a driver-based architecture, with a separate driver for each one: a Xen driver, a QEMU driver for QEMU/KVM, a VMware driver, and so on. Driver source files such as qemu_driver.c, xen_driver.c, xenapi_driver.c, vmware_driver.c and vbox_driver.c are easy to find in the libvirt source code.

- It acts as an intermediate adaptation layer: libvirt hides the details of the underlying hypervisors and gives upper-level management tools a unified, more stable API, so the hypervisor underneath is completely transparent to them.

- It uses XML to define the various VM-related managed objects.
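To make the XML point concrete, here is a minimal sketch that assembles a domain (VM) definition with Python's standard library. The element names follow libvirt's domain XML format. The guest name, sizes and disk path are placeholders, and a real definition usually carries more detail.

```python
import xml.etree.ElementTree as ET


def domain_xml(name, memory_mib, vcpus, disk_path):
    """Return a minimal libvirt domain definition for a KVM guest."""
    dom = ET.Element("domain", type="kvm")
    ET.SubElement(dom, "name").text = name
    ET.SubElement(dom, "memory", unit="MiB").text = str(memory_mib)
    ET.SubElement(dom, "vcpu").text = str(vcpus)

    os_el = ET.SubElement(dom, "os")
    ET.SubElement(os_el, "type", arch="x86_64").text = "hvm"

    devices = ET.SubElement(dom, "devices")
    # Disk backed by a qcow2 file, exposed to the guest over virtio.
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")
    ET.SubElement(disk, "source", file=disk_path)
    ET.SubElement(disk, "target", dev="vda", bus="virtio")
    # NIC attached to the libvirt virtual network named "default".
    nic = ET.SubElement(devices, "interface", type="network")
    ET.SubElement(nic, "source", network="default")
    ET.SubElement(nic, "model", type="virtio")
    # Text console so the guest can be reached without graphics.
    ET.SubElement(devices, "console", type="pty")

    return ET.tostring(dom, encoding="unicode")


# Placeholder values for illustration only.
xml_text = domain_xml("demo-vm", 2048, 2, "/var/lib/libvirt/images/demo-vm.qcow2")
print(xml_text)
```

Networks, storage pools and volumes are described by XML documents of the same kind, each with its own root element.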

Currently, libvirt is the most widely used tool and API for managing virtual machines. Common management tools (e.g. virsh, virt-install, virt-manager) and cloud computing frameworks (e.g. OpenStack, OpenNebula, Eucalyptus) all use libvirt's API under the hood.
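A small sketch of what using that API can look like from Python. It assumes the libvirt-python bindings are installed and a local libvirtd is reachable at `qemu:///system`. The function names are illustrative, while `openReadOnly`, `listAllDomains`, `name` and `isActive` are calls from the bindings.

```python
def list_domains(conn):
    """Return (name, is_running) for every domain known to a libvirt connection."""
    return [(dom.name(), bool(dom.isActive())) for dom in conn.listAllDomains()]


def list_system_domains(uri="qemu:///system"):
    """Open a read-only connection to the QEMU/KVM driver and list its domains."""
    import libvirt  # from the libvirt-python bindings; imported lazily

    conn = libvirt.openReadOnly(uri)
    try:
        return list_domains(conn)
    finally:
        conn.close()
```

Calling `list_system_domains()` gives roughly the information that `virsh list --all` prints.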

From: Libvirt Wiki

Hands-on

Arch Linux installation and configuration

This installs all the required software; the next step is configuration.
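Before configuring anything, it can help to confirm that the host is ready. The following sketch checks for the CPU virtualization flags (`vmx` for Intel VT-x, `svm` for AMD-V), for the /dev/kvm device, and for a few executables on PATH. The tool list is an assumption about what a typical qemu/libvirt installation provides and may not match the packages installed here.

```python
import os
import shutil


def cpu_virt_extensions(cpuinfo_text):
    """Return the hardware virtualization flags found in /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            found.update(f for f in flags if f in ("vmx", "svm"))
    return found


def missing_tools(tools=("qemu-system-x86_64", "virsh", "virt-install")):
    """Return the executables from `tools` that are not on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read /proc/cpuinfo, returning an empty string if it is unavailable."""
    try:
        with open(path) as fh:
            return fh.read()
    except OSError:
        return ""


print("CPU extensions:", sorted(cpu_virt_extensions(read_cpuinfo())) or "none found")
print("/dev/kvm present:", os.path.exists("/dev/kvm"))
print("missing tools:", missing_tools() or "none")
```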

virsh operations

Configuring the network
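One possible way to do this through libvirt's Python bindings, assuming the stock NAT network named `default` is already defined on the host. The function name `ensure_network` is illustrative; `networkLookupByName`, `isActive`, `create` and `setAutostart` are calls from the bindings.

```python
def ensure_network(conn, name="default"):
    """Start a libvirt virtual network, mark it autostart, and return its name."""
    net = conn.networkLookupByName(name)
    if not net.isActive():
        net.create()         # same effect as `virsh net-start <name>`
    net.setAutostart(1)      # same effect as `virsh net-autostart <name>`
    return net.name()
```

Typical usage would be `ensure_network(libvirt.open("qemu:///system"))`, run as a user allowed to manage the system libvirt instance.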

Configuring console connections

Create VM
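As a rough sketch of this step through the libvirt Python bindings: a domain is registered from an XML definition and then booted. The XML below is a deliberately small placeholder (guest name, memory size and disk path are made up), and `define_and_start` is an illustrative helper; `defineXML`, `create` and `name` are calls from the bindings.

```python
DEMO_XML = """
<domain type='kvm'>
  <name>demo-vm</name>
  <memory unit='MiB'>1024</memory>
  <vcpu>1</vcpu>
  <os><type arch='x86_64'>hvm</type></os>
  <devices>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2'/>
      <source file='/var/lib/libvirt/images/demo-vm.qcow2'/>
      <target dev='vda' bus='virtio'/>
    </disk>
    <console type='pty'/>
  </devices>
</domain>
"""


def define_and_start(conn, xml_text=DEMO_XML):
    """Register a persistent domain from XML, boot it, and return its name."""
    dom = conn.defineXML(xml_text)  # like `virsh define demo-vm.xml`
    dom.create()                    # like `virsh start demo-vm`
    return dom.name()
```

With a real connection, for example `libvirt.open("qemu:///system")`, the disk image referenced in the XML has to exist before the guest can boot.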

Enter the VM
