This article focuses on how to enable eBPF on a Calico cluster to accelerate network forwarding. It also introduces eBPF and some of the advantages it brings to Calico.

1. eBPF

1.1 About eBPF

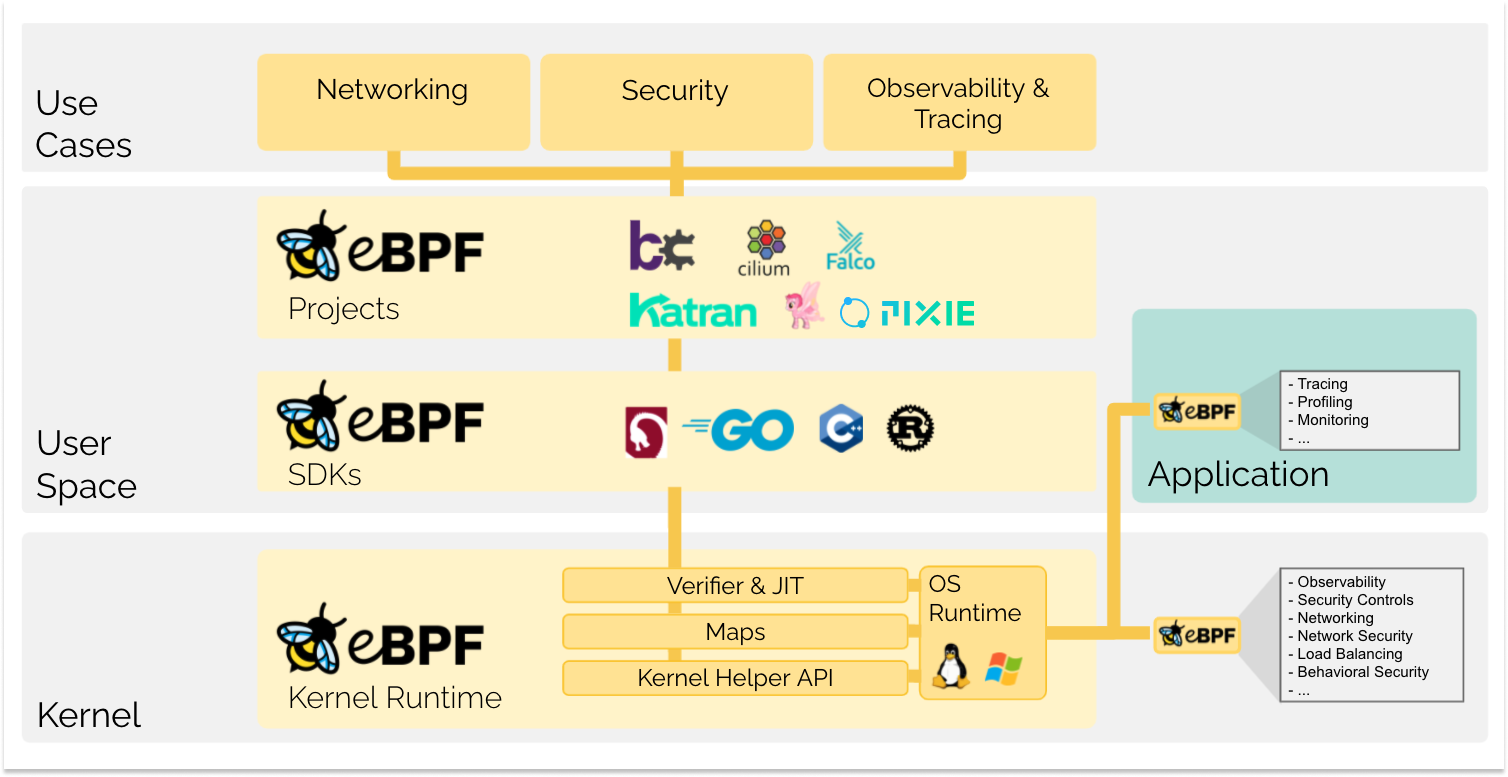

eBPF is a revolutionary technology, originating from the Linux kernel, that allows running sandboxed programs in the operating system kernel. It is used to safely and efficiently extend the functionality of the kernel without changing the kernel source code or loading kernel modules.

It allows small programs to be loaded into the kernel and attached to hooks, which are triggered when certain events occur. This makes extensive customization of the kernel's behavior possible. Although the eBPF virtual machine is the same for every type of hook, the capabilities of the hooks vary considerably. Because loading programs into the kernel can be dangerous, the kernel runs every program through a very strict static verifier, which sandboxes the program to ensure that it can only access the permitted parts of memory and that it terminates quickly.

In short, eBPF is a Linux kernel feature that allows fast yet safe mini-programs to be loaded into the kernel in order to customize its operation.

1.2 Advantages of eBPF

Calico's eBPF data plane offers several advantages:

- Higher throughput IO

- Lower CPU resource usage

- Native support for Kubernetes Services without kube-proxy

- Lower first packet latency

- Preserves external client source IP for external requests

- DSR (Direct Server Return) support

- Syncing forwarding rules to the eBPF data plane consumes fewer resources than kube-proxy

More information can be found in Calico's official introductory article on eBPF.

2. Configuring eBPF in Calico

2.1 Upgrade kernel

Our nodes run CentOS 7. According to the documentation, a kernel newer than 5.8 is preferable, so we use the ELRepo repository to upgrade directly to kernel 6.1.4, the latest version at the time.


After confirming that the system kernel upgrade was successful we move on to the next step.
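
One way to make this check repeatable is a small shell helper that compares the running kernel against the 5.8 threshold mentioned above. This is only a sketch: the function name `kernel_at_least` is hypothetical, it compares just the major and minor numbers, and it assumes that 5.8 itself is acceptable.

```shell
# Return success if kernel release $1 is at least version $2 (major.minor only).
# Hypothetical helper for illustration; not part of Calico or CentOS.
kernel_at_least() {
  have=${1%%-*}               # drop the distro suffix: 6.1.4-1.el7... -> 6.1.4
  want=$2
  have_major=${have%%.*}
  have_rest=${have#*.}
  have_minor=${have_rest%%.*}
  want_major=${want%%.*}
  want_rest=${want#*.}
  want_minor=${want_rest%%.*}
  [ "$have_major" -gt "$want_major" ] ||
    { [ "$have_major" -eq "$want_major" ] && [ "$have_minor" -ge "$want_minor" ]; }
}

# Check the kernel of the current node.
if kernel_at_least "$(uname -r)" 5.8; then
  echo "kernel $(uname -r) is new enough"
else
  echo "kernel $(uname -r) is older than 5.8"
fi
```

Running this on every node after the reboot confirms that each one actually booted into the new kernel rather than the old default entry.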


2.2 Configuring the API Server

By default, Calico reaches the API server through the `kubernetes` Service, which is implemented by kube-proxy. Enabling eBPF usually means turning kube-proxy off, so to make sure Calico can still communicate with the API server we first need to configure a stable, reachable address manually. Generally speaking, the VIP and port that were configured when the cluster was initialized are sufficient.
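
On an operator-based install, Calico's documentation describes supplying this address through a ConfigMap named `kubernetes-services-endpoint`. The sketch below writes such a manifest to a file. The host and port are placeholders (192.0.2.10 is a documentation-only address, not the VIP used in this article), and the `tigera-operator` namespace is an assumption that depends on how Calico was installed.

```shell
# Placeholder values: replace with the VIP and port of your own API server.
APISERVER_HOST="${APISERVER_HOST:-192.0.2.10}"
APISERVER_PORT="${APISERVER_PORT:-6443}"

# Write the ConfigMap manifest that tells Calico where the API server is.
cat > kubernetes-services-endpoint.yaml <<EOF
kind: ConfigMap
apiVersion: v1
metadata:
  name: kubernetes-services-endpoint
  namespace: tigera-operator
data:
  KUBERNETES_SERVICE_HOST: "${APISERVER_HOST}"
  KUBERNETES_SERVICE_PORT: "${APISERVER_PORT}"
EOF

# Review the file, then apply it:
#   kubectl apply -f kubernetes-services-endpoint.yaml
```

Writing the manifest to a file first makes it easy to review the address before it reaches the cluster, since a wrong value here can cut Calico off from the API server.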


After updating the configuration, check that the Calico pods restart successfully and that the cluster is healthy.

2.3 Configuring kube-proxy

Because eBPF mode conflicts with kube-proxy, it is best to disable kube-proxy. There are two ways to do this: delete the kube-proxy DaemonSet outright, or use a nodeSelector to keep kube-proxy from running on the nodes. According to the official documentation, each approach has its advantages. If you are enabling eBPF while initializing a new Kubernetes cluster, you can consider disabling kube-proxy in the initialization parameters. Here kube-proxy is already installed, so controlling it with a nodeSelector is the more elegant option.
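
The nodeSelector approach can be sketched as follows. It uses the `non-calico` label that Calico's documentation suggests; because no node carries that label, the DaemonSet ends up scheduling zero pods. The `KUBECTL` variable and the `disable_kube_proxy` function are hypothetical conveniences: by default the script only prints the commands, and setting `KUBECTL=kubectl` runs them for real.

```shell
# Dry run by default: prints the kubectl commands instead of executing them.
# Set KUBECTL=kubectl to run them against the cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Select a label that no node has, so kube-proxy is scheduled nowhere.
PATCH='{"spec":{"template":{"spec":{"nodeSelector":{"non-calico":"true"}}}}}'

disable_kube_proxy() {
  $KUBECTL patch ds -n kube-system kube-proxy -p "$PATCH"
}

disable_kube_proxy

# Inspect the DaemonSet afterwards.
$KUBECTL get ds -n kube-system kube-proxy
```

This change is easy to undo later, because the DaemonSet itself stays in place and only its scheduling constraint changes.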


At this point, checking the kube-proxy DaemonSet shows that DESIRED and CURRENT are both 0.

2.4 Configuring eBPF

To enable eBPF, you only need to set the linuxDataPlane parameter to BPF. Note that eBPF mode does not support the hostPorts setting, so that field must be cleared at the same time.
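
Assuming Calico was installed with the Tigera operator and the Installation resource is named `default`, the change can be sketched as a single merge patch. The `KUBECTL` variable and the `enable_ebpf` function are hypothetical; the script prints the commands unless `KUBECTL=kubectl` is set.

```shell
# Dry run by default: prints the kubectl commands instead of executing them.
# Set KUBECTL=kubectl to run them against the cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Switch the data plane to BPF and clear hostPorts in the same patch.
PATCH='{"spec":{"calicoNetwork":{"linuxDataPlane":"BPF","hostPorts":null}}}'

enable_ebpf() {
  $KUBECTL patch installation.operator.tigera.io default --type merge -p "$PATCH"
}

enable_ebpf

# Follow the rolling restart of calico-node (namespace assumed: calico-system).
$KUBECTL rollout status -n calico-system ds/calico-node
```

Sending both fields in one patch avoids an intermediate state in which BPF is requested while hostPorts is still set.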

Wait for the Calico rolling restart to complete; once it does, eBPF is enabled. Note that during the rolling restart some nodes will already be using eBPF while others are still using iptables.

2.5 Configuring DSR

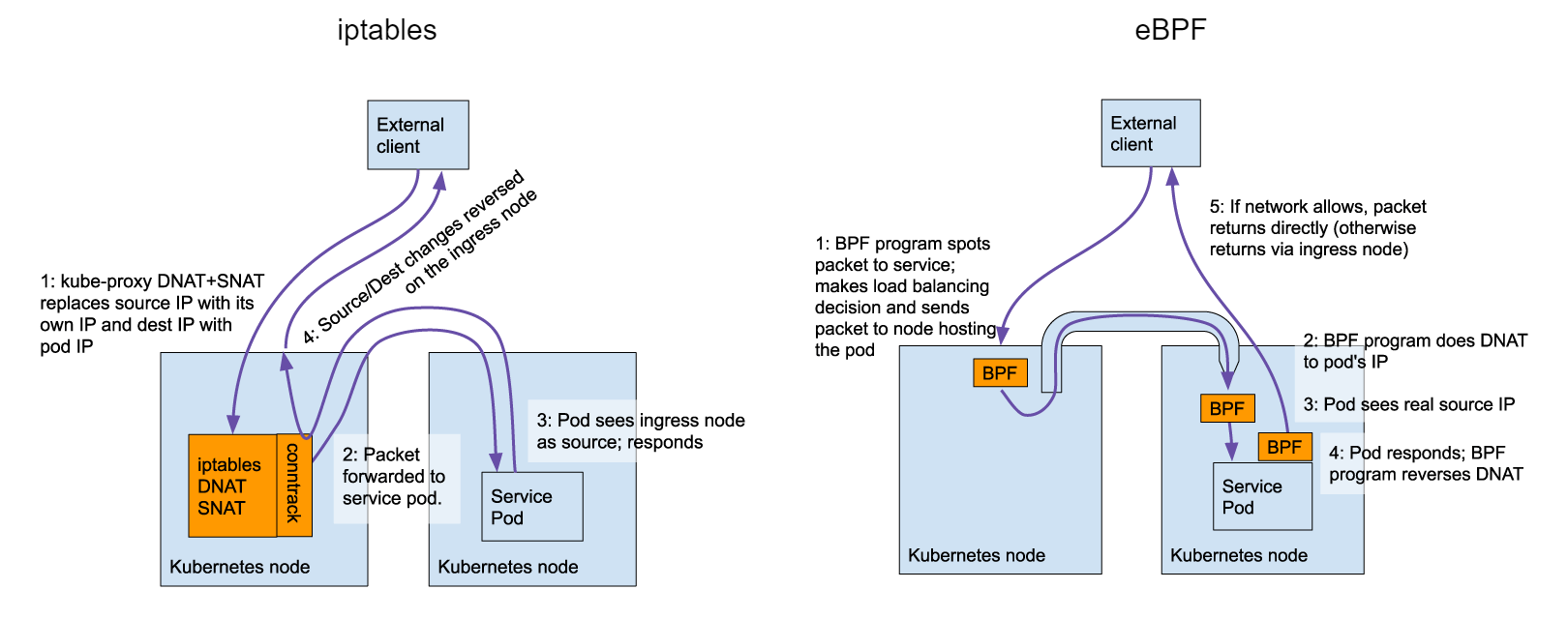

eBPF mode also has a nice feature called DSR (Direct Server Return). With DSR, after an external request is received, the node hosting the backend pod returns the response directly to the client instead of sending it back along the original path. This shortens the return path and thus improves performance.

DSR mode works very similarly to LVS DR mode. However, DSR generally requires that the underlying network allow direct connectivity between the pod's node and the client, so the feature is not enabled by default.

We can use calicoctl to modify the bpfExternalServiceMode parameter in the FelixConfiguration, changing it from the default Tunnel to DSR to enable the feature.

To turn DSR mode off, change the value back to Tunnel.
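
Both directions can be sketched with one small function, assuming the FelixConfiguration resource is named `default`. The `CALICOCTL` variable and the `set_external_service_mode` function are hypothetical; the script prints the command unless `CALICOCTL=calicoctl` is set.

```shell
# Dry run by default: prints the calicoctl command instead of executing it.
# Set CALICOCTL=calicoctl to run it against the cluster.
CALICOCTL="${CALICOCTL:-echo calicoctl}"

# $1 is the desired mode: DSR or Tunnel.
set_external_service_mode() {
  $CALICOCTL patch felixconfiguration default \
    --patch="{\"spec\": {\"bpfExternalServiceMode\": \"$1\"}}"
}

# Enable DSR.
set_external_service_mode DSR

# To revert to the default later:
#   set_external_service_mode Tunnel
```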

3. Checking eBPF

3.1 calico-bpf

The calico-bpf tool is built into calico-node to help troubleshoot and locate problems in eBPF mode. We can also use it to check whether eBPF is working properly in the cluster.

When running calico-bpf through kubectl exec, the ds/calico-node target can be replaced with the name of the calico-node pod on a particular node.
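
A minimal sketch of invoking the tool is shown below. It assumes an operator-based install where calico-node runs in the `calico-system` namespace; the `KUBECTL`, `NAMESPACE` and `TARGET` variables and the `calico_bpf` wrapper are hypothetical. By default the script only prints the commands; set `KUBECTL=kubectl` to run them.

```shell
# Dry run by default: prints the kubectl commands instead of executing them.
# Set KUBECTL=kubectl to run them against the cluster.
KUBECTL="${KUBECTL:-echo kubectl}"
NAMESPACE="${NAMESPACE:-calico-system}"   # assumption: operator-based install
TARGET="${TARGET:-ds/calico-node}"        # or the calico-node pod on one node

# Run a calico-bpf subcommand inside calico-node.
calico_bpf() {
  $KUBECTL exec -n "$NAMESPACE" "$TARGET" -- calico-node -bpf "$@"
}

calico_bpf help             # list the available subcommands
calico_bpf conntrack dump   # dump the eBPF connection-tracking table
calico_bpf nat dump         # dump the eBPF NAT (service) table
```

Setting `TARGET` to a specific pod name is useful when only one node misbehaves, since `ds/calico-node` lets kubectl pick any pod of the DaemonSet.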


3.2 Accessing the service

We test from a machine outside the cluster, accessing the podIP, clusterIP and loadbalancerIP in turn to see whether the client IP address 10.31.100.100 is returned correctly.

With eBPF configured, requests from outside the cluster to both the clusterIP and the loadbalancerIP return the client's IP address correctly.

4. Disable eBPF

Disabling eBPF is also simple: just reverse the process above. Here we disable Calico's eBPF data plane directly (DSR only takes effect under eBPF) and then re-enable kube-proxy in the cluster.
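
The two steps can be sketched as follows, assuming the operator-based setup and the `non-calico` nodeSelector used to disable kube-proxy earlier. The `KUBECTL` variable and the `disable_ebpf` function are hypothetical; the script prints the commands unless `KUBECTL=kubectl` is set.

```shell
# Dry run by default: prints the kubectl commands instead of executing them.
# Set KUBECTL=kubectl to run them against the cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

disable_ebpf() {
  # 1. Switch Calico back to the iptables data plane.
  $KUBECTL patch installation.operator.tigera.io default --type merge \
    -p '{"spec":{"calicoNetwork":{"linuxDataPlane":"Iptables"}}}'

  # 2. Remove the nodeSelector so kube-proxy is scheduled on the nodes again.
  $KUBECTL patch ds -n kube-system kube-proxy --type merge \
    -p '{"spec":{"template":{"spec":{"nodeSelector":{"non-calico":null}}}}}'
}

disable_ebpf
```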


Once the configuration is complete, wait for Calico to finish restarting on all nodes and for kube-proxy to start.