I. Product Description

After deploying Kubernetes, we often need to expose services so that external users can access them. On a cloud platform (e.g. AWS), this requirement is simple to handle: it can be met through the cloud platform's LoadBalancer.

With a self-built Kubernetes bare-metal cluster, it is much more problematic. Bare-metal clusters do not support load balancing by default, and the available ways to achieve external access are limited to Ingress, NodePort, ExternalIPs, and the like. Unfortunately, none of these solutions is perfect. Each has drawbacks of one kind or another, which makes bare-metal clusters second-class citizens in the Kubernetes ecosystem.

MetalLB aims to address this pain point by providing a LoadBalancer implementation that integrates with standard network equipment, so that external services on bare-metal clusters "just work" as much as possible, reducing operations and maintenance costs.

II. Deployment Requirements

MetalLB requires the following environment to operate.

- A cluster running Kubernetes 1.13.0 or later, which does not yet have network load balancing capabilities.

- Some IPv4 addresses for MetalLB allocation.

- If using BGP mode, one or more BGP-enabled routers are required.

- If using Layer 2 mode, traffic on port 7946 must be allowed between cluster nodes, because the speaker agents use it to communicate with each other.

- The cluster's network type needs to support MetalLB, as detailed in the following table.

| Network Type | Compatibility |
|---|---|
| Antrea | Yes |
| Calico | Mostly |
| Canal | Yes |
| Cilium | Yes |
| Flannel | Yes |
| Kube-ovn | Yes |
| Kube-router | Mostly |
| Weave Net | Mostly |

III. Working Principle

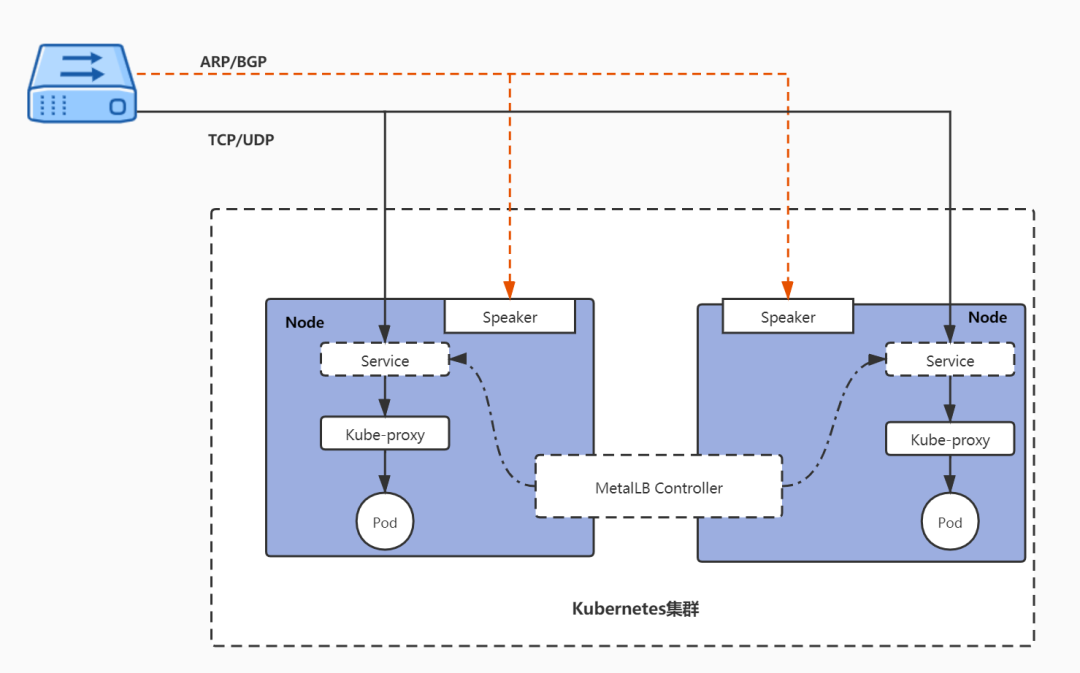

MetalLB contains two components, the Controller and the Speaker. The Controller is deployed as a Deployment, while the Speaker is deployed to every node in the cluster as a DaemonSet.

The figure in this section shows how the pieces fit together. The Controller watches for Service changes. When a Service is configured with type LoadBalancer, the Controller assigns it an IP address from the IP pool and manages the life cycle of that IP. The Speaker then announces the IP or responds to requests for it, according to the selected protocol, so that the address is reachable. When traffic for the Service reaches the chosen node over TCP or UDP, the kube-proxy component running on that node handles it and distributes it to the corresponding Service Pod.

MetalLB supports two modes: Layer 2 mode and BGP mode.

Layer 2 Mode

In Layer 2 mode, MetalLB elects one node as the Leader, and all traffic for the service IP flows to that node. On that node, kube-proxy forwards the received traffic to the Pods of the corresponding service. When the Leader node fails, another node takes over. From this perspective, Layer 2 mode is closer to high availability than to load balancing, because only one node receives traffic at any given time.

Layer 2 mode has two limitations: a single-node bottleneck and slow failover.

Because Layer 2 mode uses a single elected Leader to receive all traffic for the service IP, the ingress bandwidth of the service is limited to the bandwidth of a single node. The traffic handling capacity of that node becomes the bottleneck for external traffic entering the cluster for that service.

In terms of failover, a new Leader is elected and MetalLB sends Layer 2 packets to announce that the service IP has moved, which usually completes within a few seconds. During an unplanned failover, however, the service IP stays unreachable for any client that does not handle these packets correctly, until that client refreshes its cache entries.

BGP Mode

BGP mode provides true load balancing, and it requires a router that supports the BGP protocol. Each node in the cluster establishes a BGP peering session with the network router and uses that session to advertise the load-balanced IP. The routes published by MetalLB are equivalent to each other, which means that the router uses all the target nodes and balances load between them. Once packets arrive at a node, kube-proxy takes care of the last hop, routing the traffic to a Pod of the corresponding service.

The way load balancing is done depends on your particular router model and configuration. Commonly, each connection is balanced based on packet hashing, which means that all packets for a single TCP or UDP session are directed to a single computer in the cluster.

BGP mode also has its own limitations. The router assigns a given packet to a specific next hop by hashing certain fields in the packet header and using that hash as an index into the array of back ends. However, the hashes used in routers are usually not stable, so whenever the number of back-end nodes changes, existing connections are re-hashed effectively at random. Most existing connections are then forwarded to a different back end that knows nothing about the original connection state. To reduce this problem, it is recommended to use a more stable ECMP hashing algorithm if the router offers one (sometimes called resilient ECMP).

IV. Deployment and Installation

MetalLB supports deployment via Kubernetes manifests, Helm, and Kustomize. In this article, we use Kubernetes manifests as an example to walk through deployment and installation with v0.13.4, the latest version at the time of writing.

Note: Since v0.13.0, MetalLB is no longer configured through a ConfigMap and uses custom resources instead, so the configuration in this example differs from that of older versions.

- Enable strict ARP mode for kube-proxy

If the cluster uses kube-proxy in IPVS mode, strict ARP mode must be enabled starting with Kubernetes v1.14.2.
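As a minimal sketch, the relevant part of the kube-proxy configuration might look like the fragment below. It assumes kube-proxy is configured through the `kube-proxy` ConfigMap in the `kube-system` namespace (the usual layout for kubeadm-based clusters; other distributions may differ), and only the fields shown need to change.

```yaml
# Fragment of the KubeProxyConfiguration stored in the kube-proxy ConfigMap
# (assumed location: namespace kube-system, ConfigMap kube-proxy).
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true   # must be true for MetalLB when kube-proxy runs in IPVS mode
```

After changing the ConfigMap, the kube-proxy Pods typically need to be restarted for the setting to take effect.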

- Install the MetalLB components

Run the following command to install the relevant components. By default, MetalLB is deployed to the metallb-system namespace.

```shell
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.4/config/manifests/metallb-native.yaml
```

- Configure the mode

Layer 2 mode configuration

Create an IPAddressPool and specify the IP pool to be used for allocation.
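A minimal sketch of such a pool is shown below. The pool name `first-pool` and the address range are placeholders chosen for illustration, not values from this article; substitute a free range from your own network.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool              # hypothetical pool name
  namespace: metallb-system
spec:
  addresses:
  - 192.168.1.240-192.168.1.250 # placeholder range; a CIDR such as 192.168.1.240/28 also works
```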

Create an L2Advertisement. If no IP pool is specified here, the addresses of all IP pools are advertised by default.
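As a sketch, the resource could look like the following. The resource name `example` and the pool name `first-pool` are hypothetical; the pool name must match an IPAddressPool that exists in your cluster.

```yaml
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example          # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:        # omit this list (and spec) to advertise every pool
  - first-pool           # hypothetical pool name; replace with your own
```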

BGP mode configuration

For a basic configuration with one BGP router and one range of IP addresses, you need four pieces of information.

- the IP address of the router to which MetalLB should be connected.

- the AS number of the router.

- the AS number that MetalLB should use.

- an IP address range, expressed as a CIDR prefix.

Example: suppose MetalLB is given AS number 64500 and the address range 192.168.10.0/24, and connects to a router with AS number 64501 at address 10.0.0.1. The configuration is then as follows.

Create the BGPPeer.
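A sketch of the peer definition using the AS numbers and router address from the example above; the resource name `sample` is a placeholder.

```yaml
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: sample            # hypothetical name
  namespace: metallb-system
spec:
  myASN: 64500            # AS number MetalLB uses
  peerASN: 64501          # AS number of the router
  peerAddress: 10.0.0.1   # IP address of the router
```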

Configure the IP address pool.
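A sketch of the pool for the 192.168.10.0/24 range from the example; the pool name `first-pool` is a placeholder.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool        # hypothetical pool name
  namespace: metallb-system
spec:
  addresses:
  - 192.168.10.0/24       # range from the example above
```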

Create the BGP advertisement.
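A minimal sketch of the advertisement; the resource name `example` is a placeholder. With no pool selector in the spec, it should apply to all configured IP address pools.

```yaml
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: example           # hypothetical name
  namespace: metallb-system
```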

V. Functional Verification

In this example we use the Layer2 configuration above to test.

-

Create the sample yaml file and execute it, including svc and deployment.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36apiVersion: v1 kind: Service metadata: name: myapp-svc spec: selector: app: myapp ports: - protocol: TCP port: 80 targetPort: 80 type: LoadBalancer # Select the LoadBalancer type --- apiVersion: apps/v1 kind: Deployment metadata: name: myapp-deployment labels: app: myapp spec: replicas: 2 selector: matchLabels: app: myapp template: metadata: labels: app: myapp spec: containers: - name: nginx image: nginx:1.19.4 ports: - containerPort: 80 -

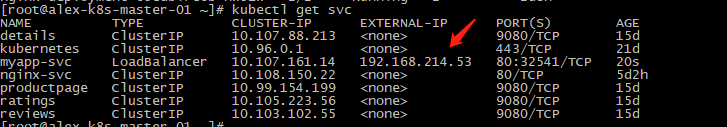

Check the status of the created SVC, which has acquired the IP

-

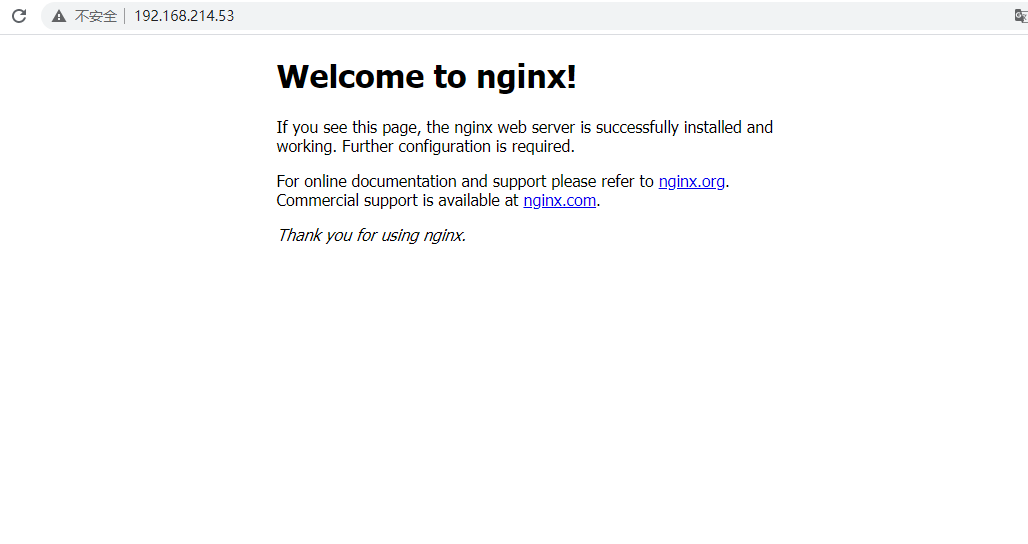

Accessing the application through an external browser

VI. Project Maturity

The MetalLB project is currently in beta, but has been used by multiple people and companies in multiple production and non-production clusters. Based on the frequency of bug reports, no major bugs have been identified at this time.