1. Background

Our team was short of testers, so we had no choice but to do the testing ourselves. Automating the tests for the systems we develop saves manpower, improves efficiency and increases our confidence in the quality of the system.

Our goal is to have automated tests covering three environments, as follows:

- Automated testing in the CI/CD pipeline.

- Post-release automated smoke/acceptance testing in the various staging environments.

- Post-release automated smoke/acceptance testing in production environments.

Whether we build one unified test case library or a separate library for each environment does not really matter. What matters is the language we use to write the test cases and the tool we use to drive them.

Here’s a look at how the solution came about.

2. Solution

Initially, colleagues in the group described the test cases in YAML files and wrote a separate tool in Go to read and execute them. The tool worked, but the approach had several problems:

- Writing complexity

Even the simplest test case, a successful connection, needed almost 80 lines of YAML, and a slightly more complex test scenario needed around 150 lines of configuration.

- Difficult to extend

The original YAML structure was not designed with extensibility in mind, so extending a test case meant creating a new test case file.

- Inadequate expressiveness

Our system is a messaging gateway, and some test cases depend on a particular timing sequence, which test cases written in YAML cannot express clearly.

- Poor maintainability

To understand what a test case does, you have to read the tool that drives its execution to see the execution logic, and it is hard to get up to speed with this tool quickly.

To address these problems, we wanted to redesign the tool so that test developers could write test cases in an external DSL supported by the tool, which would then read and execute them.

Note: According to the classification of DSLs in Martin Fowler’s book Domain-Specific Languages, there are three options for a DSL: a general-purpose configuration format (XML, JSON, YAML, TOML) or a custom language, the two of which together are called external DSLs (examples include regular expressions, awk, SQL and XML); or a fragment/subset of a general-purpose programming language used as the DSL, which is called an internal DSL (Ruby is a popular host language for these).

After a rough evaluation of what the DSL grammar would need, based on the number of scenarios to be tested and the complexity of the test cases (I even generated a few versions of the grammar with the help of ChatGPT), I found that this “small language” would have to be “small but complete”, i.e. it would need most of the features of a general-purpose language. A test case written in such a DSL would probably be comparable in size to one written in a general-purpose language such as Python.

If that is the case, there is little point in designing our own external DSL; we might as well write everything in Python. On second thought, though, if we are going to use a subset of a general-purpose language anyway, and the team members are not familiar with Python, why not go back to Go?

Let’s make a bold move: use Go’s testing framework as an “internal DSL” for writing test cases, and use the go test command as the test driver that executes them. In addition, with GPT-4 at hand, generating TestXxx functions and additional test cases should not be a big problem.

Let’s look at how to organise and write the test cases, and how to use go test to drive the automated tests.

3. Implementation

3.1. Test case organization

As an example, we will write automated tests for an open source MQTT broker.

Note: You can run a standalone instance of an open source MQTT broker locally as the system under test, for example Eclipse Mosquitto.

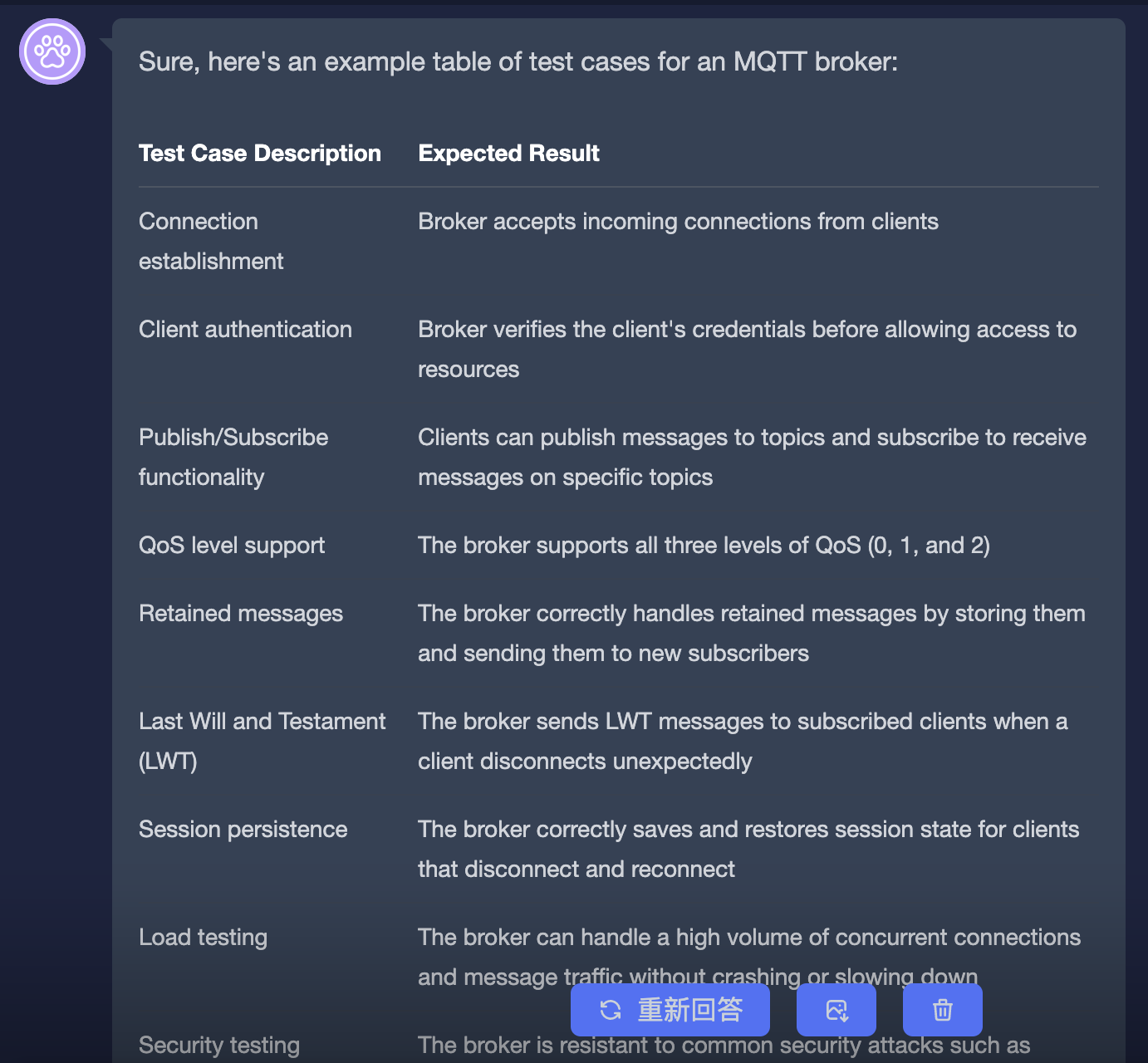

Before organising the test cases, I asked ChatGPT which aspects of an MQTT broker the test cases should cover, and ChatGPT gave me a simple table.

If you are familiar with the MQTT protocol, you will find ChatGPT’s answer quite good.

Here we organise the test cases into three scenarios: connection, subscribe and publish.

A brief description of this test case layout:

- We divide the test cases into multiple scenarios, here connection, subscribe and publish.

- Since the tests are driven by go test, each directory holding test source files follows Go’s conventions for tests, e.g. the source file names end with _test.go.

- Each scenario directory holds the test case files for that scenario, and a scenario can have multiple _test.go files. Each TestXxx function in a _test.go file is treated as a test suite, and within it each test case is written as a subtest; a subtest is the smallest unit of test case.

- The scenario_test.go file in each scenario directory holds the TestMain entry point for the package in that directory. Its main purpose is to accept the same command-line flags and parameter values in every package, and to perform the setup and teardown for that scenario in TestMain.

Let’s move on to implementing the concrete test cases.

3.2. Test case implementation

Let’s take the slightly more complex subscribe scenario as an example and look at the test suites and test cases in subscribe_test.go in the subscribe directory.

The test cases in this file are not that different from the unit tests we write every day! There are, however, a few things to note.

- Test function naming

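Here the two test suites are named Test_Subscribe_S0001_SubscribeOK and Test_Subscribe_S0002_SubscribeFail. The naming format is Test, followed by the scenario name, the suite number and a short description of the suite, all separated by underscores.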

This naming scheme helps organise the test cases and makes it easy to tell the different cases apart in the generated test report.

- Test cases are written as subtests

Each TestXxx function is a test suite, and each table-driven subtest within it corresponds to one test case.

- Test suites and test cases can each be marked as parallelizable

Calling the Parallel method of testing.T (t.Parallel()) in a TestXxx function or in a subtest marks that test suite or test case as one that may run in parallel with other parallel tests; a test that does not call it runs sequentially.

- Setup and teardown are called for every test case

This keeps the test cases independent of one another, so that they cannot affect each other.

3.3. Test execution and report generation

After designing the layout and writing the test cases, the next step is to execute them. So how do we run them?

As mentioned earlier, our solution uses go test as the test driver, so we execute the tests with go test as well.

In the top-level directory automated-testing, run the following command:

$go test ./... -addr localhost:30083

go test walks through the test packages under automated-testing and passes the -addr flag to the test binary of each package. If no MQTT broker is listening on localhost:30083, the command fails with error output.

This, too, counts as a test failure.

When automating tests, we usually save the failure and success information to a test report file (usually HTML). So how do we generate a report file from the test results above?

First, go test can present its output in a structured form when given the -json flag, so we could simply read the fields from the JSON output and write them into an HTML file. Better still, there is a ready-made open source tool that does this: go-test-report. Here is the command pipeline that makes go test and go-test-report work together to generate a test report:

Note: The go-test-report tool can be installed with:

go install github.com/vakenbolt/go-test-report@latest

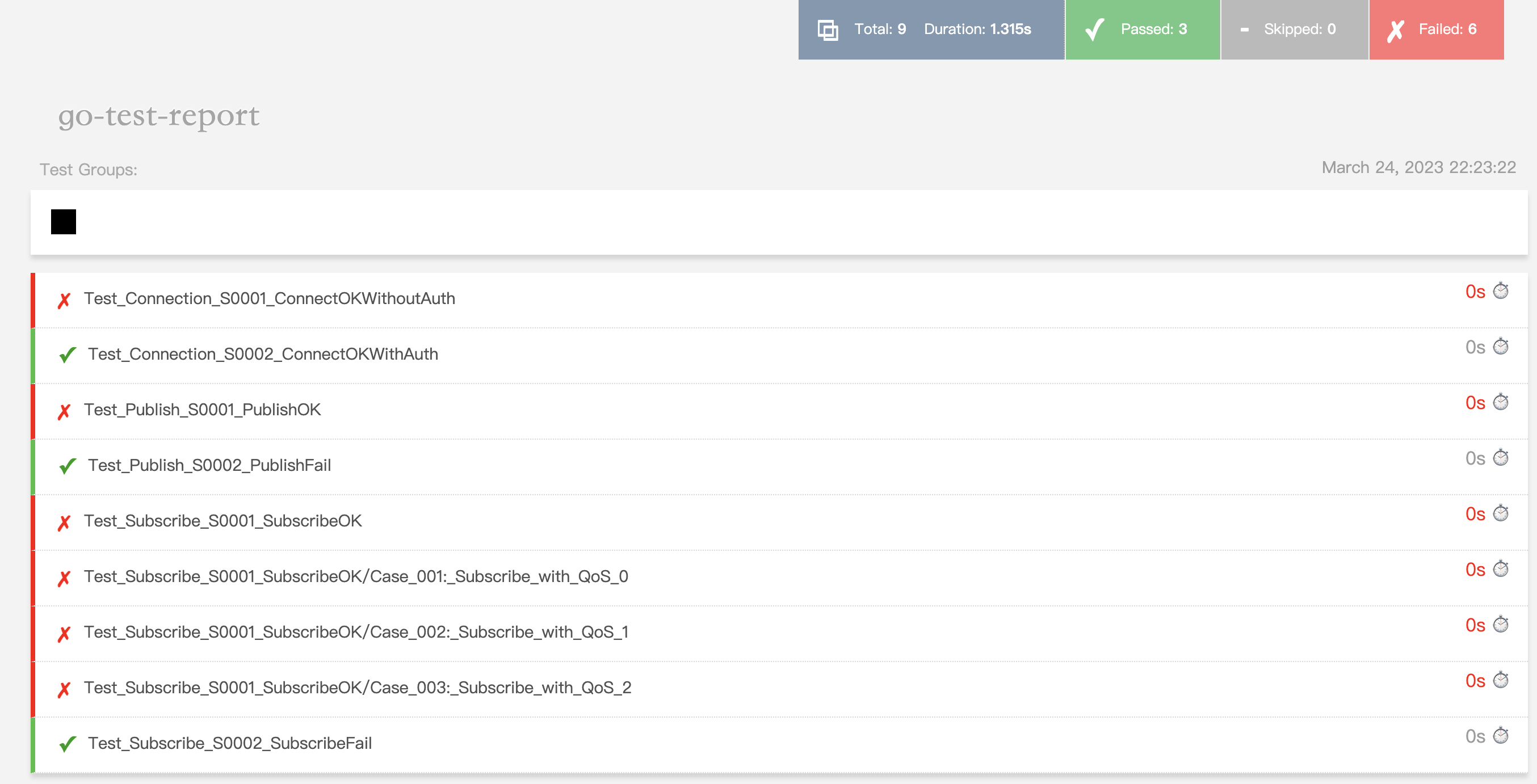

Once the pipeline has run, a test_report.html file is generated in the current directory; open it in a browser to see the results of the test run:

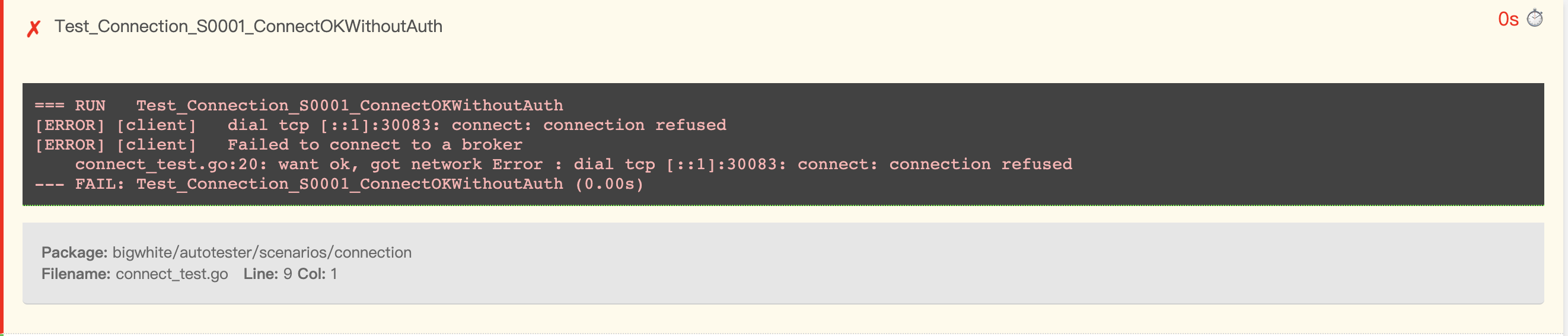

The test report shows clearly which cases passed and which failed. Thanks to the names of the test suites and test cases, we can quickly locate which case of which suite in which scenario is reporting an error! We can also click on the name of a test suite, e.g. Test_Connection_S0001_ConnectOKWithoutAuth, to expand the error details and see the source file and line number where the error occurred.

To avoid typing the commands above every time, we can put them in a Makefile; running make in the top-level directory then executes the tests.

To test against a different MQTT broker, pass its address: $make broker_addr=192.168.10.10:10083

4. Summary

In this article, we have described how to implement automated testing driven by go test, covering the structural layout of the tests, how to write the test cases, and how to execute them and generate reports.

The drawback of this solution is that Go and go-test-report must be installed in the environment where the tests are run.

go test can compile the tests into a single executable (go test -c), but it does not support compiling the tests of multiple packages into one executable.

In addition, the compiled test executable itself cannot emit its output as JSON, so its output cannot be piped directly into go-test-report to save the results to a file for later viewing.

The source code covered in this article can be downloaded here.

5. References

https://tonybai.com/2023/03/30/automated-testing-driven-by-go-test/