The logging module is a standard module built into Python, mainly used to output runtime logs. You can set the output log level, the log save path, log file rotation, and so on. Compared with print, it has the following advantages:

- You can set different logging levels so that a release version outputs only important information, without displaying a lot of debugging information.

- print sends everything to standard output, which seriously interferes with the developer's ability to view other data on standard output; logging lets the developer decide where and how information is output.

The main advantages of the logging module are:

- Support for multiple threads

- Classification of logging by different levels

- Flexibility and configurability

- Separation of how logging is done from what is logged

Components of the logging module

The logging library has a modular design with four main components:

| Component name | Corresponding class | Function |
|---|---|---|
| Logger | Logger | Exposes the interface that application code uses directly, and decides which log records are processed based on the logger's level and filters |
| Handler | Handler | Sends the log records created by loggers to the appropriate destination |
| Filter | Filter | Provides finer-grained control over which log records are output and which are discarded |
| Formatter | Formatter | Determines the final layout of log records in the output |

Log event information is passed between Logger, Handler, Filter, Formatter in the form of LogRecord instances.

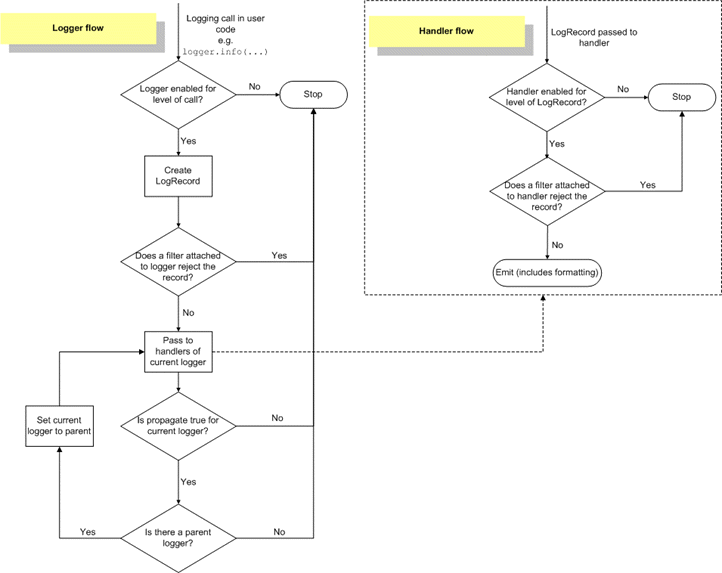

The official logging module workflow diagram is as follows.

The complete logging workflow:

- Check whether the Logger is enabled for the level of the call, i.e. whether the record's level is at least the logger's effective level. If so, continue; otherwise, the process ends.

- Create a LogRecord. If any Filter registered on the Logger rejects the record (returns a false value), nothing is logged and the process ends; otherwise, continue.

- Pass the record to each Handler of the current Logger. If the record's level is lower than a Handler's level, that Handler ignores it.

- Otherwise, if any Filter registered on the Handler rejects the record, that Handler ignores it; if not, the Handler formats and emits the record.

- If the current Logger's propagate attribute is False, or it has no parent (i.e. it is the root logger), the process ends. Otherwise, move to the parent Logger and repeat the two Handler steps above with the parent's Handlers, until the root Logger has been processed. The parent loggers' own levels and filters are not checked at this stage.
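The propagation step is easy to misread, so here is a minimal sketch of it. The logger names "app" and "app.db" are hypothetical. The parent's own level is ERROR, yet the child's DEBUG record still reaches the parent's handler, because propagation only consults the parents' handlers, not the parent loggers' levels.

```python
import io
import logging

# Collect output in memory so it can be inspected.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setLevel(logging.DEBUG)
handler.setFormatter(logging.Formatter("%(name)s:%(levelname)s:%(message)s"))

parent = logging.getLogger("app")
parent.setLevel(logging.ERROR)   # not consulted for records propagated from children
parent.addHandler(handler)

child = logging.getLogger("app.db")
child.setLevel(logging.DEBUG)

child.debug("connected")   # passes the child's level check, then propagates to "app"
parent.debug("ignored")    # logged on "app" directly, so the parent's level rejects it

print(stream.getvalue(), end="")   # app.db:DEBUG:connected
```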

How the components relate:

- A logger outputs log records to their destination through handlers.

- Different handlers can output logs to different destinations.

- A logger can have multiple handlers, so the same log record can be sent to several destinations.

- Each handler can have its own filters, so that only the records of interest are kept.

- Each handler can have its own formatter, so the same record can be written in different formats to different destinations.

For a log message to actually be output, it must pass these checks in order:

- The logger's level

- The logger's filters

- The handler's level

- The handler's filters
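A minimal sketch that exercises each of the four checks in turn, assuming a hypothetical logger "demo.order", a callable logger filter that drops messages starting with "secret", and a hypothetical handler filter DropHealthChecks. Only the last call survives all four checks.

```python
import io
import logging

class DropHealthChecks(logging.Filter):
    """Handler-level filter: reject records whose message mentions 'health'."""
    def filter(self, record):
        return "health" not in record.getMessage()

logger = logging.getLogger("demo.order")
logger.setLevel(logging.INFO)                                        # check 1
logger.addFilter(lambda r: not r.getMessage().startswith("secret"))  # check 2 (callable filters: Python 3.2+)
logger.propagate = False   # keep the example independent of any root handlers

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setLevel(logging.WARNING)                                    # check 3
handler.addFilter(DropHealthChecks())                                # check 4
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
logger.addHandler(handler)

logger.debug("debug detail")         # stopped by 1: below the logger level INFO
logger.error("secret token")         # stopped by 2: rejected by the logger filter
logger.info("started")               # stopped by 3: below the handler level WARNING
logger.warning("health check slow")  # stopped by 4: rejected by the handler filter
logger.warning("disk almost full")   # passes all four checks

print(stream.getvalue(), end="")     # WARNING disk almost full
```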

In summary: the logger is the entry point, but the real work is done by handlers, which can further filter and format the output through filters and a formatter. A Logger can have multiple Handlers and multiple Filters (a one-to-many relationship), and a Handler can have multiple Filters but only one Formatter. A logger whose level is NOTSET inherits its effective level from its nearest ancestor that has a level set.

LogRecord

A Logger creates a LogRecord instance each time something is logged. The LogRecord's attributes are merged into the formatted output string. The following table lists the common attributes:

| Attribute name | Format | Description |
|---|---|---|
| args | You shouldn't need to format this yourself | The tuple of arguments merged into msg to produce message |
| asctime | %(asctime)s | Human-readable time when the LogRecord was created; by default in the form 2003-07-08 16:49:45,896 |
| filename | %(filename)s | Filename portion of pathname |
| funcName | %(funcName)s | Name of the function containing the logging call |
| levelname | %(levelname)s | Text logging level of the message: DEBUG, INFO, WARNING, ERROR, CRITICAL |
| lineno | %(lineno)d | Source line number where the logging call was issued (if available) |
| module | %(module)s | Module (name portion of filename) |
| msecs | %(msecs)d | Millisecond portion of the time when the LogRecord was created |
| message | %(message)s | The logged message, computed as msg % args; set when Formatter.format() is called |
| msg | You shouldn't need to format this yourself | The format string passed in the original logging call; merged with args to produce message, or an arbitrary object (see Using arbitrary objects as messages) |
| name | %(name)s | Name of the logger used to log the call |
| pathname | %(pathname)s | Full pathname of the source file where the logging call was issued (if available) |
| process | %(process)d | Process ID (if available) |
| processName | %(processName)s | Process name (if available) |
| thread | %(thread)d | Thread ID (if available) |
| threadName | %(threadName)s | Thread name (if available) |

Logger

The Logger object is the most commonly used object in the logging library. It serves three main purposes:

- Expose info(), warning(), error() and other methods that the application uses to create log records at the corresponding level.

- Decide which records are passed on, based on the logger's filters and level.

- Pass log records to all relevant handlers.

Loggers are never instantiated directly, but are always obtained through the module-level function logging.getLogger(name); if name is not given, the root logger is returned. Each instance has a name, and loggers are conceptually organized into a hierarchical namespace using dots (periods) as separators. For example, 'scan' is the parent logger of 'scan.text', 'scan.html' and 'scan.pdf'. Logger names can be anything that indicates the part of the application the logged information comes from. With this naming scheme, loggers further down the hierarchy are children of loggers higher up, and by default their records propagate to their ancestors' handlers. Because of this, there is no need to configure every logger in an application: it is enough to configure the top-level logger and let child loggers inherit from it as needed.

A good naming convention is to use a module-level logger in each module that uses logging, created like this: logger = logging.getLogger(__name__). Logger names then mirror the package and module hierarchy.
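A short sketch of the hierarchy in action, using the 'scan' names from the paragraph above: getLogger() returns the same object for the same name, the dotted name sets the parent, and a child with no level of its own inherits its parent's.

```python
import logging

# In a real module you would write: logger = logging.getLogger(__name__)
scan = logging.getLogger("scan")
text = logging.getLogger("scan.text")

# getLogger() caches loggers by name: asking again returns the same object.
assert logging.getLogger("scan") is scan

# The dotted name makes "scan" the parent of "scan.text".
print(text.parent is scan)                    # True

scan.setLevel(logging.ERROR)
# "scan.text" has no level of its own (NOTSET = 0), so it inherits ERROR (40).
print(text.level, text.getEffectiveLevel())   # 0 40

print(logging.getLogger().name)               # root
```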

If you attach a handler to a logger and also to one or more of its ancestors, the same record may be output multiple times. In general, you should not attach a handler to more than one logger: if you attach it only to the appropriate logger that is highest in the hierarchy, it will see all events logged by its descendant loggers, provided their propagate setting is left as True.
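A minimal sketch of the duplicate-output problem and its fix, assuming hypothetical loggers "svc" and "svc.api" that share one handler.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)   # no formatter set: output is just the message

parent = logging.getLogger("svc")
child = logging.getLogger("svc.api")

# Anti-pattern: the same handler on a logger and on its ancestor.
parent.addHandler(handler)
child.addHandler(handler)
child.warning("timeout")
print(stream.getvalue().splitlines())   # ['timeout', 'timeout']

# Fix: keep the handler only on the ancestor; the child's records still reach it.
child.removeHandler(handler)
stream.truncate(0)
stream.seek(0)
child.warning("timeout")
print(stream.getvalue().splitlines())   # ['timeout']
```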

The most common Logger methods fall into two categories: configuration methods and message-sending methods.

The most common configuration methods are:

| Method | Description |
|---|---|
| Logger.setLevel() | Sets the minimum severity level of log messages the logger will handle |
| Logger.addHandler() and Logger.removeHandler() | Add and remove a handler object on this logger |
| Logger.addFilter() and Logger.removeFilter() | Add and remove a filter object on this logger |

Note on Logger.setLevel(): among the built-in levels, DEBUG is the lowest and CRITICAL the highest. For example, after setLevel(logging.INFO) the logger handles only INFO, WARNING, ERROR and CRITICAL records, and DEBUG messages are ignored.

Likewise, logger.setLevel(logging.ERROR) sets the level to ERROR, so only records at ERROR or above are output. Note that the root logger always has an explicit level (WARNING by default).
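The level check can be observed directly with Logger.isEnabledFor(), which performs the same test that debug(), info() and the other methods apply before creating a record. A small sketch with a hypothetical logger "levels.demo":

```python
import logging

logger = logging.getLogger("levels.demo")
logger.setLevel(logging.INFO)

for level in (logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR):
    print(logging.getLevelName(level), logger.isEnabledFor(level))
# DEBUG False
# INFO True
# WARNING True
# ERROR True

# The root logger's default level.
print(logging.getLevelName(logging.getLogger().level))   # WARNING
```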

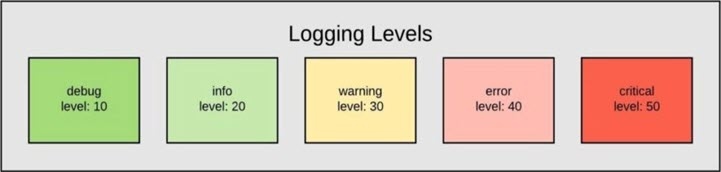

Log levels (from highest to lowest):

| Level | Value | Description |
|---|---|---|
| CRITICAL | 50 | A serious error that prevents the application from continuing to run |
| ERROR | 40 | A more serious problem that prevents some function from working properly |
| WARNING | 30 | Something undesirable happened (e.g. low free disk space), but the application is still running normally |
| INFO | 20 | Second only to DEBUG in detail; usually logs only key checkpoints to confirm that things are working as expected |
| DEBUG | 10 | The most detailed information, typically used for diagnosing problems |
| NOTSET | 0 | No level set: a non-root logger with this level defers to its ancestors' level |

Once the logger object is configured, log records can be created with the following methods:

| Method | Description |
|---|---|
| Logger.debug(msg, *args, **kwargs), Logger.info(msg, *args, **kwargs), Logger.warning(msg, *args, **kwargs), Logger.error(msg, *args, **kwargs), Logger.critical(msg, *args, **kwargs) | Create a log record with the level matching the method name |
| Logger.exception(msg, *args, **kwargs) | Creates a log record like Logger.error() (level ERROR), with exception information added |
| Logger.log(level, msg, *args, **kwargs) | Takes an explicit level argument to create a log record |

Four keyword arguments in kwargs are inspected: exc_info, stack_info, stacklevel and extra.

- If exc_info does not evaluate to false, exception information is added to the log message. You can pass an exception tuple (in the format returned by sys.exc_info()) or an exception instance; otherwise (for example exc_info=True), sys.exc_info() is called to obtain the exception information.

- stack_info defaults to False. If it is true, stack information is added to the log message, showing the stack up to the logging call. This differs from exc_info: stack_info shows the stack frames from the bottom of the stack up to the logging call in the current thread, while exc_info shows the traceback of the exception being handled, i.e. the frames that were unwound while searching for an exception handler.

- stacklevel defaults to 1. If it is greater than 1, the corresponding number of stack frames are skipped when computing the line number and function name stored in the LogRecord. This is useful in logging helper functions, so that the record points to the helper's caller.

- extra is a dictionary whose items are added to the LogRecord's __dict__, so these user-defined attributes can be used later, e.g. in a format string. For example, if the formatter uses fmt='%(asctime)-15s %(clientip)s %(user)-8s %(message)s', you can call warning("Problem: %s", "connection timeout", extra={'clientip': '192.168.0.1', 'user': 'fbloggs'}).
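A runnable version of the extra example above, as a sketch with a hypothetical logger "extra.demo". The asctime field is dropped from the format so the output is deterministic. Keys in extra must not clash with existing LogRecord attributes (or with "message" and "asctime"); logging raises KeyError if they do.

```python
import io
import logging

stream = io.StringIO()
handler = logging.StreamHandler(stream)
# clientip and user are not standard LogRecord attributes; they come from extra.
handler.setFormatter(logging.Formatter("%(clientip)-15s %(user)-8s %(message)s"))

logger = logging.getLogger("extra.demo")
logger.propagate = False
logger.addHandler(handler)

logger.warning("Problem: %s", "connection timeout",
               extra={"clientip": "192.168.0.1", "user": "fbloggs"})

print(repr(stream.getvalue()))
# '192.168.0.1     fbloggs  Problem: connection timeout\n'
```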

Notes:

- The difference between Logger.exception() and Logger.error() is that Logger.exception() also outputs the stack trace (the record still has level ERROR); it should normally only be called from an exception handler.

- Logger.log() is less convenient than Logger.debug() and Logger.info() because it needs an extra level argument, but it is the method to use when you need to log at a custom level.
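A sketch contrasting error() and exception() inside an except block, and using log() with a custom level. The level TRACE = 5 and the logger name "exc.demo" are hypothetical, chosen only for illustration.

```python
import io
import logging

stream = io.StringIO()
logger = logging.getLogger("exc.demo")
logger.propagate = False
logger.addHandler(logging.StreamHandler(stream))   # message-only output

config = {}
try:
    config["missing"]
except KeyError:
    logger.error("lookup failed")       # message only
    logger.exception("lookup failed")   # message plus traceback, level ERROR

# log() takes the level explicitly, which is what a custom level needs.
TRACE = 5                               # hypothetical custom level
logging.addLevelName(TRACE, "TRACE")
logger.setLevel(TRACE)
logger.log(TRACE, "entering handler")

print(stream.getvalue())
```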

Handler

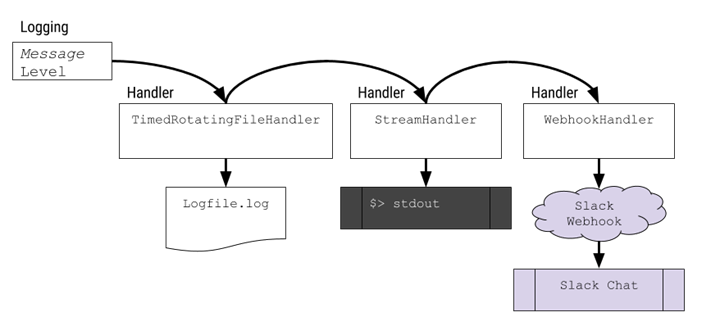

The Handler object dispatches log messages (based on their level) to the destination the handler represents (file, network, email, etc.). A Logger object can add zero or more Handler objects to itself with the addHandler() method. For example, an application may have the following logging requirements:

- Send all logs to a log file

- Send all logs with severity level greater than or equal to error to stdout (standard output)

- Send all logs with a severity level of critical to an email address

This scenario requires 3 different handlers, each of which sends a log of a specific severity level to a specific location.
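A minimal sketch of that three-handler setup. The logger name, the temporary log file and the SMTP host and addresses are hypothetical placeholders; no email is sent here because no CRITICAL record is logged.

```python
import logging
import logging.handlers
import os
import sys
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), "app.log")

logger = logging.getLogger("three.handlers")
logger.setLevel(logging.DEBUG)
logger.propagate = False

# 1. Every record goes to a log file.
file_handler = logging.FileHandler(log_path, encoding="utf-8")
file_handler.setLevel(logging.DEBUG)

# 2. ERROR and above also go to stdout.
console_handler = logging.StreamHandler(sys.stdout)
console_handler.setLevel(logging.ERROR)

# 3. CRITICAL records are mailed (placeholder server and addresses).
mail_handler = logging.handlers.SMTPHandler(
    mailhost="smtp.example.com",
    fromaddr="app@example.com",
    toaddrs=["oncall@example.com"],
    subject="CRITICAL error in app",
)
mail_handler.setLevel(logging.CRITICAL)

formatter = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
for h in (file_handler, console_handler, mail_handler):
    h.setFormatter(formatter)
    logger.addHandler(h)

logger.info("service started")           # file only
logger.error("payment gateway timeout")  # file and stdout
```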

Only a few handler methods matter to application developers. For developers using the built-in handler objects, the only relevant ones are essentially the following configuration methods:

| Method | Description |
|---|---|
| Handler.setLevel() | Sets the minimum severity level of log messages the handler will handle |
| Handler.setFormatter() | Sets a formatter object for the handler |
| Handler.addFilter() and Handler.removeFilter() | Add and remove a filter object on the handler |

Note that application code should not instantiate and use Handler instances directly: Handler is a base class that only defines the interface all handlers should have and provides some default behavior that subclasses can use or override. Here are some commonly used handlers:

| Handler | Class definition | Module | Description |
|---|---|---|---|
| StreamHandler | StreamHandler(stream=None) | logging | Outputs LogRecords to a stream such as sys.stdout, sys.stderr or any file-like object |
| FileHandler | FileHandler(filename, mode='a', encoding=None, delay=False) | logging | Inherits the output behavior of StreamHandler and writes LogRecords to a disk file |
| NullHandler | NullHandler() | logging | Added in Python 3.1; does no formatting or output at all. It is essentially a no-op handler for library developers |
| WatchedFileHandler | WatchedFileHandler(filename, mode='a', encoding=None, delay=False) | logging.handlers | Like FileHandler, but intended for Unix/Linux: it watches the log file and closes and reopens it when the file changes, e.g. after rotation by programs such as newsyslog or logrotate |
| RotatingFileHandler | RotatingFileHandler(filename, mode='a', maxBytes=0, backupCount=0, encoding=None, delay=False) | logging.handlers | Subclass of BaseRotatingHandler that rotates logs by size: when the file is about to exceed maxBytes, it is renamed to filename.1, filename.2, and so on, keeping at most backupCount old files. If maxBytes or backupCount is 0, rollover never occurs |
| TimedRotatingFileHandler | TimedRotatingFileHandler(filename, when='h', interval=1, backupCount=0, encoding=None, delay=False, utc=False, atTime=None) | logging.handlers | Subclass of BaseRotatingHandler that rotates logs by time. when specifies the interval type and interval the interval length; rotated files get a date/time suffix whose format depends on the interval (e.g. %Y-%m-%d_%H-%M-%S for seconds, %Y-%m-%d for days). If backupCount is non-zero, at most backupCount old log files are kept |
| SocketHandler | SocketHandler(host, port) | logging.handlers | Sends LogRecords to a network socket, TCP by default; if port is None, a Unix domain socket at host is used |
| DatagramHandler | DatagramHandler(host, port) | logging.handlers | Subclass of SocketHandler that uses a UDP socket by default; if port is None, a Unix domain socket is used |
| SysLogHandler | SysLogHandler(address=('localhost', SYSLOG_UDP_PORT), facility=LOG_USER, socktype=socket.SOCK_DGRAM) | logging.handlers | Sends LogRecords to a remote or local Unix syslog. If address is not given, ('localhost', 514) is used. UDP is the default; to use TCP (not supported on Windows), set socktype to socket.SOCK_STREAM |
| NTEventLogHandler | NTEventLogHandler(appname, dllname=None, logtype='Application') | logging.handlers | Sends LogRecords to the local Windows NT, Windows 2000 or Windows XP event log; requires the Win32 extensions for Python. appname is the application name shown in the event log (an appropriate registry entry is created with this name); dllname should give the fully qualified pathname of a .dll or .exe containing the message definitions (win32service.pyd by default); logtype is Application, System or Security |
| SMTPHandler | SMTPHandler(mailhost, fromaddr, toaddrs, subject, credentials=None, secure=None, timeout=1.0) | logging.handlers | Sends LogRecords to a set of email addresses via SMTP; credentials enables authentication, and the secure (TLS) setting is only used when credentials are supplied |
| MemoryHandler | MemoryHandler(capacity, flushLevel=ERROR, target=None, flushOnClose=True) | logging.handlers | A more general implementation of BufferingHandler that buffers LogRecords in memory and flushes them to target when the buffer reaches capacity or a record at flushLevel or above arrives |
| HTTPHandler | HTTPHandler(host, url, method='GET', secure=False, credentials=None, context=None) | logging.handlers | Sends LogRecords to a web server using GET or POST. Set secure=True for HTTPS (context can supply an ssl.SSLContext); credentials is a (userid, password) tuple sent with HTTP Basic authentication |
| QueueHandler | QueueHandler(queue) | logging.handlers | Sends LogRecords to a queue, such as those in the queue or multiprocessing modules. This lets slow logging work run on a separate thread, which helps web applications whose client-facing threads must respond as quickly as possible. Its enqueue(record) method uses the queue's put_nowait() by default, but you can override this |
| QueueListener | QueueListener(queue, *handlers, respect_handler_level=False) | logging.handlers | Not actually a handler; it works together with QueueHandler, taking records off the queue and passing them to the given handlers. Its dequeue(block) method calls the queue's get() by default, but you can override this |
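A sketch of the QueueHandler and QueueListener pairing from the last two rows, assuming a hypothetical logger "queued". The logging call only enqueues the record; the listener's background thread hands it to the real (potentially slow) handler.

```python
import io
import logging
import logging.handlers
import queue

log_queue = queue.Queue(-1)   # unbounded

# The destination handler runs on the listener's background thread.
stream = io.StringIO()
target = logging.StreamHandler(stream)
target.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
listener = logging.handlers.QueueListener(log_queue, target)

logger = logging.getLogger("queued")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(logging.handlers.QueueHandler(log_queue))   # cheap: only enqueues

listener.start()
logger.info("request handled")
listener.stop()   # processes the remaining records, then joins the thread

print(stream.getvalue(), end="")   # INFO request handled
```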

Filter

Filters complement levels by providing a finer-grained tool for deciding which LogRecords are output. Multiple filters can be attached to both loggers and handlers. You can write your own filter as an object with a filter(record) method that takes a LogRecord as input and returns True or False, which allows more complex filtering than levels alone. The base class, logging.Filter(name=''), only lets through events from loggers below a certain point in the logger hierarchy.

For example, a filter initialized with the name 'A.B' allows records logged by loggers named 'A.B', 'A.B.C', 'A.B.C.D', 'A.B.D' and so on, but not those from 'A.BB' or 'B.A.B'. If name is an empty string, all events pass the filter.

The filter(record) method decides whether a record passes: a return value of 0 (false) means it is rejected, and a non-zero (true) value means it passes.

Notes:

- The record can also be modified inside filter(record) if needed, e.g. by adding, removing or changing attributes.

- Filters can also collect statistics, for example counting the records processed by a particular logger or handler.
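A sketch covering both the name-based base Filter and a custom filter. The 'A.B' cases come from the text above; ContextFilter, its count attribute and the "user" value are hypothetical, illustrating the record-modifying and statistics uses just described.

```python
import io
import logging

def make_record(name):
    # Build a record by hand just to exercise the filter.
    return logging.LogRecord(name, logging.INFO, "demo.py", 1, "msg", None, None)

name_filter = logging.Filter("A.B")
for name in ("A.B", "A.B.C", "A.B.C.D", "A.BB", "B.A.B"):
    print(name, bool(name_filter.filter(make_record(name))))
# A.B True, A.B.C True, A.B.C.D True, A.BB False, B.A.B False

class ContextFilter(logging.Filter):
    """Counts the records it sees and adds a hypothetical 'user' attribute."""
    def __init__(self):
        super().__init__()
        self.count = 0

    def filter(self, record):
        self.count += 1
        record.user = "alice"   # hypothetical context value
        return True

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(user)s %(message)s"))
context = ContextFilter()
handler.addFilter(context)

logger = logging.getLogger("filter.demo")
logger.propagate = False
logger.addHandler(handler)
logger.warning("first")
logger.warning("second")
print(context.count, stream.getvalue().splitlines())   # 2 ['alice first', 'alice second']
```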

Formatter

The Formatter object configures the final order, structure and content of log messages. Unlike the logging.Handler base class, application code may instantiate the Formatter class directly. If your application needs special processing, you can also implement a subclass of Formatter.

The constructor of the Formatter class is logging.Formatter(fmt=None, datefmt=None, style='%').


The constructor takes three optional parameters (newer versions add more, such as validate in Python 3.8 and defaults in Python 3.10):

- fmt: the message format string; if not given, '%(message)s' is used, i.e. just the logged message

- datefmt: the date format string; if not given, '%Y-%m-%d %H:%M:%S' is used, with milliseconds appended (e.g. 2003-07-08 16:49:45,896)

- style: added in Python 3.2; one of '%', '{' or '$', defaulting to '%'
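A sketch showing that the three style values are just different placeholder syntaxes for the same LogRecord attributes. The record is built by hand with hypothetical values. Note that style only affects the fmt string; msg is still merged with args using %.

```python
import logging

record = logging.LogRecord("web", logging.WARNING, "app.py", 42,
                           "disk %s%% full", (91,), None)

percent = logging.Formatter("%(levelname)s:%(name)s:%(message)s")            # style='%'
brace = logging.Formatter("{levelname}:{name}:{message}", style="{")
dollar = logging.Formatter("$levelname:$name:$message", style="$")

for formatter in (percent, brace, dollar):
    print(formatter.format(record))   # WARNING:web:disk 91% full (three times)
```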

The formatter determines the final output form of the LogRecord. If no format is provided, '%(message)s' is used, which contains only the message passed to the logging call. A formatter is created with ft = logging.Formatter(fmt=None, datefmt=None, style='%'), and the format string can reference any LogRecord attribute as a placeholder; if datefmt is not specified, it defaults to the %Y-%m-%d %H:%M:%S style.

| Field/attribute name | Format | Description |
|---|---|---|
| asctime | %(asctime)s | Human-readable time of the log event, e.g. 2003-07-08 16:49:45,896 |
| created | %(created)f | Time of the log event as a timestamp, i.e. the value returned by time.time() at that moment |
| relativeCreated | %(relativeCreated)d | Time of the log event in milliseconds, relative to when the logging module was loaded |
| msecs | %(msecs)d | Millisecond portion of the log event time |
| levelname | %(levelname)s | Text level of the record ('DEBUG', 'INFO', 'WARNING', 'ERROR', 'CRITICAL') |
| levelno | %(levelno)s | Numeric level of the record (10, 20, 30, 40, 50) |
| name | %(name)s | Name of the logger used; 'root' when the root logger is used |
| message | %(message)s | Text of the log record, computed as msg % args |
| pathname | %(pathname)s | Full path of the source file where the logging call was made |
| filename | %(filename)s | Filename portion of pathname, including the extension |
| module | %(module)s | Name portion of filename, without the extension |
| lineno | %(lineno)d | Source line number of the logging call |
| funcName | %(funcName)s | Name of the function that made the logging call |
| process | %(process)d | Process ID |
| processName | %(processName)s | Process name (new in Python 3.1) |
| thread | %(thread)d | Thread ID |
| threadName | %(threadName)s | Thread name |

Configuration of the logging module

The main benefit of a logging system such as this is that it is possible to control the amount and content of logging output obtained from an application without having to change the source code of that application. Therefore, while it is possible to perform configuration via the logging API, it must also be possible to change the logging configuration without changing the application at all. For long-running applications like Zope, it should be possible to change the logging configuration while the application is running.

The configuration covers the following:

- The level each logger or handler should pay attention to.

- Which handlers are attached to which loggers.

- Which filters are attached to which handlers and loggers.

- Properties specific to certain handlers and filters.

In general, each application has its own requirements for how users configure logging output. However, each application will specify the required configuration for the logging system through a standard mechanism.

The simplest configuration is a single handler that writes to stderr and is attached to the root logger. It is set up by calling basicConfig() after importing the logging module.

Basic configuration basicConfig

The simplest setup is a single call: logging.basicConfig(level=logging.INFO).


Calling basicConfig(level=logging.INFO) creates a basic StreamHandler, so any LogRecord at INFO level or above is output to the console (stderr). The basic setup accepts the following parameters:

| Parameter name | Description |
|---|---|
| filename | Filename of the target log file. When set, log messages are written to that file instead of the console |
| filemode | Open mode of the log file, 'a' by default. Only used when filename is specified |
| format | Log format string, specifying which fields appear in the output and in what order. The available fields are the LogRecord attributes listed above |
| datefmt | Date/time format. Only takes effect if format contains the time field %(asctime)s |
| level | Level of the root logger |
| stream | Target stream, such as sys.stdout, sys.stderr or a network stream. stream and filename cannot both be given, otherwise a ValueError is raised |
| style | Added in Python 3.2. Style of the format string: '%', '{' or '$' (default '%') |
| handlers | Added in Python 3.3. If given, an iterable of already-created handlers to add to the root logger; handlers without a formatter get the default formatter created by basicConfig. It cannot be combined with filename or stream, otherwise a ValueError is raised |

Dictionary configuration dictConfig

A dictionary can describe the configuration of each element. It is made up of sections for loggers, handlers and formatters, plus some general parameters such as version (required, and currently always 1).
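A minimal dictConfig sketch. The formatter, handler and logger names ("simple", "console", "myapp") are hypothetical; the keys (version, disable_existing_loggers, formatters, handlers, loggers, root) are the standard schema.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,                        # required; currently always 1
    "disable_existing_loggers": False,   # keep loggers created at import time
    "formatters": {
        "simple": {"format": "%(asctime)s %(name)s %(levelname)s %(message)s"},
    },
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",
            "level": "DEBUG",
            "formatter": "simple",
            "stream": "ext://sys.stdout",   # ext:// resolves an external object
        },
    },
    "loggers": {
        "myapp": {"level": "DEBUG", "handlers": ["console"], "propagate": False},
    },
    "root": {"level": "WARNING", "handlers": ["console"]},
}

logging.config.dictConfig(LOGGING)
logging.getLogger("myapp").debug("configured via dictConfig")
```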


By default, dictConfig disables all existing loggers that are not named in the configuration (or descended from a named logger), unless disable_existing_loggers is set to False. Setting it to False is usually necessary, because many modules declare a module-level logger that is created at import time, before dictConfig is called.

File configuration fileConfig

logging.conf uses the configparser (INI) format: each section name is written in square brackets and matched with a pattern like r'^\[(.*)\]$', which is how every component is picked up. When a component has several instances, their names are separated by commas.


The configuration file is then loaded in code with logging.config.fileConfig().
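A sketch of a logging.conf and how it is loaded. The section and component names (consoleHandler, simpleFormatter, simpleExample) are illustrative. The INI text is inlined through io.StringIO so the example runs on its own; with a real file you would call logging.config.fileConfig("logging.conf").

```python
import io
import logging
import logging.config

CONFIG = """
[loggers]
keys=root,simpleExample

[handlers]
keys=consoleHandler

[formatters]
keys=simpleFormatter

[logger_root]
level=WARNING
handlers=consoleHandler

[logger_simpleExample]
level=DEBUG
handlers=consoleHandler
qualname=simpleExample
propagate=0

[handler_consoleHandler]
class=StreamHandler
level=DEBUG
formatter=simpleFormatter
args=(sys.stdout,)

[formatter_simpleFormatter]
format=%(asctime)s - %(name)s - %(levelname)s - %(message)s
"""

# fileConfig accepts a filename, a file-like object or a ConfigParser instance.
logging.config.fileConfig(io.StringIO(CONFIG))

logger = logging.getLogger("simpleExample")
logger.debug("debug message")
logger.warning("warning message")
```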

Examples of using the logging module

Basic Use


By default, the level is WARNING, output goes to the console (stderr) rather than to a file, and the default format is used. We can pass parameters to basicConfig to customize this.


After running it, the log records appear in test.log, formatted according to the format string.

Note that the output now goes to the file, so nothing is printed on the screen.

Custom logger

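A custom logger is created with logging.getLogger(), given its own level, and given one or more handlers, each with its own level and formatter, for example one handler for the console and another for a file.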
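A sketch of such a logger. The helper get_logger, the logger name "custom.app" and the file app.log are hypothetical. The console shows INFO and above, while the file also receives DEBUG records; the handlers check guards against adding duplicate handlers when the helper is called twice.

```python
import logging

def get_logger(name, log_file="app.log"):
    """Hypothetical helper: a logger with a console handler and a file handler."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.DEBUG)
    if logger.handlers:   # already configured: avoid duplicate handlers
        return logger

    formatter = logging.Formatter("%(asctime)s - %(name)s - %(levelname)s - %(message)s")

    console = logging.StreamHandler()   # stderr
    console.setLevel(logging.INFO)
    console.setFormatter(formatter)

    file_handler = logging.FileHandler(log_file, encoding="utf-8")
    file_handler.setLevel(logging.DEBUG)
    file_handler.setFormatter(formatter)

    logger.addHandler(console)
    logger.addHandler(file_handler)
    return logger

log = get_logger("custom.app")
log.debug("written to the file only")
log.info("written to the console and the file")
```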


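Note: if you create a custom Logger object, don't mix in the module-level functions such as logging.info(). They log through the root logger and, if the root logger has no handlers, call basicConfig() to add a console handler to it. Records from your custom logger then also propagate to that root handler, so messages appear twice.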

Problems in practice

Solving garbled Chinese characters
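A common cause is that FileHandler, when no encoding is given, opens the file with the platform's locale encoding, which may not be UTF-8 (for example on some Windows setups). A minimal sketch of the fix, assuming a hypothetical file utf8.log: pass encoding="utf-8" explicitly (basicConfig also accepts encoding since Python 3.9).

```python
import logging

logger = logging.getLogger("encoding.demo")
logger.setLevel(logging.INFO)
logger.propagate = False

# An explicit encoding avoids depending on the locale's default encoding.
handler = logging.FileHandler("utf8.log", mode="w", encoding="utf-8")
logger.addHandler(handler)

logger.info("日志测试：中文内容")
handler.close()

with open("utf8.log", encoding="utf-8") as f:
    print(f.read(), end="")   # 日志测试：中文内容
```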

Temporarily disable log output
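One way to do this is logging.disable(level), which suppresses all records at that level and below across every logger until logging.disable(logging.NOTSET) restores normal behavior. A sketch with a hypothetical logger "quiet.demo":

```python
import io
import logging

stream = io.StringIO()
logger = logging.getLogger("quiet.demo")
logger.propagate = False
logger.addHandler(logging.StreamHandler(stream))

logging.disable(logging.CRITICAL)   # suppress everything at CRITICAL and below
logger.critical("suppressed")

logging.disable(logging.NOTSET)     # re-enable logging
logger.warning("visible again")

print(stream.getvalue(), end="")    # visible again
```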

Splitting log files by time or by size
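A sketch using the two rotating handlers from the handler table, with hypothetical file names in a temporary directory. The size-based handler rolls over at about 1 KB and keeps three backups (size.log.1 to size.log.3); the time-based handler rotates at midnight and keeps seven old files.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler, TimedRotatingFileHandler

log_dir = tempfile.mkdtemp()

size_handler = RotatingFileHandler(
    os.path.join(log_dir, "size.log"), maxBytes=1024, backupCount=3, encoding="utf-8"
)
time_handler = TimedRotatingFileHandler(
    os.path.join(log_dir, "time.log"), when="midnight", interval=1,
    backupCount=7, encoding="utf-8",
)

logger = logging.getLogger("rotating.demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(size_handler)
logger.addHandler(time_handler)

# About 4.4 KB in total: enough to force several size-based rollovers.
for i in range(100):
    logger.info("line %d: some padding text to fill the file", i)

print(sorted(os.listdir(log_dir)))
```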


Logging in JSON format
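A minimal sketch of JSON logging with a Formatter subclass. JsonFormatter and the chosen keys are hypothetical; each record becomes one JSON object per line, which log collectors can parse easily.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Hypothetical formatter that renders each record as one JSON object."""

    def format(self, record):
        payload = {
            "time": self.formatTime(record, self.datefmt),
            "logger": record.name,
            "level": record.levelname,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exc_info"] = self.formatException(record.exc_info)
        # ensure_ascii=False keeps non-ASCII text (e.g. Chinese) readable.
        return json.dumps(payload, ensure_ascii=False)

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("json.demo")
logger.propagate = False
logger.addHandler(handler)
logger.warning("disk usage at %d%%", 91)

entry = json.loads(stream.getvalue())
print(entry["level"], entry["message"])   # WARNING disk usage at 91%
```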
