20,000 words long article takes you from the source code to dissect the Go language GC implementation.

Introduction

Three-color marking method

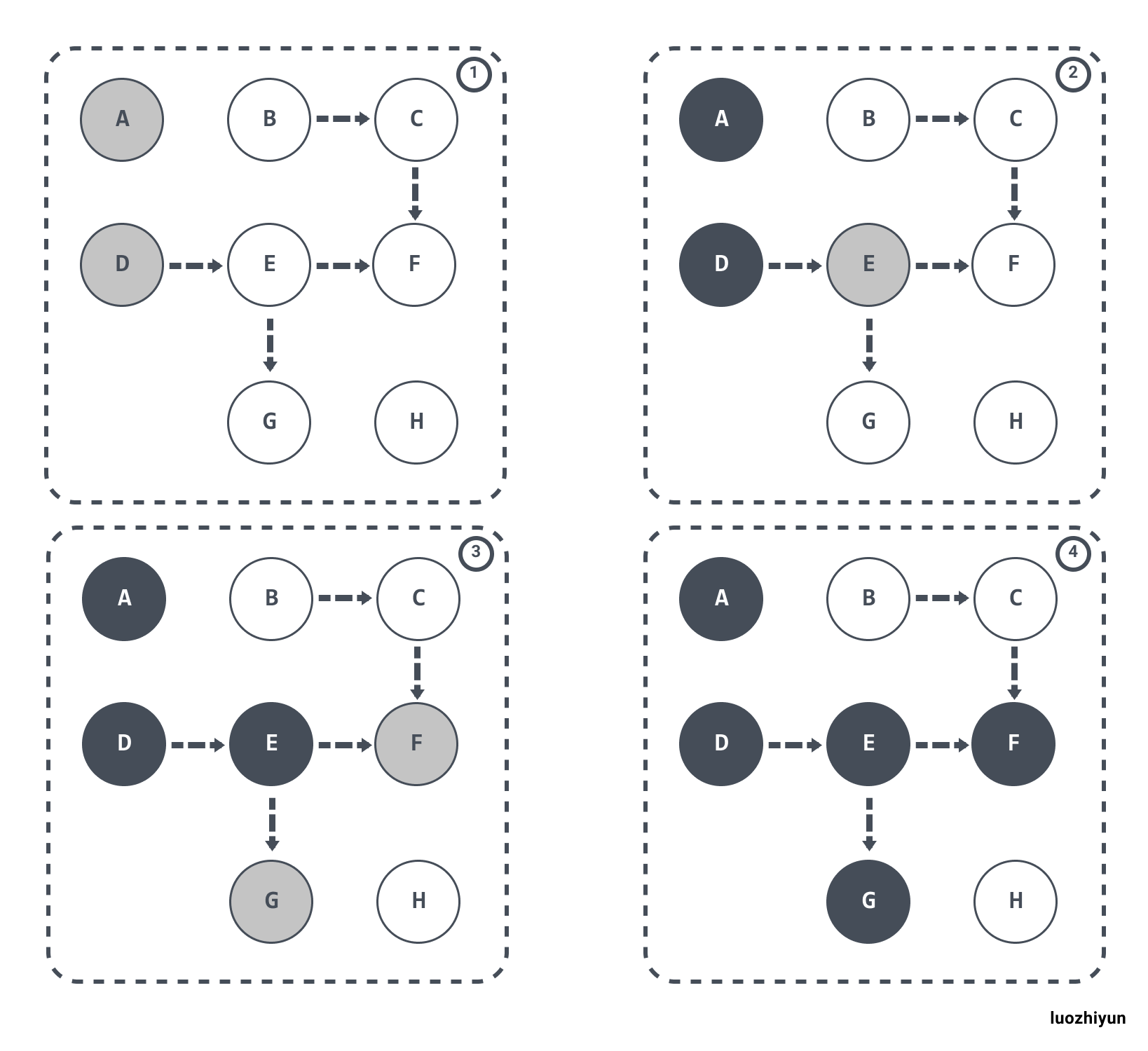

The three-color marking method divides the color of the object into three colors: black, gray, and white.

- Black: the object has been marked and all the properties under the object have been marked (the object required by the program).

- gray: the object has been marked, but the properties under the object are not all marked (the GC needs to find garbage from this object).

- white: the object has not been marked (object garbage).

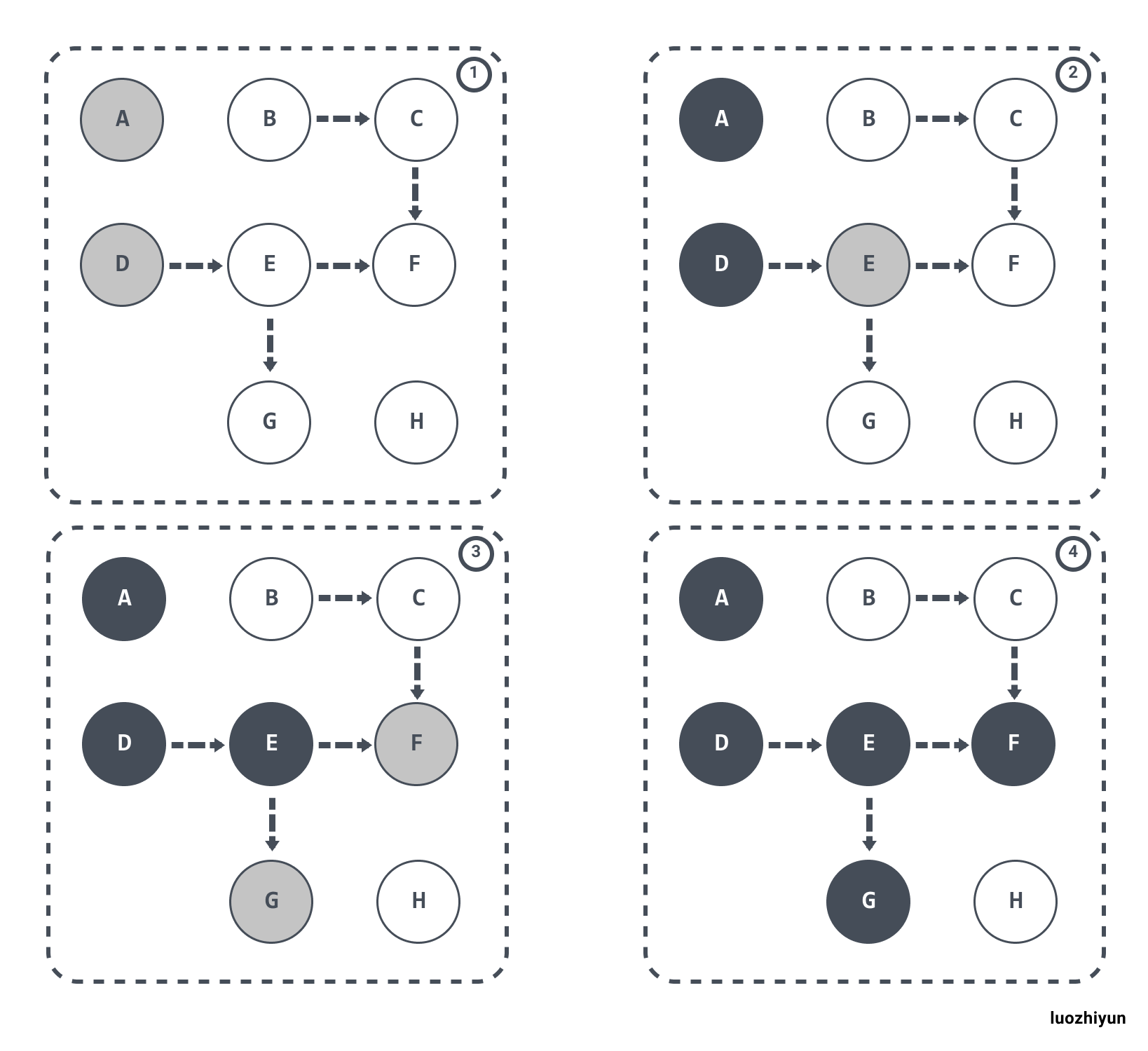

When the garbage collector starts working, traversal access is made from GC Roots, and the access steps can be divided into the following steps.

- the GC Roots object will be marked in gray.

- the object is then fetched from the gray collection, marked as black, and the object to which it refers is marked as gray

- repeating step 2 until there are no more grey collections to mark.

- when finished, the remaining white objects that are not marked are GC Roots unreachable and can be recycled.

The process is roughly as follows.

Let’s talk about the problems that can exist with the three-color marking method.

Problems with the three-color markup method

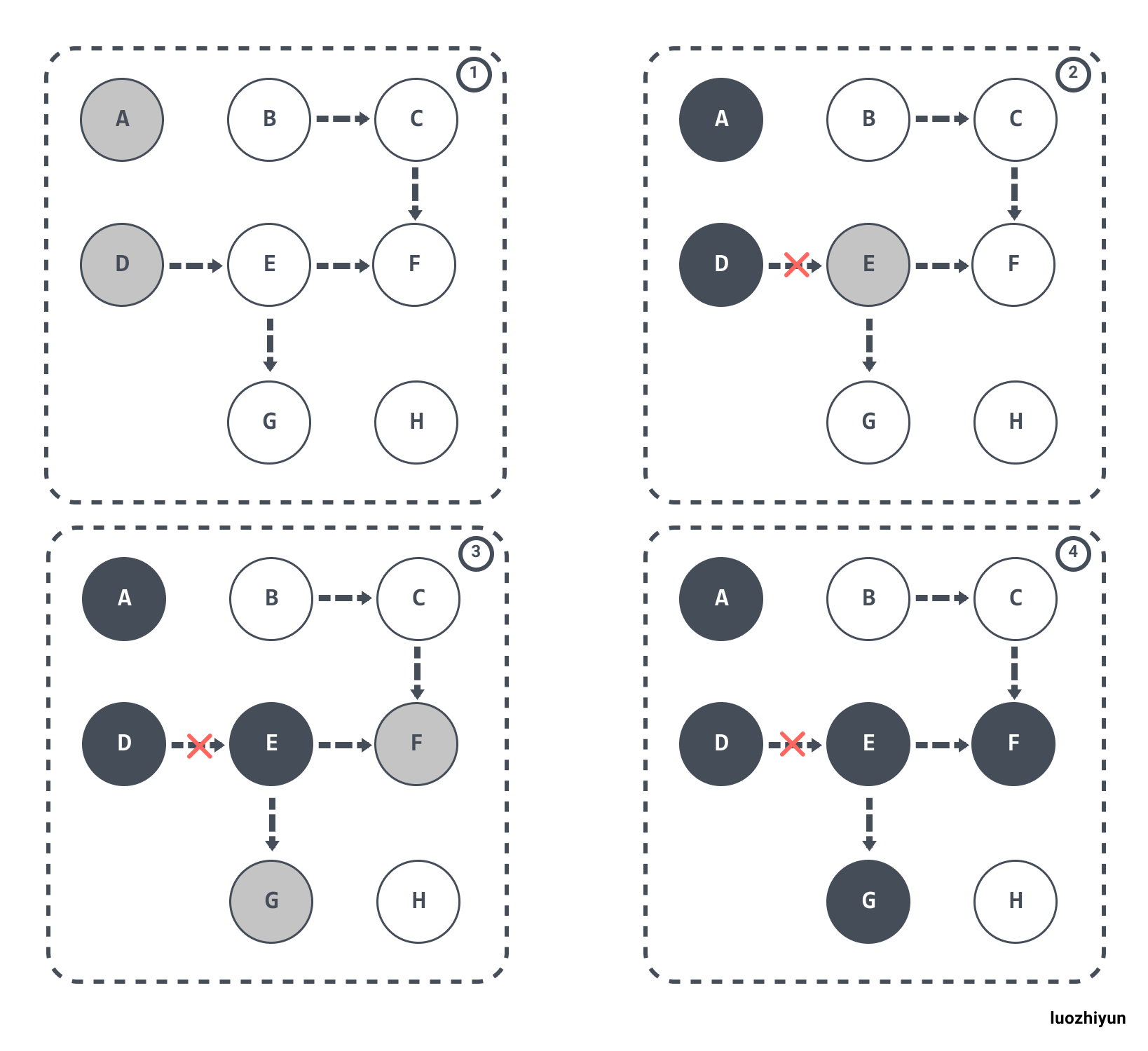

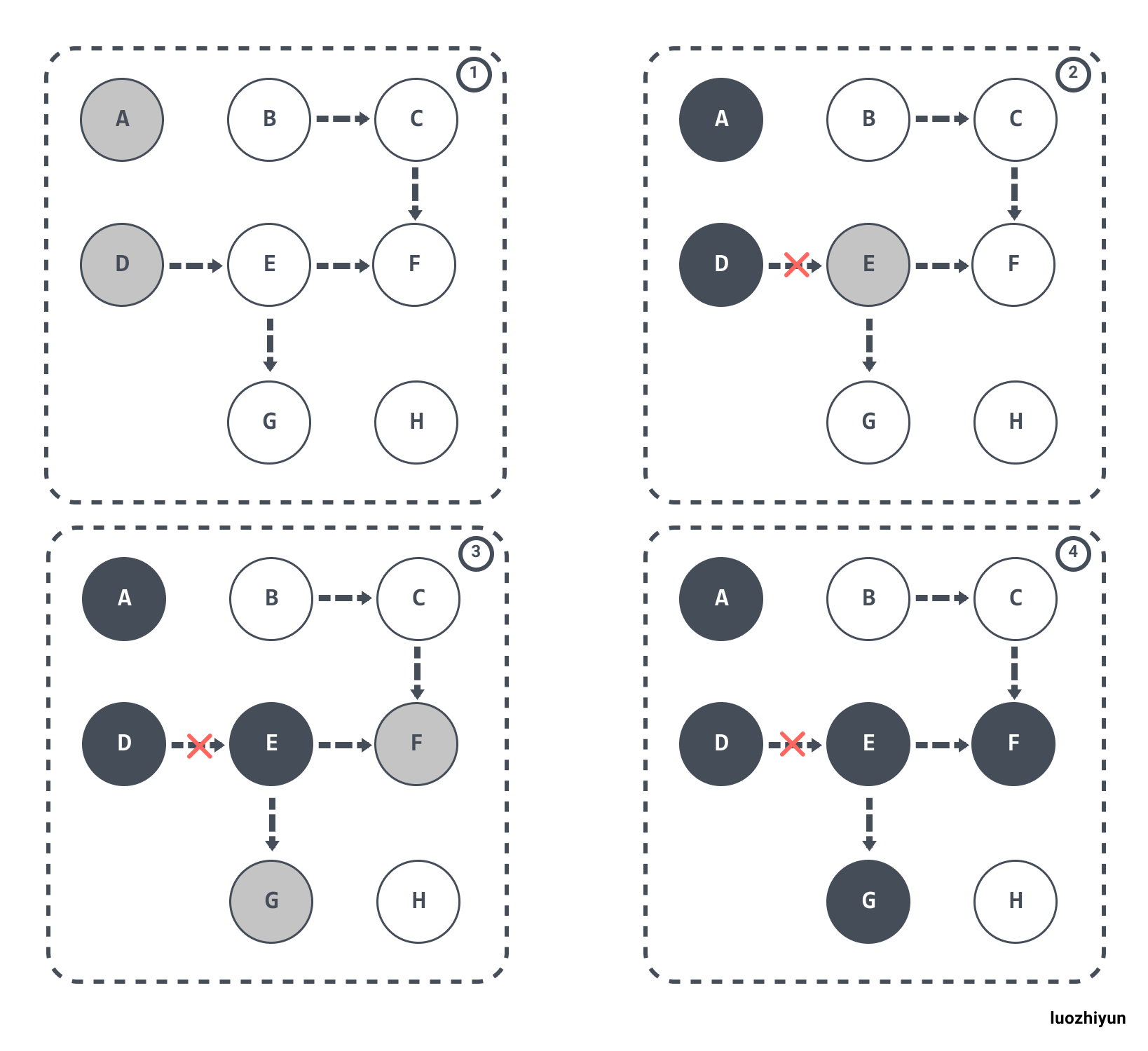

Multi-tagging - floating garbage problem

Suppose E has already been marked (grayed out), and D and E are disconnected from each other, the objects E/F/G should be recycled, but since E has been grayed out, it will still be treated as a living object and continue to be traversed. The result is that this part of the object will still be marked as alive, i.e., this part of memory will not be reclaimed in this GC round.

The memory that should have been recovered but was not recovered is called “floating garbage”. The process is shown in the figure below.

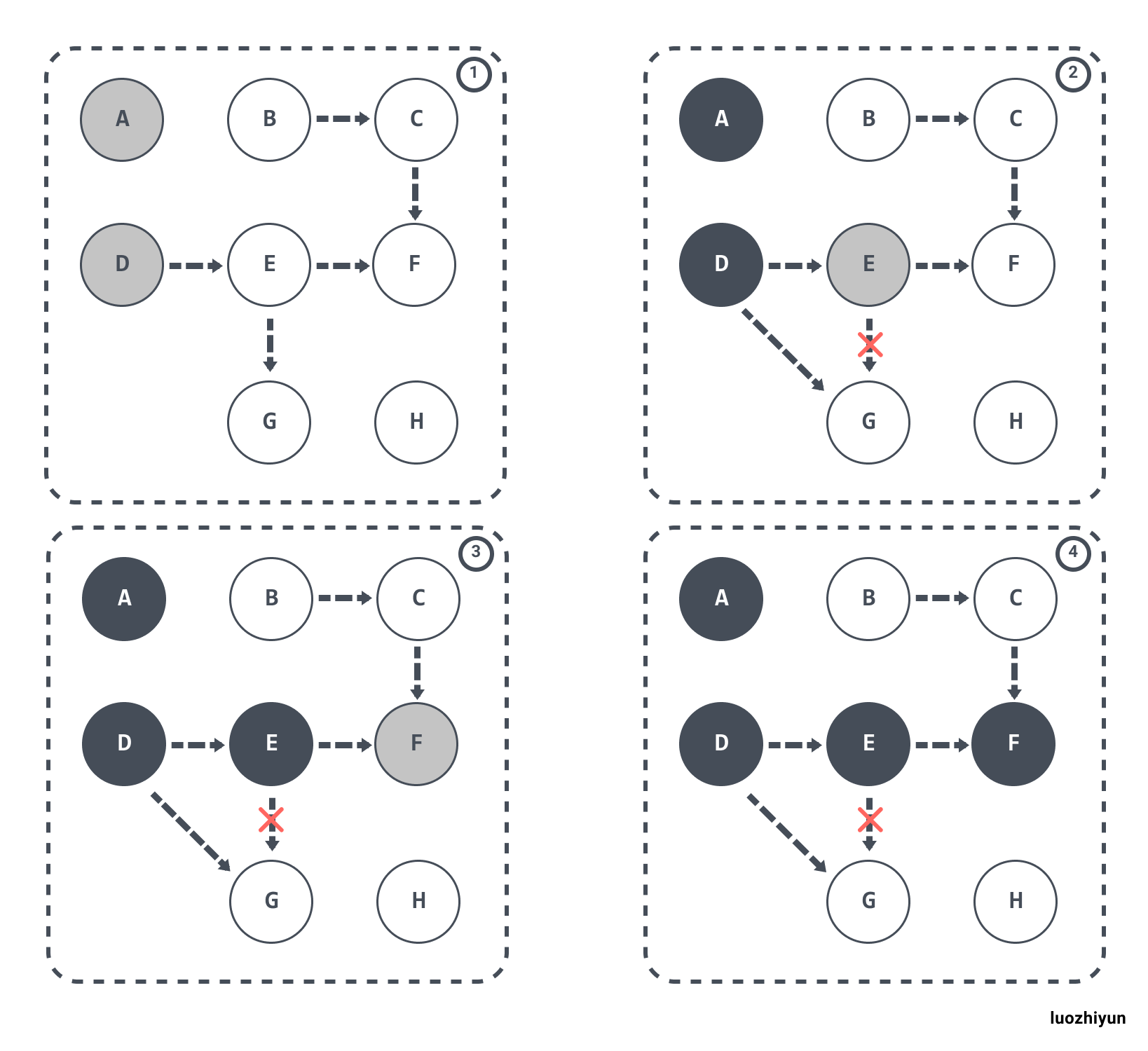

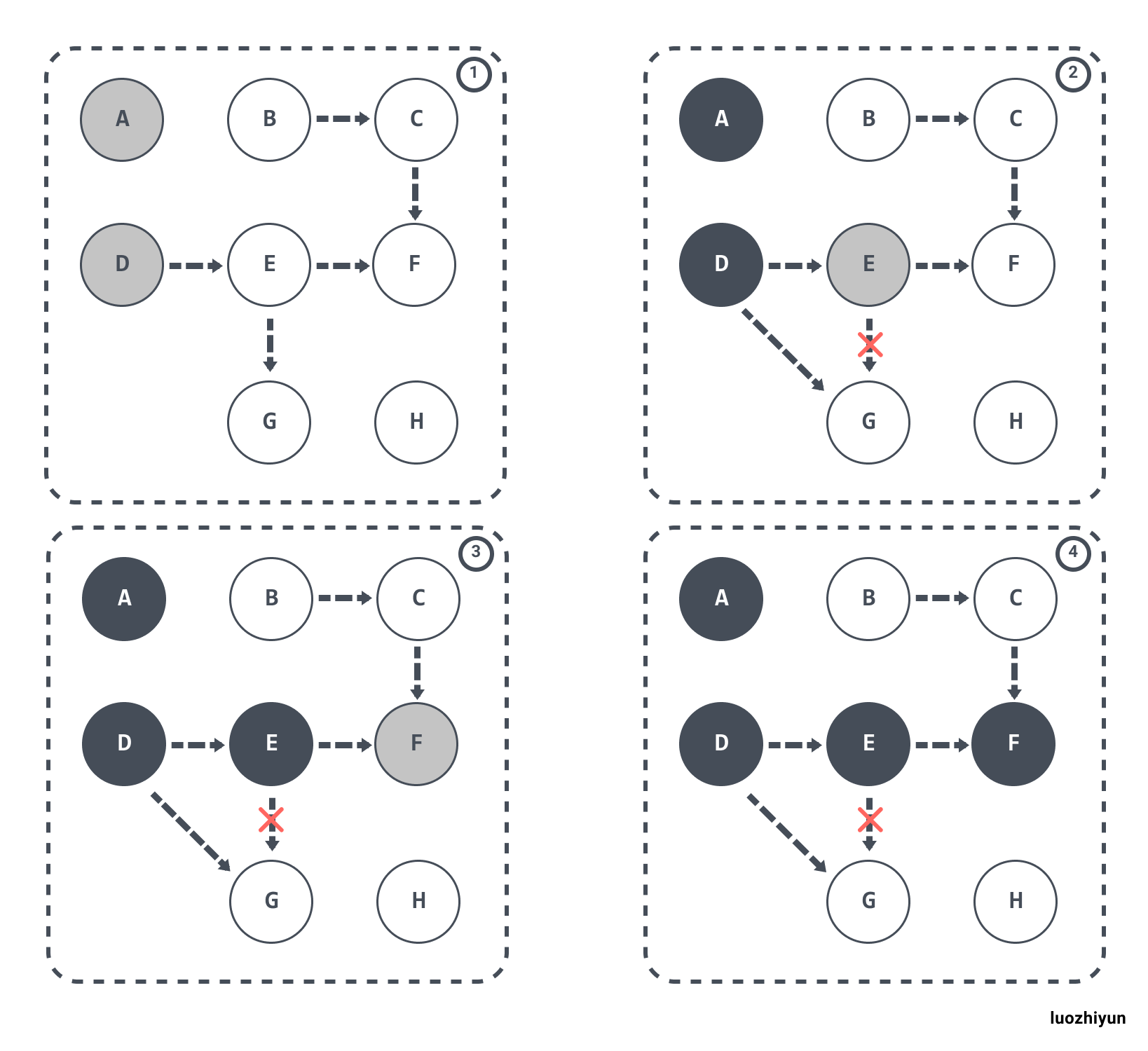

Missing marker - hanging pointer problem

In addition to the above multi-labeling problem, there is also the missing-labeling problem. When the GC thread has traversed until E becomes gray and D becomes black, gray E breaks the reference to white G and black D references white G. At this point, it cuts back to the GC thread and continues running because E no longer has a reference to G, so it does not place G in the gray collection. Even though D has re-referenced G, it will not be re-traversed because D is already black.

The end result is that G stays in the white collection and is eventually purged as garbage. This has a direct impact on the correctness of the application and is unacceptable, and is something Go needs to address at GC time.

Memory Barrier

In order to solve the hanging pointer problem above, we need to introduce barrier techniques to guarantee data consistency.

A memory barrier , is a type of barrier instruction that causes a central processing unit (CPU) or compiler to enforce an ordering constraint on memory operations issued before and after the barrier instruction. This typically means that operations issued prior to the barrier are guaranteed to be performed before operations issued after the barrier.

Then in order to guarantee correctness in the marking algorithm, then we need to meet any of the following conditions.

- strong tri-color invariant: the black object does not point to the white object, but only to the gray object or to the black object.

- weak tri-color invariant: even if a black object points to a white object, there is always a path from the gray object to that white object.

Depending on the type of operation, we can divide memory barriers into Read barriers and Write barriers. In Go, the Write barrier is used.

The reason for this is also mentioned in “Uniprocessor Garbage Collection Techniques”.

If a non copying collector is used the use of a read barrier is an unnecessary expense.there is no need to protect the mutator from seeing an invalid version of a pointer. Write barrier techniques are cheaper, because heap writes are several times less common than heap reads

The Read barrier is costly for a garbage collector that does not require object copies because there is no need to preserve the version pointer problem for read operations for this type of garbage collector. The Write barrier code is comparatively smaller, because the write operations in the heap are much smaller than the read operations in the heap.

Let’s see how the Write barrier does this.

Dijkstra Write barrier

Prior to Go 1.7, the Dijkstra Write barrier was used, using an implementation similar to the following pseudo-code.

1

2

3

|

writePointer(slot, ptr):

shade(ptr)

*slot = ptr

|

If the object is white, shade(ptr) will mark the object as gray. This ensures strong tricolor invariance, and it ensures that the object pointed to by the ptr pointer is not white until it is assigned to *slot.

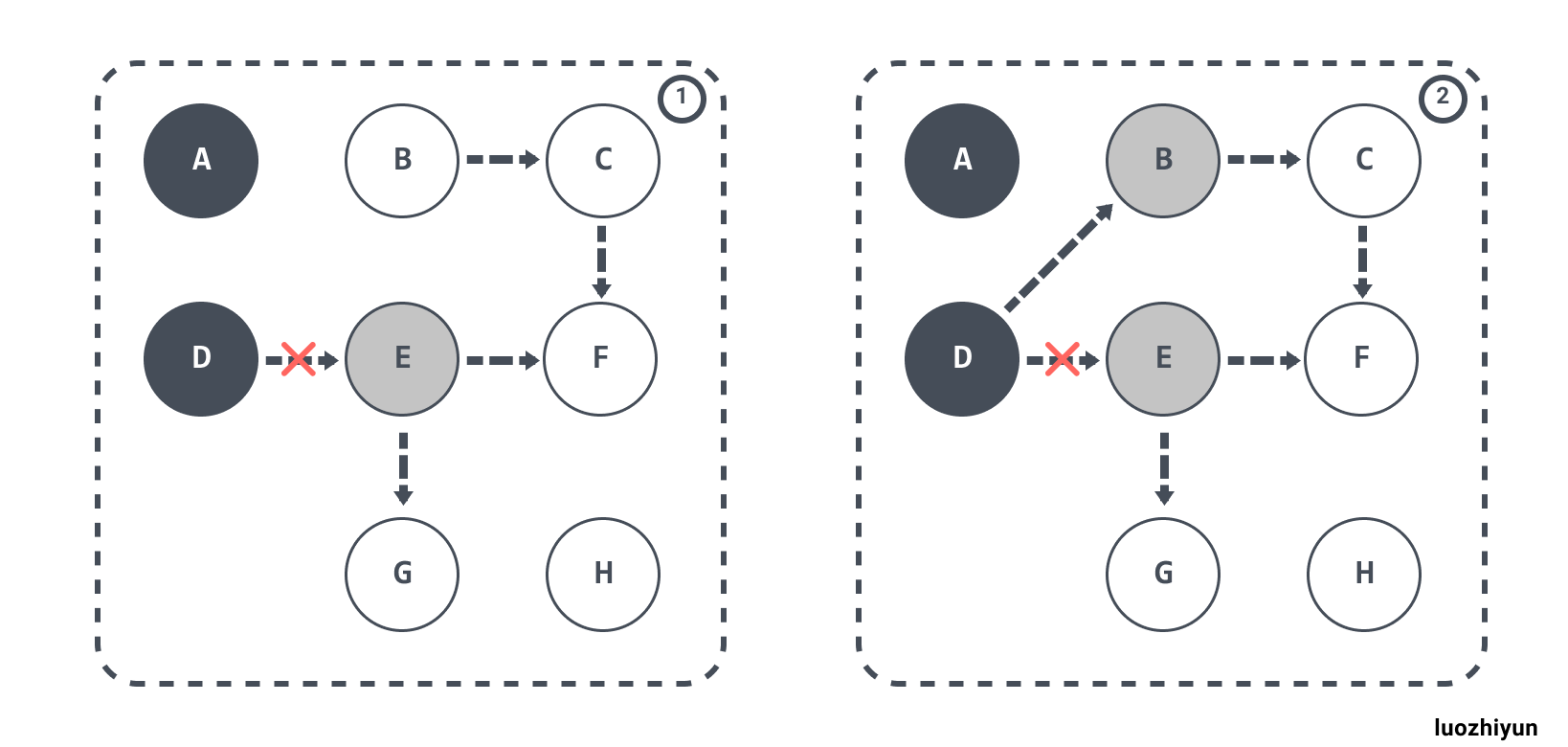

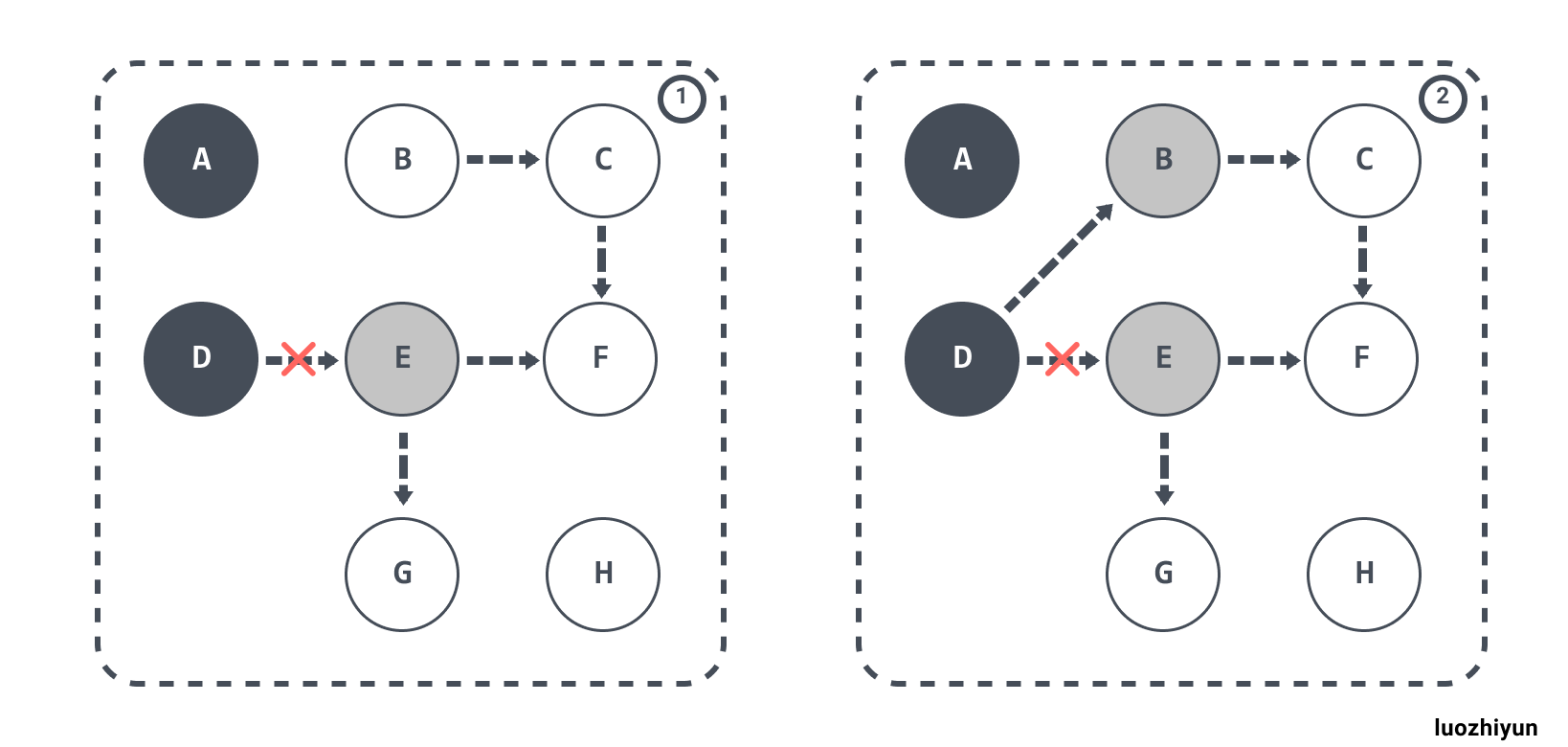

As follows, the D object pointed to by the root object is marked black and the E object pointed to by the D object is marked gray; if D breaks its reference to E and refers to the B object instead, then the write barrier is triggered to mark the B object gray.

The Dijkstra Write barrier is very simple to implement and guarantees strong tricolor invariance. However, it has some drawbacks as presented in “Proposal: Eliminate STW stack re-scanning”.

In particular, it presents a trade-off for pointers on stacks: either writes to pointers on the stack must have write barriers, which is prohibitively expensive, or stacks must be permagrey.

The Go 1.7 option is to mark the stacks as constant gray, but these stacks need to be rescanned (re-scan) during the mark termination phase STW. The reason for this is described below.

without stack write barriers, we can’t ensure that the stack won’t later contain a reference to a white object, so a scanned stack is only black until its goroutine executes again, at which point it conservatively reverts to grey. Thus, at the end of the cycle, the garbage collector must re-scan grey stacks to blacken them and finish marking any remaining heap pointers.

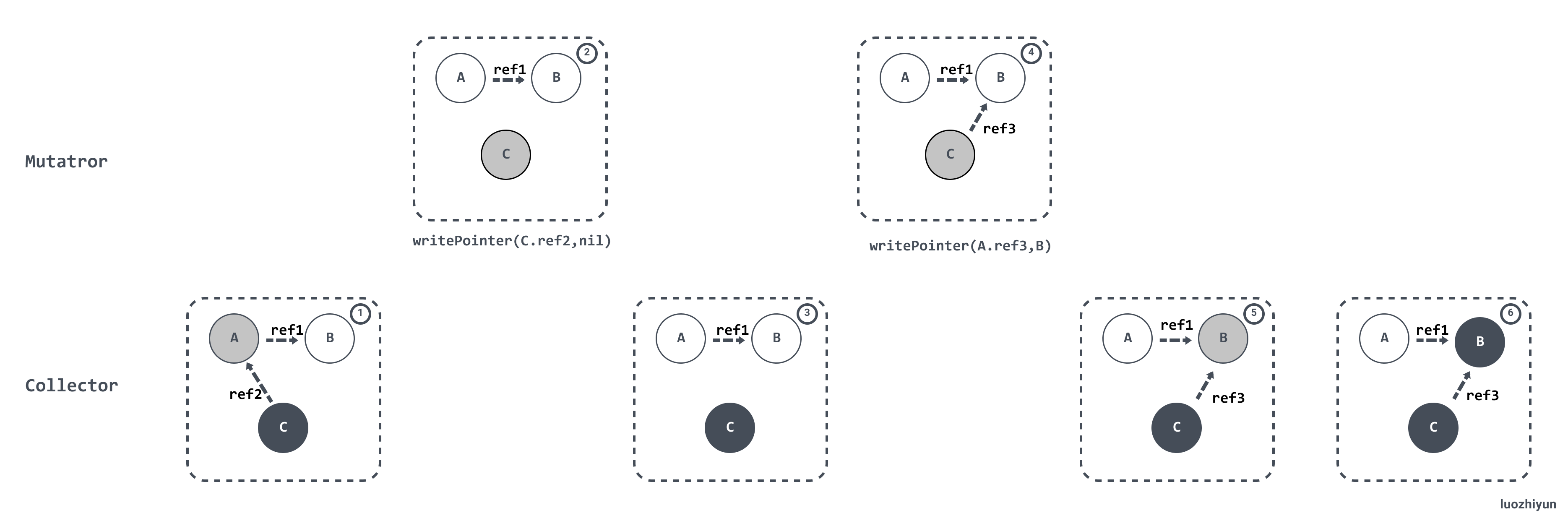

Yuasa Write barrier

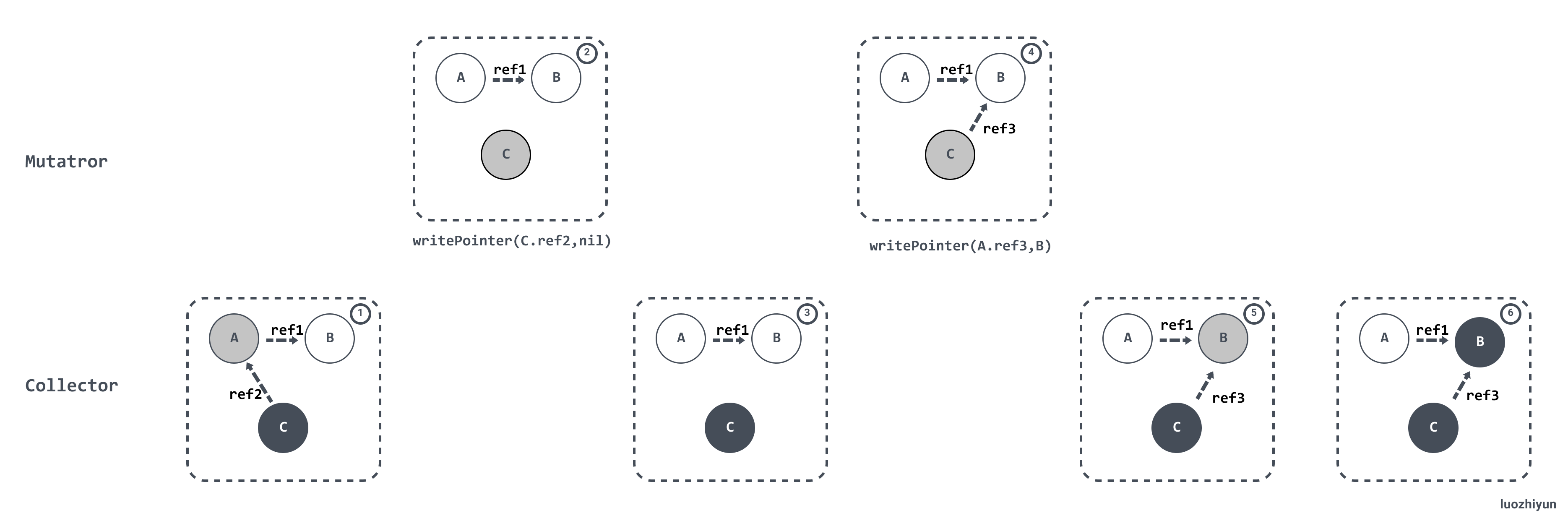

Yuasa Write barrier is a deletion barrier technique proposed by Yuasa in “Real-time garbage collection on general-purpose machines”. The idea is to notify the concurrently executing collector through the write barrier when the assignor deletes a white pointer from a gray or white object.

The algorithm guarantees the correctness of the program when garbage collection is performed incrementally or concurrently using a write barrier as shown below, with the following pseudo-code implementation.

1

2

3

|

writePointer(slot, ptr)

shade(*slot)

*slot = ptr

|

To prevent losing the path from the gray object to the white object, it should be assumed that slot may turn black. To ensure that ptr does not turn white before being assigned to slot, shade( slot) first marks slot as gray, and the write operation always creates a path from gray to gray or gray to white objects, so that removing the write barrier ensures weak tricolor invariance and that downstream objects referenced by the old object must be referenced by the gray object.

Hybrid write barrier

As mentioned above, prior to Go 1.7, the Dijkstra Write barrier was used to ensure tricolor invariance. Go must ensure that object references do not change during rescans, so it performs a pause procedure (STW), marks all stack objects as gray, and rescans, which typically takes 10-100 milliseconds.

The Proposal: Eliminate STW stack rescanning https://go.googlesource.com/proposal/+/master/design/17503-eliminate-rescan.md shows that in order to eliminate re-scanning, Go used a Hybrid write barrier in 1.8, combining the Yuasa write barrier and the Dijkstra write barrier, with the following pseudo-code implementation.

1

2

3

4

5

|

writePointer(slot, ptr):

shade(*slot)

if current stack is grey:

shade(ptr)

*slot = ptr

|

This not only simplifies the GC process, but also reduces the cost of rescanning during the mark termination phase. The basic idea of the hybrid write barrier is:

the write barrier shades the object whose reference is being overwritten, and, if the current goroutine’s stack has not yet been scanned, also shades the reference being installed.

Also, during GC all newly allocated objects immediately turn black, as seen in the mallocgc function in go\src\runtime\malloc.go during memory allocation.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

dataSize := size

// 获取mcache,用于处理微对象和小对象的分配

c := gomcache()

var x unsafe.Pointer

// 表示对象是否包含指针,true表示对象里没有指针

noscan := typ == nil || typ.ptrdata == 0

// maxSmallSize=32768 32k

if size <= maxSmallSize {

// maxTinySize= 16 bytes

if noscan && size < maxTinySize {

...

} else {

...

}

// 大于 32 Kb 的内存分配,通过 mheap 分配

} else {

...

}

...

// 在 GC 期间分配的新对象都会被标记成黑色

if gcphase != _GCoff {

gcmarknewobject(span, uintptr(x), size, scanSize)

}

...

return x

}

|

During the marking phase of garbage collection, newly created objects are marked black to prevent objects in newly allocated stack memory and heap memory from being reclaimed incorrectly.

Analysis

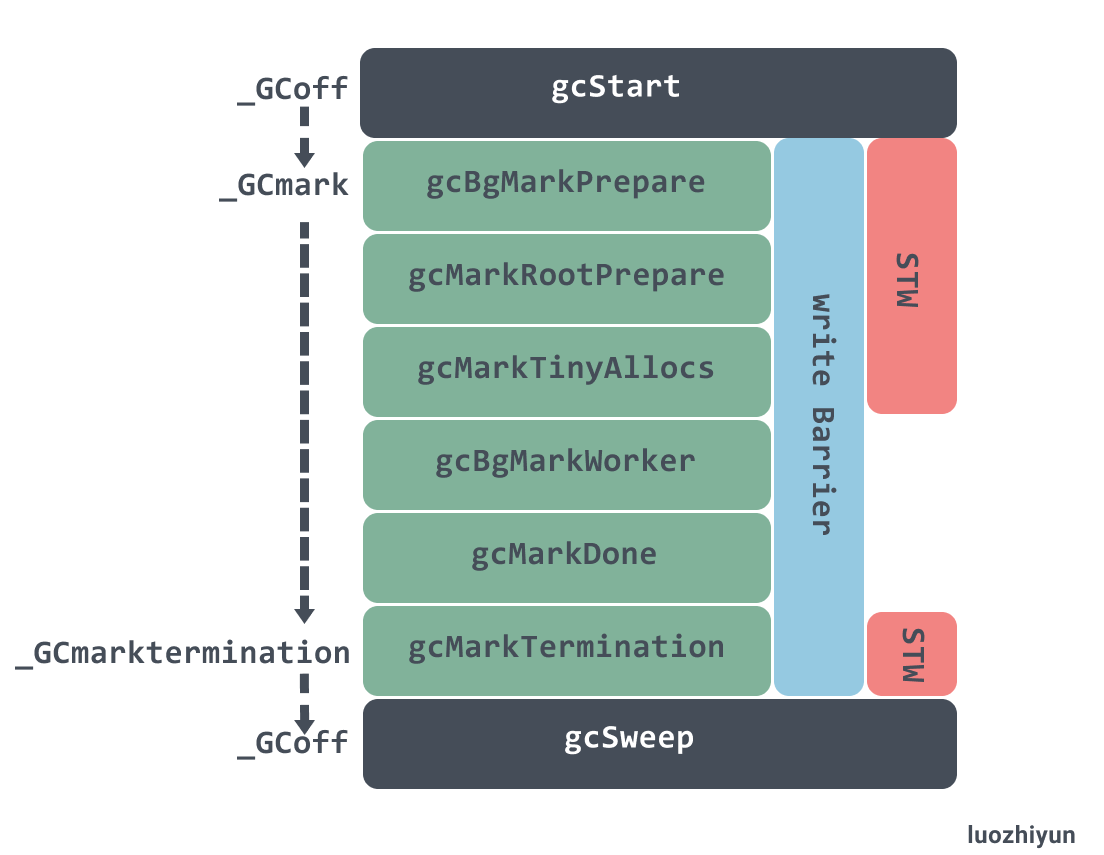

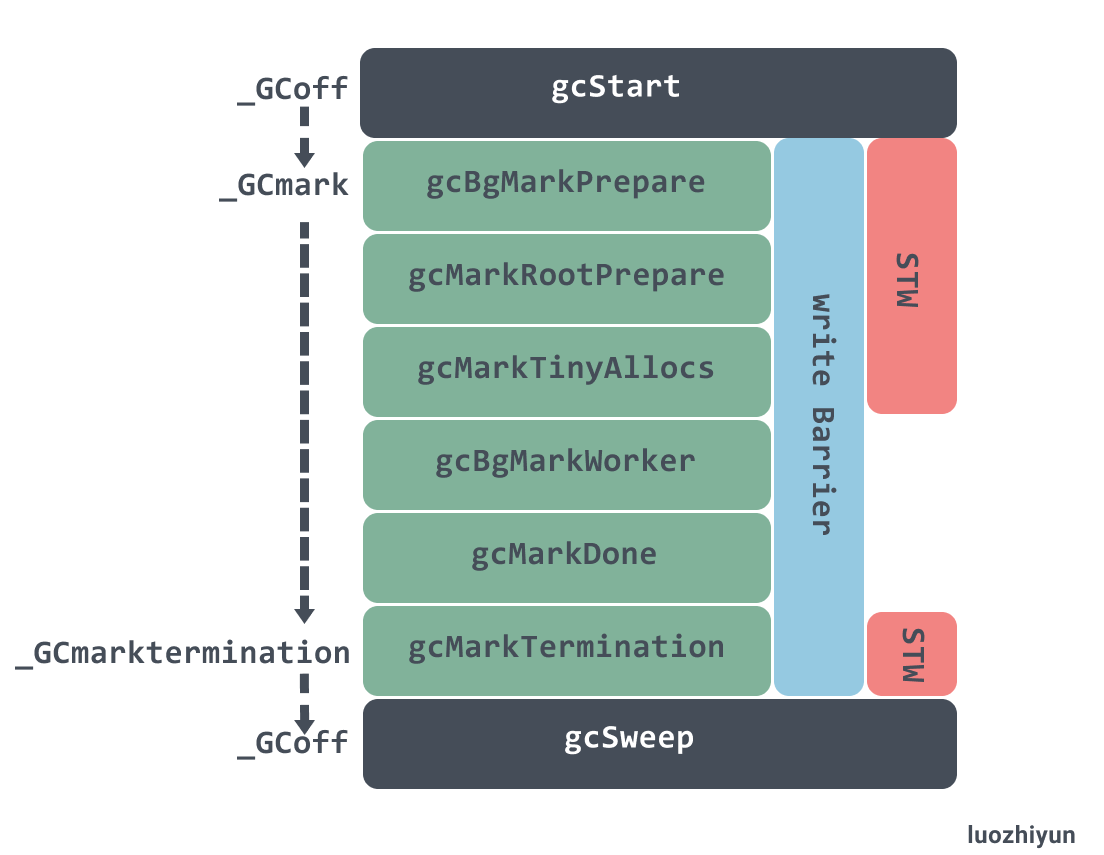

GC phase Garbage collection phase

The GC-related code is in the runtime/mgc.go file. As we can see from the comments, there are 4 phases of GC.

- sweep termination

- STW will be triggered and all P’s (processors) will go to safe-point.

- the cleanup of the uncleared span.

- the mark phase

- Changing the

_GCoff GC state to _GCmark, turning on the Write Barrier, mutator assists, and queuing the root object.

- Resume program execution, mark workers (mark processes) and mutator assists (assist threads) will start concurrently marking objects in memory. for any pointer writes and new pointer values, which are overwritten by the write barrier, and all newly created objects are marked directly in black.

- GC performs root node marking, which includes scanning all stacks, global objects, and runtime data structures that are not in the heap. Scanning the goroutine stack draws causes the goroutine to stop and greys out all pointers found on the stack, and then the execution of the goroutine continues.

- GC turns the gray object black and greys out the object it points to as it traverses the gray object queue.

- The GC uses the distributed termination algorithm to detect when there are no more root mark jobs or gray objects, and if there are none left the GC switches to mark termination.

- mark termination

- STW, which then turns the GC phase to

_GCmarktermination , closing the GC worker threads as well as mutator assists (assist threads).

- performing cleanup, such as flush mcache.

- the sweep phase

- transitioning the GC state to

_GCoff, initializing the cleanup state and closing the Write Barrier

- resuming program execution, with newly created objects from then on being white.

- Concurrently clean up all memory management units in the background

It is important to note that mutator assists is mentioned above, as there is a case:

during the collection that the Goroutine dedicated to GC will not finish the Marking work before the heap memory in-use reaches its limit

If the collector determines that it needs to slow down allocations, it will recruit the application Goroutines to assist with the Marking work. This is called a Mutator Assist. One positive side effect of Mutator Assist is that it helps to finish the collection faster.

Next GC timing

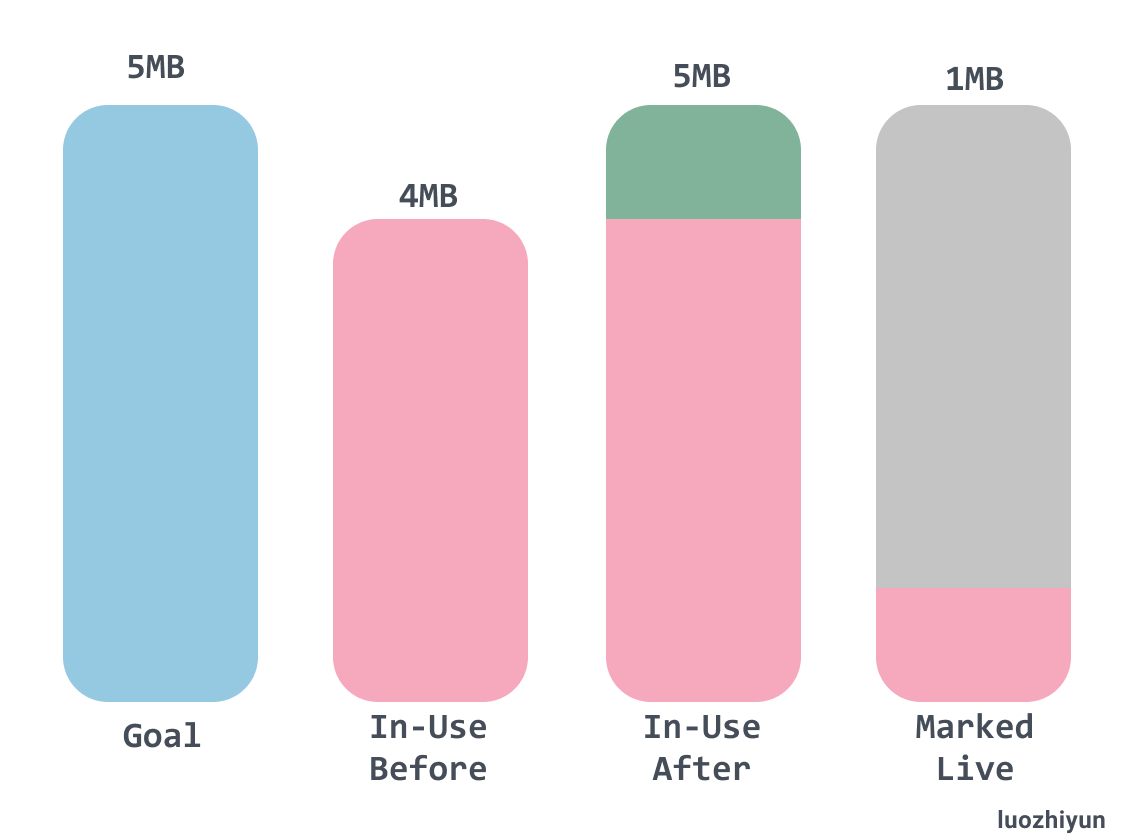

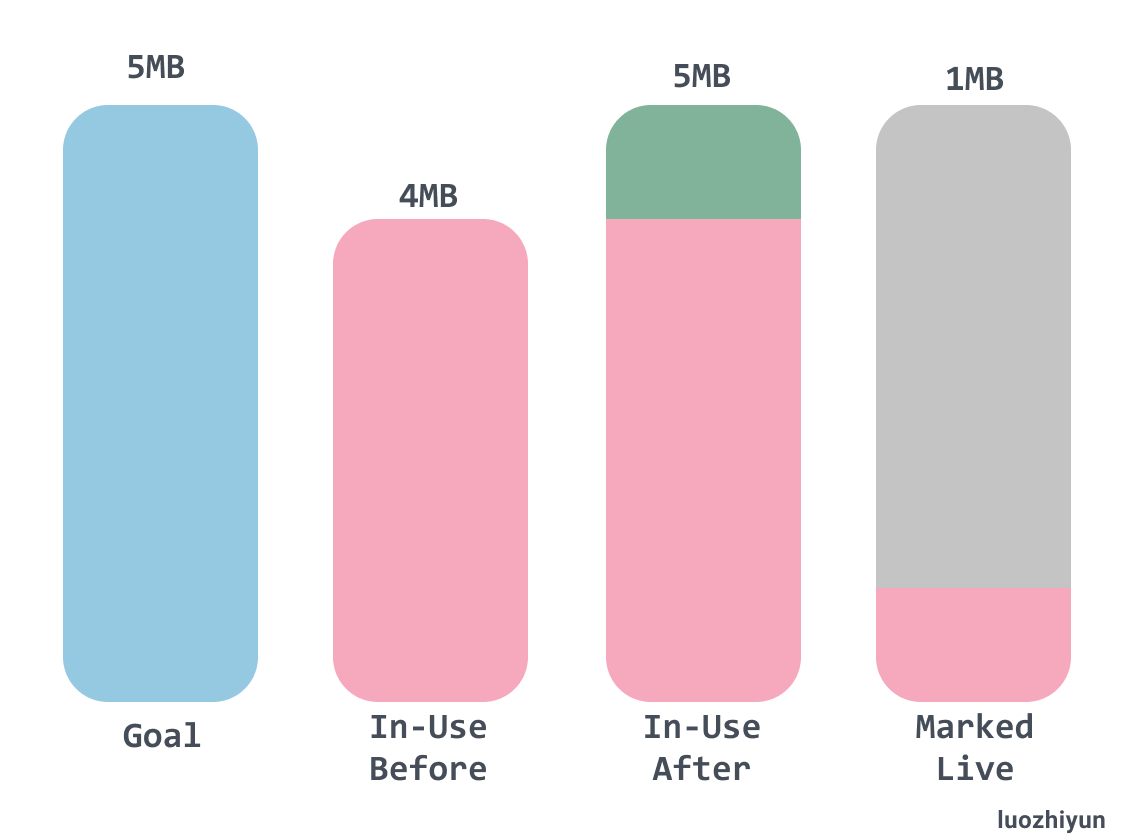

The timing of the next GC can be controlled by an environment variable, GOGC, which defaults to 100, i.e. a 100% increase in heap memory before triggering a GC.

This value represents a ratio of how much new heap memory can be allocated before the next collection has to start.

The official explanation is that if 4M of memory is currently used, the next GC will be when the memory reaches 8M. Let’s look at a concrete example.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

|

package main

import (

"fmt"

)

func main() {

fmt.Println("start.")

container := make([]int, 8)

fmt.Println("> loop.")

for i := 0; i < 32*1000*1000; i++ {

container = append(container, i)

}

fmt.Println("< loop.")

}

|

Note that it is recommended to use a Linux environment when doing experiments. If you don’t have a Linux environment, you can run a virtual machine under win10 like I did. Then you can use vscode to remote to Linux for experimentation.

After compiling, you can use gctrace to track the GC situation.

1

2

3

4

5

6

7

8

9

|

[root@localhost gotest]# go build main.go

[root@localhost gotest]# GODEBUG=gctrace=1 ./main

start.

> loop.

gc 1 @0.004s 4%: 0.22+1.4+0.021 ms clock, 1.7+0.009/0.40/0.073+0.16 ms cpu, 4->5->1 MB, 5 MB goal, 8 P

gc 2 @0.006s 4%: 0.10+1.6+0.020 ms clock, 0.83+0.12/0/0+0.16 ms cpu, 4->6->1 MB, 5 MB goal, 8 P

gc 3 @0.009s 16%: 0.035+5.5+2.2 ms clock, 0.28+0/0.47/0.007+18 ms cpu, 4->15->15 MB, 5 MB goal, 8 P

...

< loop.

|

The above shows the case of 3 GCs, let’s see what it means exactly.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

|

gc 1 @0.004s 4%: 0.22+1.4+0.021 ms clock, 1.7+0.009/0.40/0.073+0.16 ms cpu, 4->5->1 MB, 5 MB goal, 8 P

gc 1 :程序启动以来第1次GC

@0.004s:距离程序启动到现在的时间

4%:当目前为止,GC 的标记工作所用的CPU时间占总CPU的百分比

垃圾回收的时间

0.22 ms:标记开始 STW 时间

1.4 ms:并发标记时间

0.021 ms:标记终止 STW 时间

垃圾回收占用cpu时间

1.7 ms:标记开始 STW 时间

0.009 ms:mutator assists占用的时间

0.40 ms:标记线程占用的时间

0.073 ms:idle mark workers占用的时间

0.16 ms:标记终止 STW 时间

内存

4 MB:标记开始前堆占用大小

5 MB:标记结束后堆占用大小

1 MB:标记完成后存活堆的大小

5 MB goal:标记完成后正在使用的堆内存的目标大小

8 P:使用了多少处理器

|

From the GC memory information above, we can see that the heap size is 4MB before the GC tagging starts, and since the tagging is done concurrently, the size of the heap used when the tagging is done is 5MB, which means that 1MB of memory allocation happens during the GC. Finally, we can see that the heap size that survives after the GC marking is only 1MB, which means that the next round of GC can be started when the heap occupies 2MB of memory.

From the above, we can see that the memory size of the Goal section is 5MB, which is equal to the actual memory usage of the In-Use After section, but it is not equal in many complex cases because the memory size of the Goal section is projected based on the current memory usage.

the goal is calculated based on the current amount of the heap memory in-use, the amount of heap memory marked as live, and timing calculations about the additional allocations that will occur while the collection is running.

Trigger GC condition

The trigger GC condition is verified by gcTrigger.test, let’s see how gcTrigger.test determines if garbage collection should be triggered.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

|

func (t gcTrigger) test() bool {

if !memstats.enablegc || panicking != 0 || gcphase != _GCoff {

return false

}

switch t.kind {

case gcTriggerHeap:

// 堆内存的分配达到达控制器计算的触发堆大小

return memstats.heap_live >= memstats.gc_trigger

case gcTriggerTime:

if gcpercent < 0 {

return false

}

lastgc := int64(atomic.Load64(&memstats.last_gc_nanotime))

// 如果一定时间内没有触发,就会触发新的循环

return lastgc != 0 && t.now-lastgc > forcegcperiod

case gcTriggerCycle:

// 要求启动新一轮的GC, 已启动则跳过

return int32(t.n-work.cycles) > 0

}

return true

}

|

The trigger time of gcTriggerTime is determined by forcegcperiod, which is 2 minutes by default. Let’s take a look at the heap memory size triggering GC.

The gcTriggerHeap heap memory allocation reaches the trigger heap size calculated by the controller, the heap_live value is calculated at the time of memory allocation, and the gc_trigger calculation is done in the runtime.gcSetTriggerRatio function.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

|

func gcSetTriggerRatio(triggerRatio float64) {

// gcpercent 由环境变量 GOGC 决定

if gcpercent >= 0 {

// 默认是 1

scalingFactor := float64(gcpercent) / 100

// 最大的 maxTriggerRatio 是 0.95

maxTriggerRatio := 0.95 * scalingFactor

if triggerRatio > maxTriggerRatio {

triggerRatio = maxTriggerRatio

}

// 最大的 minTriggerRatio 是 0.6

minTriggerRatio := 0.6 * scalingFactor

if triggerRatio < minTriggerRatio {

triggerRatio = minTriggerRatio

}

} else if triggerRatio < 0 {

triggerRatio = 0

}

memstats.triggerRatio = triggerRatio

trigger := ^uint64(0)

if gcpercent >= 0 {

// 当前标记存活的大小乘以1+系数triggerRatio

trigger = uint64(float64(memstats.heap_marked) * (1 + triggerRatio))

...

}

memstats.gc_trigger = trigger

...

}

|

The gcSetTriggerRatio function gets the heap size for the next GC trigger based on the calculated triggerRatio. triggerRatio is adjusted after each GC, and the triggerRatio is calculated in gcControllerState.endCycle, which is called in gcControllerState.endCycle. gcControllerState.endCycle` is called in MarkDone.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

|

func (c *gcControllerState) endCycle() float64 {

const triggerGain = 0.5

// 目标Heap增长率 = (下次 GC 完后堆大小 - 堆存活大小)/ 堆存活大小

goalGrowthRatio := float64(memstats.next_gc-memstats.heap_marked) / float64(memstats.heap_marked)

// 实际Heap增长率, 等于总大小/存活大小-1

actualGrowthRatio := float64(memstats.heap_live)/float64(memstats.heap_marked) - 1

// GC标记阶段的使用时间

assistDuration := nanotime() - c.markStartTime

// GC标记阶段的CPU占用率, 目标值是0.25

utilization := gcBackgroundUtilization

// Add assist utilization; avoid divide by zero.

if assistDuration > 0 {

// assistTime 是G辅助GC标记对象所使用的时间合计

// 额外的CPU占用率 = 辅助GC标记对象的总时间 / (GC标记使用时间 * P的数量)// 额外的CPU占用率 = 辅助GC标记对象的总时间 / (GC标记使用时间 * P的数量)

utilization += float64(c.assistTime) / float64(assistDuration*int64(gomaxprocs))

}

// 触发系数偏移值 = 目标增长率 - 原触发系数 - CPU占用率 / 目标CPU占用率 * (实际增长率 - 原触发系数)

triggerError := goalGrowthRatio - memstats.triggerRatio - utilization/gcGoalUtilization*(actualGrowthRatio-memstats.triggerRatio)

// 根据偏移值调整触发系数, 每次只调整偏移值的一半

triggerRatio := memstats.triggerRatio + triggerGain*triggerError

return triggerRatio

}

|

For triggerRatio in general is still complicated, we can know according to the offset value.

- the larger the actual growth rate, the smaller the triggerRatio offset value is, and the next trigger GC will be earlier when it is smaller than 0.

- the larger the CPU occupancy, the smaller the offset value of the trigger factor, and the next GC trigger will be earlier when it is smaller than 0.

- the larger the original trigger factor, the smaller the trigger factor offset value, the earlier the next trigger GC will be triggered when it is smaller than 0.

The above analysis also explains why the GODEBUG=gctrace=1 analysis is executed early even though the heap memory has not yet reached 2 times, mainly due to the influence of triggerError offset.

Start GC

We can call runtime.GC to trigger a GC manually when testing, but in practice, the entry point to trigger a GC is not normally called manually. The normal trigger would be to call runtime.mallocgc when memory is requested or to call runtime.forcegchelper when the Go background monitor thread sysmon checks in regularly.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

|

func GC() {

// 获取 GC 循环次数

n := atomic.Load(&work.cycles)

// 等待上一个循环的标记终止、标记和清除终止阶段完成

gcWaitOnMark(n)

// 触发新一轮的 GC

gcStart(gcTrigger{kind: gcTriggerCycle, n: n + 1})

// 同上

gcWaitOnMark(n + 1)

// 等待清理全部待处理的内存管理单元

for atomic.Load(&work.cycles) == n+1 && sweepone() != ^uintptr(0) {

sweep.nbgsweep++

// 让出 P

Gosched()

}

for atomic.Load(&work.cycles) == n+1 && atomic.Load(&mheap_.sweepers) != 0 {

Gosched()

}

mp := acquirem()

cycle := atomic.Load(&work.cycles)

if cycle == n+1 || (gcphase == _GCmark && cycle == n+2) {

// 将该阶段的堆内存状态快照发布出来( heap profile)

mProf_PostSweep()

}

releasem(mp)

}

|

- gcWaitOnMark will be called to wait for the completion of the mark-terminate, mark-clear-terminate phase of the previous cycle.

- gcStart is called to trigger a new round of GC and gcWaitOnMark is called to wait for the mark termination, mark and clear termination phases of the current loop to complete.

- call sweepone to wait for all pending memory management units to be cleared, and then call Gosched to let out P.

- calls mProf_PostSweep to post a snapshot of the heap memory state for that phase after completing the current round of garbage collection cleanup.

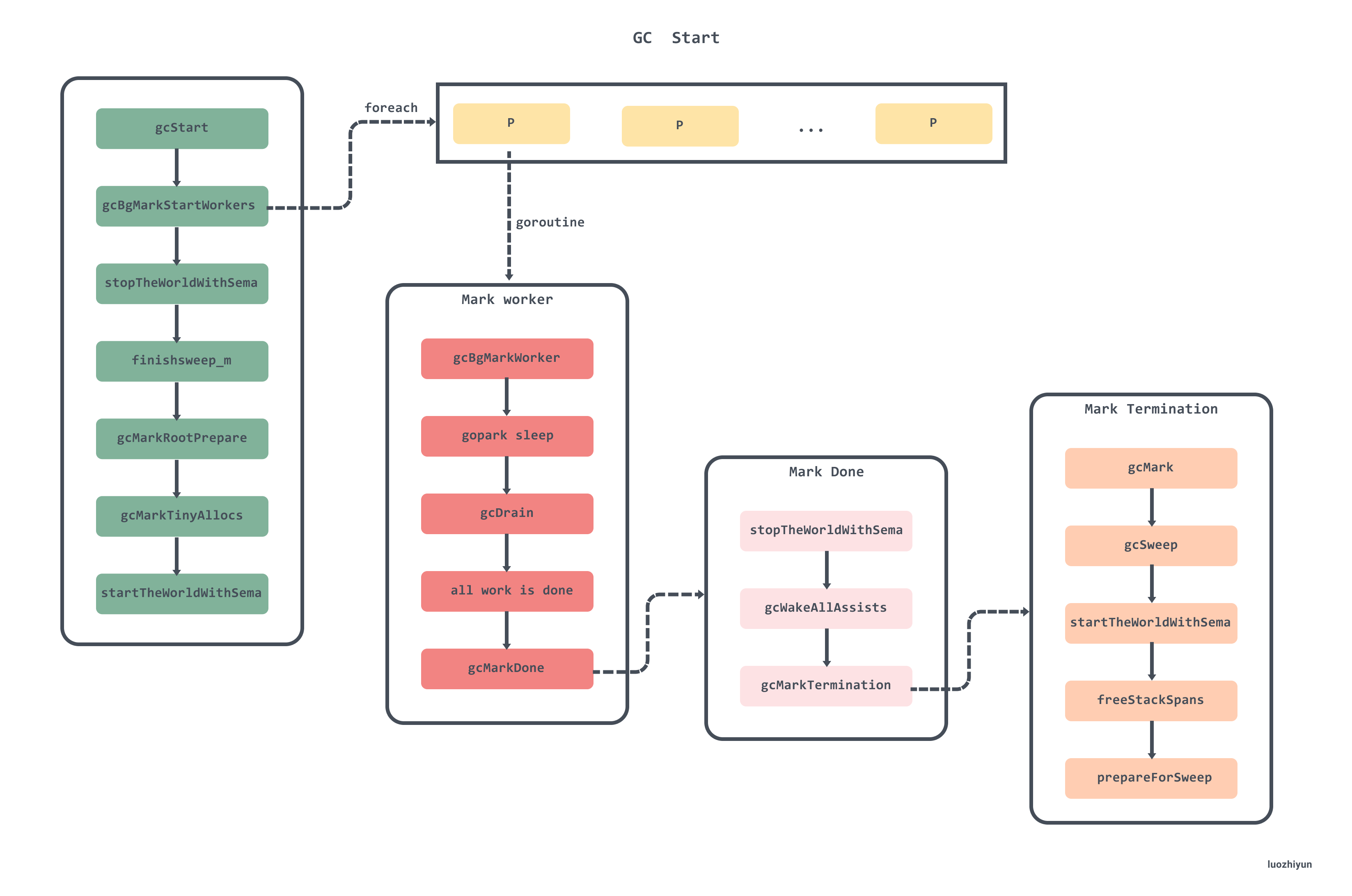

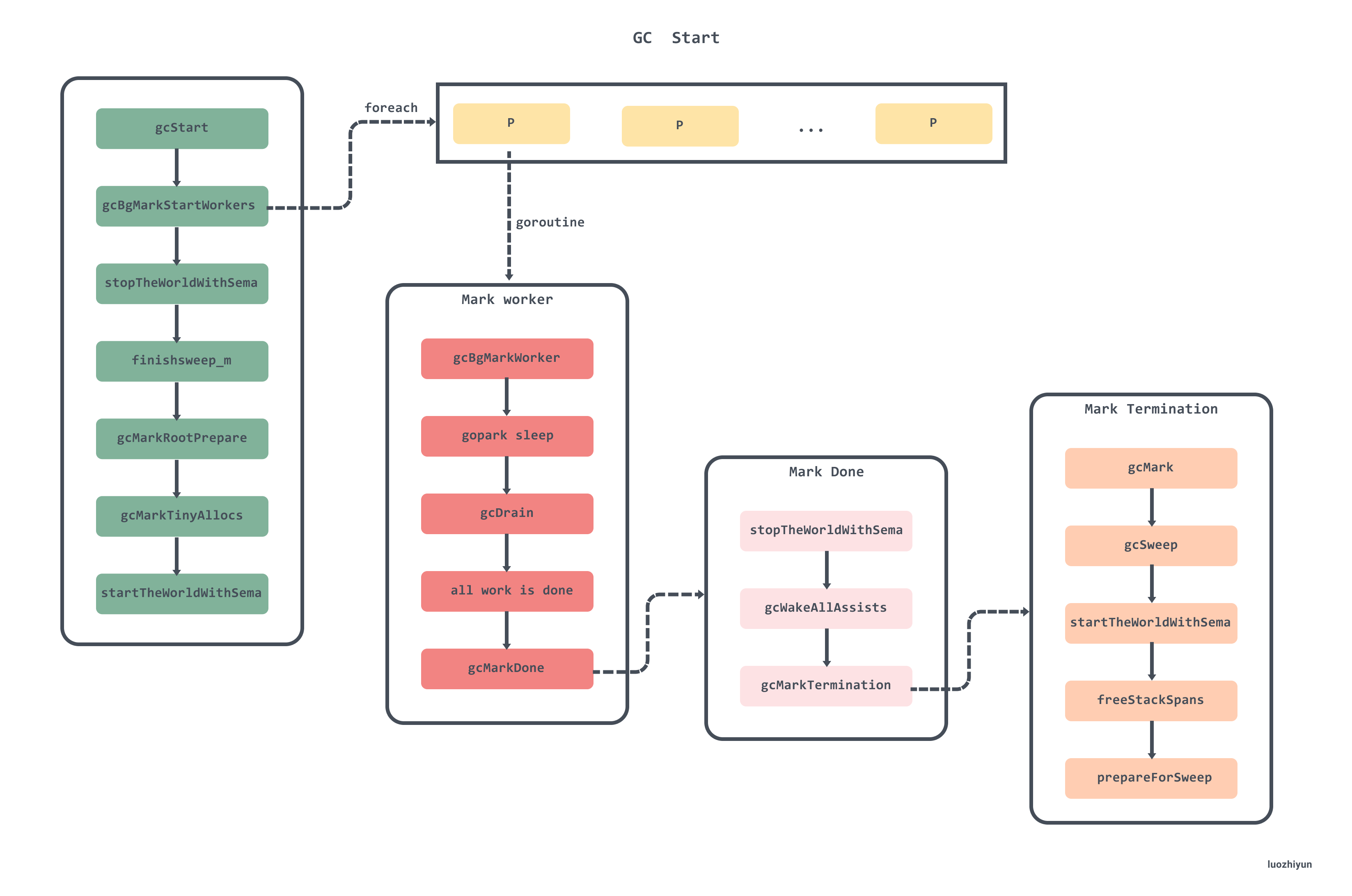

GC startup

The following diagram is a more complete GC flow and can be used as a navigation when looking at the source code.

The gcStart function is rather long, so here’s a look at gcStart in sections.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

|

func gcStart(trigger gcTrigger) {

...

// 验证垃圾收集条件 ,并清理已经被标记的内存单元

for trigger.test() && sweepone() != ^uintptr(0) {

sweep.nbgsweep++

}

// 获取全局的 startSema信号量

semacquire(&work.startSema)

// 再次验证垃圾收集条件

if !trigger.test() {

semrelease(&work.startSema)

return

}

// 检查是不是手动调用了 runtime.GC

work.userForced = trigger.kind == gcTriggerCycle

semacquire(&gcsema)

semacquire(&worldsema)

// 启动后台标记任务

gcBgMarkStartWorkers()

// 重置标记相关的状态

systemstack(gcResetMarkState)

// work 初始化工作

work.stwprocs, work.maxprocs = gomaxprocs, gomaxprocs

if work.stwprocs > ncpu {

work.stwprocs = ncpu

}

work.heap0 = atomic.Load64(&memstats.heap_live)

work.pauseNS = 0

work.mode = mode

// 记录开始时间

now := nanotime()

work.tSweepTerm = now

work.pauseStart = now

// 暂停程序 STW

systemstack(stopTheWorldWithSema)

// 在并发标记前,确保清理结束

systemstack(func() {

finishsweep_m()

})

// 清理sched.sudogcache 以及 sync.Pools

clearpools()

// GC 次数

work.cycles++

// 在开始 GC 之前清理控制器的状态,标记新一轮GC已开始

gcController.startCycle()

work.heapGoal = memstats.next_gc

// 设置全局变量中的GC状态为_GCmark

// 然后启用写屏障

setGCPhase(_GCmark)

// 初始化后台扫描需要的状态

gcBgMarkPrepare() // Must happen before assist enable.

// 扫描栈上、全局变量等根对象并将它们加入队列

gcMarkRootPrepare()

// 标记所有tiny alloc等待合并的对象

gcMarkTinyAllocs()

// 启用 mutator assists(协助线程)

atomic.Store(&gcBlackenEnabled, 1)

// 记录标记开始的时间

gcController.markStartTime = now

mp = acquirem()

// 启动程序,后台任务也会开始标记堆中的对象

systemstack(func() {

now = startTheWorldWithSema(trace.enabled)

// 记录停止了多久, 和标记阶段开始的时间

work.pauseNS += now - work.pauseStart

work.tMark = now

})

semrelease(&worldsema)

...

}

|

- two calls to

trigger.test to check if the conditions for garbage collection are met, a function we talked about above.

- call

semacquire(&work.startSema) to lock and gcBgMarkStartWorkers to start the background marker task, which we will focus on later.

- initialize the work structure, set the number of Goroutines needed for garbage collection and the number of GCs completed, etc.

- call

gcController.startCycle to clear the state of the controller and mark the start of a new GC cycle before starting the GC.

- call setGCPhase to set the GC state in the global variable to _GCmark, and then enable the write barrier.

- call gcBgMarkPrepare to initialize the state needed for the background scan.

- call gcMarkRootPrepare to scan the root objects on the stack, global variables, etc. and add them to the queue.

- call gcMarkTinyAllocs to mark all tiny alloc memory blocks.

- set gcBlackenEnabled to enable mutator assists (assisted threads).

- call startTheWorldWithSema to start the program after recording the start time of the marker, and the background task will also start marking objects in the heap.

The following diagram shows the state change during gcStart and the method of STW stopping, with the writing barrier enabled period.

The above is just a rough description of the role of each function, the following to analyze some important functions.

startCycle

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

|

func (c *gcControllerState) startCycle() {

c.scanWork = 0

c.bgScanCredit = 0

c.assistTime = 0

c.dedicatedMarkTime = 0

c.fractionalMarkTime = 0

c.idleMarkTime = 0

// 设置 next_gc 最小值

if memstats.next_gc < memstats.heap_live+1024*1024 {

memstats.next_gc = memstats.heap_live + 1024*1024

}

// gcBackgroundUtilization 默认是 0.25

// 是GC所占的P的目标值

totalUtilizationGoal := float64(gomaxprocs) * gcBackgroundUtilization

// dedicatedMarkWorkersNeeded 等于P的数量的25% 加上 0.5 去掉小数点

c.dedicatedMarkWorkersNeeded = int64(totalUtilizationGoal + 0.5)

utilError := float64(c.dedicatedMarkWorkersNeeded)/totalUtilizationGoal - 1

const maxUtilError = 0.3

if utilError < -maxUtilError || utilError > maxUtilError {

if float64(c.dedicatedMarkWorkersNeeded) > totalUtilizationGoal {

c.dedicatedMarkWorkersNeeded--

}

// 是 gcMarkWorkerFractionalMode 的任务所占的P的目标值(

c.fractionalUtilizationGoal = (totalUtilizationGoal - float64(c.dedicatedMarkWorkersNeeded)) / float64(gomaxprocs)

} else {

c.fractionalUtilizationGoal = 0

}

if debug.gcstoptheworld > 0 {

c.dedicatedMarkWorkersNeeded = int64(gomaxprocs)

c.fractionalUtilizationGoal = 0

}

for _, p := range allp {

p.gcAssistTime = 0

p.gcFractionalMarkTime = 0

}

// 计算协助GC的参数

c.revise()

}

|

Note here the process of calculating dedicatedMarkWorkersNeeded and fractionalUtilizationGoal, which will be used in the calculation of work work patterns.

marks tiny alloc

1

2

3

4

5

6

7

8

9

10

11

12

13

|

func gcMarkTinyAllocs() {

for _, p := range allp {

// 标记各个 P 中的 mcache 中的 tiny

c := p.mcache

if c == nil || c.tiny == 0 {

continue

}

_, span, objIndex := findObject(c.tiny, 0, 0)

gcw := &p.gcw

// 标记存活对象,并把它加到 gcwork 标记队列

greyobject(c.tiny, 0, 0, span, gcw, objIndex)

}

}

|

The tiny block data structure is also talked about in the section on memory allocation. Here we will mainly find and mark the tiny in the mcache of all the P’s and add it to the gcwork marker queue, as for what is the gcwork marker queue, we will talk about it below in the section on performing markers.

write Barrier Write Barrier

When setting the GC stage marker, it will determine if the write barrier needs to be turned on based on the currently set value.

1

2

3

4

5

|

func setGCPhase(x uint32) {

atomic.Store(&gcphase, x)

writeBarrier.needed = gcphase == _GCmark || gcphase == _GCmarktermination

writeBarrier.enabled = writeBarrier.needed || writeBarrier.cgo

}

|

The compiler will call the writebarrier function in src\cmd\compile\internal\ssa\writebarrier.go, just as its comment says.

1

2

3

4

5

6

7

8

9

|

// writebarrier pass inserts write barriers for store ops (Store, Move, Zero)

// when necessary (the condition above). It rewrites store ops to branches

// and runtime calls, like

//

// if writeBarrier.enabled {

// gcWriteBarrier(ptr, val) // Not a regular Go call

// } else {

// *ptr = val

// }

|

Add a write barrier to the execution of Store, Move, Zero, etc. assembly operations.

We can find the location of the gcWriteBarrier assembly code in go/src/runtime/asm_amd64.s:1395 via the dlv breakpoint. This assembly function calls runtime.wbBufFlush to add the write barrier’s cache tasks to the GC’s work queue for processing.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

|

func wbBufFlush(dst *uintptr, src uintptr) {

...

systemstack(func() {

...

wbBufFlush1(getg().m.p.ptr())

})

}

func wbBufFlush1(_p_ *p) {

// 获取缓存的指针

start := uintptr(unsafe.Pointer(&_p_.wbBuf.buf[0]))

n := (_p_.wbBuf.next - start) / unsafe.Sizeof(_p_.wbBuf.buf[0])

ptrs := _p_.wbBuf.buf[:n]

_p_.wbBuf.next = 0

gcw := &_p_.gcw

pos := 0

for _, ptr := range ptrs {

// 查找到对象

obj, span, objIndex := findObject(ptr, 0, 0)

if obj == 0 {

continue

}

mbits := span.markBitsForIndex(objIndex)

// 判断是否已被标记

if mbits.isMarked() {

continue

}

// 进行标记

mbits.setMarked()

// 标记 span.

arena, pageIdx, pageMask := pageIndexOf(span.base())

if arena.pageMarks[pageIdx]&pageMask == 0 {

atomic.Or8(&arena.pageMarks[pageIdx], pageMask)

}

if span.spanclass.noscan() {

gcw.bytesMarked += uint64(span.elemsize)

continue

}

ptrs[pos] = obj

pos++

}

// 将对象加入到 gcWork队列中

gcw.putBatch(ptrs[:pos])

// 重置 write barrier 缓存

_p_.wbBuf.reset()

}

|

The write barrier is actually the same as the concurrency marker, so you can read the concurrency marker and come back to it. wbBufFlush1 iterates through the write barrier cache, then calls findObject to find the object and mark it with a flag bit, and finally adds the object to the gcWork queue for scanning and resets the write barrier cache.

stopTheWorldWithSema and startTheWorldWithSema

stopTheWorldWithSema and startTheWorldWithSema are a pair of core functions used to pause and resume programs.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

|

func stopTheWorldWithSema() {

_g_ := getg()

lock(&sched.lock)

sched.stopwait = gomaxprocs

// 标记 gcwaiting,调度时看见此标记会进入等待

atomic.Store(&sched.gcwaiting, 1)

// 发送抢占信号

preemptall()

// 暂停当前 P

_g_.m.p.ptr().status = _Pgcstop // Pgcstop is only diagnostic.

sched.stopwait--

// 遍历所有的 P ,修改 P 的状态为 _Pgcstop 停止运行

for _, p := range allp {

s := p.status

if s == _Psyscall && atomic.Cas(&p.status, s, _Pgcstop) {

if trace.enabled {

traceGoSysBlock(p)

traceProcStop(p)

}

p.syscalltick++

sched.stopwait--

}

}

// 停止空闲的 P 列表

for {

p := pidleget()

if p == nil {

break

}

p.status = _Pgcstop

sched.stopwait--

}

wait := sched.stopwait > 0

unlock(&sched.lock)

if wait {

for {

// 等待 100 us

if notetsleep(&sched.stopnote, 100*1000) {

noteclear(&sched.stopnote)

break

}

// 再次进行发送抢占信号

preemptall()

}

}

// 安全检测

bad := ""

if sched.stopwait != 0 {

bad = "stopTheWorld: not stopped (stopwait != 0)"

} else {

for _, p := range allp {

if p.status != _Pgcstop {

bad = "stopTheWorld: not stopped (status != _Pgcstop)"

}

}

}

if atomic.Load(&freezing) != 0 {

lock(&deadlock)

lock(&deadlock)

}

if bad != "" {

throw(bad)

}

}

|

This method will check if all P’s have been suspended by calling sched.stopwait. First, it sends a preempt signal to preempt all running Gs by calling preemptall, then it goes through the P’s and suspends all idle P’s with status _Psyscall, and waits for them to stop if there are still P’s that need to be stopped.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

|

func startTheWorldWithSema(emitTraceEvent bool) int64 {

mp := acquirem() // disable preemption because it can be holding p in a local var

// 判断收到的 netpoll 事件并添加对应的G到待运行队列

if netpollinited() {

list := netpoll(0) // non-blocking

injectglist(&list)

}

lock(&sched.lock)

procs := gomaxprocs

if newprocs != 0 {

procs = newprocs

newprocs = 0

}

// 扩容或者缩容全局的处理器

p1 := procresize(procs)

// 取消GC等待标记

sched.gcwaiting = 0

// 如果 sysmon (后台监控线程) 在等待则唤醒它

if sched.sysmonwait != 0 {

sched.sysmonwait = 0

notewakeup(&sched.sysmonnote)

}

unlock(&sched.lock)

// 唤醒有可运行任务的P

for p1 != nil {

p := p1

p1 = p1.link.ptr()

if p.m != 0 {

mp := p.m.ptr()

p.m = 0

if mp.nextp != 0 {

throw("startTheWorld: inconsistent mp->nextp")

}

mp.nextp.set(p)

notewakeup(&mp.park)

} else {

// Start M to run P

newm(nil, p, -1)

}

}

startTime := nanotime()

if emitTraceEvent {

traceGCSTWDone()

}

// 如果有空闲的P,并且没有自旋中的M则唤醒或者创建一个M

wakep()

releasem(mp)

return startTime

}

|

startTheWorldWithSema is much simpler, first fetching the pending task from the netpoller and adding it to the global queue; then traversing the P chain to wake up the P with a runnable task.

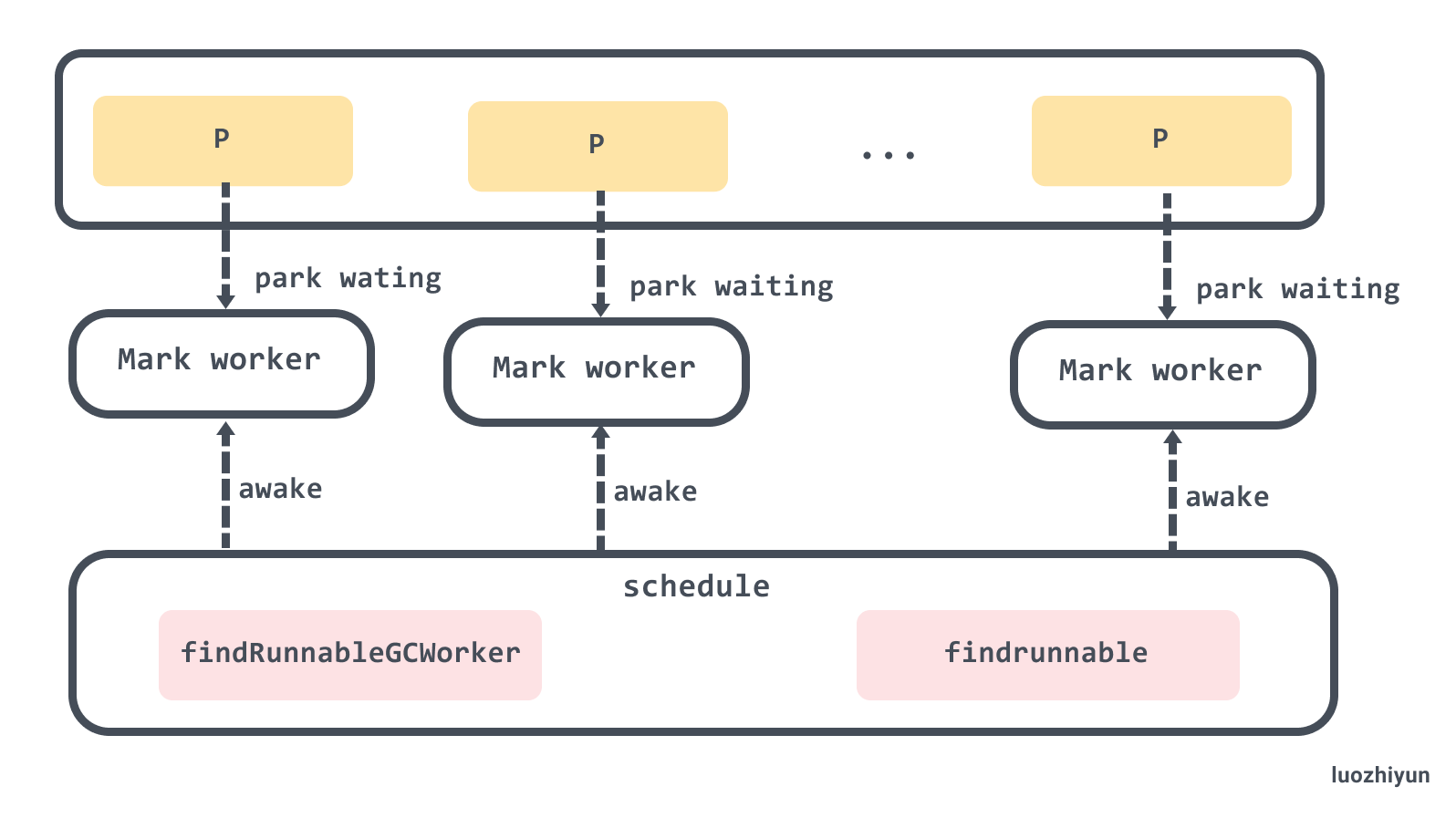

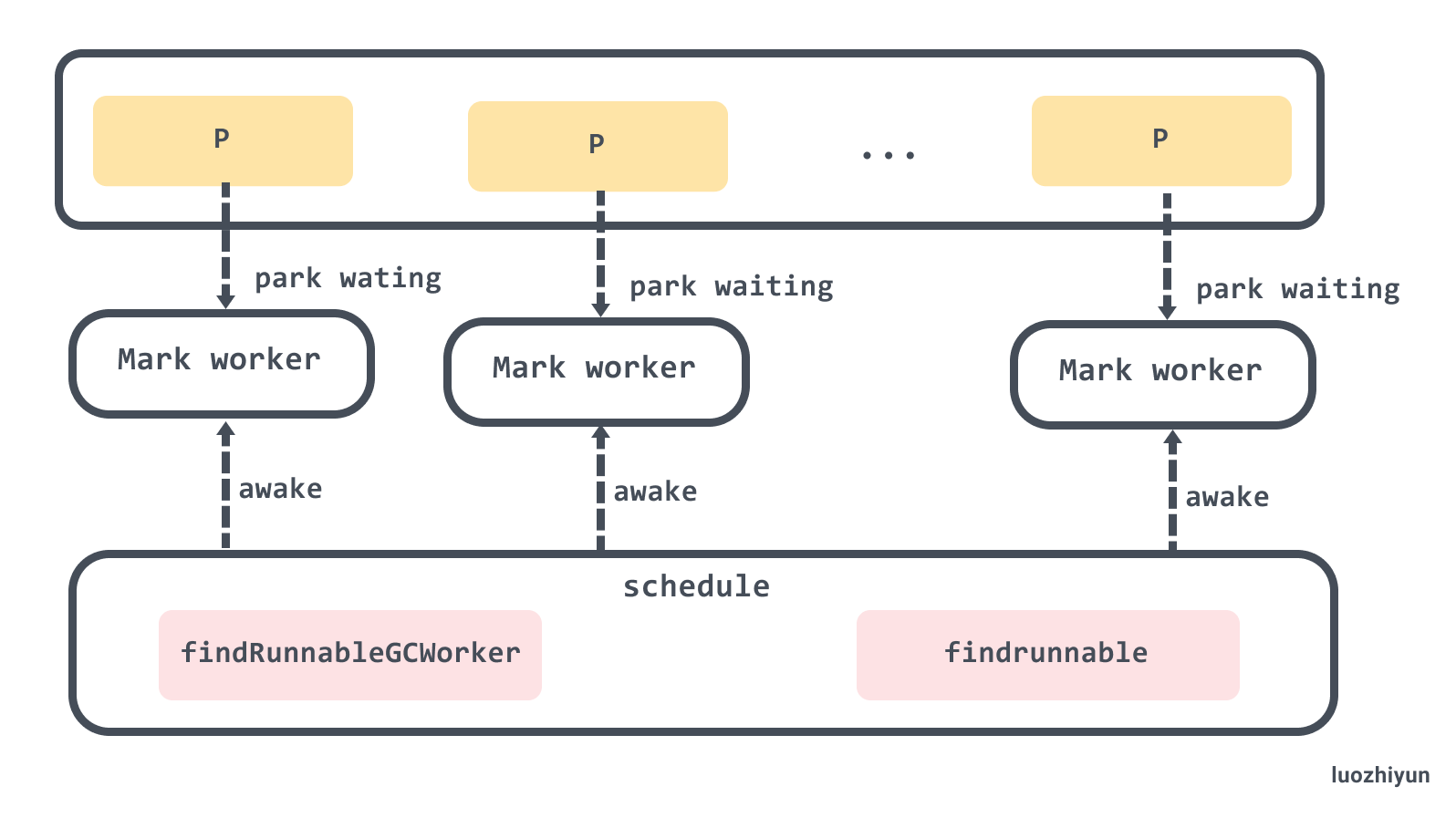

Create a background tag worker

1

2

3

4

5

6

7

8

9

10

11

12

13

|

func gcBgMarkStartWorkers() {

// 遍历所有 P

for _, p := range allp {

// 如果已启动则不重复启动

if p.gcBgMarkWorker == 0 {

// 为全局每个处理器创建用于执行后台标记任务的 Goroutine

go gcBgMarkWorker(p)

// 启动后等待该任务通知信号量 bgMarkReady 再继续

notetsleepg(&work.bgMarkReady, -1)

noteclear(&work.bgMarkReady)

}

}

}

|

gcBgMarkStartWorkers creates a Goroutine for each P globally to perform background marker tasks. Each Goroutine runs gcBgMarkWorker, and notetsleepg waits for gcBgMarkWorker to notify the semaphore bgMarkReady before continuing.

Here, although a background marker task is started for each P, only 25% of them can work at the same time. The scheduler controls this by calling the gcController.findRunnableGCWorker method in the scheduling loop runtime.schedule.

Before we look at this method, let’s understand a concept, Mark Worker Mode There are currently three types of Mark Worker Modes, which are designed to ensure the utilization of the marker threads in the background.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

|

type gcMarkWorkerMode int

const (

// gcMarkWorkerDedicatedMode indicates that the P of a mark

// worker is dedicated to running that mark worker. The mark

// worker should run without preemption.

gcMarkWorkerDedicatedMode gcMarkWorkerMode = iota

// gcMarkWorkerFractionalMode indicates that a P is currently

// running the "fractional" mark worker. The fractional worker

// is necessary when GOMAXPROCS*gcBackgroundUtilization is not

// an integer. The fractional worker should run until it is

// preempted and will be scheduled to pick up the fractional

// part of GOMAXPROCS*gcBackgroundUtilization.

gcMarkWorkerFractionalMode

// gcMarkWorkerIdleMode indicates that a P is running the mark

// worker because it has nothing else to do. The idle worker

// should run until it is preempted and account its time

// against gcController.idleMarkTime.

gcMarkWorkerIdleMode

)

|

From the code comments you can know that.

- gcMarkWorkerDedicatedMode : P is exclusively responsible for marking objects that will not be preempted by the scheduler.

- gcMarkWorkerFractionalMode : mainly because now the default marker thread occupancy to be 25%, so if the number of CPU cores is not a multiple of 4, it can not divide the integer, start this type of work mode to help garbage collection to achieve the goal of utilization.

- gcMarkWorkerIdleMode: indicates that P currently has only the marker thread running and no other G that can be executed, it will run the marker task of garbage collection until it is preempted.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

|

func (c *gcControllerState) findRunnableGCWorker(_p_ *p) *g {

...

// 原子减少对应的值, 如果减少后大于等于0则返回true, 否则返回false

decIfPositive := func(ptr *int64) bool {

if *ptr > 0 {

if atomic.Xaddint64(ptr, -1) >= 0 {

return true

}

// We lost a race

atomic.Xaddint64(ptr, +1)

}

return false

}

// 减少dedicatedMarkWorkersNeeded, 成功时后台标记任务的模式是Dedicated

if decIfPositive(&c.dedicatedMarkWorkersNeeded) {

_p_.gcMarkWorkerMode = gcMarkWorkerDedicatedMode

} else if c.fractionalUtilizationGoal == 0 {

// No need for fractional workers.

return nil

} else {

// 执行标记任务的时间

delta := nanotime() - gcController.markStartTime

if delta > 0 && float64(_p_.gcFractionalMarkTime)/float64(delta) > c.fractionalUtilizationGoal {

// Nope. No need to run a fractional worker.

return nil

}

_p_.gcMarkWorkerMode = gcMarkWorkerFractionalMode

}

gp := _p_.gcBgMarkWorker.ptr()

casgstatus(gp, _Gwaiting, _Grunnable)

return gp

}

|

In findRunnableGCWorker, the Mark Worker Mode of gcMarkWorkerDedicatedMode is used to determine if the Mark Worker Mode of gcMarkWorkerDedicatedMode is used. dedicatedMarkWorkersNeeded is initialized in gcControllerState.startCycle.

I won’t write the formula, it’s already mentioned in gcControllerState.startCycle, in layman’s terms if the current CPU is 8 cores, then dedicatedMarkWorkersNeeded is 2, if it’s 6 cores, because it can’t be divided by 4, the calculation is dedicatedMarkWorkersNeeded is 1, so the above gcMarkWorkerFractionalMode is needed to ensure CPU utilization.

gcMarkWorkerIdleMode is called when the scheduler executes a findrunnable preemption: gcMarkWorkerIdleMode is called when the scheduler executes a findrunnable preemption.

1

2

3

4

5

6

7

8

9

10

11

12

13

|

func findrunnable() (gp *g, inheritTime bool) {

...

stop:

// 处于 GC 阶段的话,获取执行GC标记任务的G

if gcBlackenEnabled != 0 && _p_.gcBgMarkWorker != 0 && gcMarkWorkAvailable(_p_) {

_p_.gcMarkWorkerMode = gcMarkWorkerIdleMode

gp := _p_.gcBgMarkWorker.ptr()

//将本地 P 的 GC 标记专用 G 职位 Grunnable

casgstatus(gp, _Gwaiting, _Grunnable)

return gp, false

}

...

}

|

When the preemption scheduling runs here, usually P can’t preempt G anymore and wants to hibernate, so it is safe to perform the marker task before hibernating.

Concurrent scan markers

The concurrent scan marker can be roughly summarized as follows.

- wrapping the current incoming P into parkInfo, then calling gopark to put the current G into hibernation, before hibernating it will bind the P’s gcBgMarkWorker to the G and wait for it to wake up.

- calling gcDrain to execute the marker with different policies according to Mark Worker Mode.

- determine if all background marker tasks are completed, and if there are no more tasks, call gcMarkDone to prepare for the completion of the marker phase.

Background Marking Hibernation Waiting

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

func gcBgMarkWorker(_p_ *p) {

gp := getg()

type parkInfo struct {

m muintptr

attach puintptr

}

gp.m.preemptoff = "GC worker init"

// 初始化 park

park := new(parkInfo)

gp.m.preemptoff = ""

// 设置当前的M并禁止抢占

park.m.set(acquirem())

// 设置当前的P

park.attach.set(_p_)

// 通知gcBgMarkStartWorkers可以继续处理

notewakeup(&work.bgMarkReady)

for {

// 让当前 G 进入休眠

gopark(func(g *g, parkp unsafe.Pointer) bool {

park := (*parkInfo)(parkp)

releasem(park.m.ptr())

// 设置关联的 P

if park.attach != 0 {

p := park.attach.ptr()

park.attach.set(nil)

// 把当前的G设到P的gcBgMarkWorker成员

if !p.gcBgMarkWorker.cas(0, guintptr(unsafe.Pointer(g))) {

return false

}

}

return true

}, unsafe.Pointer(park), waitReasonGCWorkerIdle, traceEvGoBlock, 0)

...

}

}

|

In gcBgMarkStartWorkers we see that it will iterate through all the P’s and then create a G responsible for Mark Work for each P. Although a background marker task is started for each P, it is not possible for each P to perform the marker task, the default resource usage of the background marker task is 25%, so the gcBgMarkWorker initializes the park and binds the G to the P’s gcBgMarkWorker and then hibernates it.

The scheduler controls which Mark Work can be executed by calling the gcController.findRunnableGCWorker method in the scheduling loop runtime.schedule, the code above has already been posted, so I won’t repeat it here.

Background markers

After waking up, we will choose a different marker execution strategy based on gcMarkWorkerMode, and different execution strategies will call runtime.gcDrain :

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

|

func gcBgMarkWorker(_p_ *p) {

gp := getg()

...

for {

...

// 检查P的gcBgMarkWorker是否和当前的G一致, 不一致时结束当前的任务

if _p_.gcBgMarkWorker.ptr() != gp {

break

}

// 禁止G被抢占

park.m.set(acquirem())

// 记录开始时间

startTime := nanotime()

_p_.gcMarkWorkerStartTime = startTime

decnwait := atomic.Xadd(&work.nwait, -1)

systemstack(func() {

// 设置G的状态为等待中这样它的栈可以被扫描

casgstatus(gp, _Grunning, _Gwaiting)

// 判断后台标记任务的模式

switch _p_.gcMarkWorkerMode {

default:

throw("gcBgMarkWorker: unexpected gcMarkWorkerMode")

case gcMarkWorkerDedicatedMode:

// 这个模式下P应该专心执行标记

gcDrain(&_p_.gcw, gcDrainUntilPreempt|gcDrainFlushBgCredit)

if gp.preempt {

// 被抢占时把本地运行队列中的所有G都踢到全局运行队列

lock(&sched.lock)

for {

gp, _ := runqget(_p_)

if gp == nil {

break

}

globrunqput(gp)

}

unlock(&sched.lock)

}

// 继续执行标记

gcDrain(&_p_.gcw, gcDrainFlushBgCredit)

case gcMarkWorkerFractionalMode:

// 执行标记

gcDrain(&_p_.gcw, gcDrainFractional|gcDrainUntilPreempt|gcDrainFlushBgCredit)

case gcMarkWorkerIdleMode:

// 执行标记, 直到被抢占或者达到一定的量

gcDrain(&_p_.gcw, gcDrainIdle|gcDrainUntilPreempt|gcDrainFlushBgCredit)

}

// 恢复G的状态到运行中

casgstatus(gp, _Gwaiting, _Grunning)

})

...

}

}

|

The difference between the different Mark Worker Modes has been described above, so if you don’t remember, you can turn up the page. The main part of the marker execution is in the switch judgment, where different parameters are passed into the gcDrain function according to the different modes.

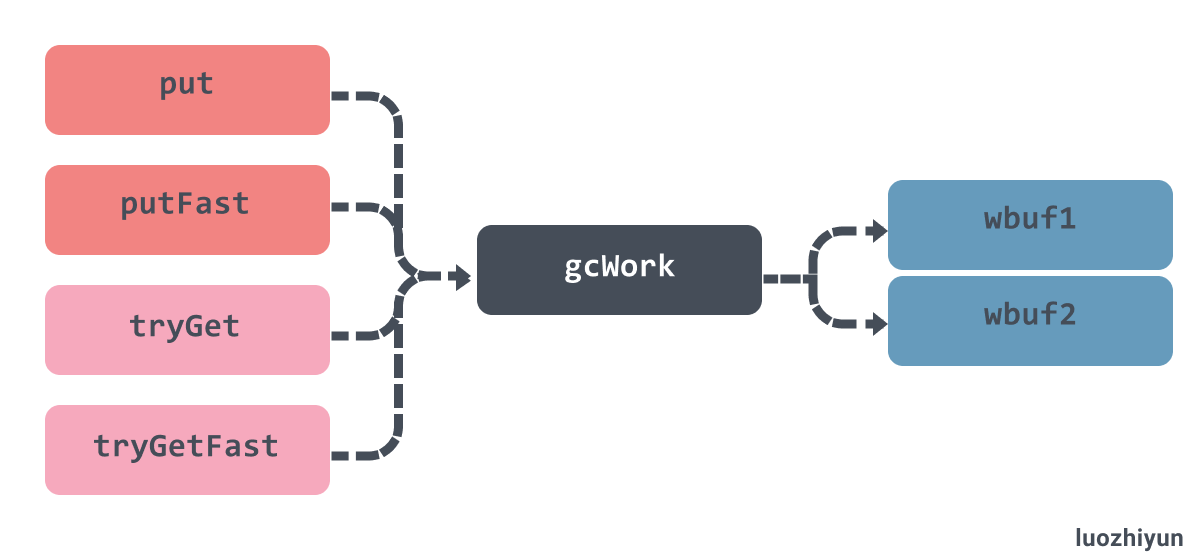

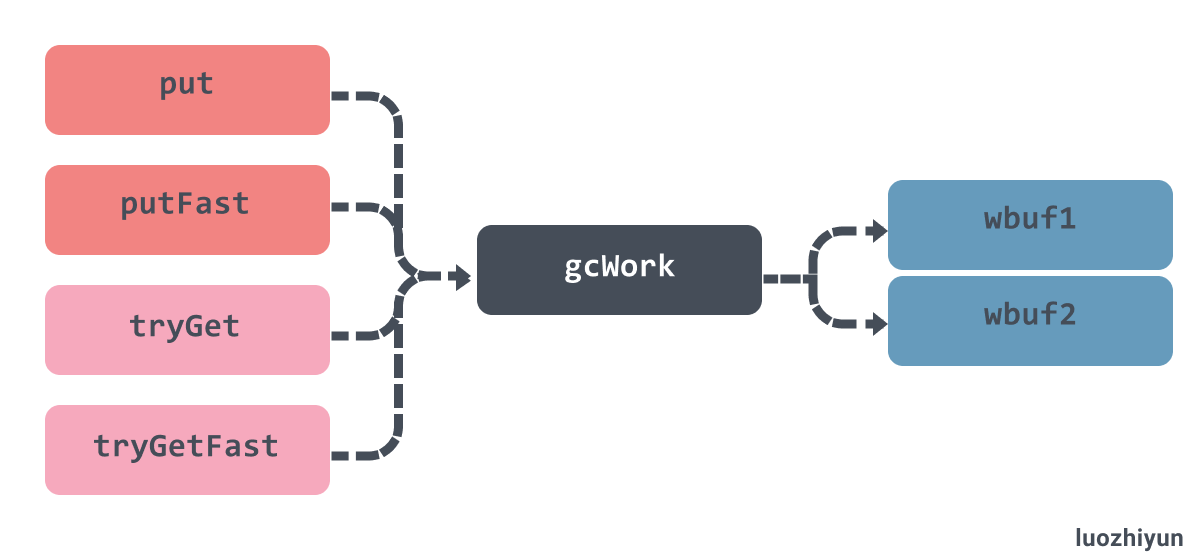

Note that what is passed into gcDrain is a gcWork structure, which is equivalent to a private cache space for each P that holds the objects to be scanned, providing an abstraction for the garbage collector to produce and consume tasks, and which holds two important work buffers wbuf1 and wbuf2: wbuf1 and wbuf2.

When we add or remove objects to this structure, it always operates the wbuf1 buffer first, and triggers a buffer switch once the wbuf1 buffer runs out of space or is empty. And when both buffers are running low or empty, it will insert or fetch objects from the global working buffer.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

func (w *gcWork) tryGet() uintptr {

wbuf := w.wbuf1

...

// wbuf1缓冲区无数据时

if wbuf.nobj == 0 {

// wbuf1 与 wbuf2 进行对象互换

w.wbuf1, w.wbuf2 = w.wbuf2, w.wbuf1

wbuf = w.wbuf1

if wbuf.nobj == 0 {

owbuf := wbuf

// 从 work 的 full 队列中获取

wbuf = trygetfull()

...

}

}

wbuf.nobj--

return wbuf.obj[wbuf.nobj]

}

|

Continuing with the gcBgMarkWorker method above, you have to perform mark completion after marking.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

|

func gcBgMarkWorker(_p_ *p) {

gp := getg()

...

for {

...

// 累加所用时间

duration := nanotime() - startTime

switch _p_.gcMarkWorkerMode {

case gcMarkWorkerDedicatedMode:

atomic.Xaddint64(&gcController.dedicatedMarkTime, duration)

atomic.Xaddint64(&gcController.dedicatedMarkWorkersNeeded, 1)

case gcMarkWorkerFractionalMode:

atomic.Xaddint64(&gcController.fractionalMarkTime, duration)

atomic.Xaddint64(&_p_.gcFractionalMarkTime, duration)

case gcMarkWorkerIdleMode:

atomic.Xaddint64(&gcController.idleMarkTime, duration)

}

incnwait := atomic.Xadd(&work.nwait, +1)

// 判断是否所有后台标记任务都完成, 并且没有更多的任务

if incnwait == work.nproc && !gcMarkWorkAvailable(nil) {

// 取消和P的关联

_p_.gcBgMarkWorker.set(nil)

// 允许G被抢占

releasem(park.m.ptr())

// 准备进入完成标记阶段

gcMarkDone()

// 休眠之前会重新关联P

// 因为上面允许被抢占, 到这里的时候可能就会变成其他P

// 如果重新关联P失败则这个任务会结束

park.m.set(acquirem())

park.attach.set(_p_)

}

}

}

|

gcBgMarkWorker checks if it is the last worker based on incnwait, then calls the gcMarkWorkAvailable function to verify that all gcwork tasks and global tasks have been processed, and if both are confirmed to be OK, then calls gcMarkDone to enter the completion marking phase.

Marker Scanning

Let’s take a look at gcDrain.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

|

func gcDrain(gcw *gcWork, flags gcDrainFlags) {

gp := getg().m.curg

// 看到抢占标志时是否要返回

preemptible := flags&gcDrainUntilPreempt != 0

// 是否计算后台的扫描量来减少协助线程和唤醒等待中的G

flushBgCredit := flags&gcDrainFlushBgCredit != 0

// 是否只执行一定量的工作

idle := flags&gcDrainIdle != 0

// 记录初始的已扫描数量

initScanWork := gcw.scanWork

checkWork := int64(1<<63 - 1)

var check func() bool

if flags&(gcDrainIdle|gcDrainFractional) != 0 {

// drainCheckThreshold 默认 100000

checkWork = initScanWork + drainCheckThreshold

if idle {

check = pollWork

} else if flags&gcDrainFractional != 0 {

check = pollFractionalWorkerExit

}

}

// 如果根对象未扫描完, 则先扫描根对象

if work.markrootNext < work.markrootJobs {

// 一直循环直到被抢占或 STW

for !(gp.preempt && (preemptible || atomic.Load(&sched.gcwaiting) != 0)) {

// 从根对象扫描队列取出一个值

job := atomic.Xadd(&work.markrootNext, +1) - 1

if job >= work.markrootJobs {

break

}

// 执行根对象扫描工作

markroot(gcw, job)

if check != nil && check() {

goto done

}

}

}

...

}

|

The gcDrain function starts with a different strategy depending on the flags.

- gcDrainUntilPreempt: returns when G is preempted.

- gcDrainIdle: calls

runtime.pollWork and returns when P contains other pending G executions.

- gcDrainFractional: calls

runtime.pollFractionalWorkerExit and returns when the CPU usage exceeds 20% of fractionalUtilizationGoal.

After setting the check variable, you can execute runtime.markroot to scan for root objects, and after each scan, the check function will be called to check if the marker task should be exited, and if so, it will jump to the done block to exit the marker.

When the marker is finished, the pending task is retrieved.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

|

func gcDrain(gcw *gcWork, flags gcDrainFlags) {

...

// 根对象已经在标记队列中, 消费标记队列

// 一直循环直到被抢占或 STW

for !(gp.preempt && (preemptible || atomic.Load(&sched.gcwaiting) != 0)) {

// 将本地一部分工作放回全局队列中

if work.full == 0 {

gcw.balance()

}

// 获取任务

b := gcw.tryGetFast()

if b == 0 {

b = gcw.tryGet()

if b == 0 {

wbBufFlush(nil, 0)

b = gcw.tryGet()

}

}

// 获取不到对象, 标记队列已为空, 跳出循环

if b == 0 {

break

}

// 扫描获取到的对象

scanobject(b, gcw)

// 如果已经扫描了一定数量的对象,gcCreditSlack值是2000

if gcw.scanWork >= gcCreditSlack {

// 把扫描的对象数量添加到全局

atomic.Xaddint64(&gcController.scanWork, gcw.scanWork)

if flushBgCredit {

// 记录这次扫描的内存字节数用于减少辅助标记的工作量

gcFlushBgCredit(gcw.scanWork - initScanWork)

initScanWork = 0

}

checkWork -= gcw.scanWork

gcw.scanWork = 0

if checkWork <= 0 {

checkWork += drainCheckThreshold

if check != nil && check() {

break

}

}

}

}

done:

// 把扫描的对象数量添加到全局

if gcw.scanWork > 0 {

atomic.Xaddint64(&gcController.scanWork, gcw.scanWork)

if flushBgCredit {

// 记录这次扫描的内存字节数用于减少辅助标记的工作量

gcFlushBgCredit(gcw.scanWork - initScanWork)

}

gcw.scanWork = 0

}

}

|

Here before fetching the cache queue runtime.gcWork.balance will be called, which will cache part of the work of gcWork back into the global queue, this method is mainly used to balance the load situation of different P’s.

Then fetch gcWork’s cached tasks and give the fetched tasks to scanobject to execute. This function will start scanning from the passed location and will color the active objects it finds. runtime.gcFlushBgCredit will record the number of memory bytes scanned this time to reduce the workload of auxiliary markers.

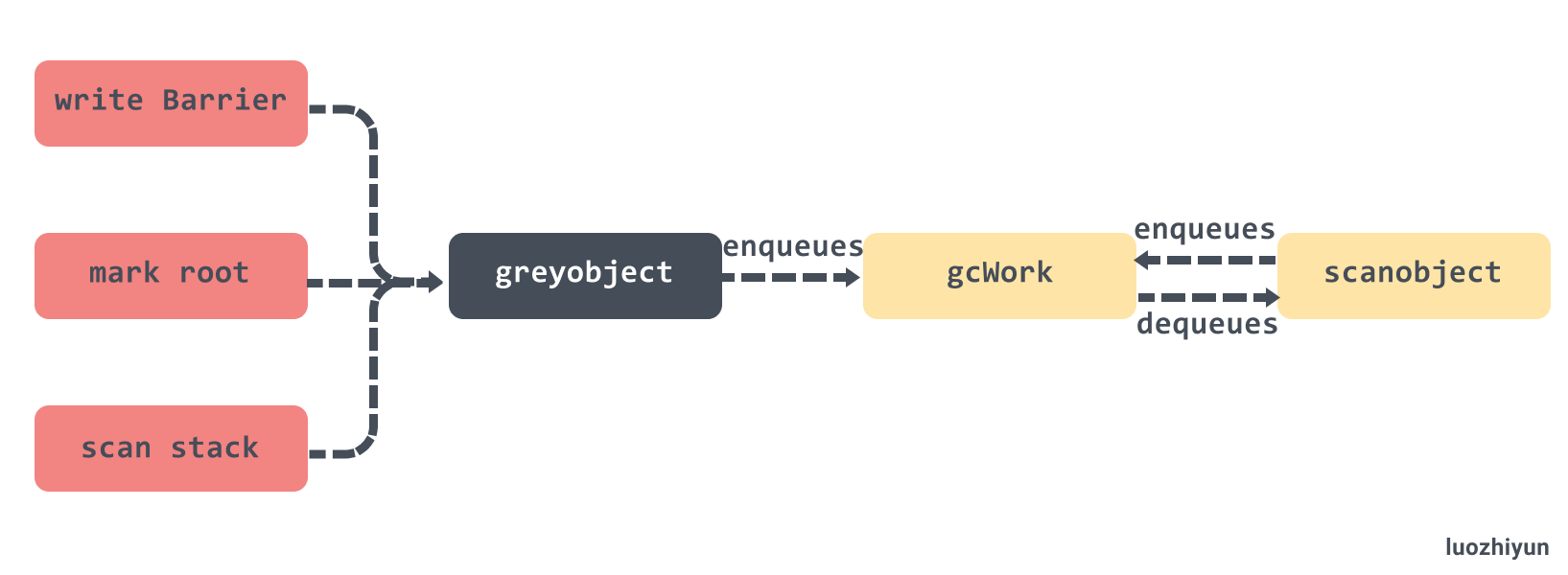

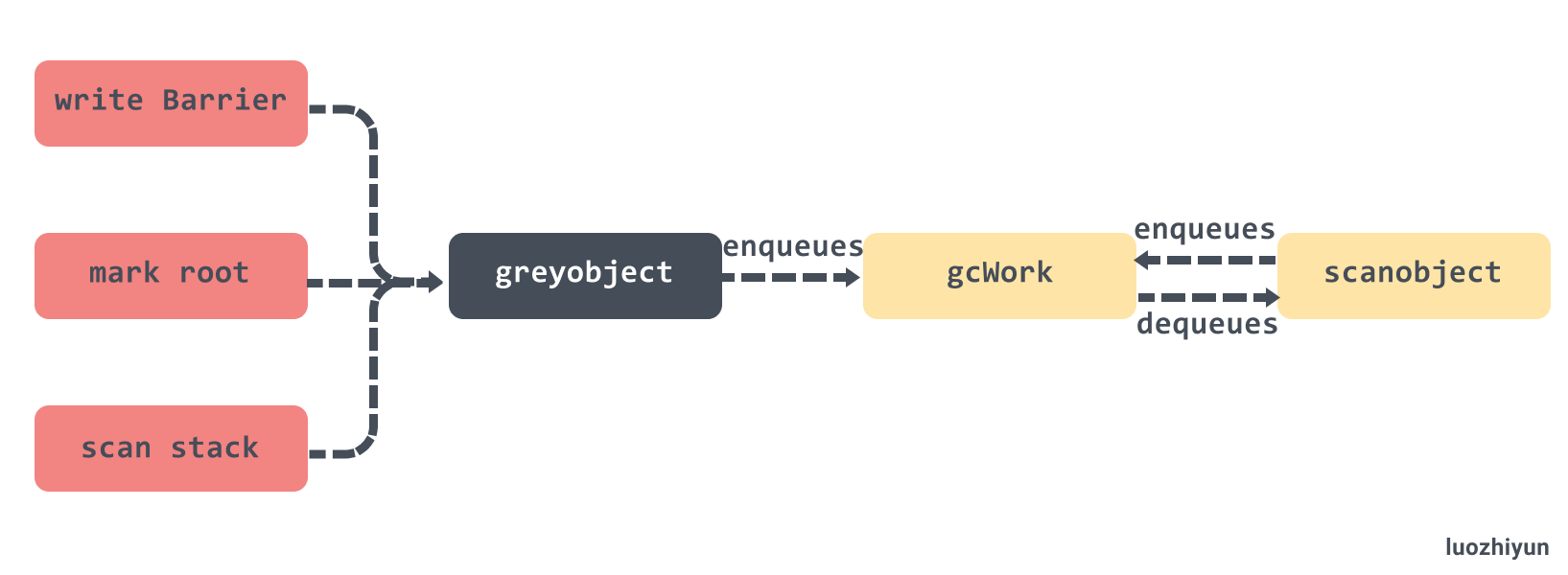

Here I’ll summarize the gcWork outgoing and incoming queues. gcWork’s outgoing queue is our scanobject method above, which fetches the gcWork cache object and executes it, but also queues the active object into gcWork again if it is found.

In addition to scanobject, the write barrier, root object scan, and stack scan all add additional gray objects to gcWork waiting to be processed.

root marker

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

|

func markroot(gcw *gcWork, i uint32) {

baseFlushCache := uint32(fixedRootCount)

baseData := baseFlushCache + uint32(work.nFlushCacheRoots)

baseBSS := baseData + uint32(work.nDataRoots)

baseSpans := baseBSS + uint32(work.nBSSRoots)

baseStacks := baseSpans + uint32(work.nSpanRoots)

end := baseStacks + uint32(work.nStackRoots)

switch {

// 释放mcache中的所有span, 要求STW

case baseFlushCache <= i && i < baseData:

flushmcache(int(i - baseFlushCache))

// 扫描可读写的全局变量

case baseData <= i && i < baseBSS:

for _, datap := range activeModules() {

markrootBlock(datap.data, datap.edata-datap.data, datap.gcdatamask.bytedata, gcw, int(i-baseData))

}

// 扫描未初始化的全局变量

case baseBSS <= i && i < baseSpans:

for _, datap := range activeModules() {

markrootBlock(datap.bss, datap.ebss-datap.bss, datap.gcbssmask.bytedata, gcw, int(i-baseBSS))

}

// 扫描 finalizers 队列

case i == fixedRootFinalizers:

for fb := allfin; fb != nil; fb = fb.alllink {

cnt := uintptr(atomic.Load(&fb.cnt))

scanblock(uintptr(unsafe.Pointer(&fb.fin[0])), cnt*unsafe.Sizeof(fb.fin[0]), &finptrmask[0], gcw, nil)

}

// 释放已中止的 G 的栈

case i == fixedRootFreeGStacks:

systemstack(markrootFreeGStacks)

// 扫描 MSpan.specials

case baseSpans <= i && i < baseStacks:

markrootSpans(gcw, int(i-baseSpans))

// 扫描各个 G 的栈

default:

// 获取需要扫描的 G

var gp *g

if baseStacks <= i && i < end {

gp = allgs[i-baseStacks]

} else {

throw("markroot: bad index")

}

// 记录等待开始的时间

status := readgstatus(gp) // We are not in a scan state

if (status == _Gwaiting || status == _Gsyscall) && gp.waitsince == 0 {

gp.waitsince = work.tstart

}

// 转交给g0进行扫描

systemstack(func() {

userG := getg().m.curg

selfScan := gp == userG && readgstatus(userG) == _Grunning

// 如果是扫描自己的,则转换自己的g的状态

if selfScan {

casgstatus(userG, _Grunning, _Gwaiting)

userG.waitreason = waitReasonGarbageCollectionScan

}

// 挂起 G,让对应的 G 停止运行

stopped := suspendG(gp)

if stopped.dead {

gp.gcscandone = true

return

}

if gp.gcscandone {

throw("g already scanned")

}

// 扫描g的栈

scanstack(gp, gcw)

gp.gcscandone = true

resumeG(stopped)

if selfScan {

casgstatus(userG, _Gwaiting, _Grunning)

}

})

}

}

|

When I saw the above scanned BSS and Date related memory blocks I was also very puzzled, we can see with the explanation of the Wikipedia Data segment https://en.wikipedia.org/wiki/Data_segment that:

The .data segment contains any global or static variables which have a pre-defined value and can be modified.

The BSS segment, also known as uninitialized data, is usually adjacent to the data segment.

Data segments are usually global variables that are initialized in advance, and BSS segments are usually data that are not initialized.

Because there are too many caches, data segments, stack memory sweeps, many bit operations and pointer operations involved, the code implementation is rather complicated. Here is a brief look at scanblock, scanstack.

scanblock

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

|

func scanblock(b0, n0 uintptr, ptrmask *uint8, gcw *gcWork, stk *stackScanState) {

b := b0

n := n0

// 遍历扫描的地址

for i := uintptr(0); i < n; {

// 找到bitmap中对应的byte

bits := uint32(*addb(ptrmask, i/(sys.PtrSize*8)))

if bits == 0 {

i += sys.PtrSize * 8

continue

}

// 遍历 byte

for j := 0; j < 8 && i < n; j++ {

// 如果该地址包含指针

if bits&1 != 0 {

p := *(*uintptr)(unsafe.Pointer(b + i))

if p != 0 {

// 标记在该地址的对象存活, 并把它加到标记队列

if obj, span, objIndex := findObject(p, b, i); obj != 0 {

greyobject(obj, b, i, span, gcw, objIndex)

} else if stk != nil && p >= stk.stack.lo && p < stk.stack.hi {

stk.putPtr(p, false)

}

}

}

bits >>= 1

i += sys.PtrSize

}

}

}

|

scanstack

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

|

func scanstack(gp *g, gcw *gcWork) {

...

// 判断是否可以安全的进行 收缩栈

if isShrinkStackSafe(gp) {

// Shrink the stack if not much of it is being used.

// 收缩栈

shrinkstack(gp)

} else {

// Otherwise, shrink the stack at the next sync safe point.

// 否则下次安全点再进行收缩栈

gp.preemptShrink = true

}

var state stackScanState

state.stack = gp.stack

if gp.sched.ctxt != nil {

scanblock(uintptr(unsafe.Pointer(&gp.sched.ctxt)), sys.PtrSize, &oneptrmask[0], gcw, &state)

}

scanframe := func(frame *stkframe, unused unsafe.Pointer) bool {

scanframeworker(frame, &state, gcw)

return true

}

// 枚举所有调用帧

gentraceback(^uintptr(0), ^uintptr(0), 0, gp, 0, nil, 0x7fffffff, scanframe, nil, 0)

// 枚举所有defer的调用帧

tracebackdefers(gp, scanframe, nil)

// Find and trace other pointers in defer records.

// 扫描defer中的代码块

for d := gp._defer; d != nil; d = d.link {

...

}

if gp._panic != nil {

state.putPtr(uintptr(unsafe.Pointer(gp._panic)), false)

}

// 扫描并找到所有可达的栈对象

state.buildIndex()

for {

p, conservative := state.getPtr()

if p == 0 {

break

}

obj := state.findObject(p)

if obj == nil {

continue

}

t := obj.typ

// 已被扫描过

if t == nil {

continue

}

// 标记扫描

obj.setType(nil)

gcdata := t.gcdata

var s *mspan

if t.kind&kindGCProg != 0 {

s = materializeGCProg(t.ptrdata, gcdata)

gcdata = (*byte)(unsafe.Pointer(s.startAddr))

}

b := state.stack.lo + uintptr(obj.off)

if conservative {

scanConservative(b, t.ptrdata, gcdata, gcw, &state)

} else {

scanblock(b, t.ptrdata, gcdata, gcw, &state)

}

if s != nil {

dematerializeGCProg(s)

}

}

for state.head != nil {

x := state.head

state.head = x.next

x.nobj = 0

putempty((*workbuf)(unsafe.Pointer(x)))

}

if state.buf != nil || state.cbuf != nil || state.freeBuf != nil {

throw("remaining pointer buffers")

}

}

|

greyobject

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

|

func greyobject(obj, base, off uintptr, span *mspan, gcw *gcWork, objIndex uintptr) {

// obj should be start of allocation, and so must be at least pointer-aligned.

if obj&(sys.PtrSize-1) != 0 {

throw("greyobject: obj not pointer-aligned")

}

mbits := span.markBitsForIndex(objIndex)

// 检查是否所有可到达的对象都被正确标记的机制, 仅除错使用

if useCheckmark {

...

} else {

...

// 被标记过了直接返回

if mbits.isMarked() {

return

}

// 设置标记

mbits.setMarked()

// 标记 span

arena, pageIdx, pageMask := pageIndexOf(span.base())

if arena.pageMarks[pageIdx]&pageMask == 0 {

atomic.Or8(&arena.pageMarks[pageIdx], pageMask)

}

// span的类型是noscan, 则不需要把对象放入标记队列

if span.spanclass.noscan() {

gcw.bytesMarked += uint64(span.elemsize)

return

}

}

// 尝试存入gcwork的缓存中,或全局队列中

if !gcw.putFast(obj) {

gcw.put(obj)

}

}

|

Object scanning

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

|

func scanobject(b uintptr, gcw *gcWork) {

// 获取 b 的 heapBits 对象

hbits := heapBitsForAddr(b)

// 获取 span

s := spanOfUnchecked(b)

// span 对应的对象大小

n := s.elemsize

if n == 0 {

throw("scanobject n == 0")

}

// 每次最大只扫描128KB

if n > maxObletBytes {

// Large object. Break into oblets for better

// parallelism and lower latency.

if b == s.base() {

if s.spanclass.noscan() {

// Bypass the whole scan.

gcw.bytesMarked += uint64(n)

return

}

// 把多于128KB的对象重新放回gcworker中,下次再扫描

for oblet := b + maxObletBytes; oblet < s.base()+s.elemsize; oblet += maxObletBytes {

if !gcw.putFast(oblet) {

gcw.put(oblet)

}

}

}

n = s.base() + s.elemsize - b

if n > maxObletBytes {

n = maxObletBytes

}

}

var i uintptr

for i = 0; i < n; i += sys.PtrSize {

// 获取对应的bit

// Find bits for this word.

if i != 0 {

hbits = hbits.next()

}

bits := hbits.bits()

// 检查scan bit判断是否继续扫描

if i != 1*sys.PtrSize && bits&bitScan == 0 {

break // no more pointers in this object

}

// 如果不是指针则继续

if bits&bitPointer == 0 {

continue // not a pointer

}

// 取出指针的值

obj := *(*uintptr)(unsafe.Pointer(b + i))

if obj != 0 && obj-b >= n {

// 根据地址值去堆中查找对象

if obj, span, objIndex := findObject(obj, b, i); obj != 0 {

// 调用 greyobject 标记对象并把对象放到标记队列中

greyobject(obj, b, i, span, gcw, objIndex)

}

}

}

gcw.bytesMarked += uint64(n)

gcw.scanWork += int64(i)

}

|

Assist marker mutator assists

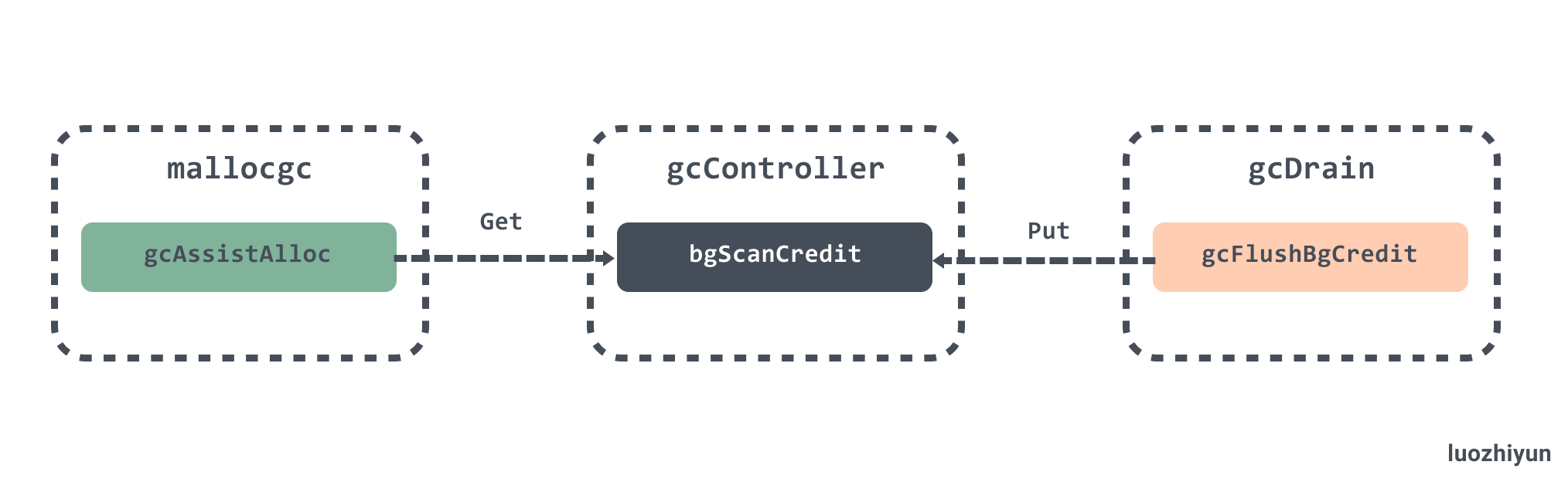

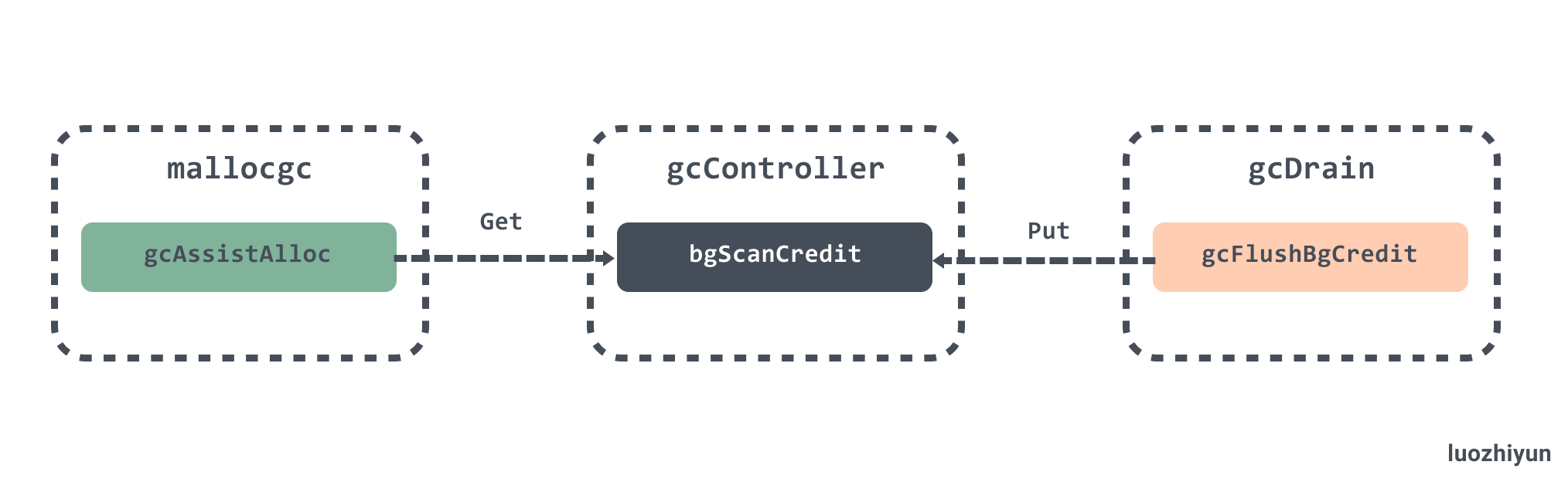

The role of mutator assists was mentioned at the beginning of the analysis, mainly to prevent the heap from growing too fast, if a G running at the same time allocates memory during GC execution, then the G will be asked to assist the GC to do part of the work, it follows a very simple and plain principle, allocate as much memory as you need to complete the tagging task.

The entry point for mutator assists is in the mallocgc function in go\src\runtime\malloc.go.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

var assistG *g

if gcBlackenEnabled != 0 {

assistG = getg()

if assistG.m.curg != nil {

assistG = assistG.m.curg

}

// 减去内存值

assistG.gcAssistBytes -= int64(size)

if assistG.gcAssistBytes < 0 {

// This G is in debt.

gcAssistAlloc(assistG)

}

}

...

return x

}

|

Each time mallocgc allocates memory it checks for a negative value in the gcAssistBytes field, which stores the number of bytes of the object currently marked by the Goroutine auxiliary. If it is negative, then gcAssistAlloc is called to fetch it from the global credit bgScanCredit.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

|

func gcAssistAlloc(gp *g) {

...

retry:

// 计算需要完成的标记任务数量

debtBytes := -gp.gcAssistBytes

scanWork := int64(gcController.assistWorkPerByte * float64(debtBytes))

if scanWork < gcOverAssistWork {

scanWork = gcOverAssistWork

debtBytes = int64(gcController.assistBytesPerWork * float64(scanWork))

}

// 获取全局辅助标记的字节数

bgScanCredit := atomic.Loadint64(&gcController.bgScanCredit)

stolen := int64(0)

if bgScanCredit > 0 {

if bgScanCredit < scanWork {

stolen = bgScanCredit

gp.gcAssistBytes += 1 + int64(gcController.assistBytesPerWork*float64(stolen))

} else {

stolen = scanWork

gp.gcAssistBytes += debtBytes

}

// 全局信用扣除stolen点数

atomic.Xaddint64(&gcController.bgScanCredit, -stolen)

scanWork -= stolen

// 减到 0 说明 bgScanCredit 是由足够的信用可以处理 scanWork

if scanWork == 0 {

return

}

}

// 到这里说明 bgScanCredit 小于 scanWork

// 需要调用 gcDrainN 完成指定数量的标记任务并返回

systemstack(func() {

// 执行标记任务

gcAssistAlloc1(gp, scanWork)

})

completed := gp.param != nil

gp.param = nil

if completed {

gcMarkDone()

}

if gp.gcAssistBytes < 0 {

if gp.preempt {

Gosched()

goto retry

}

// 如果全局信用仍然不足将当前 Goroutine 陷入休眠

// 加入全局的辅助标记队列并等待后台标记任务的唤醒

if !gcParkAssist() {

goto retry

}

}

}

|

If the global credit is still insufficient, the current Goroutine is put into hibernation, added to the global auxiliary marker queue and waits for the background marker task to wake up.

The call to gcFlushBgCredit when scanning memory is responsible for waking up the secondary tagging Goroutine.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

|

func gcFlushBgCredit(scanWork int64) {

// 辅助队列中不存在等待的 Goroutine

if work.assistQueue.q.empty() {

// 当前的信用会直接加到全局信用 bgScanCredit

atomic.Xaddint64(&gcController.bgScanCredit, scanWork)

return

}

scanBytes := int64(float64(scanWork) * gcController.assistBytesPerWork)

lock(&work.assistQueue.lock)

// 如果辅助队列不为空

for !work.assistQueue.q.empty() && scanBytes > 0 {

gp := work.assistQueue.q.pop()

// 唤醒 Goroutine

if scanBytes+gp.gcAssistBytes >= 0 {

scanBytes += gp.gcAssistBytes

gp.gcAssistBytes = 0

ready(gp, 0, false)

} else {

gp.gcAssistBytes += scanBytes

scanBytes = 0

work.assistQueue.q.pushBack(gp)

break

}

}

// 标记任务量仍然有剩余,这些标记任务都会加入全局信用

if scanBytes > 0 {

scanWork = int64(float64(scanBytes) * gcController.assistWorkPerByte)

atomic.Xaddint64(&gcController.bgScanCredit, scanWork)

}

unlock(&work.assistQueue.lock)

}

|

gcFlushBgCredit fetches the sleeping auxiliary queue Goroutine, wakes up the auxiliary Goroutine if there is enough credit currently available, and if there is any left, then all these marker tasks will be added to the global credit.

The overall set of mechanisms is as follows.

Mark completion

As we analyzed above in gcBgMarkWorker, gcMarkDone will be called to perform the marker completion operation after the marker is completed.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

|

func gcMarkDone() {

semacquire(&work.markDoneSema)

top:

// 再次检查任务是否已执行完毕

if !(gcphase == _GCmark && work.nwait == work.nproc && !gcMarkWorkAvailable(nil)) {

semrelease(&work.markDoneSema)

return

}

semacquire(&worldsema)

gcMarkDoneFlushed = 0

systemstack(func() {

gp := getg().m.curg

casgstatus(gp, _Grunning, _Gwaiting)

// 遍历所有的 P

forEachP(func(_p_ *p) {

// 将 P 对应的write barrier buffer 中的对象加入到 gcWork 中

wbBufFlush1(_p_)

// 将 gcWork 中的缓存对象加入到全局队列中

_p_.gcw.dispose()

// 表示 gcWork 的数据都已迁移到 全局队列中

if _p_.gcw.flushedWork {

atomic.Xadd(&gcMarkDoneFlushed, 1)

_p_.gcw.flushedWork = false

} else if debugCachedWork {

...

}

...

})

casgstatus(gp, _Gwaiting, _Grunning)

})

if gcMarkDoneFlushed != 0 {

if debugCachedWork {

// Release paused gcWorks.

atomic.Xadd(&gcWorkPauseGen, 1)

}

semrelease(&worldsema)

goto top

}

// 记录完成标记阶段开始的时间和STW开始的时间

now := nanotime()

work.tMarkTerm = now

work.pauseStart = now

// 禁止G被抢占

getg().m.preemptoff = "gcing"

// STW

systemstack(stopTheWorldWithSema)

...

// 禁止辅助GC和后台标记任务的运行

atomic.Store(&gcBlackenEnabled, 0)

// 唤醒所有因为辅助GC而休眠的G

gcWakeAllAssists()

semrelease(&work.markDoneSema)

schedEnableUser(true)

// 计算下一次触发gc需要的heap大小

nextTriggerRatio := gcController.endCycle()

// 执行标记终止

gcMarkTermination(nextTriggerRatio)

}

|

gcMarkDone will call the forEachP function to iterate through all P’s and move the tasks in the corresponding P’s gcWork to the global queue, adding 1 to gcMarkDoneFlushed if there are tasks in the gcWork, and after iterating through all P’s it will determine that if gcMarkDoneFlushed is not 0, then it will jump to the top marker and continue cycling until there are no tasks in the local queue.

Next, gcBlackenEnabled is set to 0, which means that the auxiliary marker and background marker are closed; the blocked auxiliary marker is woken up; schedEnableUser is called to resume the scheduling of the user’s Goroutine; note that it is currently in the STW phase, so the woken up Goroutine will not be executed immediately, but will wait until STW is finished.

Finally, gcMarkTermination is called to perform mark termination.

Mark Termination

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

|

func gcMarkTermination(nextTriggerRatio float64) {

// 禁止辅助GC和后台标记任务的运行

atomic.Store(&gcBlackenEnabled, 0)

// 将 GC 阶段切换到 _GCmarktermination

setGCPhase(_GCmarktermination)

work.heap1 = memstats.heap_live

// 记录开始时间

startTime := nanotime()

mp := acquirem()

mp.preemptoff = "gcing"

_g_ := getg()

_g_.m.traceback = 2

gp := _g_.m.curg

// 设置 G 的状态为等待中

casgstatus(gp, _Grunning, _Gwaiting)

gp.waitreason = waitReasonGarbageCollection

// 切换到 g0 运行

systemstack(func() {

// 开始 STW 中的标记

gcMark(startTime)

})

systemstack(func() {

work.heap2 = work.bytesMarked

...

// 设置当前GC阶段到关闭, 并禁用写屏障

setGCPhase(_GCoff)

// 唤醒后台清扫任务

gcSweep(work.mode)

})

_g_.m.traceback = 0

casgstatus(gp, _Gwaiting, _Grunning)