KPTI in a Nutshell

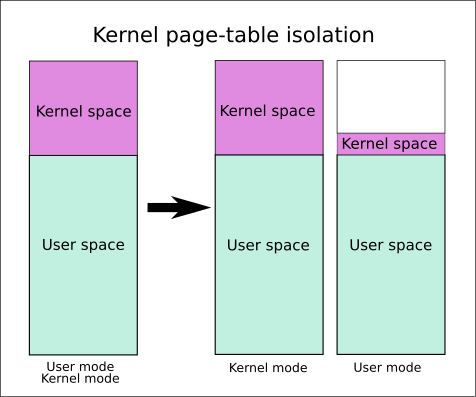

KPTI (Kernel Page Table Isolation) was originally designed to mitigate KASLR bypasses and CPU side-channel attacks.

KPTI strengthens the isolation between kernel-space and user-space memory by giving each process two sets of page tables:

- The page table used in kernel mode maps both user-space and kernel-space memory.

- The page table used in user mode maps user-space memory plus only the minimal kernel-space memory that is strictly necessary, such as the code and data needed to enter the kernel for system calls and interrupts.

In short, KPTI mitigates the Meltdown vulnerability by switching page tables whenever the CPU traps from user mode into kernel mode, for example on a system call, and switching back on return. While user code runs, almost all kernel memory is unmapped, so user mode cannot read it, not even speculatively.
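
The two views described above can be illustrated with a toy model. This is only a sketch: the region names, the `toy_` structs, and the functions are all hypothetical, and a "page table" is reduced to a record of which coarse regions are mapped. It does not reflect how any real kernel stores its page tables.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical coarse-grained memory regions for this toy model. */
enum region {
    REGION_USER,          /* user code and data */
    REGION_KERNEL_ENTRY,  /* minimal trap entry/exit code and data */
    REGION_KERNEL_REST,   /* everything else in the kernel */
    REGION_COUNT
};

/* A "page table" here only records which regions are mapped. */
struct toy_pagetable {
    bool mapped[REGION_COUNT];
};

/* Under KPTI each process has two page tables and one of them is active. */
struct toy_process {
    struct toy_pagetable user_view;
    struct toy_pagetable kernel_view;
    const struct toy_pagetable *active;
};

void toy_process_init(struct toy_process *p) {
    /* User-mode view: user memory plus the minimal kernel entry region. */
    p->user_view.mapped[REGION_USER] = true;
    p->user_view.mapped[REGION_KERNEL_ENTRY] = true;
    p->user_view.mapped[REGION_KERNEL_REST] = false;

    /* Kernel-mode view: everything is mapped. */
    p->kernel_view.mapped[REGION_USER] = true;
    p->kernel_view.mapped[REGION_KERNEL_ENTRY] = true;
    p->kernel_view.mapped[REGION_KERNEL_REST] = true;

    p->active = &p->user_view;  /* processes start out in user mode */
}

/* Trap entry (system call, interrupt): switch to the kernel view. */
void toy_trap_enter(struct toy_process *p) { p->active = &p->kernel_view; }

/* Return to user mode: switch back to the restricted view. */
void toy_trap_return(struct toy_process *p) { p->active = &p->user_view; }

/* An access succeeds only if the active page table maps the region. */
bool toy_can_access(const struct toy_process *p, enum region r) {
    assert(r >= 0 && r < REGION_COUNT);
    return p->active->mapped[r];
}
```

In this model, `toy_can_access(&p, REGION_KERNEL_REST)` is false until `toy_trap_enter(&p)` runs and becomes false again after `toy_trap_return(&p)`, which is the property KPTI relies on.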

KPTI in xv6-riscv

Context switching

The switch from user mode into kernel mode is implemented in `uservec` in `kernel/trampoline.S`.

In `uservec`, the statement `csrw satp, t1` replaces the user page table with the kernel page table. From that point on, the kernel can still reach the user page table itself as data, but it cannot access the user code segment through its user virtual addresses, because the kernel page table contains no mapping for those addresses.
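
To make the value written to `satp` more concrete, here is a minimal sketch of how an Sv39 `satp` value is composed: the mode field (8 for Sv39) sits in the top four bits and the physical page number of the root page table sits in the low 44 bits. The function names `make_satp` and `satp_root_pa` are made up for this example, and the ASID field is assumed to be zero. On real hardware the switch itself is the privileged `csrw` instruction, which cannot be executed from an ordinary user program.

```c
#include <assert.h>
#include <stdint.h>

#define SATP_MODE_SV39 (UINT64_C(8) << 60)  /* mode field, bits 63:60 */
#define PAGE_SHIFT     12                    /* 4 KiB pages */
#define SATP_PPN_MASK  ((UINT64_C(1) << 44) - 1)

/* Build an Sv39 satp value from the physical address of a root page table.
 * Hypothetical helper; the ASID field is left at zero. */
uint64_t make_satp(uint64_t root_pa) {
    /* The root page table must be page-aligned. */
    assert((root_pa & ((UINT64_C(1) << PAGE_SHIFT) - 1)) == 0);
    return SATP_MODE_SV39 | (root_pa >> PAGE_SHIFT);
}

/* Recover the root page table's physical address from a satp value. */
uint64_t satp_root_pa(uint64_t satp) {
    return (satp & SATP_PPN_MASK) << PAGE_SHIFT;
}
```

Switching page tables therefore amounts to writing a different root address into one register: the user and kernel page tables differ only in which root `satp` points at.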

Kernel access to user-space data

How the kernel accesses user data is defined by the `copyout` and `copyin` functions in `kernel/vm.c`.

To access user data, the kernel first translates the user virtual address into a physical address by walking the user page table in software, and then accesses the data through that physical address. Because kernel data and physical RAM are mapped 1:1 in the kernel page table (each kernel virtual address equals the physical address), the access still goes through MMU address translation but lands on the intended physical memory. The kernel address mappings are defined in the `kvmmake` function in `kernel/vm.c`.
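
The translate-then-copy pattern can be sketched in ordinary user-space C. This is a toy model, not xv6's code: `phys_mem` stands in for physical RAM, the single-level `toy_pagetable` stands in for the three-level Sv39 table, and `toy_walkaddr` and `toy_copyin` are hypothetical names. Indexing `phys_mem` directly plays the role of the kernel's 1:1 mapping.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define PGSIZE 4096u
#define NPAGES 8u

/* Toy "physical memory"; indexing it directly models the 1:1 kernel map. */
uint8_t phys_mem[NPAGES * PGSIZE];

/* Toy single-level user page table: virtual page number -> physical page
 * number, or -1 if the page is unmapped. */
struct toy_pagetable {
    int ppn[NPAGES];
};

void toy_pagetable_init(struct toy_pagetable *pt) {
    for (unsigned i = 0; i < NPAGES; i++)
        pt->ppn[i] = -1;
}

/* Software page walk: return the physical address of the page containing
 * va, or -1 if va is not mapped. */
int64_t toy_walkaddr(const struct toy_pagetable *pt, uint64_t va) {
    uint64_t vpn = va / PGSIZE;
    if (vpn >= NPAGES || pt->ppn[vpn] < 0)
        return -1;
    return (int64_t)pt->ppn[vpn] * PGSIZE;
}

/* Copy len bytes from user virtual address srcva into the kernel buffer
 * dst, one page at a time. Returns 0 on success, -1 on an unmapped page. */
int toy_copyin(const struct toy_pagetable *pt, void *dst,
               uint64_t srcva, uint64_t len) {
    uint8_t *out = dst;
    while (len > 0) {
        uint64_t va0 = srcva - (srcva % PGSIZE);  /* round down to page */
        int64_t pa0 = toy_walkaddr(pt, va0);
        if (pa0 < 0)
            return -1;
        uint64_t n = PGSIZE - (srcva - va0);      /* bytes left in page */
        if (n > len)
            n = len;
        /* Access the data through its physical address. */
        memmove(out, &phys_mem[(uint64_t)pa0 + (srcva - va0)], n);
        len -= n;
        out += n;
        srcva = va0 + PGSIZE;
    }
    return 0;
}
```

The loop works page by page because virtually contiguous user pages may sit in unrelated physical pages, so each page needs its own translation.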
