I have recently been doing CSI-related development, and along the way I have found the details of CSI to be quite tedious. Organizing the CSI workflow helps me deepen my own understanding of CSI and lets me share what I know with you.

I will introduce CSI in two articles. The first focuses on the basic components and workings of CSI, with Kubernetes as the CO (Container Orchestration System). The second will take a few typical CSI projects and analyze their specific implementations.

Basic components of CSI

Kubernetes storage plugins come in two types: in-tree and out-of-tree. The former are storage plugins that run inside the k8s core components; the latter are storage plugins that run independently of the k8s components. This article focuses on out-of-tree plugins.

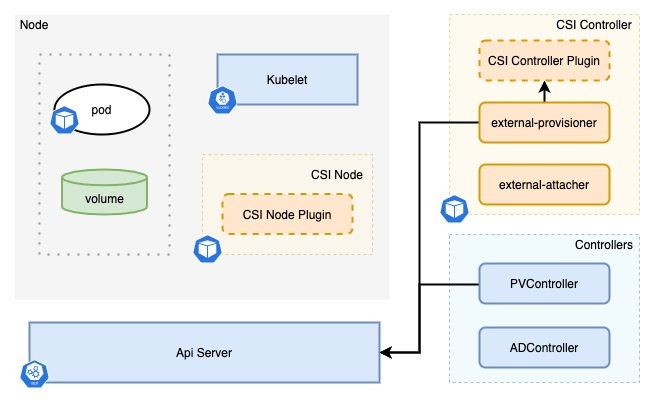

The out-of-tree type of plug-in interacts with k8s components through the gRPC interface, and k8s provides a number of SideCar components to work with CSI plug-ins to achieve rich functionality. For out-of-tree plugins, the components used are divided into SideCar components and plugins that need to be implemented by third parties.

SideCar components

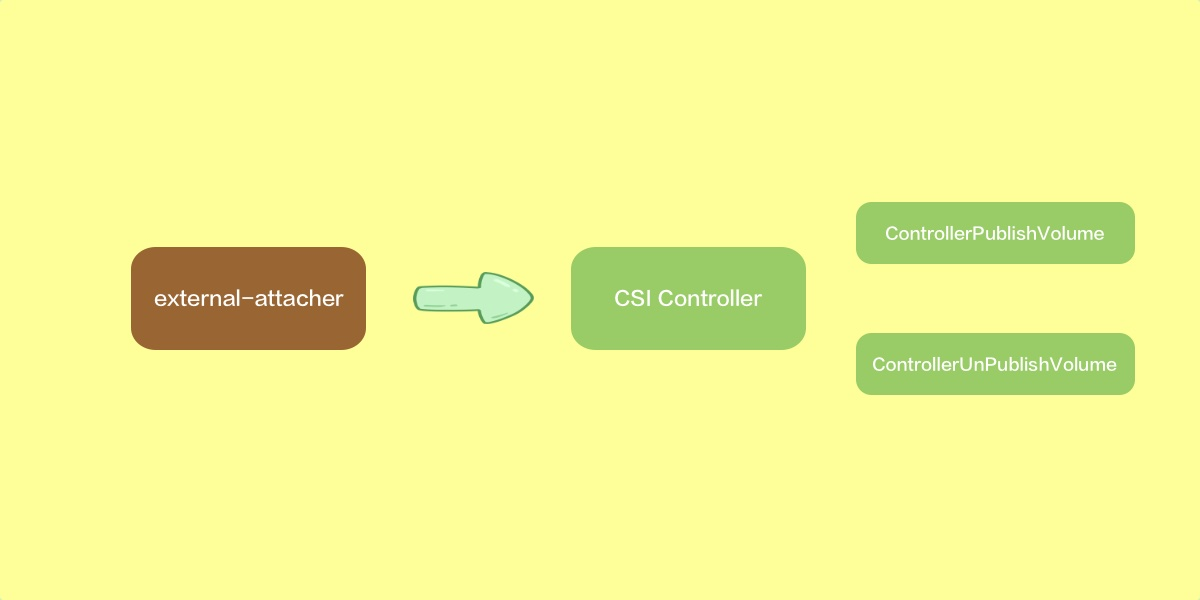

external-attacher

Watches VolumeAttachment objects and calls the ControllerPublishVolume and ControllerUnpublishVolume interfaces of the CSI driver Controller service to attach volumes to, and detach them from, the node.

This component is needed if the storage system requires the attach/detach step, as the K8s internal Attach/Detach Controller does not call the CSI driver interface directly.

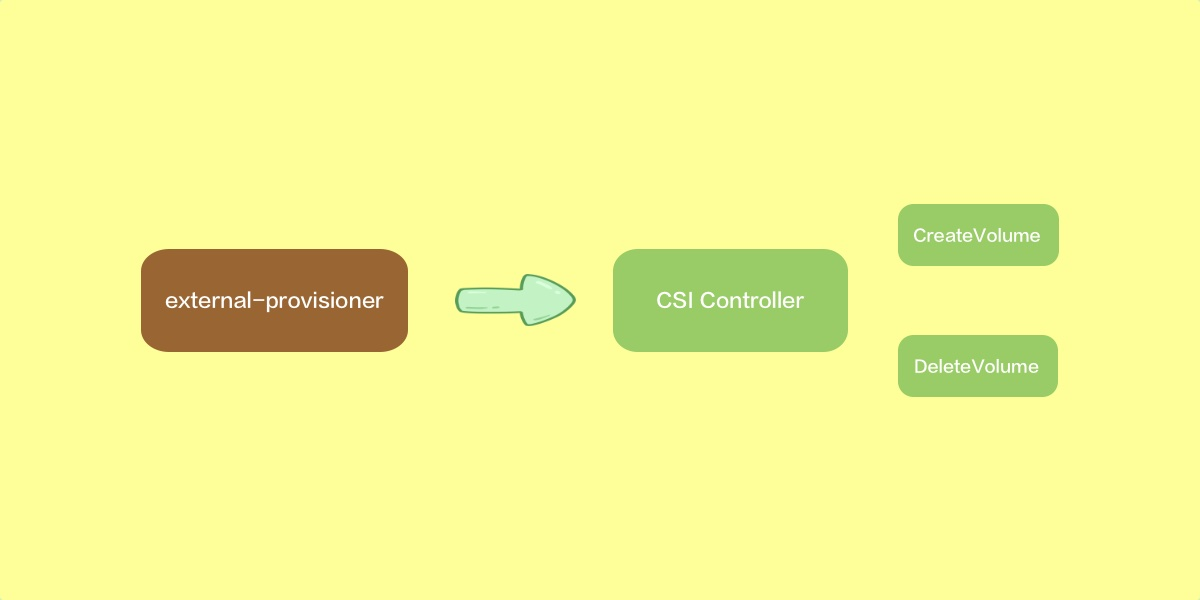

external-provisioner

Watches PVC objects and calls the CreateVolume and DeleteVolume interfaces of the CSI driver Controller service. A new volume is provisioned when a PVC is created, provided that the provisioner field of the StorageClass specified in the PVC is the same as the value returned by the GetPluginInfo interface of the Identity service. Once the new volume is provisioned, the corresponding PV is created in K8s.

If the reclaim policy of the PV bound to the PVC is Delete, the external-provisioner watches for the deletion of the PVC and calls the DeleteVolume interface of the CSI driver Controller service. Once the volume is deleted successfully, the component also deletes the corresponding PV.

The component also supports creating volumes from snapshot data sources. If a VolumeSnapshot CRD is specified as the data source in the PVC, the component gets information about the snapshot from the VolumeSnapshotContent object and passes it to the CSI driver when calling the CreateVolume interface; the CSI driver then needs to create the volume from that snapshot.
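The decision described above can be sketched in a few lines. This is a simplified Python model, not the external-provisioner's actual code: the driver name `csi.example.com`, the `PVC` and `StorageClass` classes, and the function name are hypothetical, and naming the volume after the PVC name is a simplification. The request keys follow the field names of the CSI `CreateVolumeRequest` message.

```python
from dataclasses import dataclass
from typing import Dict, Optional

DRIVER_NAME = "csi.example.com"  # hypothetical; the value the driver returns from GetPluginInfo


@dataclass
class StorageClass:
    name: str
    provisioner: str


@dataclass
class PVC:
    name: str
    storage_class: str
    requested_bytes: int
    snapshot_handle: Optional[str] = None  # set when the PVC's data source is a snapshot


def build_create_volume_request(pvc: PVC, classes: Dict[str, StorageClass]) -> Optional[dict]:
    """Return a CreateVolume-style request, or None if the PVC belongs to another provisioner."""
    sc = classes.get(pvc.storage_class)
    if sc is None or sc.provisioner != DRIVER_NAME:
        return None  # the StorageClass names a different provisioner: ignore this PVC
    request = {
        "name": "pvc-" + pvc.name,  # simplification: a name derived from the PVC
        "capacity_range": {"required_bytes": pvc.requested_bytes},
    }
    if pvc.snapshot_handle is not None:
        # The driver is expected to pre-populate the new volume from this snapshot.
        request["volume_content_source"] = {"snapshot": {"snapshot_id": pvc.snapshot_handle}}
    return request
```

A PVC whose StorageClass points at another provisioner yields `None`, which models the component simply ignoring PVCs it does not own.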

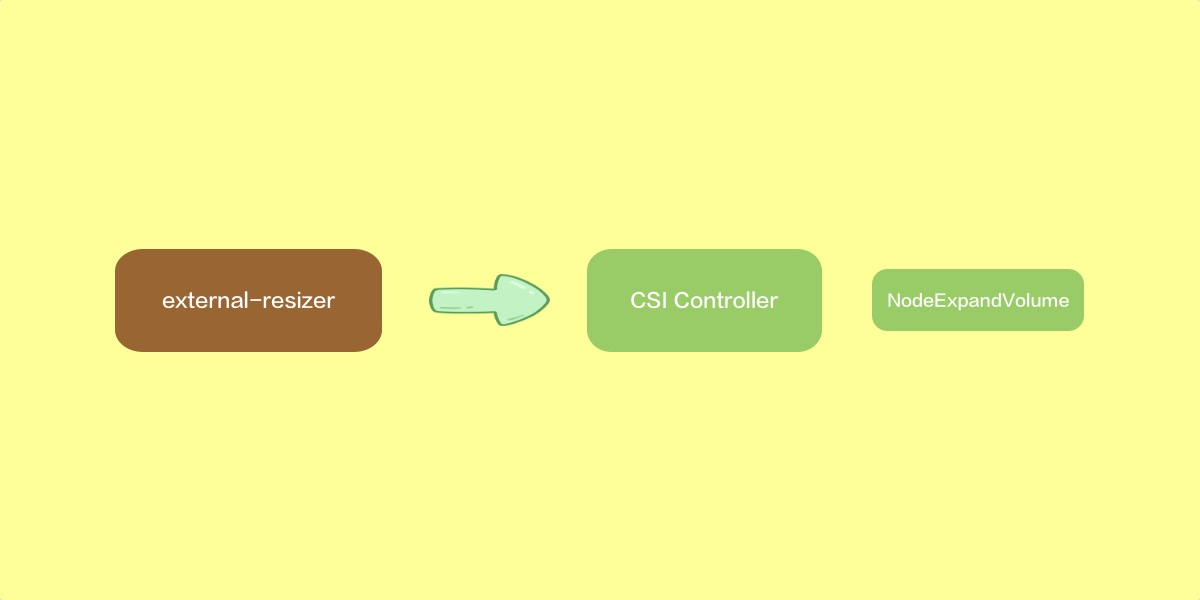

external-resizer

Watches PVC objects; if the user requests more storage on a PVC, the component calls the ControllerExpandVolume interface of the CSI driver Controller service to expand the volume.
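As a rough illustration, the resize check comes down to comparing the requested size with the current one and calling the driver's ControllerExpandVolume only when the request is larger. The function below is a hypothetical Python sketch; `controller_expand_volume` is a stand-in callable, not a real client API.

```python
from typing import Callable, Optional


def resize_if_needed(
    volume_id: str,
    current_bytes: int,
    requested_bytes: int,
    controller_expand_volume: Callable[[str, int], int],
) -> Optional[int]:
    """Call the driver only when the PVC asks for more than the volume already has.

    Returns the capacity reported by the driver, or None if no call was made.
    """
    if requested_bytes <= current_bytes:
        return None  # equal or smaller requests are not expansions
    return controller_expand_volume(volume_id, requested_bytes)
```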

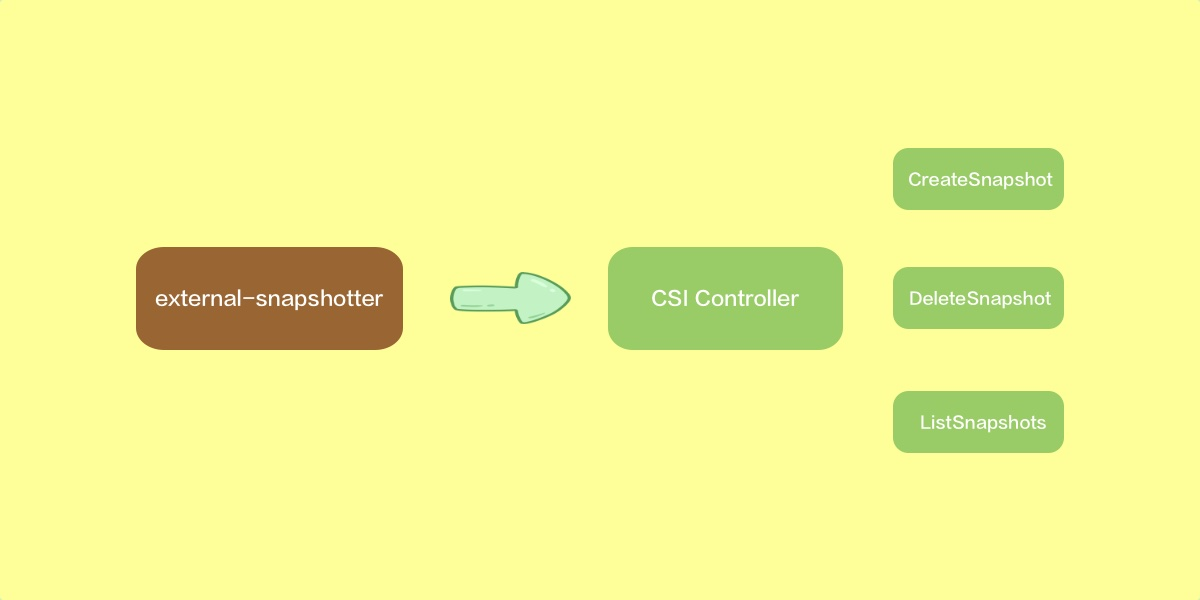

external-snapshotter

This component works together with the Snapshot Controller. The Snapshot Controller creates the corresponding VolumeSnapshotContent for each Snapshot object created in the cluster, while the external-snapshotter watches VolumeSnapshotContent objects. When it sees a VolumeSnapshotContent, it passes the corresponding parameters to the CSI driver Controller service in a CreateSnapshotRequest and invokes its CreateSnapshot interface. This component is also responsible for calling the DeleteSnapshot and ListSnapshots interfaces.

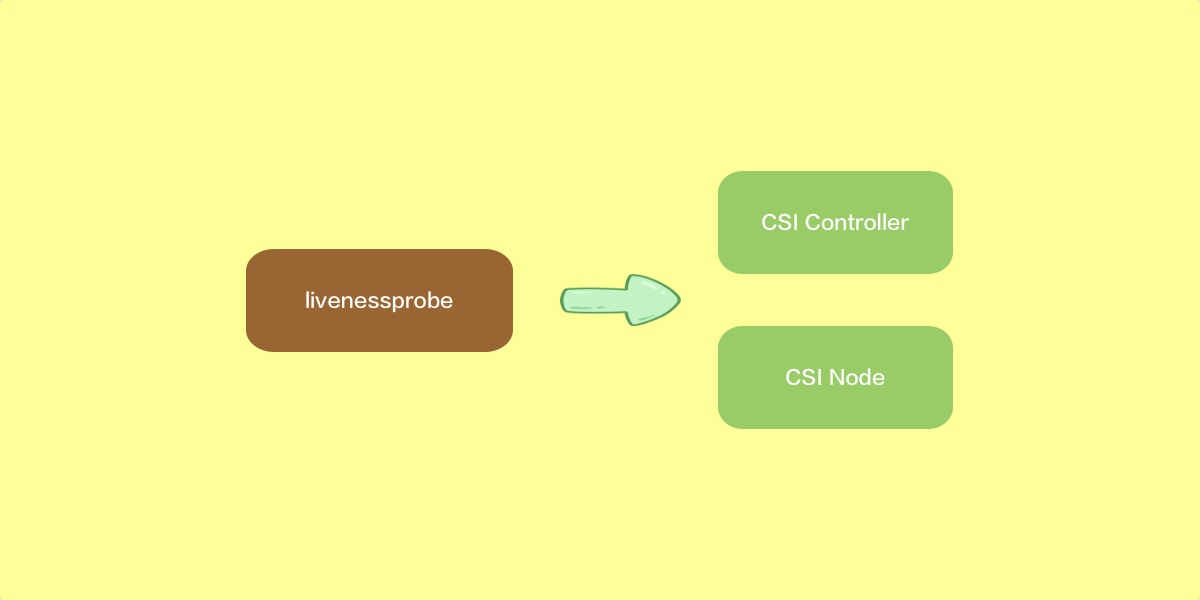

livenessprobe

Monitors the health of the CSI driver and reports it to k8s through the Liveness Probe mechanism, so that k8s can restart the driver container when an abnormality is detected in the CSI driver.

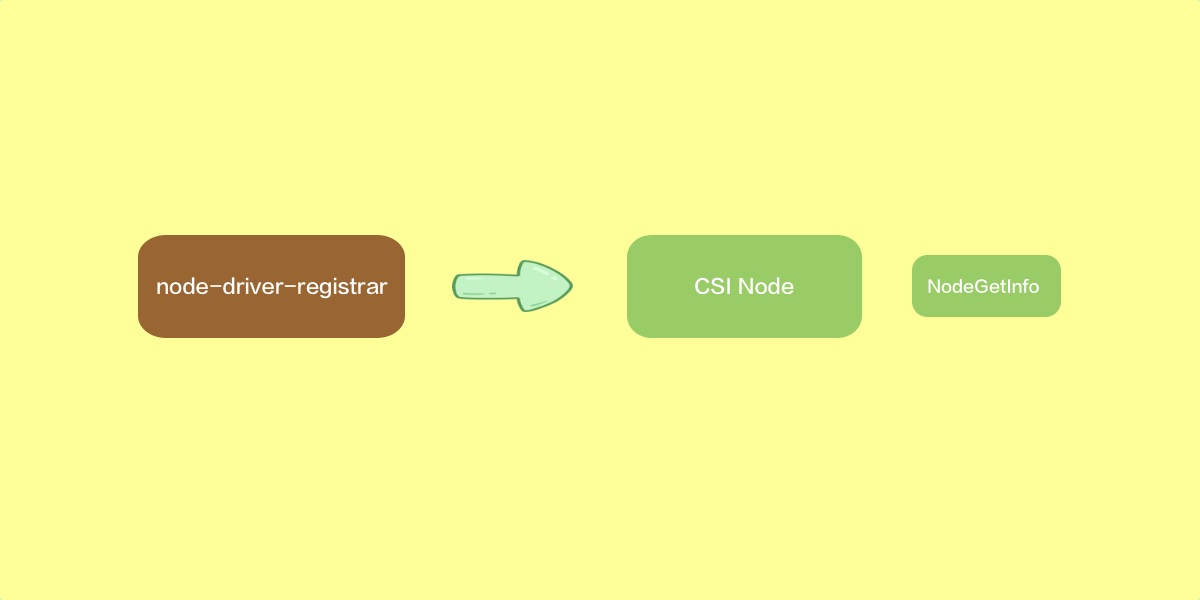

node-driver-registrar

Fetches the CSI driver information by directly calling the NodeGetInfo interface of the CSI driver Node service, and registers the driver with the kubelet on the corresponding node through the kubelet’s plugin registration mechanism.

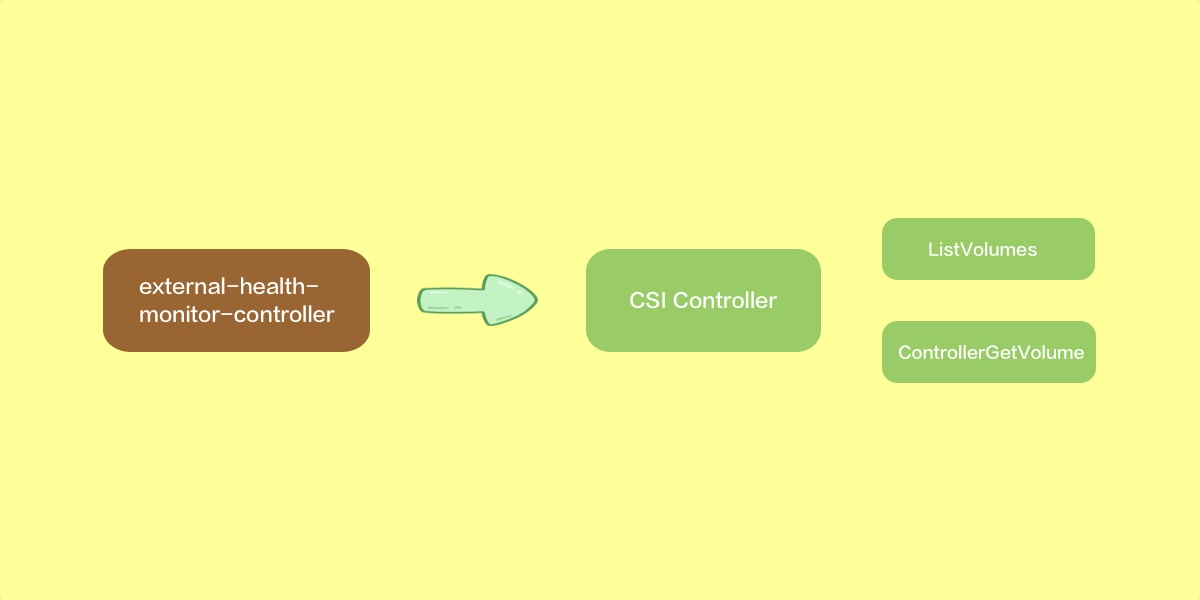

external-health-monitor-controller

Checks the health of CSI volumes by calling the ListVolumes or ControllerGetVolume interface of the CSI driver Controller service and reports it in events on the PVC.

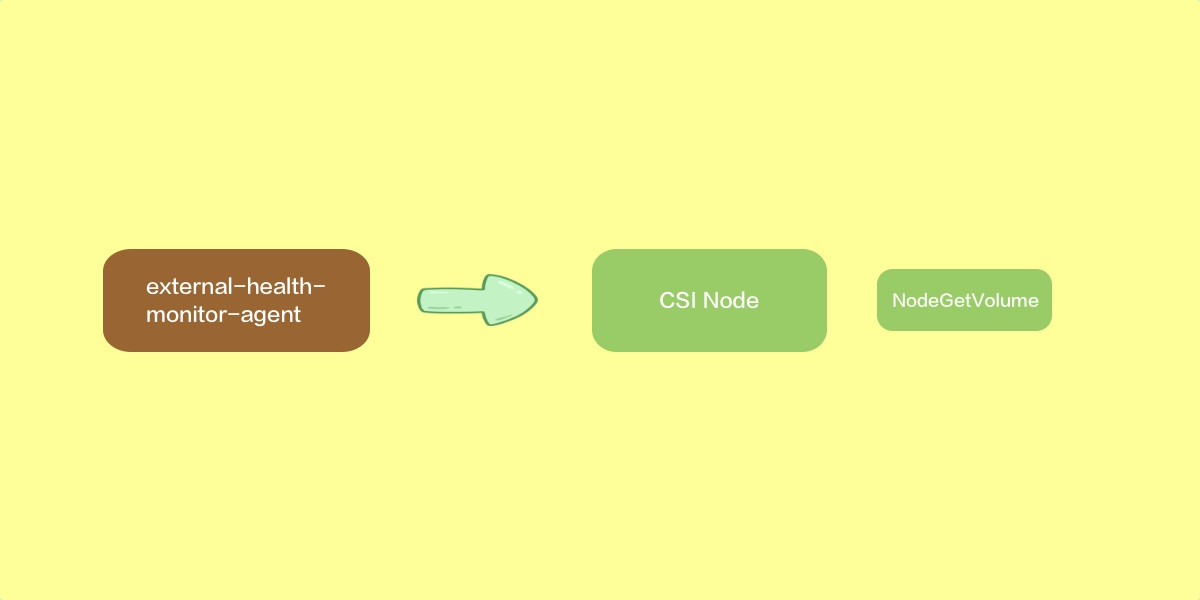

external-health-monitor-agent

Checks the health of CSI volumes by calling the NodeGetVolumeStats interface of the CSI driver Node service and reports it in events on the pod.

Third-party plugins

The third-party storage provider (SP) needs to implement two plugins: Controller, which is responsible for volume management and is deployed as a StatefulSet, and Node, which is responsible for mounting the volume into the pod and is deployed as a DaemonSet on each node.

The CSI plug-in interacts with the kubelet and the k8s external components via gRPC over a Unix Domain Socket. CSI defines three sets of RPC interfaces that SPs need to implement in order to communicate with the k8s external components: CSI Identity, CSI Controller, and CSI Node, which are described in detail below.

CSI Identity

The CSI Identity provides the identity information of the CSI driver and needs to be implemented by both the Controller and the Node. It consists of three interfaces: GetPluginInfo, GetPluginCapabilities, and Probe.

GetPluginInfo is mandatory; the node-driver-registrar component calls this interface to register the CSI driver with the kubelet. GetPluginCapabilities indicates which features the CSI driver provides. Probe reports whether the driver is healthy and ready.
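To make the shape of these calls concrete, here is a plain-Python stand-in for an Identity service. It involves no gRPC and is not a real driver: the class, the driver name `csi.example.com`, and the version string are hypothetical, while the response keys mirror the field names in the CSI specification.

```python
from typing import Callable, Dict, List


class IdentityService:
    """Plain-Python stand-in for a driver's Identity service (no gRPC involved)."""

    def __init__(self, name: str, vendor_version: str,
                 services: List[str], is_ready: Callable[[], bool]) -> None:
        self._name = name
        self._vendor_version = vendor_version
        self._services = services
        self._is_ready = is_ready

    def get_plugin_info(self) -> Dict[str, str]:
        # The name returned here is what a StorageClass's provisioner field must match.
        return {"name": self._name, "vendor_version": self._vendor_version}

    def get_plugin_capabilities(self) -> Dict[str, list]:
        return {"capabilities": [{"service": {"type": s}} for s in self._services]}

    def probe(self) -> Dict[str, bool]:
        return {"ready": self._is_ready()}


# Hypothetical driver that offers a Controller service and is always ready.
identity = IdentityService("csi.example.com", "0.1.0", ["CONTROLLER_SERVICE"], lambda: True)
```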

CSI Controller

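The CSI Controller is used to create/delete volumes, attach/detach volumes, take volume snapshots, expand volumes, and so on. The Controller plugin needs to implement this set of interfaces. In the CSI specification, the Controller service includes RPCs such as CreateVolume, DeleteVolume, ControllerPublishVolume, ControllerUnpublishVolume, ValidateVolumeCapabilities, ListVolumes, GetCapacity, ControllerGetCapabilities, CreateSnapshot, DeleteSnapshot, ListSnapshots, ControllerExpandVolume, and ControllerGetVolume.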


As mentioned above in the introduction of k8s external components, different interfaces are provided to different components for different functions. For example, CreateVolume / DeleteVolume can be used with external-provisioner to create/delete volume, ControllerPublishVolume / ControllerUnpublishVolume can be used with external-attacher for attaching/detaching volumes, etc.
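Because the external components may retry a call, the CSI specification requires calls such as CreateVolume and DeleteVolume to be idempotent. The following in-memory Python sketch shows one way to satisfy that. The class, the volume ID scheme, and the exception are hypothetical, and "compatible" is reduced here to "the existing capacity is large enough".

```python
import itertools
from typing import Dict


class AlreadyExistsError(Exception):
    """Stands in for the gRPC ALREADY_EXISTS status code."""


class InMemoryController:
    def __init__(self) -> None:
        self._by_name: Dict[str, dict] = {}
        self._ids = itertools.count(1)

    def create_volume(self, name: str, required_bytes: int) -> dict:
        existing = self._by_name.get(name)
        if existing is not None:
            if existing["capacity_bytes"] >= required_bytes:
                return existing  # retry of an earlier call: same answer, no new volume
            raise AlreadyExistsError(name + " exists with an incompatible capacity")
        volume = {"volume_id": "vol-%d" % next(self._ids), "capacity_bytes": required_bytes}
        self._by_name[name] = volume
        return volume

    def delete_volume(self, volume_id: str) -> None:
        # Deleting an unknown volume is treated as success, so retries are safe.
        for name, volume in list(self._by_name.items()):
            if volume["volume_id"] == volume_id:
                del self._by_name[name]
```

Calling `create_volume` twice with the same name returns the same volume instead of allocating a second one.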

CSI Node

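The Node plug-in needs to implement this set of interfaces, which are used to mount/unmount volumes, check volume status, and so on. In the CSI specification, the Node service consists of NodeStageVolume, NodeUnstageVolume, NodePublishVolume, NodeUnpublishVolume, NodeGetVolumeStats, NodeExpandVolume, NodeGetCapabilities, and NodeGetInfo.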


NodeStageVolume makes it possible for multiple pods to share a single volume: the volume is first mounted to a temporary (staging) directory on the node and is then mounted into each pod via NodePublishVolume. NodeUnstageVolume does the opposite.
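The two-step mount can be modeled with two small tables: one entry per staged volume and one entry per pod target path. This is a hypothetical in-memory Python sketch. A real driver would format and mount the device in the stage step and typically bind-mount the staging path in the publish step. The refusal to unstage a volume that is still published is a safety check of this model; in practice the kubelet is responsible for the ordering.

```python
from typing import Dict


class InMemoryNode:
    def __init__(self) -> None:
        self.staged: Dict[str, str] = {}     # volume ID -> staging path (once per node)
        self.published: Dict[str, str] = {}  # pod target path -> volume ID (once per pod)

    def node_stage_volume(self, volume_id: str, staging_path: str) -> None:
        self.staged[volume_id] = staging_path

    def node_publish_volume(self, volume_id: str, target_path: str) -> None:
        if volume_id not in self.staged:
            raise RuntimeError(volume_id + " has not been staged on this node")
        self.published[target_path] = volume_id

    def node_unpublish_volume(self, target_path: str) -> None:
        self.published.pop(target_path, None)  # idempotent: unknown paths are ignored

    def node_unstage_volume(self, volume_id: str) -> None:
        if volume_id in self.published.values():
            raise RuntimeError(volume_id + " is still published to a pod")
        self.staged.pop(volume_id, None)
```

Two pods sharing one volume lead to a single entry in `staged` and two entries in `published`.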

Workflow

Here’s a look at the entire workflow of mounting a volume into a pod. The process has three phases: Provision/Delete, Attach/Detach, and Mount/Unmount. Not every storage solution goes through all three phases; NFS, for example, has no Attach/Detach phase.

The entire process involves not only the work of the components described above, but also the AttachDetachController and PVController components of the ControllerManager and the kubelet, and the following is a detailed analysis of the Provision, Attach, and Mount phases respectively.

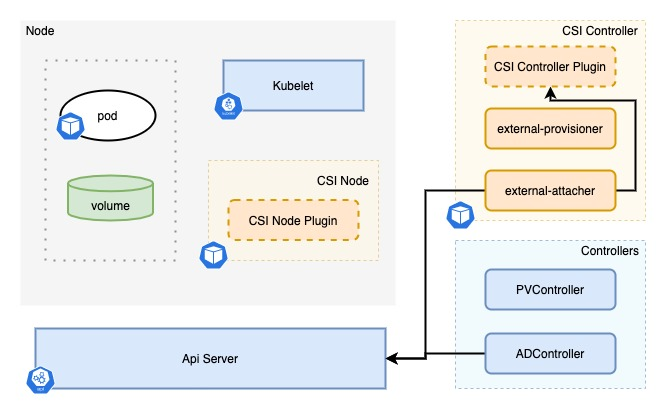

Provision

Let’s look at the Provision phase first; the whole process is shown in the figure above. Both the external-provisioner and the PVController watch PVC resources.

- When the PVController sees that a PVC has been created in the cluster, it checks whether an in-tree plugin matches it. If not, it determines that the storage type is out-of-tree and annotates the PVC with `volume.beta.kubernetes.io/storage-provisioner={csi driver name}`.
- When the external-provisioner sees that the csi driver annotated on the PVC is the same as its own csi driver, it calls the CSI Controller’s `CreateVolume` interface.
- When the `CreateVolume` interface of the CSI Controller returns success, the external-provisioner creates the corresponding PV in the cluster.
- When the PVController sees that a PV has been created in the cluster, it binds the PV to the PVC.
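The four steps above can be walked through with plain dictionaries standing in for the API objects. This is a toy Python model under stated assumptions: the function names, the dictionary layout, and the PV naming are hypothetical, and the real components react to watch events rather than being called in sequence.

```python
from typing import Callable, Optional, Set

ANNOTATION = "volume.beta.kubernetes.io/storage-provisioner"


def pv_controller_sync_claim(pvc: dict, provisioner: str, in_tree_plugins: Set[str]) -> None:
    """Step 1: no matching in-tree plugin, so mark the PVC for an external provisioner."""
    if provisioner not in in_tree_plugins:
        pvc["annotations"][ANNOTATION] = provisioner


def external_provisioner_sync(pvc: dict, driver_name: str,
                              create_volume: Callable[[str, int], str]) -> Optional[dict]:
    """Steps 2 and 3: provision only PVCs annotated with our own driver name."""
    if pvc["annotations"].get(ANNOTATION) != driver_name:
        return None
    handle = create_volume("pvc-" + pvc["name"], pvc["requested_bytes"])
    # CreateVolume succeeded, so create the PV object that points at the new volume.
    return {"name": "pvc-" + pvc["name"], "driver": driver_name,
            "volume_handle": handle, "claim": None}


def pv_controller_bind(pvc: dict, pv: dict) -> None:
    """Step 4: bind the new PV and the PVC to each other."""
    pv["claim"] = pvc["name"]
    pvc["volume_name"] = pv["name"]
```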

Attach

The Attach phase refers to attaching a volume to a node, and the whole process is shown in the figure above.

- When the ADController sees that a pod has been scheduled to a node and uses a PV of type CSI, it calls the internal in-tree CSI plug-in interface, which creates a VolumeAttachment resource in the cluster.
- When the external-attacher sees that a VolumeAttachment resource has been created, it calls the CSI Controller’s `ControllerPublishVolume` interface.
- When the CSI Controller’s `ControllerPublishVolume` interface is called successfully, the external-attacher sets the Attached state of the corresponding VolumeAttachment object to true.
- When the ADController sees that the Attached state of the VolumeAttachment object is true, it updates its internal state, ActualStateOfWorld.
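The same kind of toy model works for the Attach phase, with a dictionary standing in for the VolumeAttachment object. The function names and the dictionary layout are hypothetical; the keys loosely follow the object's attacher, node name, PV name, and attached fields.

```python
from typing import Callable, Set, Tuple


def ad_controller_attach(pod: dict, pv: dict) -> dict:
    """Step 1: a pod scheduled to a node uses a CSI PV, so create a VolumeAttachment."""
    return {"attacher": pv["driver"], "node_name": pod["node_name"],
            "pv_name": pv["name"], "attached": False}


def external_attacher_sync(va: dict, driver_name: str, pv: dict,
                           controller_publish_volume: Callable[[str, str], None]) -> None:
    """Steps 2 and 3: attach through the driver, then mark the object as attached."""
    if va["attacher"] != driver_name or va["attached"]:
        return  # another driver's attachment, or nothing left to do
    controller_publish_volume(pv["volume_handle"], va["node_name"])
    va["attached"] = True


def ad_controller_observe(va: dict, actual_state: Set[Tuple[str, str]]) -> None:
    """Step 4: reflect the attached volume in the controller's ActualStateOfWorld."""
    if va["attached"]:
        actual_state.add((va["pv_name"], va["node_name"]))
```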

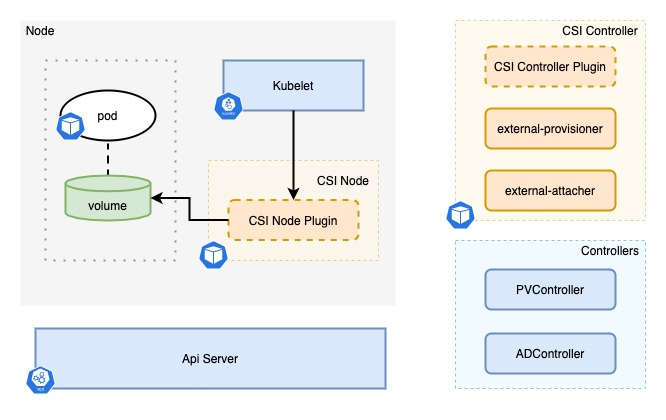

Mount

The last step, mounting the volume into the pod, involves the kubelet. In short, while creating the pod, the kubelet on the corresponding node calls the CSI Node plug-in to perform the mount operation. The following breaks this down by the kubelet’s internal components.

First, in the main function syncPod where the kubelet creates the pod, the kubelet calls the WaitForAttachAndMount method of its subcomponent volumeManager and waits for the volume mount to complete.


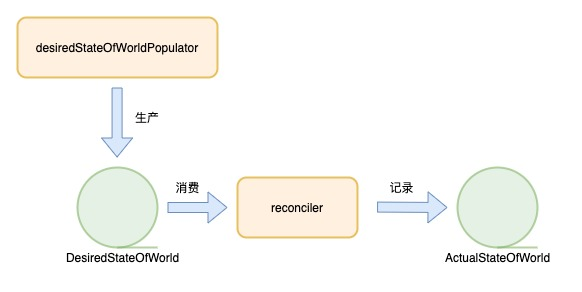

The volumeManager contains two components: the desiredStateOfWorldPopulator and the reconciler, which work together to complete the mount and umount process of the volume in the pod. The whole process is as follows.

The desiredStateOfWorldPopulator and the reconciler follow a producer-consumer model. Two queues are maintained in the volumeManager (technically they are interfaces, but they act as queues here), namely DesiredStateOfWorld and ActualStateOfWorld. The former maintains the desired state of the volumes on the current node; the latter maintains their actual state.

The desiredStateOfWorldPopulator does only two things in its loop. One is to get the newly created pods from the kubelet’s podManager and record the volumes they need to mount in DesiredStateOfWorld. The other is to get the deleted pods from the podManager of the current node, check whether their volumes are recorded in ActualStateOfWorld, and if not, remove them from DesiredStateOfWorld as well. This ensures that DesiredStateOfWorld records the desired state of all volumes on the node.
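The producer side can be modeled roughly as follows. This is a simplified Python sketch, not the kubelet's Go code: the method names only loosely echo the kubelet's, `populate` is a hypothetical function, and the sketch leaves out the ActualStateOfWorld check and the other conditions the kubelet evaluates before removing a pod.

```python
from typing import Dict, List, Set, Tuple


class DesiredStateOfWorld:
    def __init__(self) -> None:
        self._pods_by_volume: Dict[str, Set[str]] = {}

    def add_pod_to_volume(self, pod: str, volume: str) -> None:
        self._pods_by_volume.setdefault(volume, set()).add(pod)

    def delete_pod_from_volume(self, pod: str, volume: str) -> None:
        pods = self._pods_by_volume.get(volume, set())
        pods.discard(pod)
        if not pods:
            self._pods_by_volume.pop(volume, None)  # no pod needs this volume any more

    def volumes_to_mount(self) -> List[Tuple[str, str]]:
        return sorted((v, p) for v, pods in self._pods_by_volume.items() for p in pods)


def populate(dsw: DesiredStateOfWorld, pods: Dict[str, List[str]]) -> None:
    """One populator pass; `pods` maps each pod currently on the node to its volumes."""
    for pod, volumes in pods.items():  # record what the current pods need
        for volume in volumes:
            dsw.add_pod_to_volume(pod, volume)
    for volume, pod in dsw.volumes_to_mount():  # drop entries of pods that are gone
        if pod not in pods:
            dsw.delete_pod_from_volume(pod, volume)
```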


The reconciler is the consumer, and it does three main things.

- unmountVolumes(): iterates through the volumes in ActualStateOfWorld, checks whether each one is in DesiredStateOfWorld, and if not, calls the CSI Node’s interface to perform an unmount and records the result in ActualStateOfWorld.
- mountAttachVolumes(): gets the volumes that need to be mounted from DesiredStateOfWorld, calls the CSI Node’s interface to perform a mount or expand, and records the result in ActualStateOfWorld.
- unmountDetachDevices(): iterates through the volumes in ActualStateOfWorld, and if a volume has been attached but is not used by any pod and is not recorded in DesiredStateOfWorld, unmounts/detaches it.
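One pass of the consumer side can be sketched as three set operations. This is a simplified Python model, not the kubelet's reconciler: `reconcile`, `RecordingNode`, and the use of sets of `(volume, pod)` pairs are all assumptions made for the illustration.

```python
from typing import List, Set, Tuple


class RecordingNode:
    """Hypothetical stand-in for the CSI Node plug-in; it only records the calls it gets."""

    def __init__(self) -> None:
        self.calls: List[tuple] = []

    def stage(self, volume: str) -> None:
        self.calls.append(("stage", volume))

    def unstage(self, volume: str) -> None:
        self.calls.append(("unstage", volume))

    def publish(self, volume: str, pod: str) -> None:
        self.calls.append(("publish", volume, pod))

    def unpublish(self, volume: str, pod: str) -> None:
        self.calls.append(("unpublish", volume, pod))


def reconcile(desired: Set[Tuple[str, str]], actual: Set[Tuple[str, str]],
              staged: Set[str], node: RecordingNode) -> None:
    # unmountVolumes: mounted for a pod but no longer desired
    for volume, pod in sorted(actual - desired):
        node.unpublish(volume, pod)
        actual.discard((volume, pod))
    # mountAttachVolumes: desired but not yet mounted
    for volume, pod in sorted(desired - actual):
        if volume not in staged:
            node.stage(volume)
            staged.add(volume)
        node.publish(volume, pod)
        actual.add((volume, pod))
    # unmountDetachDevices: staged on the node but no longer used or wanted by any pod
    in_use = {volume for volume, _ in actual | desired}
    for volume in sorted(staged - in_use):
        node.unstage(volume)
        staged.discard(volume)
```

Running `reconcile` a second time with unchanged inputs produces no further calls, which is the property that makes a periodic loop safe.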

Let’s take mountAttachVolumes() as an example and see how it calls the CSI Node interface.


The mount itself is executed by rc.operationExecutor.


The operationExecutor first constructs the function that performs the mount and then executes it, so the next place to look is where that function is constructed.


The constructed function first looks up the corresponding plugin in the list of CSI plugins registered with the kubelet, then executes volumeMounter.SetUp, and finally updates the ActualStateOfWorld record. For an external CSI plugin, the volumeMounter is csiMountMgr.
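The "construct the function first, then execute it" pattern is easy to show with a closure. The sketch below is a simplified Python model with hypothetical names throughout (`FakePlugin`, `FakeMounter`, `generate_mount_volume_func`, `mount_volume`); it is not the kubelet's Go code, and the point where the real CSI mounter would call NodePublishVolume is only recorded in a log.

```python
from typing import Callable, Dict, List, Set, Tuple


class FakeMounter:
    """Stands in for the CSI mounter; set_up is where NodePublishVolume would be called."""

    def __init__(self, volume: str, pod: str, log: List[tuple]) -> None:
        self._volume, self._pod, self._log = volume, pod, log

    def set_up(self) -> None:
        self._log.append(("NodePublishVolume", self._volume, self._pod))


class FakePlugin:
    def __init__(self, log: List[tuple]) -> None:
        self._log = log

    def new_mounter(self, volume: str, pod: str) -> FakeMounter:
        return FakeMounter(volume, pod, self._log)


def generate_mount_volume_func(driver: str, volume: str, pod: str,
                               plugins: Dict[str, FakePlugin],
                               actual_state: Set[Tuple[str, str]]) -> Callable[[], None]:
    """Generator side: return a function that performs the mount when it is called."""
    def mount() -> None:
        plugin = plugins.get(driver)  # look up the plugin registered for this driver
        if plugin is None:
            raise LookupError("no plugin registered for " + driver)
        plugin.new_mounter(volume, pod).set_up()
        actual_state.add((volume, pod))  # record success only after set_up returns
    return mount


def mount_volume(driver: str, volume: str, pod: str,
                 plugins: Dict[str, FakePlugin],
                 actual_state: Set[Tuple[str, str]]) -> None:
    """Executor side: build the operation first, then run it."""
    generate_mount_volume_func(driver, volume, pod, plugins, actual_state)()
```

Separating construction from execution means nothing happens until the returned function is called, which leaves the caller free to decide when, and whether, to run it.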


As you can see, the CSI Node NodePublishVolume / NodeUnpublishVolume interfaces are called by the volumeManager’s csiMountMgr in the kubelet.

Summary

This article analyzes the entire CSI system from three aspects: the CSI components, the CSI interface, and the process of how volume is mounted on a pod.

CSI is the standard storage interface for the entire container ecosystem, and the CO communicates with the CSI plug-in via gRPC. In order to achieve universality, k8s has designed many external components to work with the CSI plug-in to achieve different functions, thus ensuring the purity of k8s internal logic and the simplicity of the CSI plug-in.